ABBYY FineReader (2/2) text recognition

Content

General Recognition Theory

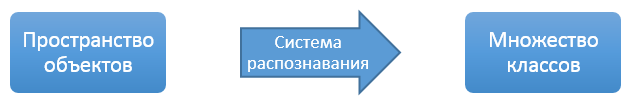

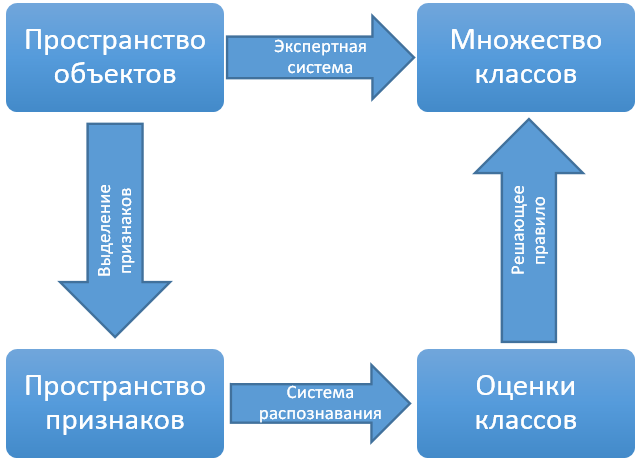

We finally got to the most interesting topic - character recognition. But first, let's take a little look at the theory to make it clearer what exactly and why we are doing. The general task of automatic recognition or machine learning is as follows.

There is a certain set of classes C and the object space R. There is some external “expert” system with which it can be determined for an arbitrary object which class it belongs to.

The task of automatic recognition is to construct such a system that, on the basis of a limited sample transferred to it, would produce in advance for any new object transferred to it a class corresponding to it. At the same time, the total difference in classification between the “expert” system and the automatic recognition system should be minimal.

')

The system of classes can be discrete or continuous, the set of objects can be of any kind of structure, the expert system can be arbitrary, starting with ordinary human experts, the accuracy can be estimated only on a certain sample of objects. But basically, almost any task of automatic recognition (from ranking search results to medical diagnostics) is reduced precisely to building a link between objects from a given space and a set of classes.

But in such a formulation, the task is virtually meaningless, because it is completely incomprehensible what kind of objects we have and how to work with them in general.

Therefore, the following scheme is usually used: a certain feature space is constructed in which we can at least determine the distance between objects in any form. A transformation is constructed from the space of objects into the space of attributes. The main requirement of the transformation under construction is to try to comply with the “compactness hypothesis”: objects that are close to each other in the feature space must have the same or similar classes.

Thus, the initial task is divided into the following steps:

- Build a transformation from object space to feature space taking into account the “compactness hypothesis”

- Mark the space of attributes based on the available marked-up selection of objects, so that each point corresponds to a set of assessments of the belonging of this point to different classes.

- Make a decisive rule that, according to a set of assessments, would make the final decision to which class the object belongs.

About the third step of the algorithm, I will say only a few words, because this is one of the rare places in the system where the idea is simple and the implementation is also simple. There are several character recognition systems; each of these systems provides a list of recognition options with some confidence. The task of the decision rule is to merge the lists of recognition options from all systems into one general list and combine the recognition quality from several systems into one integral quality. A combination of quality is made using a formula specially selected for our classifiers.

And one more note about the recognition classes - in the article I use the word "symbols", because it is understood and known by everyone. But this is done only to simplify the text, in fact, we recognize not symbols, but graphemes. Grapheme is a specific way to graphically represent a symbol. The relationship between symbols and graphemes is quite complicated - several symbols can correspond to one grapheme (a small “c” and a large “C” in Latin and Cyrillic are all one grapheme), and one symbol can correspond to several graphemes (the letter “a” in different fonts may be different graphemes). A standard list of graphemes does not exist, we compiled it ourselves, and for each grapheme a list of symbols is given which it can correspond to. Conversion from graphemes into symbols occurs after recognition of symbols at the stage of generating word recognition variants.

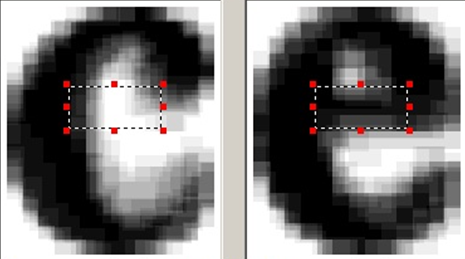

An example of two different graphemes of the letter "a".

A couple of facts about our assessment of the quality of work of classifiers:

- We use several classifiers that complement each other. That is, the normal quality assessment in any case is only the assessment of the integrated list of options, obtained after the application of the decision rule.

- At the stage of searching for variants of words, a lot of contextual information is used, which simply does not exist within a single character. Therefore, classifiers are not required to always put the required recognition option in the first place - instead, it is necessary that the correct version be in the first two or three recognition options.

Recognition system

In our program, several different classifiers are used, which mainly differ in the features used, but at the same time have a practically identical structure of the recognition system itself.

The requirements that we have for the character recognition system are quite simple:

- The system should work equally on any source set of possible symbols. Because we never know in advance which language or combination of languages the user will choose for recognition. Moreover, the user usually has the opportunity to create his own language with a generally arbitrary alphabet.

- The limitation of possible characters for recognition should increase the quality and speed up the work of classifiers. If the user selects a recognition language for his document, he should notice improvements.

In connection with these two requirements, the use of complex and intelligent recognition systems is not very common. People often ask a question about neural networks, so it’s better to write here right away - no, we don’t use them for many reasons. The main problem with them is the rigidly fixed set of recognizable classes and excessive internal complexity, which extremely complicates the precise final tuning of the classifier. Further discussion of neural networks is a topic for a large holivar, so it’s better to talk about what we use.

And we use a variety of Bayesian classifier. His idea is as follows:

We need for a particular point in the space of attributes (x) to estimate the probability of its belonging to different classes (C 1 , C 2 , ..., C n )

If we write formally, then we need to calculate the probability that the class of an object is C i , provided that the object is described in the attribute space by the point x - P (C i | x).

We apply the Baeys formula from the basic course of probability theory:

P (C i | x) = P (C i ) * P (x | C i ) / P (x);

We finally compare the probabilities for different classes to determine the answer for a symbol, so the denominator is discarded from the formula, it is common for all classes. We will need to compare the set of P (C i ) * P (x | C i ) values for different classes and select the largest value from them.

P (C i ) is the a priori probability of a class. It does not depend on a recognizable object, it can be calculated in advance. For any set of possible classes (possible alphabets), it can be calculated in advance by the frequency of occurrence of letters in the specified language. And practice shows that sometimes it is even better to take the same a priori probabilities of all symbols.

P (x | C i ) is the probability for a certain class C i to get an object with a description of x. We can determine this probability in advance at the stage of training the classifier. Absolutely standard methods from mathematical statistics work here - we make an assumption about the form of distribution (normal, uniform, ...) for a class in the feature space, take a large sample of objects of this class, and from this sample we determine the necessary parameters for the selected distribution.

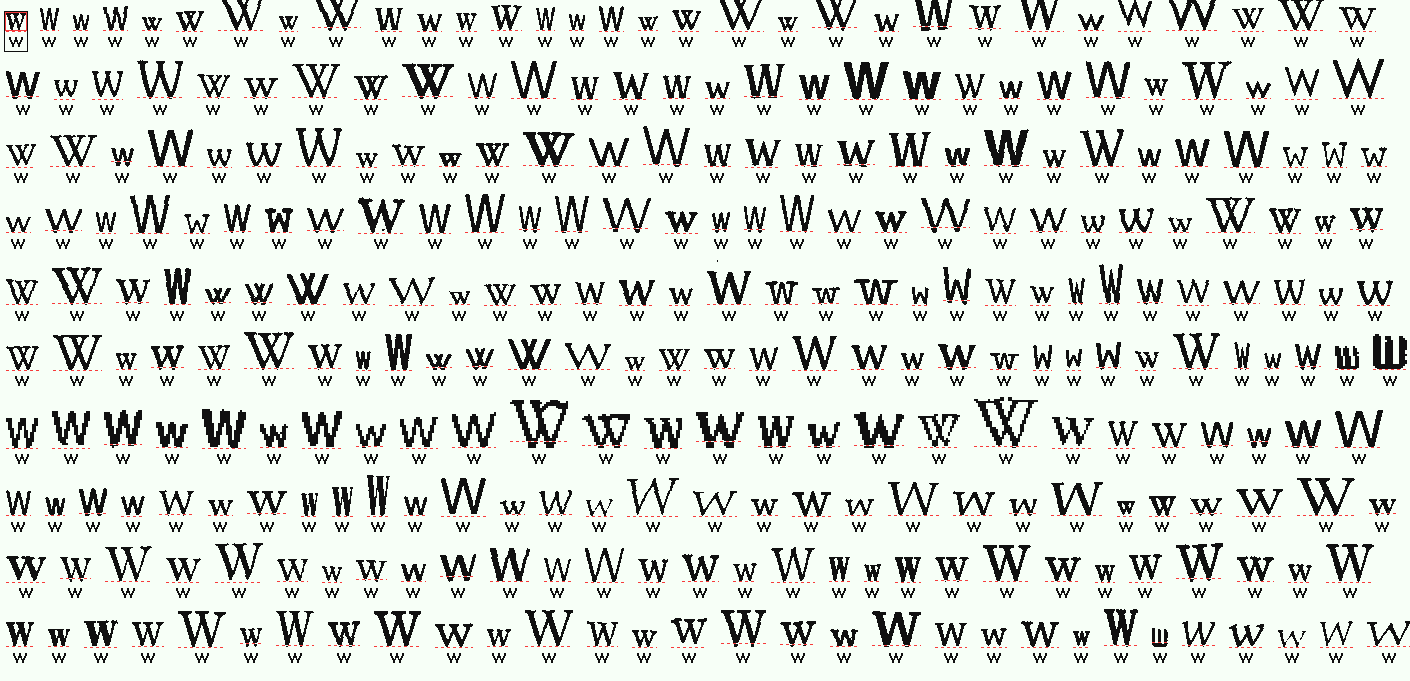

Quite a small fragment of our database of images for training classifiers.

What gives us the use of Bayesian classifier? This system does not depend on the alphabet of recognition. The probability distribution for each class is considered to be absolutely independent in the sample. It is better not to use a priori probabilities for classes in practice. The final grade for each class is a probability, and, therefore, for any class it is in the same range.

Thus, we get a system that can work without any additional settings both on the binary code image of zeroes and ones, and on the text from the phrasebook in 4-5 languages. An additional advantage is that probabilistic models are very easy to interpret and you can independently train individual characters if such a need arises.

Feature space

As I said before, we use several character classifiers in our system and the main difference between these classifiers is precisely in the feature space.

The space of attributes for our classifiers is built on a combination of two ideas:

- It is very easy for the Bayesian classifier to work if the feature space consists of numerical vectors of a fixed length — for numerical vectors, it is very easy to read the expectation, variance, and other characteristics that are necessary to restore distribution parameters.

- If you count the basic characteristics on the image, such as the amount of black in a certain fragment of the image, then each such characteristic alone will not say anything about the image belonging to a particular symbol. But if you collect a lot of such characteristics in one vector, then these vectors in the sum will already differ noticeably between different classes.

So we have several classifiers constructed as follows: we select many (about a hundred) basic features, collect a vector from them, declare such vectors as our feature space and build a Bayesian classifier on them.

As a simplified example, we divide the image into several vertical and horizontal stripes, and for each strip we consider the ratio of black and white pixels. At the same time, we consider that the distribution of the resulting vectors is normal. For a normal distribution, we need to calculate the expectation and variance to find out the distribution parameters. To do this on vectors, with a sufficiently large sample, is elementary. Thus, we derive from a very simple feature and with the help of simple mathematics a classifier, which in practice is of fairly high quality.

It was a simplified example, and which features and which combinations are actually used are just the result of accumulated experience and long research, so I will not give a detailed description of them here.

Raster classifier

Separately, you need to write about the raster classifier. The simplest feature space that you can think of for character recognition is the image itself. We choose a certain (small) size, any image is reduced to this size and we actually get a vector of values of a fixed length. We all know how to add and average a vector, so that we have a completely normal feature space.

This is the most basic classifier - raster. We bring all the images of one grapheme from the training base to the same size, and then average them. The result is a gray image where the color of each point corresponds to the probability that this point should be white or black for a given symbol. The raster classifier, if desired, can be interpreted as Bayesian and consider some probability that the given image appeared at the given reference for the symbol. But for this classifier, this approach only complicates everything, so we use the usual distance between a black and white image and a gray standard to assess whether an image belongs to a given class.

The advantage of the raster classifier is its simplicity and speed. A minus - low accuracy, mainly due to the fact that when casting to the same size, we lose all the information about the geometry of characters - say the point and the letter “I” in this classifier are reduced to the same black square. As a result, this classifier is an ideal mechanism for generating the initial list of recognition options, which can then be clarified with the help of more complex classifiers.

Several examples of raster patterns for one letter.

Structural Classifier

In addition to the printed text, our system can also recognize handwritten text. And the problem of handwriting is that its variability is very large, even if a person tries to write in block letters.

Therefore, for handwriting, all standard classifiers cannot provide sufficient quality. So for this task we have one more step in the system - the structural classifier.

His idea is as follows: each symbol has a clearly defined structure. But if we just try to isolate this structure in the image, it will not work, the variation is very large and we still need to somehow compare the found structure with the structure of each possible symbol. But we have basic classifiers - they are inaccurate, but in 99.9% of cases the correct recognition option will still be on the list of, say, the top 10 options.

So let's go from the back end:

- For each character we describe its structure through the basic elements - line, arc, ring, ... The description will have to be done manually, this is a big problem, but nobody has yet come up with an automatic selection of the topology with acceptable quality for us.

- For the structural description of the symbol, let us describe separately how exactly to search for it on the image - from which elements to start and in what order to go.

- When recognizing a character, we obtain a small list of possible recognition options using basic classifiers.

- For each recognition option, we will try to find the corresponding structural description on the image.

- If the description fits, we evaluate its quality. It did not fit - it means that the recognition option does not fit at all.

Since in this approach we check specific hypotheses, we always know exactly what to look for in the image and in what order - this is how the algorithm for selecting the structure is greatly simplified.

An example of the selected structural elements in the symbol image

Differential Classifier

As a superstructure over a set of classifiers, we still have a differential classifier. The reason for its appearance in the following - there are pairs of characters that are very similar to each other, with the exception of some small, very specific features. And for each pair of characters, these features are different. If knowledge of these features is attempted to be built into the general classifier, then the general classifier will be overloaded with features, each of which will not be of use in the overwhelming majority of cases. On the other hand, after the work of ordinary classifiers, we already have a list of recognition hypotheses, and we know exactly what options we need to compare with each other.

Therefore, for similar pairs of characters, we find special features that distinguish precisely these two classes and construct a classifier based on these features only for these two classes. If we have two similar variants with similar estimates in recognition options, such a classifier can say exactly which of these two options is preferable.

In our system, differential classifiers are used after the work of all the main classifiers in order to additionally re-sort the list of symbol recognition options. To determine similar pairs of characters, an automatic system is used, which looks at which characters are confused with which ones most often when using only basic classifiers. Then, for each suspicious pair, additional features are manually selected and a differential classifier is trained.

Practice shows that for ordinary languages there are not very many pairs of close characters. At the same time, the addition of each new differential classifier improves recognition very clearly.

An example of an area to highlight additional features for a pair of similar characters.

As a conclusion

This article does not in any way pretend to an exact description of the mechanisms that are used within FineReader and our other recognition products. This description of only the basic principles that were used to create technologies. The real system is too big and complex to be described in two or three articles on Habré. If you really wonder how everything is really arranged there, better.

Source: https://habr.com/ru/post/228251/

All Articles