Testing flash storage. IBM RamSan FlashSystem 820

We covered the basics of the topic earlier in the article "Testing flash storage. The theoretical part." Today we move on to practice. Our first patient is the IBM RamSan FlashSystem 820, a solid workhorse released in April 2013. It was the top model until January of this year, when it gave way to the FlashSystem 840.


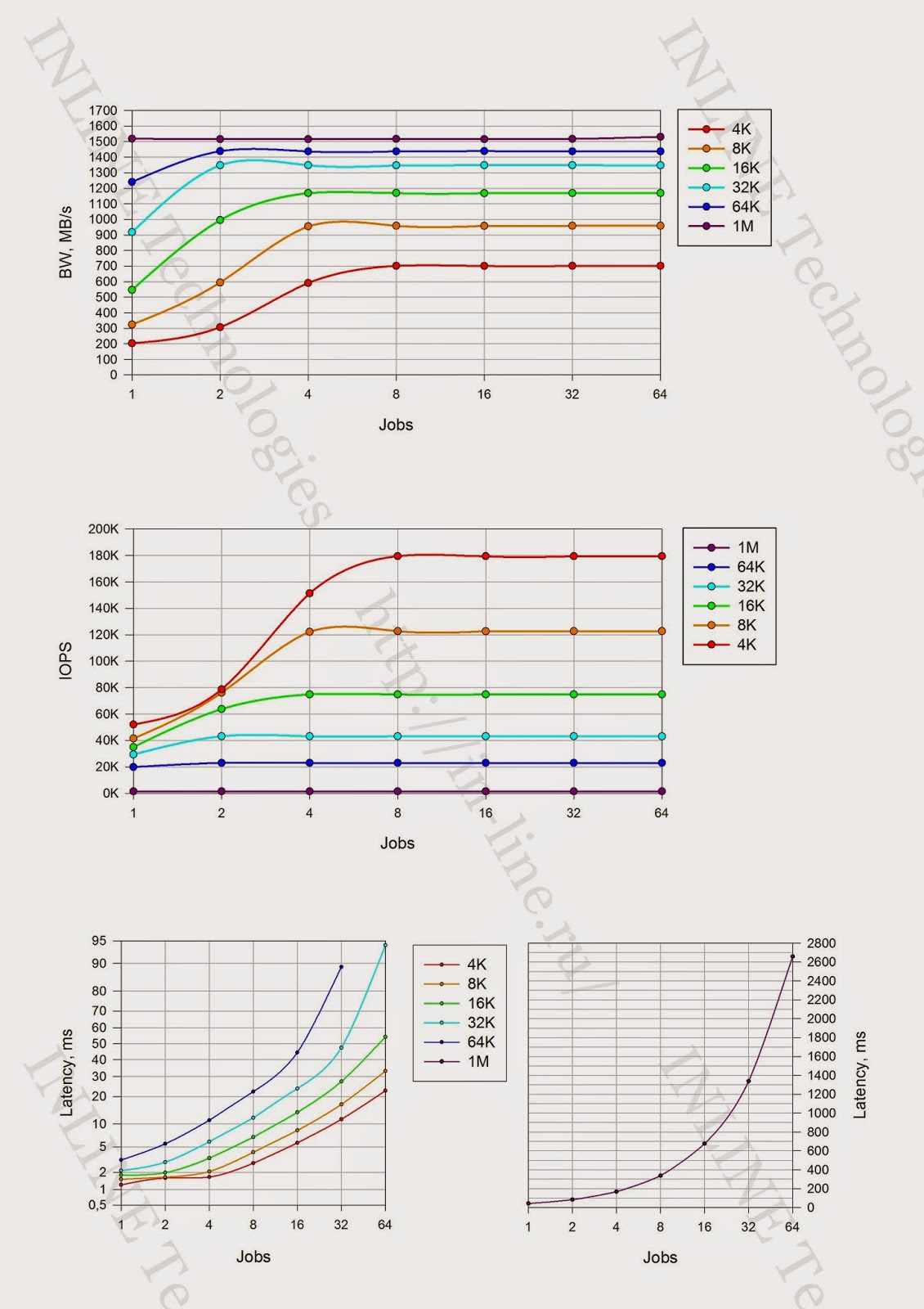


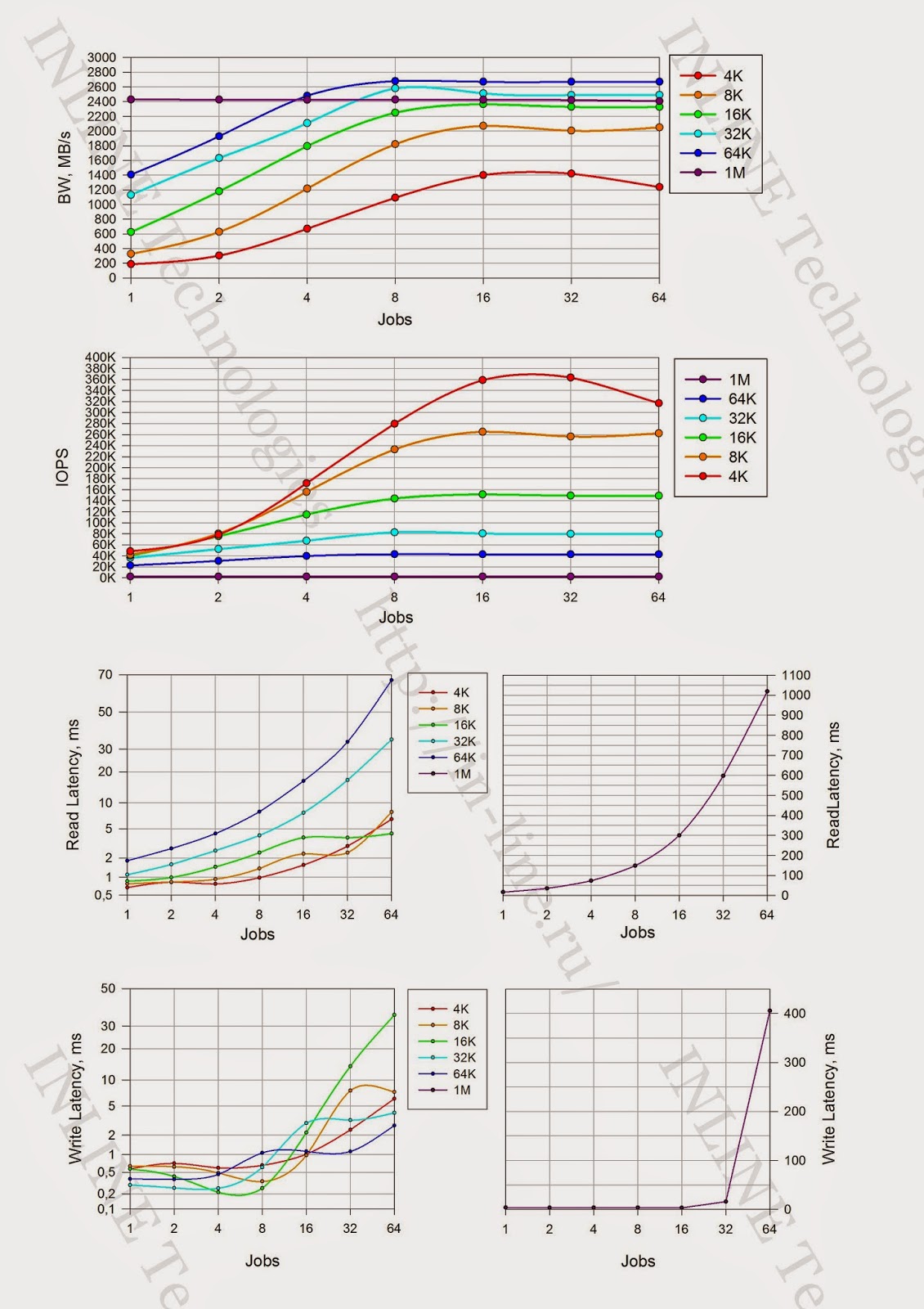


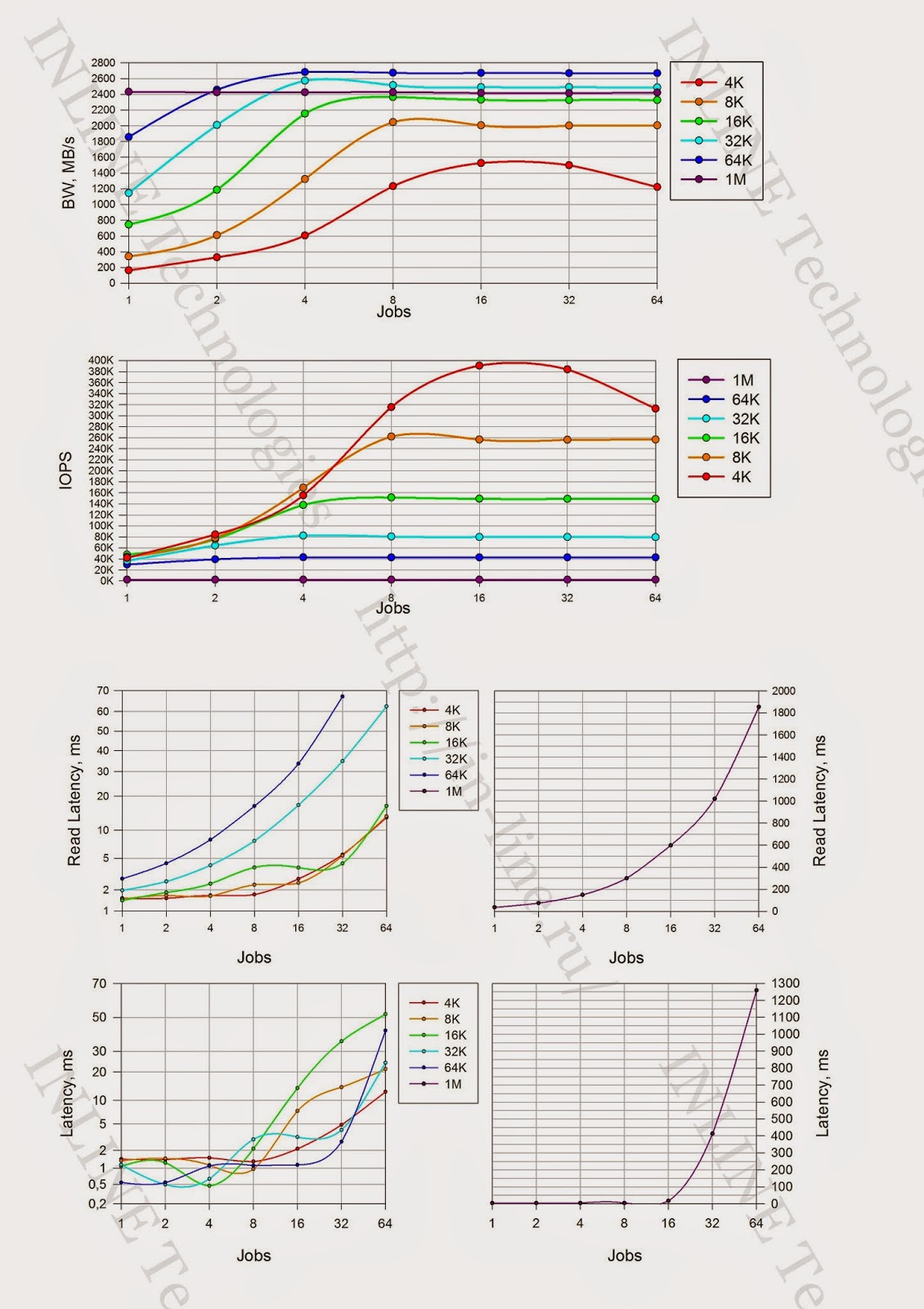


Testing method

Testing was meant to accomplish the following tasks:

- examine how storage performance degrades under long-term write and read load;

- examine the performance of the IBM FlashSystem 820 storage system under various load profiles.

Testbed Configuration

The layout and configuration of the test bench are shown in the figure.

Figure 1. Block diagram of the test bench.

As additional software, Symantec Storage Foundation 6.1 with Hot Fix 6.1HF100 is installed on the test server. It provides:


- a logical volume manager (Veritas Volume Manager);

- the VxFS file system;

- fault-tolerant connection to disk arrays (Dynamic Multi-Pathing).

On the test server, the following settings are applied to reduce disk I/O latency:

- Changed the I/O scheduler from cfq to noop by adding the kernel boot parameter elevator=noop;

- Added a parameter to /etc/sysctl.conf that minimizes the queue size at the level of the Symantec logical volume manager: vxvm.vxio.vol_use_rq = 0;

- Increased the queue size on the FC adapters by adding the option ql2xmaxqdepth=64 (options qla2xxx ql2xmaxqdepth=64) to the configuration file /etc/modprobe.d/modprobe.conf.

On the storage system, disk space is partitioned as follows:

- The flash modules are configured as RAID5 (10 + P) & HS; the effective capacity is 9.37 TiB;

- For all tests except test 2 of the first group, 8 LUNs of equal size are created that together cover the entire usable capacity of the disk array. For test 2, 8 LUNs of equal size are created that together cover 80% of the usable capacity. The LUN block size is 512 bytes. The LUNs are presented to the test server.
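The LUN layout described above comes down to simple arithmetic. The sketch below is only an illustration of that arithmetic, not the procedure used on the array; the function name and the rounding to whole 512-byte blocks are assumptions.

```python
TIB = 2**40       # bytes in one tebibyte
LUN_BLOCK = 512   # LUN block size in bytes, as stated in the configuration


def lun_size_bytes(usable_tib, lun_count, fill_fraction=1.0):
    """Size of each of `lun_count` equal LUNs covering `fill_fraction` of capacity."""
    if lun_count <= 0 or not 0 < fill_fraction <= 1:
        raise ValueError("lun_count must be positive and fill_fraction in (0, 1]")
    per_lun = int(usable_tib * TIB * fill_fraction) // lun_count
    # Round down to a whole number of 512-byte blocks (an assumption of this sketch).
    return per_lun - per_lun % LUN_BLOCK


full = lun_size_bytes(9.37, 8)          # all tests except test 2 of group 1
partial = lun_size_bytes(9.37, 8, 0.8)  # test 2: 80% of usable capacity
print(f"100% layout: {full / TIB:.3f} TiB per LUN")
print(f" 80% layout: {partial / TIB:.3f} TiB per LUN")
```

With 9.37 TiB spread over 8 LUNs, each LUN is a little under 1.2 TiB in the full layout and 80% of that in the reduced one.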

Software used in the testing process

To generate the synthetic load on the storage system, the Flexible IO Tester (fio) utility, version 2.1.4, is used. All synthetic tests use the following fio parameters in the [global] section:

- thread = 0

- direct = 1

- group_reporting = 1

- norandommap = 1

- time_based = 1

- randrepeat = 0

- ramp_time = 6

The following utilities are used to collect performance metrics under synthetic load:

- iostat, part of the sysstat package version 9.0.4, with the txk keys;

- vxstat, part of Symantec Storage Foundation 6.1, with the svd keys;

- vxdmpadm, part of Symantec Storage Foundation 6.1, with the -q iostat keys;

- fio version 2.1.4, to generate a summary report for each load profile.

During a test, metrics are collected with iostat, vxstat and vxdmpadm at 5 s intervals.

Testing program

Testing consisted of two groups of tests. The tests were performed by generating a synthetic load with fio on a block device: a logical volume of type stripe, 8 column, stripe unit size=1MiB, created with Veritas Volume Manager from 8 LUNs on the system under test and presented to the test server.


Group 1: Tests that implement long-term load.


In addition to the parameters already described, the test load uses the following fio parameters:

- rw = randwrite

- blocksize = 4K

- numjobs = 64

- iodepth = 64
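To show how the [global] parameters and the Group 1 parameters fit together, here is a minimal sketch that renders them into a fio job file. The article does not give the job file itself, so the job name, the `ioengine=libaio` choice and the `filename` path are assumptions made for illustration; the 12-hour `runtime` corresponds to Test 1 of this group.

```python
# Parameters of the [global] section, as listed in the article.
GLOBAL_PARAMS = {
    "thread": 0, "direct": 1, "group_reporting": 1, "norandommap": 1,
    "time_based": 1, "randrepeat": 0, "ramp_time": 6,
}
# Additional parameters for the Group 1 write load, as listed in the article.
GROUP1_PARAMS = {"rw": "randwrite", "blocksize": "4K", "numjobs": 64, "iodepth": 64}


def render_job_file(job_name, job_params, global_params=GLOBAL_PARAMS):
    """Render a fio job file with a [global] section and a single job section."""
    lines = ["[global]"]
    lines += [f"{key}={value}" for key, value in global_params.items()]
    lines += ["", f"[{job_name}]"]
    lines += [f"{key}={value}" for key, value in job_params.items()]
    return "\n".join(lines) + "\n"


# Assumed values, not taken from the article: engine and device path.
extra = {
    "ioengine": "libaio",                      # assumption: an asynchronous engine
    "filename": "/dev/vx/dsk/testdg/testvol",  # hypothetical VxVM volume path
    "runtime": 12 * 3600,                      # Test 1 lasts 12 hours (seconds)
}
job_text = render_job_file("group1-test1", {**GROUP1_PARAMS, **extra})
print(job_text)
```

Because `time_based` is set, fio keeps running for the whole `runtime` even if the volume has been fully written once, which is what a long-term load test needs.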

Group 1 consists of four tests that differ in the total size of the LUNs presented from the storage system under test, the I/O block size and the I/O direction (write or read):

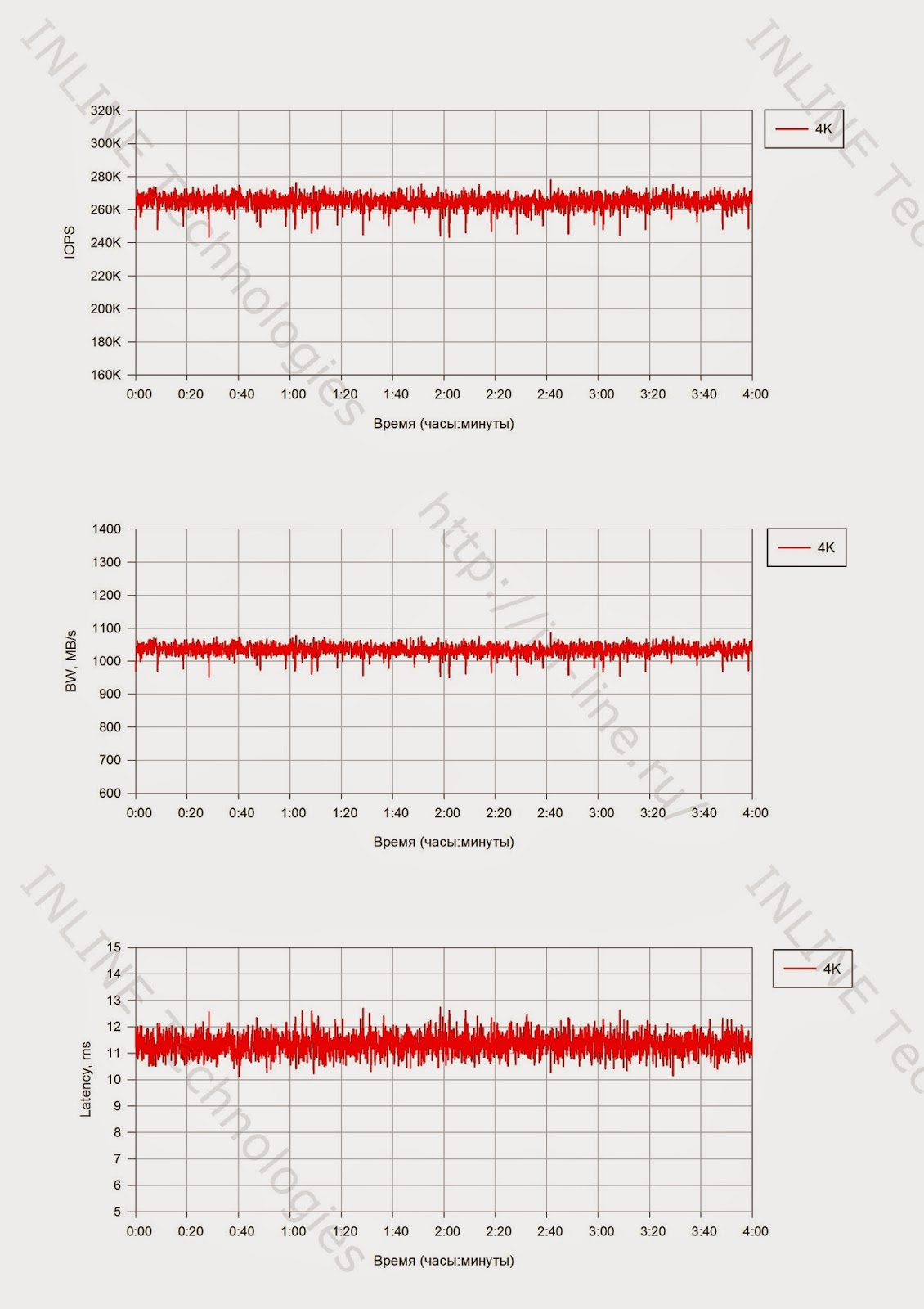

- Test 1. Write test on a fully allocated storage system. The total size of the presented LUNs equals the effective capacity of the storage system; the test lasts 12 hours;

- Test 2. Write test on a storage system allocated to 80%. The total size of the presented LUNs is 80% of the effective capacity; the test lasts 5 hours;

- Test 3. Read test on a fully allocated storage system. The test lasts 4 hours;

- Test 4. Write tests with varying block size (4, 8, 16, 32, 64, 1024 KiB) on a fully allocated storage system; each test lasts 2 hours.

From the test results, using the data output by the vxstat command, graphs are built that combine the results of two tests:

- IOPS as a function of time;

- latency as a function of time.

The data is analyzed and conclusions are drawn about:

- whether performance degrades under long-term write and read load;

- how much the allocated share of storage capacity affects performance;

- the performance of the storage service processes (garbage collection), which limits the write performance of the disk array under a long peak load;

- how much the I/O block size affects the performance of the storage service processes;

- the amount of space the storage system reserves to offset the impact of service processes.

Group 2: Disk array performance tests with different types of load, executed at the block device level.

During testing, the following types of load are investigated:

- load profiles (fio parameters rw and rwmixread):

  - random write 100%;

  - random write 30%, random read 70%;

  - random read 100%.

- block sizes: 1KB, 8KB, 16KB, 32KB, 64KB, 1MB (fio parameter blocksize);

- I/O processing methods: synchronous, asynchronous (fio parameter ioengine);

- number of load-generating processes: 1, 2, 4, 8, 16, 32, 64, 128 (fio parameter numjobs);

- queue depth (for asynchronous I/O): 32, 64 (fio parameter iodepth).
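The load types listed above multiply into a sizeable test matrix. The sketch below enumerates it, assuming that queue depth applies only to asynchronous runs; the engine names `sync` and `libaio` are assumptions, since the article names only the ioengine parameter and not its values. Block sizes are taken exactly as listed.

```python
from itertools import product

# fio settings for each load profile.
PROFILES = {
    "random write 100%": {"rw": "randwrite"},
    "random read 70% / write 30%": {"rw": "randrw", "rwmixread": 70},
    "random read 100%": {"rw": "randread"},
}
BLOCK_SIZES = ["1k", "8k", "16k", "32k", "64k", "1m"]  # as listed in the article
NUMJOBS = [1, 2, 4, 8, 16, 32, 64, 128]
QUEUE_DEPTHS = [32, 64]        # asynchronous I/O only
SYNC_ENGINE = "sync"           # assumed engine name for synchronous I/O
ASYNC_ENGINE = "libaio"        # assumed engine name for asynchronous I/O


def load_matrix():
    """Yield one fio parameter set per combination of load types."""
    for profile, bs, jobs in product(PROFILES.values(), BLOCK_SIZES, NUMJOBS):
        base = {**profile, "blocksize": bs, "numjobs": jobs}
        # Synchronous I/O has one request in flight per process.
        yield {**base, "ioengine": SYNC_ENGINE, "iodepth": 1}
        for depth in QUEUE_DEPTHS:
            yield {**base, "ioengine": ASYNC_ENGINE, "iodepth": depth}


matrix = list(load_matrix())
print(len(matrix))  # 3 profiles * 6 block sizes * 8 job counts * 3 I/O modes = 432
```

A matrix of this size explains why the runs and the pauses between them have to be automated.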

A group consists of a set of tests covering all possible combinations of the load types above. To reduce the impact of garbage-collection service processes on the results, a pause is inserted between tests. Its length equals the amount of data written during the test divided by the performance of the storage service processes (determined from the first group of tests).
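The pause rule can be written as a one-line formula: pause = data written / service-process throughput. The sketch below applies it to made-up numbers; the 700 MB/s write rate and the 350 MB/s garbage-collection rate are purely hypothetical and are not measurements from this article.

```python
def pause_seconds(bytes_written, gc_bytes_per_second):
    """Pause that lets service processes work through the data written by a test."""
    if gc_bytes_per_second <= 0:
        raise ValueError("service-process throughput must be positive")
    return bytes_written / gc_bytes_per_second


# Hypothetical example: a 10-minute test writing at 700 MB/s,
# with service processes sustaining 350 MB/s.
written = 600 * 700e6          # bytes written during the test
pause = pause_seconds(written, 350e6)
print(f"pause between tests: {pause:.0f} s")
```

In this example the pause is twice as long as the test, because the data arrives twice as fast as the service processes can handle it.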

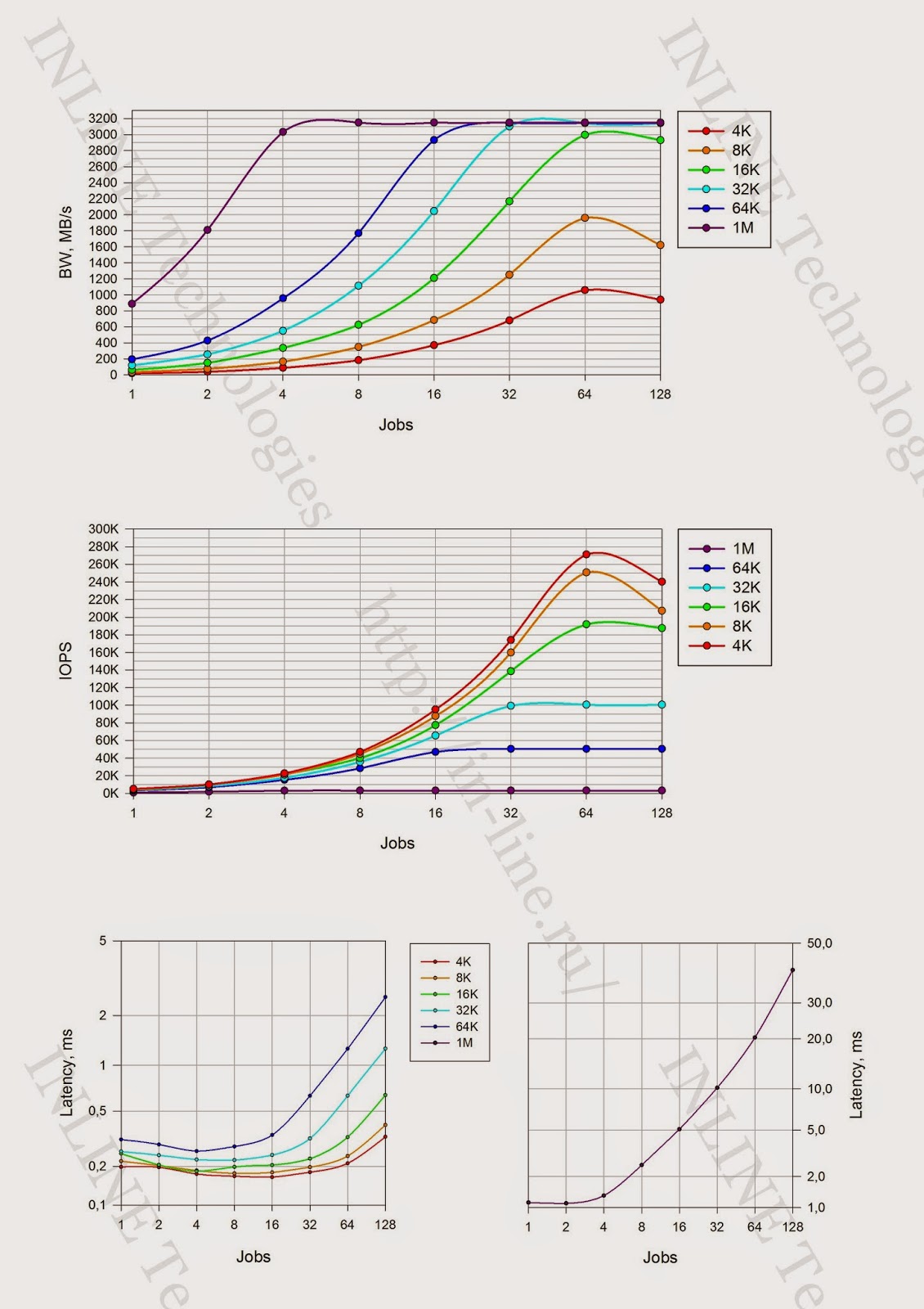

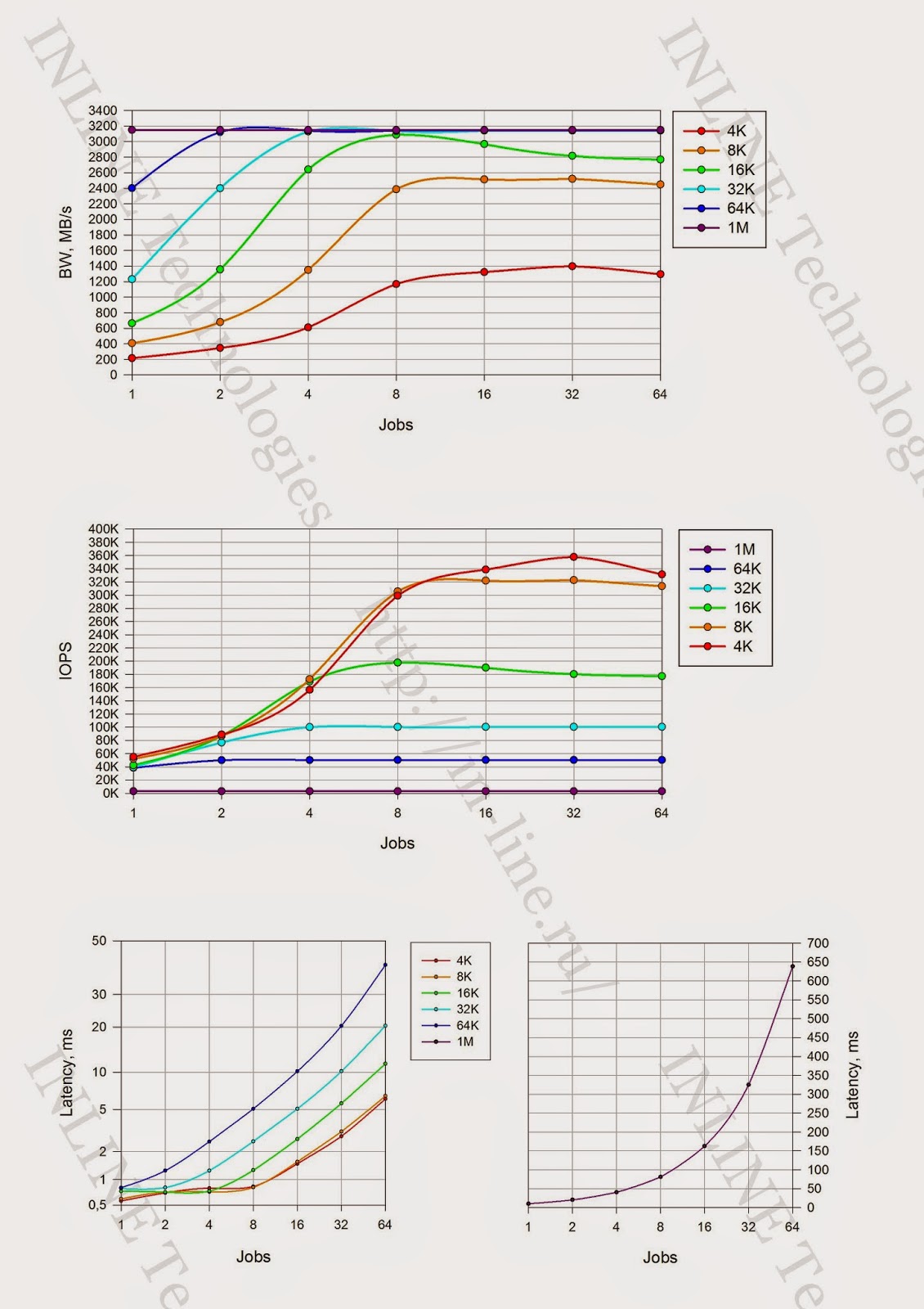

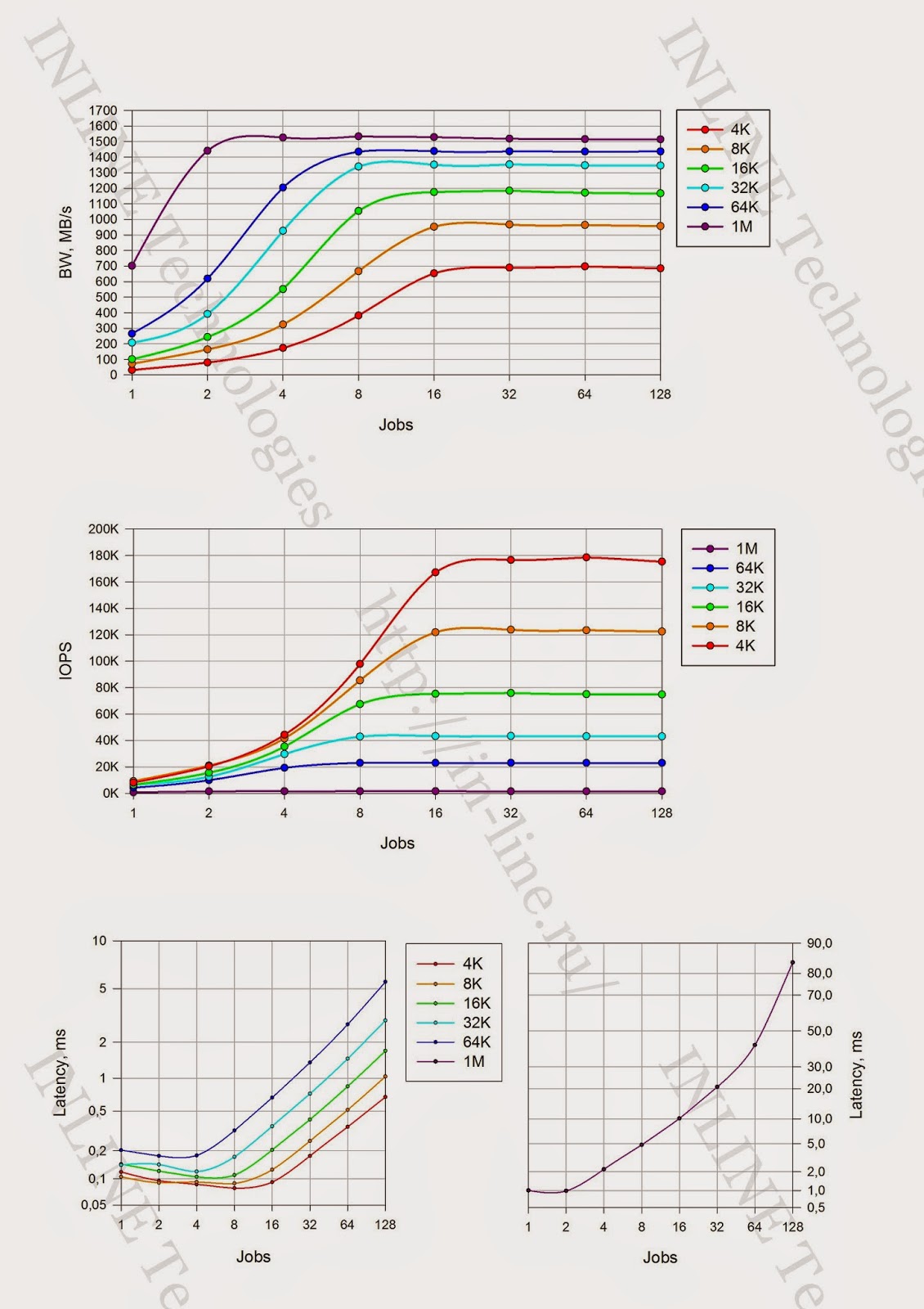

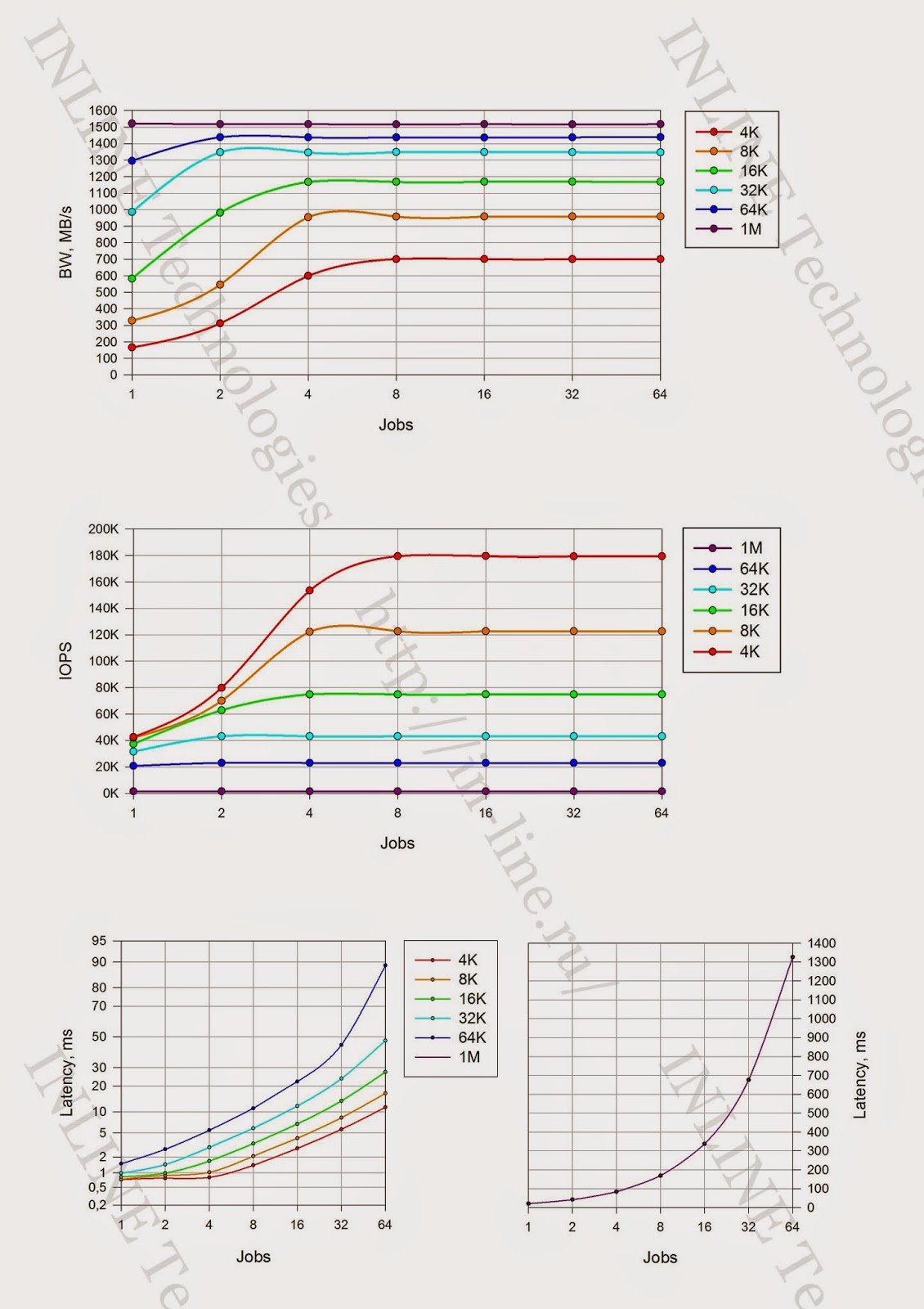

When each test completes, graphs are built from the fio output for each combination of load profile, I/O processing method and queue depth. Each graph combines the tests with different I/O block sizes:

- IOPS as a function of the number of load-generating processes;

- bandwidth as a function of the number of load-generating processes;

- latency (clat) as a function of the number of load-generating processes.

The results are analyzed and conclusions are drawn about the load characteristics of the disk array at latency < 1 ms.

Test results

Unfortunately, the scope of the article does not allow us to present all of the data obtained, but we will certainly show the key results.

Group 1: Tests that implement long-term load.

1. Under a long write load, a significant degradation of storage performance is observed, caused by the start of garbage collection (GC) processes. The performance measured while the GC processes are running can be regarded as the maximum average performance of the disk array.

Change in I/O rate (IOPS) during long-term 4K writes

Change in latency during long-term 4K writes

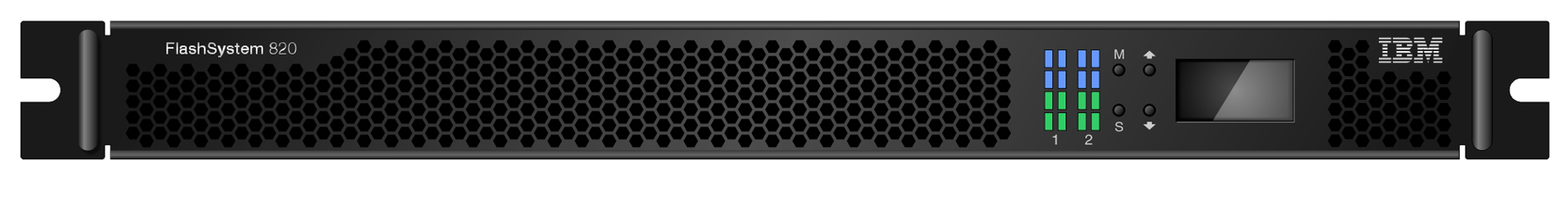

2. Under a long read load, the performance of the disk array does not change over time.

Change in performance during long-term reads with a 4K block

3. The storage fill level does not affect the operation of the disk array.

Change in I/O rate (IOPS) and latency during long-term writes at 100% and 80% storage fill

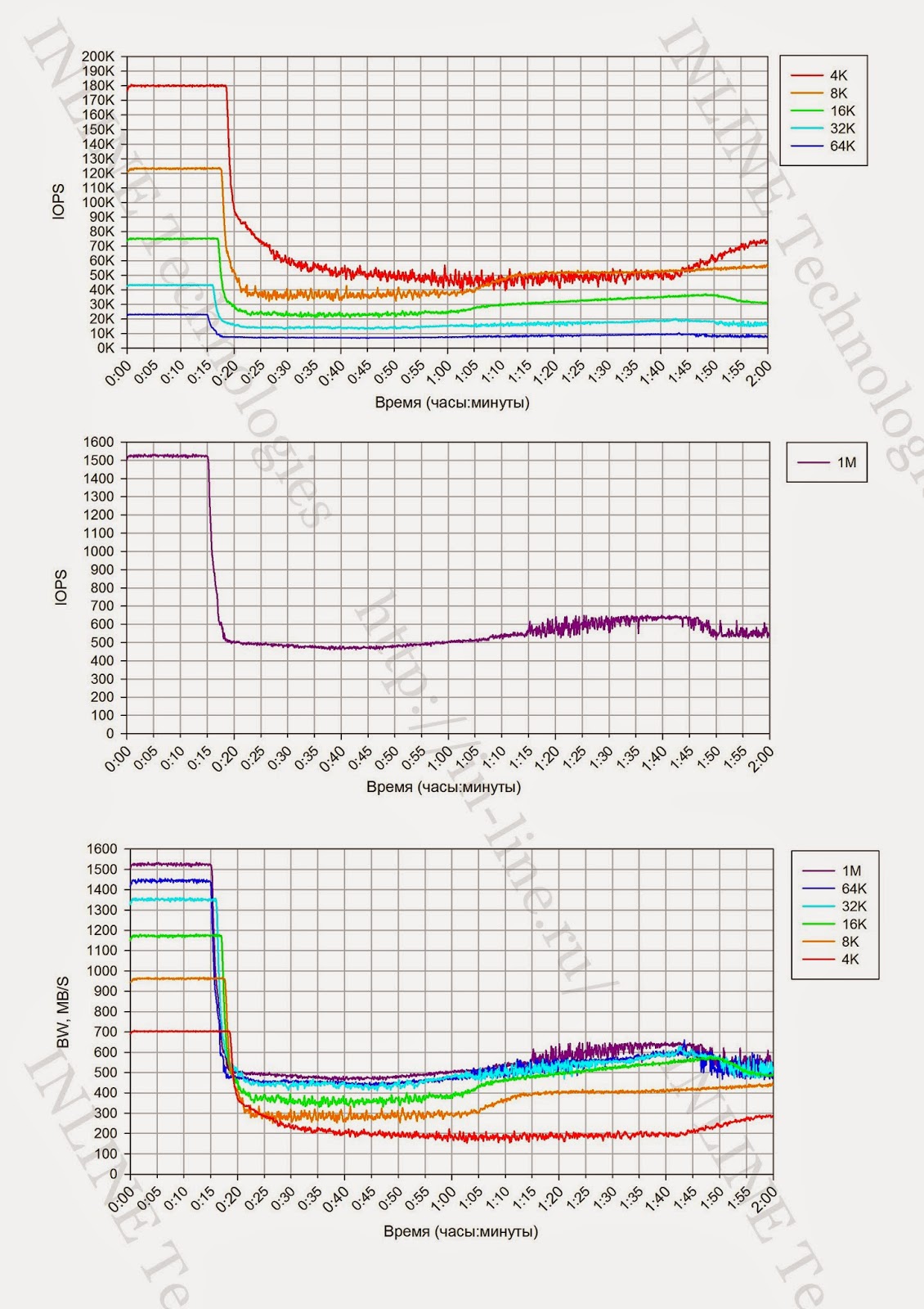

4. The block size affects performance under a long-term write load: it changes both the point at which storage performance drops sharply and the amount of data written before that point.

Change in I/O rate (IOPS) and data transfer rate (bandwidth) during long-term writes with various block sizes

Dependence of storage performance on block size under long-term write load

Group 2: Disk array performance tests with different types of load, executed at the block device level.

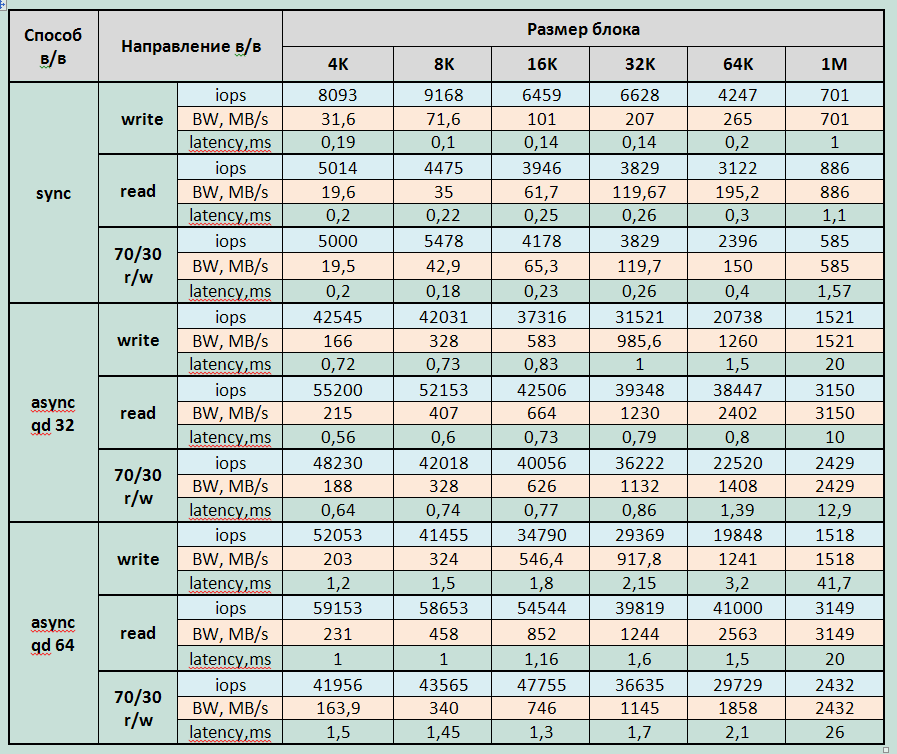

Block device performance tables.

Storage performance with one load-generating process (jobs = 1)

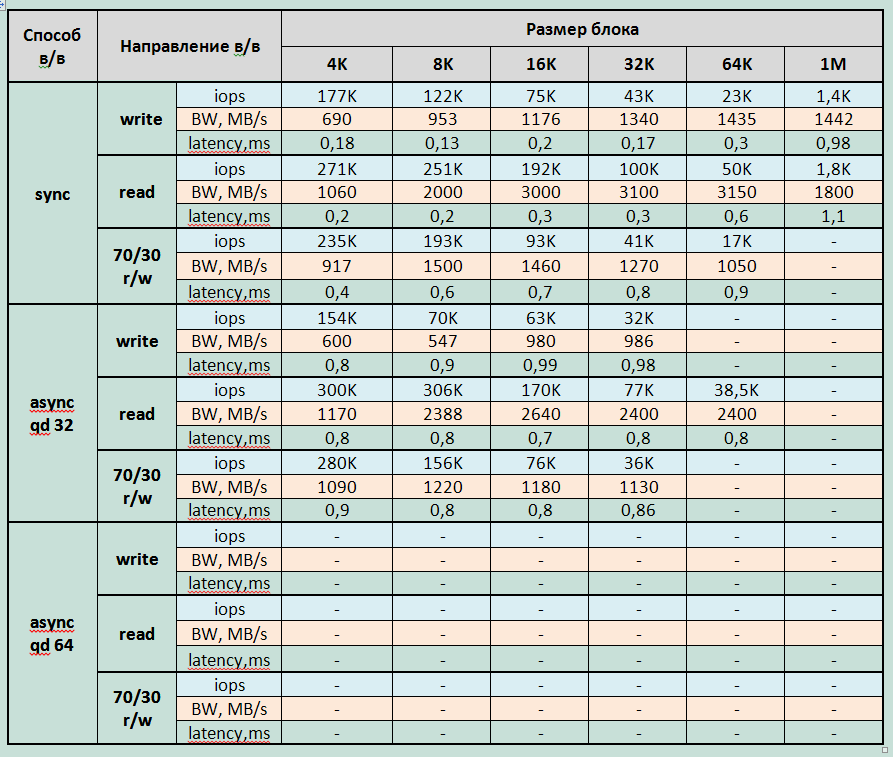

Maximum storage performance with latency below 1 ms

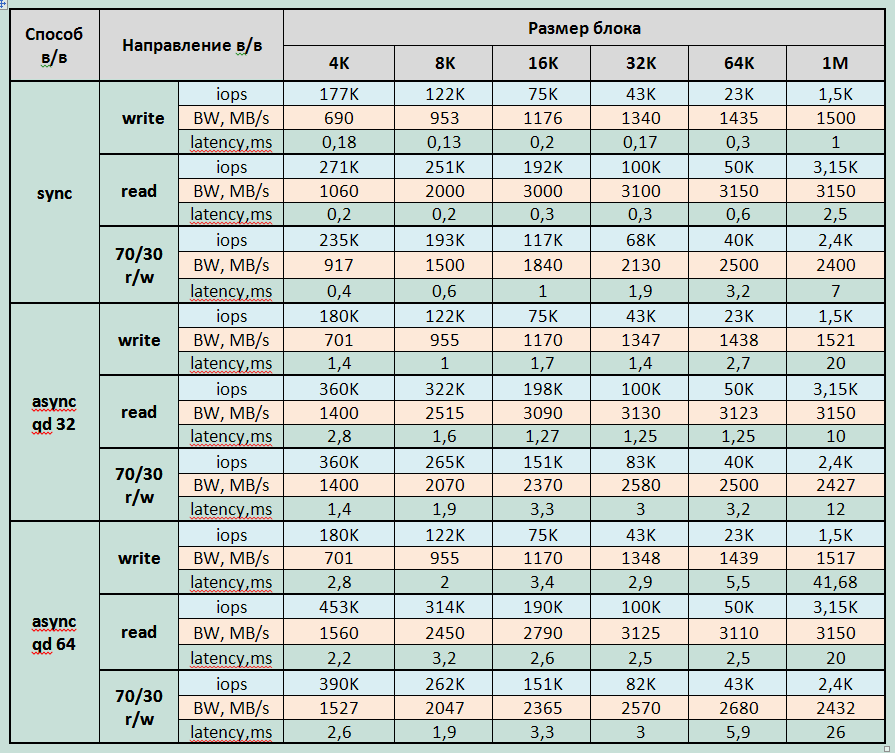

Maximum storage performance under different load profiles

Block device performance graphs.

The graphs cover three load profiles (random read, random write, and mixed load with 70% read and 30% write), each measured with synchronous I/O and with asynchronous I/O at queue depths of 32 and 64.

Maximum recorded performance figures for the FlashSystem 820:

Write:

- 180,000 IOPS at 0.2 ms latency (4KB block, sync);

- Bandwidth: 1520 MB/s with a 1MB block.

Read:

- 306,000 IOPS at 0.8 ms latency (8KB block, async, qd 32);

- 453,000 IOPS at 2.2 ms latency (4KB block, async, qd 64);

- Bandwidth: 3150 MB/s with a 1MB block.

Mixed load (70/30 rw):

- 280,000 IOPS at 0.8 ms latency (4KB block, async, qd 32);

- 390,000 IOPS at 2.6 ms latency (4KB block, async, qd 64);

- Bandwidth: 2430 MB/s with a 1MB block.

Minimum recorded latency:

- Write: 0.08 ms for a 4K block, jobs = 8, at 100,000 IOPS;

- Read: 0.166 ms for a 4K block, jobs = 16, at 95,000 IOPS.

Once the system reaches saturation under read load, storage performance decreases as the load grows further (probably because of the overhead the storage system incurs in processing large I/O queues).
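As a rough cross-check of the figures above (this is not part of the original test procedure), Little's law relates throughput and latency to the number of requests in flight: in flight = IOPS × latency. The sketch below applies it to the reported asynchronous results. It treats the reported latency as the full request latency, which is an approximation, since fio's clat excludes submission time.

```python
def outstanding_ios(iops, latency_ms):
    """Little's law: average number of requests in flight."""
    return iops * latency_ms / 1000.0


# (label, IOPS, latency in ms, queue depth) taken from the results above.
RESULTS = [
    ("read, 8KB, qd 32", 306_000, 0.8, 32),
    ("read, 4KB, qd 64", 453_000, 2.2, 64),
    ("mixed 70/30, 4KB, qd 32", 280_000, 0.8, 32),
    ("mixed 70/30, 4KB, qd 64", 390_000, 2.6, 64),
]

for label, iops, latency_ms, depth in RESULTS:
    in_flight = outstanding_ios(iops, latency_ms)
    # Dividing by the queue depth estimates how many processes were generating load.
    print(f"{label}: ~{in_flight:.0f} in flight, ~{in_flight / depth:.1f} processes")
```

The estimates land near 8 processes at queue depth 32 and near 16 at queue depth 64, both of which are values in the numjobs series of the test program. The same relation also shows why pushing more requests into a saturated system mainly raises latency: if IOPS cannot grow, the product can only be balanced by a longer wait.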

Findings

Judging by subjective impressions and the sum of the measurements, the FlashSystem 820 proved to be an excellent working tool. Our results generally match the figures declared by the manufacturer. The only significant difference is lower write performance, which can be explained by the different test bench configurations: we used RAID-5, while IBM most likely used the standard RAID-0 algorithm.

The drawbacks are, perhaps, the lack of additional features that are standard for an enterprise storage system, such as snapshots, replication and deduplication. On the other hand, these are only extras and are far from always necessary.

I hope you found this as interesting as I did.

Soon I will write about the Violin Memory 6000 and HDS HUS VM. Several more systems from different manufacturers are at various stages of testing. If circumstances allow, I hope to share their results with you soon.

P.S. The author cordially thanks Pavel Katasonov, Yuri Rakitin and all the other company employees who took part in preparing this material.

Source: https://habr.com/ru/post/227887/
