Application Porno, or how to find secrets in mobile applications and walk away with everything

I recently came across a curious study in my news feed: its authors downloaded the Google Play catalog for Android and analyzed hundreds of thousands of applications for hardcoded secret tokens and passwords.

The fact that the result of their work concerned only the analysis of the decompiled code for Android cpodvig me to write about the study, which I conducted a year ago, and not only for Android, but also for iOS applications, and which, as a result, resulted in a whole online tool , which I will discuss at the very end, when its meaning becomes obvious. A part of what was written below was presented at the ZeroNights conference and in the pages of Hacker magazine. (Since the material was not published online, the editors gave the go-ahead, for publication here). So let's go.

Much has been written about manual analysis of mobile applications, test methods have been developed, and checklists have been compiled. However, most of these checks concern the user's security: how his data is stored, how it is transmitted, and how it can be accessed using application vulnerabilities.

But why would an attacker dig into how the application works on a specific user’s device if it is possible to try to attack the server side and divert the data of ALL users? How can a mobile application be useful for attacking directly the cloud infrastructure of this application? And how about taking and analyzing thousands or, even better, tens of thousands of applications, checking them for typical bugs - stitched tokens, authentication keys and other secrets?

')

Since the link from the introduction is exhaustively written about GooglePlay, then my story, for interest, will be about the App Store, more specifically about iOS applications. How to implement an automatic download from the AppStore, the theme for a separate large article, I can only say that it is an order of magnitude more time-consuming task than the “rocking” for Google Play. But with tears, blood, sniffer and python, still solved :)

In articles where the issue of distribution of iOS applications is raised, they write that:

Behind all these statements is the fact that in the distribution package of the application (which is a simple ZIP archive), the compiled code is encrypted with binding to the device. All other content exists in the clear.

The first thing that comes to mind (authorization tokens, keys, and the like) is the strings and grep tools. It is not suitable for automation. Stupid search for strings creates such a quantity of garbage that requires manual parsing that automation loses all meaning.

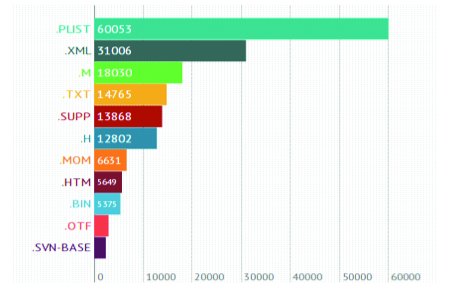

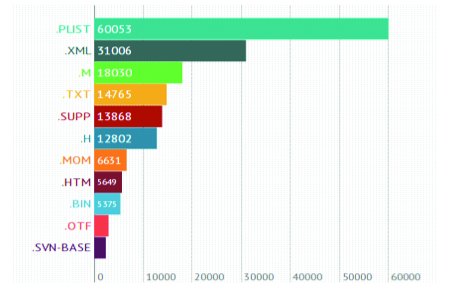

To write an acceptable automatic analysis system, you need to carefully look at what the distribution kit consists of. Having unpacked the distributions for ~ 15,000 applications and discarding the deliberate garbage (pictures, audio, video, resources), we get 224,061 files of 1396 types.

* .m and * .h (source) - this, of course, is interesting, but do not forget about configs, or more precisely, XML, PList and SQLite containers. Accepting this simplification, we will build TOP types of popularity that we are interested in. The total number of files of interest to us is 94,452, which is 42% of the original.

The application, which we conditionally call normal, consists of:

Total, the task is reduced to two:

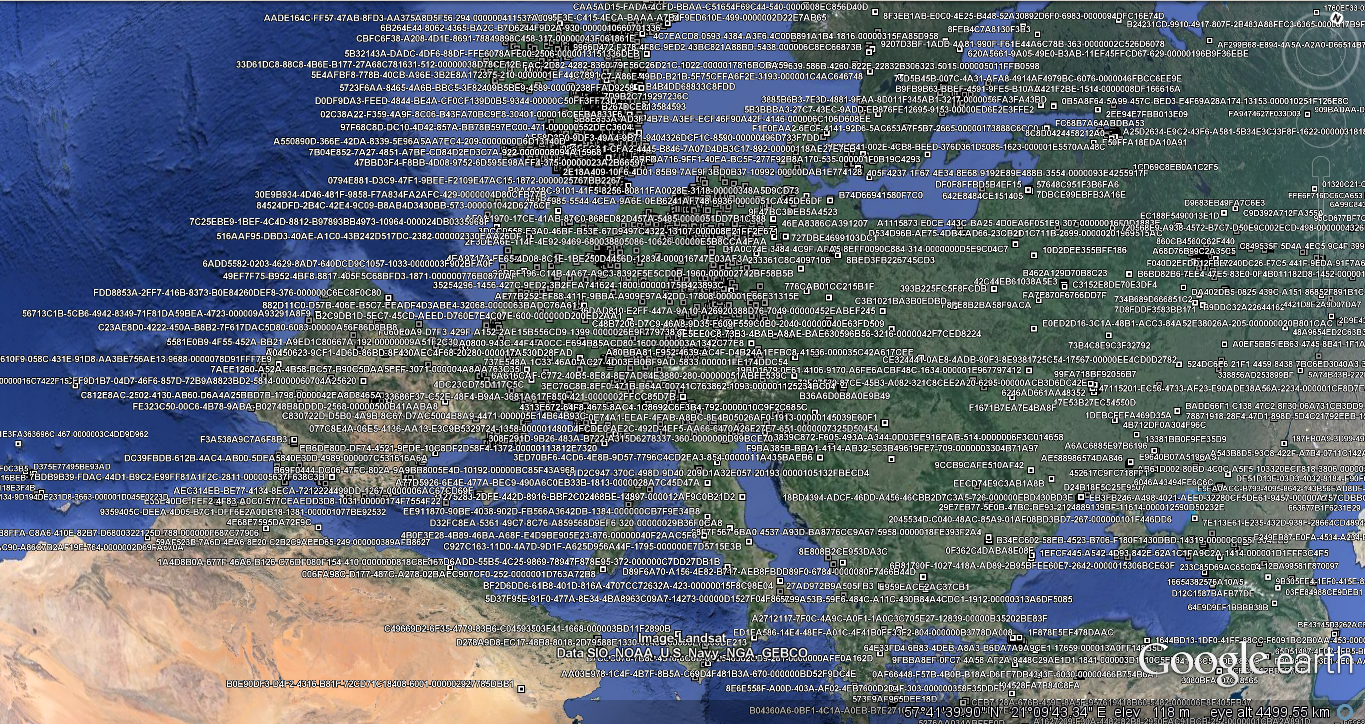

Apparently, for many developers it is not obvious that the published application becomes public. So, periodically there are Oauth tokens of twitter and other popular services. An indicative case was an application that collected contacts, photos, geolocation and deviceID of users and saved them in the Amazon cloud, and yes - using a token that was wired into one of the PList files. Using this token, you can easily merge data on all users and monitor the movement of devices in real time.

For some reason, an important circumstance is left without attention: libraries that allow flexible management of push notifications (for example, UrbanAirship). The manuals clearly state that in no case does the master secret (with which the server side of the application sends pushy) be stored in the application. But master secrets still occur. That is, I can send a notification to all users of the application.

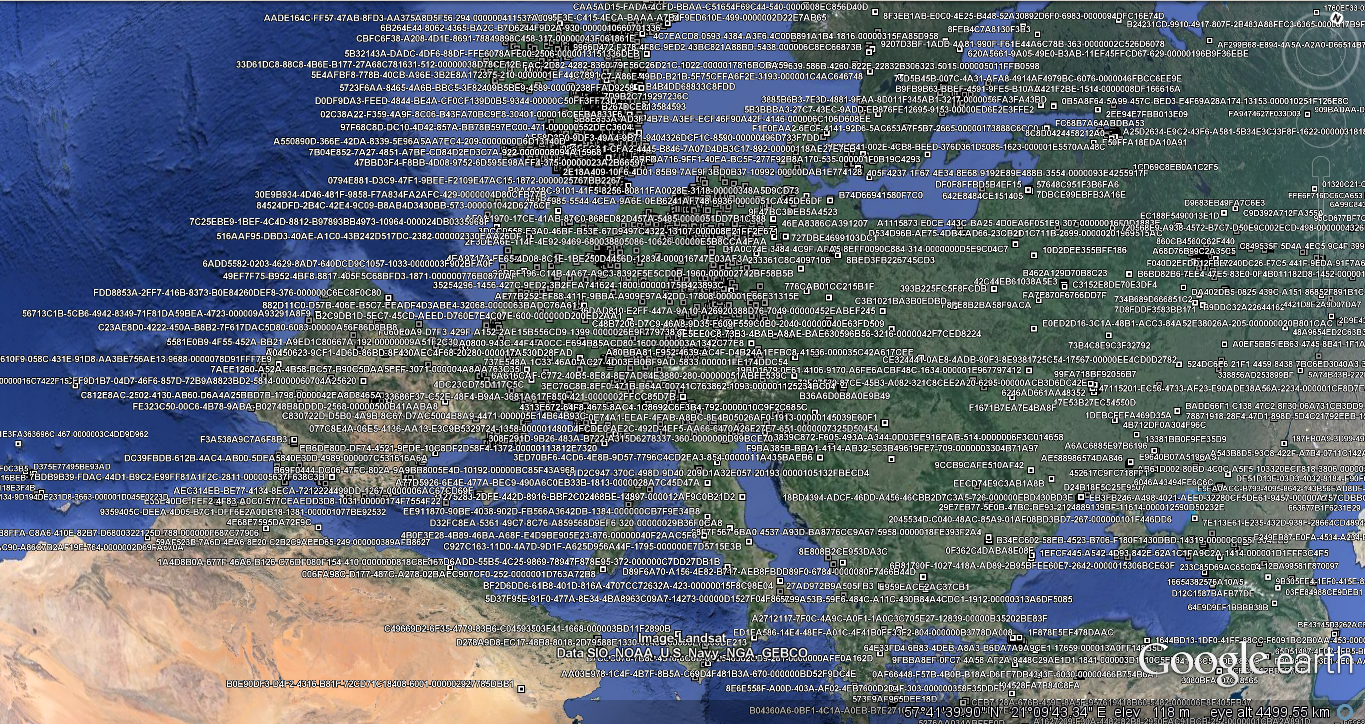

You should not overlook the various artifacts of the testing and development process, that is, links to debugging interfaces, version control systems, and links to dev environments. This information can be extremely interesting to attackers, as it includes SQL dumps of databases with real-life users. Developers, as a rule, do not deal with the security of the test environment (leaving, for example, the default passwords), while often using real user data for better testing. An outstanding find was the script through which (without authentication) push notifications could be sent to all users of the application.

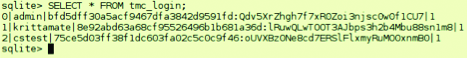

The fact that the distributions contain information about the test environment and information about version control systems is expected. But some things continue to amaze:

Detection of the content described above is explainable in principle, but in applications you can periodically find unexplainable things, for example, a PKCS container with a developer certificate ... and a private key to it

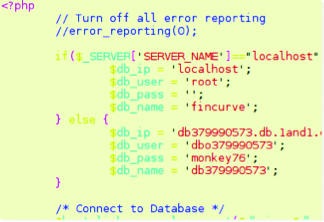

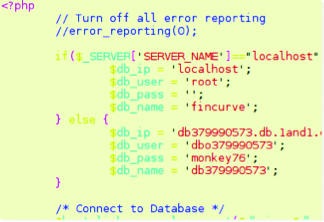

Or pieces of PHP-code with logins, passwords to the database.

... and my favorite is the working client OpenVPN config.

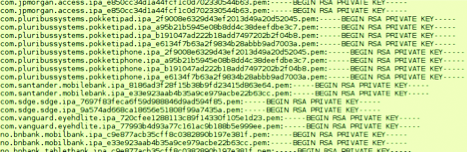

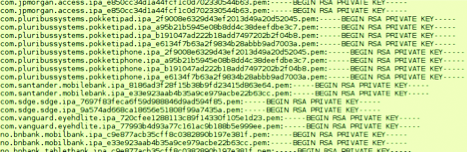

And also not encrypted private keys "of all varieties and colors" (c)

As if in its essence, the issue of licensing was not controversial, but he found his place here. Many developers use framework code in their programs that are licensed under the GPL, which requires code disclosure. And how the GPL works with paid and free apps on the App Store is a question that can create room for the patent trolls to maneuver.

In total, we have thousands of applications that contain the described “jambs”, but the developers are not particularly in a hurry to do something about it. What is the problem here? On the githaba there are a lot of super constructions for auditing application security, but in order to work with them, the developer needs:

this creates a situation in which wealthy corporations, which are very worried about their brand or financial structures, and holding tightly to the “gills” of regulators, can afford a safe development. And the number of unsafe applications is growing.

The answer to all these factors was the emergence of HackApp , a tool that provides basic analysis of application security and develops in accordance with the principles:

Now HackApp exists in 2 versions: basic and Pro (with a paid subscription), but that's another story.

The fact that their work covered only decompiled Android code prompted me to write about a study I conducted a year ago, which covered not only Android but also iOS applications and eventually grew into a full-fledged online tool. I will describe the tool at the very end, once its purpose becomes obvious. Part of what follows was presented at the ZeroNights conference and published in Hacker magazine. (Since the material never appeared online, the editors gave the go-ahead to publish it here.) So, let's go.

Target - Stores

Much has been written about manual analysis of mobile applications: testing methods have been developed and checklists compiled. However, most of these checks concern the security of the individual user: how their data is stored, how it is transmitted, and how it can be reached through vulnerabilities in the application.

But why would an attacker dig into how the application works on one particular user's device when they can try to attack the server side and siphon off the data of ALL users? How can a mobile application help in attacking the cloud infrastructure behind it directly? And what about taking thousands or, better still, tens of thousands of applications and checking them for typical flaws: hardcoded tokens, authentication keys and other secrets?

Since the study mentioned in the introduction covers Google Play exhaustively, my story will, for variety, be about the App Store, or more precisely about iOS applications. How to automate downloading from the App Store is a topic for a separate long article; I will only say that the task is an order of magnitude more laborious than building a downloader for Google Play. Still, with blood, tears, a sniffer and Python, it was solved :)

Articles that touch on the distribution of iOS applications usually claim that:

- The application is encrypted

- The application is protected by DRM

- The installed application is bound to the device

Behind all these statements is a single fact: in the application's distribution package (which is a plain ZIP archive), the compiled code is encrypted and bound to the device. All other content is stored in the clear.
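
Because the package is an ordinary ZIP archive, its unencrypted contents can be listed with standard tools. Below is a minimal sketch in Python, assuming a locally available package file. The function name `list_candidate_files` and the `MEDIA_EXTENSIONS` set are illustrative assumptions, not part of the original study.

```python
import zipfile
from pathlib import PurePosixPath

# Assumption: extensions treated as media/resources and skipped.
# The real filter used in the study is not described in the article.
MEDIA_EXTENSIONS = {
    ".png", ".jpg", ".jpeg", ".gif", ".mp3", ".wav",
    ".caf", ".m4a", ".mp4", ".mov", ".nib", ".ttf",
}


def list_candidate_files(package_path):
    """Return names of archive members that are neither directories nor media."""
    with zipfile.ZipFile(package_path) as archive:
        return [
            info.filename
            for info in archive.infolist()
            if not info.is_dir()
            and PurePosixPath(info.filename).suffix.lower() not in MEDIA_EXTENSIONS
        ]
```

Calling `list_candidate_files("SomeApp.ipa")` (a hypothetical file name) would return the paths of configs, databases and anything else left in the package after the media is filtered out.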

Where to begin?

The first things that come to mind for finding authorization tokens, keys and the like are the strings and grep tools. They are not suitable for automation: a naive string search produces so much garbage requiring manual review that automation loses all meaning.

To build a usable automatic analysis system, you need to look carefully at what a distribution package consists of. After unpacking the packages of ~15,000 applications and discarding the obviously irrelevant files (images, audio, video, resources), we are left with 224,061 files of 1,396 types.

*.m and *.h files (source code) are interesting, of course, but do not forget the configs, or more precisely the XML, PList and SQLite containers. With this simplification, we rank the file types of interest by popularity. The total number of files of interest is 94,452, which is 42% of the original set.
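
A ranking of file types like the one described can be sketched with a simple counter over file extensions. This is an illustration only: the function name `count_file_types` is an assumption, and grouping by extension is a simplification of whatever type detection the study actually used.

```python
from collections import Counter
from pathlib import PurePosixPath


def count_file_types(file_names):
    """Count files per lowercase extension (a rough proxy for file type)."""
    counts = Counter()
    for name in file_names:
        suffix = PurePosixPath(name).suffix.lower() or "(no extension)"
        counts[suffix] += 1
    return counts


# Share of interesting files reported in the article: 94,452 of 224,061.
share_percent = round(94_452 / 224_061 * 100)
```

`count_file_types(names).most_common(10)` then gives the ten most frequent extensions.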

An application that we will provisionally call normal consists of:

- media content: images, audio, interface resources

- compiled code (which is encrypted)

- data containers: SQLite, XML, PList, BPList

- junk that ended up in the distribution for no apparent reason

In total, the task comes down to two things:

- A recursive search for various secrets in SQLite, XML and PList files

- A search for any "unusual" junk and private keys
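
The first of these tasks can be sketched for PList files with Python's standard `plistlib`, which reads both the XML and the binary format. The key pattern `SUSPICIOUS_KEY` and the function names are assumptions chosen for illustration; a real scanner would need a much richer set of rules.

```python
import plistlib
import re

# Assumption: key names that hint at a secret. Purely illustrative.
SUSPICIOUS_KEY = re.compile(r"secret|token|passw|api[_-]?key", re.IGNORECASE)


def find_secrets(node, path=""):
    """Recursively yield (path, value) for strings stored under suspicious keys."""
    if isinstance(node, dict):
        for key, value in node.items():
            child_path = f"{path}/{key}"
            if isinstance(value, str) and SUSPICIOUS_KEY.search(str(key)):
                yield child_path, value
            else:
                yield from find_secrets(value, child_path)
    elif isinstance(node, list):
        for index, value in enumerate(node):
            yield from find_secrets(value, f"{path}[{index}]")


def scan_plist(data):
    """Parse XML or binary plist bytes and return the suspicious entries."""
    return list(find_secrets(plistlib.loads(data)))
```

Matching on key names rather than on arbitrary strings is what keeps the noise down compared with a plain strings and grep pass, at the cost of missing secrets stored under innocuous names.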

Keep this token in secret

Apparently, it is not obvious to many developers that a published application becomes public. As a result, OAuth tokens for Twitter and other popular services turn up from time to time. One telling case was an application that collected users' contacts, photos, geolocation and device IDs and stored them in the Amazon cloud using, yes, a token hardcoded in one of its PList files. With this token, anyone could easily dump the data of all users and track device movements in real time.

For some reason, one important circumstance goes unnoticed: libraries that provide flexible management of push notifications (for example, UrbanAirship). The manuals state clearly that the master secret (which the server side of the application uses to send push notifications) must never be stored in the application. Yet master secrets still turn up. In other words, I could send a notification to every user of the application.

TEST-DEV

Do not overlook the various artifacts of the testing and development process: links to debugging interfaces, version control metadata, and links to dev environments. This information can be extremely interesting to attackers, since it sometimes includes SQL database dumps with real users in them. Developers, as a rule, do not bother with the security of the test environment (leaving default passwords in place, for example), yet they often use real user data to make testing more realistic. One outstanding find was a script that allowed push notifications to be sent to all users of the application without any authentication.
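
Artifacts of this kind are often recognizable from file paths alone. The sketch below flags version control metadata and SQL dumps in a list of package paths; the directory and extension sets, and the function name `find_dev_artifacts`, are assumptions made for illustration.

```python
from pathlib import PurePosixPath

# Assumptions: which names count as development artifacts.
VCS_DIRECTORIES = {".git", ".svn", ".hg"}
DUMP_EXTENSIONS = {".sql"}


def find_dev_artifacts(file_names):
    """Return (path, reason) pairs for files that look like dev leftovers."""
    hits = []
    for name in file_names:
        path = PurePosixPath(name)
        if VCS_DIRECTORIES.intersection(path.parts):
            hits.append((name, "version control metadata"))
        elif path.suffix.lower() in DUMP_EXTENSIONS:
            hits.append((name, "SQL dump"))
    return hits
```

Links to debugging interfaces and dev environments live inside file contents rather than in file names, so they would need a separate content check.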

Tap to enter

It is to be expected that distributions contain information about test environments and version control systems. But some things still amaze:

- SQLite databases with service credentials

- A business card application with client authentication

- A private key for signing transactions
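
For the SQLite case, a first-pass check can look at the schema before touching any data: column names often give credentials away. Below is a minimal sketch using the standard `sqlite3` module; the `SUSPICIOUS_COLUMN` pattern and the function name are assumptions for illustration.

```python
import re
import sqlite3

# Assumption: column names that hint at stored credentials.
SUSPICIOUS_COLUMN = re.compile(r"passw|secret|token|login|credential", re.IGNORECASE)


def suspicious_columns(db_path):
    """Return (table, column) pairs whose column names hint at credentials."""
    hits = []
    connection = sqlite3.connect(db_path)
    try:
        tables = [
            row[0]
            for row in connection.execute(
                "SELECT name FROM sqlite_master WHERE type = 'table'"
            )
        ]
        for table in tables:
            # PRAGMA table_info returns one row per column; index 1 is its name.
            quoted = table.replace('"', '""')
            for column in connection.execute(f'PRAGMA table_info("{quoted}")'):
                if SUSPICIOUS_COLUMN.search(column[1]):
                    hits.append((table, column[1]))
    finally:
        connection.close()
    return hits
```

Any hit still needs a look at the actual rows, since a column called `token` may well be empty in the shipped database.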

What is it doing here?!

Finding the content described above can, in principle, be explained. But from time to time applications contain inexplicable things, for example a PKCS container with a developer certificate... and the private key for it.

Or pieces of PHP code with database logins and passwords.

...and my favorite: a working OpenVPN client config.

And also unencrypted private keys "of all varieties and colors" (c)
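
Private keys stored in PEM form are easy to spot because of their text markers. The sketch below finds PEM private key blocks in raw bytes and reports whether each one is visibly encrypted; the function name `find_private_keys` is an assumption. Note that for OpenSSH-format keys the encryption status is not visible in the header, so `False` there only means "not determined by this check".

```python
import re

# Matches "-----BEGIN <label> PRIVATE KEY-----" ... "-----END <label> PRIVATE KEY-----",
# where the optional label is e.g. "RSA ", "EC " or "ENCRYPTED ".
PEM_PRIVATE_KEY = re.compile(
    rb"-----BEGIN ((?:[A-Z0-9]+ )*)PRIVATE KEY-----(.*?)-----END \1PRIVATE KEY-----",
    re.DOTALL,
)


def find_private_keys(data):
    """Return (label, is_encrypted) for each PEM private key block in data."""
    results = []
    for match in PEM_PRIVATE_KEY.finditer(data):
        label = (match.group(1) + b"PRIVATE KEY").decode("ascii")
        body = match.group(2)
        # PKCS#8 marks encryption in the label; legacy OpenSSL PEM uses a header.
        encrypted = label.startswith("ENCRYPTED") or b"Proc-Type: 4,ENCRYPTED" in body
        results.append((label, encrypted))
    return results
```

Binary containers, such as the PKCS file with a certificate and key mentioned above, carry no such markers and have to be detected by other means.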

What else besides secrets?

However contentious the licensing question may be in itself, it has a place here too. Many developers include framework code licensed under the GPL in their programs, and the GPL requires source code disclosure. How the GPL applies to paid and free apps in the App Store is a question that could give patent trolls room to maneuver.

Is there an App for That?

All in all, we have thousands of applications containing the blunders described above, yet developers are in no particular hurry to do anything about it. What is the problem? GitHub hosts plenty of sophisticated tools for auditing application security, but to use them a developer has to:

- Spend time and energy figuring out how the tool works, which is extra, unpaid work.

- Build and maintain infrastructure.

- If there are many applications, hire a separate full-time specialist to handle the first two items.

All this creates a situation in which secure development is affordable only for wealthy corporations that care deeply about their brand, or for financial institutions that regulators hold firmly by the gills. Meanwhile, the number of insecure applications keeps growing.

HackApp emerged as an answer to all of this: a tool that provides basic security analysis of applications and is developed according to the following principles:

- The report should not overload the developer with technical details (such as listings and traces); it should state clearly what needs to be fixed and where.

- It should not require investment in infrastructure (that is, no dedicated capacity on the client's side).

- It should offer an interface for automated interaction, so that it can be built into pre-release testing and become, from the standpoint of development integration, just another testing tool.

HackApp currently exists in two versions, basic and Pro (with a paid subscription), but that's another story.

Source: https://habr.com/ru/post/227747/