A primer on web performance

In any complex system, much of the optimization work consists of untangling the interactions between its layers, each of which has its own set of constraints. So far, we have examined a number of individual networking components in detail: the various physical delivery methods and the transport protocols. Now we can turn our attention to the broader, end-to-end picture of web performance optimization.

Optimizing the interactions between the layers of a system is not unlike solving a system of equations: each equation depends on the others and constrains the set of possible solutions for the system as a whole. There are no fixed rules or ready-made solutions. The individual components keep evolving: browsers are getting faster, user connectivity profiles are changing, web applications keep growing in scope and content, and the tasks we ask of them become more complex.

Therefore, before we dive into current best practices, it is important to take a step back and define what the problem really is: what a modern web application is, what tools we have at our disposal, how we measure web performance, and which parts of the system help or hinder our progress.


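Hypertext, web pages and web applications

As a result of the evolution of the Internet over the past few decades, we have ended up with three different approaches to presenting information online:

- hypertext documents;

- web pages with rich media content;

- interactive web applications.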

Admittedly, the line between the last two can be hard for the end user to see, but from a performance standpoint each of them calls for a different methodology, different metrics, and a different definition of performance.

Hypertext Documents

Hypertext is at the heart of the World Wide Web: plain text with some basic formatting features and support for hyperlinks.

By today's standards this may not seem impressive, but hypertext was enormously useful and laid the foundation for everything we use on the web today.

Web Pages

The HTML working group and the early browser vendors extended the capabilities of hypertext with support for additional media, such as images and audio. The result was the era of the web page: rich in media content and visual appeal, but light on interactivity. In this respect, web pages were not much different from a printed newspaper page.

Web Applications

The addition of JavaScript, and later the revolutions of Dynamic HTML (DHTML) and AJAX, shook up the industry once more and turned simple web pages into interactive web applications that could respond to the user directly in the browser. This paved the way for the first full-fledged browser applications, such as Outlook Web Access (the origin of XMLHTTP support in IE5). It was the beginning of a new era of complex dependencies between scripts, stylesheets, and markup.

An HTTP 0.9 session consisted of a single document request, which was perfectly sufficient for delivering hypertext: one document, one TCP connection, followed by connection close. Optimization was correspondingly simple: all that was required was to improve the performance of a single HTTP request over a short-lived TCP connection.

The arrival of web pages changed this formula. Instead of fetching a single document, the browser now had to fetch the document and its dependent resources. HTTP 1.0 introduced the notion of metadata (headers), and HTTP 1.1 extended it with a variety of performance-oriented directives, such as well-defined caching, keepalive, and more. As a result, multiple TCP connections are now in play, and the key performance metric is no longer the document load time but the page load time, commonly abbreviated PLT.

Finally, web pages gradually evolved into web applications, in which media content complements the primary content. To see the whole picture, consider how the pieces fit together: the HTML markup defines the skeleton and basic structure of the application, CSS lays out the elements within that structure, and scripts build an interactive application that responds to user input and may modify both the markup and the styles in the process.

Consequently, page load time, which used to be the decisive metric for analyzing web performance, is no longer sufficient. We are no longer just building pages; we are building dynamic, interactive web applications.

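Instead of PLT, we are now interested in the answers to the following questions:

- What are the main milestones in the loading of the application?

- When is the first interaction with the user required?

- Which interactions should the user engage in?

- What are the engagement and conversion rates for each user?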

The success of a performance optimization strategy depends directly on your ability to define and iterate on application-specific criteria and benchmarks. Nothing beats application-specific knowledge and measurements, especially when they are tied to your business goals.

DOM, CSSOM, and JavaScript

What exactly do we mean by the “complex dependencies between scripts, styles, and markup” in a modern web application? To answer this question, we need to look at the browser architecture and understand how markup, styles, and scripts come together to paint pixels on the screen.

Browser processing pipeline: HTML, CSS, and JavaScript

Parsing an HTML document constructs the Document Object Model (DOM). Alongside it there is an often-forgotten counterpart, the CSS Object Model (CSSOM), which is built from the specified stylesheets and style rules. The two combine to form the render tree, at which point the browser has enough information to paint something on the screen. So far, so good. Unfortunately, this is where we have to introduce our best friend and worst enemy: JavaScript. A script can issue a synchronous document.write, which blocks DOM parsing and construction. Similarly, scripts can query the computed style of any object, which means that JavaScript also blocks on CSS. As a result, the construction of the DOM and CSSOM is frequently intertwined: DOM construction cannot proceed while JavaScript is executing, and JavaScript cannot execute until the CSSOM is available. The performance of the application, especially the first load and the “time to render”, depends directly on how well these dependencies between markup, styles, and scripts are managed.

By the way, remember the popular rule “styles first, scripts after”?

Now you know why! Script execution is blocked on stylesheets, so deliver the CSS to the user as quickly as you can.
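To make these dependencies concrete, here is a toy TypeScript model of a document head. It is a sketch under heavy simplifying assumptions, not a description of how any browser works: stylesheets do not block the parser but block rendering and any later script; a classic script (no `async` or `defer`) blocks the parser until it has run and cannot run before the stylesheets above it have loaded. Download durations are given as inputs, parsing and execution take zero time, and the lookahead scanner that real browsers use to discover resources early is ignored. `PageResource`, `simulateHead`, and the file names are hypothetical.

```typescript
// Toy model of a document head; an illustration under stated assumptions,
// not a description of how any browser is implemented.
interface PageResource {
  kind: "css" | "script"; // "script" means a classic script (no async/defer)
  url: string;
  fetchMs: number; // download duration once the request is sent (assumed)
}

interface Timeline {
  scriptStartMs: Map<string, number>; // when each script starts executing
  firstRenderMs: number; // parser past the last resource and all CSS loaded
}

function simulateHead(resources: PageResource[]): Timeline {
  const scriptStartMs = new Map<string, number>();
  let parserMs = 0; // time at which the parser reaches the next tag
  let cssReadyMs = 0; // time by which all stylesheets seen so far have loaded
  for (const r of resources) {
    // A resource is requested as soon as the parser discovers it
    // (the lookahead scanner is ignored in this model).
    const fetchedAtMs = parserMs + r.fetchMs;
    if (r.kind === "css") {
      // Stylesheets do not block the parser, but they block rendering
      // and the execution of any script that follows them.
      cssReadyMs = Math.max(cssReadyMs, fetchedAtMs);
    } else {
      // A classic script blocks the parser until it has run, and it
      // cannot run before the preceding stylesheets (the CSSOM) are ready.
      const startMs = Math.max(fetchedAtMs, cssReadyMs);
      scriptStartMs.set(r.url, startMs);
      parserMs = startMs; // execution time assumed to be zero
    }
  }
  return { scriptStartMs, firstRenderMs: Math.max(parserMs, cssReadyMs) };
}

const mainCss: PageResource = { kind: "css", url: "main.css", fetchMs: 300 };
const appJs: PageResource = { kind: "script", url: "app.js", fetchMs: 100 };
console.log("stylesheet first:", simulateHead([mainCss, appJs]).firstRenderMs);
console.log("script first:", simulateHead([appJs, mainCss]).firstRenderMs);
```

With these made-up durations, app.js finishes downloading at 100 ms but waits for main.css until 300 ms in the stylesheet-first order, which renders at 300 ms; in the script-first order the script delays discovery of main.css and first render slips to 400 ms.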

What does a modern web application look like? The HTTP Archive can help us answer this question. The project tracks how the web is built by periodically crawling the most popular sites (300,000+ from the Alexa Top 1M) and recording and analyzing the number of resources used, content types, headers, and other metadata.

On average, a web application requires the following:

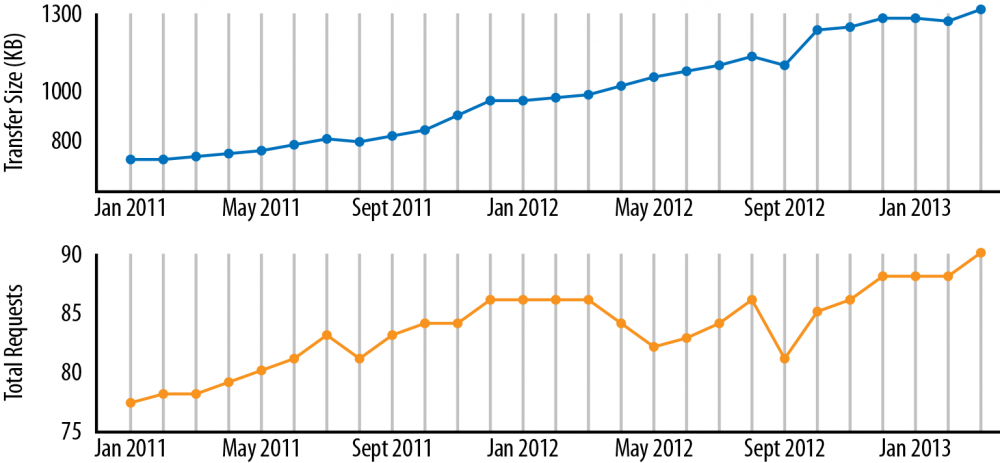

By the time you read this, the numbers will have changed and grown: there is a steady, reliable growth trend with no sign of stopping. On average, a modern web application weighs in at over 1 MB and consists of about 100 different resources delivered from more than 15 different hosts!

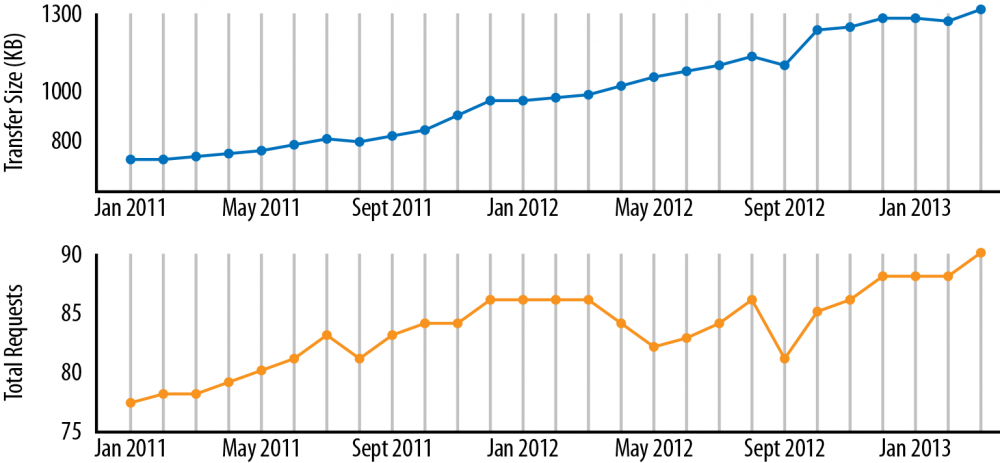

Average size and number of requests (HTTP Archive)

Unlike desktop applications, a web application does not require a separate installation process: type the URL, hit Enter, and you're done! However, a desktop application is installed only once, whereas a web application is effectively “installed” every time we visit its address: resources are downloaded, the DOM and CSSOM are built, and JavaScript is executed. No wonder web performance is such a hot topic of discussion!

Hundreds of resources, megabytes of data, dozens of hosts: all of this must come together in hundreds of milliseconds to deliver an instant web experience.

Speed and performance are always relative. Each application dictates its own requirements based on business criteria, context, user expectations, and the complexity of the task at hand. That said, if the application must react and respond to the user, we must plan and design around the constants of human perception. Although the pace of life keeps accelerating, our reaction times remain the same, regardless of the type of application (online or offline) or the device (desktop, laptop, or mobile).

Time and user perception

An application feels instant if it responds to a user action within hundreds of milliseconds. After a second or more, the user's attention starts to drift and the sense of direct engagement with the task is lost. If 10 seconds pass without any feedback, the task is most often simply abandoned.
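These thresholds can be turned into a simple classifier, for example to bucket response times collected from real users. This is a sketch: the text only speaks of “hundreds of milliseconds”, one second, and ten seconds, so the 300 ms cut-off for “instant”, the intermediate “noticeable” band, and the band names are assumptions.

```typescript
// Perception bands based on the rough thresholds in the text. The 300 ms
// upper bound for "instant" and the band names are assumptions.
type Perception = "instant" | "noticeable" | "flow broken" | "likely abandoned";

const INSTANT_MS = 300; // assumption standing in for "hundreds of milliseconds"
const FLOW_BROKEN_MS = 1_000; // "after a second or more"
const ABANDON_MS = 10_000; // "if 10 seconds pass"

function classifyResponse(ms: number): Perception {
  if (!Number.isFinite(ms) || ms < 0) {
    throw new RangeError(`invalid duration: ${ms}`);
  }
  if (ms < INSTANT_MS) return "instant";
  if (ms < FLOW_BROKEN_MS) return "noticeable";
  if (ms < ABANDON_MS) return "flow broken";
  return "likely abandoned";
}

// Bucket a few made-up response times, e.g. collected from real users.
for (const ms of [120, 450, 2_300, 12_000]) {
  console.log(`${ms} ms -> ${classifyResponse(ms)}`);
}
```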

Add the network latency of a DNS lookup, a TCP handshake, and a few round trips for the request itself, and much, if not all, of that time budget is spent on the network alone. No wonder so many users, especially on mobile and wireless networks, demand a faster browsing experience!

Speed is a feature of your application, and improving load performance is not about speed for its own sake. Research from Google, Microsoft, and Amazon shows that web performance translates directly into dollars and cents. For example, a 2,000 ms delay on Bing pages reduced Microsoft's revenue per user by 4.3%!

Similarly, a study of more than 160 organizations found that one extra second of load time led to a 7% loss in conversions, 11% fewer page views, and a 16% decrease in customer satisfaction.

A fast site yields more page views, higher engagement, and higher conversion rates. But don't just take our word for it: put it to the test and measure how the performance of your own site affects conversions.

No discussion of web performance would be complete without the resource waterfall chart. It deserves attention because it is one of the most insightful diagnostic tools at our disposal.

Modern browsers provide various tools for analyzing this chart, and there are also excellent online services, such as WebPageTest, that let you evaluate every aspect of it.

WebPageTest.org is a free project that provides an online service for testing the performance of web pages. Tests can be run from multiple locations: a virtual machine launches a browser that can be configured with a variety of connection profiles. The results are available immediately in a visual web interface, which makes WebPageTest an indispensable tool for web developers.

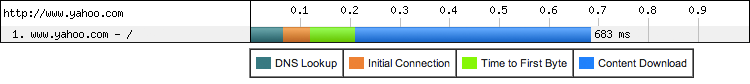

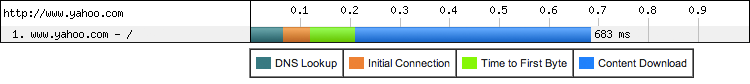

To begin with, it is important to understand that each HTTP request consists of several separate stages: the DNS lookup, the TCP connection handshake, TLS negotiation (optional), dispatch of the HTTP request, and then the content download.

HTTP request components (WebPageTest)
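A back-of-the-envelope model of these stages helps explain why so much time goes to the network. The sketch below is idealized and its per-stage costs are assumptions, not measurements: roughly one round trip (RTT) for the DNS lookup, one for the TCP handshake, two for a full TLS 1.2 handshake, and one for the request plus server processing time. It ignores bandwidth, packet loss, and TCP slow start; `timeToFirstByte` is a hypothetical name.

```typescript
// Idealized cost of the request stages, in round trips (RTT). The per-stage
// costs are assumptions: ~1 RTT for DNS, 1 RTT for the TCP handshake,
// 2 RTTs for a full TLS 1.2 handshake, 1 RTT plus server time for the request.
// Bandwidth, packet loss and TCP slow start are ignored.
interface RequestCost {
  dnsMs: number;
  tcpMs: number;
  tlsMs: number;
  requestMs: number; // request sent until the first response byte arrives
  totalMs: number;
}

function timeToFirstByte(
  rttMs: number,
  serverMs: number,
  opts: { https: boolean; reuseConnection: boolean },
): RequestCost {
  // A reused (keep-alive) connection has already paid for DNS, TCP and TLS.
  const dnsMs = opts.reuseConnection ? 0 : rttMs;
  const tcpMs = opts.reuseConnection ? 0 : rttMs;
  const tlsMs = opts.reuseConnection || !opts.https ? 0 : 2 * rttMs;
  const requestMs = rttMs + serverMs;
  return { dnsMs, tcpMs, tlsMs, requestMs, totalMs: dnsMs + tcpMs + tlsMs + requestMs };
}

// Hypothetical link: 100 ms RTT, 50 ms of server processing.
console.log("new connection:", timeToFirstByte(100, 50, { https: true, reuseConnection: false }));
console.log("reused connection:", timeToFirstByte(100, 50, { https: true, reuseConnection: true }));
```

Even in this model, a new HTTPS connection spends 400 of its 550 ms on setup, while a request over a reused keep-alive connection pays only the 150 ms request round trip. Repeated for every new connection, this is the kind of overhead visible in the connection view discussed below.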

A closer look shows that fetching the Yahoo! homepage document takes 683 ms, and, interestingly, more than 200 ms of that is spent waiting on the network, roughly 30% of the total! However, the document request is just the beginning: as we know, a modern web application also needs a variety of other resources.

Yahoo.com resource waterfall

To load the Yahoo! homepage, the browser requests 52 resources from 30 different hosts, 486 KB in total.

The resource waterfall reveals a number of important insights about the structure of the page and how the browser processes it. First, notice that new HTTP requests are dispatched while the browser is still receiving the HTML document: HTML parsing is performed incrementally, which lets the browser discover the resources it needs early and request them in parallel.

Hence, the order in which resources are requested and received is largely determined by the markup. The browser can reprioritize some requests, but the incremental discovery of each resource in the document is what creates the distinct “waterfall effect” in the chart.

Next, notice that the “Start Render” marker (green vertical line) occurs well before all resources have finished loading. This means rendering begins early, allowing the user to start interacting with the page while it is still loading. Similarly, the “Document Complete” marker also occurs before all resources have finished loading. In other words, the user can go about their business while additional content, such as ads or widgets, continues to load in the background.

The gap between the start of rendering, the “Document Complete” marker, and the actual end of all downloads is a good illustration of why context matters when discussing web performance metrics. Which of these three metrics is the right one to track? There is no single answer: each application loads its content differently. The Yahoo! team chose to optimize the page so that the user can start working without waiting for all downloads to finish; to do that, they needed a precise understanding of which resources are critical and what can be loaded earlier or later.

Keep in mind that browsers may use different resource loading strategies, which can affect performance. In addition to the test location, WebPageTest also lets you choose the browser and its version, so you can evaluate your application's performance across different browsers.

The resource waterfall is a powerful tool for examining the optimization of any page or application. The analysis above is often called front-end performance analysis, but the name is misleading: it suggests that all performance problems lie on the client. While JavaScript, CSS, and rendering are critical and resource-intensive steps, optimizing server response times and reducing network latency are just as important. After all, no amount of content optimization helps if the resources are blocked on the network!

To demonstrate this, let us switch to the connection view of the WebPageTest chart.

Yahoo.com connection view

Unlike the resource waterfall, where each row represents an individual HTTP request, the connection view shows the life of each TCP connection: all 30 of them, in this case, used to fetch the resources of the Yahoo! homepage. Does anything stand out? Notice that the download time, shown in blue, is only a small fraction of the total lifetime of each connection: there are 15 DNS lookups, 30 TCP handshakes, and a lot of network latency spent waiting for the first byte of each response.

Last but not least, the most interesting part: the bottom of the connection view, which shows bandwidth utilization, may come as a real surprise to many. With the exception of a few short bursts, the available connection is barely used: we are not limited by the bandwidth of our connection! Is this an anomaly or a browser bug? Unfortunately, it is neither. It turns out that bandwidth is not the limiting factor; the bottleneck is the latency between the client and the server.

Running a web application primarily involves three tasks: fetching resources, page layout and rendering, and JavaScript execution. Rendering and script execution follow a single-threaded, interleaved execution model; concurrent modifications of the resulting Document Object Model (DOM) are not possible. Therefore, optimizing how rendering and script execution work together at runtime, as we saw in “DOM, CSSOM, and JavaScript”, is crucial.

However, optimizing the JavaScript execution and rendering pipelines will not get you far if the browser is blocked on the network, waiting for resources to arrive. Fast and efficient delivery of network resources is the cornerstone of performance for every application running in the browser.

Internet connections are getting faster every day, so won't this problem solve itself? Yes, our applications are getting bigger, but if the global average speed is already 3.1 Mbps (“Bandwidth at the edge of the network”) and keeps growing, why bother? Unfortunately, as you might suspect, and as the Yahoo! example shows, if that were the case you would not be reading this article. Let's take a closer look.

Hold your horses! Of course, bandwidth matters! Higher data rates are always good, especially for bulk data transfers: video and audio streaming or any other transfer of large amounts of data. However, when it comes to everyday web browsing, which requires fetching hundreds of relatively small resources from dozens of different hosts, latency is the limiting factor.

Streaming an HD video from the Yahoo! homepage is bandwidth-limited. Loading and rendering the Yahoo! homepage is latency-limited.

Depending on the quality and encoding of the video you are trying to play, you may need anywhere from a few hundred Kbps to several Mbps of bandwidth, for example, 3+ Mbps for an HD 1080p stream. This data rate is now within reach for many users, as evidenced by the growing popularity of streaming services such as Netflix. Why, then, would a much smaller web application be such a challenge for a connection capable of streaming a high-definition movie?

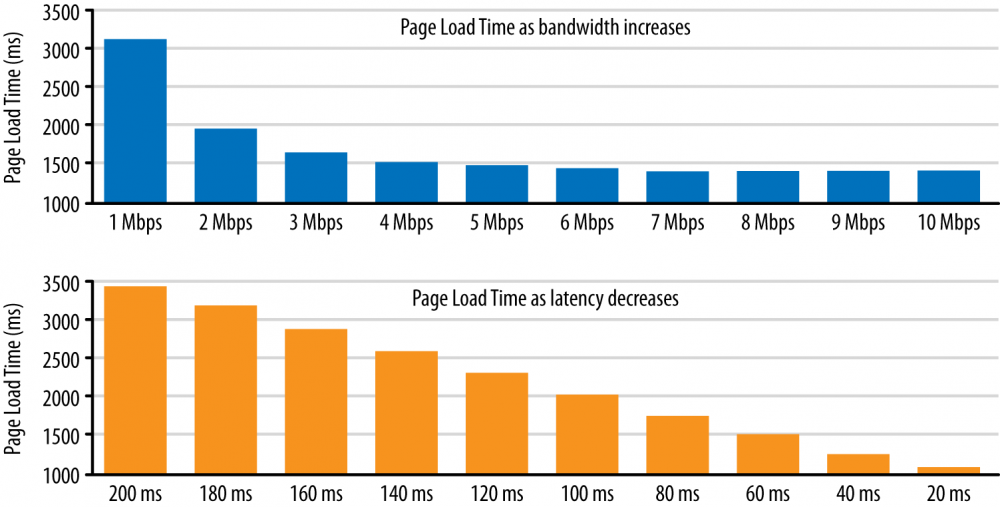

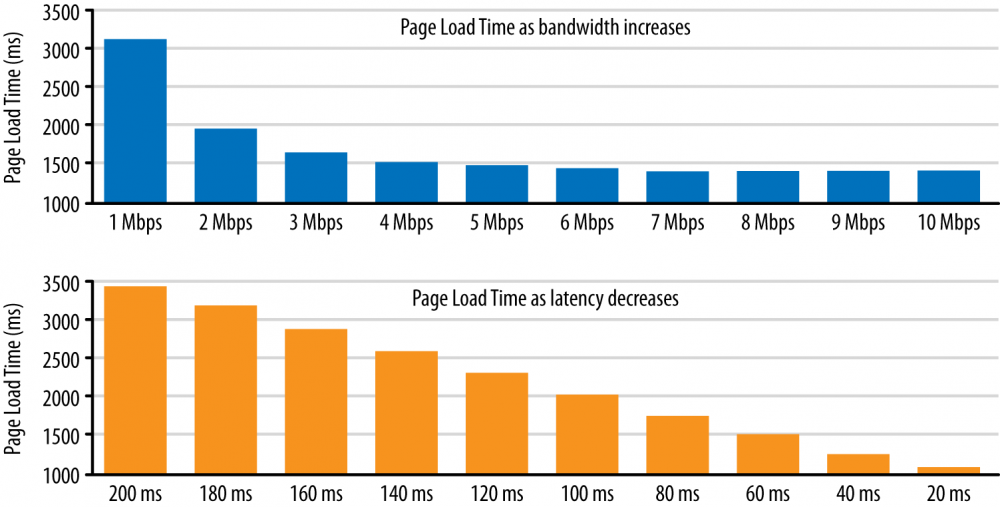

Let's look at the results of a quantitative analysis of page load time as a function of bandwidth and latency, performed by Mike Belshe, one of the creators of the SPDY protocol and one of the developers of HTTP 2.0:

In the first test, latency is held constant while the bandwidth increases from 1 to 10 Mbps. Note that upgrading from 1 to 2 Mbps gives a significant improvement in load time, but the gains shrink quickly after that: upgrading from 5 to 10 Mbps improves page load time by only about 5%.

The latency experiment, however, paints a completely different picture: every 20 ms reduction in latency yields a linear improvement in page load time!

To speed up the Internet as a whole, we should look for more ways to reduce latency. What if we could reduce cross-Atlantic round-trip times from 150 ms to 100 ms? This would have a larger effect on the speed of the Internet than increasing a user's bandwidth from 3.9 Mbps to 10 Mbps or even to 1 Gbps. Another approach to reducing page load times is to reduce the number of round trips required to load a page. Today, web pages require a certain amount of back and forth between the client and the server. The number of round trips is largely due to the handshakes needed to start communicating between client and server (for example, DNS, TCP, HTTP), and also to round trips induced by the communication protocols themselves (for example, TCP slow start). If we can improve the protocols to transfer this data with fewer round trips, we should also be able to improve page load times. This is one of the goals of SPDY.

Mike Belshe.

These results surprise many people, but they should not: they are a direct consequence of the performance characteristics of the underlying protocols: the TCP handshake, flow and congestion control, and head-of-line blocking due to packet loss. Most HTTP data flows consist of small, bursty data transfers, whereas TCP is optimized for long-lived connections and bulk data transfers. As a result, network latency is also the bottleneck for HTTP and for most web applications delivered over it.
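A deliberately crude model reproduces the shape of these results: page load time as a number of sequential round trips multiplied by the RTT, plus the time needed to push the page's bytes through the link. It ignores TCP slow start, parallel connections, and server time, and the 30 round trips and 500 KB page are made-up inputs, so only the trend is meaningful, not the absolute numbers. This is not Belshe's methodology, just an illustration of why the two curves differ.

```typescript
// Deliberately simplified load-time model: sequential round trips plus the
// time to transfer the page's bytes. Ignores TCP slow start, parallel
// connections and server time, so only the shape of the curves is meaningful.
interface LoadModel {
  roundTrips: number; // sequential network round trips on the critical path
  rttMs: number; // round-trip time
  bytes: number; // total bytes transferred
  bandwidthMbps: number; // link capacity in megabits per second
}

function estimateLoadMs(m: LoadModel): number {
  // 1 Mbps = 1,000 bits per millisecond.
  const transferMs = (m.bytes * 8) / (m.bandwidthMbps * 1_000);
  return m.roundTrips * m.rttMs + transferMs;
}

// Hypothetical page: 30 round trips, 500 KB.
const page = { roundTrips: 30, bytes: 500_000 };

for (const bandwidthMbps of [1, 2, 5, 10]) {
  console.log(`${bandwidthMbps} Mbps, 100 ms RTT:`, estimateLoadMs({ ...page, rttMs: 100, bandwidthMbps }));
}
for (const rttMs of [150, 100]) {
  console.log(`5 Mbps, ${rttMs} ms RTT:`, estimateLoadMs({ ...page, rttMs, bandwidthMbps: 5 }));
}
```

With these inputs, upgrading from 1 to 2 Mbps saves 2,000 ms, but going from 5 to 10 Mbps saves only 400 ms, because the transfer term shrinks as 1/bandwidth. Cutting the RTT from 150 ms to 100 ms at 5 Mbps saves 1,500 ms, because the latency term grows linearly with every round trip on the critical path.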

If latency is the limiting factor for most wired connections, imagine the role it plays for wireless clients: wireless latencies are usually higher, which makes networking optimizations a critical priority for web performance.

If we can measure it, we can improve it. The question is whether we have chosen the right criteria and whether the measurement process is thorough. As we noted earlier, measuring the performance of a modern web application is not trivial: there is no single metric that holds for every application, which means we must carefully define custom metrics in each case. Then, once the criteria are chosen, we need to gather performance data, which is done through a combination of synthetic and real-user testing.

Broadly speaking, synthetic testing covers any process with a controlled measurement environment: a local build process run through a performance test suite, load testing against staging infrastructure, or a set of geo-distributed servers that periodically perform scripted actions and log the results. Each of these tests may exercise a different part of the infrastructure (for example, application server throughput, database performance, DNS timing, and so on) and serves as a stable baseline for detecting performance regressions in a particular component of the system.

Well-tuned synthetic testing provides a controlled and reproducible test environment, which makes it a great fit for catching and fixing performance regressions before they reach the end user. Tip: identify your key performance metrics and set a “budget” for each one as part of your synthetic testing. If a budget is exceeded, raise the alarm!
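The budget tip translates naturally into a check that runs after each synthetic test. A minimal sketch follows; the metric names, the limits, and the decision to treat a missing metric as a violation are illustrative assumptions, and in a real pipeline a non-empty result would typically fail the build or notify someone.

```typescript
// Sketch of a performance-budget check for synthetic test results.
// Metric names and limits are hypothetical examples.
interface MetricBudget {
  metric: string;
  maxMs: number;
}

interface BudgetViolation {
  metric: string;
  measuredMs: number; // NaN when the metric was not reported at all
  maxMs: number;
}

function checkBudgets(
  measurements: Partial<Record<string, number>>,
  budgets: MetricBudget[],
): BudgetViolation[] {
  const violations: BudgetViolation[] = [];
  for (const { metric, maxMs } of budgets) {
    const measuredMs = measurements[metric];
    if (measuredMs === undefined) {
      // A missing metric counts as a violation so it cannot slip through silently.
      violations.push({ metric, measuredMs: Number.NaN, maxMs });
    } else if (measuredMs > maxMs) {
      violations.push({ metric, measuredMs, maxMs });
    }
  }
  return violations;
}

// Example run with made-up numbers from a synthetic test:
const overBudget = checkBudgets(
  { startRenderMs: 900, documentCompleteMs: 2_600 },
  [
    { metric: "startRenderMs", maxMs: 1_000 },
    { metric: "documentCompleteMs", maxMs: 2_000 },
  ],
);
if (overBudget.length > 0) {
  // "Raise the alarm": in CI this would typically fail the build.
  console.error("Performance budget exceeded:", overBudget);
}
```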

However, synthetic testing alone is not enough to identify all performance bottlenecks. Specifically, the measurements it produces do not reflect the full variety of real-world factors that determine the actual user experience with the application.

Some contributing factors to this include:

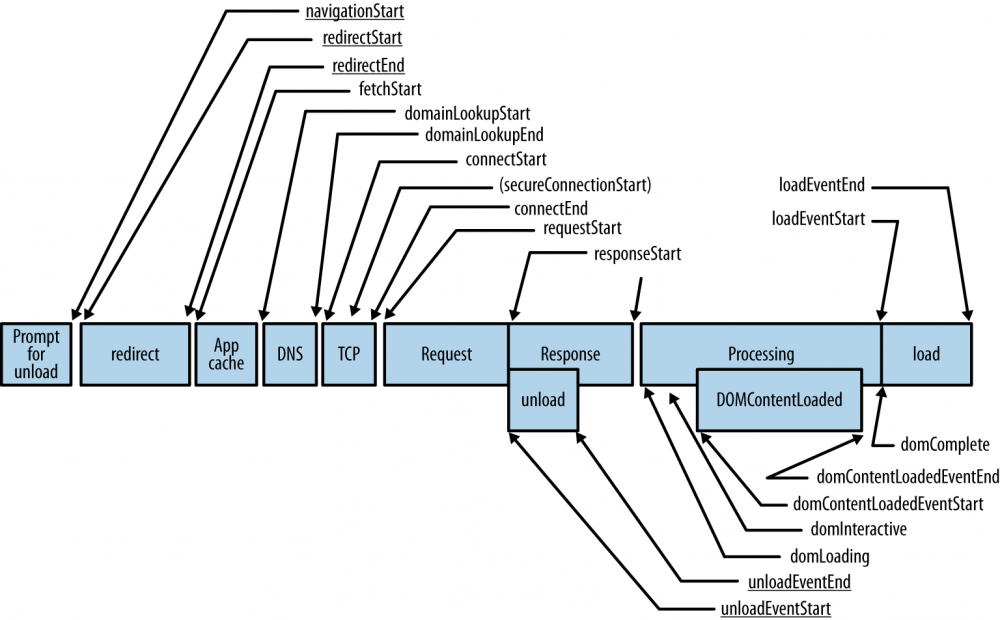

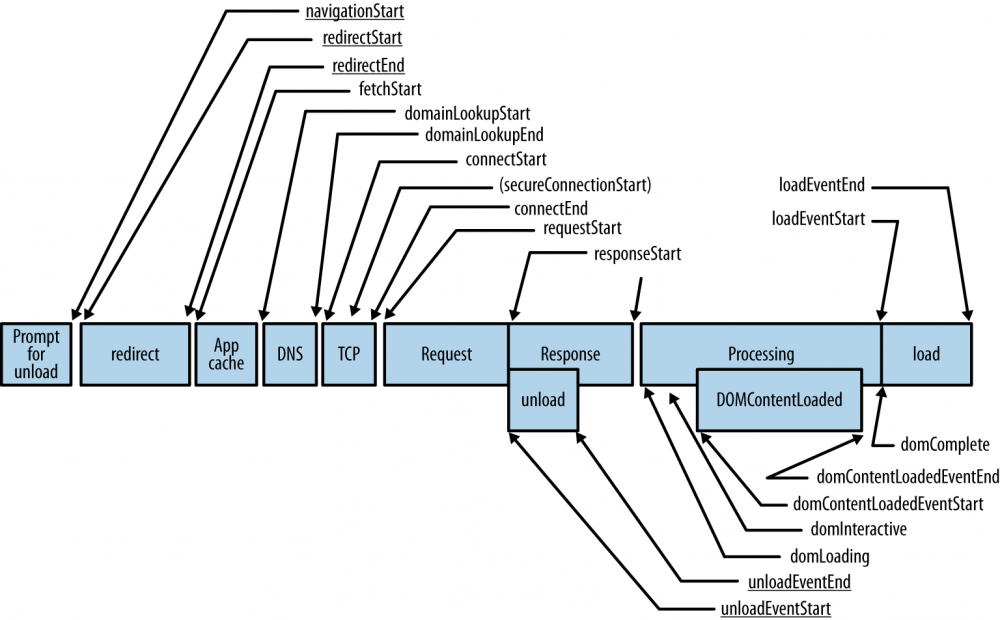

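To capture the real user experience, we need to gather performance data from real users, a practice known as real-user measurement (RUM). To make this data collection simple, the W3C Web Performance Working Group introduced the Navigation Timing API, which is supported by modern browsers.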

Navigation Timing

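The real benefit of Navigation Timing is that it exposes a lot of previously inaccessible data, such as DNS lookup and TCP connection times, with high precision through the standardized performance.timing object.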

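Hence, data collection is very simple: load the page, read the timing object in the user's browser, and beacon it back to your analytics servers. By capturing this data, we can observe the real-world performance of our applications as seen by real users, on real hardware, and across a wide variety of networks.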
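Once the timing object has been collected, turning its timestamps into phase durations is simple subtraction. This sketch declares only the subset of the legacy `PerformanceTiming` fields (exposed as `performance.timing`) that it uses, so in a browser `performance.timing` can be passed in directly; the sample timestamps are made up.

```typescript
// Subset of the legacy PerformanceTiming fields (performance.timing) used here.
interface NavTimestamps {
  navigationStart: number;
  domainLookupStart: number;
  domainLookupEnd: number;
  connectStart: number;
  connectEnd: number;
  requestStart: number;
  responseStart: number;
  responseEnd: number;
  domContentLoadedEventStart: number;
  loadEventStart: number;
}

function navigationPhases(t: NavTimestamps) {
  return {
    dnsMs: t.domainLookupEnd - t.domainLookupStart,
    tcpMs: t.connectEnd - t.connectStart, // includes the TLS handshake for HTTPS
    ttfbMs: t.responseStart - t.requestStart, // waiting for the first byte
    downloadMs: t.responseEnd - t.responseStart,
    domContentLoadedMs: t.domContentLoadedEventStart - t.navigationStart,
    loadMs: t.loadEventStart - t.navigationStart,
  };
}

// Made-up timestamps (milliseconds since the epoch in a real browser).
const sample: NavTimestamps = {
  navigationStart: 1000,
  domainLookupStart: 1010,
  domainLookupEnd: 1040,
  connectStart: 1040,
  connectEnd: 1120,
  requestStart: 1120,
  responseStart: 1300,
  responseEnd: 1350,
  domContentLoadedEventStart: 1800,
  loadEventStart: 2500,
};
console.log(navigationPhases(sample));
```

In a page, after the `load` event has fired, the result could be sent back with `navigator.sendBeacon("/rum", JSON.stringify(navigationPhases(performance.timing)))`, where the `/rum` endpoint is hypothetical. `performance.timing` is deprecated in newer specifications; Navigation Timing Level 2 exposes similar fields on the entry returned by `performance.getEntriesByType("navigation")`, with timestamps relative to the start of navigation.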

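When analyzing performance data, always look at the underlying distribution: throw away the averages and focus on histograms, medians, and quantiles. Averages produce meaningless metrics for skewed and multimodal distributions.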

igvita.com


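Finally, in addition to Navigation Timing, the W3C Performance Group has standardized two more APIs: User Timing and Resource Timing. Whereas Navigation Timing provides performance timers only for the root document, Resource Timing provides similar data for every resource on the page, which lets us gather the complete performance profile of the page. Similarly, User Timing provides a simple JavaScript API for marking and measuring application-specific metrics with the same high-resolution timers: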

1. Record a timestamped mark with an associated name.

2. Execute the application code.

3. Iterate over and log the recorded measurements.

4. Log the Navigation Timing object for the current page.
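The four steps can be sketched with the standard User Timing calls `performance.mark`, `performance.measure`, and `performance.getEntriesByType`. `applicationCode1` is a hypothetical stand-in for real work, and for step 4 this sketch logs the Navigation Timing Level 2 entry rather than the legacy `performance.timing` object. The same code also runs in recent Node.js versions, which provide a global `performance` object but have no navigation entries to report.

```typescript
// Hypothetical stand-in for real application work.
function applicationCode1(): number {
  let sum = 0;
  for (let i = 0; i < 100_000; i++) sum += i;
  return sum;
}

function init(): void {
  performance.mark("startTask1"); // 1. timestamped mark with a name
  applicationCode1(); // 2. the application code being measured
  performance.mark("endTask1");
  // Record the interval between the two marks as a named measure.
  performance.measure("task1", "startTask1", "endTask1");
  logPerformance();
}

function logPerformance(): void {
  // 3. Iterate over and log the recorded User Timing entries.
  for (const entry of performance.getEntriesByType("mark")) {
    console.log(`mark ${entry.name} at ${entry.startTime.toFixed(2)} ms`);
  }
  for (const entry of performance.getEntriesByType("measure")) {
    console.log(`measure ${entry.name}: ${entry.duration.toFixed(2)} ms`);
  }
  // 4. Log the Navigation Timing entry for the current page (Level 2 API).
  console.log(performance.getEntriesByType("navigation"));
}

init();
```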

Together, the Navigation, Resource, and User Timing APIs provide all the tools needed to measure real-user performance, so there is no longer any excuse not to do it. We optimize what we measure. Synthetic testing and RUM are complementary approaches: together they help identify performance regressions and show how users actually experience your application.

Custom, application-specific metrics are the key to a sound performance optimization strategy. There is no universal way to measure or define the quality of the user experience. Instead, each application must define and track its own specific milestones. This process requires collaboration among all the stakeholders: owners, designers, and developers.

It would be remiss not to mention that a modern browser is much more than a simple socket manager. Performance is one of the main competitive features of any browser, so it is no surprise that browsers keep getting smarter: speculative DNS pre-resolution of likely hostnames, pre-connecting to likely hosts, prefetching and prioritizing resources on the page, and much more.

The exact set of optimizations differs from browser to browser, but they can all be divided into two broad classes:

- Document-aware optimization: the networking stack is integrated with HTML, CSS, and JavaScript parsing to help identify and prioritize critical network resources, dispatch them early, and get the page to an interactive state as quickly as possible. This is often done through resource priority assignments, lookahead parsing, and similar techniques.

- Speculative optimization: the browser may learn user navigation patterns over time and perform speculative optimizations in an attempt to predict the user's likely actions, for example by pre-resolving DNS names and pre-connecting to likely hosts.

The good news is that all of these optimizations are performed automatically on our behalf and can often save hundreds of milliseconds. Still, it is important to understand how and why they work, so that we can help the browser load our web applications even faster.

There are four techniques used by most browsers:

- Resource prefetching and prioritization: the HTML, CSS, and JavaScript parsers may pass additional information to the network stack to indicate the relative priority of each resource: blocking resources needed for the first render are requested first, while low-priority requests may be held back in a queue.

- DNS pre-resolution: likely hostnames are resolved in advance, which avoids DNS latency on a future HTTP request. The browser can learn likely hostnames from navigation history, from user actions such as hovering over a link, and from other signals.

- TCP pre-connect: once a hostname has been resolved, the browser may speculatively open a TCP connection in anticipation of an HTTP request. If its guess is right, it saves another full round trip (the TCP handshake) of network latency.

- Page pre-rendering: some browsers can render a likely next page in a hidden tab, which is revealed instantly when the user initiates the navigation.
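Document-aware prioritization can be pictured as a priority queue over the resources the parser has discovered. The toy scheduler below orders requests by resource type and then by discovery order. The priority table is an illustrative assumption, since each browser uses its own, more detailed heuristics, and the file names are made up.

```typescript
// Lower number = requested earlier. The table is an assumption.
type ResourceKind = "html" | "css" | "script" | "font" | "image" | "async-script";

const PRIORITY: Record<ResourceKind, number> = {
  html: 0,
  css: 0, // blocks rendering and script execution
  script: 1, // parser-blocking script
  font: 2,
  image: 3,
  "async-script": 3, // does not block the parser
};

interface PendingFetch {
  url: string;
  kind: ResourceKind;
  discoveredAt: number; // order in which the parser found the resource
}

// Highest priority first; ties keep discovery order.
function dispatchOrder(pending: PendingFetch[]): string[] {
  return [...pending]
    .sort((a, b) => PRIORITY[a.kind] - PRIORITY[b.kind] || a.discoveredAt - b.discoveredAt)
    .map((f) => f.url);
}

console.log(
  dispatchOrder([
    { url: "hero.jpg", kind: "image", discoveredAt: 0 },
    { url: "app.js", kind: "script", discoveredAt: 1 },
    { url: "style.css", kind: "css", discoveredAt: 2 },
    { url: "font.woff2", kind: "font", discoveredAt: 3 },
  ]),
);
```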

From the outside, the network stack of a modern browser looks like a simple resource-fetching mechanism, but on the inside it is an elaborate and fascinating piece of engineering, and studying it will help you optimize web performance.

How can you help the browser? First, you need to pay attention to the structure of each page:

In addition, we can embed hints in the document that tell the browser about additional optimizations it could perform on our behalf:

1. Pre-resolve the specified hostname.

2. Prefetch a critical resource referenced later on the page.

3. Prefetch a resource for the current or a future navigation.

4. Prerender the specified page in anticipation of the user's next action.

Each of these hints triggers a speculative optimization. The browser does not guarantee that a hint will be acted upon, but it can use the hint to refine its loading strategy. Unfortunately, not all browsers support all of these hints.
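In markup, these hints are `<link>` elements in the document head. The hypothetical helper below renders them from data: `dns-prefetch` for hint 1, `preload` for hint 2, `prefetch` for hint 3, and `prerender` for hint 4; the type also allows `preconnect`, the markup counterpart of TCP pre-connect. Support differs between browsers: `preload` needs an `as` value, and `prerender` is now considered deprecated, so check before relying on it. All URLs are placeholders.

```typescript
// Hypothetical helper that renders resource-hint <link> tags for a page head.
type HintRel = "dns-prefetch" | "preconnect" | "preload" | "prefetch" | "prerender";

interface Hint {
  rel: HintRel;
  href: string;
  as?: string; // destination type, required here for rel="preload" (e.g. "script")
}

// Escape characters that are unsafe inside a double-quoted attribute value.
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

function renderHints(hints: Hint[]): string {
  return hints
    .map((h) => {
      if (h.rel === "preload" && !h.as) {
        throw new Error(`preload hint for ${h.href} needs an "as" value`);
      }
      const asAttr = h.as ? ` as="${escapeAttr(h.as)}"` : "";
      return `<link rel="${h.rel}" href="${escapeAttr(h.href)}"${asAttr}>`;
    })
    .join("\n");
}

// The four hints from the list above, with placeholder URLs:
console.log(
  renderHints([
    { rel: "dns-prefetch", href: "//hostname-to-resolve.example" },
    { rel: "preload", href: "/javascript/myapp.js", as: "script" },
    { rel: "prefetch", href: "/images/big.jpeg" },
    { rel: "prerender", href: "//example.org/next_page.html" },
  ]),
);
```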

For most users and even many web developers, DNS, TCP, and SSL delays are invisible: they are negotiated at network layers that few of us ever look at. Yet each of these steps is critical to the overall user experience, since every extra network round trip can add tens or hundreds of milliseconds of latency. By helping the browser anticipate these round trips, we can remove bottlenecks and deliver much faster and better web applications.

The HTML document is parsed incrementally. This means that the server can and should flush the document markup as early and as often as possible, so that the client can discover and start fetching critical resources sooner.

Google Search is one of the best examples of this technique. The header of the page is flushed right away, and while the browser parses it, the query is sent to the search index; the rest of the document, which contains the search results, is delivered as soon as the results are ready. The dynamic parts of the header, such as the name of the logged-in user, are filled in with JavaScript.
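Early flushing can be sketched in a few lines of TypeScript. In this hypothetical example, modeled loosely on the Google Search description, the static head (with its stylesheet and a deferred script) is written immediately, and the results follow once a simulated search backend responds. `PageWriter` only requires `write` and `end`, which Node's `http.ServerResponse` provides, so a real response object can be passed in; the backend, markup, and 50 ms delay are made up.

```typescript
// Anything with write/end works here, including Node's http.ServerResponse.
interface PageWriter {
  write(chunk: string): unknown;
  end(chunk?: string): unknown;
}

// Static part of the page: it can be sent before the results are known.
const HEAD = [
  "<!doctype html>",
  "<html><head>",
  '<link rel="stylesheet" href="/style.css">',
  '<script src="/app.js" defer></script>',
  "</head><body><header>Search</header>",
].join("\n");

// Hypothetical slow backend standing in for the search index.
function querySearchIndex(q: string): Promise<string[]> {
  return new Promise<string[]>((resolve) => {
    setTimeout(() => resolve([`result for ${q}`]), 50);
  });
}

function escapeHtml(s: string): string {
  return s.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

async function streamSearchPage(out: PageWriter, q: string): Promise<void> {
  // 1. Flush the static head immediately, so the browser can start fetching
  //    /style.css and /app.js while the query is still being processed.
  out.write(HEAD);
  // 2. Wait for the slow, dynamic part of the page.
  const results = await querySearchIndex(q);
  // 3. Send the rest of the document and finish the response.
  const items = results.map((r) => `<li>${escapeHtml(r)}</li>`).join("");
  out.end(`<ul>${items}</ul></body></html>`);
}
```

With Node, it could be used as `http.createServer((req, res) => { res.writeHead(200, { "Content-Type": "text/html; charset=utf-8" }); void streamSearchPage(res, "latency"); })`. Whether the first chunk actually reaches the browser early also depends on intermediaries, such as proxies or compression middleware, that may buffer the response.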


To sum up, optimizing web performance means taking into account:

- the impact of latency and bandwidth on web performance;

- the constraints that the TCP protocol imposes on HTTP;

- the features and shortcomings of the HTTP protocol itself;

- web application development trends and performance requirements;

- browser constraints and optimization opportunities.

Optimization of connections between different layers, levels of the system is little different from the process of solving a system of equations. Each equation depends on the others and affects the number of possible solutions for the system as a whole. It should be noted that there are no strictly fixed recommendations or ready-made solutions. Individual components continue to evolve: browsers are becoming faster, user connection profiles are changing, web applications continue to change in content, and the tasks that are being set are becoming more complex.

Therefore, before we delve into the review and analysis of advanced modern experience, it is important to take a step back and determine what the problem really is: what a modern web application is, what tools we have, how we measure web performance, and what parts of the system help and hinder our progress.

')

Hypertext, web pages and web applications

As a result of the evolution of the Internet over the past few decades, we have obtained three different approaches to presenting information online:

- hypertext document.

- Web page with various forms of media content.

- interactive web application.

It should be recognized that at times it is difficult for the end user to see the boundary between the last two approaches, but in terms of performance, each of them requires a different methodology, assessment and definition of performance.

Hypertext Documents

Hypertext is at the heart of the World Wide Web as plain text with some basic formatting features and support for hyperlinks.

Now it is unlikely to seem interesting, but hypertext was extremely useful for the world wide web, was a prerequisite to all that we currently use.

Webpage

The HTML working group and the developers of the first browsers tried to expand the possibilities of hypertext and introduced support for the media: image and sound. The result was the era of web pages, which is distinguished by the presence of developed forms of media content, visually flawless, but with a small amount of interactivity. Web pages in this do not differ much from the usual printed newspaper page.

Web application

The addition of JavaScript, the late introduction of support for revolutionary Dynamic HTML (DHTML) and AJAX, has shaken the industry and turned simple web pages into interactive web applications, which made it possible to respond to user requests directly in the browser. All this paved the way for the first implementations of full browser applications. For example, Outlook Web Access (this started XMLHTTP support in IE 5). We can say that this was the beginning of a new era of complex links between scripts, cascading style sheets and markup.

A session in HTTP 0.9 can be submitted from a request for one document, which is sufficient for the correct display of hypertext. One document, one TCP connection, then a connection reset. Based on the above, it is not difficult to imagine an optimization process, it was very simple. All that was required was to improve the performance of a single HTTP request for a short TCP connection.

The appearance of web pages has changed this formula. From the request / receipt of one document, we went to the request of the document and its dependent resources. The concept of metadata (headers) has been introduced in HTTP 1.0, the concept is expanded in HTTP 1.1 with the indication of various directives that focus on performance. For example, strictly defined caching, KeepAlive, and more. Thus, we get several simultaneous TCP connections and the key performance indicator of the document load time is no longer. It is customary to evaluate page load time, abbreviated PLT.

Finally, web pages gradually evolved into web applications, in which media complements the primary content rather than being the content itself. Taken as a whole, the system fits the following scheme: the HTML markup defines the skeleton, the basic structure of the application; CSS lays out the elements within that structure; and JavaScript makes the application interactive, responding to user input and potentially modifying both the markup and the styles to do so.

As a result, page load time, once the decisive metric for analyzing web performance, is no longer sufficient on its own. We are no longer just building pages; we are building dynamic, interactive web applications.

Instead of PLT alone, we now want answers to questions like these:

- What are the key milestones in loading the application?

- When is the first user interaction required?

- Which interactions should the user engage in?

- What are the engagement and conversion rates for each user?

The success of a performance and optimization strategy depends directly on the ability to define, and iterate on, application-specific benchmarks and criteria. Nothing beats application-specific knowledge and measurements, especially when they are tied to the goals and metrics of your business.

DOM, CSSOM, and JavaScript

What exactly do we mean by “complex dependencies between scripts, styles, and markup” in a modern web application? To answer this, we need to look at the browser architecture and at how markup, styles, and scripts come together to paint pixels on the screen.

Browser processing pipeline: HTML, CSS, and JavaScript

Parsing the HTML document is what constructs the Document Object Model (DOM). In parallel, there is an often forgotten cousin, the CSS Object Model (CSSOM), which is constructed from the specified stylesheet resources. The two are then combined into the so-called render tree, at which point the browser has enough information to lay out and paint something on the screen. So far, so good.

Unfortunately, this is where we have to introduce our best friend and worst enemy: JavaScript. Script execution can issue a synchronous document.write, which blocks DOM parsing and construction. Similarly, scripts can query the computed style of any element, which means that JavaScript can also block on CSS. As a result, DOM and CSSOM construction are frequently intertwined: DOM construction cannot proceed until JavaScript has executed, and JavaScript execution cannot proceed until the CSSOM is available.

The performance of the application, especially the first load and the “time to render”, depends directly on how this dependency graph between markup, styles, and scripts is resolved.

Remember the popular rule of thumb “styles at the top, scripts at the bottom”?

Now you know why! Both rendering and script execution are blocked on stylesheets, so get the CSS to the user as quickly as you can.
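The effect of ordering can be sketched with a toy model of a document head. It assumes a parser with no preload scanner (real browsers look ahead) and that a resource's fetch starts only when the parser reaches its tag. The function name, resource shape, and timings are hypothetical.

```javascript
// Toy model of a document head processed in order. Times in ms.
// Each entry: { type: "css", fetchMs } or { type: "js", fetchMs, execMs }.
function simulateHead(resources) {
  let parserAt = 0; // time at which the parser reaches the next tag
  let cssomReadyAt = 0; // time at which all stylesheets seen so far have arrived
  for (const r of resources) {
    const arrivesAt = parserAt + r.fetchMs; // fetch starts when the tag is discovered
    if (r.type === "css") {
      // Stylesheets do not block the parser here, only rendering and scripts.
      cssomReadyAt = Math.max(cssomReadyAt, arrivesAt);
    } else {
      // A synchronous script waits for its download and for the pending CSSOM,
      // and the parser cannot continue until the script has executed.
      const runsAt = Math.max(arrivesAt, cssomReadyAt);
      parserAt = runsAt + r.execMs;
    }
  }
  // The first render needs both the parsed head and the CSSOM.
  return { cssomReadyAt, parserDoneAt: parserAt, firstRenderAt: Math.max(cssomReadyAt, parserAt) };
}

const css = { type: "css", fetchMs: 100 };
const js = { type: "js", fetchMs: 80, execMs: 20 };
console.log(simulateHead([css, js]).firstRenderAt); // 120
console.log(simulateHead([js, css]).firstRenderAt); // 200
```

With the script first, the parser blocks on it, so the stylesheet is discovered late and the render is pushed back.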

Anatomy of a modern web application

What does a modern web application look like? The HTTP Archive can help answer this question. The project tracks how the web is built by periodically crawling the most popular sites (300,000+ from the Alexa Top 1M) and recording and analyzing the number of resources used, content types, headers, and other metadata.

On average, a web application requires the following:

- Total: 90 requests from 15 hosts, 1311 KB

- HTML: 10 requests, 52 KB

- Images: 55 requests, 812 KB

- JavaScript: 15 requests, 216 KB

- CSS: 5 requests, 36 KB

- Other: 5 requests, 195 KB
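As a quick sanity check, the per-type figures listed above add up to the totals. The short sketch below also shows which type dominates the byte count. The object layout and helper names are our own.

```javascript
// Per-type averages as listed above.
const averagePage = {
  HTML: { requests: 10, kb: 52 },
  Images: { requests: 55, kb: 812 },
  JavaScript: { requests: 15, kb: 216 },
  CSS: { requests: 5, kb: 36 },
  Other: { requests: 5, kb: 195 },
};

function totals(page) {
  let requests = 0;
  let kb = 0;
  for (const { requests: r, kb: k } of Object.values(page)) {
    requests += r;
    kb += k;
  }
  return { requests, kb };
}

function shareOfBytes(page, type) {
  return page[type].kb / totals(page).kb;
}

console.log(totals(averagePage)); // { requests: 90, kb: 1311 }
console.log(Math.round(shareOfBytes(averagePage, "Images") * 100) + "%"); // 62%
```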

By the time you read this, these numbers will have changed and grown: the trend has been one of steady growth with no signs of stopping. On average, a modern web application weighs in at over 1 MB and is made up of roughly 100 resources fetched from more than 15 different hosts!

Average size and number of requests (HTTP Archive)

Unlike desktop applications, web applications do not require a separate installation process: type the URL, hit Enter, and you are up and running! However, desktop applications pay the installation cost only once, whereas a web application is “installed” on every visit: resources are downloaded, the DOM and CSSOM are constructed, and JavaScript is executed. No wonder web performance is such a hot topic of discussion!

Hundreds of resources, megabytes of data, dozens of hosts: all of it must come together in hundreds of milliseconds to deliver an instant web experience.

Speed, performance, and human perception

Speed and performance are relative terms. Each application dictates its own requirements based on business criteria, context, user expectations, and the complexity of the task at hand. If the application must react and respond to the user, then we must plan its reaction times around the specifics of human perception. Even though the pace of life is accelerating, our reaction times remain constant, regardless of the type of application (online or offline) or device (desktop, laptop, or mobile).

Time and user perception:

- 0-100 ms: instant

- 100-300 ms: small perceptible delay

- 300-1000 ms: noticeable delay

- 1000+ ms: the user likely switches context to something else

- 10,000+ ms: the task is abandoned
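The bands above can be encoded as a small lookup, for example to label measured response times in a report. The function name is ours. Treating each band as half-open (so exactly 100 ms counts as a perceptible delay) is an assumption; the thresholds are rules of thumb, not hard limits.

```javascript
// Map a response delay (ms) onto the perception bands listed above.
function perceivedDelay(ms) {
  if (ms < 100) return "instant";
  if (ms < 300) return "small perceptible delay";
  if (ms < 1000) return "noticeable delay";
  if (ms < 10000) return "likely context switch";
  return "task abandoned";
}

console.log(perceivedDelay(80)); // instant
console.log(perceivedDelay(1500)); // likely context switch
```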

To make an application feel instant, a perceptible response to user input must be provided within hundreds of milliseconds. After a second or more, the user's attention drifts and engagement with the task is broken. After 10 seconds without feedback, the task is frequently abandoned.

Now add up the network latency of a DNS lookup, a TCP handshake, and another few round trips for a typical page request, and much, if not all, of this 100-1000 ms budget can be spent on networking overhead alone. No wonder so many users, especially on mobile and wireless networks, are demanding faster web browsing!
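Here is a rough estimate of that overhead. It assumes a brand-new HTTPS connection costing one round trip each for DNS, TCP, and the HTTP request, plus two for a full TLS 1.2 handshake. It also assumes that the DNS resolver is as far away as the server. Real costs vary with DNS caching, TLS resumption, and newer protocol versions. The constant and function names are hypothetical.

```javascript
// Assumed round trips before the first response byte on a new HTTPS connection.
const ROUND_TRIPS = { dns: 1, tcp: 1, tls: 2, http: 1 };

function networkOverheadMs(rttMs, useTls = true) {
  const trips =
    ROUND_TRIPS.dns + ROUND_TRIPS.tcp + (useTls ? ROUND_TRIPS.tls : 0) + ROUND_TRIPS.http;
  return trips * rttMs;
}

// At a 50 ms round trip, the 100 ms "instant" budget is gone before any content arrives.
console.log(networkOverheadMs(50)); // 250
console.log(networkOverheadMs(50, false)); // 150
```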

Web performance in dollars and cents

Speed is a feature, and it is not simply speed for speed's sake. Studies from Google, Microsoft, and Amazon all show that web performance translates directly into dollars and cents. For example, a 2000 ms delay on Bing search pages reduced revenue per user by 4.3%!

Similarly, a study of more than 160 organizations found that one extra second of page load time led to a 7% loss in conversions, 11% fewer page views, and a 16% decrease in customer satisfaction.

Faster sites yield more page views, higher engagement, and higher conversion rates. But don't just take our word for it: put it to the test and measure how the performance of your own site affects conversion.

Analyzing the resource waterfall

No discussion of web performance is complete without the resource waterfall. In fact, the waterfall is probably the single most insightful network performance and diagnostic tool at our disposal.

Modern browsers provide built-in tools for inspecting the waterfall, and there are also excellent online services that cover every aspect of it, such as WebPageTest.

WebPageTest.org is a free project and web service for testing the performance of web pages from multiple locations around the world. The browser runs inside a virtual machine and can be configured with a variety of connection profiles. Test results are then available through a visual web interface, which makes WebPageTest an indispensable tool for every web developer.

To start, it is important to recognize that every HTTP request consists of several separate stages: DNS resolution, the TCP handshake, the TLS negotiation (if required), dispatch of the HTTP request, and finally the content download.
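One useful way to read these stages is to ask how much of a request's time passes before any content flows. The record below is a hypothetical per-request breakdown in milliseconds. Its field names are our own and are not WebPageTest's export format. "ttfb" is assumed to cover sending the request and waiting for the first byte.

```javascript
// Stages in the order listed above; missing stages (e.g. no TLS) count as 0.
const STAGES = ["dns", "connect", "ssl", "ttfb", "download"];

function summarizeRequest(timings) {
  const total = STAGES.reduce((sum, stage) => sum + (timings[stage] || 0), 0);
  const waiting = total - (timings.download || 0); // everything before content flows
  return { total, waiting, waitingShare: total === 0 ? 0 : waiting / total };
}

const example = { dns: 20, connect: 30, ttfb: 100, download: 50 }; // plain HTTP, no "ssl"
console.log(summarizeRequest(example)); // { total: 200, waiting: 150, waitingShare: 0.75 }
```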

HTTP request components (WebPageTest)

Looking closely, the HTML fetch for the Yahoo! homepage took roughly 683 ms, and over 200 ms of that was spent waiting on the network, which amounts to about 30% of the total time for the request! However, the document request is only the beginning: as we know, a modern web application needs many other resources as well.

Yahoo.com resource waterfall

To load the Yahoo! homepage, the browser requests 52 resources from 30 different hosts, 486 KB in total.

The resource waterfall reveals a number of important insights about the structure of the page and how the browser processes it. First, notice that new HTTP requests are dispatched while the content of the HTML document is still being received: HTML parsing is incremental, which allows the browser to discover the required resources early and request them in parallel.

Hence, when each resource is fetched is determined in large part by the structure of the markup. The browser may reprioritize some requests, but it is the incremental discovery of each resource in the document that creates the distinctive “waterfall effect” on the chart.

Next, notice that the “Start Render” mark (green vertical line) occurs well before all resources have finished loading: rendering begins early, which lets the user start interacting with the page while it is still loading. Likewise, the “Document Complete” mark also appears before the last resources have arrived. In other words, the user can get on with the task while additional content, such as ads and widgets, continues to load in the background.

The gap between the start of rendering, document complete, and the arrival of the last resource is a good illustration of why context matters when discussing web performance metrics. Which of these three metrics is the right one to track? There is no single answer: each application is different! Yahoo! engineers chose to optimize the page for incremental loading so that the user can begin consuming the important content early, and to do so they had to apply application-specific knowledge about which content is critical and which can be filled in later.

Keep in mind that different browsers implement different logic for when, and in what order, resources are requested, so the same application may perform differently from one browser to another. Besides the test location, WebPageTest lets you select the browser and its version, so you can evaluate your application's performance across all modern browsers.

The waterfall is a powerful tool that can reveal which optimizations a page or application uses, or lacks. The process of analyzing and optimizing the resource waterfall described above is often called front-end performance optimization. However, the name misleads many into believing that all performance bottlenecks lie on the client. In reality, while JavaScript, CSS, and rendering are critical and resource-intensive steps, server response times and network latency are just as important for optimizing performance. After all, no amount of content optimization helps if the resource is stuck on the network!

To see this in action, we only need to switch from the resource waterfall to the connection view in WebPageTest.

Yahoo.com connection view (WebPageTest)

Unlike the resource waterfall, where each record represents an individual HTTP request, the connection view shows the life of each TCP connection (all 30 of them in this case) used to fetch the resources of the Yahoo! homepage. Does anything stand out? Notice that the download time, highlighted in blue, is only a small fraction of the total time of each connection: there were 15 DNS lookups, 30 TCP handshakes, and a lot of network latency spent waiting for the first byte of each response.

Finally, we have saved the best for last. What many find surprising is at the bottom of the connection view: the bandwidth utilization chart. Except for a few short bursts, utilization of the available connection is very low: we are not limited by the bandwidth of our connection! Is this an anomaly, or worse, a browser bug? Unfortunately, it is neither. It turns out that bandwidth is not the limiting factor here. The bottleneck is the round-trip latency between the client and the server.

Performance Pillars: Computing, Rendering, Networking

Running a web application primarily involves three tasks: fetching resources, page layout and rendering, and JavaScript execution. The rendering and scripting steps follow a single-threaded, interleaved model of execution; concurrent modifications of the resulting Document Object Model (DOM) are not possible. Hence, optimizing how rendering and script execution work together, as we saw in “DOM, CSSOM, and JavaScript”, is of critical importance.

However, optimizing JavaScript execution and the rendering pipeline will not do much good if the browser is blocked on the network, waiting for resources to arrive. Fast and efficient delivery of network resources is the cornerstone of performance for every application running in the browser.

But, one might ask, Internet speeds are getting faster every day, so won't this problem solve itself? Yes, our applications are growing larger, but if the global average speed is already 3.1 Mbps (see “Bandwidth at the edge of the network”) and still growing, why bother, right? Unfortunately, as you might suspect, and as the Yahoo! example shows, if that were the case you would not be reading this article. Let's take a closer look.

More bandwidth doesn't matter (much)

Hold your horses! Of course bandwidth matters! Higher data rates are always good, especially for bulk data transfers: video and audio streaming or any other transfer of large amounts of data. However, when it comes to everyday web browsing, which requires fetching hundreds of relatively small resources from dozens of different hosts, round-trip latency is the limiting factor.

Streaming an HD video from the Yahoo! homepage is bandwidth limited. Loading and rendering the Yahoo! homepage is latency limited.

Depending on the quality and encoding of the video you are trying to stream, you may need anywhere from a few hundred Kbps to several Mbps of bandwidth, for example, 3+ Mbps for an HD 1080p video stream. This data rate is now within reach for many users, as evidenced by the growing popularity of streaming video services such as Netflix. Why, then, would downloading a much smaller web application be such a challenge for a connection capable of streaming an HD movie?

Latency as a performance bottleneck

Let's look at a quantitative analysis of how page load time depends on bandwidth and latency, carried out by Mike Belshe, one of the creators of the SPDY protocol and one of the developers of HTTP 2.0:

In the first test, the round-trip latency is held fixed while the bandwidth increases from 1 to 10 Mbps. Upgrading from 1 to 2 Mbps significantly reduces page load time, but each further increase yields diminishing returns: upgrading from 5 to 10 Mbps improves page load time by a mere 5%.

The latency experiment, however, tells an entirely different story: for every 20 ms reduction in latency, page load time improves linearly!

To speed up the Internet at large, we should look for more ways to bring down round-trip times. What if we could reduce cross-Atlantic round-trip times from 150 ms to 100 ms? This would have a larger effect on the speed of the Internet than increasing a user's bandwidth from 3.9 Mbps to 10 Mbps or even to 1 Gbps. Another approach to reducing page load times is to reduce the number of round trips required per page load. Today, web pages require a certain amount of back and forth between the client and the server. The number of round trips is largely due to the handshakes needed to start communicating between client and server (for example, DNS, TCP, HTTP), and also to round trips induced by the communication protocols (for example, TCP slow start). If we can improve protocols to transfer this data with fewer round trips, we should also be able to improve page load times. This is one of the goals of SPDY.

Mike Belshe.
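The shape of these two results can be reproduced with a deliberately crude model. It is not Belshe's methodology and uses none of his data. The model charges a full RTT for every round trip and then transfers the page bytes at the link rate. Real page loads overlap requests and are shaped by TCP slow start, so the absolute numbers mean nothing; only the trends matter. All names and values are hypothetical.

```javascript
// Toy model: latency term grows linearly with RTT; transfer term shrinks as 1/bandwidth.
function toyPageLoadMs({ roundTrips, rttMs, bytes, bandwidthMbps }) {
  const latencyMs = roundTrips * rttMs;
  const transferMs = (bytes * 8 * 1000) / (bandwidthMbps * 1e6);
  return latencyMs + transferMs;
}

const page = { roundTrips: 30, bytes: 500000 }; // hypothetical page

// Bandwidth: each upgrade helps less than the previous one.
for (const bandwidthMbps of [1, 2, 5, 10]) {
  console.log(bandwidthMbps + " Mbps:", toyPageLoadMs({ ...page, rttMs: 100, bandwidthMbps }));
}
// Latency: every 20 ms of RTT removed saves the same amount of time.
for (const rttMs of [200, 180, 160, 140]) {
  console.log(rttMs + " ms RTT:", toyPageLoadMs({ ...page, rttMs, bandwidthMbps: 5 }));
}
```

Even in this simplified form, the latency term dominates once bandwidth passes a few Mbps. Reducing round trips or RTT then pays off more than extra bandwidth does.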

These results surprise many, but they should not: they are a direct consequence of the performance characteristics of the underlying protocols, namely TCP handshakes, flow and congestion control, and head-of-line blocking due to packet loss. Most HTTP data flows consist of small, bursty transfers, whereas TCP is optimized for long-lived connections and bulk data transfers. Consequently, network latency is also the performance bottleneck for HTTP and for most web applications delivered over it.

If latency is the limiting factor for most wired connections, it is an even more important bottleneck for wireless clients: wireless latencies are significantly higher, which makes network optimization a critical priority for web performance.

Synthetic and real-user performance measurement

If we can measure it, we can improve it. The question is: are we measuring the right criteria, and is the process sound? As we noted earlier, measuring the performance of a modern web application is not trivial: there is no single metric that holds for every application, which means we must carefully define custom metrics in each case. Then, once the criteria are established, we need to gather performance data, which is done through a combination of synthetic and real-user measurement.

Broadly speaking, synthetic testing refers to any process with a controlled measurement environment: a local build process running through a performance suite, load testing against staging infrastructure, or a set of geo-distributed monitoring servers that periodically perform scripted actions and log the outcomes. Each of these tests may exercise a different part of the infrastructure (for example, application server throughput, database performance, DNS timing, and so on), and serves as a stable baseline for detecting regressions or narrowing in on a specific component of the system.

Well-configured synthetic testing provides a controlled and reproducible test environment, which makes it a great fit for identifying and fixing performance regressions before they reach the user. Tip: identify your key performance metrics and set a “budget” for each one as part of your synthetic testing. If the budget is exceeded, raise an alarm!
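A minimal sketch of the budget tip above, as it might run at the end of a synthetic test. The metric names, limits, and measured values are hypothetical; use whatever milestones matter for your application. A metric that was not measured at all is reported too, so a broken test cannot silently pass.

```javascript
// Compare measured metrics (ms) against per-metric budgets (ms).
function checkBudget(measured, budget) {
  const violations = [];
  for (const [metric, limitMs] of Object.entries(budget)) {
    const value = measured[metric];
    if (value === undefined) {
      violations.push({ metric, reason: "not measured" });
    } else if (value > limitMs) {
      violations.push({ metric, value, limitMs });
    }
  }
  return violations;
}

const budget = { timeToFirstByte: 200, startRender: 1000, documentComplete: 3000 };
const run = { timeToFirstByte: 180, startRender: 1250, documentComplete: 2900 };

const violations = checkBudget(run, budget);
if (violations.length > 0) {
  // In a CI pipeline, this is where you would fail the build or notify the team.
  console.error("Performance budget exceeded:", violations);
}
```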

However, synthetic testing is not sufficient to identify all performance bottlenecks. Specifically, the measurements it produces do not capture the diversity of real-world factors that determine the user's actual experience with the application.

Some contributing factors to this include:

- Scenario and page selection: replicating real user navigation patterns is hard.

- Browser cache: performance may vary widely depending on the state of the user's cache.

- Intermediaries: performance may vary depending on intermediate proxies and caches.

- Diversity of hardware: a wide range of CPU, GPU, and memory performance.

- Diversity of browsers: a wide range of browser versions, both old and new.

- Connectivity: the bandwidth and latency of real connections change continuously.

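The combination of these and similar factors means that, in addition to synthetic testing, we must extend our performance strategy with real-user measurement (RUM) to capture the actual performance of our application as experienced by the user. The good news is that the W3C Web Performance Working Group has made this part of data gathering simple by introducing the Navigation Timing API, which is now supported by many modern desktop and mobile browsers.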

Navigation Timing

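The real benefit of Navigation Timing is that it exposes a lot of previously inaccessible data, such as DNS and TCP connect times, with high precision (microsecond timestamps), via a standardized performance.timing object in each browser.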

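Hence, the data-gathering process is very simple: load the page, grab the timing object from the user's browser, and beacon it back to your analytics servers. By capturing this data, we can observe the real-world performance of our application as seen by real users, on real hardware, and across a wide variety of networks.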
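A sketch of that process follows. It derives a few metrics from an object that uses the Navigation Timing Level 1 attribute names (the shape of `performance.timing`) and beacons them with `navigator.sendBeacon`. The `rumMetrics` helper and the `/rum` endpoint are hypothetical. The browser-only part is guarded, so the helper can also be tested outside a browser.

```javascript
// Derive a few RUM metrics (ms) from Navigation Timing Level 1 attributes.
function rumMetrics(t) {
  return {
    dns: t.domainLookupEnd - t.domainLookupStart,
    tcp: t.connectEnd - t.connectStart,
    ttfb: t.responseStart - t.navigationStart,
    domContentLoaded: t.domContentLoadedEventEnd - t.navigationStart,
    pageLoad: t.loadEventEnd - t.navigationStart,
  };
}

// In a browser: loadEventEnd is still 0 while "load" handlers run, so defer the
// beacon by one task. "/rum" is a hypothetical collection endpoint.
if (typeof window !== "undefined" && typeof navigator !== "undefined" && navigator.sendBeacon) {
  window.addEventListener("load", () => {
    setTimeout(() => {
      navigator.sendBeacon("/rum", JSON.stringify(rumMetrics(performance.timing)));
    }, 0);
  });
}
```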


igvita.com



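Finally, in addition to Navigation Timing, the W3C Performance Group has standardized two other APIs: User Timing and Resource Timing. Whereas Navigation Timing provides performance timers for the root document only, Resource Timing provides similar performance data for each resource on the page, allowing us to gather the full performance profile of the page. Similarly, User Timing provides a simple JavaScript API to mark and measure application-specific performance metrics with the help of the same high-resolution timers: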

```javascript
function init() {
  performance.mark("startTask1"); // 1
  applicationCode1(); // 2 (placeholder for your own application code)
  performance.mark("endTask1");
  // Marks are instantaneous (duration 0); a measure records the time between them.
  performance.measure("task1", "startTask1", "endTask1");

  logPerformance();
}

function logPerformance() {
  var perfEntries = performance.getEntriesByType("mark")
    .concat(performance.getEntriesByType("measure"));
  for (var i = 0; i < perfEntries.length; i++) { // 3
    console.log("Name: " + perfEntries[i].name +
      " Entry Type: " + perfEntries[i].entryType +
      " Start Time: " + perfEntries[i].startTime +
      " Duration: " + perfEntries[i].duration + "\n");
  }
  console.log(performance.timing); // 4
}
```

1. Record a timestamp (a mark) under the given name.

2. Execute the application code.

3. Iterate over the recorded entries and log them.

4. Log the Navigation Timing object for the current page.
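The mark/measure pattern from the example above can be wrapped in a small reusable helper. The sketch relies only on `performance.mark`, `performance.measure`, and `performance.getEntriesByName`. These are available in browsers and as a global in Node.js 16+. The helper names `timed` and `lastDurationMs` are our own.

```javascript
// Run fn() between two marks and record a measure named `name`.
function timed(name, fn) {
  const startMark = name + ":start";
  const endMark = name + ":end";
  performance.mark(startMark);
  try {
    return fn();
  } finally {
    performance.mark(endMark);
    performance.measure(name, startMark, endMark);
  }
}

// Duration (ms) of the most recent measure with this name, if any.
function lastDurationMs(name) {
  const measures = performance.getEntriesByName(name, "measure");
  return measures.length > 0 ? measures[measures.length - 1].duration : undefined;
}

const sum = timed("sumToMillion", () => {
  let total = 0;
  for (let i = 0; i < 1e6; i++) total += i;
  return total;
});
console.log(sum, lastDurationMs("sumToMillion").toFixed(2) + " ms");
```

Because the measure is recorded in a `finally` block, it is captured even when the timed code throws. The measured durations can then be beaconed alongside the Navigation Timing data.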

The combination of the Navigation, Resource, and User Timing APIs provides all the tools needed to conduct real-user performance measurement for any application; there is no longer any excuse not to do it right. We optimize what we measure, and RUM and synthetic testing are complementary approaches that help you identify regressions and real-world bottlenecks in the performance and user experience of your application.

Custom, application-specific metrics are the key to a sound performance strategy. There is no universal way to measure or define the quality of the user experience. Instead, we must define and instrument specific milestones and events in each application, a process that requires collaboration between all the stakeholders in the project: business owners, designers, and developers.

Browser Optimization

We would be remiss not to mention that a modern browser is much more than a simple socket manager. Performance is one of the main competitive features of every browser, so it is no surprise that browsers are getting smarter every day: pre-resolving likely DNS lookups, pre-connecting to likely hosts, pre-fetching and prioritizing resources on the page, and much more.

The exact list of optimizations differs from browser to browser, but they can all be grouped into two broad classes:

Document-aware optimization

The networking stack is integrated with the document, CSS, and JavaScript parsing pipelines to help identify and prioritize critical network resources, dispatch them early, and get the page to an interactive state as soon as possible. This is often done through resource priority assignments, lookahead parsing, and similar techniques.

Speculative optimization

The browser may learn user navigation patterns over time and perform speculative optimizations that try to predict the user's likely actions, such as pre-resolving DNS names, pre-connecting to likely hosts, and so on.

The good news is that all of these optimizations are performed automatically on our behalf and often save hundreds of milliseconds. Still, it is important to understand how and why they work, because we can help the browser load our applications even faster.

Most browsers employ four techniques:

Resource pre-fetching and prioritization

Document, CSS, and JavaScript parsers may pass additional information to the network stack to indicate the relative priority of each resource: blocking resources required for the first render are requested first, while low-priority requests may be held back in a queue.

DNS pre-resolve

Likely hostnames are resolved in advance to avoid DNS latency on a future HTTP request. The browser can pick these hostnames from the navigation history, from user actions such as hovering over a link, and from other signals on the page.

TCP pre-connect

Once a hostname has been resolved to an IP address, the browser may speculatively open a TCP connection in anticipation of an HTTP request. If it guesses right, it eliminates another full round trip (the TCP handshake) of network latency.

Page pre-rendering

Some browsers can render a likely next page in a hidden tab, which is then swapped in instantly when the user initiates the navigation.
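The first of these techniques, prioritization, boils down to reordering discovered resources so that blocking ones go first. The priority classes and numbers below are hypothetical. Real browsers use their own, more detailed schemes, and those schemes change over time.

```javascript
// Hypothetical priority classes: lower number = fetched earlier.
const PRIORITY = { css: 0, "blocking-js": 0, font: 1, "async-js": 2, image: 3 };

// Order discovered resources by priority, keeping document order within a class.
function fetchOrder(discovered) {
  return discovered
    .map((resource, index) => ({ ...resource, index }))
    .sort((a, b) => PRIORITY[a.type] - PRIORITY[b.type] || a.index - b.index)
    .map((resource) => resource.url);
}

console.log(fetchOrder([
  { url: "/hero.jpg", type: "image" },
  { url: "/app.css", type: "css" },
  { url: "/analytics.js", type: "async-js" },
  { url: "/main.js", type: "blocking-js" },
]));
// [ '/app.css', '/main.js', '/analytics.js', '/hero.jpg' ]
```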

From the outside, the network stack of a modern browser looks like a simple mechanism for fetching content, but on the inside it is an elaborate and fascinating system, and studying it helps us optimize web performance.

So how can we help the browser? To start, pay close attention to the structure of each page:

- Critical resources such as CSS and JavaScript should be discoverable as early as possible in the document.

- CSS should be delivered as early as possible to unblock rendering and JavaScript execution.

- Noncritical JavaScript should be deferred to avoid blocking DOM and CSSOM construction.

- HTML is parsed incrementally, so the server should flush the document periodically for best performance.
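Some of these rules can be checked automatically, for example in a build step. The sketch below is a rough, regex-based check, not a real HTML parser. It flags stylesheets that appear after a parser-blocking script, because that script delays their discovery. The function name is our own. Scripts marked `async` or `defer`, and module scripts (deferred by default), are treated as non-blocking.

```javascript
// Return the <link rel=stylesheet> tags that follow a parser-blocking <script>.
function stylesheetsAfterBlockingScripts(html) {
  const warnings = [];
  let seenBlockingScript = false;
  for (const [tag] of html.matchAll(/<(?:link|script)\b[^>]*>/gi)) {
    if (/^<script/i.test(tag)) {
      const nonBlocking =
        /\s(?:async|defer)\b/i.test(tag) || /type\s*=\s*["']?module/i.test(tag);
      if (!nonBlocking) seenBlockingScript = true;
    } else if (/rel\s*=\s*["']?stylesheet/i.test(tag) && seenBlockingScript) {
      warnings.push(tag);
    }
  }
  return warnings;
}

const head = '<script src="/app.js"></script><link rel="stylesheet" href="/site.css">';
console.log(stylesheetsAfterBlockingScripts(head)); // [ '<link rel="stylesheet" href="/site.css">' ]
```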

In addition to optimizing the page structure, we can embed hints in the document itself to tip off the browser about further optimizations it can perform on our behalf:

```html
<link rel="dns-prefetch" href="//hostname_to_resolve.com">    <!-- 1 -->
<link rel="subresource" href="/javascript/myapp.js">          <!-- 2 -->
<link rel="prefetch" href="/images/big.jpeg">                 <!-- 3 -->
<link rel="prerender" href="//example.org/next_page.html">    <!-- 4 -->
```

1. Pre-resolve the specified hostname.

2. Fetch a critical resource that appears later in the page (a Chrome-specific hint at the time; the standard equivalent today is rel="preload").

3. Prefetch a resource for the current or a future navigation.

4. Prerender the specified page in anticipation of the user's next action.

Each of these is a hint for a speculative optimization. The browser does not guarantee that it will act on a hint, but it may use it to refine its loading strategy. Unfortunately, not all browsers support all of these hints.

For most users, and even for most web developers, DNS, TCP, and SSL delays are invisible: they are negotiated at network layers to which few of us descend. Yet each of these steps is critical to the overall user experience, since each extra network round trip adds tens or hundreds of milliseconds of latency. By helping the browser anticipate these round trips, we can remove these bottlenecks and deliver much faster and better web applications.

Optimizing time to first byte for Google Search

The HTML document is parsed incrementally, which means that the server can and should flush available markup as often as possible. This lets the client discover and start fetching critical resources sooner.

Google Search offers one of the best examples of this technique: the server flushes the static header of the results page right away, before the query has even been processed. Then, while the client's browser parses the header markup, the query is dispatched to the search index, and the rest of the document, including the search results, is delivered to the user once the results are ready. At that point, dynamic parts of the header, such as the name of the logged-in user, are filled in with JavaScript.
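Here is a minimal sketch of the same idea, written as an async generator. The generator dispatches the query immediately, yields the static header without waiting for it, and yields the results once they arrive. `searchPage`, `search`, and the markup are hypothetical; this illustrates early flushing and is not Google's implementation.

```javascript
// Escape user-controlled text before inserting it into HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

async function* searchPage(query, search) {
  const pending = search(query); // dispatch the query right away...
  pending.catch(() => {}); // ...without an unhandled rejection before we await it
  // ...but send the static header (and its stylesheet link) without waiting.
  yield '<!doctype html><html><head><link rel="stylesheet" href="/search.css"></head>' +
    "<body><header>Search</header>";
  const results = await pending;
  yield "<ol>" + results.map((r) => "<li>" + escapeHtml(r) + "</li>").join("") +
    "</ol></body></html>";
}

// With Node's http module, each chunk can be written as soon as it is produced
// (queryFrom and search are placeholders for your own code):
//   http.createServer(async (req, res) => {
//     res.writeHead(200, { "Content-Type": "text/html; charset=utf-8" });
//     for await (const chunk of searchPage(queryFrom(req), search)) res.write(chunk);
//     res.end();
//   }).listen(8080);
```

The browser can start fetching `/search.css` while the server is still waiting on the search backend.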


Source: https://habr.com/ru/post/226289/
