eBay data center switches to water-cooled doors

Data center operators keep packing more computing power into each unit of floor area. As they add servers and raise the power density of their racks, the economics of choosing the best cooling method for that environment can change.

That is what happened at eBay, which recently retrofitted a high-density server room in its Phoenix data center. The company moved from in-row air cooling units to water-cooled rear-door cooling units from Motivair. These units cool the waste heat as the servers exhaust it through the back of the rack. Rear-door units have been in use for many years, but they cost more than traditional air cooling.

According to Dean Nelson, vice president of Global Foundation Services at eBay, the math behind cooling investments changes as power density changes. The machine room in Phoenix has 16 rows of racks, with each rack holding 30–35 kW of servers, far beyond the 4–8 kW typical of most racks in enterprise data centers. Cooling this equipment required six in-row air handlers in each row, which meant eBay had to give up six rack positions for cooling gear.

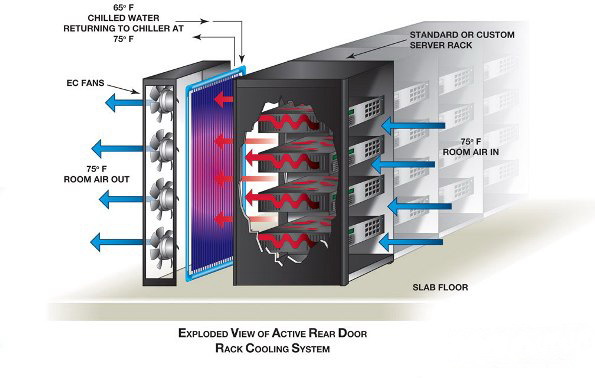

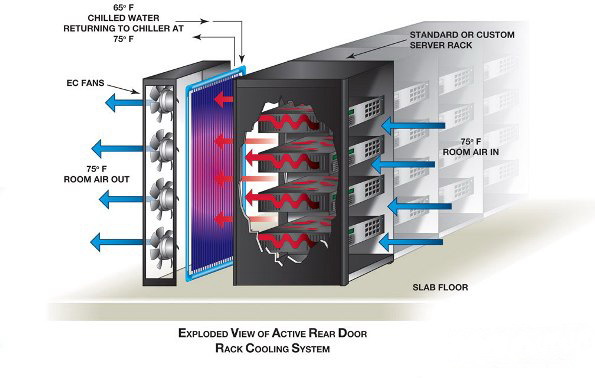

Switching to rear-door units allowed eBay to free up those six rack positions and fill them with servers, increasing the computing power per unit area. Motivair cooling doors are active units with built-in fans that help pull air through the rack and across the unit's cooling coils. Some rear-door coolers are passive and save energy by relying only on the server fans to move air. Nelson said the high power density in Phoenix makes active doors necessary.
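To get a feel for the scale involved, here is a rough back-of-the-envelope sketch in Python. It takes the row count and per-rack power range from the figures above and assumes that six positions were freed in every one of the 16 rows, which the article implies but does not state outright. The function name and constants are illustrative only.

```python
# Back-of-the-envelope estimate of the server capacity regained by
# removing in-row coolers. Figures come from the article; reading
# "six rack positions" as six per row is an assumption.

ROWS = 16                      # rows of racks in the Phoenix room
FREED_POSITIONS_PER_ROW = 6    # assumed: six positions freed in every row
RACK_KW_RANGE = (30, 35)       # kW of servers per rack


def regained_capacity_kw(rows, freed_per_row, rack_kw):
    """Return the additional server power (kW) the freed positions can host."""
    return rows * freed_per_row * rack_kw


low = regained_capacity_kw(ROWS, FREED_POSITIONS_PER_ROW, RACK_KW_RANGE[0])
high = regained_capacity_kw(ROWS, FREED_POSITIONS_PER_ROW, RACK_KW_RANGE[1])
print(f"Freed rack positions: {ROWS * FREED_POSITIONS_PER_ROW}")
print(f"Regained capacity: {low}-{high} kW")
```

Under those assumptions, the freed positions could host roughly 2.9 to 3.4 MW of additional servers, which helps explain why the more expensive doors could pay for themselves.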

Weighing tradeoffs

Motivair doors use high-efficiency EC (electronically commutated) fans, which slightly increased power consumption (12.8 kW versus 11 kW for the in-row units). Even so, the system as a whole uses less energy because it can run on warmer water: it operates at a water temperature of 15 degrees Celsius, versus 7 degrees in many other chilled-water systems.

“We thought the rear doors would cost a lot, but once the six racks were put back into service, the costs paid off,” said Nelson.

The economics also worked out because eBay had designed the facility in advance to support water cooling. Nelson believes that the largest data centers are close to the limit of what conventional air cooling can do, and he sees water cooling as critical to any future increase in equipment density. eBay uses a hot-water cooling system on the roof of its Phoenix data center, which has served as a test site for new designs that the e-commerce giant is rolling out across its data centers.

On the roof, eBay deployed Dell containerized data center modules that can use a water loop at temperatures of up to 30 degrees Celsius and still keep servers within their safe operating range. To let the system run at this higher water temperature, it was designed with an unusually large difference between the server air inlet temperature and the exhaust temperature at the rear of the rack.
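One way to see why a larger air temperature difference helps with warm water: for a fixed heat load, a wider gap between inlet and exhaust means hotter exhaust air, which leaves more margin above a 30-degree water loop, and it also means less airflow is needed. The Python sketch below uses the standard sensible-heat balance (heat = air density × airflow × specific heat × temperature rise). The inlet temperature and the two temperature rises are hypothetical values chosen for illustration, not eBay or Dell figures, and the 30 kW load is borrowed from the Phoenix room purely as an example.

```python
# Sensible-heat sketch: how the inlet-to-exhaust air temperature difference
# affects exhaust temperature and required airflow for one rack.
# Only the 30 C water loop comes from the article's rooftop description;
# the load, inlet temperature, and delta-T values are illustrative.

AIR_DENSITY = 1.2         # kg/m^3, approximate for air near room conditions
AIR_SPECIFIC_HEAT = 1005  # J/(kg*K), approximate


def airflow_m3_per_s(heat_load_w, delta_t_k):
    """Volumetric airflow needed to carry heat_load_w at a given air delta-T."""
    return heat_load_w / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_k)


def exhaust_margin_k(inlet_c, delta_t_k, water_c):
    """How far the rack exhaust air sits above the cooling-water temperature."""
    return inlet_c + delta_t_k - water_c


HEAT_LOAD_W = 30_000   # illustrative 30 kW rack
WATER_C = 30.0         # warm-water loop temperature from the article
INLET_C = 27.0         # hypothetical server inlet temperature

for delta_t in (10.0, 20.0):  # hypothetical air temperature rises
    flow = airflow_m3_per_s(HEAT_LOAD_W, delta_t)
    margin = exhaust_margin_k(INLET_C, delta_t, WATER_C)
    print(f"delta-T {delta_t:.0f} K: airflow {flow:.2f} m^3/s, "
          f"exhaust {margin:.0f} K above water")
```

Doubling the temperature rise halves the airflow needed for the same load and raises the exhaust temperature further above the water loop, which is the kind of headroom a coil needs to reject heat to warm water.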

The photo below shows the finished room at the Phoenix facility:

Source: https://habr.com/ru/post/226035/
