In-Memory OLTP in SQL Server 2014. Part II

In the first part, we briefly reviewed the basic SQL Server in-memory processing capabilities applied to analytics and transactional and applications, focusing on the latter, since in-memory OLTP (Hekaton) is the most significant innovation in SQL Server 2014. In this article we will continue our review. functionality of Hecaton on the example of a previously created database.

Since we are talking about transactional processing in memory, it is useful to recall the acronym ACID: atomicity, integrity, isolation and residuality (effect), specifically letter I. Since it is expensive to achieve complete isolation, isolation levels that allow some degree of compromise are maintained in the DBMS . This is a classic of the genre. As you approach the ideal, SQL Server supports: dirty read (violations of the form are allowed: the first transaction changes data, the second reads changes, the first rolls back, it turns out that the second read the non-existent), read committed (perhaps a non-repeating read: the first transaction may change the data in read scale second, and second time second transaction reads already changed data), repeatable read (first transaction cannot change records at second scale, but can insert new ones - phantoms), serializable (most strict - phantoms do not go away). Once upon a time, when the pages in SQL Server were 2 kilobytes, it only supported page locks, so the last two levels were synonymous. Full write lock support appeared in 7.0 (1998). In 2005, a snapshot of Isolation was added to the listed levels, which, strictly speaking, is not an isolation level, but turns SQL Server from a blocker into a versioner. Just snapshot is the main for Hecaton, because optimistic concurrency is the most natural approach when working with objects in memory. It means in its essence, since record versions are not stored in tempdb in this case. Each write operation has a continuously increasing transaction number used for subsequent reads. Uncommitted records are stored in memory, but are not visible until a commit, therefore there are no dirty reads. Old records are deleted during the garbage collection process and free up memory.

Also in Hecaton, Repeatable Read is supported, which (unlike the disk version) does not block anyone. When a re-read occurs somewhere at the end of a transaction, if the records have changed, the transaction is canceled with the error 41305 “The current transaction failed to complete the message”. Serializable works the same way - in case of phantoms, the transaction is canceled.

The isolation level is specified in the ATOMIC block of a natively compiled stored procedure (see below) or in the table hint of a T-SQL query. Alternatively, with the help of the MEMORY_OPTIMIZED_ELEVATE_TO_SNAPSHOT database option, which, when accessing tables in memory, raises Read UnCommitted / Committed to Snapshot. If usual to write

there will be an error Msg 41333, Level 16, State 1, Line 3

The following transactions are compiled in the procedures underneath the transaction.

')

In the T-SQL script, the isolation level is set by the hint:

Theoretically, Read Committed for memory optimized tables is also supported, but only in the case of autocommit (single statement) transactions.

The cost of blocking is minimal, for which, in fact, everything was started.

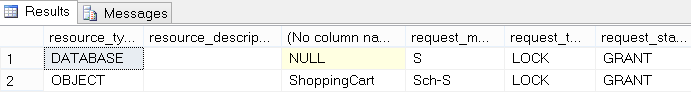

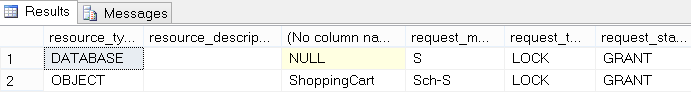

Please note that Hecaton "hangs" only Schema Stability on the table. There are no X and IX in mention:

Pic1

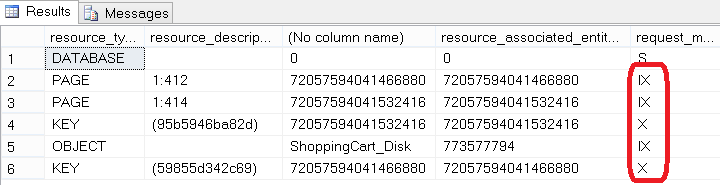

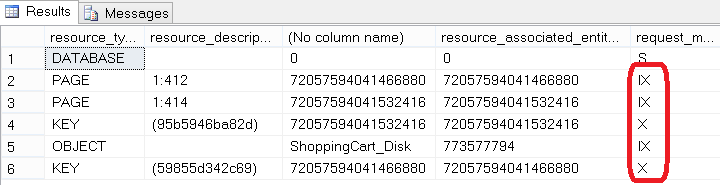

Let us compare for comparison what happens in the case of traditional tables. Create a similar disk table, insert the same rowset as prefilling and repeat the transaction:

What is called, feel the difference:

Pic2

Minimal blocking is one of the obvious advantages of Hecaton. The application will benefit from the transfer to memory if it is made in the style of OLTP, i.e. characterized by relatively short transactions with a high percentage of competition from multiple connections, and the problem is that locks or physical locks hang for a long time and do not release resources. Porting will be easier if stored procedures are used, and performance problems can be distinguished into a relatively small subset of tables / stored procedures. Of course, not all scenarios win. The limiting case is one table of one record, which everyone updates with a new value. The desire to shove anything and everything into memory will lead to the fact that it will end stupidly. By the way, they often ask the question: what will happen if Hecaton consumes all the memory allocated to him? In general, it is clear that there is nothing good: Msg 701, Level 17, State 103. There is a pool of default for the query. What to do in this case is usually nothing. SQL Server clears the memory pretty quickly, and on the release I have not yet managed to drive it into a stupor, which can only be treated with a restart (as opposed to PP). Garbage collection is designed to be non-blocking and cooperative, which means that despite a special thread for this purpose, most of the work is done by user processes that check and mark entries for later removal from time to time and then delete. Specialized flow is enabled when user activity is very small.

How to avoid overflow in the process? Obviously, it is correct to evaluate reality when tables are transferred to memory, especially since nobody canceled sp_spaceused.

You can use the Memory Optimization Advisor, which performs static analysis of the schema, indexes, etc. for potential problems, and more intelligent AMR (Analysis, Migrate and Report) for dynamic analysis on the nature of use (takes statistics from

Performance Data Warehouse). You can preemptively limit Hecaton using the resource controller:

The influence of the Resource Governor applies only to the memory allocated by the In-Memory OLTP allocators, i.e. if there are no memory optimized objects in the database, the memory is not removed from the resource pool. The in-memory OLTP engine is integrated into a common SQL Server Memory Manager, which you can verify by running

The first friend will be the memory allocated by the system, the last (memory_node = 64) is the dedicated administrative connection, and between them the user databases with MEMORY_OPTIMIZED_DATA.

As noted in the first part, it is completely unnecessary to thrust the entire base into memory. Possible combined queries between tables in memory and familiar tables, for example,

Script 1

In addition to tables, stored procedures can be stored in memory. Such procedures can work only with in-memory tables. The procedure is necessarily created with the SCHEMABINDING option, which means that the tables referenced by the procedure cannot be modified or deleted before it. Traditional default procedures are performed with the EXECUTE AS CALLER option. For procedures in memory, it is not supported. The options are: EXECUTE AS OWNER, EXECUTE AS 'user_name' or EXECUTE AS SELF (creator). There are other limitations as well. See BOL , “Stored procedures compiled into native code.”

The body of the procedure is in the BEGIN ATOMIC block from the ANSI SQL standard, and at the moment this is its only use in T-SQL. It differs from BEGIN TRAN in that it automatically rolls back on an error, while TRY / CATCH and ROLLBACK must be used in a transaction, since About SET XACT_ABORT ON there are nuances. The construction options are the TRANSACTION ISOLATION LEVEL = SNAPSHOT | REPEATABLEREAD | SERIALIZABLE and LANGUAGE, as which you can use any language from sys.languages. It defines the date | time format and the language of the system messages.

Script 2

As in the case of tables, a native compilation takes place for the procedures, which turns the interpreted T-SQL commands into C code and then into machine code, so if we now repeat the query Script 4 from the previous part , we will see that the 3rd dll, corresponding to the newly created procedure.

Performance is the key to using objects in memory. For comparison, we will create a similar stored procedure of the traditional storage method, which will also insert the specified number of records in the same way, but into the disk table:

Script 3

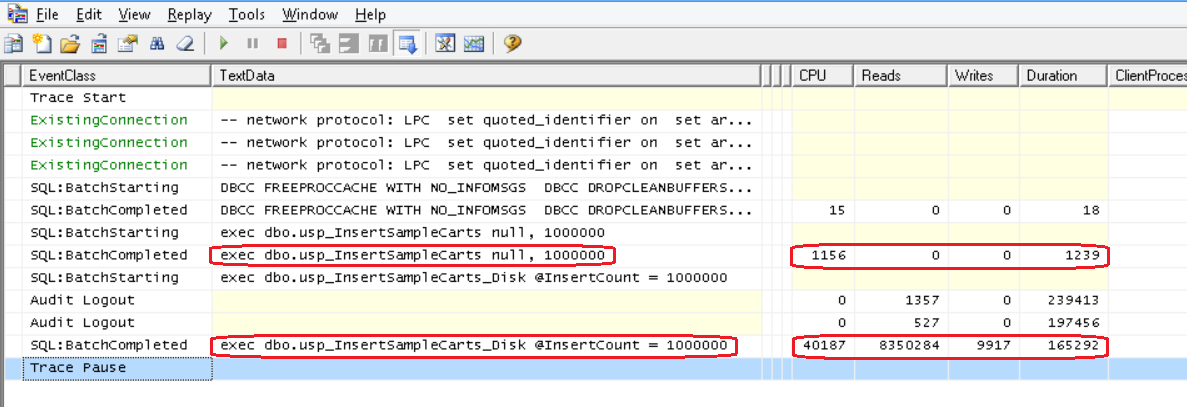

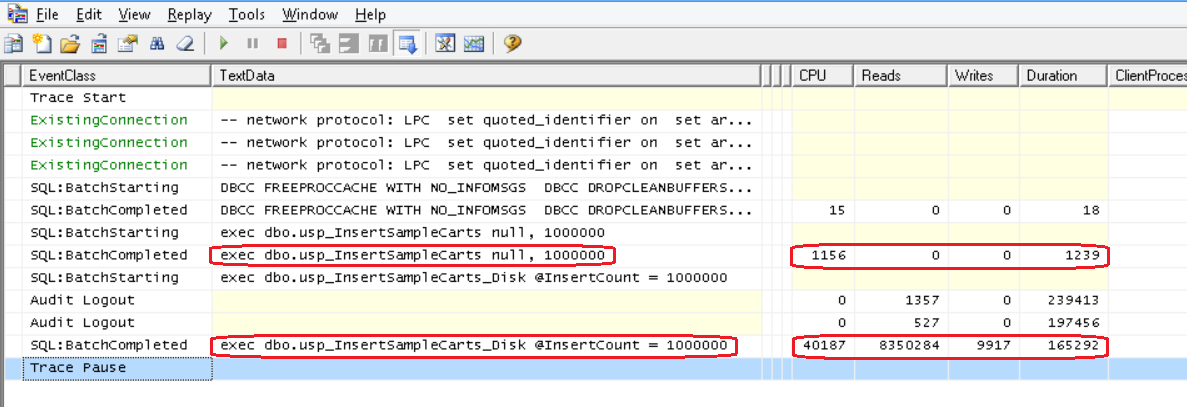

And put in and out there with a million entries:

As they say, the difference is obvious:

Pic.3

Of the features it should be noted that SQL Server 2014 does not support automatic recompilation of native stored procedures. The plan is fixed. Parameter sniffing is not used, all parameters rely unknown. Instead of recompilation, you may have to delete and recreate the procedure when the data changes (to generate a new plan). It may be enough recompilation that occurs when the server starts, moves to the backup node, etc. It is clear that the newer the statistics on the tables, the more correctly the plan will be constructed, and the statistics, as we remember from the first part, are not automatically updated. Must be done manually using UPDATE STATISTICS or sp_updatestats. The latter always updates statistics for memory-optimized tables (for normal tables, as needed).

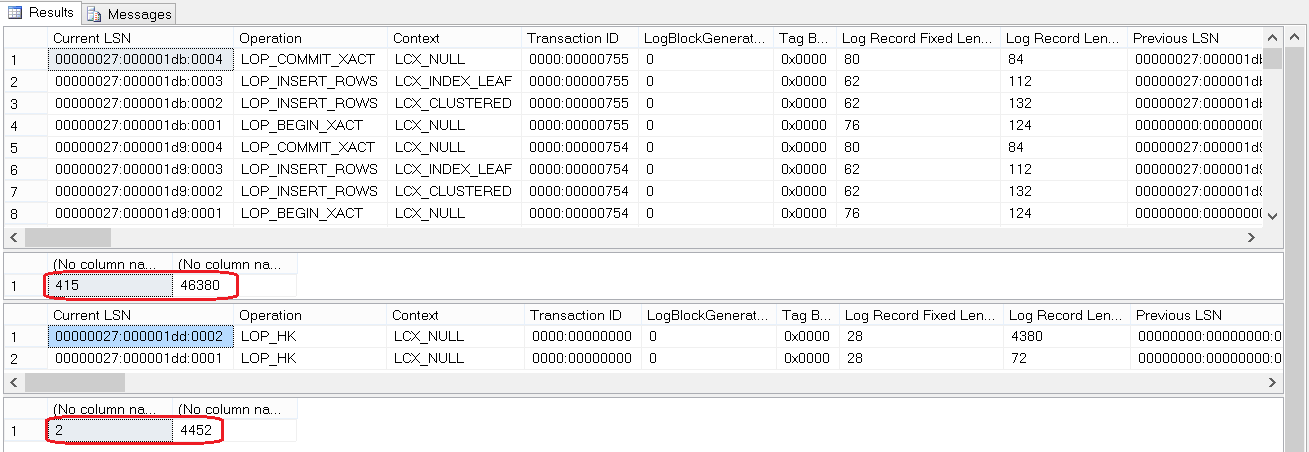

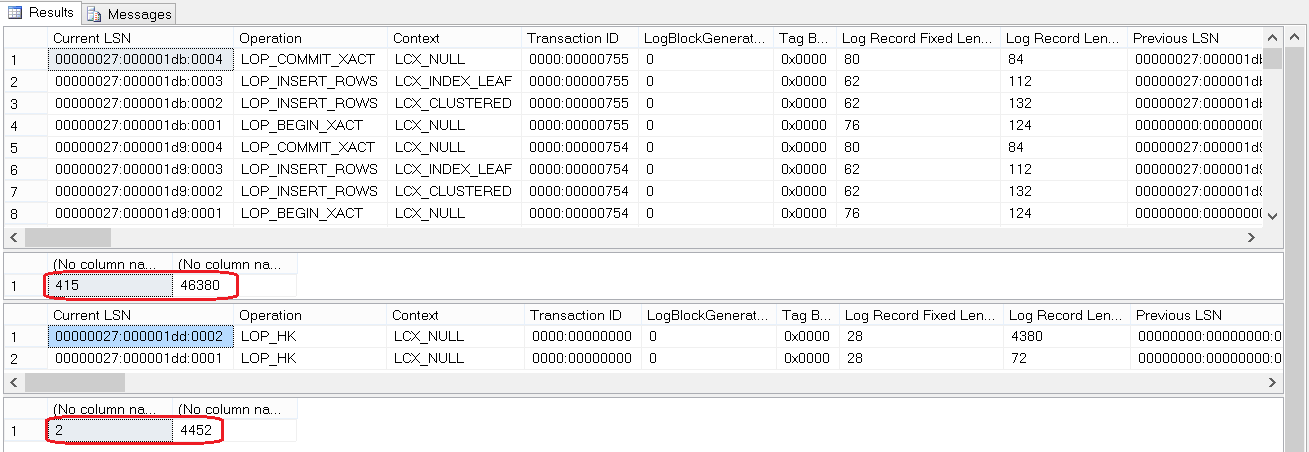

From visual things let's see more difference in journaling. In the classic version, if we write a million records to a table with a nonclustered index (and updates touch some column of this index), we will get two million log entries, which naturally affects the performance. In addition (write-ahead logging), all of this is put into the log buffer immediately and in loaded systems leads to high competition for the buffer. In the case of Hekaton, uncommitted transactions are not flushed to disk at all, so there is no need to store undo information or aggressively insert into the log buffer. Index operations are not logged — they are not stored between restarts. Only one consolidated log entry is created during commit, which contains the necessary information about all the details of the changes, for all the entries affected by the transaction. We look.

Pic.4

And we see that the insertion of 100 records in the case of in-memory tables is logged in the form of only two log entries of the type LOP_HK (Log Operation Hekaton). As already noted, these records are consolidated. You can see what they are really revealed in using the new undocumented function that I borrowed from Kalen Delaney. As the Current LSN, we set the values from the corresponding column of the third resultset in Figure 4:

Pic.5

The first record, as we see, consists of 102 records: begin tx, 100 inserts, commit. Despite this, their total volume (see the second and fourth results of Fig.4) is 10 times more compact than in the case of a disk table. If you take a non-durable table (DURABILITY = SCHEMA_ONLY), there will be no journaling at all.

Since we are talking about transactional processing in memory, it is useful to recall the acronym ACID: atomicity, integrity, isolation and residuality (effect), specifically letter I. Since it is expensive to achieve complete isolation, isolation levels that allow some degree of compromise are maintained in the DBMS . This is a classic of the genre. As you approach the ideal, SQL Server supports: dirty read (violations of the form are allowed: the first transaction changes data, the second reads changes, the first rolls back, it turns out that the second read the non-existent), read committed (perhaps a non-repeating read: the first transaction may change the data in read scale second, and second time second transaction reads already changed data), repeatable read (first transaction cannot change records at second scale, but can insert new ones - phantoms), serializable (most strict - phantoms do not go away). Once upon a time, when the pages in SQL Server were 2 kilobytes, it only supported page locks, so the last two levels were synonymous. Full write lock support appeared in 7.0 (1998). In 2005, a snapshot of Isolation was added to the listed levels, which, strictly speaking, is not an isolation level, but turns SQL Server from a blocker into a versioner. Just snapshot is the main for Hecaton, because optimistic concurrency is the most natural approach when working with objects in memory. It means in its essence, since record versions are not stored in tempdb in this case. Each write operation has a continuously increasing transaction number used for subsequent reads. Uncommitted records are stored in memory, but are not visible until a commit, therefore there are no dirty reads. Old records are deleted during the garbage collection process and free up memory.

Also in Hecaton, Repeatable Read is supported, which (unlike the disk version) does not block anyone. When a re-read occurs somewhere at the end of a transaction, if the records have changed, the transaction is canceled with the error 41305 “The current transaction failed to complete the message”. Serializable works the same way - in case of phantoms, the transaction is canceled.

The isolation level is specified in the ATOMIC block of a natively compiled stored procedure (see below) or in the table hint of a T-SQL query. Alternatively, with the help of the MEMORY_OPTIMIZED_ELEVATE_TO_SNAPSHOT database option, which, when accessing tables in memory, raises Read UnCommitted / Committed to Snapshot. If usual to write

SET TRANSACTION ISOLATION LEVEL SERIALIZABLE BEGIN TRAN UPDATE dbo.UserSession SET ShoppingCartId=3 WHERE SessionId=4 there will be an error Msg 41333, Level 16, State 1, Line 3

The following transactions are compiled in the procedures underneath the transaction.

')

In the T-SQL script, the isolation level is set by the hint:

BEGIN TRAN UPDATE dbo.UserSession WITH (SNAPSHOT) SET ShoppingCartId=3 WHERE SessionId=4 UPDATE dbo.ShoppingCart WITH (SNAPSHOT) SET TotalPrice=100.00 WHERE ShoppingCartId=3 COMMIT (1 row(s) affected) (1 row(s) affected) Theoretically, Read Committed for memory optimized tables is also supported, but only in the case of autocommit (single statement) transactions.

The cost of blocking is minimal, for which, in fact, everything was started.

BEGIN TRAN INSERT dbo.ShoppingCart VALUES (5,500,GETUTCDATE(),50.5) SELECT resource_type, resource_description, object_name(resource_associated_entity_id), request_mode, request_type, request_status FROM sys.dm_tran_locks WHERE request_session_id = @@SPID Please note that Hecaton "hangs" only Schema Stability on the table. There are no X and IX in mention:

Pic1

Let us compare for comparison what happens in the case of traditional tables. Create a similar disk table, insert the same rowset as prefilling and repeat the transaction:

CREATE TABLE dbo.ShoppingCart_Disk ( ShoppingCartId int not null primary key, UserId int not null, CreatedDate datetime2 not null, TotalPrice money, INDEX ixUserid nonclustered (UserId)) ... BEGIN TRAN INSERT dbo.ShoppingCart_Disk VALUES (5,500,GETUTCDATE(),50.5) SELECT resource_type, resource_description, case resource_type when 'object' then object_name(resource_associated_entity_id) else cast(resource_associated_entity_id as sysname) end, resource_associated_entity_id, request_mode, request_type, request_status FROM sys.dm_tran_locks WHERE request_session_id = @@SPID What is called, feel the difference:

Pic2

Minimal blocking is one of the obvious advantages of Hecaton. The application will benefit from the transfer to memory if it is made in the style of OLTP, i.e. characterized by relatively short transactions with a high percentage of competition from multiple connections, and the problem is that locks or physical locks hang for a long time and do not release resources. Porting will be easier if stored procedures are used, and performance problems can be distinguished into a relatively small subset of tables / stored procedures. Of course, not all scenarios win. The limiting case is one table of one record, which everyone updates with a new value. The desire to shove anything and everything into memory will lead to the fact that it will end stupidly. By the way, they often ask the question: what will happen if Hecaton consumes all the memory allocated to him? In general, it is clear that there is nothing good: Msg 701, Level 17, State 103. There is a pool of default for the query. What to do in this case is usually nothing. SQL Server clears the memory pretty quickly, and on the release I have not yet managed to drive it into a stupor, which can only be treated with a restart (as opposed to PP). Garbage collection is designed to be non-blocking and cooperative, which means that despite a special thread for this purpose, most of the work is done by user processes that check and mark entries for later removal from time to time and then delete. Specialized flow is enabled when user activity is very small.

How to avoid overflow in the process? Obviously, it is correct to evaluate reality when tables are transferred to memory, especially since nobody canceled sp_spaceused.

You can use the Memory Optimization Advisor, which performs static analysis of the schema, indexes, etc. for potential problems, and more intelligent AMR (Analysis, Migrate and Report) for dynamic analysis on the nature of use (takes statistics from

Performance Data Warehouse). You can preemptively limit Hecaton using the resource controller:

CREATE RESOURCE POOL mem_optpool WITH (MAX_MEMORY_PERCENT = ) EXEC sp_xtp_bind_db_resource_pool 'mydatabase', 'mem_optpool' The influence of the Resource Governor applies only to the memory allocated by the In-Memory OLTP allocators, i.e. if there are no memory optimized objects in the database, the memory is not removed from the resource pool. The in-memory OLTP engine is integrated into a common SQL Server Memory Manager, which you can verify by running

select type, name, memory_node_id, pages_kb/1024 as pages_MB from sys.dm_os_memory_clerks where type like '%xtp%' The first friend will be the memory allocated by the system, the last (memory_node = 64) is the dedicated administrative connection, and between them the user databases with MEMORY_OPTIMIZED_DATA.

As noted in the first part, it is completely unnecessary to thrust the entire base into memory. Possible combined queries between tables in memory and familiar tables, for example,

select m1.ShoppingCartId, m2.UserId, d.CreatedDate, d.TotalPrice from ShoppingCart m1 join ShoppingCart_Disk d on m1.ShoppingCartId = d.ShoppingCartId join UserSession m2 on d.ShoppingCartId = m2.ShoppingCartId delete from ShoppingCart insert ShoppingCart select * from ShoppingCart_Disk update d set TotalPrice = m.TotalPrice from ShoppingCart_Disk d join ShoppingCart m on d.ShoppingCartId = m.ShoppingCartId where m.UserID <= 100 Script 1

In addition to tables, stored procedures can be stored in memory. Such procedures can work only with in-memory tables. The procedure is necessarily created with the SCHEMABINDING option, which means that the tables referenced by the procedure cannot be modified or deleted before it. Traditional default procedures are performed with the EXECUTE AS CALLER option. For procedures in memory, it is not supported. The options are: EXECUTE AS OWNER, EXECUTE AS 'user_name' or EXECUTE AS SELF (creator). There are other limitations as well. See BOL , “Stored procedures compiled into native code.”

The body of the procedure is in the BEGIN ATOMIC block from the ANSI SQL standard, and at the moment this is its only use in T-SQL. It differs from BEGIN TRAN in that it automatically rolls back on an error, while TRY / CATCH and ROLLBACK must be used in a transaction, since About SET XACT_ABORT ON there are nuances. The construction options are the TRANSACTION ISOLATION LEVEL = SNAPSHOT | REPEATABLEREAD | SERIALIZABLE and LANGUAGE, as which you can use any language from sys.languages. It defines the date | time format and the language of the system messages.

CREATE PROCEDURE dbo.usp_InsertSampleCarts @StartId int, @InsertCount int WITH NATIVE_COMPILATION, SCHEMABINDING, EXECUTE AS OWNER AS BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english') IF @StartId is null SELECT @StartId = isnull(MAX(ShoppingCartId), 0) + 1 FROM dbo.ShoppingCart DECLARE @i int = 0 WHILE @i < @InsertCount BEGIN INSERT INTO dbo.ShoppingCart VALUES (@StartId + @i, RAND() * 1000, GETUTCDATE(), NULL) SET @i += 1 END END Script 2

As in the case of tables, a native compilation takes place for the procedures, which turns the interpreted T-SQL commands into C code and then into machine code, so if we now repeat the query Script 4 from the previous part , we will see that the 3rd dll, corresponding to the newly created procedure.

Performance is the key to using objects in memory. For comparison, we will create a similar stored procedure of the traditional storage method, which will also insert the specified number of records in the same way, but into the disk table:

CREATE PROCEDURE dbo.usp_InsertSampleCarts_Disk @StartId int = null, @InsertCount int AS BEGIN IF @StartId is null SELECT @StartId = isnull(MAX(ShoppingCartId), 0) + 1 FROM dbo.ShoppingCart DECLARE @i int = 0 WHILE @i < @InsertCount BEGIN INSERT INTO dbo.ShoppingCart_Disk VALUES (@StartId + @i, RAND() * 1000, GETUTCDATE(), NULL) SET @i += 1 END END Script 3

And put in and out there with a million entries:

SET NOCOUNT ON; DBCC FREEPROCCACHE WITH NO_INFOMSGS; DBCC DROPCLEANBUFFERS WITH NO_INFOMSGS exec dbo.usp_InsertSampleCarts null, 1000000 exec dbo.usp_InsertSampleCarts_Disk @InsertCount = 1000000 As they say, the difference is obvious:

Pic.3

Of the features it should be noted that SQL Server 2014 does not support automatic recompilation of native stored procedures. The plan is fixed. Parameter sniffing is not used, all parameters rely unknown. Instead of recompilation, you may have to delete and recreate the procedure when the data changes (to generate a new plan). It may be enough recompilation that occurs when the server starts, moves to the backup node, etc. It is clear that the newer the statistics on the tables, the more correctly the plan will be constructed, and the statistics, as we remember from the first part, are not automatically updated. Must be done manually using UPDATE STATISTICS or sp_updatestats. The latter always updates statistics for memory-optimized tables (for normal tables, as needed).

From visual things let's see more difference in journaling. In the classic version, if we write a million records to a table with a nonclustered index (and updates touch some column of this index), we will get two million log entries, which naturally affects the performance. In addition (write-ahead logging), all of this is put into the log buffer immediately and in loaded systems leads to high competition for the buffer. In the case of Hekaton, uncommitted transactions are not flushed to disk at all, so there is no need to store undo information or aggressively insert into the log buffer. Index operations are not logged — they are not stored between restarts. Only one consolidated log entry is created during commit, which contains the necessary information about all the details of the changes, for all the entries affected by the transaction. We look.

declare @lsn nvarchar(46) = (select max([Current LSN]) from sys.fn_dblog(null, null)) exec dbo.usp_InsertSampleCarts_Disk @InsertCount = 100 select * from sys.fn_dblog(null, NULL) where [Current LSN] > @lsn order by [Current LSN] desc select count(1), sum([Log Record Length]) from sys.fn_dblog(null, NULL) where [Current LSN] > @lsn set @lsn = (select max([Current LSN]) from sys.fn_dblog(null, null)) exec dbo.usp_InsertSampleCarts null, 100 select * from sys.fn_dblog(null, NULL) where [Current LSN] > @lsn order by [Current LSN] desc select count(1), sum([Log Record Length]) from sys.fn_dblog(null, NULL) where [Current LSN] > @lsn

Pic.4

And we see that the insertion of 100 records in the case of in-memory tables is logged in the form of only two log entries of the type LOP_HK (Log Operation Hekaton). As already noted, these records are consolidated. You can see what they are really revealed in using the new undocumented function that I borrowed from Kalen Delaney. As the Current LSN, we set the values from the corresponding column of the third resultset in Figure 4:

SELECT [current lsn], [transaction id], operation, operation_desc, tx_end_timestamp, total_size, object_name(table_id) AS TableName FROM sys.fn_dblog_xtp(null, null) WHERE [Current LSN] = '00000027:000001dd:0002' SELECT [current lsn], [transaction id], operation, operation_desc, tx_end_timestamp, total_size, object_name(table_id) AS TableName FROM sys.fn_dblog_xtp(null, null) WHERE [Current LSN] = '00000027:000001dd:0001'

Pic.5

The first record, as we see, consists of 102 records: begin tx, 100 inserts, commit. Despite this, their total volume (see the second and fourth results of Fig.4) is 10 times more compact than in the case of a disk table. If you take a non-durable table (DURABILITY = SCHEMA_ONLY), there will be no journaling at all.

Source: https://habr.com/ru/post/225935/

All Articles