How to build VDI for complex graphics

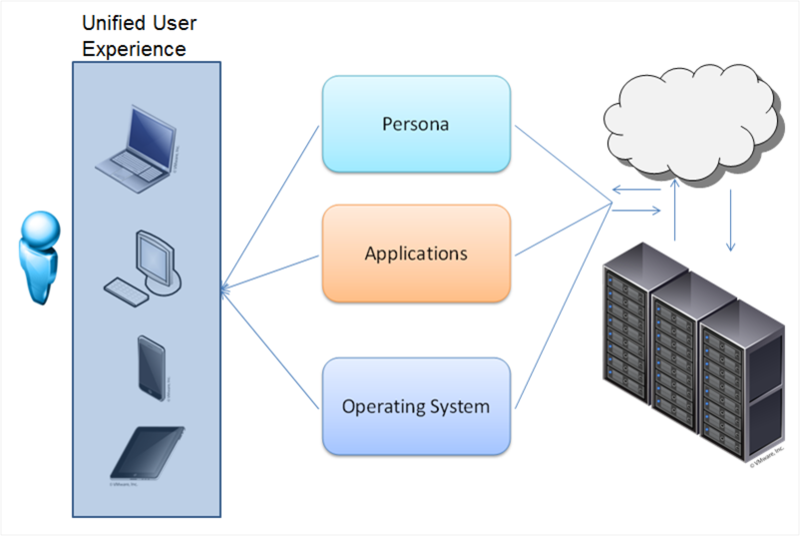

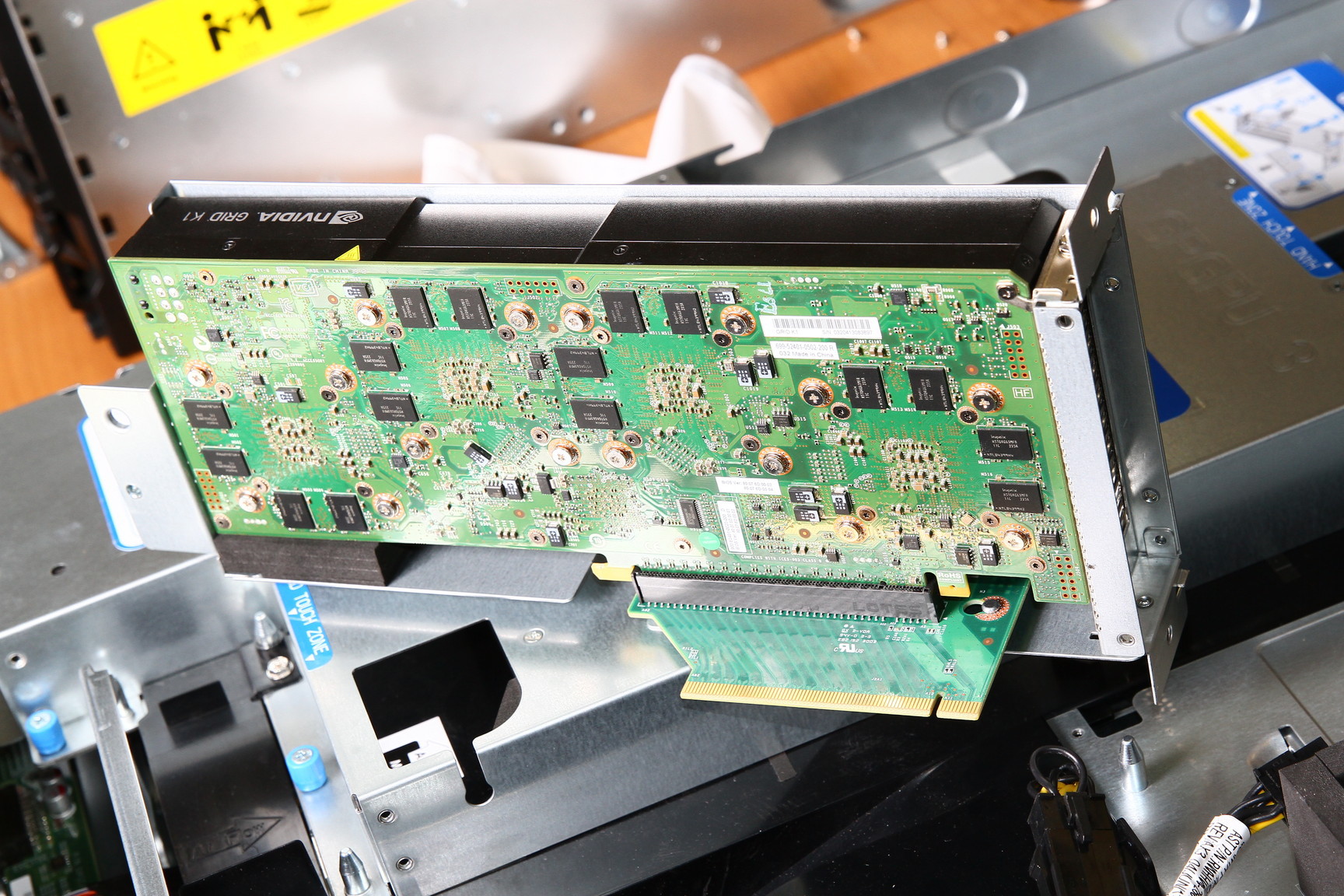

Centralizing desktops and client PCs in the data center is an increasingly common topic in the IT community, and the approach is especially attractive to large organizations. One of the hottest technologies in this area is VDI (virtual desktop infrastructure). VDI lets you centralize the servicing of client environments and simplifies application deployment, configuration and updating, as well as monitoring compliance with security requirements.

VDI decouples the user's desktop from the hardware. You can deploy either a persistent virtual desktop or (the most common option) a flexible, non-persistent virtual machine. Such a virtual machine carries an individual set of applications and settings that is layered onto the base OS when the user logs in. After the user logs out, the OS returns to a "clean" state, discarding any changes and any malware.

For a system administrator this is very convenient: manageability, security and reliability are all at a high level, and applications can be updated centrally rather than on every PC. Office suites, database front ends, web browsers and other applications that are not graphics-intensive can run on any server (the well-known 1C terminal clients are a familiar example).

But what if you need to virtualize a more serious graphics workstation?

That cannot be done without virtualizing the graphics subsystem.

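There are three ways to do this:

- GPU pass-through: a dedicated GPU mapped 1:1 to a VM
- Shared GPU: software GPU virtualization
- Virtual GPU: hardware virtualization (HW and SW)

Let's look at each in more detail.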

Dedicated GPU

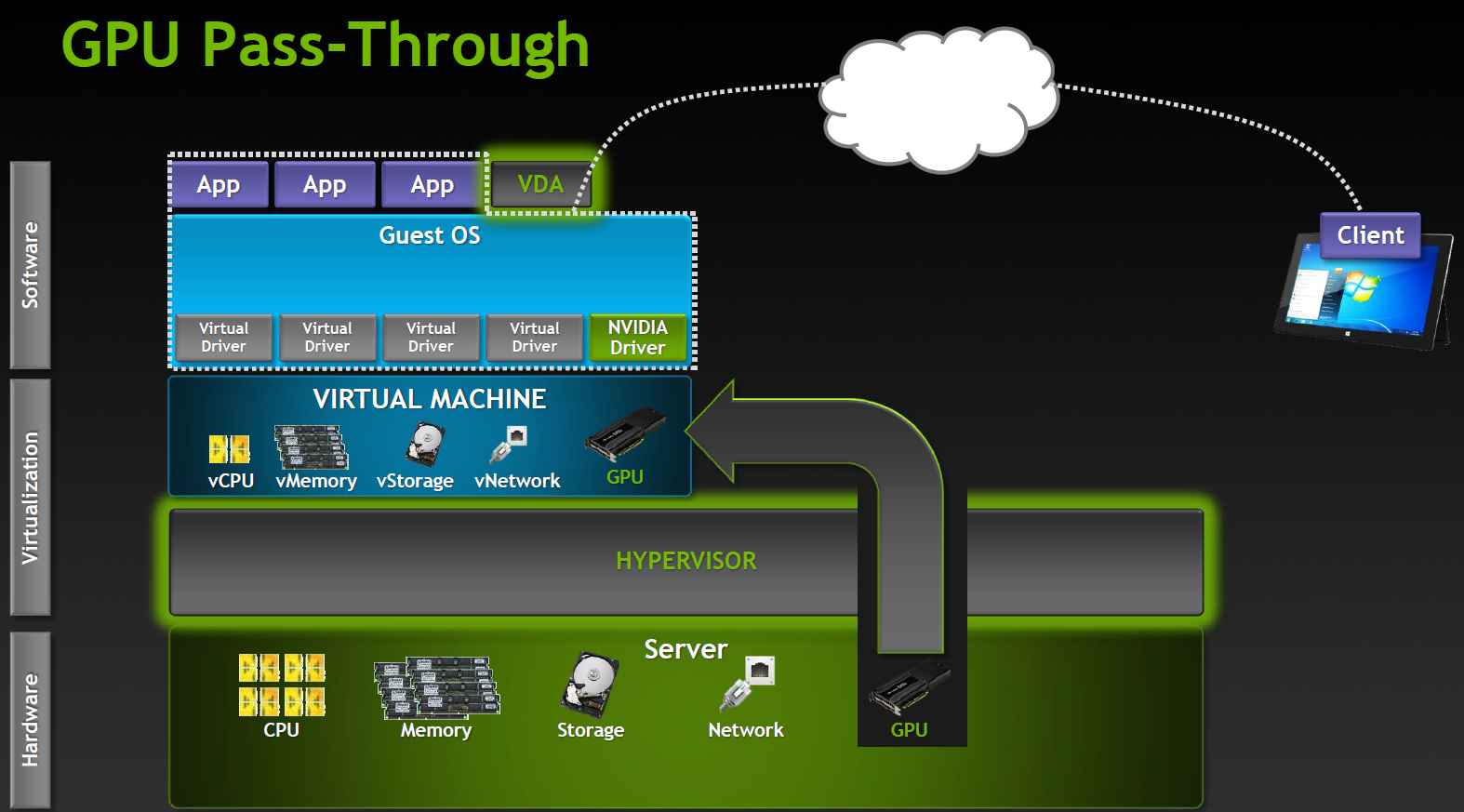

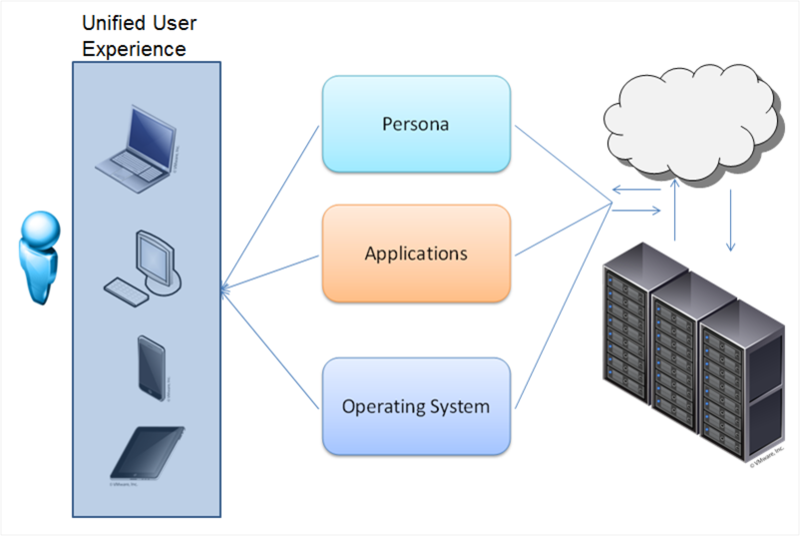

The highest-performing mode. It is supported by Citrix XenDesktop 7 VDI delivery and by VMware Horizon View (5.3 or later) with vDGA. NVIDIA CUDA, DirectX 9, 10 and 11, and OpenGL 4.4 all work in full. All other components (processors, memory, drives, network adapters) are virtualized and shared between virtual machines by the hypervisor, but a GPU is not divided: each virtual machine gets its own GPU with almost no performance penalty.

The obvious limitation is that the number of such virtual machines cannot exceed the number of GPUs available in the system.

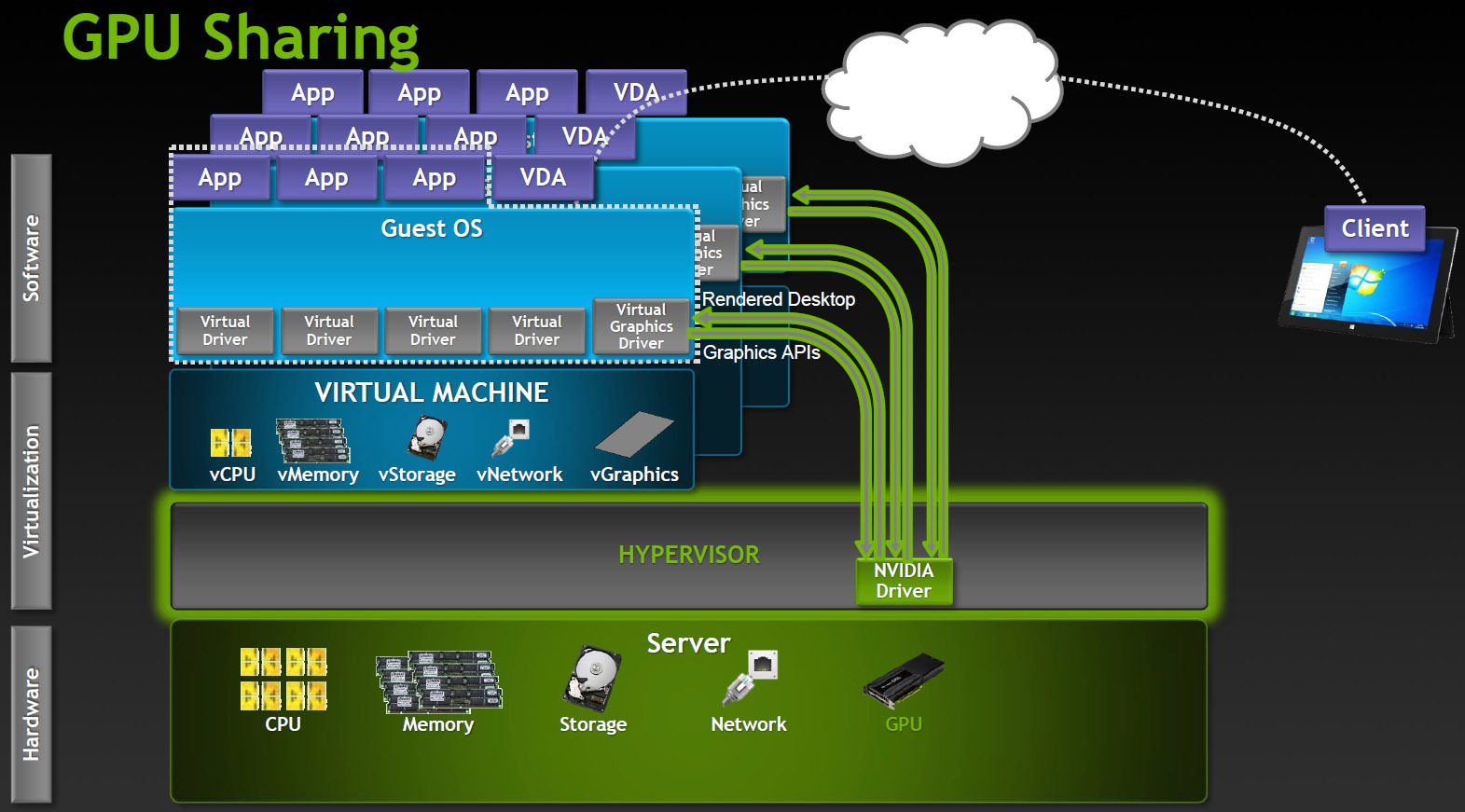

Shared GPU

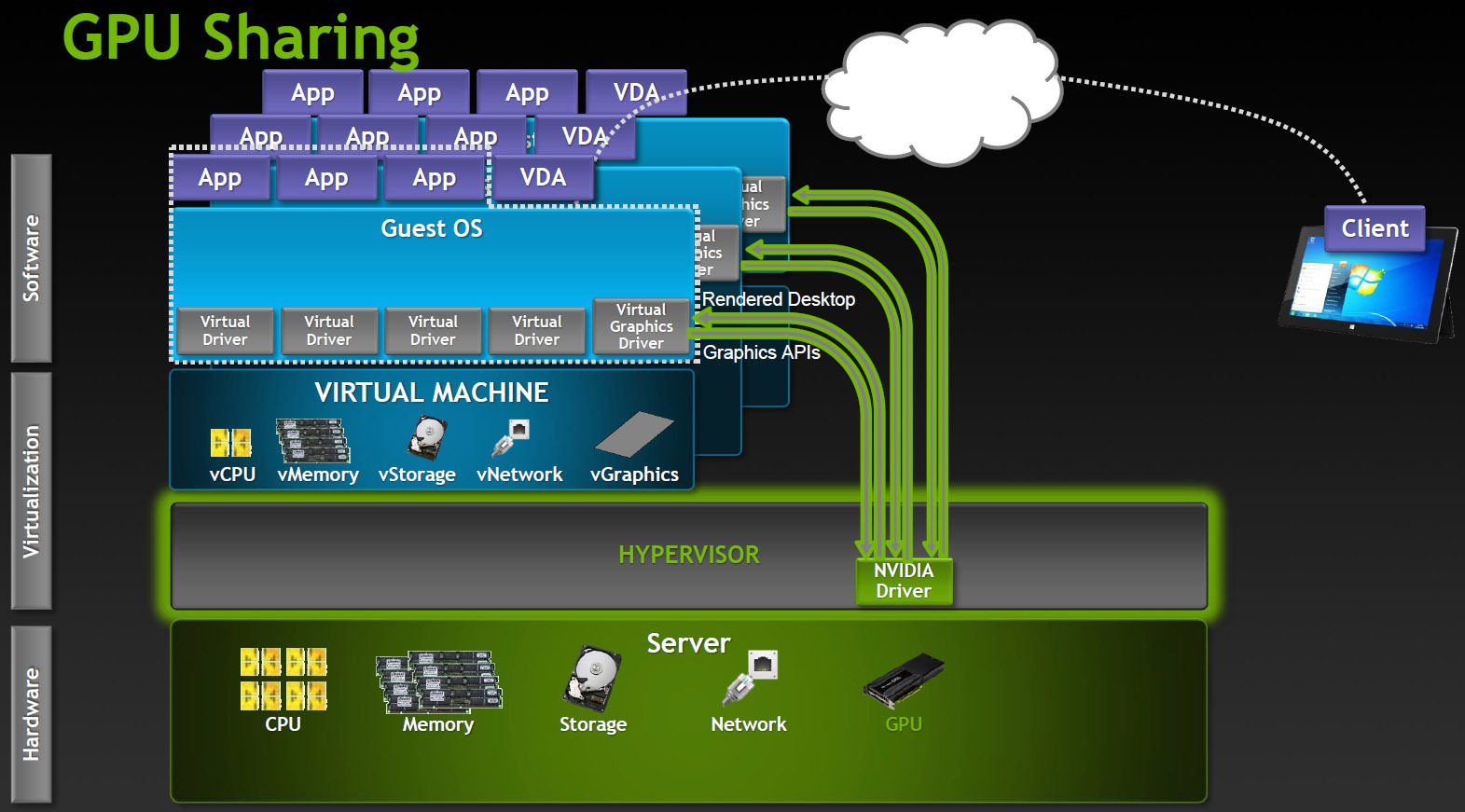

This mode is available in Microsoft RemoteFX and VMware vSGA. It relies on the capabilities of the VDI software: the virtual machine behaves as if it had a dedicated adapter, and the server GPU in turn behaves as if it were serving a single host, although in reality there is an abstraction layer between them. The hypervisor intercepts API calls and translates all commands, drawing requests and so on before passing them to the graphics driver, while the virtual machine itself works with a virtual graphics card driver.

Shared GPU is a reasonable solution in many cases. If the applications are not too demanding, a significant number of users can work at the same time. On the other hand, translating the API consumes a considerable amount of resources, and compatibility with applications cannot be guaranteed, especially for applications that use API versions newer than those that existed when the VDI product was developed.
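To make the interception idea more concrete, here is a toy Python sketch. It is not how RemoteFX or vSGA are implemented; every class and method name is hypothetical, and the API version numbers are arbitrary. Several VMs send calls to one shim, the shim forwards them to a single host driver, and a call that uses an API version newer than the shim knows about is rejected, which mirrors the compatibility problem described above.

```python
from dataclasses import dataclass, field


@dataclass
class HostGpuDriver:
    """Stands in for the real graphics driver on the host (hypothetical)."""
    submitted: list = field(default_factory=list)

    def submit(self, command: str) -> None:
        self.submitted.append(command)


class UnsupportedApiVersion(Exception):
    """Raised when a guest uses an API version the shim cannot translate."""


@dataclass
class ApiTranslationShim:
    """Toy model of the layer that intercepts guest API calls."""
    host_driver: HostGpuDriver
    max_api_version: int  # newest API version known when the shim was written

    def draw(self, vm_name: str, api_version: int, call: str) -> None:
        if api_version > self.max_api_version:
            raise UnsupportedApiVersion(
                f"{vm_name}: API v{api_version} is newer than v{self.max_api_version}"
            )
        # "Translate" the guest call into a host command tagged with its origin.
        self.host_driver.submit(f"[{vm_name}] {call}")


driver = HostGpuDriver()
shim = ApiTranslationShim(host_driver=driver, max_api_version=10)
shim.draw("vm-a", 9, "draw_triangles")
shim.draw("vm-b", 10, "clear_screen")
try:
    shim.draw("vm-c", 11, "tessellate")  # newer API than the shim supports
    rejected = False
except UnsupportedApiVersion:
    rejected = True
```

Every call passes through the shim, so the extra work grows with the number of calls, and anything the shim was not written to understand simply fails.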

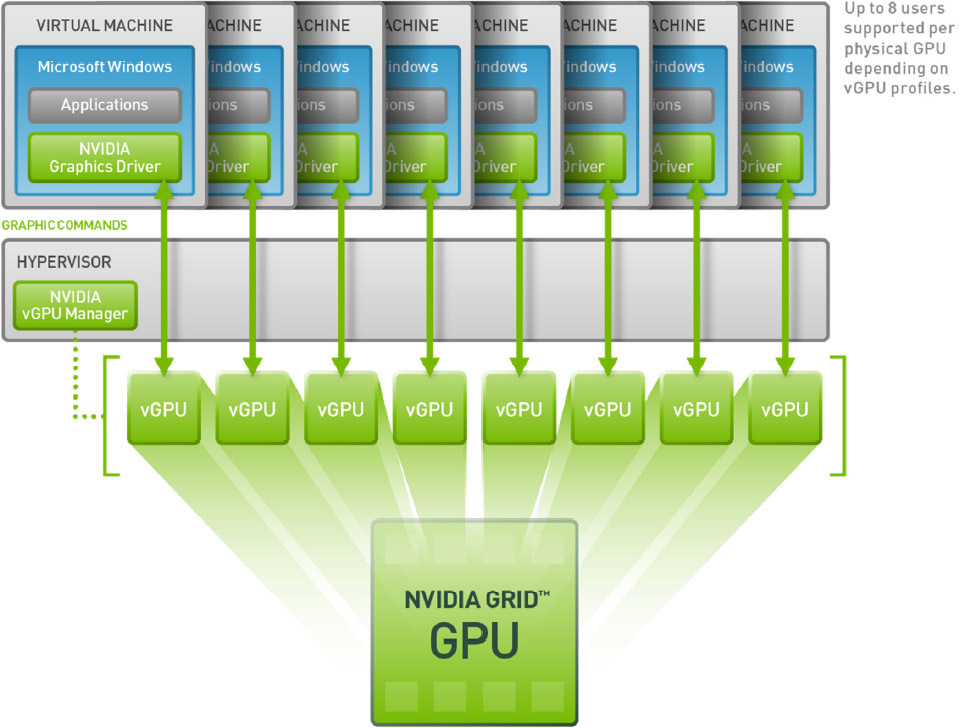

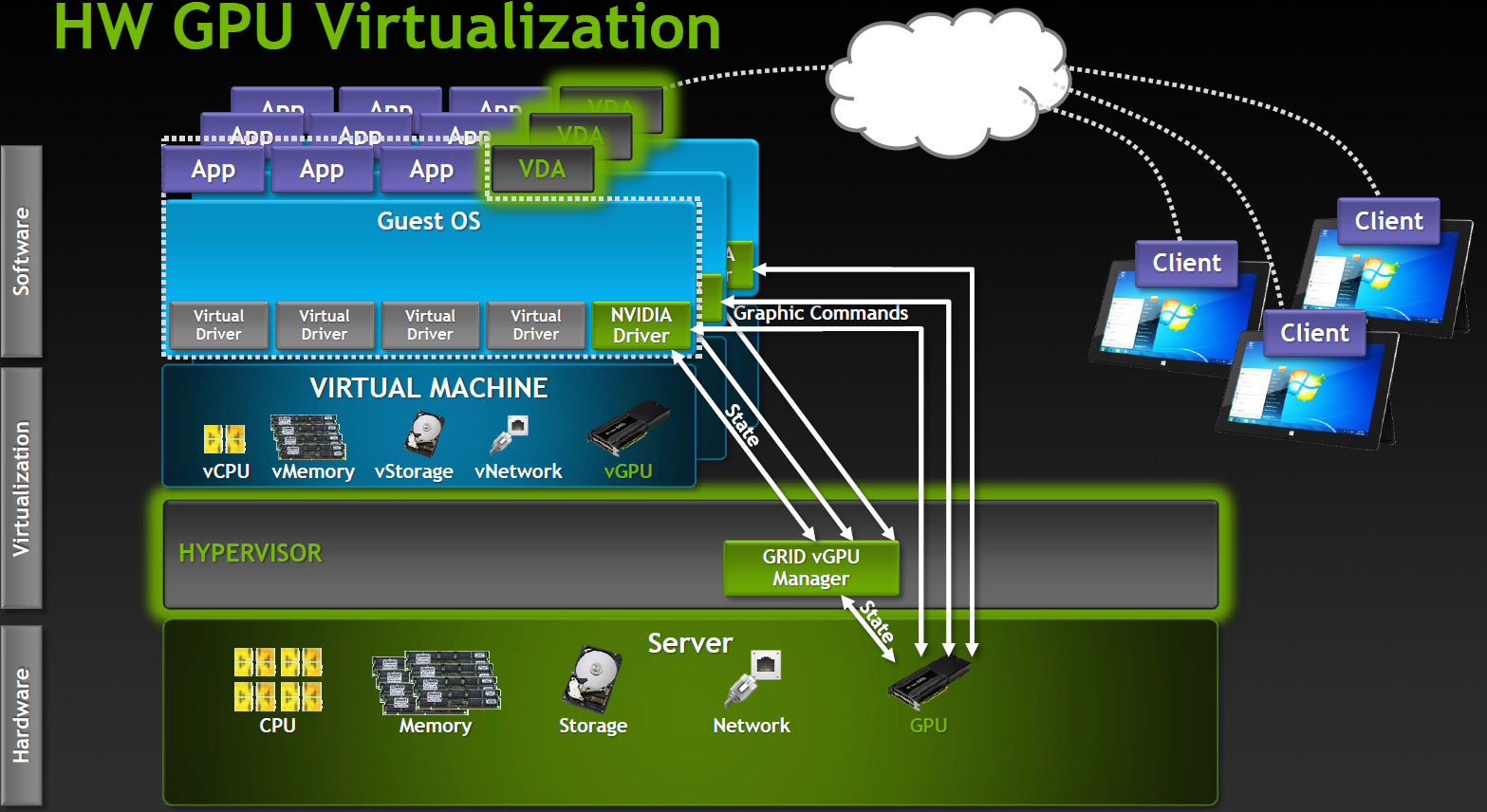

Virtual GPU

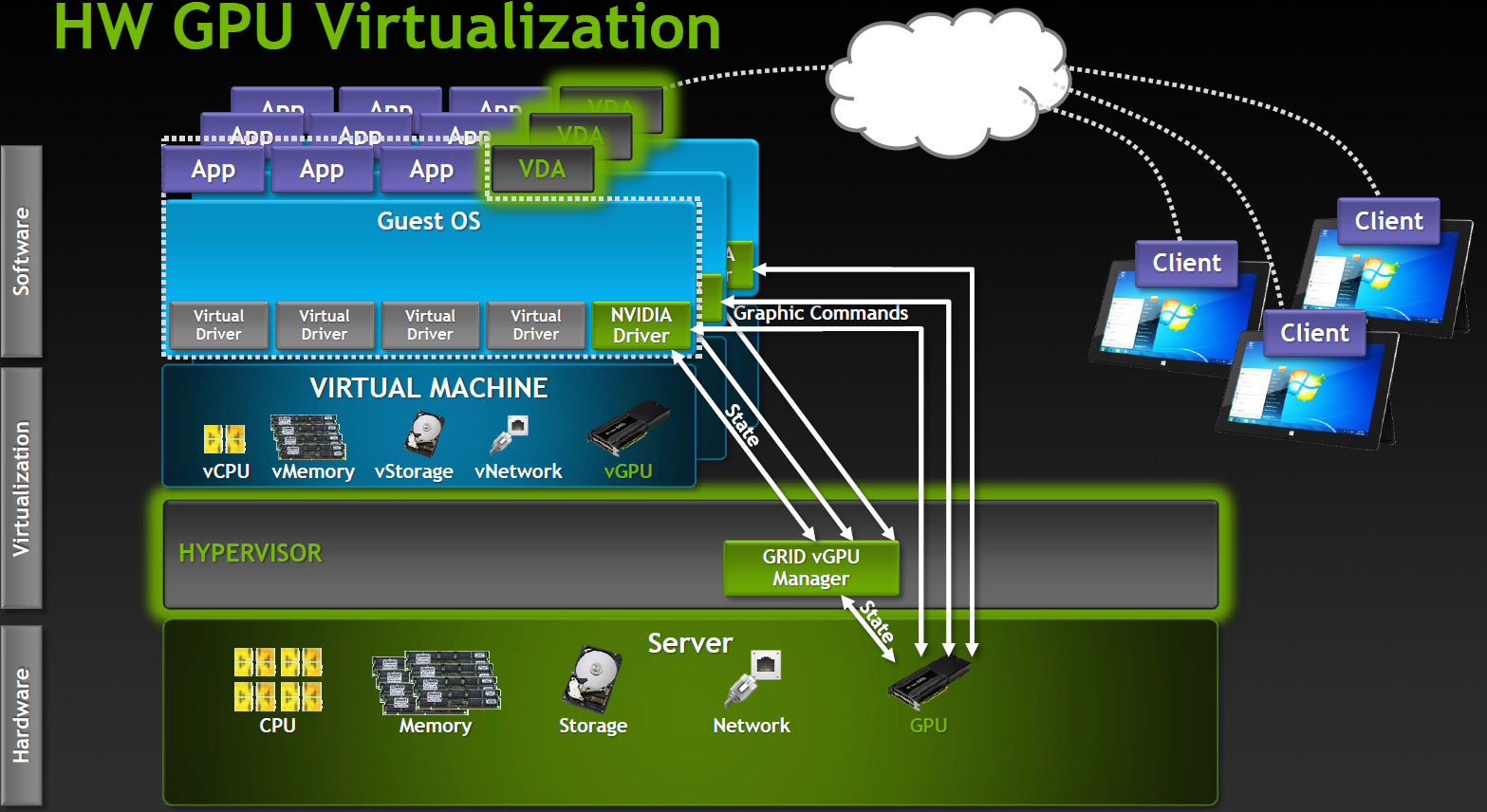

This is the most advanced way to share a GPU between users, and it is currently supported only in Citrix products.

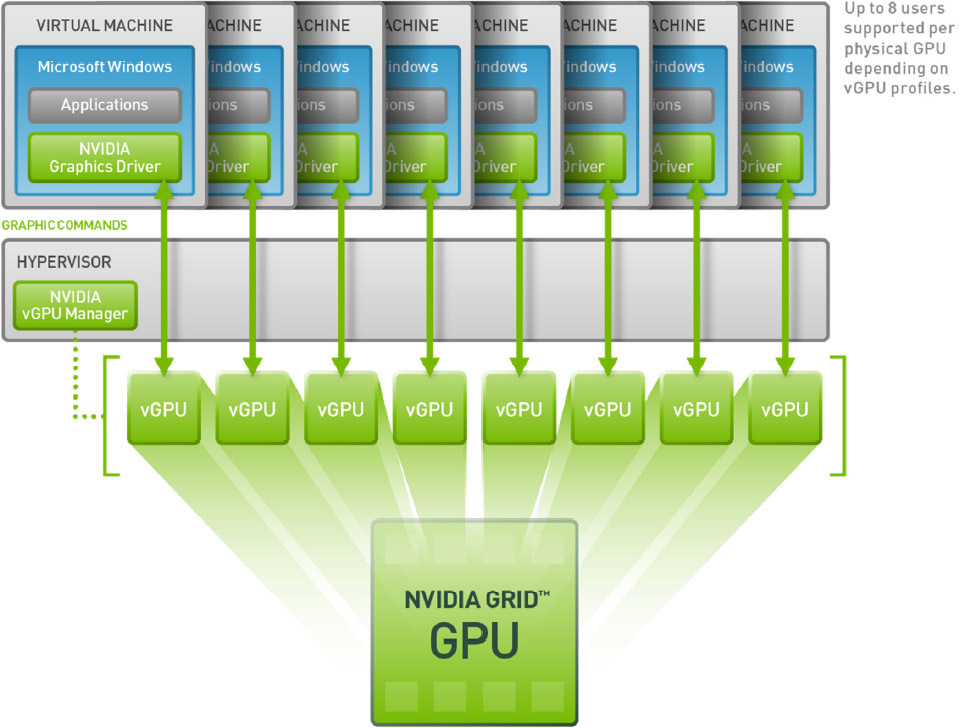

How does it work? In a VDI environment with vGPU, each virtual machine works through the hypervisor with a dedicated vGPU driver installed in that virtual machine. Each vGPU driver sends commands to one physical GPU and controls it over a dedicated channel.

The driver returns the processed frames to the virtual machine, which sends them on to the user.

This mode became possible with Kepler, the latest generation of NVIDIA GPUs. Kepler has a Memory Management Unit (MMU) that translates virtual host addresses into physical system addresses. Each process runs in its own virtual address space, and the MMU keeps their physical addresses separate so that they neither overlap nor compete for resources.
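The address translation idea can be illustrated with a small Python model. This is only a sketch of the general principle of per-process page tables, not a description of the Kepler hardware; the class `ToyMmu`, its methods and the 4096-byte page size are assumptions made for the example.

```python
PAGE_SIZE = 4096  # assumed page size for the illustration


class ToyMmu:
    """Maps each process's virtual pages to physical frames that never overlap."""

    def __init__(self, total_frames):
        self.free_frames = list(range(total_frames))
        self.page_tables = {}  # process name -> {virtual page: physical frame}

    def map_page(self, process, virtual_page):
        """Back one virtual page of a process with a frame nobody else owns."""
        table = self.page_tables.setdefault(process, {})
        if virtual_page in table:
            return table[virtual_page]
        if not self.free_frames:
            raise MemoryError("no free physical frames left")
        frame = self.free_frames.pop(0)
        table[virtual_page] = frame
        return frame

    def translate(self, process, virtual_address):
        """Translate a virtual address to a physical one via the page table."""
        page, offset = divmod(virtual_address, PAGE_SIZE)
        frame = self.page_tables[process][page]  # KeyError means "not mapped"
        return frame * PAGE_SIZE + offset


mmu = ToyMmu(total_frames=8)
mmu.map_page("vm-a", 0)
mmu.map_page("vm-b", 0)
addr_a = mmu.translate("vm-a", 0x10)  # same virtual address in both VMs...
addr_b = mmu.translate("vm-b", 0x10)  # ...lands in different physical frames
```

Both processes use the same virtual address, yet the physical addresses differ, which is the separation the paragraph above describes.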

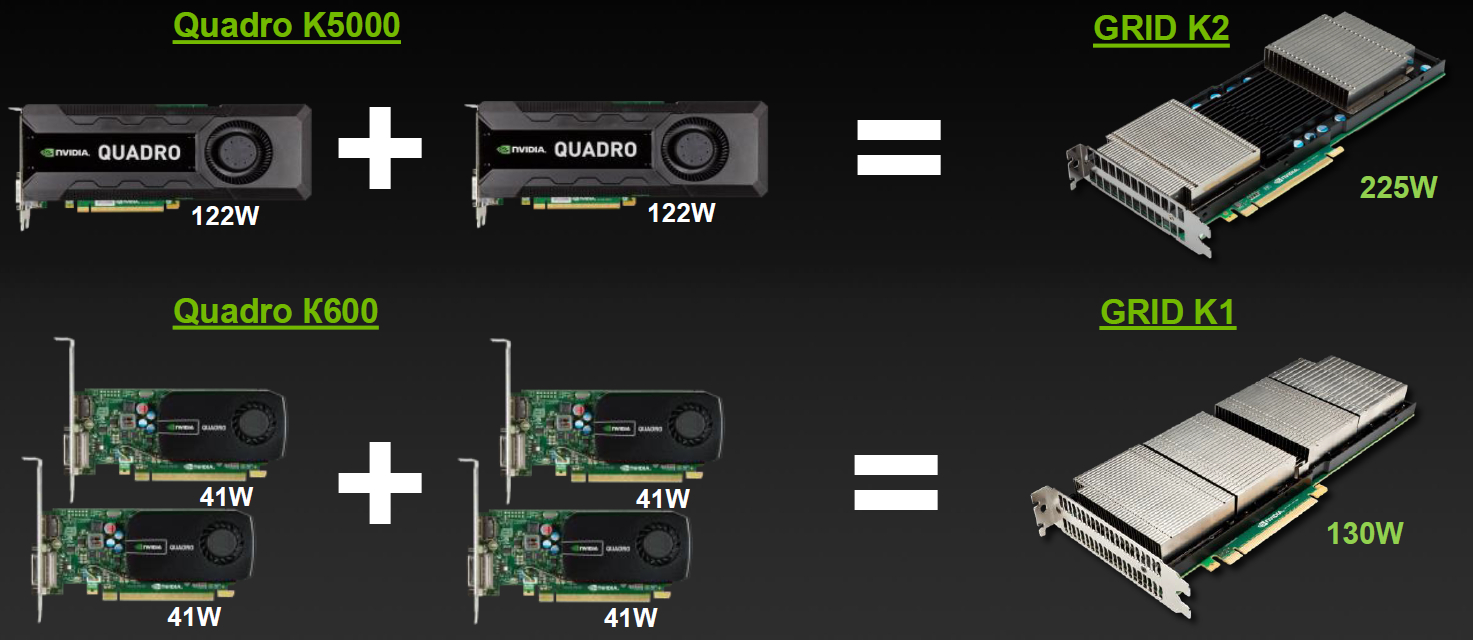

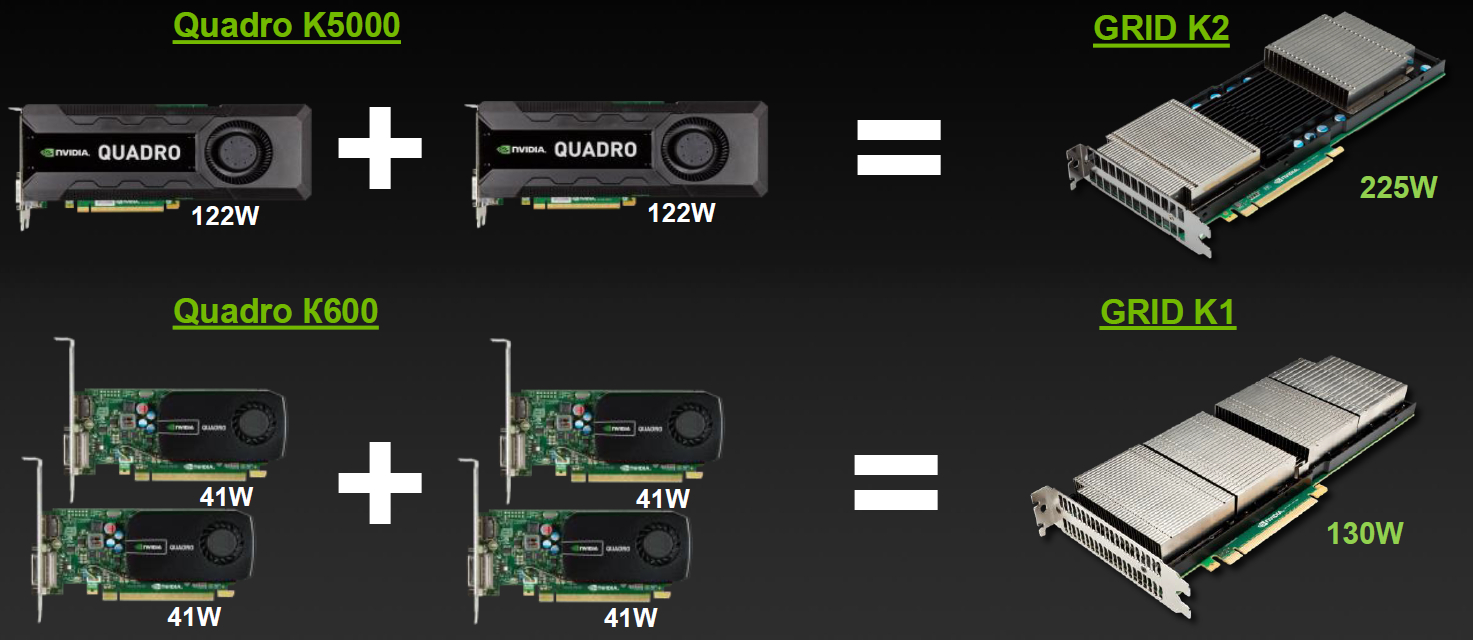

Implementation in hardware

The lineup

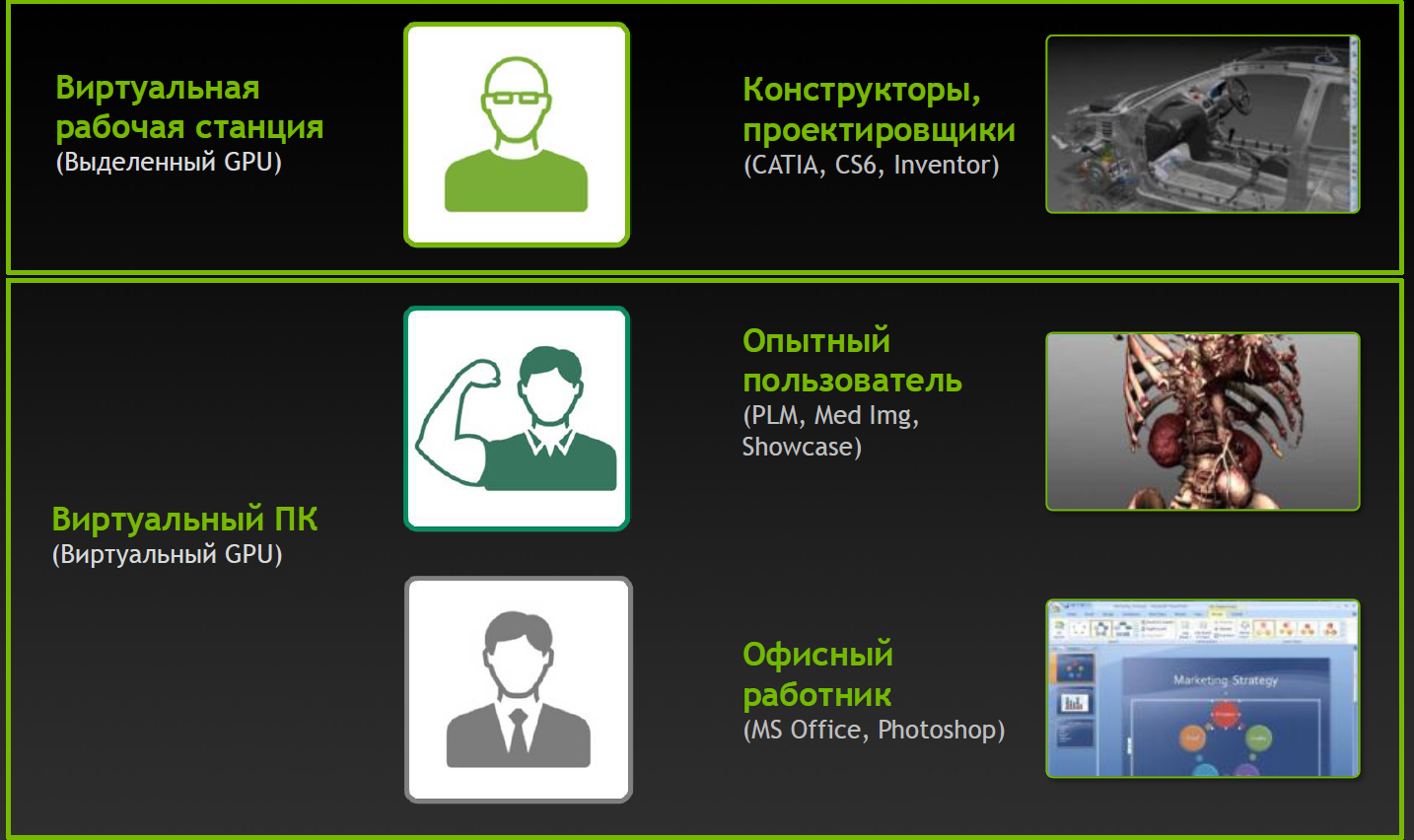

Varieties for VDI

But a simple split by specifications is not enough: GRID cards are also divided into virtual vGPU profiles.

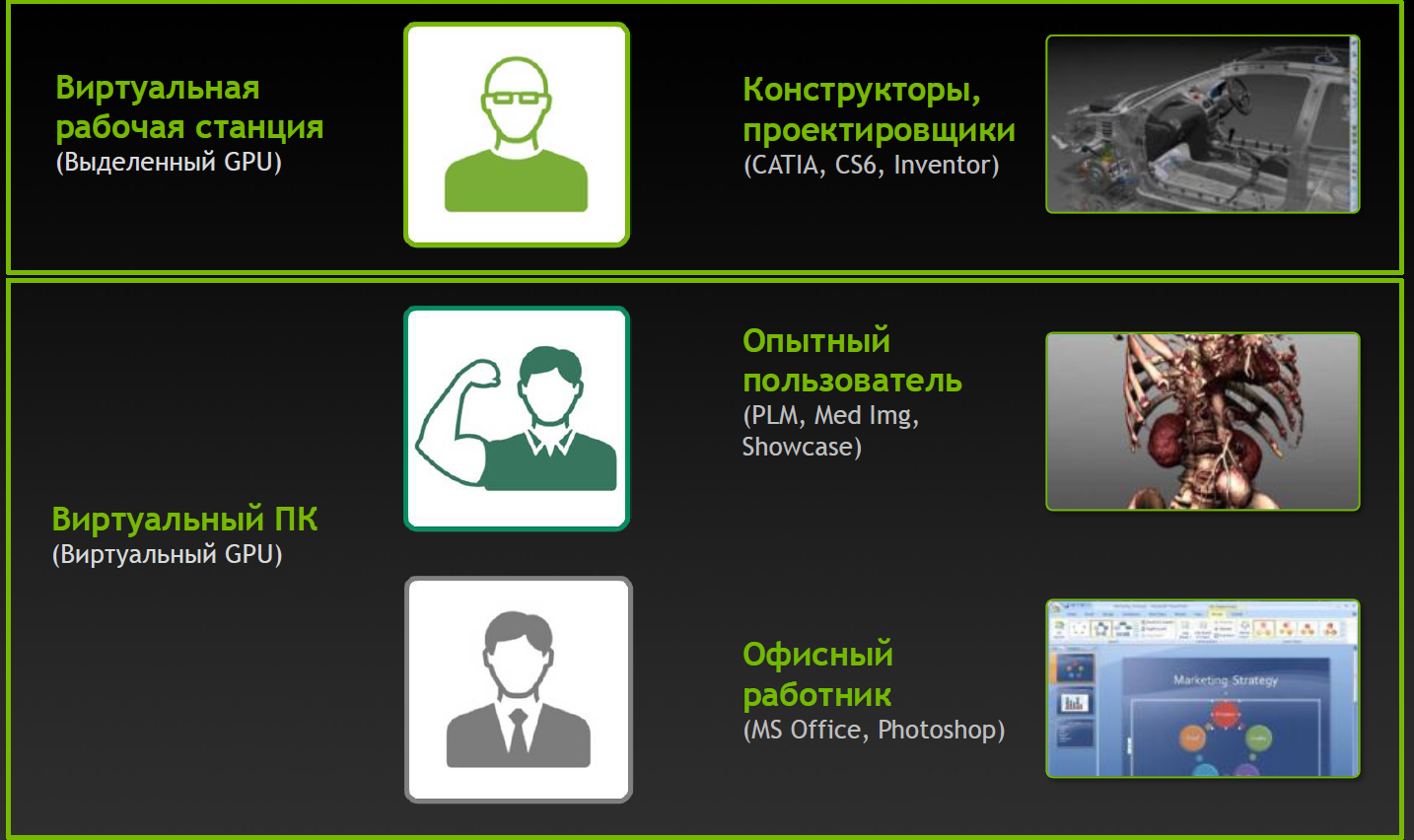

User Type Vision

Profiles and Applications

Profiles with the Q suffix are certified for a number of professional applications (for example, Autodesk Inventor 2014 and PTC Creo), just as Quadro cards are.

Full virtual happiness!

NVIDIA wouldn't be true to itself if it hadn't thought about additional varieties. On-demand gaming services (Gaming-as-a-Service, GaaS) are gaining popularity, and they too benefit considerably from virtualization and from the ability to divide a GPU between users.

Varieties for cloud games

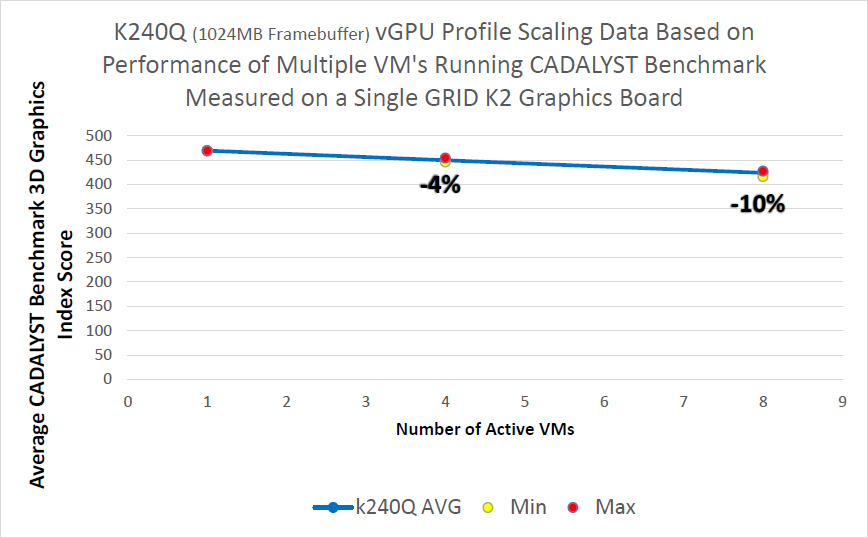

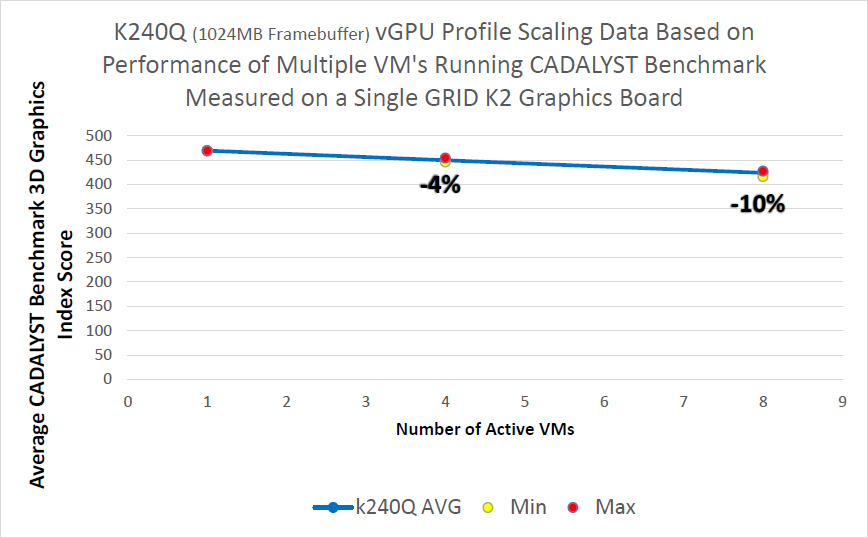

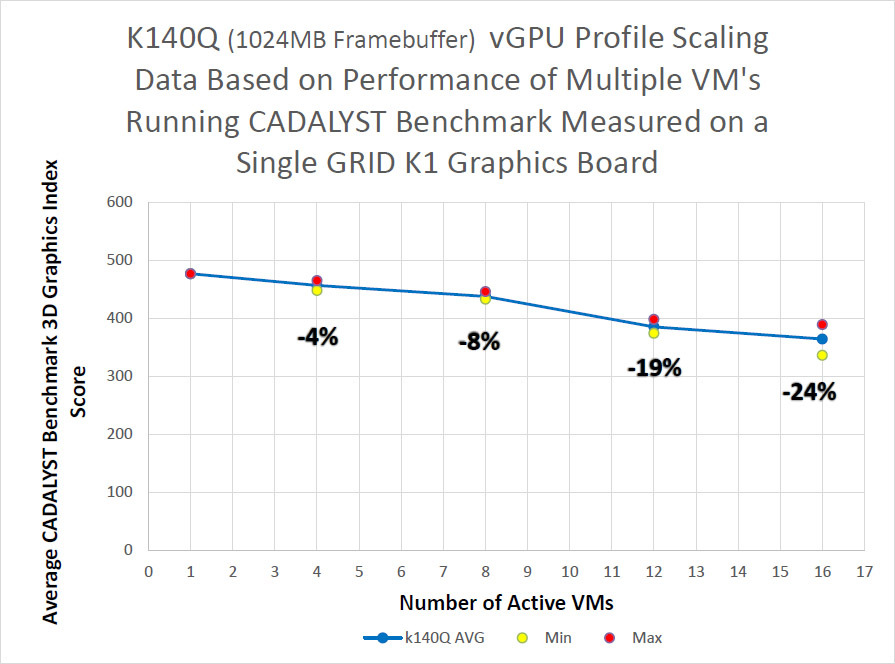

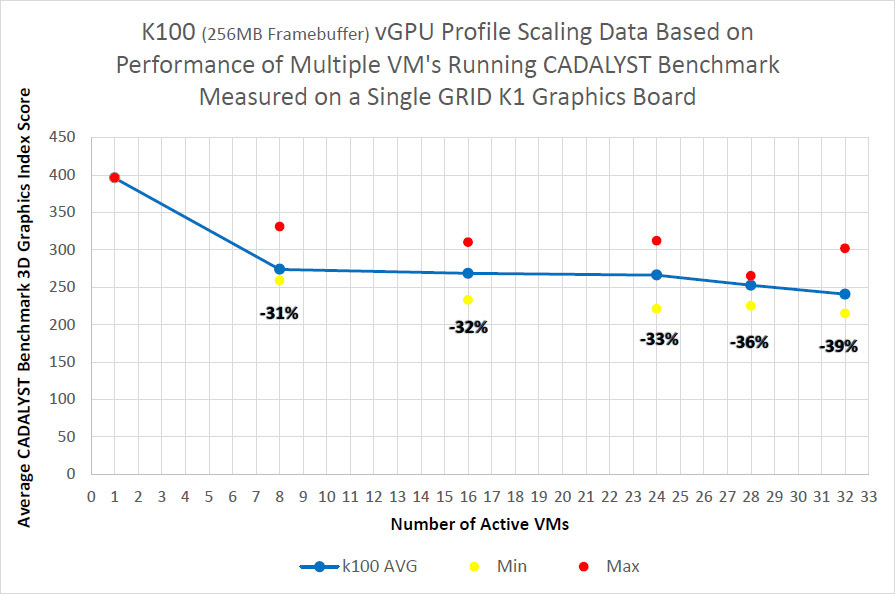

The impact of virtualization on performance

Server configuration:

- CPU: Intel Xeon E5-2670 2.6 GHz, dual socket (16 physical cores, 32 vCPUs with HT)
- Memory: 384 GB
- Hypervisor: XenServer 6.2 Tech Preview Build 74074c

Virtual machine configuration:

- vCPUs: 4
- Memory: 11 GB
- XenDesktop 7.1 RTM HDX 3D Pro
- AutoCAD 2014
- Benchmark: CADALYST C2012
- NVIDIA vGPU Manager: 331.24
- NVIDIA guest driver: 331.82

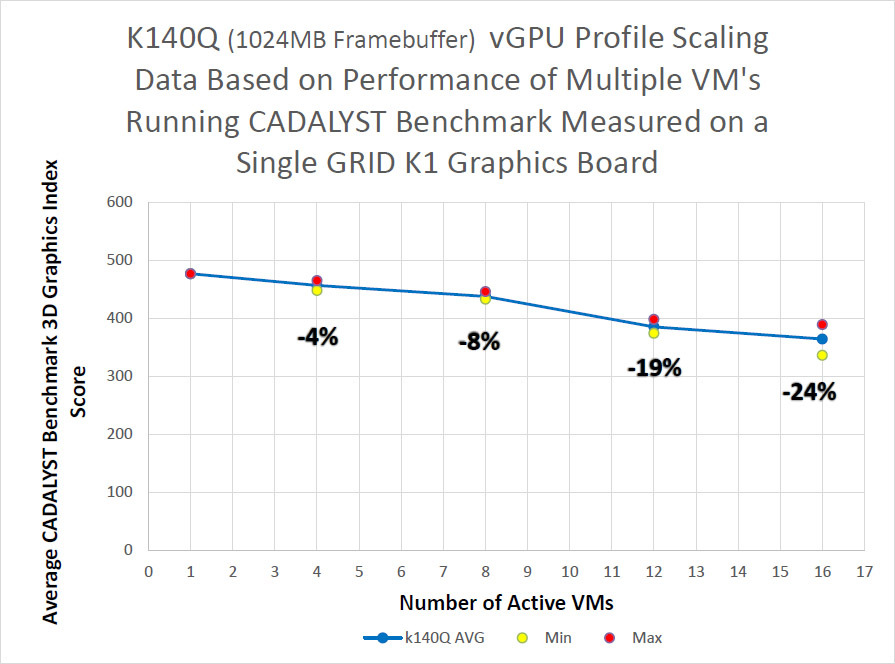

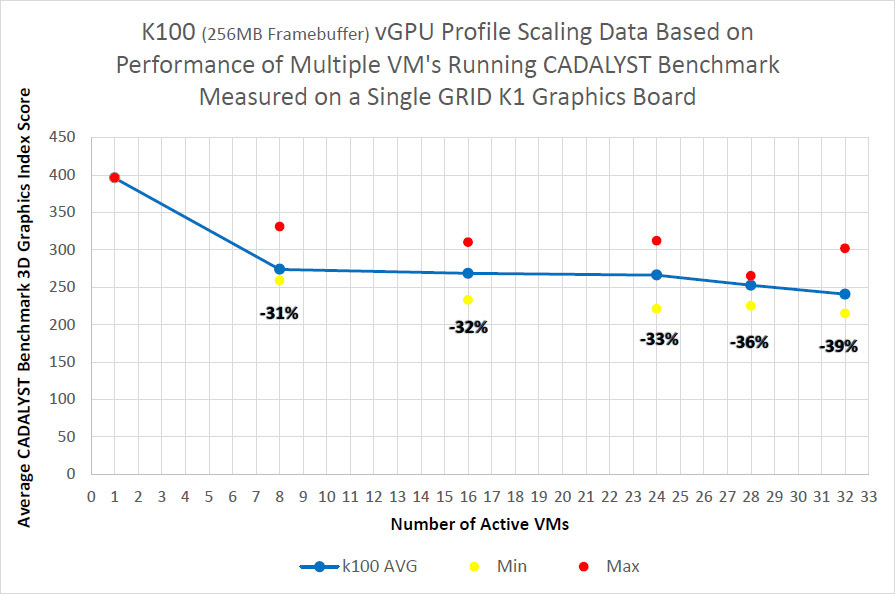

The measurement method is simple: the CADALYST test was run inside the virtual machines, and performance was compared as more virtual machines were added.

As the results show, for the higher-end K2 model with a certified profile the drop is about 10% with 8 virtual machines running. For the K1 model the drop is larger, but it runs twice as many virtual machines.
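One way to read these figures is sketched below in Python. The function names are hypothetical, and the benchmark scores are invented purely to reproduce the "about 10%" drop quoted above; they are not the measured CADALYST results.

```python
def relative_drop(single_vm_score: float, loaded_score: float) -> float:
    """Fraction of per-VM performance lost compared with a single running VM."""
    return 1.0 - loaded_score / single_vm_score


def aggregate_throughput(vm_count: int, drop: float) -> float:
    """Total work done by all VMs, in units of one unloaded VM."""
    return vm_count * (1.0 - drop)


# Hypothetical scores, chosen only to reproduce the "about 10%" drop from the text.
drop_k2 = relative_drop(single_vm_score=100.0, loaded_score=90.0)
total_k2 = aggregate_throughput(vm_count=8, drop=drop_k2)
```

Under these assumptions, eight virtual machines that each lose about 10% still deliver roughly 7.2 times the work of a single unloaded virtual machine.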

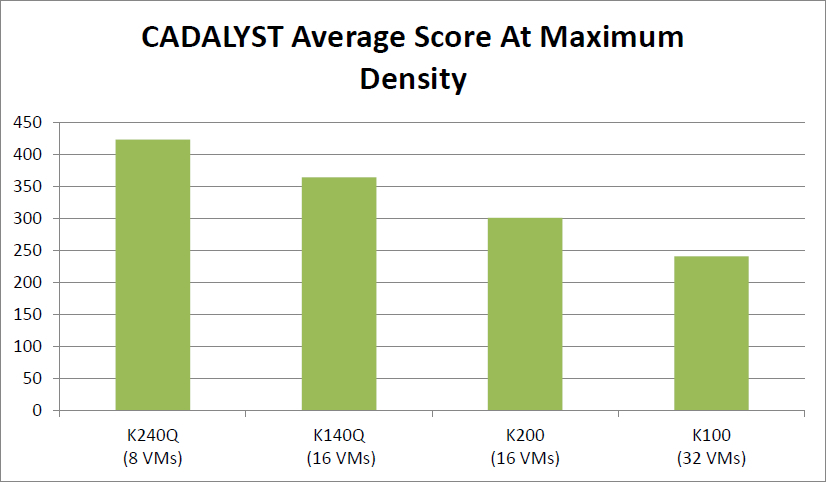

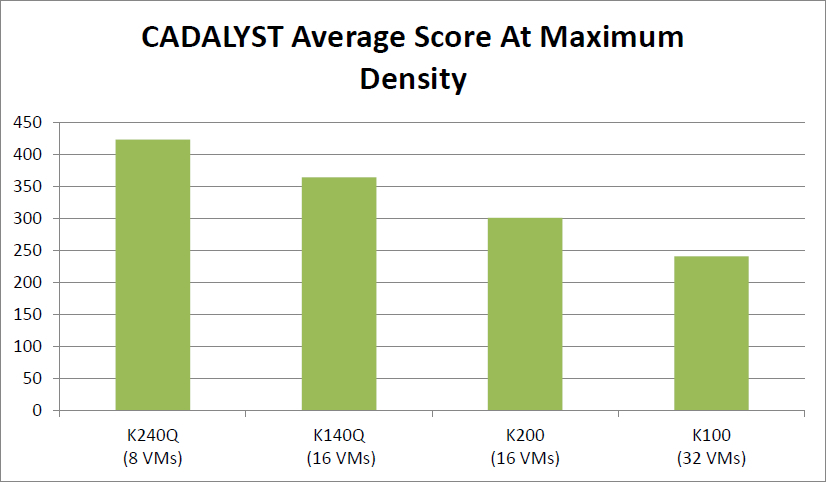

The absolute result of the cards with the maximum number of virtual machines:

Where do the cards go?

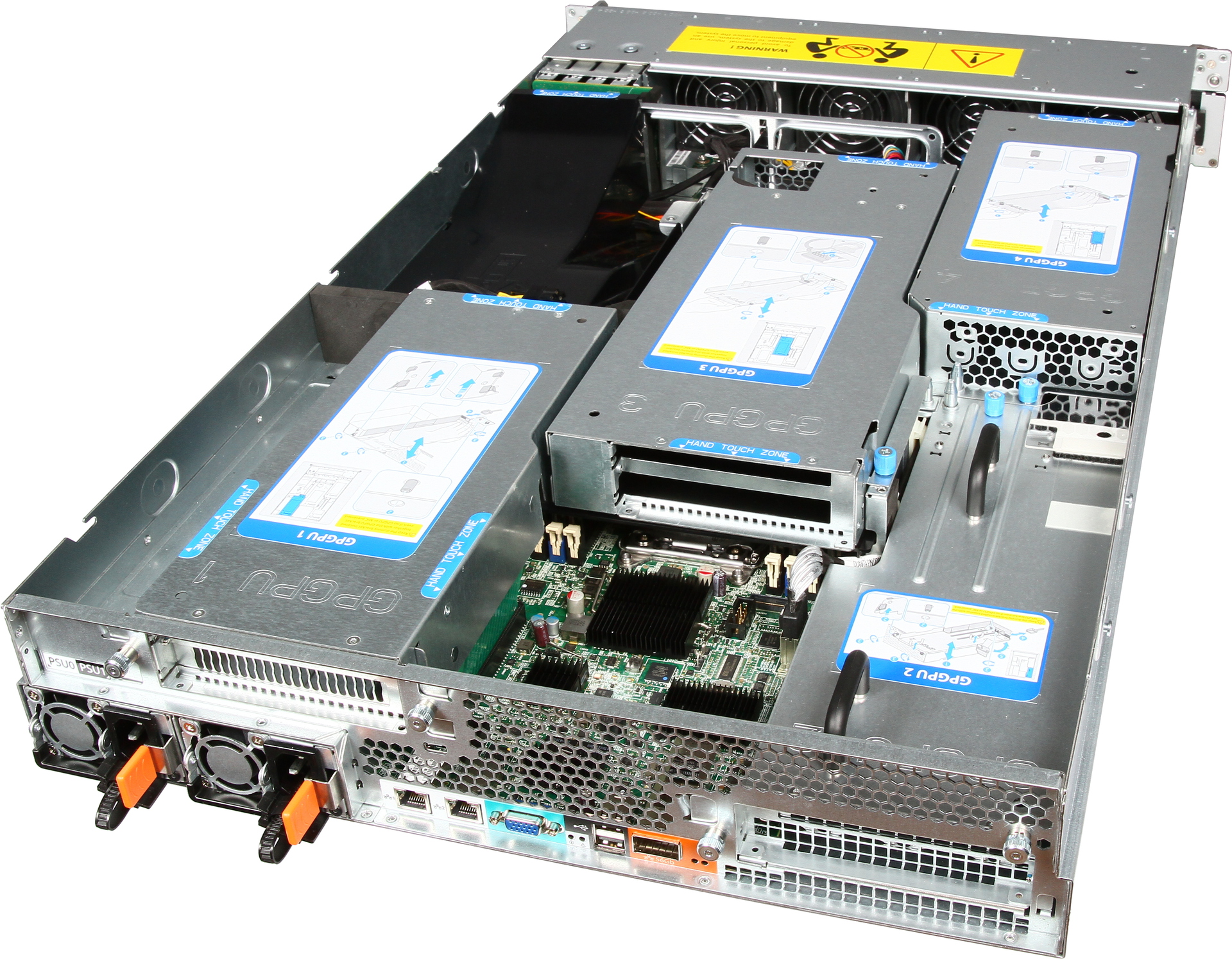

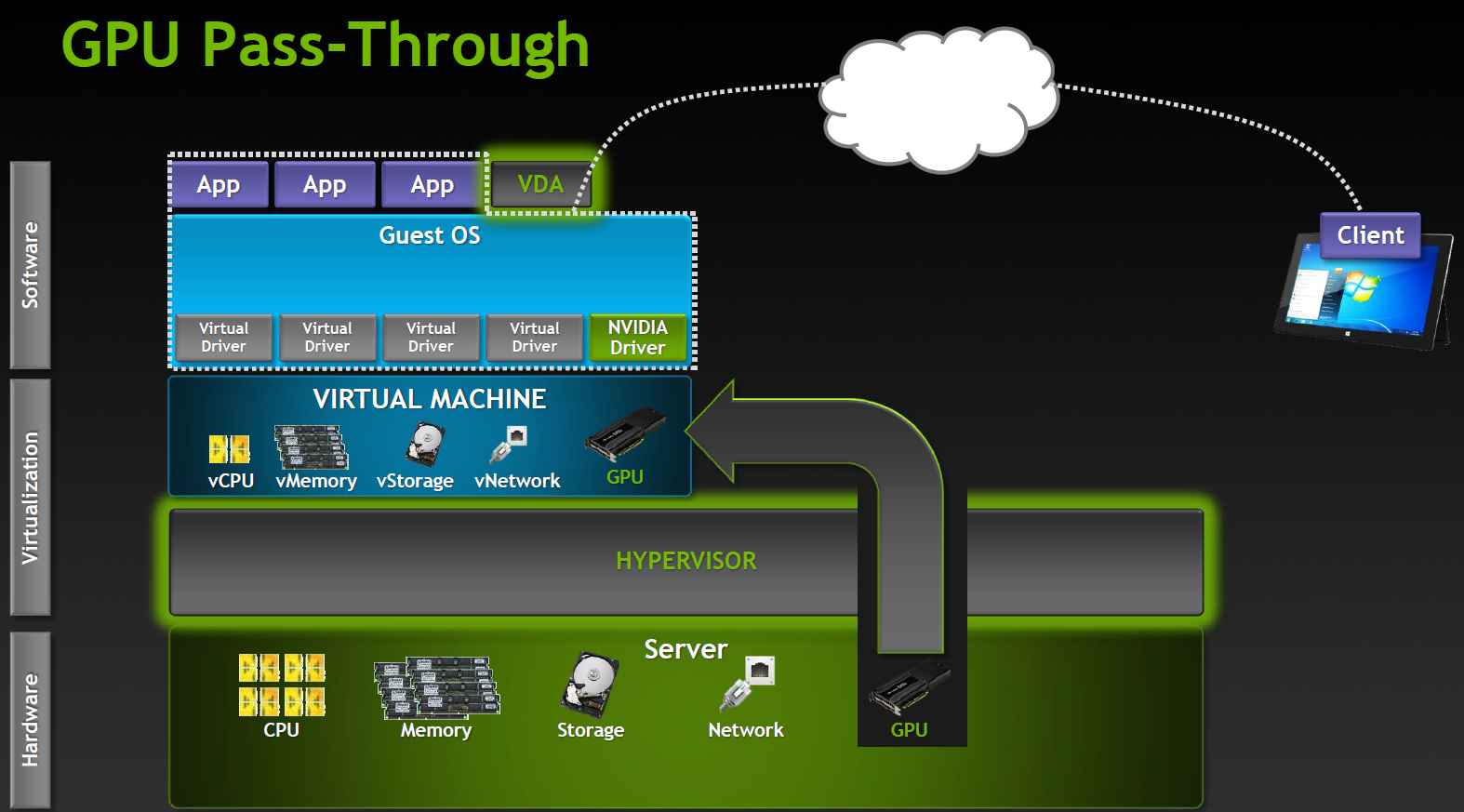

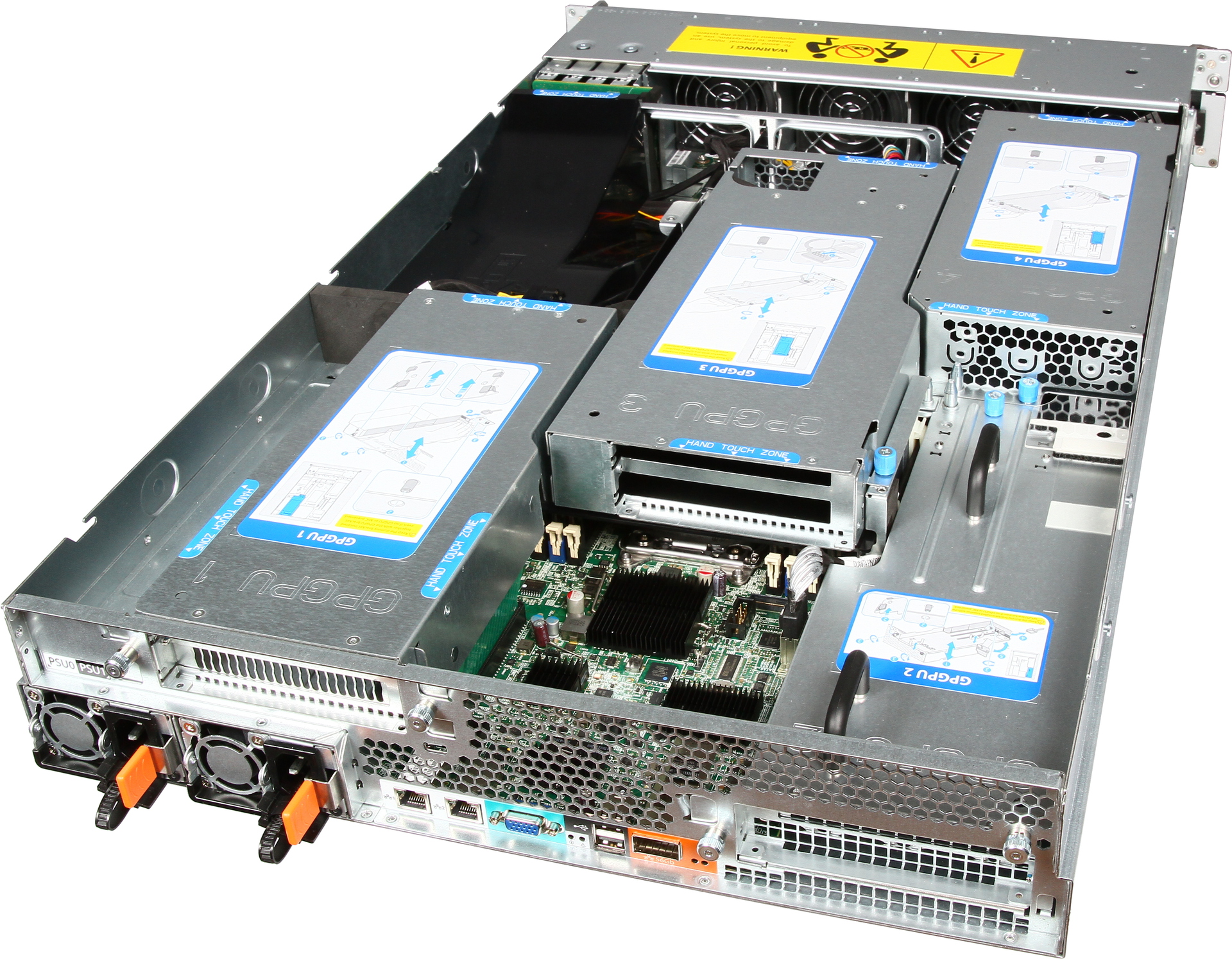

We have the Hyperion RS225 G4, a model designed for four Intel Xeon Phi or GPGPU cards.

It takes two Xeon E5-2600 v2 processors, up to 1 TB of RAM and 4 hard disks, and offers InfiniBand FDR or 40G Ethernet for a high-speed network plus a pair of standard gigabit network ports.
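As a rough sizing aid, the Python sketch below estimates how many virtual machines such a server could host. It is a simplification under stated assumptions: the function name is hypothetical, only vGPU count and RAM are considered (CPU, storage and power are ignored), the vGPU-per-card figures come from the vGPU profile table in this article, and 11 GB per VM is simply the value used in the benchmark configuration.

```python
def max_vms_per_server(cards: int, vgpus_per_card: int,
                       host_ram_gb: int, vm_ram_gb: int,
                       reserved_ram_gb: int = 0) -> int:
    """Upper bound on VMs: the tighter of the vGPU limit and the RAM limit."""
    by_gpu = cards * vgpus_per_card
    by_ram = (host_ram_gb - reserved_ram_gb) // vm_ram_gb
    return min(by_gpu, by_ram)


# Four GRID K2 cards with the K240Q profile (8 vGPUs per card), 1 TB of RAM,
# and 11 GB per VM as in the benchmark configuration.
k240q_vms = max_vms_per_server(cards=4, vgpus_per_card=8,
                               host_ram_gb=1024, vm_ram_gb=11)

# Four GRID K1 cards with the K100 profile (32 vGPUs per card), same memory.
k100_vms = max_vms_per_server(cards=4, vgpus_per_card=32,
                              host_ram_gb=1024, vm_ram_gb=11)
```

With designer profiles the vGPU count is the limit (32 VMs), while with the densest office profile memory runs out first (93 VMs rather than 128), so VM memory would have to be smaller to use every vGPU.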

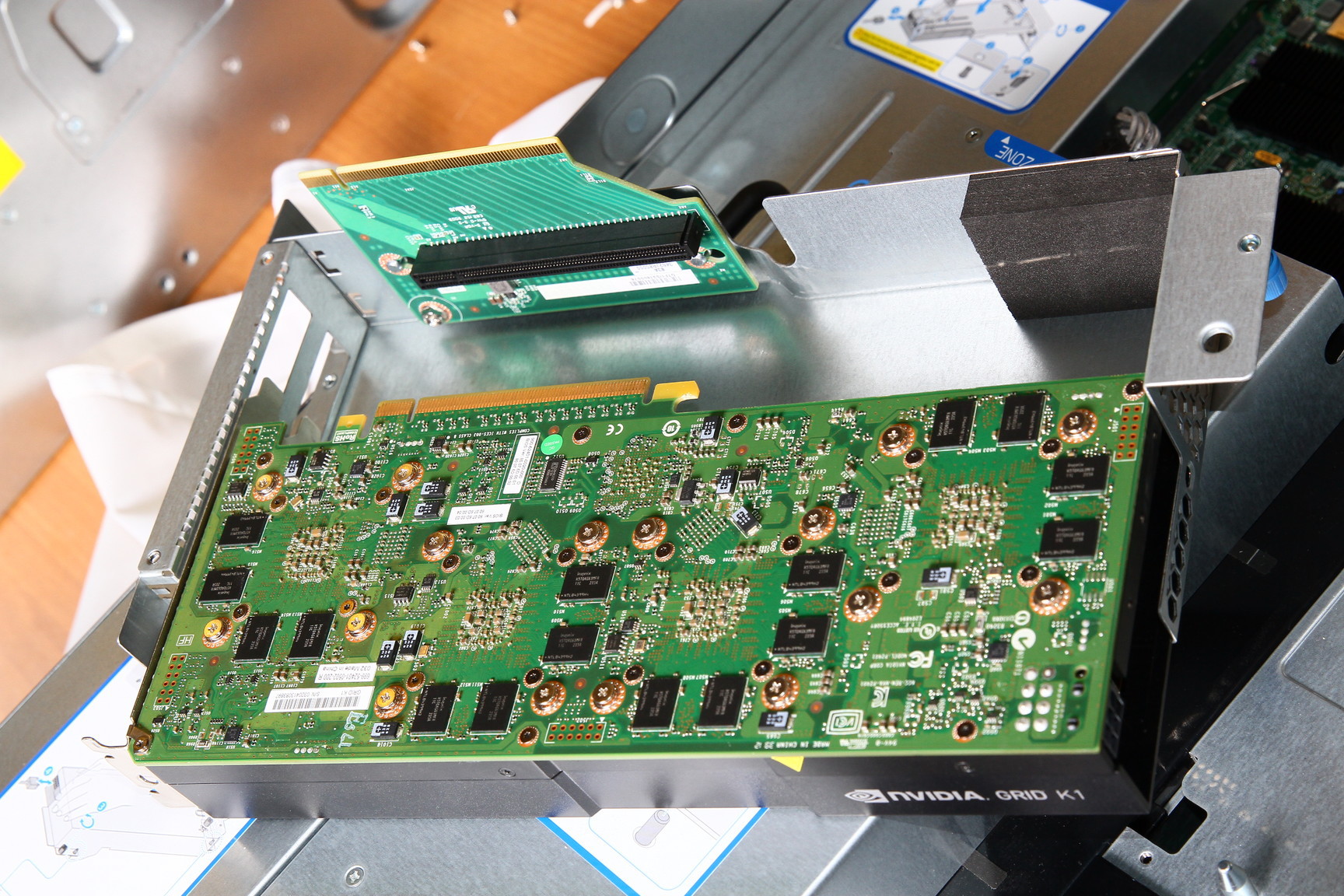

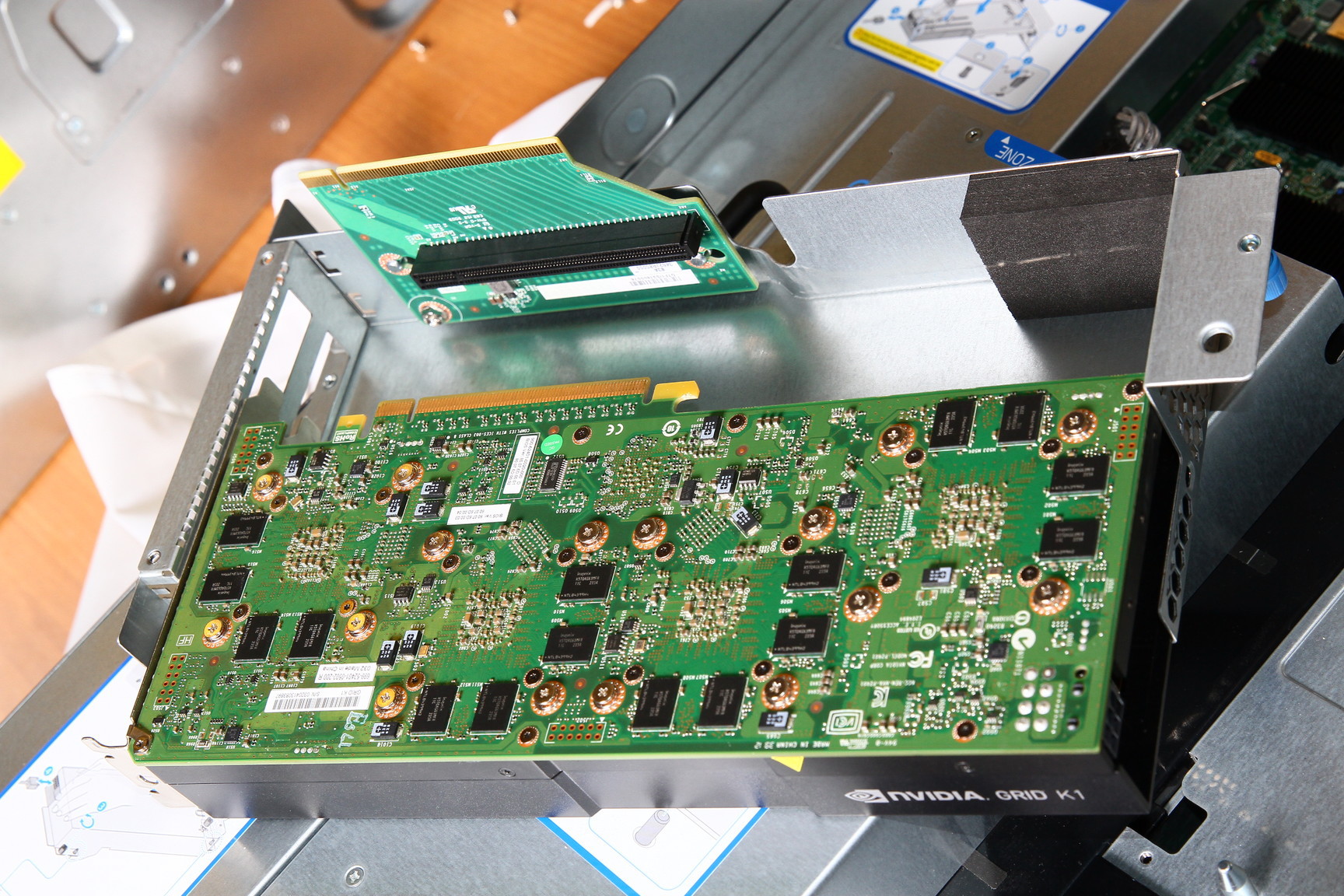

Installing the card in the server:

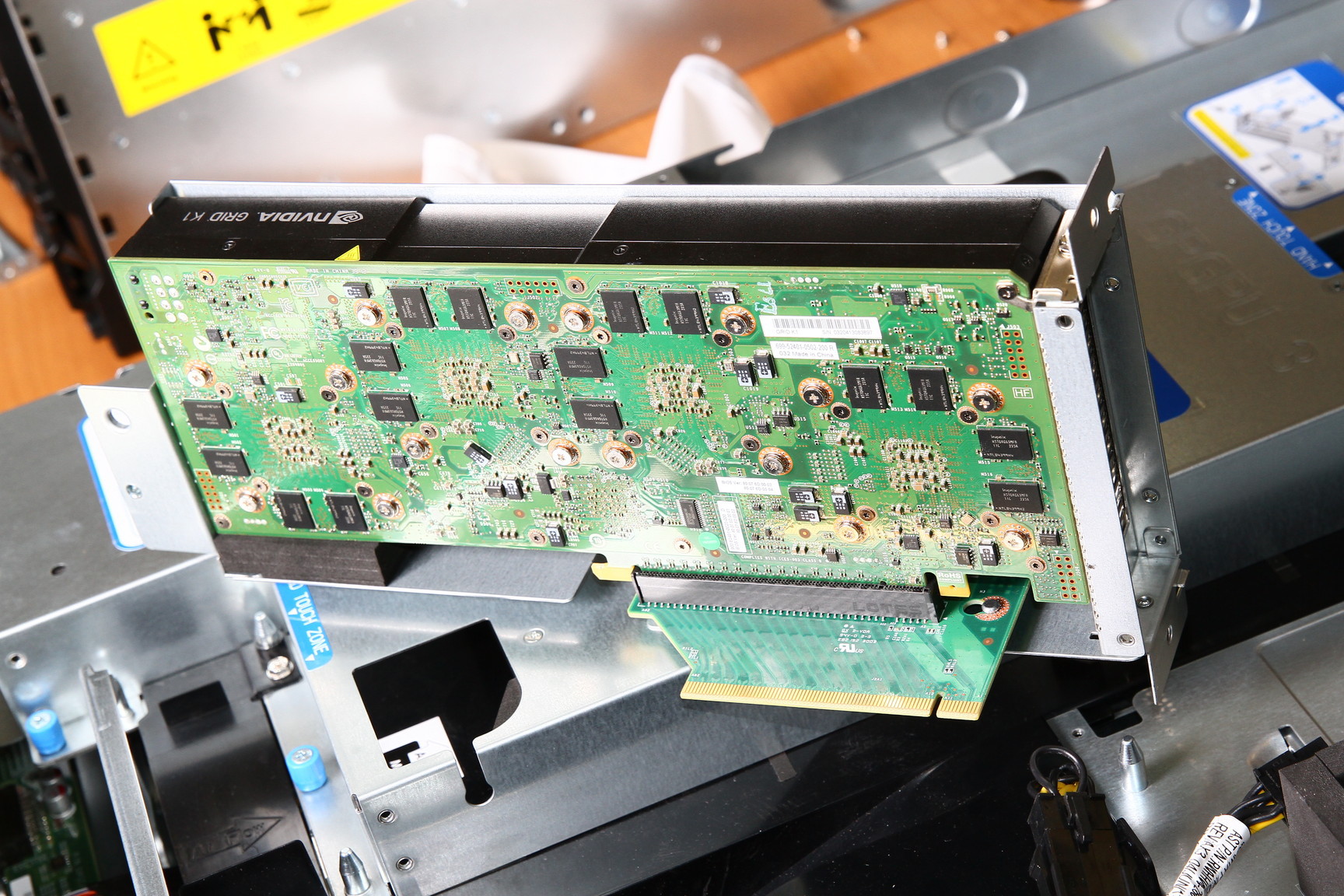

Card in the server:

There are also several typical solutions for various cases.

The lineup: GRID K1 and GRID K2

| | GRID K1 | GRID K2 |
|---|---|---|
| Number of GPUs | 4 x entry Kepler GPUs | 2 x high-end Kepler GPUs |
| Total NVIDIA CUDA cores | 768 | 3072 |
| Total memory size | 16 GB DDR3 | 8 GB GDDR5 |

vGPU profiles by user type

| Card | Number of GPUs | Virtual GPU | User type | Memory (MB) | Number of virtual screens | Maximum resolution | Max vGPUs per GPU / per card |
|---|---|---|---|---|---|---|---|
| GRID K2 | 2 | GRID K260Q | Designer | 2048 | 4 | 2560x1600 | 2 / 4 |
| GRID K2 | 2 | GRID K240Q | Mid-level designer | 1024 | 2 | 2560x1600 | 4 / 8 |
| GRID K2 | 2 | GRID K200 | Office employee | 256 | 2 | 1920x1200 | 8 / 16 |
| GRID K1 | 4 | GRID K140Q | Entry-level designer | 1024 | 2 | 2560x1600 | 4 / 16 |
| GRID K1 | 4 | GRID K100 | Office employee | 256 | 2 | 1920x1200 | 8 / 32 |
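The relationship between the columns of the table above can be checked with a short Python sketch. The `VgpuProfile` class and its property names are hypothetical; the numbers are copied from the table. It derives the per-card density from the per-GPU density and shows how much frame buffer each profile consumes on one physical GPU at full density.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VgpuProfile:
    card: str
    gpus_per_card: int
    name: str
    memory_mb: int
    vgpus_per_gpu: int

    @property
    def vgpus_per_card(self) -> int:
        """Maximum number of vGPUs on the whole card."""
        return self.gpus_per_card * self.vgpus_per_gpu

    @property
    def memory_per_gpu_mb(self) -> int:
        """Frame buffer consumed on one physical GPU at full density."""
        return self.memory_mb * self.vgpus_per_gpu


PROFILES = [
    VgpuProfile("GRID K2", 2, "GRID K260Q", 2048, 2),
    VgpuProfile("GRID K2", 2, "GRID K240Q", 1024, 4),
    VgpuProfile("GRID K2", 2, "GRID K200", 256, 8),
    VgpuProfile("GRID K1", 4, "GRID K140Q", 1024, 4),
    VgpuProfile("GRID K1", 4, "GRID K100", 256, 8),
]

density = {p.name: p.vgpus_per_card for p in PROFILES}
memory_use = {p.name: p.memory_per_gpu_mb for p in PROFILES}
```

The Q profiles use 4096 MB per GPU at full density, which matches the per-GPU memory implied by the lineup (8 GB across 2 GPUs, 16 GB across 4 GPUs), while the office profiles use 2048 MB.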

Varieties for cloud games: GRID K340 and GRID K520

| Product name | GRID K340 | GRID K520 |
|---|---|---|
| Target market | High-density gaming | High-performance gaming |
| Concurrent users | 4–24 | 2–16 |
| Driver support | GRID Gaming | GRID Gaming |
| Total GPUs | 4 GK107 GPUs | 2 GK104 GPUs |
| Total NVIDIA CUDA cores | 1536 (384 / GPU) | 3072 (1536 / GPU) |
| GPU core clocks | 950 MHz | 800 MHz |
| Memory size | 4 GB GDDR5 (1 GB / GPU) | 8 GB GDDR5 (4 GB / GPU) |
| Max power | 225 W | 225 W |

Source: https://habr.com/ru/post/225787/