Non-obvious features of storage systems

Colleagues, I would like to share some practical experience of running virtualization environments and the systems around them.

This article discusses the particular features of storage systems. In principle, the information also applies to physical servers that use storage systems, but the focus is on virtualization, and on VMware in particular.

Why VMware? It's simple: I specialize in this virtualization environment and hold the VCP5 certification. For a long time I worked with large customers and had access to their systems.

So let's start from the beginning. I will try not to burden readers with abstruse terms and complex calculations. Not everyone is an expert in the field, and the material may be useful to many.

What is virtualization (in the server world)? It is a combination of software and hardware that separates computing resources from the hardware at the logical level. In the classical setup, a single server runs only one operating system, which manages that server. All computing resources are given to this operating system, and it owns them exclusively. With virtualization, an added layer of software emulates part of the server's computing resources as an isolated container, and there can be many such containers (virtual machines). Each container can have its own operating system installed, which will probably not even suspect that its hardware server is actually a virtual container. This layer of software is called the hypervisor: an environment for creating containers, or virtual servers.

How does a hypervisor work? Roughly speaking, all requests from the operating systems in the containers (guest operating systems) are accepted by the hypervisor and processed in turn. This applies to CPU time, RAM, and network cards, as well as to storage systems. The last of these is what the rest of this article is about.

The disk resources that the hypervisor provides to the operating systems in the containers are usually the hypervisor's own disk resources. These can be the local disks of the physical server or disk resources attached from external storage systems. The connection protocol is secondary here and will not be considered.

Every disk system is, in essence, described by three characteristics:

1. The bandwidth of the data channel

2. The maximum number of I/O operations

3. The average latency at maximum load

1. The channel bandwidth is usually determined by the storage connection interface and the performance of the subsystem itself. In practice, the average bandwidth load is very small and rarely exceeds 50-100 megabytes per second, even for a group of 20-30 virtual servers. Of course, there are specialized workloads, but we are talking about the average temperature in the hospital, and practice points to exactly these numbers. Naturally, there are peak loads. At such moments the bandwidth may not be enough, so when sizing (planning) your infrastructure you need to plan for the maximum possible load.

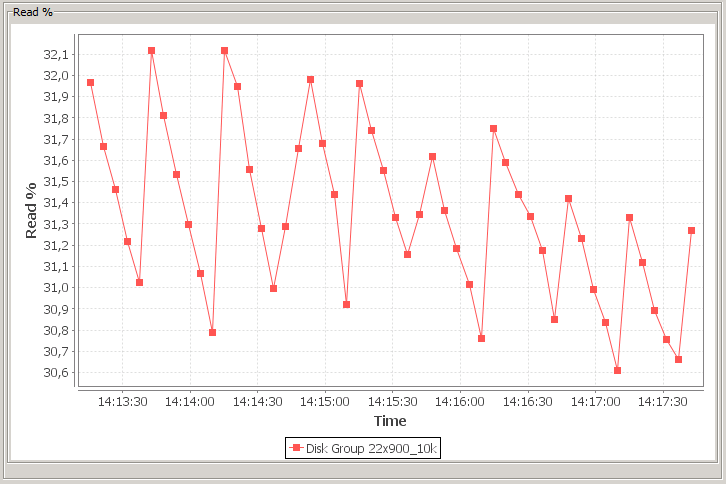

2. I/O operations can be divided into single-threaded and multi-threaded. Since modern operating systems and applications have almost universally learned to work in multiple threads, we assume that the entire load is multi-threaded. Next, I/O operations can be divided into sequential and random. With random access everything is clear, but what about sequential? Given the load from a large number of virtual machines, each of them multi-threaded as well, we end up with almost completely random access to the data. Of course, specific cases with sequential access and a small number of threads are possible, but again, we are looking at the average temperature. Finally, I/O operations can be divided into reads and writes. The classic model speaks of 70% reads and 30% writes. That may well be true for applications inside virtual machines, and many people take these statistics as the basis when testing and sizing storage systems. They make a huge mistake. People confuse the access statistics of applications with the access statistics of the disk subsystem. These are not the same thing. In practice, the split observed at the disk system is roughly the opposite: about 30% reads and 70% writes.

Where does this difference come from? It is due to the caches at different levels. There can be a cache in the application itself, in the guest operating system, in the hypervisor, on the controller, in the disk array, and finally on the disk itself. As a result, some of the read operations are served from a cache at one of these levels and never reach the physical disks. Write operations, however, always reach them. This must be clearly remembered and understood when sizing storage systems.
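To make the shift from 70/30 to 30/70 more tangible, here is a minimal sketch. It assumes that every write reaches the disks and that a fixed share of reads is absorbed by caches along the way. The function name and the 82% combined hit rate are illustrative assumptions, not measurements from the article.

```python
def disk_read_fraction(app_read_fraction: float, read_cache_hit_rate: float) -> float:
    """Share of reads among the operations that actually reach the disks.

    Simplifying assumptions: every write reaches the disks, and a fixed
    fraction of reads is served by a cache somewhere along the path.
    """
    reads_to_disk = app_read_fraction * (1.0 - read_cache_hit_rate)
    writes_to_disk = 1.0 - app_read_fraction
    return reads_to_disk / (reads_to_disk + writes_to_disk)


# Application-level mix: 70% reads, 30% writes.
# Hypothetical combined read hit rate across all cache levels: 82%.
share = disk_read_fraction(0.70, 0.82)
print(f"reads at the disk level: {share:.0%}")
```

Under these assumptions, a combined read hit rate a little above 80% is already enough to turn an application-level 70/30 mix into roughly 30/70 at the disks.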

3. Storage latency is the time it takes for the guest operating system to receive the data it requested from its disk. Schematically, and in simplified form, a request from an application travels as follows: Application -> Operating System -> Virtual Machine -> Hypervisor -> Storage System -> Hypervisor -> Virtual Machine -> Operating System -> Application. In reality there are 2-3 times more intermediate links in the chain, but let's leave out the deep technical subtleties.

What is most interesting in this chain? First of all, the response of the storage system itself and the hypervisor's interaction with the virtual machine. With the storage system, everything seems clear. If we have SSD drives, time is spent reading data from the required cell, and that is essentially all. Latency is minimal, about 1 ms. If we have 10k or 15k SAS disks, the data access time is made up of many factors: the depth of the current queue, the position of the head relative to the next track, the angular position of the platter relative to the head, and so on. The head is positioned, waits for the platter to rotate until the required data is under it, performs the read or write, and moves on to a new position. A smart controller keeps the queue of disk accesses, adjusts the read order depending on the path of the head, reorders entries in the queue, and tries to optimize the work. If the drives are in a RAID array, the access logic becomes even more complex. For example, a mirror holds 2 copies of the data on its 2 halves, so why not read different data from different locations at the same time using both halves of the mirror? Controllers behave similarly with other RAID types. As a result, the typical latency for fast SAS disks is 3-4 ms. For their slower NL-SAS and SATA counterparts, this figure worsens to 9 ms.
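One of the factors listed above, the wait for the platter to turn, can be estimated from the spindle speed alone: on average the head waits half a revolution. The sketch below covers only this rotational component; seek time, queueing, and controller reordering are not modelled. The 7,200 rpm figure for NL-SAS/SATA drives is an assumption, since the article does not state their speed.

```python
def avg_rotational_latency_ms(rpm: int) -> float:
    """Average wait for the sector to arrive under the head: half a revolution."""
    ms_per_revolution = 60_000.0 / rpm
    return ms_per_revolution / 2.0


# 15k and 10k SAS from the text; 7,200 rpm is an assumed NL-SAS/SATA speed.
for rpm in (15_000, 10_000, 7_200):
    print(f"{rpm:>6} rpm: {avg_rotational_latency_ms(rpm):.2f} ms")
```

This gives 2 ms, 3 ms, and a little over 4 ms respectively, before any head movement is taken into account.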

Now consider the hypervisor-virtual machine link in the chain. Virtual machines have virtual hard disk controllers, usually SCSI devices, and the guest operating system talks to its disk using SCSI commands as well. When the disk is accessed, the hypervisor suspends the virtual machine. During this time the hypervisor intercepts the SCSI commands of the virtual controller and then resumes the virtual machine. Next, the hypervisor itself accesses the virtual machine's data file (the virtual disk file) and performs the necessary operations on it. After that, the hypervisor suspends the virtual machine again, generates SCSI commands for the virtual controller and, on behalf of the virtual machine's disk, responds to the guest operating system's pending request. These fake I/O operations cost about 150-700 CPU cycles of the physical server, that is, about 0.16 microseconds. On the one hand that is not much, but on the other? What if the machine has heavy I/O, say 50,000 IOPS? And what if it talks to the network just as intensively? Add to this the fairly likely loss of data from the processor cache, which may have changed while the hypervisor was handling the intercepted request. Or, for good measure, the executing core may have changed. As a result, the overall performance of the virtual machine drops significantly, which is rather unpleasant. In practice, I have seen performance fall by up to 40% of nominal because of a virtual machine's hyperactive network and disk I/O.
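As a rough sanity check on the figures in this paragraph, the sketch below multiplies the per-operation interception cost by the I/O rate. It uses only the two numbers quoted above (0.16 microseconds and 50,000 IOPS); the function name is illustrative, and the indirect effects mentioned in the text (processor cache loss, a change of executing core, network I/O) are deliberately not modelled.

```python
def interception_overhead(iops: float, cost_us_per_io: float) -> float:
    """Fraction of one CPU core kept busy purely by intercepting I/O requests."""
    return iops * cost_us_per_io / 1_000_000.0


busy = interception_overhead(50_000, 0.16)
print(f"direct interception cost: {busy:.1%} of one core")
```

The direct cost on its own comes out below 1% of a core, which suggests that the bulk of a large performance drop has to come from the indirect effects rather than from the interception itself.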

A slow disk system has an enormous effect on the applications running inside the guest machines. Unfortunately, many specialists underestimate this effect and try to save on the disk subsystem when sizing the hardware: they install inexpensive, large, slow disks and economize on controllers and on fault tolerance. A loss of computing power leads to trouble and downtime. Losses at the disk system level can lead to the loss of everything, including the business. Remember this.

But back to read and write operations and how they behave at various RAID levels. If we take a stripe (RAID0) as 100% performance, the array types compare as follows:

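| Operation | Array type | Efficiency |
|-----------|------------|------------|
| Read      | RAID0      | 100%       |
| Read      | RAID10     | 100%       |
| Read      | RAID5      | ≈90%       |
| Read      | RAID6      | ≈90%       |
| Write     | RAID0      | 100%       |
| Write     | RAID10     | 50%        |
| Write     | RAID5      | 25%        |
| Write     | RAID6      | 16%        |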

As we can see, RAID5 and RAID6 lose a huge amount of write performance. We must not forget here that reads and writes load the disk system together and cannot be considered separately. Example: in RAID0 mode, a hypothetical system delivers 10,000 IOPS. We build a RAID6 array from it and apply the typical load of a virtualization environment, 30% reads and 70% writes. We get 630 read IOPS with 1550 write IOPS at the same time. Not much, is it? Of course, the caches in storage systems and controllers, working in write-back mode, raise performance somewhat, but everything has its limits. IOPS must be counted correctly.
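The example above can be reproduced approximately with a simple cost model. This is a sketch under one assumption: each read or write consumes raw RAID0-equivalent IOPS in inverse proportion to the efficiency given for the array type. The function name is illustrative, and the article's own figures may rest on slightly different rounding.

```python
def frontend_iops(raw_iops: float, read_fraction: float,
                  read_eff: float, write_eff: float) -> tuple[float, float]:
    """Split raw (RAID0-equivalent) IOPS into achievable read and write IOPS.

    Assumed model: one read costs 1/read_eff raw operations and one write
    costs 1/write_eff, with both kinds of operation sharing the same disks.
    """
    write_fraction = 1.0 - read_fraction
    cost_per_io = read_fraction / read_eff + write_fraction / write_eff
    total = raw_iops / cost_per_io
    return total * read_fraction, total * write_fraction


# RAID6 efficiencies for reads and writes, 30% reads / 70% writes.
reads, writes = frontend_iops(10_000, 0.30, read_eff=0.90, write_eff=0.16)
print(f"RAID6:  {reads:.0f} read IOPS, {writes:.0f} write IOPS")

# The same load on RAID10 for comparison.
r10_reads, r10_writes = frontend_iops(10_000, 0.30, read_eff=1.00, write_eff=0.50)
print(f"RAID10: {r10_reads:.0f} read IOPS, {r10_writes:.0f} write IOPS")
```

Under this model RAID6 lands in the same ballpark as the figures quoted above, a little over 2,000 IOPS in total, while RAID10 delivers several times more from the same disks.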

A few words about reliability, which has been discussed many times already. When a large, slow disk fails, the array starts to rebuild (we did take care of a hot spare, didn't we?). On 4 TB disks, rebuilding RAID 5 or RAID 6 takes about a week! And if the load on the array is high, even longer. The rebuild also sharply increases the load on the disks of the array, which raises the probability that another disk will fail. With RAID 5 this leads to irretrievable data loss. With RAID 6 we face a high risk of losing a third disk. For comparison, rebuilding a RAID10 array essentially just copies data from one half of the mirror (one disk) to the other. This is a much simpler process and takes relatively little time. For a 4 TB disk under average load, the rebuild time is about 10-15 hours.
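The rebuild times quoted above can be turned into the sustained copy rate they imply, which makes the difference easier to picture. This is plain arithmetic in decimal units (1 TB = 1,000,000 MB); the function names are illustrative, and real rebuild speed depends on the controller and on the load on the array.

```python
def implied_rate_mb_per_s(capacity_tb: float, hours: float) -> float:
    """Average rate needed to process the whole disk in the given time."""
    capacity_mb = capacity_tb * 1_000_000  # decimal units: 1 TB = 10^6 MB
    return capacity_mb / (hours * 3600.0)


def rebuild_hours(capacity_tb: float, rate_mb_per_s: float) -> float:
    """Time to process the whole disk at a given sustained rate."""
    return capacity_tb * 1_000_000 / rate_mb_per_s / 3600.0


week = 7 * 24
print(f"RAID 5/6, about a week: {implied_rate_mb_per_s(4, week):.1f} MB/s")
print(f"RAID10, 10 hours:       {implied_rate_mb_per_s(4, 10):.0f} MB/s")
print(f"RAID10, 15 hours:       {implied_rate_mb_per_s(4, 15):.0f} MB/s")
```

A week for 4 TB corresponds to only a few megabytes per second of effective rebuild progress, whereas 10-15 hours corresponds to roughly 75-110 MB/s, close to a straight disk-to-disk copy.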

I would like to add that there are smart storage systems out there, such as the Dell Compellent SC800, that take a very flexible approach to storing data based on various configurable tiers. For example, such a system can write new data only to SLC SSD drives in RAID10 mode and then, in the background, redistribute the data blocks to other disk types and RAID levels depending on the access statistics for those blocks. These systems are quite expensive and are aimed at a specific kind of customer.

To sum up, the key points are:

- A storage system for virtualization should be fast and reliable, with minimal latency

- When designing a virtualization environment, about 40% of the total hardware budget should go to the storage system

- When sizing a storage system, as a general rule, you should focus on RAID10

- Backups will save the world!

Source: https://habr.com/ru/post/225183/
