The future of Ethernet & FC

These two protocols long occupied different application niches, but the time has come when they have started to compete with each other. Ethernet is clearly picking up speed, in the literal sense of the word, and moving into areas where FC was always considered the only player on the field. There are FC alternatives running over Ethernet, both for block access (IP SAN, FCoE and others) and of other kinds: file (SMB, NFS), RDMA and object.

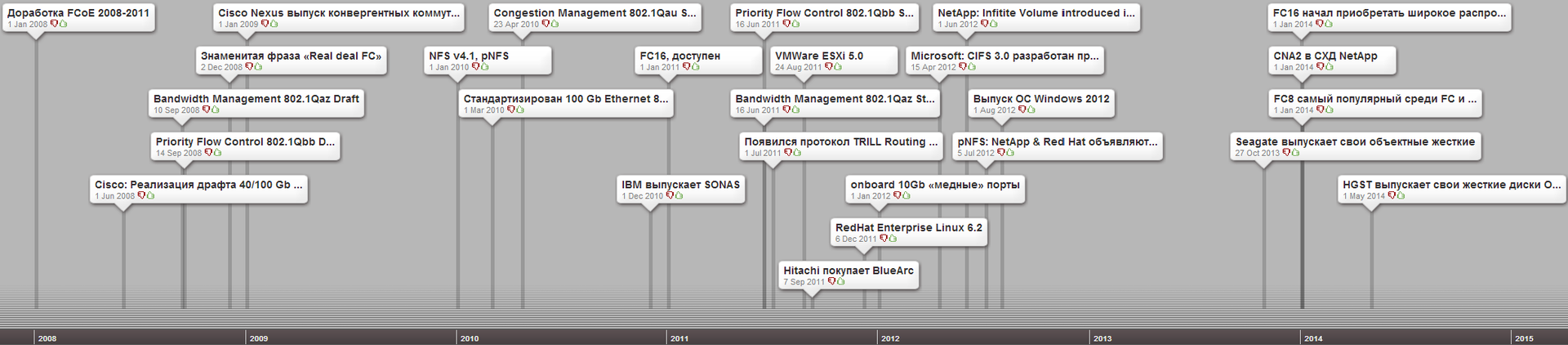

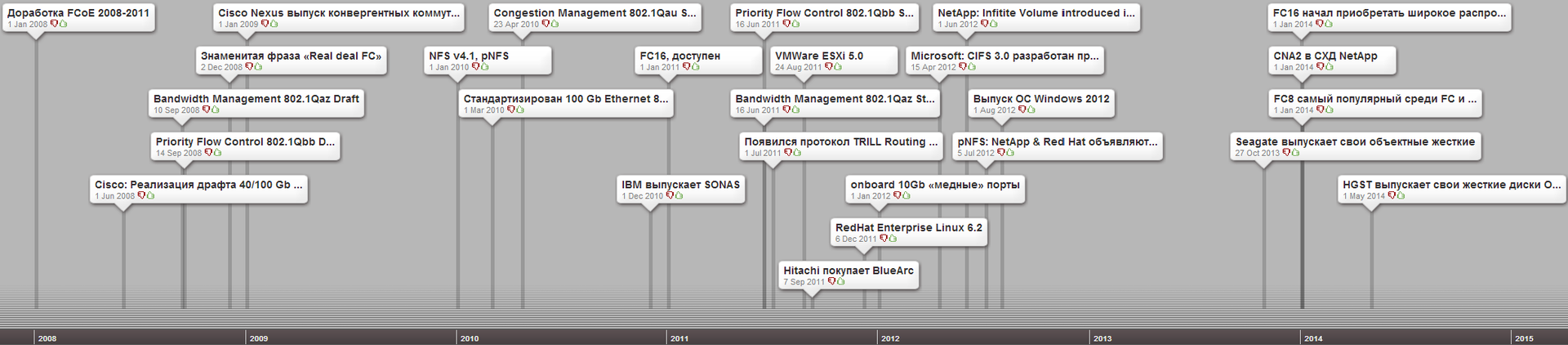

This article is not intended as a comparison of protocols but rather as a brief chronological description of the evolution of data center networks.

* Exact dates are not available for some events on the timeline. Please send corrections and additions to the dates, together with references that confirm them.

Ethernet problems

With the arrival of 1 Gb/s, Ethernet outgrew many of its childhood diseases related to frame loss. The most popular 10 Gb/s Ethernet standards were adopted in 2002 and 2004. 10 Gb Ethernet improves throughput with the new 64b/66b encoding: only 2 of every 66 transmitted bits are overhead (about 97% efficiency, versus 80% for the 8b/10b encoding used by 1000BASE-X Gigabit Ethernet). In 2007, 1 million 10 Gb ports were sold.
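
The gain from the new encoding is easy to check with a little arithmetic. The Python sketch below is purely illustrative (the function names are made up for this example): it compares the share of line bits that carry data under 8b/10b and 64b/66b and computes the signalling rate needed to carry a given data rate.

```python
from fractions import Fraction

# (payload bits, transmitted bits) for each line code
ENCODINGS = {
    "8b/10b": (8, 10),    # Gigabit Ethernet over fiber (1000BASE-X)
    "64b/66b": (64, 66),  # 10GBASE-R, 40/100 GbE
}


def efficiency(payload_bits: int, line_bits: int) -> Fraction:
    """Share of transmitted bits that carry data."""
    return Fraction(payload_bits, line_bits)


def line_rate_gbaud(data_rate_gbps: float, payload_bits: int, line_bits: int) -> float:
    """Signalling rate needed on the wire to carry `data_rate_gbps` of data."""
    return data_rate_gbps * line_bits / payload_bits


if __name__ == "__main__":
    for name, (payload, line) in ENCODINGS.items():
        eff = efficiency(payload, line)
        print(f"{name}: {float(eff):.1%} efficient, {float(1 - eff):.1%} overhead")
    print("1 GbE over 8b/10b:", line_rate_gbaud(1, 8, 10), "GBd")
    print("10 GbE over 64b/66b:", line_rate_gbaud(10, 64, 66), "GBd")
```

Running it prints 80.0% versus 97.0% efficiency, and line rates of 1.25 GBd and 10.3125 GBd, which are the signalling rates of 1000BASE-X and 10GBASE-R.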

40 Gb/s and 100 Gb/s Ethernet use the same 64b/66b encoding; both were standardized in 2010 (IEEE 802.3ba). Some vendors shipped implementations of the draft 40/100 Gb standard as early as 2008.

Data center bridging

DCB standards (sometimes referred to as Lossless Ethernet) were developed by two independent groups, IEEE 802.1 and IETF, between 2008 and 2011. Products on the market based on these standards go by two different names: DCE (Data Center Ethernet) and CEE (Converged Enhanced Ethernet).

For more detail on DCB and CEE, I recommend reading, and asking questions under, the corresponding Cisco post: “Consolidation of LAN and SAN data center networks based on DCB and FCoE protocols”.

Converged Enhanced Ethernet

It should be noted separately that Ethernet was modified (2008-2011) to carry FC, and that TRILL is not applicable here. To implement FCoE, mechanisms were developed that mimic FC buffer-to-buffer credits: Priority Flow Control, 802.1Qbb (draft 2008, standard 2011); Bandwidth Management, 802.1Qaz (draft 2008, standard 2011); and Congestion Management (bandwidth reservation, no drops), 802.1Qau (draft 2006, standard 2010). FCoE first appeared in Cisco switches.
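
The difference between the two approaches can be illustrated with a heavily simplified Python model. The class names and thresholds are hypothetical and only show the principle: an FC sender never transmits without a credit, while PFC lets the receiver pause a single priority class, so that, for example, FCoE traffic is stopped without drops while other classes keep flowing. Real PFC also uses pause timers and buffer headroom for frames already in flight, which this sketch omits.

```python
from collections import deque


class CreditLink:
    """Sender view of FC buffer-to-buffer credit flow control (simplified).

    The sender starts with one credit per free receive buffer, spends a credit
    per frame and regains one (R_RDY) when the receiver frees a buffer.
    With no credits left it waits, so frames are never dropped.
    """

    def __init__(self, bb_credit: int):
        self.credits = bb_credit
        self.rx_buffers = deque()

    def try_send(self, frame) -> bool:
        if self.credits == 0:
            return False               # wait: receiver has no free buffer
        self.credits -= 1
        self.rx_buffers.append(frame)
        return True

    def receiver_consumes(self):
        frame = self.rx_buffers.popleft()
        self.credits += 1              # R_RDY returns the credit
        return frame


class PfcLink:
    """Receiver-driven PFC (802.1Qbb) model, heavily simplified.

    The receiver pauses one priority when that priority's queue reaches
    `xoff`; the other priorities keep flowing.
    """

    def __init__(self, xoff: int):
        self.xoff = xoff
        self.queues = {p: deque() for p in range(8)}  # eight priority classes
        self.paused = set()

    def try_send(self, priority: int, frame) -> bool:
        if priority in self.paused:
            return False               # PAUSE in effect for this class only
        self.queues[priority].append(frame)
        if len(self.queues[priority]) >= self.xoff:
            self.paused.add(priority)  # receiver sends PAUSE for this priority
        return True

    def receiver_consumes(self, priority: int):
        frame = self.queues[priority].popleft()
        if len(self.queues[priority]) < self.xoff:
            self.paused.discard(priority)  # queue drained: traffic resumes
        return frame
```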

Speed Prospects for FC

As of 2014, FC 8 is the most widely used of the FC and FCoE options.

FC 16, available since 2011, began to spread widely in 2014.

FC 32, draft release scheduled for 2016 (forecast).

FC 128, draft release scheduled for 2016 (forecast).

"Pure-block" storage

All “pure block” storage systems are acquiring file gateways. The famous phrase “Real deal FC” (2008), from the then head of a major storage vendor, seemed to emphasize that FC is the best choice for data center networks. However, IBM develops its SONAS (2010) and Hitachi buys BlueArc (2011) to provide file access, while EMC and HP use Windows servers to provide SMB access and Linux servers for NFS. All of them now also support iSCSI.

All of these options essentially run over Ethernet.

File Protocols Over Ethernet

In 1997, Oracle and NetApp released a solution for databases located on NAS storage. File protocols running over Ethernet are finding use in high-load and mission-critical applications, and their evolution is bringing Ethernet into applications that previously used only block-level FC. In 2004, Oracle launched its Oracle On-Demand data center in Austin (USA) on storage built on NetApp FAS systems.

Microsoft developed a new version of the protocol, SMB 3.0 (2012), with which critical applications such as virtualization and databases can live on a network share. Many storage vendors immediately announced future support for the protocol. In 2013, NetApp, together with Microsoft, launched Hyper-V over SMB 3.0 and MS SQL over SMB 3.0.

Oracle Database 11g (2007) added a new feature, Direct NFS (dNFS), for placing database files on an NFS share.

NFS v4.0 (2003) changes the namespace paradigm of the protocol. Instead of exporting multiple file systems, a server now exports a single pseudo-file system assembled from many real file systems. Importantly, NFSv4 also provides new high-availability features for data center networks, such as transparent replication and data migration, in which a client is redirected from one server to another. This makes it possible to maintain a global namespace while each piece of data is located on, and served by, one dedicated server. This is closely tied to building clustered systems and greatly simplifies their implementation.
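
A toy model may help picture the global namespace. The Python sketch below is not NFS code: the table, server names and functions are hypothetical, and it ignores the protocol mechanics (such as the fs_locations attribute used for referrals). It represents a pseudo-file system as a map from mount points of real file systems to the servers holding them; resolving a path picks the longest matching mount point, and migrating a file system changes only the server, not the path clients use.

```python
# Hypothetical global namespace: each real file system (mount point in the
# pseudo-file system) is served by exactly one server. Names are made up.
PSEUDO_FS = {
    "/": "server-a",
    "/vol/db": "server-b",
    "/vol/home": "server-a",
    "/vol/home/archive": "server-c",
}


def resolve(path: str) -> tuple[str, str]:
    """Return (mount point, server) of the real file system holding `path`.

    Longest-prefix match on whole path components, so "/vol/homework"
    does not match "/vol/home".
    """
    parts = [p for p in path.split("/") if p]
    for i in range(len(parts), -1, -1):
        prefix = "/" + "/".join(parts[:i])
        if prefix in PSEUDO_FS:
            return prefix, PSEUDO_FS[prefix]
    raise KeyError(path)


def migrate(mount_point: str, new_server: str) -> None:
    """Move a file system to another server: the path clients use is unchanged,
    only the server they are redirected to differs."""
    if mount_point not in PSEUDO_FS:
        raise KeyError(mount_point)
    PSEUDO_FS[mount_point] = new_server
```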

NFS v4.1 and pNFS (2010): parallel NFS lets clients access files over the “nearest” paths without remounting. In 2012, NetApp and Red Hat announced a collaboration on pNFS support in Clustered Ontap and RHEL 6.2.
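
One part of how pNFS works can be sketched as follows: the client first obtains a layout from the metadata server and then reads or writes the data servers directly, in parallel. The Python below assumes simple round-robin striping; the stripe unit and data-server names are hypothetical, and real layouts carry more detail (for example, explicit stripe indices).

```python
def data_server_for(offset: int, stripe_unit: int, data_servers: list[str]) -> str:
    """Pick the data server holding byte `offset` under round-robin striping."""
    if offset < 0 or stripe_unit <= 0:
        raise ValueError("offset must be >= 0 and stripe_unit > 0")
    stripe_index = offset // stripe_unit
    return data_servers[stripe_index % len(data_servers)]


def split_read(offset: int, length: int, stripe_unit: int,
               data_servers: list[str]) -> list[tuple[str, int, int]]:
    """Break one read into (server, offset, length) pieces that can be
    issued to different data servers in parallel."""
    pieces = []
    end = offset + length
    while offset < end:
        # End of the stripe unit that contains `offset`, capped at the request end.
        chunk_end = min(end, (offset // stripe_unit + 1) * stripe_unit)
        pieces.append((data_server_for(offset, stripe_unit, data_servers),
                       offset, chunk_end - offset))
        offset = chunk_end
    return pieces
```

For example, with a 64 KiB stripe unit and three data servers, a 10,000-byte read at offset 60,000 is split into 5,536 bytes from the first server and 4,464 bytes from the second.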

Another advantage of protocols running over IP is that they can be routed, which splits such networks into separate broadcast domains. SMB 3.0 and NFS 4.0 were also designed with optimizations for WAN operation.

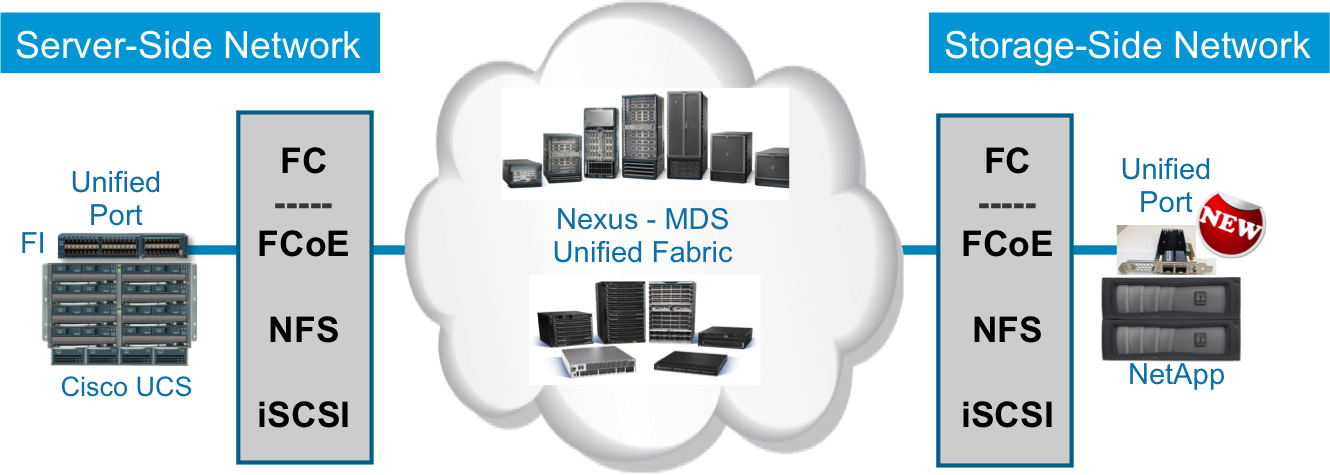

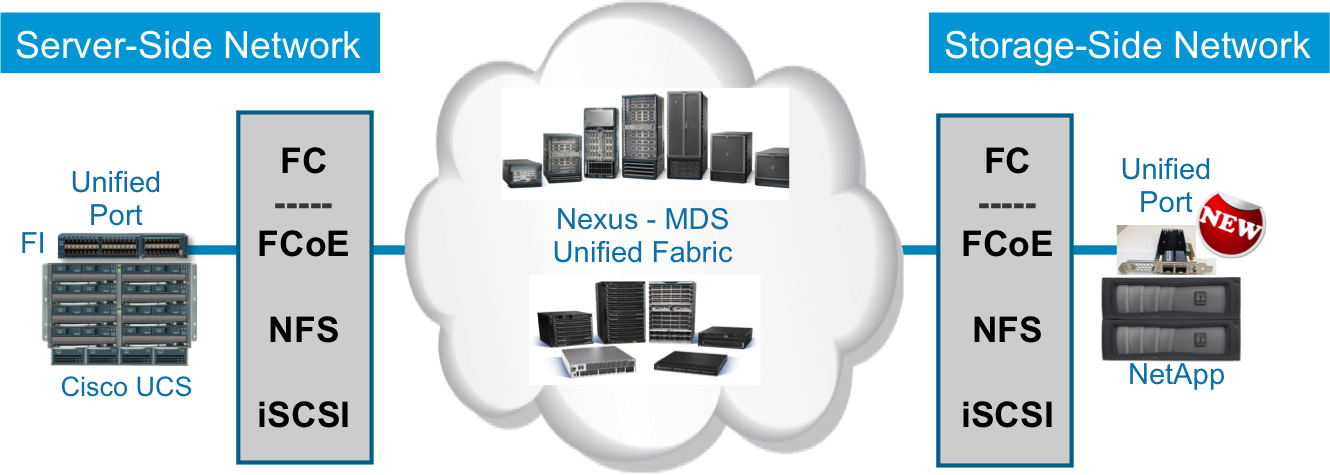

Convergent devices

The release of converged network switches, such as the Cisco Nexus series in 2009, made it possible to combine a traditional FC SAN with an Ethernet network, letting IT departments respond more flexibly to changing business needs. In 2010, NetApp first launched support for converged networks and multiple protocols over a single wire. Initially, FCoE was supported only over optical fiber and Twinax cables.

Onboard 10 Gb adapters with “copper” ports appeared in 2012.

In 2013, onboard second-generation CNA adapters (CNA 2) with SFP+ connectors appeared; they can be used for both Ethernet and FC 8/FC 16.

CNA adapters make it possible to run both FCoE and Ethernet in data center networks, getting the best of both and using the most suitable protocol for each task.

2014 marks the beginning of widespread use of CNA 2 adapters in NetApp storage systems, which allow FCoE and Ethernet, or “clean” FC 8/FC 16, to be used at the same time. Converged ports have replaced virtually all data ports on the new NetApp FAS 8000 systems; the only “clean” ports left are 1GbE. Along with CNA adapters, converged SFP+ transceivers appeared that can switch between FC and Ethernet operation, for example in NetApp E-Series storage systems.

Object Storage Over Ethernet

Object storage is becoming increasingly popular: in 2013, Seagate launched its Kinetic object hard drives with an Ethernet interface, and in 2014 HGST released its Open Ethernet hard drives. In 2010, NetApp acquired Bycast Inc. and its StorageGRID product, whose first object storage installation dates back to 2001.

Scaling and clustering

One of the leaders in clustered NAS was Spinnaker Networks, a startup acquired by NetApp in 2003; its technology formed the basis of the modern Clustered Ontap OS for NetApp FAS storage systems.

The largest clustered storage systems that provide block access via FC reach 8 nodes (NetApp FAS 6200/8000), while competitors usually offer no more than 4. For file protocols the node count can be several times higher: 24 nodes for NetApp FAS 6200/8000 (2013) with SMB and NFS. For object storage the number of nodes is theoretically unlimited. In today's world, relatively inexpensive clustered nodes that can be added as needed may be preferable to the “mainframe approach”, which relies on a single expensive supercomputer.

The maximum LUN size often leaves much to be desired: LUNs are typically limited to 16 TB, and there are limits on the number of LUNs on both the host and the storage side. Clustered storage systems have not removed these limits, so “crutch” solutions are often used to get more capacity, combining several LUNs in software on the host. File shares, by contrast, can span petabytes and are quite easy to scale into one huge logical network share several petabytes in size; in NetApp FAS this is done with Infinite Volume technology, accessed via SMB and NFS.
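
The host-side “crutch” is essentially linear concatenation, the same idea as a linear logical volume in a volume manager such as Linux LVM. A minimal Python sketch under that assumption (the class and constant names are hypothetical):

```python
from bisect import bisect_right
from itertools import accumulate

TB = 2**40  # binary terabyte, used only for illustration


class ConcatVolume:
    """Host-side linear concatenation of several LUNs into one logical volume.

    Logical offsets are mapped onto the LUN that holds them, so several
    size-limited LUNs appear to applications as one larger disk.
    """

    def __init__(self, lun_sizes: list[int]):
        self.lun_sizes = list(lun_sizes)
        # Start offset of each LUN inside the logical volume: 0, s0, s0+s1, ...
        self.starts = [0, *accumulate(self.lun_sizes)][:-1]
        self.size = sum(self.lun_sizes)

    def locate(self, offset: int) -> tuple[int, int]:
        """Return (LUN index, offset within that LUN) for a logical offset."""
        if not 0 <= offset < self.size:
            raise ValueError("offset outside the volume")
        lun = bisect_right(self.starts, offset) - 1
        return lun, offset - self.starts[lun]
```

Three 16 TB LUNs give a 48 TB volume, and `ConcatVolume([16 * TB] * 3).locate(40 * TB)` returns `(2, 8 * TB)`. Every extra layer like this is one more thing the host has to manage, which is the drawback the text points out.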

Thin Provisioning & Snapshots

NetApp developed and implemented the first snapshot technology in 1993. Thin provisioning was first presented in 2001 and first implemented in 3Par storage systems in 2003, then in NetApp FAS in 2004. Although neither technology is directly related to Ethernet (with its SMB and NFS) or to FC, in practice thin provisioning and snapshots behave very differently when combined with file protocols than with block protocols.

For example, if you use a “thin” LUN and the storage system actually runs out of physical space, any decent storage system will take that LUN offline so that the application cannot keep writing to it and corrupt its data. The same happens when snapshots “eat up” all the space of the file system that holds the thin LUN and leave no room for the LUN's data. Another unpleasant property of “thin” LUNs has always been that they only ever “grow”: even when data is deleted in the file system that lives “on top” of the LUN, the storage system knows nothing about it.
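
This behaviour can be captured in a small Python model. It is a simplified sketch, not any vendor's implementation, and the class names are hypothetical: the array allocates a physical block on the first write to a logical block, never learns about host-side deletes, and takes the LUN offline once the shared pool is exhausted.

```python
class Pool:
    """Physical capacity shared by thin LUNs on the array."""

    def __init__(self, physical_blocks: int):
        self.free = physical_blocks


class ThinLun:
    """Thin LUN without host awareness (simplified model).

    A physical block is allocated the first time a logical block is written.
    File deletions on the host are invisible to the array, so allocated space
    never shrinks; when the pool is exhausted the LUN is taken offline.
    """

    def __init__(self, logical_blocks: int, pool: Pool):
        self.logical_blocks = logical_blocks
        self.pool = pool
        self.allocated = set()   # logical blocks with physical backing
        self.online = True

    def write(self, block: int) -> bool:
        """Return True if the write was accepted."""
        if not 0 <= block < self.logical_blocks:
            raise ValueError("block outside the LUN")
        if not self.online:
            return False
        if block not in self.allocated:
            if self.pool.free == 0:
                self.online = False   # protect data: no more I/O to this LUN
                return False
            self.pool.free -= 1
            self.allocated.add(block)
        return True

    def host_deletes_file(self, blocks) -> None:
        """The host file system frees `blocks` in its own metadata only.

        The array is not told, so nothing is returned to the pool.
        """
```

Overwriting a block that is already allocated still succeeds on a full pool, but the first write that needs a new block takes the whole LUN offline, even though the host may believe it has plenty of free space after deleting files.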

With file shares, on the other hand, thin provisioning is there “by design”, and running out of space will certainly not take a network share offline.

Borrowing functionality

Windows Server 2012, Red Hat Enterprise Linux 6.2 (2011) and VMware vSphere 5.0 (2011) support the Logical Block Provisioning feature defined in the SCSI SBC-3 standard (often called SCSI thin provisioning), which lets the storage system tell the OS that a LUN is in fact “thin” and that its space has actually run out, so write operations are refused. The OS should then stop writing to that LUN; the LUN is not taken offline and remains available for reading (how the application reacts is another question). The same functionality also enables space reclamation, which lets a LUN shrink after data on it has been deleted. As a result, LUNs now behave much more sensibly under thin provisioning. So, after 8 years, “thin provisioning awareness” has made its way from the storage system (2003) to the host (2011).
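
The Python model below sketches how host awareness changes the picture. It is a simplification: in SBC-3 the refused write is reported with a dedicated sense code (SPACE ALLOCATION FAILED WRITE PROTECT), and reclamation uses the UNMAP command, while here both are reduced to plain Python values. The class name is hypothetical.

```python
class ThinProvisionedLun:
    """Thin LUN used by a host that understands logical block provisioning.

    Simplified model: when the pool is exhausted a write is refused with an
    error the OS understands, but the LUN stays online and readable; and the
    host can UNMAP blocks it no longer needs so the array reclaims them.
    """

    def __init__(self, logical_blocks: int, pool_free: int):
        self.logical_blocks = logical_blocks
        self.pool_free = pool_free
        self.data = {}   # logical block -> contents (only allocated blocks)

    def _check(self, block: int) -> None:
        if not 0 <= block < self.logical_blocks:
            raise ValueError("block outside the LUN")

    def write(self, block: int, payload: bytes) -> str:
        self._check(block)
        if block not in self.data:
            if self.pool_free == 0:
                # Model status string; the LUN is NOT taken offline.
                return "SPACE ALLOCATION FAILED"
            self.pool_free -= 1
        self.data[block] = payload
        return "GOOD"

    def read(self, block: int) -> bytes:
        self._check(block)
        # In this model unmapped blocks read back as zeros.
        return self.data.get(block, b"\x00")

    def unmap(self, blocks) -> None:
        """Space reclamation: the host reports blocks it has freed."""
        for block in blocks:
            self._check(block)
            if block in self.data:
                del self.data[block]
                self.pool_free += 1
```

Compared with an unaware thin LUN, the out-of-space condition becomes a per-write error instead of an outage, and deleted data can actually shrink the LUN.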

As for host clustering, for a long time, unlike with network shares, only one host at a time could work with a LUN (read and write); all other hosts could only read it. Here too a solution eventually appeared: a block- or region-level locking mechanism, which splits a LUN into logical sections and lets several hosts write, each only to “its own” sections. In network shares, by contrast, this capability is built into the protocol design and has been there from the very beginning.
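
A minimal Python model of region-level locking, assuming a LUN divided into a fixed number of regions with at most one writer per region (the class, method and host names are hypothetical; real implementations typically keep such locks on disk or in a cluster lock manager):

```python
class RegionLockedLun:
    """Shared LUN split into regions; each host may write only its own regions."""

    def __init__(self, regions: int):
        self.owner = [None] * regions   # region -> host holding the write lock

    def _check(self, region: int) -> None:
        if not 0 <= region < len(self.owner):
            raise ValueError("no such region")

    def acquire(self, host: str, region: int) -> bool:
        """Take the write lock on a region if it is free (or already ours)."""
        self._check(region)
        if self.owner[region] in (None, host):
            self.owner[region] = host
            return True
        return False

    def release(self, host: str, region: int) -> None:
        self._check(region)
        if self.owner[region] == host:
            self.owner[region] = None

    def can_write(self, host: str, region: int) -> bool:
        self._check(region)
        return self.owner[region] == host

    def can_read(self, host: str, region: int) -> bool:
        self._check(region)
        return True   # every host may still read the whole LUN
```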

On the other hand, network shares never had anything like multipathing, which would let a client reach a file over several paths or over the shortest one. File protocols always lacked this; until pNFS appeared, the gap was partially closed by LACP, which, together with vPC technology, can balance traffic across multiple switches.
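
Why LACP only partially closes the gap is easiest to see from how traffic is distributed. Link aggregation typically hashes header fields so that each flow sticks to one member link. The sketch below assumes CRC32 over the TCP/IP 4-tuple; the actual fields and hash are vendor-specific, and the function name is hypothetical.

```python
import zlib


def pick_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int, links: int) -> int:
    """Choose the member link of a LACP bundle that carries one flow.

    Every frame of a flow hashes to the same link (no reordering), while
    different flows spread over the links. CRC32 over the 4-tuple is an
    assumption for illustration only.
    """
    if links <= 0:
        raise ValueError("a bundle needs at least one link")
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return zlib.crc32(key) % links
```

A consequence worth noting: a single NFS or SMB connection (one 4-tuple) always lands on the same link, so LACP balances many clients well but cannot give one session more than one link's bandwidth or a second path.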

Conclusion

Apparently, functionality will keep being borrowed back and forth, bringing the protocols closer together. Given the events listed above, convergence looks like the inevitable future, and it will make the two protocols compete even harder for applications in data center networks. Clustered storage systems with block access over FC have a more complex architecture and technical limits on the size and number of LUNs, which do not favor the protocol's future in today's world of ever-growing data and the clustering paradigm; further development is needed in this direction. Ethernet is also far ahead of FC in speed, which may affect FC's future, so one assumption is that FC will move inside Ethernet and stay there in the form of FCoE. After all, the gap is in fact 8 years: 100 Gb in 2008 versus 128 Gb in 2016. When choosing between FC and FCoE, pay attention to this article.

Note that uncommon options such as IP over FC and other protocol combinations were not considered here; only the combinations most commonly used in data center infrastructure designs, which shape future trends, were covered.

Please report errors in the text via private message.

Notes and additions, on the other hand, are welcome in the comments.

Source: https://habr.com/ru/post/224869/
