Saw - Won. How is the capture of items from the robot Tod Bot

Hi Habr! Here we go again! In response to the many skeptics who often met on our way, we continue to develop the project “Rob Tod Bot”. This post is a continuation of the acquaintance with the MoveIt module as a manipulator control tool.

First of all, I would like to say that we managed to achieve significant results in the task of capturing and moving objects by means of a manipulator, as well as in the recognition of objects, but first things first.

It is a little theory about capture in MoveIt

The capture of an object can be represented in the form of a conveyor consisting of several stages, in which the complete trajectory ready for execution is calculated: starting from the initial position of the manipulator and directly raising the object. These calculations are based on the following data:

- Scene planning, which provides a tool Planning Scene Monitor

- Object identifier to capture

- Pose capture brush for this object

The latter in turn contains the following data:

- The position and orientation of the "brush" manipulator

- Expected probability of successful capture for this pose

- The preliminary approach of the manipulator, which is defined as the direction of the vector - the minimum / desired distance of the approach

- The indent of the manipulator after the capture, which is defined as the direction of the vector - the minimum indent distance

- Maximum grip force

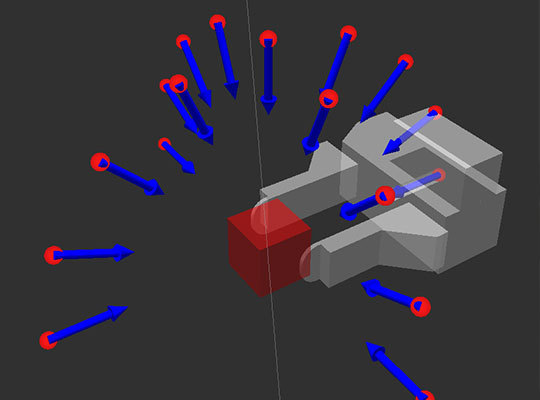

Before the system starts the conveyor, it is necessary to generate possible options for the position of the brush to capture our object. In our case, we make the assumption that all captured objects have a rectangular shape. Accordingly, with the capture in the form of two fingers, we have only two paired planes for reliable gripping of the subject, not counting the top and bottom, on which the subject stands. According to this, capture positions are generated in the form of hemispheres for one pair of planes and another.

Among the obtained set of possible poses, we must weed out those poses that do not satisfy the shape of our grip / brush, and then pass the remaining trajectories to achieve these poses in the pipeline for further planning.

In the conveyor raising the subject, there are three main points:

')

1- Initial position; 2- Pre-locking position; 3 - Position capture;

During the execution of the pipeline, individual paths will be added to the final plan for raising the object. If the capture has successfully passed all the stages, then only the plan can be executed. The conveyor algorithm in general looks like this:

- The trajectory is planned from the initial position to the pre-grip point. If we draw an analogy with the landing of the aircraft on the runway, it will be a landing approach.

- All objects of the environment are initially included in the collision matrix, we wrote about it here . In order for our capture to succeed, collision checking is disabled. Then the capture opens.

- The trajectory of the approach of the manipulator to the object from the pre-grip point to the capture point is calculated.

- Capture closes.

- The captured object is still represented as an object of collision so only a difference that it is now part of the capture and is taken into account when planning the trajectory.

- Then, an indentation trajectory is generated from the position of capture to the point of preload to detach the object from the surface and fix the result of raising the object.

The constructed plan, containing all the necessary trajectories, can now be executed.

That has not yet said

At the entrance of our experiments, we decided to add our hand to the original four degrees of freedom two more. In the video and photo they are shown in red. This is due to the fact that in the case of using a gripper in the form of a fork or an anthropomorphic brush, good flexibility of the manipulator is necessary. By the way, if you use a vacuum sucker as a grip, then everything is somewhat simplified and 4 degrees of freedom can be enough, since only one plane is used for capturing.

In fact, the ability to perform capture largely depends on the generation of capture positions: the more and more diverse the positions are generated, the easier it will be to find the optimal one. Although all of this has a downside: the more positions, the longer it will take to process them. In our case, we first generated 10, 34 positions, then 68, and then 136. The best option that suits us is 34 positions. With a minimal number of positions, the manipulator is quite difficult to get into the generated posture, as a rule, the manipulator simply physically cannot reach it: unable to turn out like this, too short, too long, etc. At 34 there are from 2 to 5 positions satisfying all conditions.

Object Recognition

For these purposes, we decided to use the ROS node tabletop_object_detector. It was implemented by scientists at the University of British Columbia and has already managed to recommend itself. Although, in my opinion, the choice of the system should depend directly on the conditions in which you intend to apply the recognition and those objects that need to be identified. In our case, the recognition is carried out in the form of objects, and if you need to distinguish a jar of cucumbers from a can of tomatoes, then this method will not work. To identify objects using these depth camera data obtained from the Kinect.

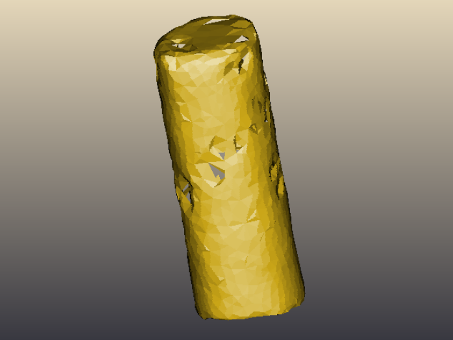

Before recognizing, you first need to train the system - to create a 3D model of the desired object.

3D model of the pack Pringles

After that, the system compares the received data with the models available in the database.

The recognition result looks like this:

As one would expect, the speed of searching for objects directly depends on the power of the machine at which the data is being processed. We used a laptop with intel core 2 duo 1.8ghz and 3Gb RAM. In this case, the identification of objects took about 1.5 - 2 seconds.

And of course, being able to select and identify objects from the environment, now I want to take and move them. The next step is to combine the tasks of recognition and control of the manipulator on a real robot.

Source: https://habr.com/ru/post/224765/

All Articles