Automatic marker detection

Machine vision and robotics share an interesting class of problems: detecting markers whose appearance is known in advance. This covers a lot of ground: QR codes, augmented reality (AR), object positioning (motion capture, localization), detecting objects by tags, classifying objects in robotics (for example, in automatic sorting), helping automatic systems with positioning (robotic grippers), object tracking, and so on.

This article describes the main methods of detecting markers, their capabilities and the limits of their applicability.

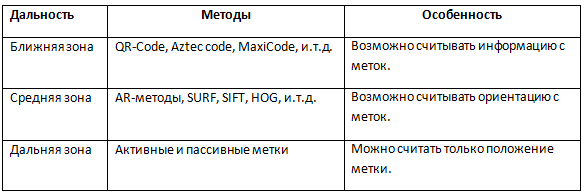

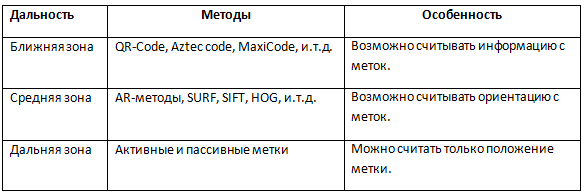

Working range

Before describing the methods themselves, let us introduce a brief classification so that they can be discussed in groups.

The near zone is the range in which the resolution is high enough to see the code itself (for example, a barcode). In the middle zone, the resolution is sufficient to resolve the outer contour of the marker. In the far zone, the marker is comparable in size to a single pixel. Far-zone methods can also be used in the near and middle zones.
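Which zone a marker falls into is determined by how many pixels it covers, which under the usual pinhole camera model is f_px * S / Z (focal length in pixels times marker size, divided by distance). A minimal sketch assuming that model; the pixel thresholds in `working_zone` and the 21-module QR example are illustrative assumptions, not values from the article:

```python
def projected_size_px(marker_size_m: float, distance_m: float, focal_px: float) -> float:
    """Pinhole camera model: size on the sensor in pixels = f_px * S / Z."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return focal_px * marker_size_m / distance_m


def working_zone(marker_px: float, modules_across: int,
                 min_module_px: float = 3.0, min_contour_px: float = 20.0) -> str:
    """Classify the working zone; both thresholds are hypothetical defaults."""
    if marker_px / modules_across >= min_module_px:
        return "near"    # every module of the code is resolved, so it can be read
    if marker_px >= min_contour_px:
        return "middle"  # only the outer contour of the marker is resolved
    return "far"         # the marker is little more than a point


# A 20 cm QR code (21 modules across) seen by a camera with f = 1000 px.
for distance in (2.0, 8.0, 100.0):
    size = projected_size_px(0.2, distance, 1000.0)
    print(f"{distance:>5} m: {size:6.1f} px -> {working_zone(size, 21)}")
```

With these numbers the code spans about 100 px at 2 m (near zone), 25 px at 8 m (middle zone) and 2 px at 100 m (far zone).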

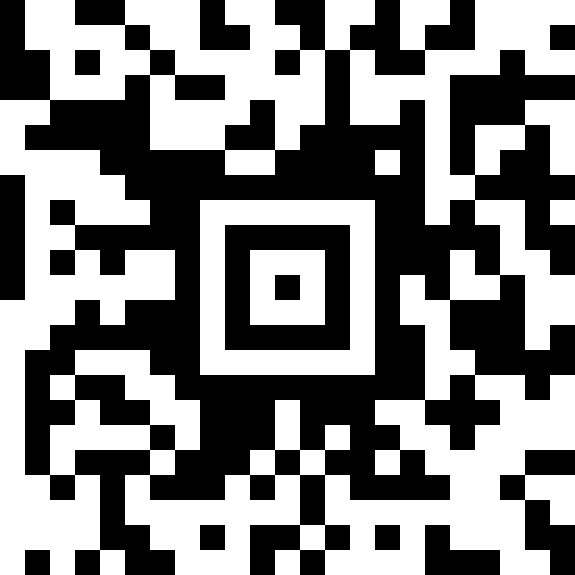

Near-zone methods

These methods not only detect a marker but also read a fairly large amount of information from it. Their main drawback is that they need high resolution, which means the marker has to appear large in the image. Their advantage is the automatic reading of a large amount of information, which is sometimes convenient (for example, when sorting parcels).

This covers the whole family of barcodes: QR code, Aztec code, MaxiCode, etc. I will not compare them here; a lot has been written on the topic, including on Wikipedia.

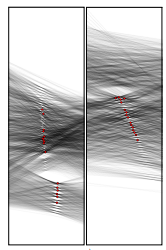

Detection methods

The first method finds the dominant directions with the Hough transform.

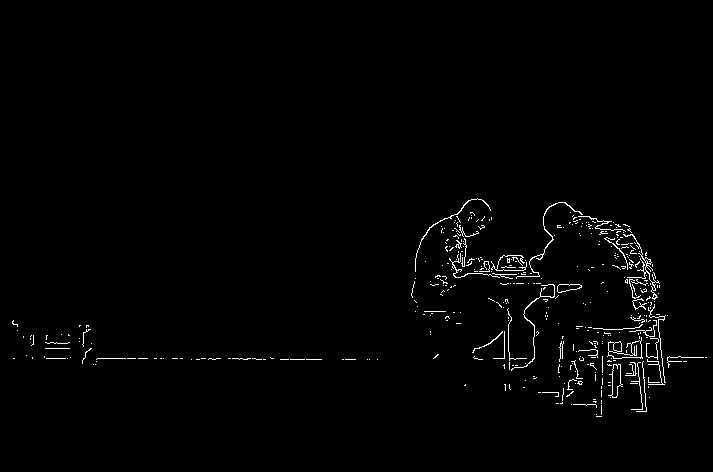

First, all edges in the image are extracted and a histogram of edge directions is built. Its two main maxima give the spatial orientation of the marker.
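A minimal sketch of the edge-direction histogram, assuming a grayscale image given as a list of rows. The 3x3 Sobel operator, the 36 bins and the 30° minimum separation between the two peaks are my assumptions; the article does not name a specific edge detector:

```python
import math


def direction_histogram(img, bins=36, min_mag=1e-6):
    """Magnitude-weighted histogram of gradient directions folded into [0, 180) degrees.

    Edge polarity is ignored, so dark-to-light and light-to-dark edges with the
    same orientation vote for the same bin.
    """
    h, w = len(img), len(img[0])
    hist = [0.0] * bins
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # 3x3 Sobel derivatives in x and y
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            mag = math.hypot(gx, gy)
            if mag <= min_mag:
                continue
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle * bins / 180.0) % bins] += mag
    return hist


def two_main_directions(hist, min_sep_deg=30.0):
    """Centres (degrees) of the strongest bin and of the strongest bin that lies
    at least min_sep_deg away from it on the circle of directions."""
    bins = len(hist)
    width = 180.0 / bins
    first = max(range(bins), key=lambda i: hist[i])

    def separation(i):
        d = abs(i - first) * width
        return min(d, 180.0 - d)

    second = max((i for i in range(bins) if separation(i) >= min_sep_deg),
                 key=lambda i: hist[i])
    return (first + 0.5) * width, (second + 0.5) * width
```

For an axis-aligned square the two directions come out near 0° and 90°; for a rotated code both shift by the rotation angle.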

The Hough transform is then computed in the neighbourhood of each of the two maxima. In it, the 16 strongest maxima are selected, and from them the refined orientation of the QR code grid is found, as well as the row size (the spacing between grid lines).
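A sketch of this windowed Hough step, assuming edge points given as (x, y) pairs and lines written as rho = x·cos θ + y·sin θ. The window width, the step sizes, the simple rho-based suppression of duplicate peaks, and reading the "row size" as the median spacing of neighbouring lines are all assumptions; for a QR code `count` would be 16, as in the text:

```python
import math
from collections import Counter
from statistics import median


def windowed_hough_peaks(points, theta0_deg, count, half_window_deg=3.0,
                         step_deg=0.5, rho_step=0.25, min_gap_px=2.0):
    """Hough line transform restricted to normal angles theta0 +/- half_window_deg.

    Each point (x, y) votes for cells (theta, rho) with rho = x*cos(theta) + y*sin(theta).
    Returns up to `count` peaks as (theta_deg, rho_px, votes), strongest first; a cell
    whose rho lies within min_gap_px of an accepted peak is treated as the same line.
    """
    n_steps = int(round(2 * half_window_deg / step_deg))
    acc = Counter()
    for k in range(n_steps + 1):
        theta = math.radians(theta0_deg - half_window_deg + k * step_deg)
        c, s = math.cos(theta), math.sin(theta)
        for x, y in points:
            acc[(k, round((x * c + y * s) / rho_step))] += 1

    peaks = []
    for (k, r), votes in sorted(acc.items(), key=lambda item: -item[1]):
        rho = r * rho_step
        if all(abs(rho - p_rho) >= min_gap_px for _, p_rho, _ in peaks):
            peaks.append((theta0_deg - half_window_deg + k * step_deg, rho, votes))
            if len(peaks) == count:
                break
    return peaks


def refine_grid(peaks):
    """Vote-weighted mean angle of the peaks and median spacing of neighbouring lines."""
    total = sum(votes for _, _, votes in peaks)
    theta = sum(t * votes for t, _, votes in peaks) / total
    rhos = sorted(rho for _, rho, _ in peaks)
    pitch = median([b - a for a, b in zip(rhos, rhos[1:])])
    return theta, pitch
```

Restricting the angles to a narrow window around each histogram maximum is what keeps this cheap compared with a full Hough transform over 180°.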

Then the QR code mask is fitted to the image and the information is read accurately.
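Once the origin, orientation and module size are known, fitting the mask amounts to sampling the image at the centre of every module. A minimal sketch, assuming nearest-pixel sampling, a fixed threshold of 128 and a grid without perspective distortion (a real reader would also correct perspective):

```python
import math


def sample_grid(img, origin, module_px, angle_deg, n, threshold=128):
    """Read an n x n module grid from a grayscale image: 1 = dark module, 0 = light.

    origin is the (x, y) pixel position of the grid corner; the grid axes are
    rotated by angle_deg relative to the image axes.
    """
    x0, y0 = origin
    c, s = math.cos(math.radians(angle_deg)), math.sin(math.radians(angle_deg))
    bits = []
    for i in range(n):                      # module row
        row = []
        for j in range(n):                  # module column
            # centre of module (i, j) in grid coordinates, then rotated into the image
            u, v = (j + 0.5) * module_px, (i + 0.5) * module_px
            x = x0 + u * c - v * s
            y = y0 + u * s + v * c
            pixel = img[int(round(y))][int(round(x))]
            row.append(1 if pixel < threshold else 0)
        bits.append(row)
    return bits
```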

The second method, common on the Internet, is a search with a Haar cascade. It is described in detail in many places, so I will not dwell on it. A trained cascade searches for a corner mark (finder pattern) of the QR code. Once a candidate is found, the symmetry axes that determine the orientation of the code itself are searched for.
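The cascade itself has to be trained (OpenCV wraps training and detection in `cv2.CascadeClassifier`), but every stage evaluates Haar-like features, which are cheap thanks to the integral image. A minimal sketch of that building block only; the two-rectangle feature shown is one of several standard shapes, and no trained thresholds are included:

```python
def integral_image(img):
    """ii[y][x] = sum of img over rows < y and columns < x (zero-padded by one row/column)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle with top-left corner (x, y), in four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Horizontal two-rectangle Haar-like feature: left half minus right half."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)
```

Because each feature costs a constant number of lookups regardless of its size, a cascade can test thousands of them per window and reject most windows after the first few stages.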

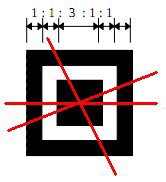

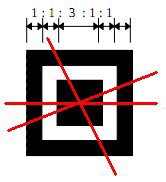

The third method takes a set of test lines and searches along them for places where black and white alternate in the ratio 1:1:3:1:1. This ratio holds for any rotation of the marker (for lines passing through the centre of the pattern).

Places where such a test segment is found are treated as candidate locations of the QR code finder patterns.
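A minimal sketch of the scan along one test line, assuming a grayscale row, a fixed threshold of 128 and a tolerance of half a module per run (both are assumptions; in practice candidates from several lines are then cross-checked):

```python
def run_lengths(row, threshold=128):
    """Run-length encode a scan line as [[is_dark, length], ...]; colours alternate."""
    runs = []
    for value in row:
        dark = value < threshold
        if runs and runs[-1][0] == dark:
            runs[-1][1] += 1
        else:
            runs.append([dark, 1])
    return runs


def finder_candidates(row, threshold=128, tolerance=0.5):
    """Pixel ranges (start, end) where dark/light/dark/light/dark runs follow
    the 1:1:3:1:1 ratio, each run within `tolerance` modules of its ideal length."""
    runs = run_lengths(row, threshold)
    starts, pos = [], 0
    for _, length in runs:
        starts.append(pos)
        pos += length
    hits = []
    for i in range(len(runs) - 4):
        if not runs[i][0]:                 # the pattern must start on a dark run
            continue
        lengths = [length for _, length in runs[i:i + 5]]
        module = sum(lengths) / 7.0        # 1 + 1 + 3 + 1 + 1 = 7 modules in total
        if all(abs(length - ideal * module) <= tolerance * module
               for length, ideal in zip(lengths, (1, 1, 3, 1, 1))):
            hits.append((starts[i], starts[i] + sum(lengths)))
    return hits
```

Because the test uses only ratios of run lengths, it is independent of scale, which is what lets one check work for any distance and any line angle through the pattern centre.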

These three methods have many variations. There are other methods as well (including the ones discussed below), but they are all less common. The main purpose of barcode detection is to read the information. If the information is poorly visible and cannot be read, nothing more is expected of the method, even if the QR code itself is clearly visible. Therefore methods designed for QR codes usually do not locate codes that appear small, even when they are visible.

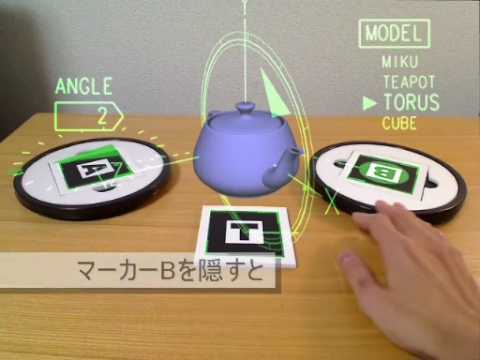

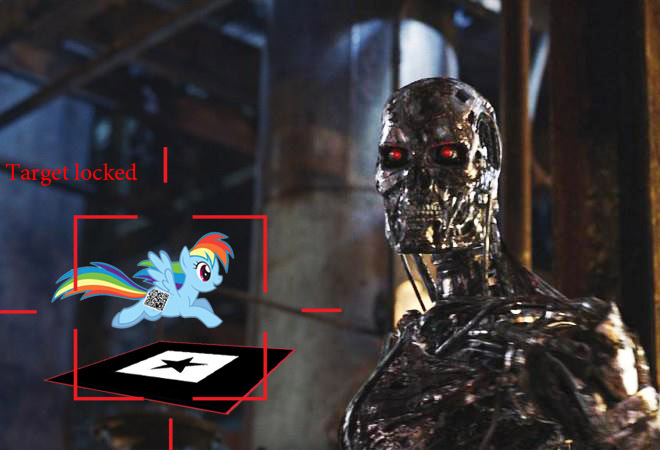

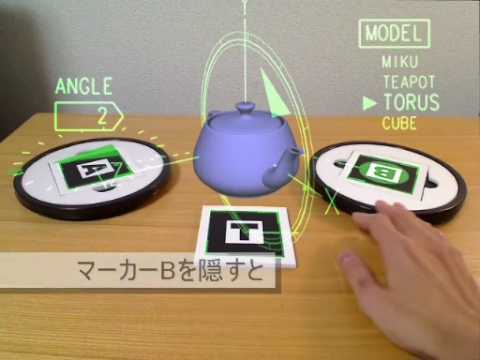

Middle-zone methods

Middle-zone methods are those where no information needs to be extracted from the marker: it only has to be detected and its orientation estimated. Recently the most popular task of this kind is AR (augmented reality). I think everyone has heard of it by now, but just in case, here is an example of how the technology works:

The example above was made with ARToolKit, the best-known AR framework, available under the GNU GPL. It detects black squares with one or two characters in the centre. For example:

The framework finds connected regions of dark colour and performs contour analysis on them. A brief description of how it works has already been published on Habr, so I will not repeat it.
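A simplified sketch of the first stage, assuming a fixed threshold: dark pixels are grouped into 4-connected regions, and only regions with a large, roughly square bounding box are kept. ARToolKit itself fits a four-sided polygon to the contour of each region, which also handles perspective; the bounding-box test and its limits here are a cruder stand-in:

```python
from collections import deque


def dark_components(img, threshold=128):
    """4-connected components of dark pixels, found by breadth-first search."""
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]
    comps = []
    for sy in range(h):
        for sx in range(w):
            if seen[sy][sx] or img[sy][sx] >= threshold:
                continue
            seen[sy][sx] = True
            queue, pixels = deque([(sx, sy)]), []
            while queue:
                x, y = queue.popleft()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < w and 0 <= ny < h and not seen[ny][nx]
                            and img[ny][nx] < threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            comps.append(pixels)
    return comps


def square_candidates(comps, min_side=5, max_aspect=1.3):
    """Bounding boxes (x, y, w, h) of components that are large enough and roughly square."""
    boxes = []
    for pixels in comps:
        xs = [x for x, _ in pixels]
        ys = [y for _, y in pixels]
        bw, bh = max(xs) - min(xs) + 1, max(ys) - min(ys) + 1
        if min(bw, bh) >= min_side and max(bw, bh) / min(bw, bh) <= max_aspect:
            boxes.append((min(xs), min(ys), bw, bh))
    return boxes
```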

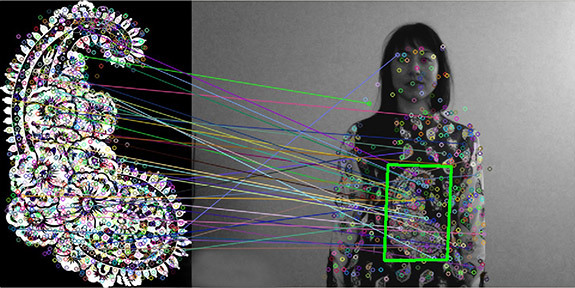

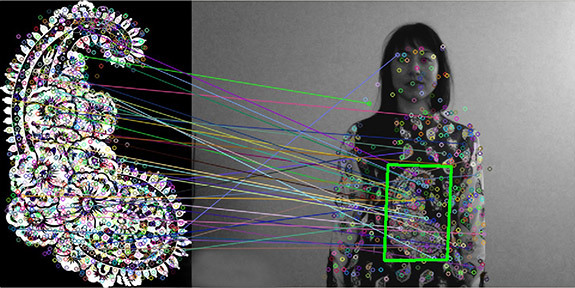

The second simplest and most popular way to find an AR marker is to use SURF/SIFT. In this case the template is not a square with a marker but an arbitrary image.

There was also a detailed article on Habr about using SURF for AR markers.
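With SURF/SIFT, the template and the frame are compared through their keypoint descriptors. A minimal sketch of the matching step with Lowe's ratio test, using plain tuples as stand-ins for real descriptors (the 0.75 threshold is a common choice, assumed here). The surviving pairs would then go to a robust homography fit, for example OpenCV's `cv2.findHomography` with `cv2.RANSAC`, to recover the position and orientation of the marker:

```python
import math


def match_descriptors(query, train, ratio=0.75):
    """Nearest-neighbour matching with Lowe's ratio test.

    Returns (query_index, train_index) pairs whose nearest neighbour is clearly
    closer than the second nearest; ambiguous matches are discarded.
    """
    matches = []
    for qi, q in enumerate(query):
        dists = sorted((math.dist(q, t), ti) for ti, t in enumerate(train))
        if len(dists) >= 2 and dists[0][0] < ratio * dists[1][0]:
            matches.append((qi, dists[0][1]))
    return matches
```

Rejecting ambiguous matches matters for markers with repetitive texture, where many keypoints look alike and would otherwise corrupt the homography.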

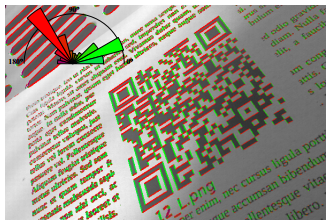

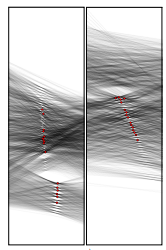

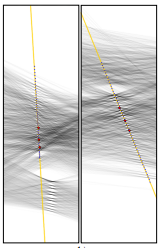

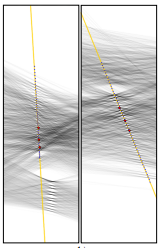

The drawback of both methods is that they need sufficient resolution. In addition, both algorithms depend heavily on an a priori estimate of how large the marker will appear on the screen. For example, edge detectors like the one used in the first method, when tuned to different object sizes, produce fundamentally different results:

Of course, you can tune a feature detector, whether SURF, SIFT, Haar, HOG or something else, to a fairly small image (while still being able to detect a larger version of it), but then it will run slowly, and the orientation will still not be recovered.
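The usual way to do without an a priori size estimate is to run a fixed-size detector over an image pyramid, and this is also where the extra running time comes from. A minimal sketch with 2x2 averaging between levels (an assumption; Gaussian smoothing before subsampling is more common):

```python
def downsample(img):
    """Halve the resolution by averaging 2x2 blocks (an odd last row/column is dropped)."""
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
             for x in range(0, len(img[0]) - 1, 2)]
            for y in range(0, len(img) - 1, 2)]


def pyramid(img, min_size=8):
    """Successively halved copies of img, stopping before a side drops below min_size."""
    levels = [img]
    while min(len(levels[-1]), len(levels[-1][0])) // 2 >= min_size:
        levels.append(downsample(levels[-1]))
    return levels
```

With a factor of 2 between levels the pyramid holds only about 4/3 as many pixels as the original image; detectors that step the scale more finely, such as OpenCV's `detectMultiScale` with its default `scaleFactor` of 1.1, process many more levels.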

Far-zone methods

In my opinion, the methods above are as old as the hills and not particularly interesting. There are thousands of articles and millions of implementations for each of them. Methods for detection in the far zone are less well known, there are fewer open-source solutions, and you almost always have to build something yourself.

The classic far-zone task is motion capture, although this is only the most popular application. Markers can help robots localize themselves on the ground, solve AR problems (for example, drawing a house onto the terrain), track objects, etc. In a sense, even aligning to the starry sky can be considered a marker-detection problem.

First come the optical far-zone methods, but the other methods should not be forgotten either; they are covered closer to the end.

Optical detection in a video stream

The simplest and cheapest method is detection in a video stream. What marker can be seen from any distance? Only a bright point, and the whole method is built on this. There are two ways to create such points: a light bulb, or a reflector illuminated by a light.

Most of the algorithmic work in such systems is, of course, point tracking and further processing of the resulting point field. The detection algorithms themselves are not complex: they usually come down to finding local maxima. Some examples of how this can be done are in these articles (1, 2, 3).
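A minimal sketch of such a detector, assuming a grayscale frame given as a list of rows and a dark background: a pixel is accepted if it exceeds a threshold and is strictly brighter than its eight neighbours, and its position is refined to sub-pixel accuracy with the intensity-weighted centroid of its 3x3 neighbourhood:

```python
def bright_points(img, threshold):
    """Sub-pixel (x, y) positions of bright local maxima in a grayscale frame."""
    h, w = len(img), len(img[0])
    points = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            v = img[y][x]
            if v < threshold:
                continue
            neighbours = [img[y + dy][x + dx]
                          for dy in (-1, 0, 1) for dx in (-1, 0, 1) if dy or dx]
            if all(v > n for n in neighbours):
                # intensity-weighted centroid of the 3x3 window around the maximum
                total = cx = cy = 0.0
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        p = img[y + dy][x + dx]
                        total += p
                        cx += p * (x + dx)
                        cy += p * (y + dy)
                points.append((cx / total, cy / total))
    return points
```

Strict maxima miss saturated spots whose top is a flat plateau of equal pixels; for those, labelling the bright blob and taking its centroid is the safer choice.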

It is worth noting only that optical methods work well indoors and at night. In daylight, a light bulb can get lost among thousands of other highlights from the sun (expensive systems that capture thousands of frames per second can use temporal coding of the signal to separate it).
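Temporal coding can be illustrated as follows: the lamp blinks with a known on/off code, and the brightness of each candidate pixel over the same frames is correlated with that code. A minimal sketch using the Pearson correlation, assuming the code and the frames are already synchronized and the code contains both on and off frames:

```python
import math


def code_correlation(samples, code):
    """Pearson correlation between a pixel's brightness over len(code) frames
    and a known blink code (1 = lamp on, 0 = lamp off)."""
    n = len(code)
    if len(samples) != n:
        raise ValueError("need one brightness sample per code frame")
    chips = [1.0 if c else 0.0 for c in code]
    ms, mc = sum(samples) / n, sum(chips) / n
    ds = [s - ms for s in samples]
    dc = [c - mc for c in chips]
    num = sum(a * b for a, b in zip(ds, dc))
    den = math.sqrt(sum(a * a for a in ds) * sum(b * b for b in dc))
    return num / den if den > 0 else 0.0
```

A sun glint that is steady or flickers randomly gives a correlation near zero, while the coded lamp gives a value near one, even when both are equally bright.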

Radio tags

Now for markers that do not use optics. Let us start with the radio band.

This includes everything: planet-scale systems such as GPS and GLONASS are, in the end, the same kind of tags. There are also more local methods, with coverage roughly the size of a city. A simple beacon can mark the presence of an object in the vicinity of the device, and detecting it is much simpler and more reliable than detecting any optical marker.
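One common way to turn a beacon into a proximity marker is to threshold its received signal strength. A minimal sketch using the log-distance path-loss model; the -59 dBm reference at 1 m, the exponent of 2 (free space) and the 3 m radius are assumptions that have to be calibrated for real hardware, and indoors multipath makes such estimates rough:

```python
def rssi_to_distance(rssi_dbm, rssi_at_1m_dbm=-59.0, path_loss_exponent=2.0):
    """Invert the log-distance model RSSI = P_1m - 10 * n * log10(d) for d in metres."""
    return 10 ** ((rssi_at_1m_dbm - rssi_dbm) / (10.0 * path_loss_exponent))


def is_near(rssi_dbm, radius_m=3.0, rssi_at_1m_dbm=-59.0, path_loss_exponent=2.0):
    """Presence test: is the estimated distance to the beacon within radius_m?"""
    return rssi_to_distance(rssi_dbm, rssi_at_1m_dbm, path_loss_exponent) <= radius_m
```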

Acoustic tags

Surprisingly, there is very little information about acoustic tags. They are easy to make and immune to light flare. The accuracy is, of course, not very high and depends on the base (the distance between the receivers). They need the same hardware as the well-known ultrasonic distance sensors, which cost about $2 each:

The base is formed by several receivers, and the transmitter is the tag. A couple of times I have come across tasks where I wanted to use them, but in the end we took the simpler route of optical markers.
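With a base of receivers and a transmitting tag, the position follows from the times of flight. A minimal 2-D sketch, assuming three non-collinear receivers synchronized with the transmitter (so each time is one-way) and a speed of sound of 343 m/s (air at about 20 °C):

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 °C (assumed)


def tof_to_distance(time_s):
    """One-way time of flight to distance in metres."""
    return SPEED_OF_SOUND * time_s


def trilaterate_2d(receivers, distances):
    """Transmitter position from its distances to three receivers.

    Subtracting the first circle equation (x - xi)^2 + (y - yi)^2 = di^2 from the
    other two leaves a 2x2 linear system, solved here by Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = receivers
    d1, d2, d3 = distances
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1 ** 2 - d2 ** 2 + x2 ** 2 - x1 ** 2 + y2 ** 2 - y1 ** 2
    b2 = d1 ** 2 - d3 ** 2 + x3 ** 2 - x1 ** 2 + y3 ** 2 - y1 ** 2
    det = a11 * a22 - a12 * a21
    if math.isclose(det, 0.0, abs_tol=1e-12):
        raise ValueError("receivers must not be collinear")
    return (b1 * a22 - a12 * b2) / det, (a11 * b2 - b1 * a21) / det
```

The equations also show why accuracy depends on the base: the determinant shrinks with the square of the base, while range errors enter the right-hand side multiplied by the distance, so small timing errors are amplified when the base is short compared with the range.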

It seems I have not forgotten any of the widely used methods (obviously there are many variations of codes, AR markers and motion capture methods). There are also exotic methods (corner reflectors for lidars), but they are rare. If anyone knows of something else, let me know and I will try to add it.

The article describes the main methods of capturing tags, their capabilities, limits of applicability.

Work range

First of all, before describing the methods themselves, we introduce their brief classification in order to describe them further into groups.

')

The near zone is the zone in which detection is possible with high resolution, sufficient to see the bar code. Middle zone - where the resolution is sufficient for the spatial separation of the outer contour of the label. Far zone - where the label is comparable to the pixel size. Long-range methods can be used in the near and middle zone.

Near field methods

These methods allow not only to detect a label, but also to detect a sufficiently large amount of information from this label. A significant disadvantage of such methods is that high resolution is required, which means that the label should be large. Plus - automatic reading of a large amount of information, sometimes it is convenient (for example, when sorting parcels).

The whole range of bar codes , QR code , Aztec code, MaxiCode, etc. I will not make a comparison here, there is a lot written about this topic, including on Wikipedia.

Selection methods

The first method is the selection of the main directions of the Hough transformation.

First, all borders are highlighted on the image. For the image, a histogram of border directions is built. The two main maxima will determine the spatial orientation of the label.

In the vicinity of both maxima, the Hough transformation is constructed. In this transformation, 16 main maxima are distinguished, along which the refined orientation of the pattern of the QR code is found, as well as the size of the string.

Then the mask of the QR code is tightened and the information is accurately read.

The second method common on the Internet is searching through the Haar cascade. The method is described in detail in many places, so I will not dwell on it in detail. A trained cascade searches for a corner mark of a QR code. After finding a candidate, symmetry axes are searched for that determine the orientation of the code itself.

The third method . A set of test lines is taken, for which the places where the alternation of black and white occurs in the ratio (1: 1: 3: 1: 1) are searched. This relationship holds true for any rotation of the label.

Places where a test segment is selected are considered as candidates for the location of the QR-code support characters.

These three methods have many variations. There are other methods (including, you can use the methods that will be discussed below), but they are all less common. The main purpose of barcode detection is to read information from them. If the information is poorly visible and may not be considered - the method no longer requires operability from the method, even if the QR code itself is clearly visible. Therefore, all methods that are designed for QR codes usually do not distinguish the position of a small code size, even if it is visible.

Middle zone methods

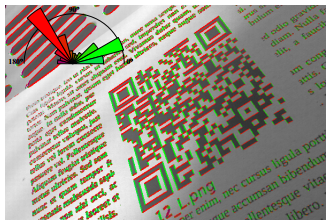

The methods of the middle zone are those methods where it is not necessary to extract information from the sample, but simply to detect it and search for its orientation. Recently, the most popular task of such a plan is the task of AR (augmented reality). I think that everyone has heard about it already. But just in case, here is an example of how this technology works:

The above example was made using the most famous AR framework ARToolKit , available under the GNU GPL. It detects black squares with 1-2 characters in the center. For example:

The framework detects related areas of dark color and performs a contour analysis for them. A brief description of the principle of his work has already been on Habré, I will not repeat it.

The second most simple and popular way to search for an AR tag is to use SURF / SIFT . In this case, the template is not a rectangle with a label, but any image.

About using SURF as an AR-marker on Habré was also a detailed article .

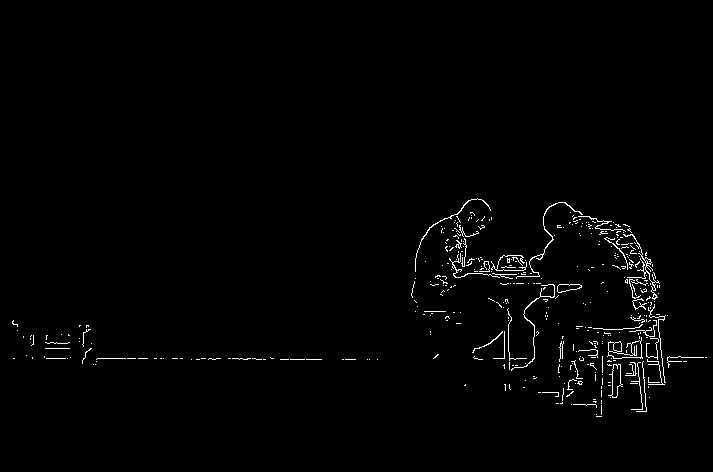

The downside of both methods is the need for sufficient resolution. In addition, for both algorithms, an a priori estimate of what size the label will be on the screen is important enough. For example, border detectors used in the first method and tuned to different object sizes will produce fundamentally different results:

Of course, you can configure the detector of features, be it SURF, SIFT, Haar, HOG, or something else, you can use a fairly small image (while still having the possibility of detecting a large version of it), but then it will work for a long time and the orientation will not be restored anyway .

Far-field methods

In my opinion, the above methods are as old as the world and do not represent anything particularly interesting. For each of them there are thousands of articles, millions of implementations. The methods that allow detection in the far-field zone are less well known, there are fewer OpenSource solutions, you always have to do something yourself.

The classic task of the far zone can be called motion capture, although this is only the most popular of the applications. Labels can help robots when positioning themselves on the ground, solve AR problems (for example, to draw a house on the terrain), track objects, etc. In a sense, even the task of binding to the starry sky can be considered the task of detecting labels.

First of all, the far-field methods are also optical, but one should not forget about other methods, which are mentioned closer to the end.

Optical detection in video stream

The easiest and cheapest method is detection in a video stream. What mark can be seen from any distance? Only bright point. On this is built the whole method. There are two ways to create such points: a light bulb and a reflector on which to shine a light bulb.

The main part of the algorithms in such systems is, of course, point control algorithms and algorithms for the further processing of the resulting point field. Algorithms of selection of complexity do not represent. They are usually reduced to the detection of local maxima. Some examples of how this can be done are in these articles ( 1 , 2 , 3 ).

It is worth noting only that the optical algorithms are good in rooms and at night. During the daytime, a light bulb in the sun can be lost in thousands of other highlights (in expensive systems that shoot thousands of frames per second, you can use temporal coding of the signal to separate it).

Radar tags

Let's go to the labels that do not use optics. Let's start with the radio band.

This includes everything: systems with a planetary swing, GPS, Glonass - in the end, these are the same tags. There are more local methods , with an area of coverage roughly in the city. A simple beacon may be a mark of the presence of an object in the vicinity of the device. At the same time, its detection will be much simpler and more reliable than the detection of any of the optical labels.

Sound methods

Surprisingly, there is very little information about sound tags. They are easy to make, they are resistant to flare. Of course, the accuracy is not very high, and will depend on the base between the receivers. The kit for them needs the same as for all known distance sensors, which cost $ 2 each:

The base is made of several receivers, and the transmitter is a label. A couple of times I came across tasks where I would like to use them, but in the end we followed the simpler path of optical labels.

It seems that from widely used methods I did not forget anything (it is clear that there are many variations for codes, AR tags and motion capture methods). There are also exotic methods (corner reflectors for lidars), but they are rare. If suddenly someone else comes up with something else, write, try to insert.

Source: https://habr.com/ru/post/224339/
