The "Laboratory Work" experiment, or how we built a robot that uses a smartphone

A twist on the old saying goes: it is better to see once than to read a hundred times. We talk a lot about the new features and benefits offered by the YotaPhone's second screen. But even the clearest description cannot compare with the chance to see for yourself what working with the smartphone's second screen is like.

So we decided to build a robot that would help demonstrate the idea. And not just any robot, but one specially trained to operate a YotaPhone. We built it together with the great guys from Look At Media and Hello Computer.


"Laboratory work"

We called this project "Laboratory Work". Its essence is to let anyone remotely test some of the YotaPhone's functions with the help of a specially built robot. The real, of

In short, controlling the robot worked like this: on the website you entered an SMS text and a phone number, then watched the robot dial the number and send the message for you. If the text contained certain marker words, a picture created for that word appeared on the second screen.
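The marker-word lookup can be sketched roughly as follows. This is only an illustration: the actual marker words and pictures are not listed in the article, so the `MARKER_IMAGES` table, its entries, and the `pickImage` function are all hypothetical.

```javascript
// Hypothetical marker table: the real words and image names are not given in the article.
const MARKER_IMAGES = new Map([
  ["love", "heart.png"],
  ["hello", "wave.png"],
]);

// Return the image for the first marker word found in the SMS text, or null if none matches.
function pickImage(smsText) {
  // Split on anything that is not a letter or digit, so punctuation does not hide a marker.
  const words = smsText.toLowerCase().split(/[^\p{L}\p{N}]+/u).filter(Boolean);
  for (const word of words) {
    if (MARKER_IMAGES.has(word)) return MARKER_IMAGES.get(word);
  }
  return null;
}
```

With this table, `pickImage("I LOVE you!")` would select `heart.png`, while a text without marker words returns `null` and no special picture is shown.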

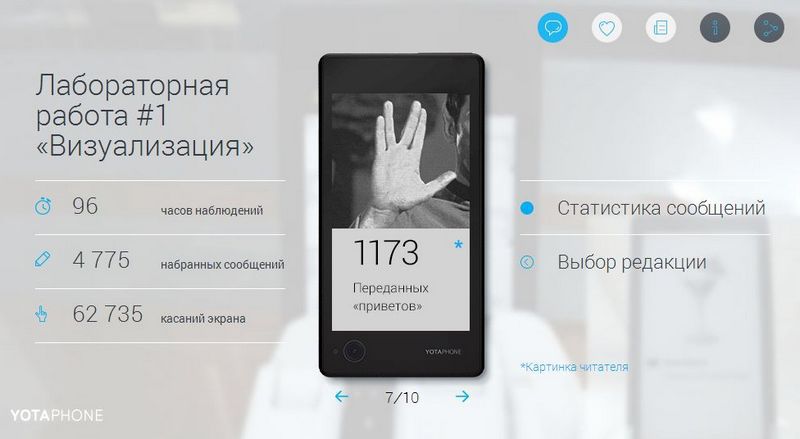

While the robot worked as an SMS sender, our users sent 4,775 messages through it, of which 218 were declarations of love. There were also 65 hacking attempts, by the way.

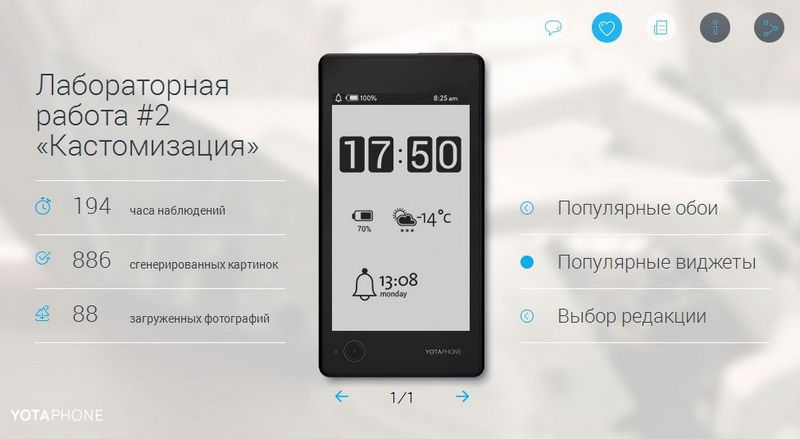

Then came the second stage of testing: users could remotely upload wallpaper to the second screen of the robot's YotaPhone and choose various widgets. The robot “assembled” a set of wallpapers and widgets on the main screen and then sent it to the second screen with the signature put2back gesture. During this stage, users generated 886 images and uploaded 88 photos.

The third stage of remote YotaPhone testing with the robot, open to everyone, was completed recently. This time we ran a stress test: how long will the YotaPhone last on a single battery charge while a book is read on the second screen? The book was "War and Peace", and the "reading" speed was about 3 pages per minute. Specially for the third stage, we built a hoop around the YotaPhone that carries a backlight for the second screen; it is connected to an additional Arduino. Anyone could not only watch “War and Peace” being read around the clock, but also change the light intensity, simulating different times of day.

This stage comprised three tests: three times, a fully charged smartphone ran until the battery was completely discharged. Here are the results of the third stage:

History of creation

At first we decided to take the path of least resistance and studied existing open-source robotic hand projects, including this one. Their main drawback was that the fingers were driven by tensioned cables, which would not give us the positioning accuracy we needed. We also liked this project, but it did not meet the key requirement, the presence of a hand, so we had to give it up too. In the end, we decided to design our own robot.

Prototype

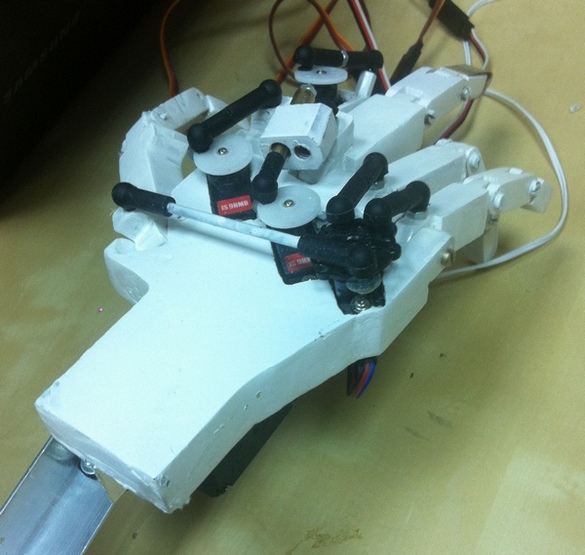

We decided to make the first rough version out of epoxy resin. The molded parts were cut to shape with a knife and then painted white. At this point we wanted to check that the design worked, work out where and how to mount the servomotors, and estimate the range of finger movement.

To control all the servomotors, we decided to use an Arduino UNO. After testing the first version of the prototype, we came to the following conclusions:

• To improve positioning accuracy, remove the servos from the phalanges.

• Each finger should be controlled by only one servo.

• For the swipe gesture, the whole arm needs to move up and down.

Working samples

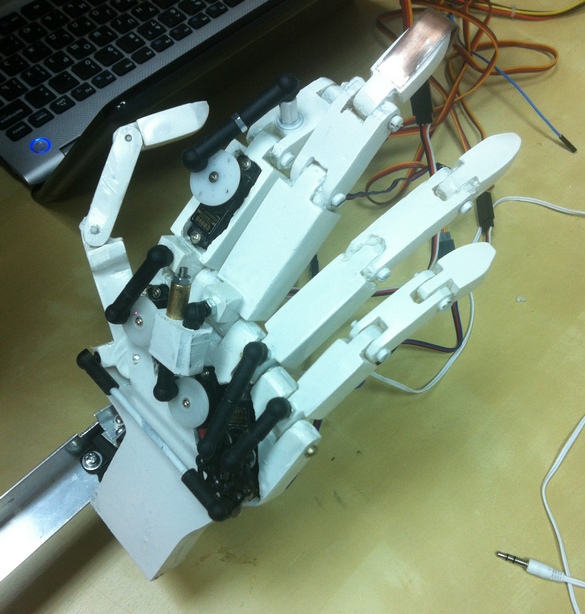

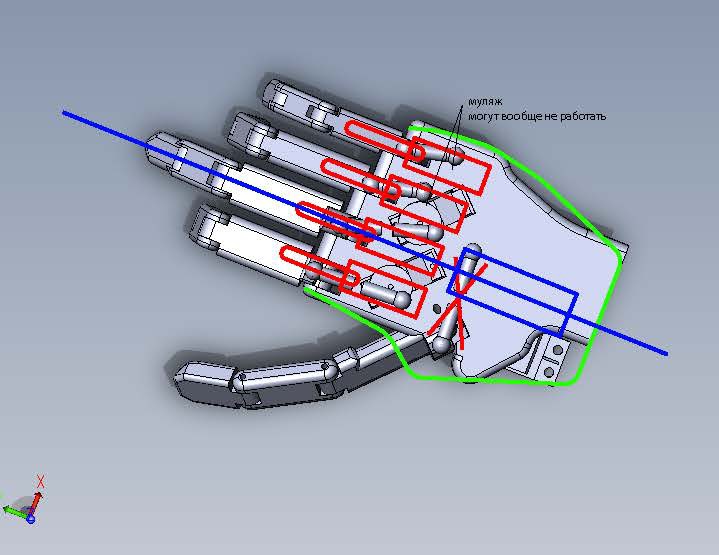

In the SolidWorks CAD package, we created 3D models of the 15 parts that make up the hand. After 3D-printing and assembling them, we tested the interaction with the touch screen.

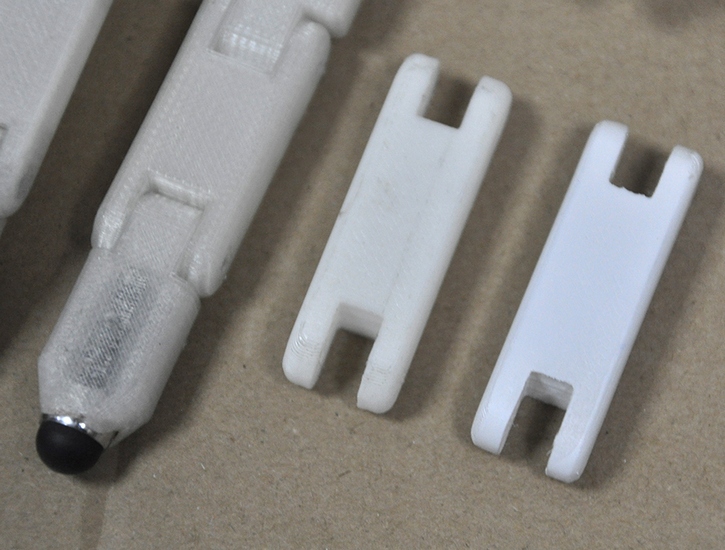

It turned out that the capacitive screen does not respond to the plastic the hand is made of. We solved the problem by fitting the fingers with tips cut from large-diameter styluses.

A rotary motor and finger servos were installed on the arm. Their accuracy was not sufficient for reliable positioning: the fingers missed the virtual buttons on the smartphone screen. As a result, we had to use expensive servos made for model aircraft: FUTABA S1353 for the fingers and BLS-352 for turning the hand. Besides positioning accuracy, this also solved the problem of reliability, because the hand had to work continuously for three weeks.

With that problem solved, we ran into a new one. The hand itself is fixed on the shaft of a stepper motor, which is mounted on a platform that moves up and down. The motor turns the arm around an axis perpendicular to the plane of the smartphone. During turns there were strong vibrations, because the structure was not rigid enough to absorb the substantial moment of inertia involved. This again reduced finger positioning accuracy. To reduce the vibrations, we tried various gaskets in the joints, but this proved ineffective. We had to artificially limit the speed of the rotary motor and add short pauses after each turn so that the residual vibrations had time to die out.
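The speed limit plus settling pause can be illustrated with a small timing sketch. The numbers are assumptions chosen for the example: the article does not state the actual speed cap or pause length, and `planTurn` is a hypothetical helper, not part of the project's code.

```javascript
// Assumed values: the article does not give the real speed limit or pause duration.
const MAX_DEG_PER_SEC = 90; // artificially reduced rotation speed
const SETTLE_MS = 300;      // pause after each turn so residual vibrations die out

// Plan one turn: how long the rotation takes at the capped speed,
// and how long to wait before the fingers may touch the screen.
function planTurn(fromDeg, toDeg) {
  const travelMs = (Math.abs(toDeg - fromDeg) / MAX_DEG_PER_SEC) * 1000;
  return { travelMs, settleMs: SETTLE_MS, totalMs: travelMs + SETTLE_MS };
}
```

Under these assumed values, a 45 degree turn takes 500 ms of rotation plus a 300 ms pause, so accuracy is bought with a slower robot overall.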

The rotary motor also caused an unexpected problem: it got very hot. We solved this by building an intermediate controller that cut power to the motor completely while it was idle.

To improve the robot's appearance, a second working sample was printed from a lighter plastic. Before assembly, the parts were additionally painted white to give the product an even more elegant look.

Stand

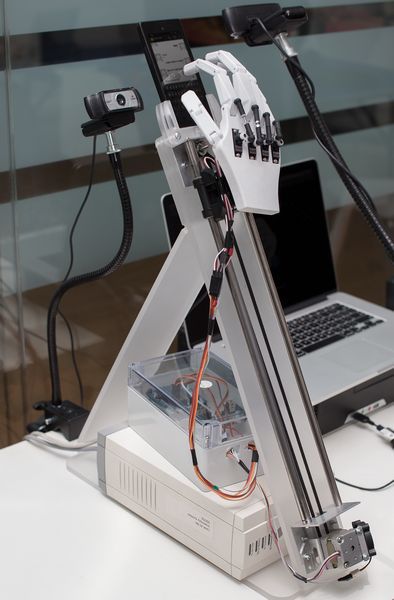

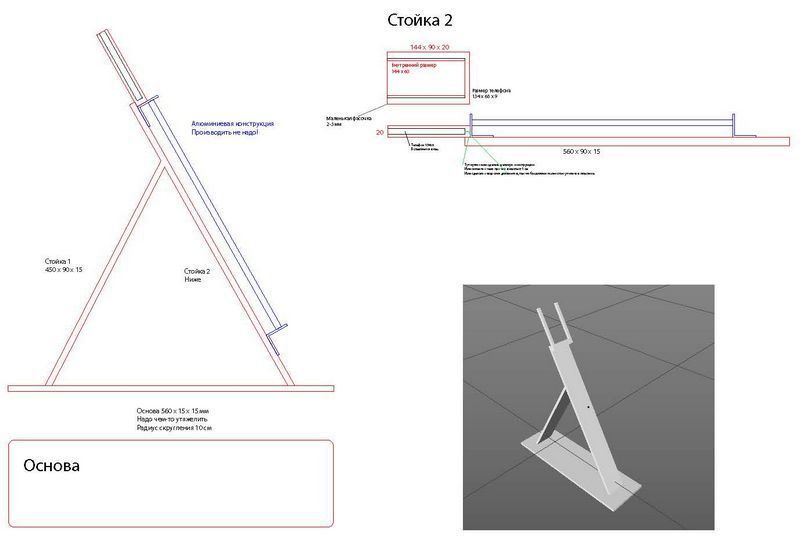

We decided to place the smartphone the robot works with at an incline, the way a person usually holds it, rather than vertically or horizontally. Accordingly, the stand was designed as an equilateral triangle, one side of which consists of two guides along which the platform with the arm moves.

The stand is made of white plastic. The platform with the hand is connected by a belt drive to a stepper motor, which moves the platform along the guides.

Software

After clearing all the electronic and mechanical obstacles, we moved on to debugging the software side of the project. For the client side, we wrote a small web application in JavaScript. Information from the client goes to our server, which runs Ruby 2.0.0, Rails 4, and MySQL. The server collects user requests and builds a queue of tasks for the robot. It sends this information to a local control application that we wrote in NodeJS. All data is transmitted in JSON format. The tasks are then converted into specific commands for the Arduino, to which the robot is directly connected. Communication with the Arduino goes through the voodootikigod/node-serialport library.
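The step from a JSON task to Arduino commands might look roughly like the sketch below. The task fields (`type`, `number`) and the `TAP …` command strings are invented for illustration, since the article does not describe the real task format or serial protocol; writing the strings to the serial port is left out.

```javascript
// Hypothetical task format and command protocol, for illustration only.
// Convert one JSON task from the server queue into a list of command strings
// that a control application could then write to the Arduino over serial.
function taskToCommands(taskJson) {
  const task = JSON.parse(taskJson);
  if (task.type !== "sms") {
    throw new Error(`Unsupported task type: ${task.type}`);
  }
  const commands = [];
  // One tap per digit of the phone number, then a tap on the send button.
  for (const digit of task.number) {
    commands.push(`TAP ${digit}`);
  }
  commands.push("TAP SEND");
  return commands;
}
```

For a task such as `{"type":"sms","number":"12"}` this yields `TAP 1`, `TAP 2`, `TAP SEND`. Keeping the conversion a pure function makes it possible to test the mapping without a robot attached.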

In addition, the control application receives feedback from a specially written client running on the smartphone itself. This client tracks the tap coordinates, and if a discrepancy is found, the control application performs a calibration.
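A minimal sketch of such a discrepancy check is shown below. The tolerance value, the coordinate objects, and the `calibrationOffset` function are assumptions made for this example; the article does not say how the real calibration is computed.

```javascript
// Assumed tolerance: the article does not state how large a miss triggers calibration.
const TOLERANCE_PX = 10;

// Compare where the robot meant to tap with where the phone registered the tap.
// Returns null when the tap is close enough, otherwise the correction to apply.
function calibrationOffset(expected, actual) {
  const dx = expected.x - actual.x;
  const dy = expected.y - actual.y;
  if (Math.hypot(dx, dy) <= TOLERANCE_PX) return null;
  return { dx, dy };
}
```

A tap registered 5 pixels from its target would be accepted here, while one registered 20 pixels to the right would produce a correction of `{ dx: -20, dy: 0 }`.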

The third function of the control application is recording video clips of each task being performed. For video we used Logitech C920 cameras mounted on flexible holders. During the first stage of the project, the control application received notifications about sent SMS messages from the client module on the smartphone. The video stream from the cameras was recorded with WireCast; the relevant fragment was then cut out of it and a link to it was sent to the user.

To save time, we decided not to build our own API for mass video streaming and used a ready-made solution from CDNvideo instead. However, because their API was not yet fully debugged, we had problems saving video clips for a while. In the end, the developer provided us with a new, fully working version of the API.

In total, we built two robots that took part directly in the public testing of the YotaPhone. The later one was slightly modified: we had to change where the rotary motor attaches to the arm so that the robot could perform the swipe gesture.

Conclusion

Working on this project was very exciting for us. The chance to solve a non-standard problem creatively, building a new and fairly universal device, watching the robot “live” its life thanks to users' interest: all of this is very close in spirit to our work on YotaPhone.

We would like to give everyone the chance to try for themselves what a smartphone with two screens is like: to feel the phone in your hand and judge the responsiveness of the electronic ink screen. Unfortunately, that is not possible. But we are sure that even remote, yet independent, interaction with the smartphone gives a better understanding of the second screen's advantages. We believe that this kind of interactive YotaPhone test is much more honest than slick commercials. What do you think? We would very much like to hear your opinion of this project.

Source: https://habr.com/ru/post/223543/
