Muggle Shaders

Backstory

Lammers' book has been published in Russian; astrologers predict ...

At the DevGAMM conference I bought an expensive copy of Kenny Lammers' book, which Simonov, Galenkik and Pridyuk later signed for me. After leisurely reading it over two evenings, I got to the middle and decided to collect everything from the first half, digest it, draw some pictures and write an article.

The article is intended for complete beginners who can barely copy C# code out of tutorials, so I will not dig into theory that has already been described elsewhere. Instead, we will solve practical problems and find out that shaders are needed for more than making "everything sparkle and shine".


Introduction

What is a shader? It is a cool little program that runs on the video card. Cool, right? Not quite: in inept hands a single crooked shader can drop your FPS to zero, and maybe even turn back time. A shader is also not one monolithic thing: it runs on pixels (pixel shader) and on vertices (vertex shader). In other words, a small program is called for every pixel of your raster to compute something, and if we also spin loops and pile up if statements in there, it gets really painful. That is why Dumbledore forbade students from doing it.
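To make "a small program for every pixel" concrete, here is a minimal CPU-side sketch in C++ (C++ rather than CG so it runs without a GPU). `pixelProgram` and `shadeRaster` are hypothetical names; a real GPU runs these invocations in parallel instead of in a loop, and this sketch ignores vertex shaders and overdraw.

```cpp
// CPU emulation of the idea behind a pixel shader: one small function,
// invoked once for every pixel of the raster. A GPU runs these calls in
// parallel; here they simply run in a loop.
#include <cassert>
#include <cstddef>
#include <vector>

struct Color {
    float r, g, b;
};

// Hypothetical "pixel program": gets normalized coordinates in 0..1
// and returns a color (a simple red/green gradient).
Color pixelProgram(float u, float v) {
    return Color{u, v, 0.0f};
}

// Calls pixelProgram for every pixel of a width x height image and
// returns the number of invocations.
std::size_t shadeRaster(int width, int height, std::vector<Color>& image) {
    assert(width > 0 && height > 0);
    image.assign(static_cast<std::size_t>(width) * height, Color{0.0f, 0.0f, 0.0f});
    std::size_t invocations = 0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            // Sample at the pixel centre, mapped into 0..1.
            float u = (x + 0.5f) / width;
            float v = (y + 0.5f) / height;
            image[static_cast<std::size_t>(y) * width + x] = pixelProgram(u, v);
            ++invocations;
        }
    }
    return invocations;
}
```

For a 1920x1080 frame that is 2,073,600 calls per frame before any overdraw, so every extra loop or branch inside the program is paid for millions of times.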

He Who Must Not Be Named

Unity3D has a wrapper over HLSL and CG (shader languages) called ShaderLab. It saves you from writing a separate shader for each graphics API and provides plenty of goodies. But ShaderLab is still a wrapper: inside it we still write in one of these languages, and historically almost everyone writes in CG. Unity3D also passes most of the data into the shader by itself, so we just describe what we want and the engine figures out the rest.

A surface shader is a feature that hides pixel and vertex shaders from us so that we work with the surface instead. We simply specify the color, the specular strength and the normal, and all of this is compiled into vertex and pixel functions. It is a very powerful tool that makes writing shaders so easy that even I could do it.
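The division of labour can be sketched on the CPU in C++. This is a heavy simplification, not Unity's generated code: the `SurfaceOutput` here keeps only `Albedo` and `Normal`, and `lightingLambert` is a plain Lambert diffuse term (Unity's built-in version also handles attenuation and other details). The point is that `surf` only describes the surface, while the lighting function supplied by the engine turns that description into a color.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 {
    float x, y, z;
};

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Simplified stand-in for Unity's SurfaceOutput (only two fields kept).
struct SurfaceOutput {
    Vec3 Albedo;  // base color of the surface
    Vec3 Normal;  // unit-length surface normal
};

// The part we write: describe the surface, say nothing about lights.
void surf(SurfaceOutput& o) {
    o.Albedo = Vec3{0.5f, 0.8f, 0.2f};
    o.Normal = Vec3{0.0f, 0.0f, 1.0f};
}

// The part the engine provides (simplified Lambert):
// color = albedo * lightColor * max(0, N . L), lightDir is a unit vector
// pointing toward the light.
Vec3 lightingLambert(const SurfaceOutput& s, Vec3 lightDir, Vec3 lightColor) {
    assert(std::fabs(dot(s.Normal, s.Normal) - 1.0f) < 1e-3f);
    float diff = std::max(0.0f, dot(s.Normal, lightDir));
    return Vec3{s.Albedo.x * lightColor.x * diff,
                s.Albedo.y * lightColor.y * diff,
                s.Albedo.z * lightColor.z * diff};
}

// Roughly the shape of the generated pixel function: fill in the surface
// description first, then light it.
Vec3 shadePixel(Vec3 lightDir, Vec3 lightColor) {
    SurfaceOutput o{};
    surf(o);
    return lightingLambert(o, lightDir, lightColor);
}
```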

Writing the first shader

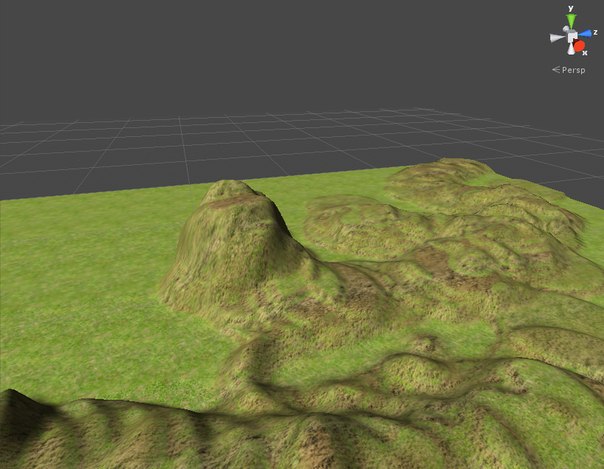

As a result, we get this:

Yes, it is a terrain, and yes, it looks kind of dirty. The idea is:

- Take a mesh

- Take a height map

- Texture the mesh according to this map.

As a result, the slopes get "dirty". This is a very simple example that introduces the main features of shaders and the tasks they solve. Here is the code with comments:

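```
Shader "Custom/HeightMapTexture" {
    // Properties are shown in the Unity3D material inspector
    Properties {
        _HeightTex ("HeightMap Texture", 2D) = "white" {} // black = low, white = high
        _GrassTex ("Grass Texture", 2D) = "white" {}      // texture for low areas
        _RockTex ("Rock Texture", 2D) = "white" {}        // texture for high areas
    }
    SubShader {
        Tags { "RenderType"="Opaque" } // an ordinary opaque object
        LOD 200

        CGPROGRAM // from here on we write in CG
        // Surface shader: surf is the surface function, Lambert the lighting model
        #pragma surface surf Lambert

        // Variables named like the Properties receive their values
        sampler2D _GrassTex;
        sampler2D _RockTex;
        sampler2D _HeightTex;

        // Input data that Unity3D fills in for us
        struct Input {
            float2 uv_GrassTex; // UV coordinates: "uv_" + texture name
            float2 uv_RockTex;
            float2 uv_HeightTex;
        };

        void surf (Input IN, inout SurfaceOutput o) {
            // Sample each texture at its UV coordinates
            float4 grass = tex2D(_GrassTex, IN.uv_GrassTex);
            float4 rock = tex2D(_RockTex, IN.uv_RockTex);
            float4 height = tex2D(_HeightTex, IN.uv_HeightTex);
            // Blend grass and rock by height; Albedo is RGB, so keep .rgb
            o.Albedo = lerp(grass, rock, height).rgb;
            // We only fill in the SurfaceOutput fields we need :)
        }
        ENDCG
    }
    FallBack "Diffuse" // used if this shader cannot run on the hardware
}
```

It looks complicated, but Harry managed to drive off the dementors with a Patronus, so surely we can interpolate a few pixels? Now I'll explain why we are doing all this, and the code will become a little clearer.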

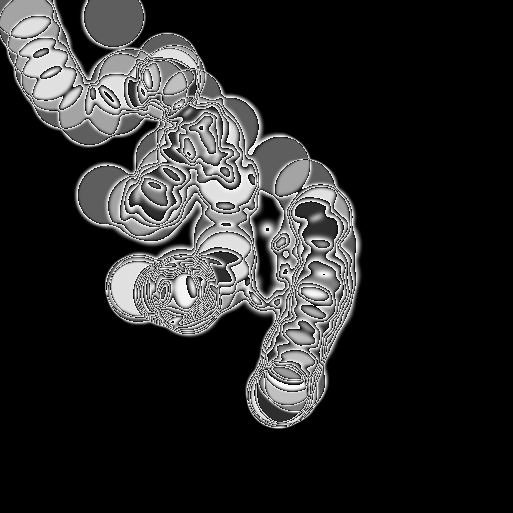

- This is a height map. We take the pixel color from it; it is black and white so that we can interpret it as a height. Suppose 1 is the absolute highest point and 0 the absolute lowest (even lower than the conversion rate in your game). From this we can decide: if it is 1, take the rock texture completely; if 0, the grass; if gray, a 50/50 mix, and so on. As you can see from the map, there are many intermediate heights, so everything blends as softly as in Hagrid's arms.

- lerp() is an interpolation function: the first two arguments are the two values to blend between, and the third is the blend factor. We could pass just one component of the pixel there, for example only r, but in this example the whole color is passed at once. Later I'll explain why you should not do this.

- tex2D() fetches the pixel color from a texture at the given UV. Very boring. We get the UV from the Input structure: Unity took care of us and put everything there. To animate the texture, we could, for example, offset the UV by a sine of time.
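The blending step can be checked on the CPU. This C++ sketch assumes CG's definition `lerp(a, b, t) = a + (b - a) * t`, applied per component. `blendByColor` mirrors the shader above, where the whole grayscale height color is the factor; `blendByHeight` is a hypothetical helper driven by a single channel. For a grayscale map all channels are equal, so both give the same result.

```cpp
#include <cassert>

struct Color {
    float r, g, b, a;
};

// CG/HLSL lerp: a + (b - a) * t. t = 0 gives a, t = 1 gives b.
float lerp(float a, float b, float t) { return a + (b - a) * t; }

// Component-wise lerp with a color as the factor, like
// lerp(grass, rock, height) in the shader above.
Color blendByColor(Color grass, Color rock, Color height) {
    return Color{lerp(grass.r, rock.r, height.r), lerp(grass.g, rock.g, height.g),
                 lerp(grass.b, rock.b, height.b), lerp(grass.a, rock.a, height.a)};
}

// The same blend driven by one channel only (for example height.r).
Color blendByHeight(Color grass, Color rock, float height) {
    assert(height >= 0.0f && height <= 1.0f);
    return blendByColor(grass, rock, Color{height, height, height, height});
}
```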

Is it clearer now? If not, urgently eat some apple pie and come back; it works 70% of the time. Oh yes: create the shader in the same place as your scripts. Then assign it to a material, and set that material in the terrain settings.

UV

A little about UV, since so far it has been UV here, UV there, and it is unclear what kind of beast it is.

In the simplest case, a UV coordinate corresponds to a position on the object's surface. UV coordinates usually lie in the range from 0 to 1. In that case the texture is simply laid over the object one to one, stretched in places, but that does not scare us. We can also specify UV for each vertex and get an unwrap, where different parts of the texture land on different parts of the model. I'm certainly no expert, but as far as I can tell Unity3D takes the unwrap data from the mesh and passes it to the shader itself, so we don't need to think about it for now.
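To show what "UV from 0 to 1" means in practice, here is a CPU-side C++ sketch of a texture lookup. It assumes nearest-neighbour sampling and a Repeat-style wrap; the real `tex2D` also filters (bilinear, mipmaps), which is omitted. `sampleNearest` and `scrolledU` are hypothetical helpers, the latter showing the sine-of-time UV offset mentioned above.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Tiny grayscale texture; row 0 corresponds to v = 0.
struct Texture {
    int width;
    int height;
    std::vector<float> texels;  // width * height values, row by row
};

// Keep only the fractional part, so UV outside 0..1 repeats the texture.
float wrap01(float c) { return c - std::floor(c); }

// Nearest-neighbour lookup: map UV in 0..1 onto texel indices.
float sampleNearest(const Texture& t, float u, float v) {
    assert(t.width > 0 && t.height > 0);
    assert(t.texels.size() == static_cast<std::size_t>(t.width) * t.height);
    u = wrap01(u);
    v = wrap01(v);
    // min() guards against rounding that lands exactly on 1.0
    int x = std::min(static_cast<int>(u * t.width), t.width - 1);
    int y = std::min(static_cast<int>(v * t.height), t.height - 1);
    return t.texels[static_cast<std::size_t>(y) * t.width + x];
}

// Animate by shifting U with a sine of time; amplitude is in UV units.
float scrolledU(float u, float time, float amplitude, float speed) {
    return u + amplitude * std::sin(time * speed);
}
```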

How to make it beautiful without touching the shape, or using a Normal map

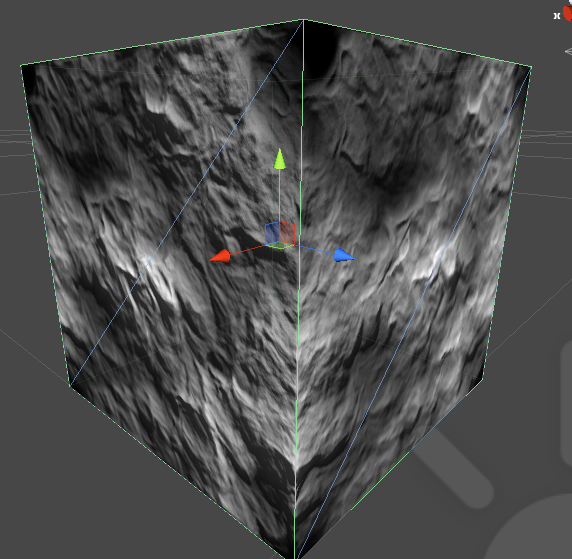

A normal map is a texture whose RGB channels encode vectors. In our three-dimensional space an ordinary vector has three components, x, y and z, which fit neatly into the texture's three color channels. That is why it looks so weird when viewed as an ordinary picture: its pixels are really directions, not colors.
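The encoding itself is simple arithmetic. A unit normal has components in [-1, 1], while a texture channel stores [0, 1], so each component is remapped as `c = n * 0.5 + 0.5` and decoded as `n = c * 2 - 1`. This C++ sketch assumes a plain RGB normal map; as far as I know, Unity's `UnpackNormal` does exactly `xyz * 2 - 1` only when DXT5nm packing is not used, and otherwise reads two channels and reconstructs z. `toByte` is a hypothetical helper showing 8-bit storage.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 {
    float x, y, z;
};

// Direction in [-1, 1] -> color in [0, 1], component by component.
Vec3 encodeNormal(Vec3 n) {
    return Vec3{n.x * 0.5f + 0.5f, n.y * 0.5f + 0.5f, n.z * 0.5f + 0.5f};
}

// Color in [0, 1] -> direction in [-1, 1] (the plain RGB case of unpacking).
Vec3 decodeNormal(Vec3 c) {
    return Vec3{c.x * 2.0f - 1.0f, c.y * 2.0f - 1.0f, c.z * 2.0f - 1.0f};
}

// Store a channel the way an 8-bit texture would (0..255).
int toByte(float c) {
    assert(c >= 0.0f && c <= 1.0f);
    return static_cast<int>(std::lround(c * 255.0f));
}
```

A normal pointing straight out of the surface, (0, 0, 1), becomes the color (0.5, 0.5, 1), or (128, 128, 255) in 8 bits: the lilac-blue tint that makes normal maps look so strange.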

When drawing our pixels, we can take the vector from this texture and use it, instead of the real surface normal, to compute how light falls on each pixel; this creates an illusion of relief. It sounds complicated, but thanks to the sorcery of surface shaders and the Unity3D library the implementation is rather trivial.
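A CPU-side C++ sketch of why this fakes relief, under simplifying assumptions: a plain Lambert diffuse term, one directional light, and normals already decoded and expressed in the same space as the light direction (in a real shader the tangent-space normal must be transformed, which surface shaders handle for us). The geometry stays flat; only the per-pixel normal changes, and with it the brightness. With zero light intensity every pixel is black, which is why normal mapping needs a light source.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 {
    float x, y, z;
};

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);
    return Vec3{v.x / len, v.y / len, v.z / len};
}

// Lambert diffuse brightness of one pixel for its per-pixel normal.
// toLight points from the surface toward the light.
float diffuse(Vec3 normal, Vec3 toLight, float lightIntensity) {
    float nDotL = dot(normalize(normal), normalize(toLight));
    return lightIntensity * std::max(0.0f, nDotL);
}
```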

Before

After

Shader:

```
Shader "Custom/BumpMapExample" {
    Properties {
        _MainTex ("Base (RGB)", 2D) = "white" {}
        _BumpMap ("Bump map", 2D) = "bump" {} // normal map; "bump" = flat default
    }
    SubShader {
        Tags { "RenderType"="Opaque" }
        LOD 200

        CGPROGRAM
        #pragma surface surf Lambert

        sampler2D _MainTex;
        sampler2D _BumpMap;

        struct Input {
            float2 uv_MainTex;
            float2 uv_BumpMap;
        };

        void surf (Input IN, inout SurfaceOutput o) {
            half4 c = tex2D (_MainTex, IN.uv_MainTex);
            // Decode the normal stored in the texture and hand it to lighting
            o.Normal = UnpackNormal(tex2D(_BumpMap, IN.uv_BumpMap));
            o.Albedo = c.rgb;
            o.Alpha = c.a;
        }
        ENDCG
    }
    FallBack "Diffuse"
}
```

Everything is the same as before, except that now we take a pixel from the normal map and pass it to the UnpackNormal function, which extracts the normal; we simply put it into the SurfaceOutput structure. The lighting function then takes over and decides what to do with it. By the way, normal mapping will not work without a light source.

Three unforgivable spells

A little bit about how to do it right:

- Pack textures together. Suppose we have a height map: a pixel can hold as many as 4 values, but the height needs only one. So we can combine several textures into one by spreading them across the channels. A useful practice among wizards.

- Part of the logic can and should be moved to scripts. Much of it only needs to be computed once per frame, not once per pixel. Shaders help, but don't try to do everything with them alone.

- Avoid conditional operators: they put a lot of stress on the system, so try to find workarounds.

- Anything you can bake into a texture in advance, bake. Free the shader from unnecessary work: fetching data from a texture is much faster than recalculating it.
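Two of these tips, sketched in C++ on the CPU. `packTexel` and `unpackChannel` illustrate spreading four independent 8-bit grayscale maps over the RGBA channels of one texture; the map names and the `_PackedTex` texture mentioned in a comment are hypothetical. `blendWithStep` shows the usual branch-free replacement for an if in shader code: CG's built-in `step(edge, x)` returns 1 when `x >= edge` and 0 otherwise, and feeding it into `lerp` picks one of two values without branching. The comparison inside the C++ `step` only emulates that built-in.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// One RGBA texel with 8 bits per channel.
using Texel = std::array<std::uint8_t, 4>;

// Hypothetical maps packed into one texture: R = height, G = wetness,
// B = ambient occlusion, A = detail mask.
Texel packTexel(std::uint8_t height, std::uint8_t wetness,
                std::uint8_t occlusion, std::uint8_t mask) {
    return Texel{height, wetness, occlusion, mask};
}

// In a shader this would be e.g. tex2D(_PackedTex, uv).r for the height.
float unpackChannel(const Texel& t, int channel) {
    assert(channel >= 0 && channel < 4);
    return t[static_cast<std::size_t>(channel)] / 255.0f;
}

// Emulates CG's step(edge, x): 1 if x >= edge, otherwise 0.
float step(float edge, float x) { return x >= edge ? 1.0f : 0.0f; }

float lerp(float a, float b, float t) { return a + (b - a) * t; }

// Branch-free version of:
//   if (height >= 0.5) result = rock; else result = grass;
float blendWithStep(float grass, float rock, float height) {
    return lerp(grass, rock, step(0.5f, height));
}
```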

Conclusion

That is the end of the first part. Next, I want to tell you more about specular, reflections using a CubeMap, and how to keep 300 animated units in the frame on an iPad :)

I very much hope that all comments on the article will be as constructive as possible, so that I and other newcomers can learn. I don't consider myself a professional in this area, but I have some experience, and I know how many subtleties there are. I really hope for the help of the Habr community. I deliberately skipped shader models and the subtleties of tag settings and other things; there is still unfinished material in which we will go through them.

Source: https://habr.com/ru/post/223541/
