Document in perspective: what to do with it? Correcting the results of contactless scanning and document photographs

The idea for this article came to us after reading “How does the automatic selection of a document on an image work in ABBYY FineScanner?”, published on Habr by ABBYY. That article describes in detail an algorithm for finding the boundaries of a document in an image taken with a mobile phone camera.

The article is certainly interesting and useful. We noted “with a feeling of deep satisfaction” that ABBYY uses the same mathematical algorithms as we do, and wisely omits some details without which the accuracy of finding the document boundaries drops significantly.

I think that after reading it, some readers were left with a reasonable question: “What should we do next with the document found in the picture?” I will answer with the Cheshire Cat's words to Alice: “That depends a good deal on where you want to get to.” If the ultimate goal is to extract the text from the image, the job of the recognition system must be made as easy as possible. First and foremost, that means correcting perspective distortion, the scourge of all handheld document photos. If this problem is left unsolved, an attempt to recognize the data may give results comparable to recognizing a captcha. Freelance sites regularly feature “believers” in machine intelligence defeating captchas for a small fee. Blessed is he who believes, but that is not our topic today.

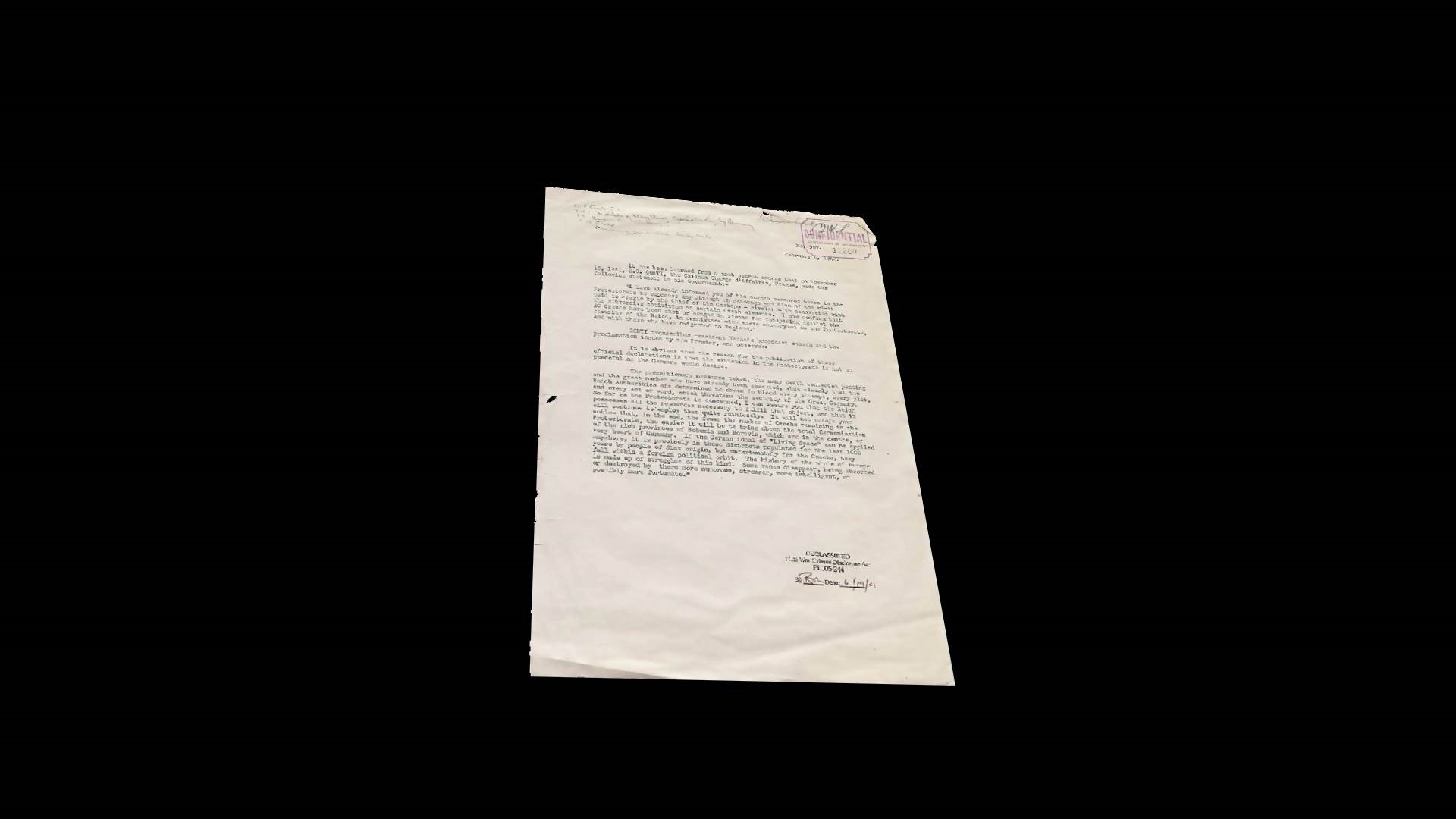

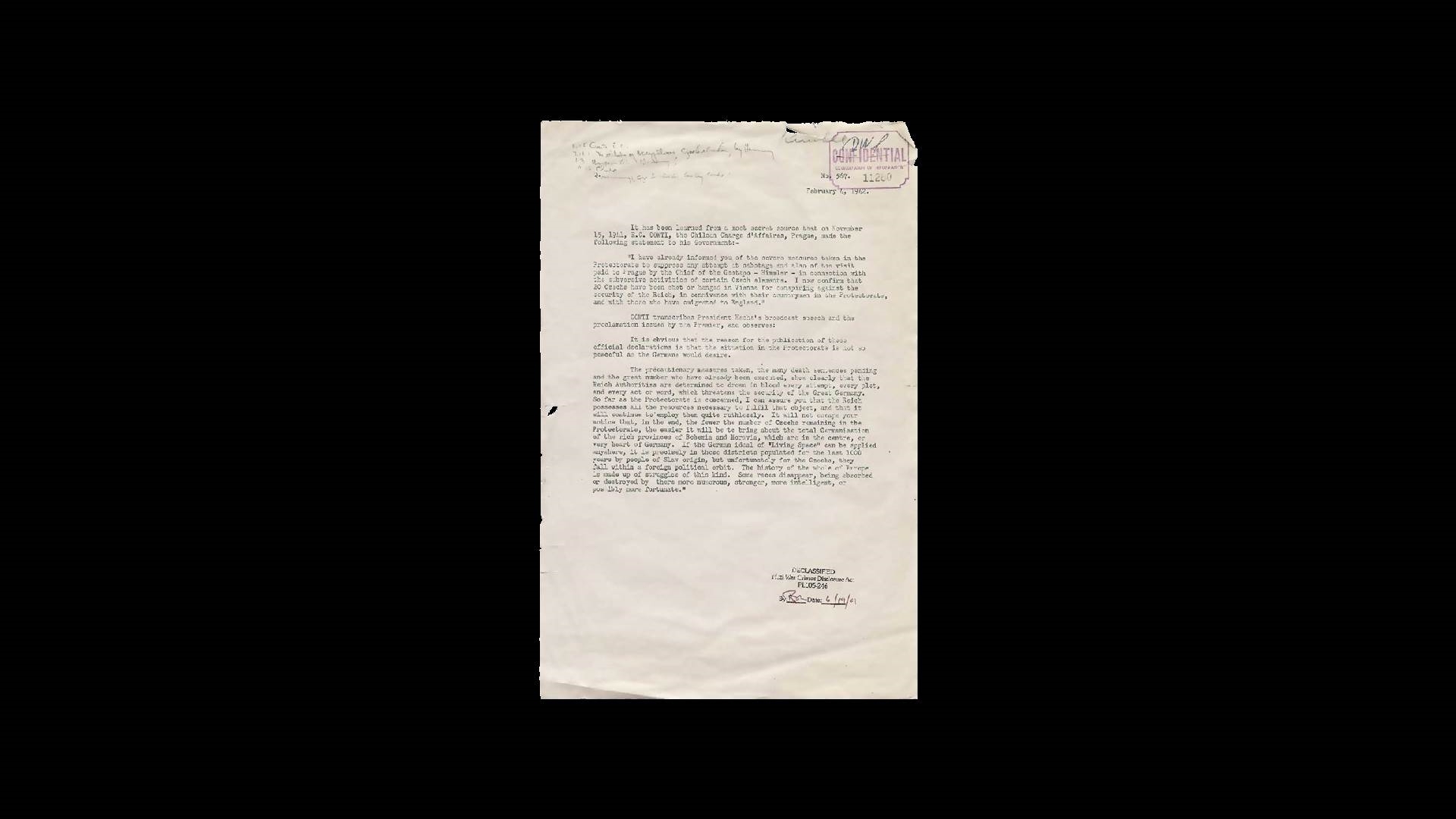

So in this article we will try to take the baton from ABBYY and explain, in our own way, how to turn the document we found in the picture (thanks to ABBYY for the science), which is at best a skewed quadrilateral, into a rectangle, preferably preserving the original proportions. We do not consider exotic cases such as pentagonal or oval documents, although the question is interesting.

The problem of correcting perspective distortion reached ALANIS Software from an unexpected direction, since we do not specialize in mobile development. However, our customer, for whom we are developing a scanning and image-processing system for planetary scanners based on Canon EOS digital SLR cameras (hi, photographers!), at some point wanted this functionality in its arsenal. Moreover, the goal was not to process a finished camera image but to correct the video stream at the LiveView preview stage. That said, the solution we developed works equally well for correcting a snapshot of a document that has already been taken.

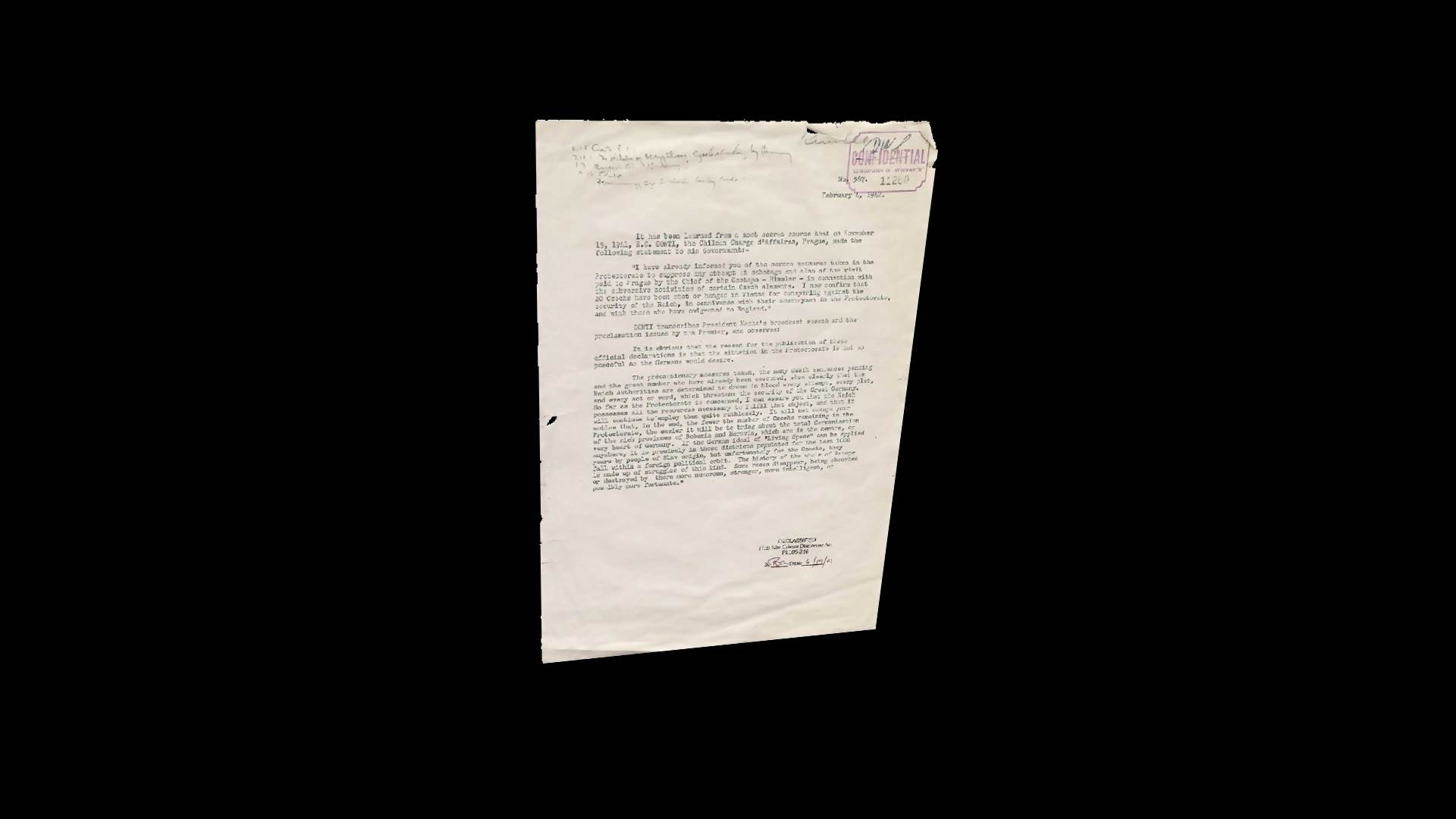

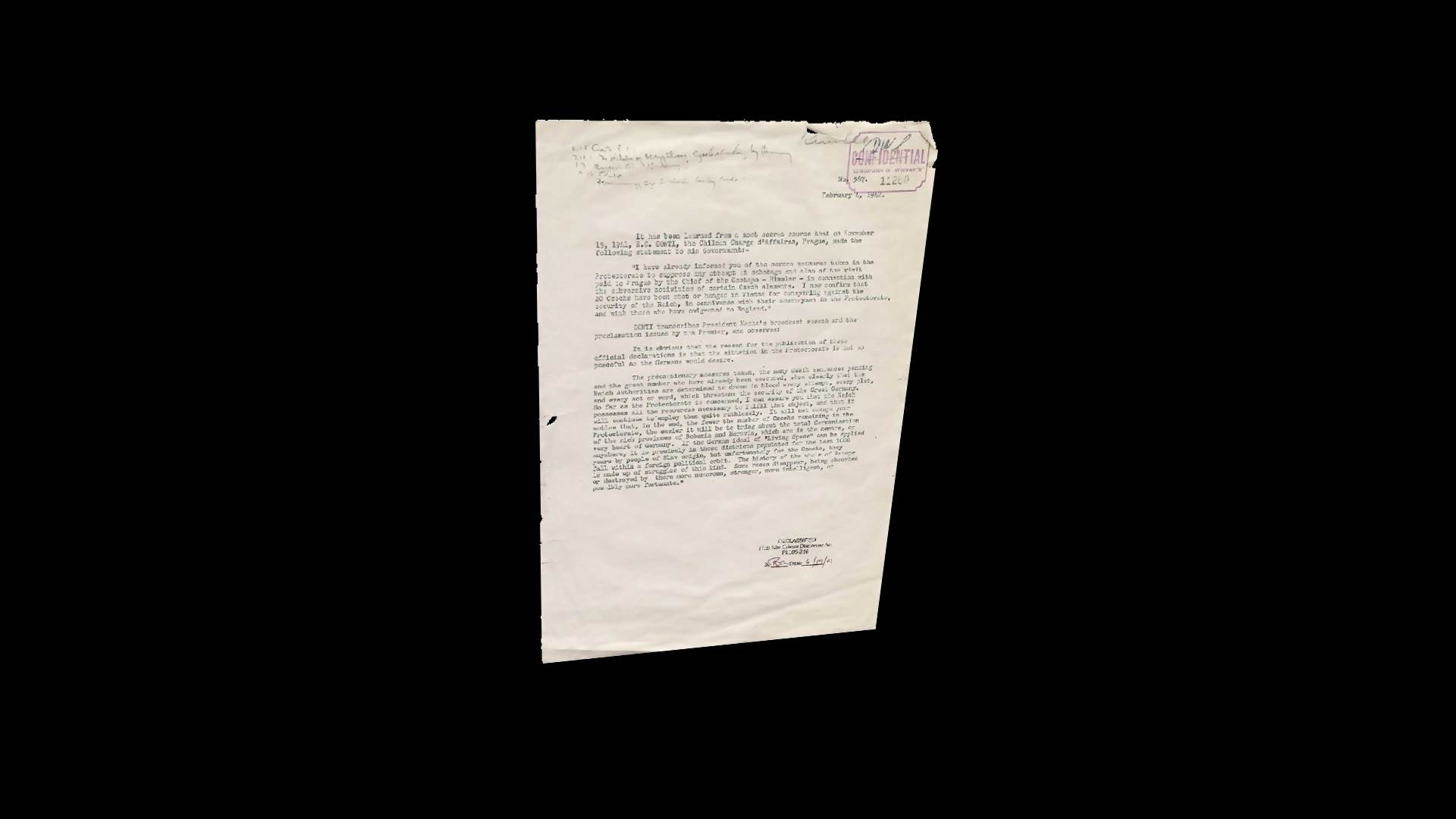

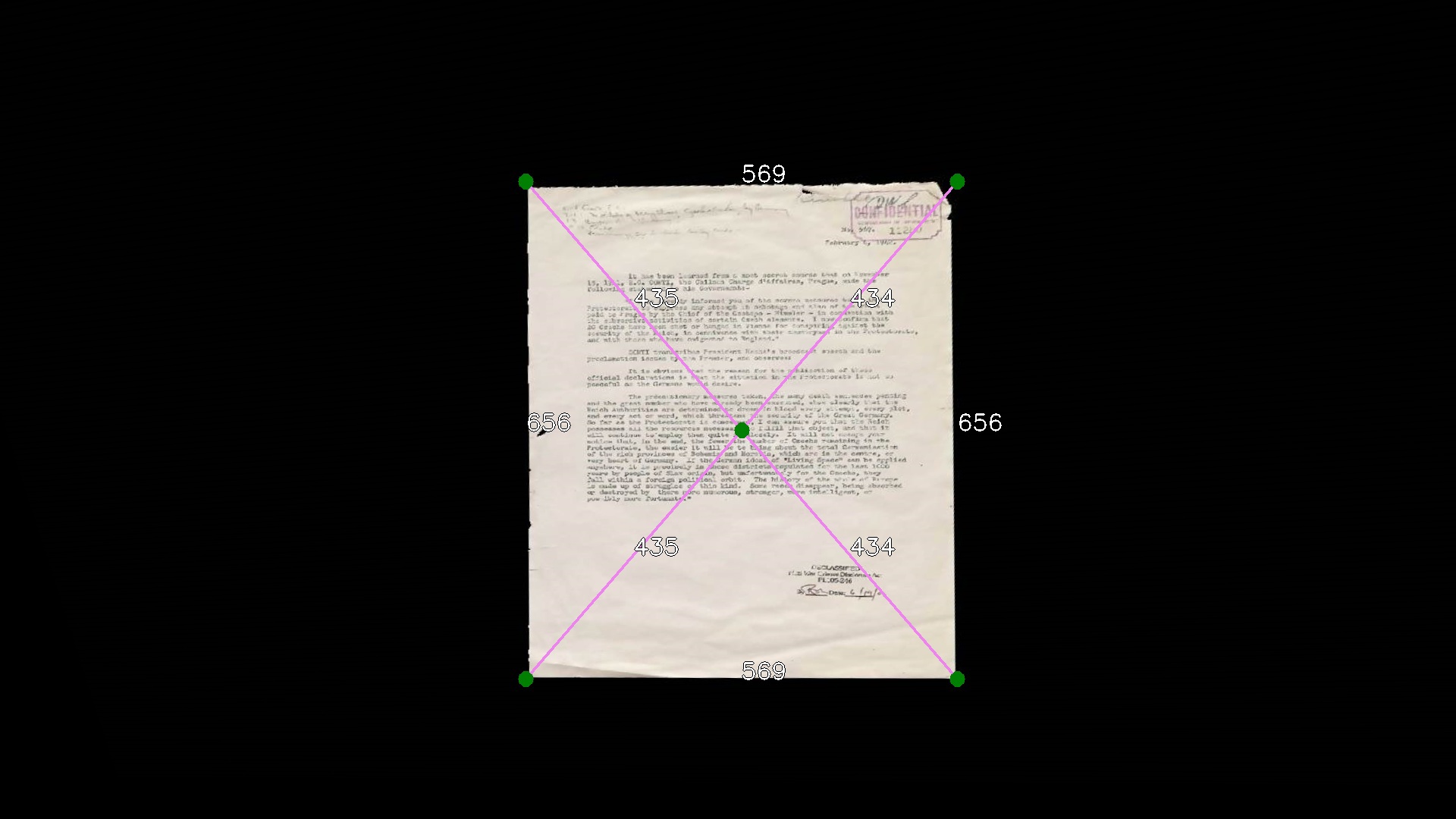

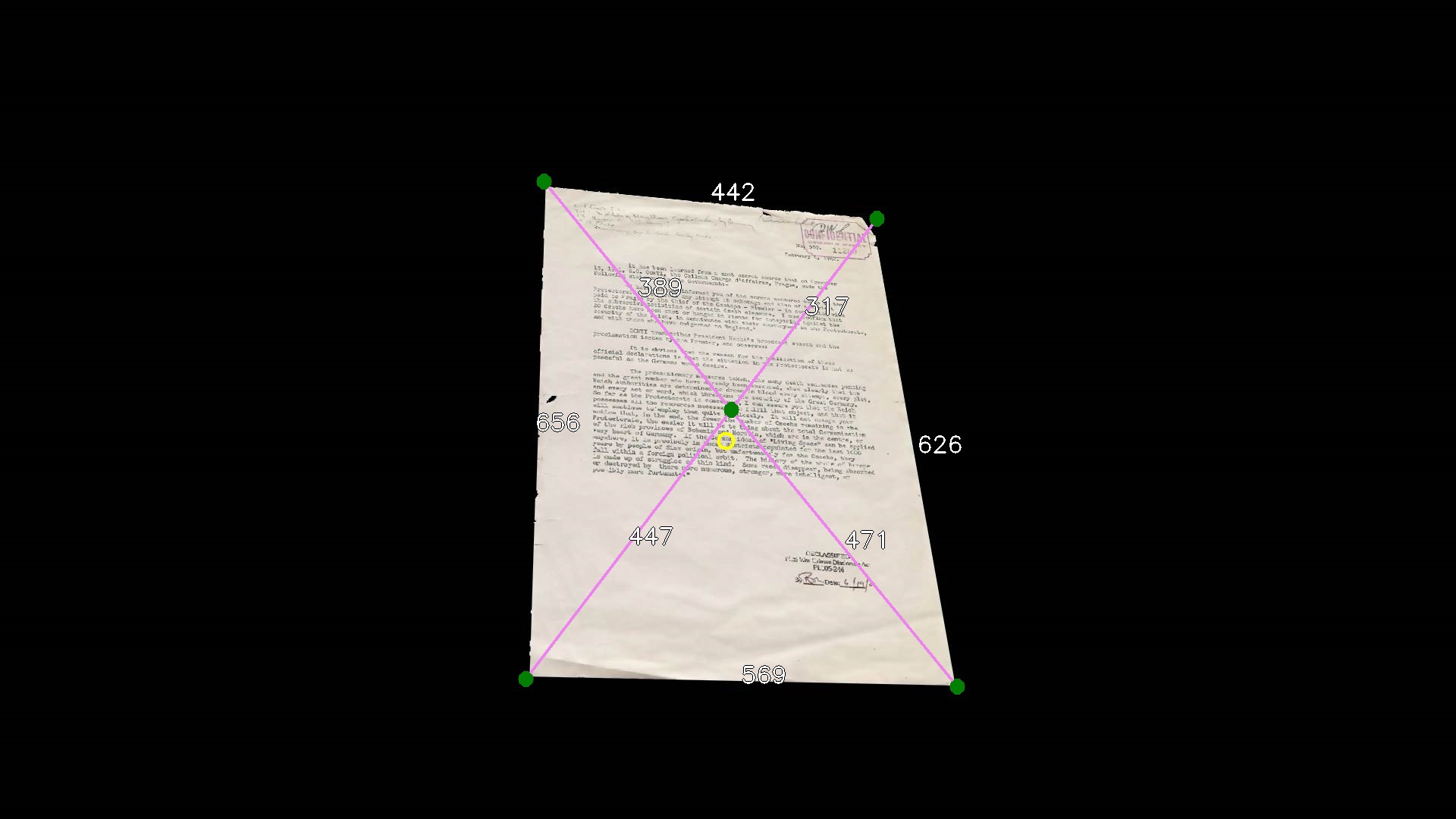

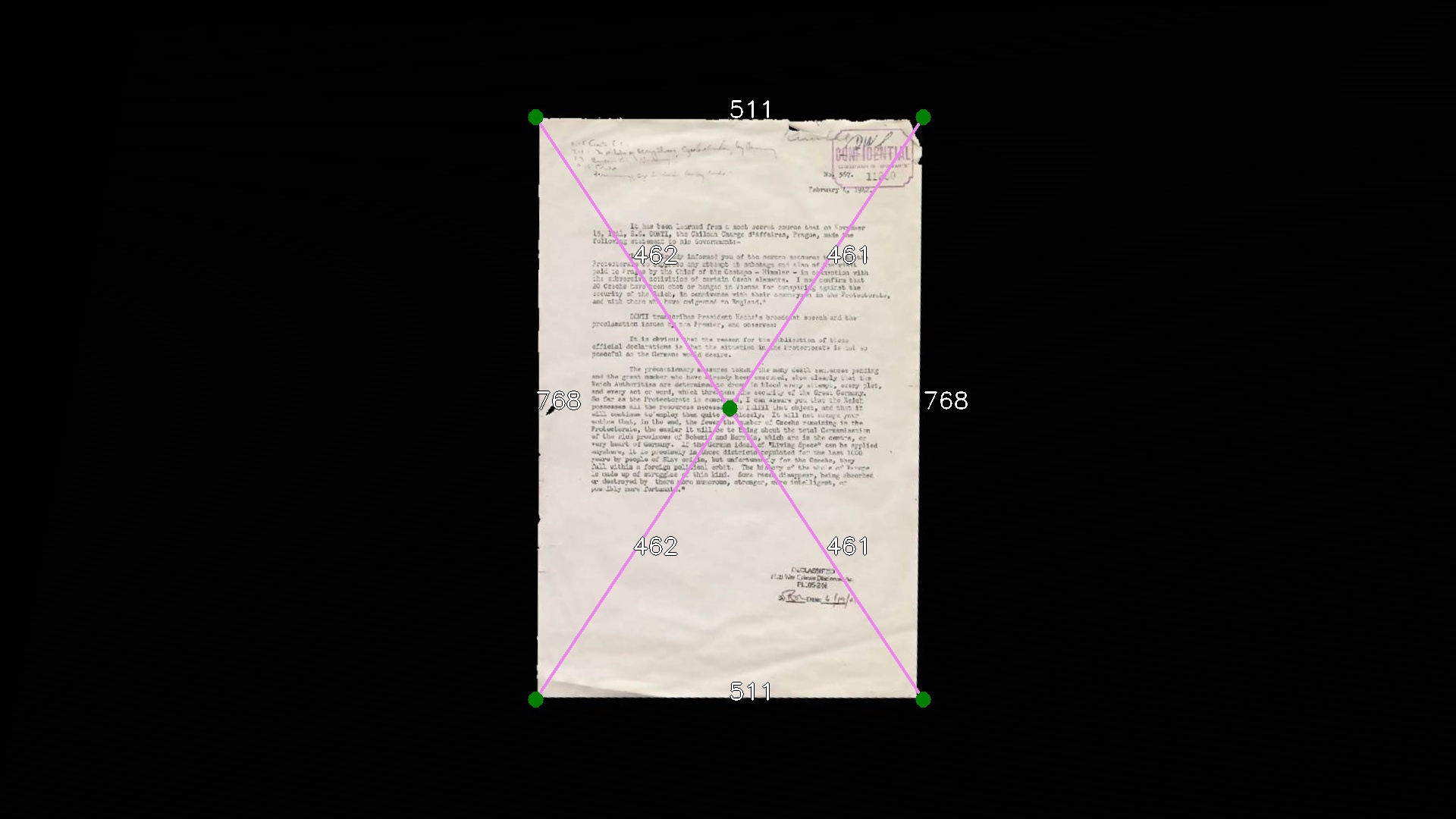

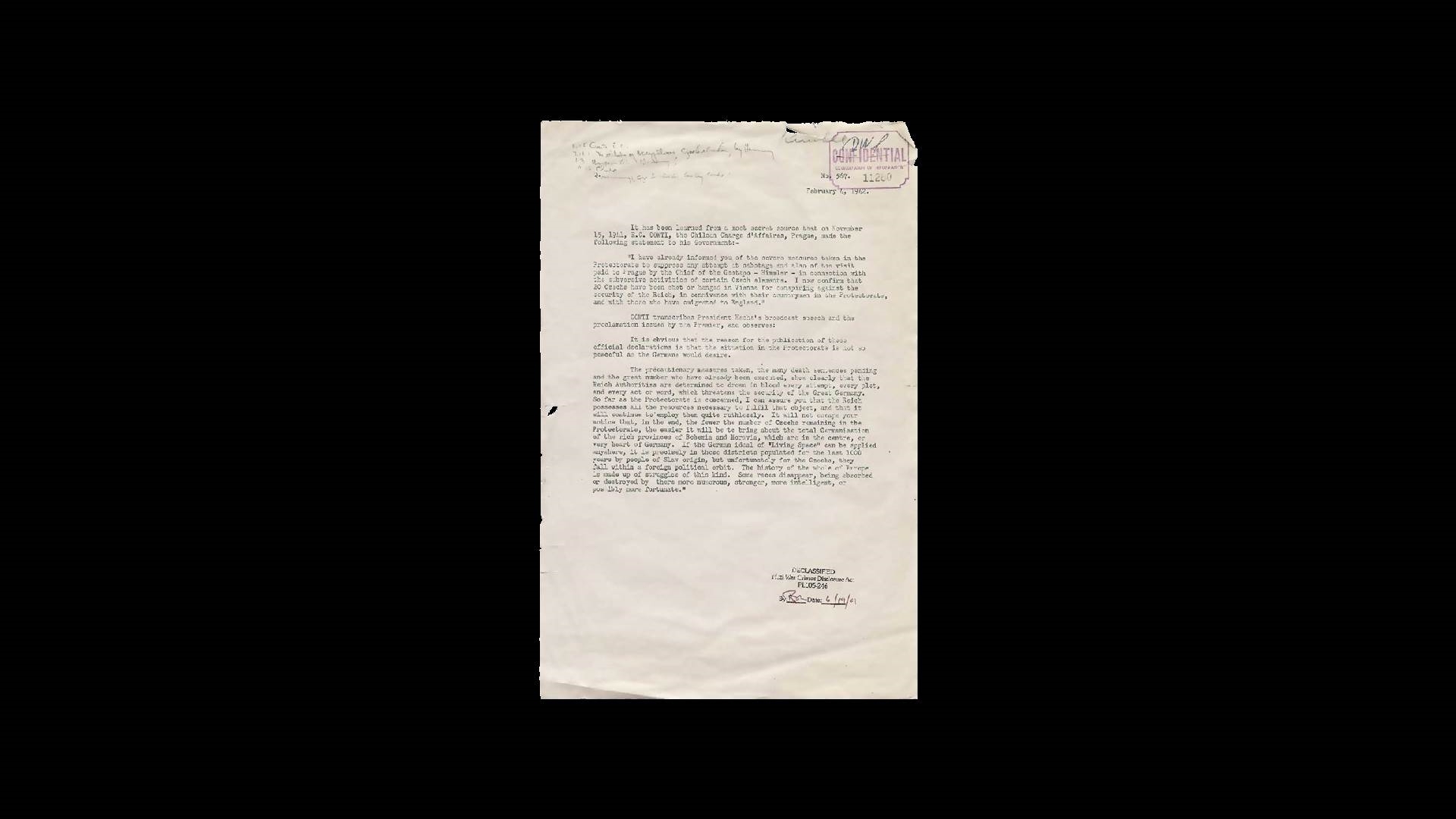

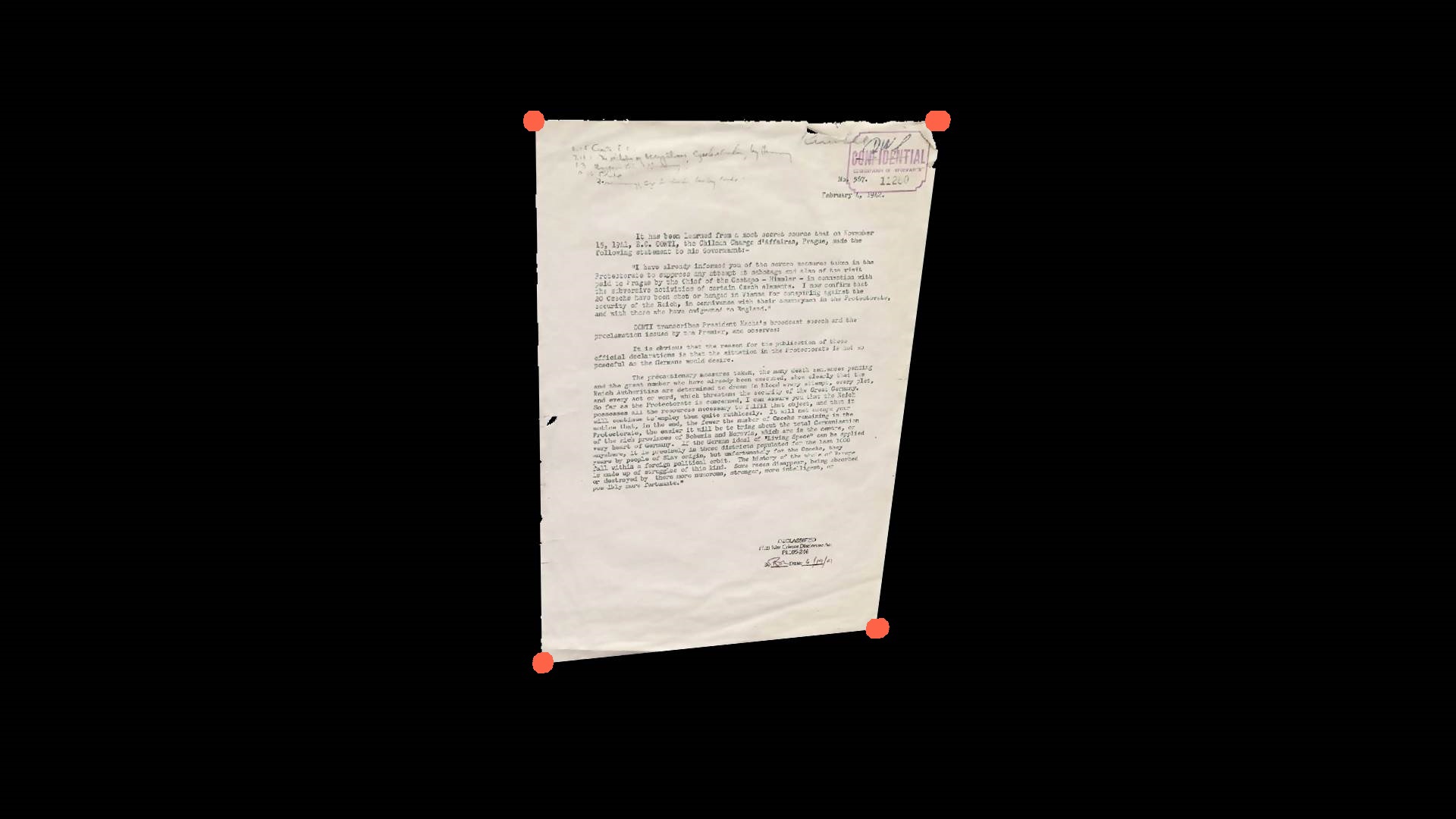

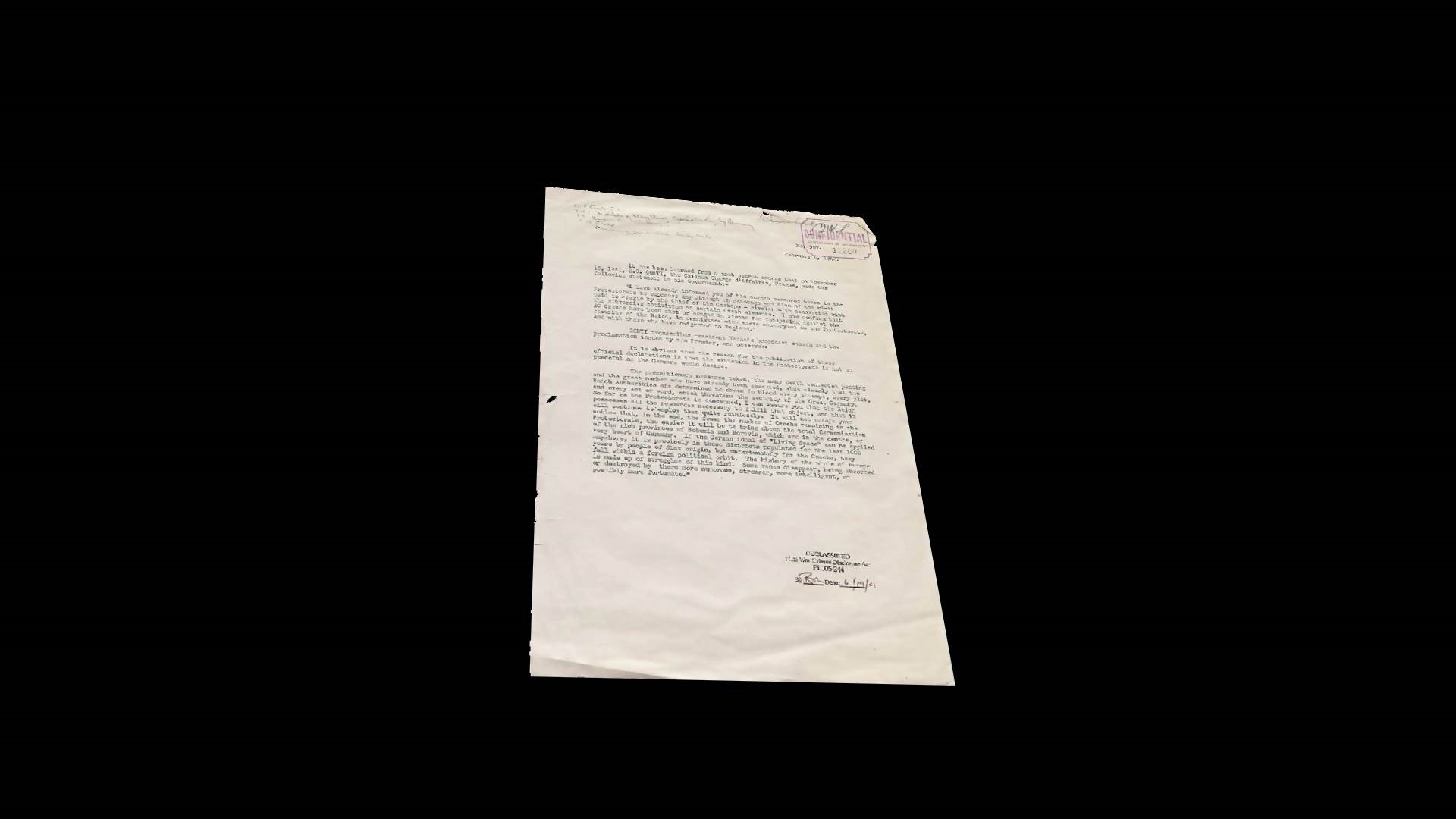

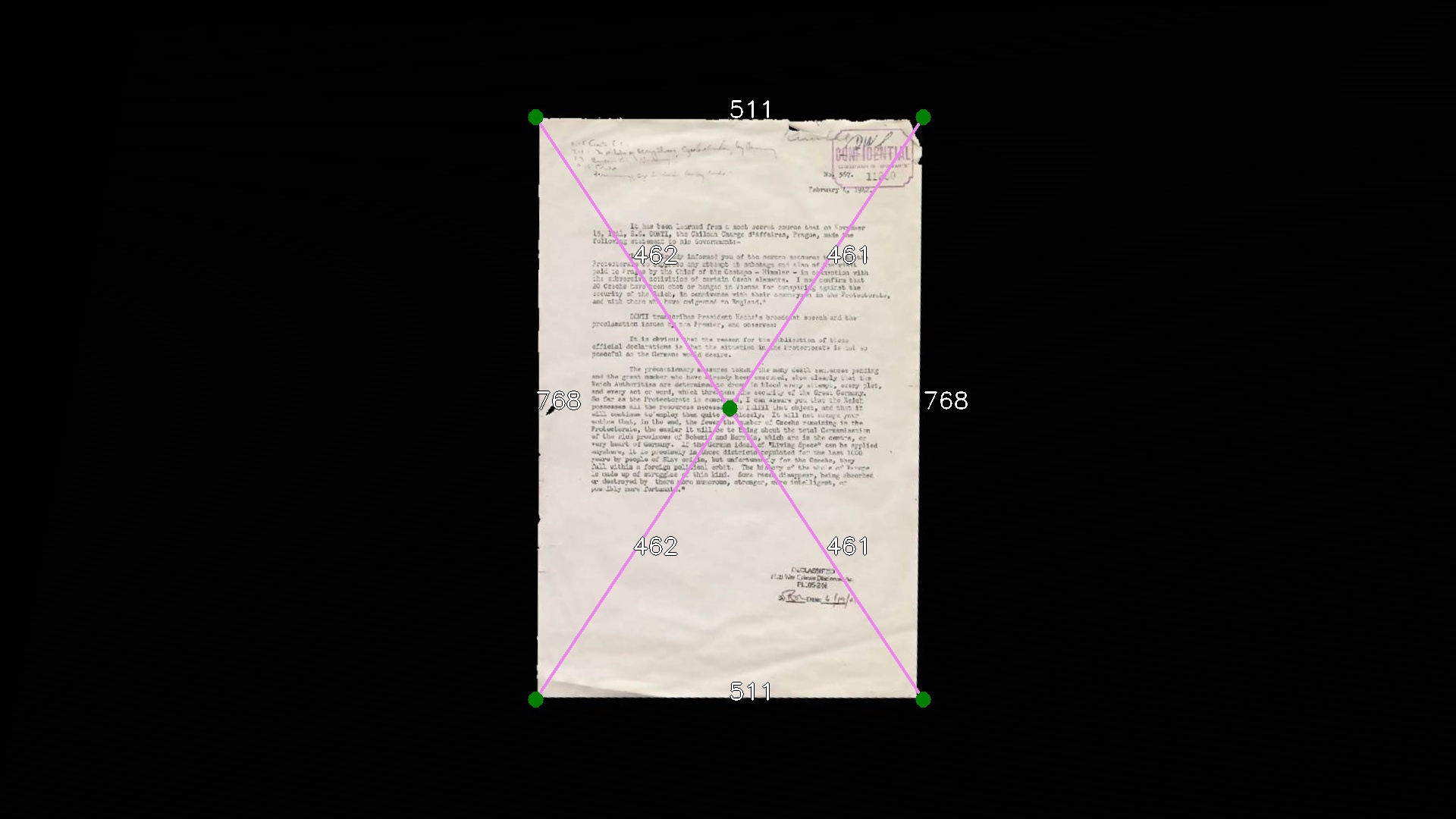

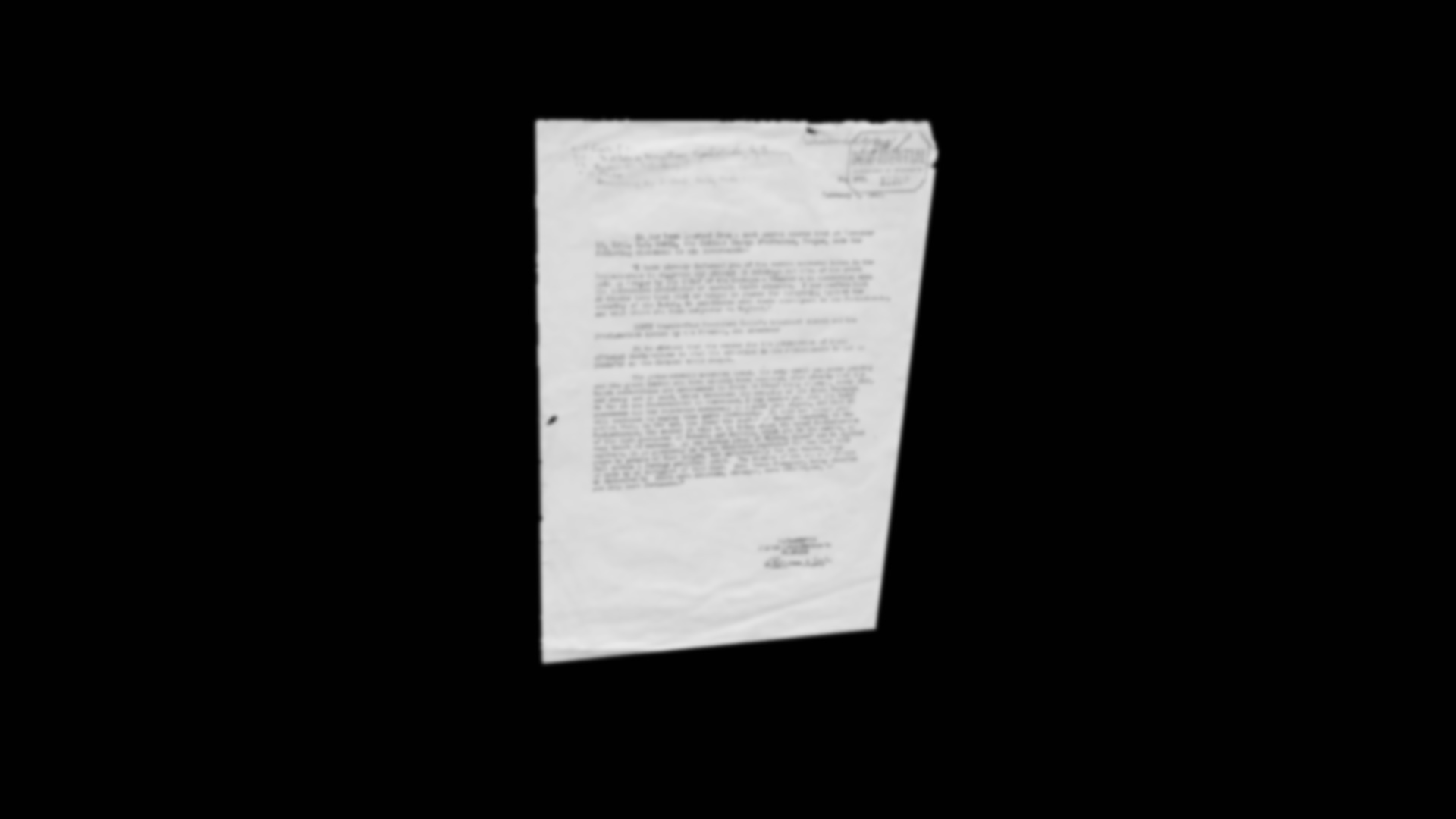

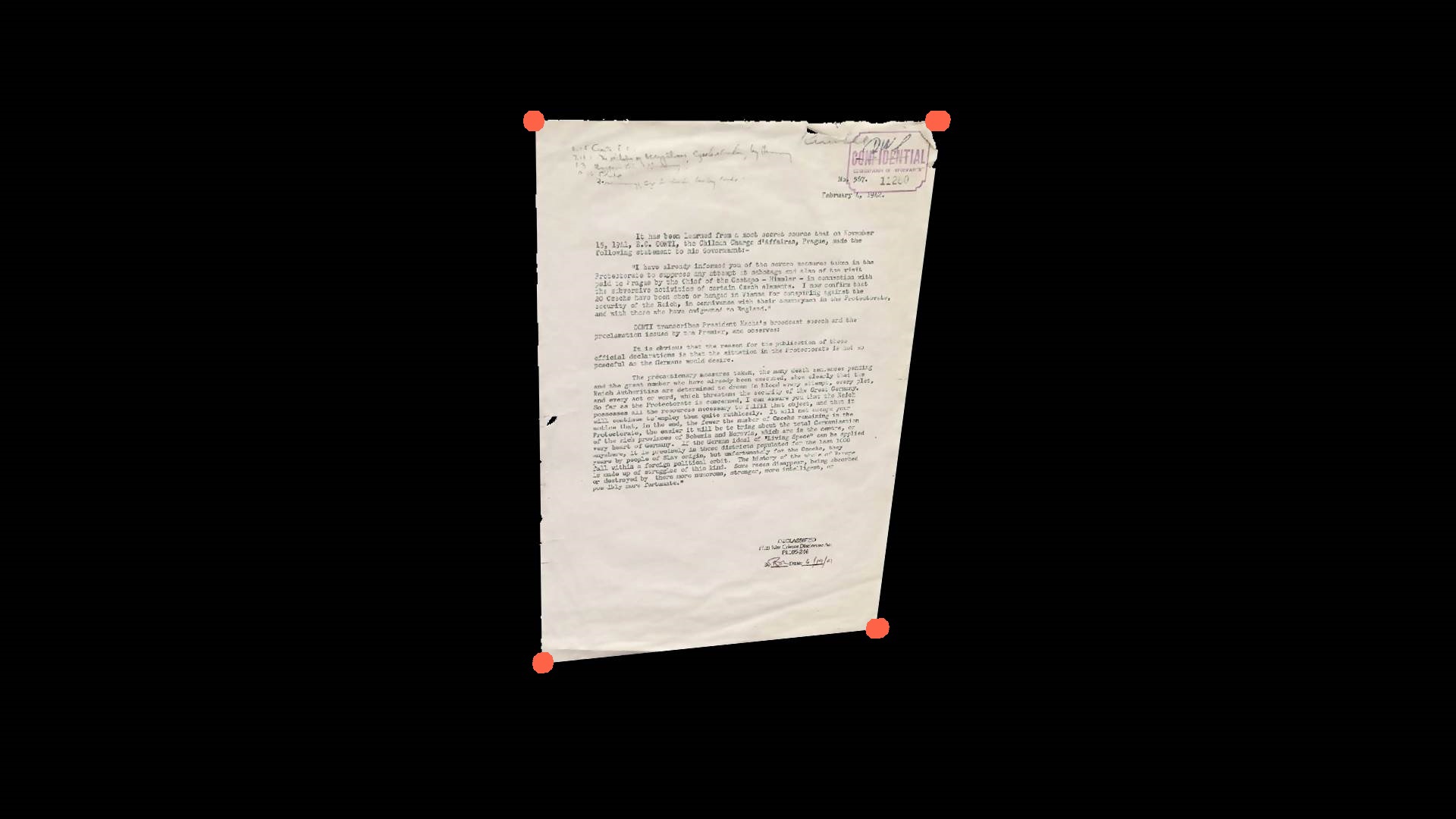


Given:

- an image of a rectangular document, taken with a camera and distorted by perspective

- the contour of the document in the image

Task:

to restore the document to its original form as directly as possible

Challenge (somehow no good Russian equivalent comes to mind):

- the exact proportions of the original document are unknown

- the distance to the plane on which the document lies is unknown

- there are no reference objects to go by (for example, a true square caught in the frame)

Solution:

So we suggest splitting the problem into two separate ones:

- Finding the distorted contour of the document in the scanned image (we will go over this once more for those who have not read the ABBYY article).

- Determining the correct proportions of the rectangle onto which the distorted contour should be mapped to obtain an aligned document.
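Once the four corners and the target rectangle are known, the mapping itself is a planar perspective transform (a homography); in OpenCV this is what `getPerspectiveTransform` computes and `warpPerspective` applies. The sketch below is not the authors' code but a self-contained C# illustration of the underlying math, assuming exactly four point pairs in matching corner order with no three points collinear. It fixes h33 = 1 and solves the resulting 8×8 linear system; the class and method names are hypothetical.

```csharp
using System;

// Sketch: 3x3 homography H mapping four source points (distorted corners)
// onto four destination points (target rectangle corners), with h33 = 1.
public static class Homography
{
    public static double[,] FromFourPoints((double X, double Y)[] src, (double X, double Y)[] dst)
    {
        if (src.Length != 4 || dst.Length != 4)
            throw new ArgumentException("Exactly four point pairs are required.");

        // Augmented matrix [A | b] for h = [h11 h12 h13 h21 h22 h23 h31 h32].
        var a = new double[8, 9];
        for (int i = 0; i < 4; i++)
        {
            double x = src[i].X, y = src[i].Y, u = dst[i].X, v = dst[i].Y;
            int r = 2 * i;
            // u * (h31 x + h32 y + 1) = h11 x + h12 y + h13
            a[r, 0] = x; a[r, 1] = y; a[r, 2] = 1;
            a[r, 6] = -u * x; a[r, 7] = -u * y; a[r, 8] = u;
            // v * (h31 x + h32 y + 1) = h21 x + h22 y + h23
            a[r + 1, 3] = x; a[r + 1, 4] = y; a[r + 1, 5] = 1;
            a[r + 1, 6] = -v * x; a[r + 1, 7] = -v * y; a[r + 1, 8] = v;
        }

        // Gauss-Jordan elimination with partial pivoting.
        for (int col = 0; col < 8; col++)
        {
            int pivot = col;
            for (int row = col + 1; row < 8; row++)
                if (Math.Abs(a[row, col]) > Math.Abs(a[pivot, col])) pivot = row;
            if (Math.Abs(a[pivot, col]) < 1e-12)
                throw new InvalidOperationException("Degenerate point configuration.");
            for (int k = 0; k < 9; k++)
                (a[col, k], a[pivot, k]) = (a[pivot, k], a[col, k]);
            for (int row = 0; row < 8; row++)
            {
                if (row == col) continue;
                double f = a[row, col] / a[col, col];
                for (int k = col; k < 9; k++) a[row, k] -= f * a[col, k];
            }
        }

        var h = new double[3, 3];
        for (int i = 0; i < 8; i++) h[i / 3, i % 3] = a[i, 8] / a[i, i];
        h[2, 2] = 1;
        return h;
    }

    // Map a point through H, including the perspective division by w.
    public static (double X, double Y) Apply(double[,] h, (double X, double Y) p)
    {
        double w = h[2, 0] * p.X + h[2, 1] * p.Y + h[2, 2];
        return ((h[0, 0] * p.X + h[0, 1] * p.Y + h[0, 2]) / w,
                (h[1, 0] * p.X + h[1, 1] * p.Y + h[1, 2]) / w);
    }
}
```

To warp a whole image, `warpPerspective` fills each output pixel by mapping it back through the inverse transform and sampling the source, which avoids holes in the result.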

We could, of course, try to reinvent the wheel, and some people manage to, but we took the easier route and used the OpenCV library. We work mostly in .NET, through the C# wrapper OpenCVSharp, which is also available as a NuGet package in Visual Studio. “That's all” (c), and that is what we will use.

Let's walk through the main points of interest in correcting the perspective of a document image.

1. To find the outline in the image, we first need to get rid of small details that could interfere. This can be done by converting the image to grayscale and then casting a low-power blur spell:

```csharp
// Convert to grayscale, then blur the grayscale image to suppress small details.
// imgGrayscale: a single-channel 8-bit image of the same size as imgSource.
imgSource.CvtColor(imgGrayscale, ColorConversion.BgrToGray);
imgGrayscale.Smooth(imgGrayscale, SmoothType.Gaussian, 15);
```

The blur weeds out fine detail; if I take off my glasses, I get about the same effect. Hence the moral: “Myopia is not a disease but intelligent image processing that weeds out everything unnecessary and makes the world more beautiful!”
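For reference, the `15` passed to `Smooth` is the aperture size of the Gaussian kernel. According to the OpenCV documentation, when no sigma is given it is derived from the kernel size as sigma = 0.3·((ksize − 1)·0.5 − 1) + 0.8, about 2.6 for a 15-pixel kernel, and the grayscale conversion uses Y = 0.299 R + 0.587 G + 0.114 B. The sketch below illustrates that math in plain C#; it is not OpenCV's implementation, and the class and method names are hypothetical. Because the 2-D Gaussian is separable, a full blur is one such pass along rows and one along columns.

```csharp
using System;

public static class GaussianKernel
{
    // OpenCV-style grayscale weighting for a BGR pixel.
    public static double ToGray(byte b, byte g, byte r) => 0.299 * r + 0.587 * g + 0.114 * b;

    // Sigma derived from the aperture size when none is specified.
    public static double DefaultSigma(int ksize) => 0.3 * ((ksize - 1) * 0.5 - 1) + 0.8;

    // Normalized 1-D Gaussian kernel of odd length ksize.
    public static double[] Build(int ksize, double sigma)
    {
        if (ksize <= 0 || ksize % 2 == 0)
            throw new ArgumentException("ksize must be positive and odd.");
        var k = new double[ksize];
        int center = ksize / 2;
        double sum = 0;
        for (int i = 0; i < ksize; i++)
        {
            double x = i - center;
            k[i] = Math.Exp(-(x * x) / (2 * sigma * sigma));
            sum += k[i];
        }
        for (int i = 0; i < ksize; i++) k[i] /= sum; // weights sum to 1
        return k;
    }

    // One horizontal pass over a row of gray values, replicating the edge pixels.
    public static double[] BlurRow(double[] row, double[] kernel)
    {
        int r = kernel.Length / 2;
        var result = new double[row.Length];
        for (int x = 0; x < row.Length; x++)
        {
            double acc = 0;
            for (int i = -r; i <= r; i++)
            {
                int xi = Math.Max(0, Math.Min(row.Length - 1, x + i));
                acc += kernel[i + r] * row[xi];
            }
            result[x] = acc;
        }
        return result;
    }
}
```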

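2. Next, we binarize the image (make it black and white). With the Otsu flag, the threshold is picked automatically from the image histogram, so the 0 passed as the threshold value is ignored:

```csharp
// Otsu thresholding requires an 8-bit single-channel input, hence the grayscale image.
imgGrayscale.Threshold(imgGrayscale, 0, 255, ThresholdType.Binary | ThresholdType.Otsu);
```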
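To show what the Otsu flag does, here is a minimal C# sketch of Otsu's method on an 8-bit histogram: it tries every threshold and keeps the one that maximizes the between-class variance of the "background" and "foreground" pixels. It is an illustration, not OpenCV's code; the names are hypothetical, and `Apply` follows the binary rule (value above the threshold becomes the maximum, everything else 0).

```csharp
using System;

public static class Otsu
{
    // Returns t such that pixels with value > t are treated as foreground.
    public static int Threshold(byte[] pixels)
    {
        var hist = new long[256];
        foreach (byte p in pixels) hist[p]++;

        long total = pixels.Length;
        double sumAll = 0;
        for (int i = 0; i < 256; i++) sumAll += (double)i * hist[i];

        double sumBg = 0, bestVar = -1;
        long wBg = 0;
        int best = 0;
        for (int t = 0; t < 256; t++)
        {
            wBg += hist[t];               // pixels with value <= t
            if (wBg == 0) continue;
            long wFg = total - wBg;       // pixels with value > t
            if (wFg == 0) break;
            sumBg += (double)t * hist[t];
            double meanBg = sumBg / wBg;
            double meanFg = (sumAll - sumBg) / wFg;
            // Between-class variance, up to a constant factor.
            double between = (double)wBg * wFg * (meanBg - meanFg) * (meanBg - meanFg);
            if (between > bestVar) { bestVar = between; best = t; }
        }
        return best;
    }

    // Binary threshold: value > t becomes maxValue, otherwise 0.
    public static byte[] Apply(byte[] pixels, int t, byte maxValue = 255)
    {
        var result = new byte[pixels.Length];
        for (int i = 0; i < pixels.Length; i++)
            result[i] = pixels[i] > t ? maxValue : (byte)0;
        return result;
    }
}
```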

3. On the resulting image is easy to find the outline of the document. We will look for the maximum external contour. OpenCVSharp has a great CvContourScanner class that can list all the contours of an image found. Using Linq, you can sort these outlines by area and take the first one, which will be the maximum one.

using (var storage = new CvMemStorage())

using (var scanner = new CvContourScanner(image, _storage, CvContour.SizeOf, ContourRetrieval.External, ContourChain.ApproxSimple))

{

var largestContour = scanner.OrderBy(contour => Math.Abs(contour.ContourArea())).FirstOrDefault();

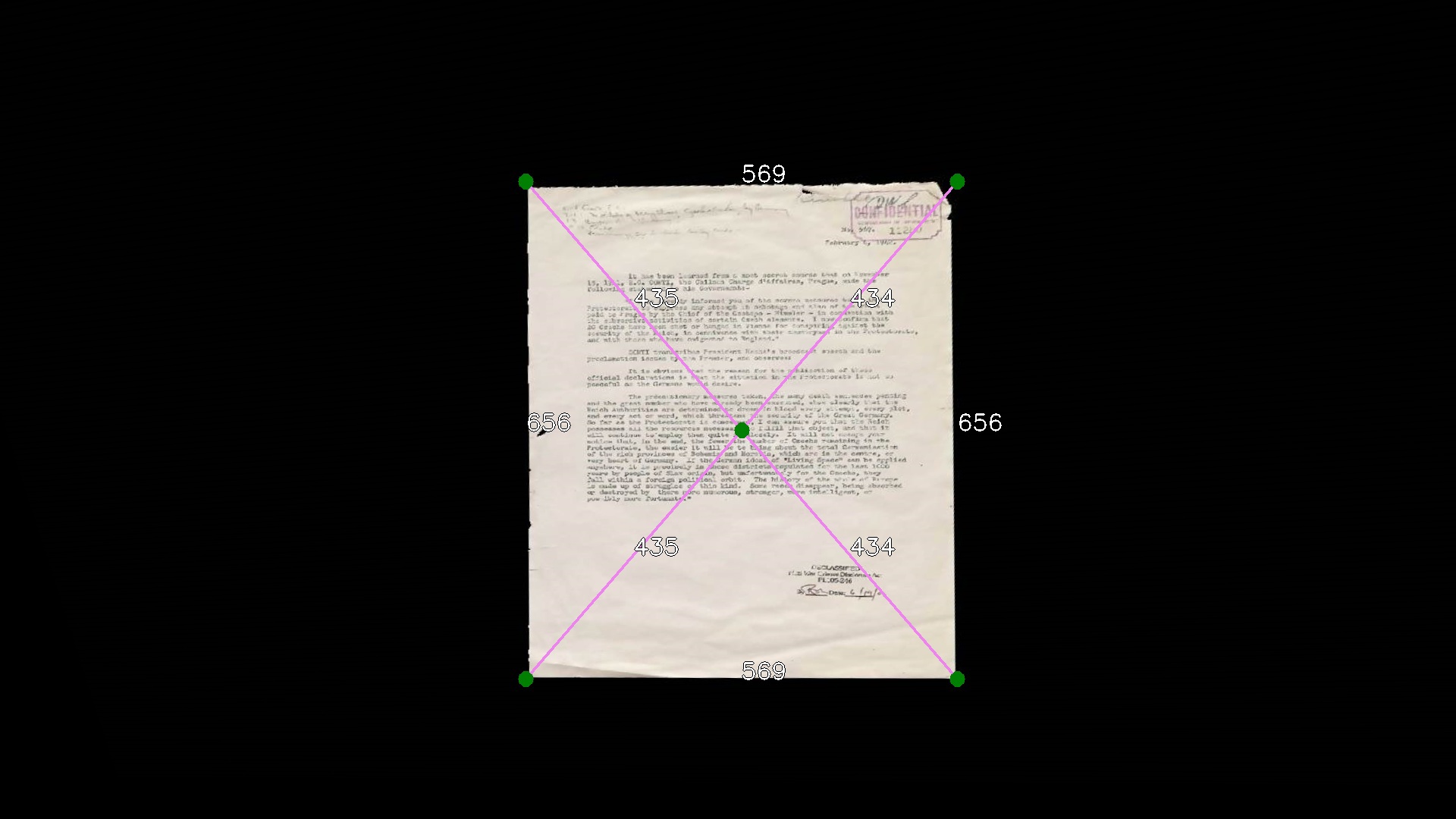

}If you draw the contour found, you get the following image:
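The `Math.Abs` in the sort key matters because a contour's signed area is positive or negative depending on the direction in which its points are traversed. A self-contained C# sketch of the same selection, using the shoelace formula for the area; the point representation and method names are hypothetical, not OpenCVSharp's.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class Contours
{
    // Signed polygon area (shoelace formula); the sign depends on traversal direction.
    public static double SignedArea(IReadOnlyList<(int X, int Y)> pts)
    {
        double twice = 0;
        for (int i = 0; i < pts.Count; i++)
        {
            var p = pts[i];
            var q = pts[(i + 1) % pts.Count]; // wrap around to close the polygon
            twice += (double)p.X * q.Y - (double)q.X * p.Y;
        }
        return twice / 2;
    }

    // Largest contour by absolute area, or null if the sequence is empty.
    public static IReadOnlyList<(int X, int Y)> Largest(IEnumerable<IReadOnlyList<(int X, int Y)>> contours) =>
        contours.OrderByDescending(c => Math.Abs(SignedArea(c))).FirstOrDefault();
}
```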

4. Cheers! We found the contour! However, that alone tells us little: we need the exact coordinates of all the corner points, that is, the points where the sides of the document intersect. To find them, it is natural to describe the sides of the contour with straight-line equations. How can OpenCV help here? Quite simply: it provides the Hough transform. Let's cast this method on the image obtained in the previous step:

```csharp
// Probabilistic Hough transform: rho = 1 px, theta = 1 degree, threshold = 70 votes,
// minimum segment length = 100 px, maximum gap between points on a segment = 1 px.
var lineSegments = imgSource.HoughLines2(storage, HoughLinesMethod.Probabilistic, 1, Math.PI / 180.0, 70, 100, 1).ToArray();
```
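To show what the accumulator votes on, here is a minimal C# sketch of the classic (non-probabilistic) Hough transform: every point votes for all lines ρ = x·cosθ + y·sinθ passing through it, with 1-pixel and 1-degree bins as in the call above, and the bin with the most votes is the strongest line. It is a simplified illustration, not OpenCV's probabilistic implementation, which additionally extracts finite segments; the names are hypothetical.

```csharp
using System;
using System.Collections.Generic;

public static class Hough
{
    // Strongest line through the points as (rho, theta in degrees, votes).
    public static (int Rho, int ThetaDeg, int Votes) StrongestLine(IEnumerable<(int X, int Y)> points, int maxRho)
    {
        // rho in [-maxRho, maxRho] with 1-pixel bins; theta in [0, 180) with 1-degree bins.
        var acc = new int[2 * maxRho + 1, 180];
        foreach (var (x, y) in points)
        {
            for (int t = 0; t < 180; t++)
            {
                double theta = t * Math.PI / 180.0;
                int rho = (int)Math.Round(x * Math.Cos(theta) + y * Math.Sin(theta));
                if (rho >= -maxRho && rho <= maxRho)
                    acc[rho + maxRho, t]++;
            }
        }

        var best = (Rho: 0, ThetaDeg: 0, Votes: 0);
        for (int r = 0; r < acc.GetLength(0); r++)
            for (int t = 0; t < 180; t++)
                if (acc[r, t] > best.Votes)
                    best = (r - maxRho, t, acc[r, t]);
        return best;
    }
}
```

For a vertical edge at x = 10, all points agree on θ = 0, ρ = 10, so that bin collects one vote per point.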

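Instead of the four lines one might expect, the transform returns a great many segments, on the order of 100 to 200. To make sense of them, we first split them into roughly vertical and roughly horizontal ones: a segment counts as vertical if it spans more rows than columns.

```csharp
// "Vertical": the vertical extent of the segment exceeds its horizontal extent.
var verticalSegments = lineSegments
    .Where(s => Math.Abs(s.P1.X - s.P2.X) < Math.Abs(s.P1.Y - s.P2.Y))
    .ToArray();

var horizontalSegments = lineSegments
    .Where(s => Math.Abs(s.P1.X - s.P2.X) >= Math.Abs(s.P1.Y - s.P2.Y))
    .ToArray();
```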
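The split rule is equivalent to asking whether a segment is steeper than 45 degrees; a segment at exactly 45 degrees falls into the horizontal group. A self-contained C# sketch of the same classification, with a hypothetical `Segment` struct standing in for OpenCVSharp's segment type (only the `P1`/`P2` endpoint names are taken from the code above):

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a line segment with endpoints P1 and P2.
public readonly struct Segment
{
    public readonly (double X, double Y) P1, P2;
    public Segment(double x1, double y1, double x2, double y2) { P1 = (x1, y1); P2 = (x2, y2); }
}

public static class SegmentSplit
{
    // Vertical if the segment spans more rows than columns.
    public static bool IsVertical(Segment s) =>
        Math.Abs(s.P1.X - s.P2.X) < Math.Abs(s.P1.Y - s.P2.Y);

    public static (Segment[] Vertical, Segment[] Horizontal) Split(IEnumerable<Segment> segments)
    {
        var all = segments.ToArray();
        return (all.Where(IsVertical).ToArray(), all.Where(s => !IsVertical(s)).ToArray());
    }
}
```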

Next, we intersect every horizontal segment with every vertical one and keep only the intersection points that fall inside the image:

```csharp
var corners = horizontalSegments
    .SelectMany(sh => verticalSegments
        .Select(sv => sv.LineIntersection(sh))
        .Where(v => v != null)
        .Select(v => v.Value))
    // exclude points that lie outside the image area
    .Where(c => new CvRect(0, 0, imgSource.Width, imgSource.Height).Contains(c))
    .ToArray();
```
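A corner generally has to be found as the intersection of the infinite lines through two segments rather than of the segments themselves, because detected sides may stop short of the actual corner. The following C# sketch computes such an intersection with the cross-product (Cramer's rule) formula, returning null for parallel lines, together with an in-image bounds check. The names are hypothetical; we do not claim this mirrors how OpenCVSharp's `LineIntersection` is implemented.

```csharp
using System;

public static class Intersections
{
    // Intersection of the infinite lines through (a1, a2) and (b1, b2), or null if parallel.
    public static (double X, double Y)? LineIntersection(
        (double X, double Y) a1, (double X, double Y) a2,
        (double X, double Y) b1, (double X, double Y) b2)
    {
        double dax = a2.X - a1.X, day = a2.Y - a1.Y;
        double dbx = b2.X - b1.X, dby = b2.Y - b1.Y;
        double denom = dax * dby - day * dbx;      // cross product of the two directions
        if (Math.Abs(denom) < 1e-9) return null;   // parallel or coincident lines
        // Parameter t along line A at which it meets line B.
        double t = ((b1.X - a1.X) * dby - (b1.Y - a1.Y) * dbx) / denom;
        return (a1.X + t * dax, a1.Y + t * day);
    }

    // Keep only points inside a width x height image.
    public static bool InsideImage((double X, double Y) p, int width, int height) =>
        p.X >= 0 && p.Y >= 0 && p.X < width && p.Y < height;
}
```

In the test below, the vertical segment only spans y = 0..1, yet the lines still meet at (3, 5).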

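The contour can be approximated by a polygon with the Douglas–Peucker method (ApproxPolyMethod.DP); here the tolerance is 2% of the contour perimeter. In OpenCVSharp:

```csharp
// Douglas-Peucker approximation of a closed contour; tolerance = 2% of its perimeter.
contour = contour.ApproxPoly(CvContour.SizeOf, storage, ApproxPolyMethod.DP, contour.ArcLength() * 0.02, true);
```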
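The idea behind Douglas–Peucker: keep the endpoints, find the vertex farthest from the chord between them, and recurse on both halves if that distance exceeds the tolerance; otherwise drop everything in between. With a tolerance of 2% of the perimeter, a roughly rectangular contour ideally collapses to its four corners. A minimal recursive C# sketch for an open polyline (OpenCV additionally handles closed contours, which this sketch does not); the names are hypothetical.

```csharp
using System;
using System.Collections.Generic;

public static class DouglasPeucker
{
    // Distance from p to the infinite line through a and b (or to a, if a == b).
    static double Distance((double X, double Y) p, (double X, double Y) a, (double X, double Y) b)
    {
        double dx = b.X - a.X, dy = b.Y - a.Y;
        double len = Math.Sqrt(dx * dx + dy * dy);
        if (len == 0) return Math.Sqrt((p.X - a.X) * (p.X - a.X) + (p.Y - a.Y) * (p.Y - a.Y));
        return Math.Abs(dy * (p.X - a.X) - dx * (p.Y - a.Y)) / len;
    }

    // Simplify an open polyline, keeping vertices farther than epsilon from the chord.
    public static List<(double X, double Y)> Simplify(IReadOnlyList<(double X, double Y)> pts, double epsilon)
    {
        var result = new List<(double X, double Y)>();
        if (pts.Count == 0) return result;
        result.Add(pts[0]);
        Recurse(pts, 0, pts.Count - 1, epsilon, result);
        return result;
    }

    // Appends the kept vertices of (first, last], always ending with pts[last].
    static void Recurse(IReadOnlyList<(double X, double Y)> pts, int first, int last, double eps,
                        List<(double X, double Y)> output)
    {
        if (last <= first) return;
        int index = -1;
        double maxDist = 0;
        for (int i = first + 1; i < last; i++)
        {
            double d = Distance(pts[i], pts[first], pts[last]);
            if (d > maxDist) { maxDist = d; index = i; }
        }
        if (index != -1 && maxDist > eps)
        {
            Recurse(pts, first, index, eps, output);
            Recurse(pts, index, last, eps, output);
        }
        else
        {
            output.Add(pts[last]); // all intermediate vertices are within tolerance
        }
    }
}
```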


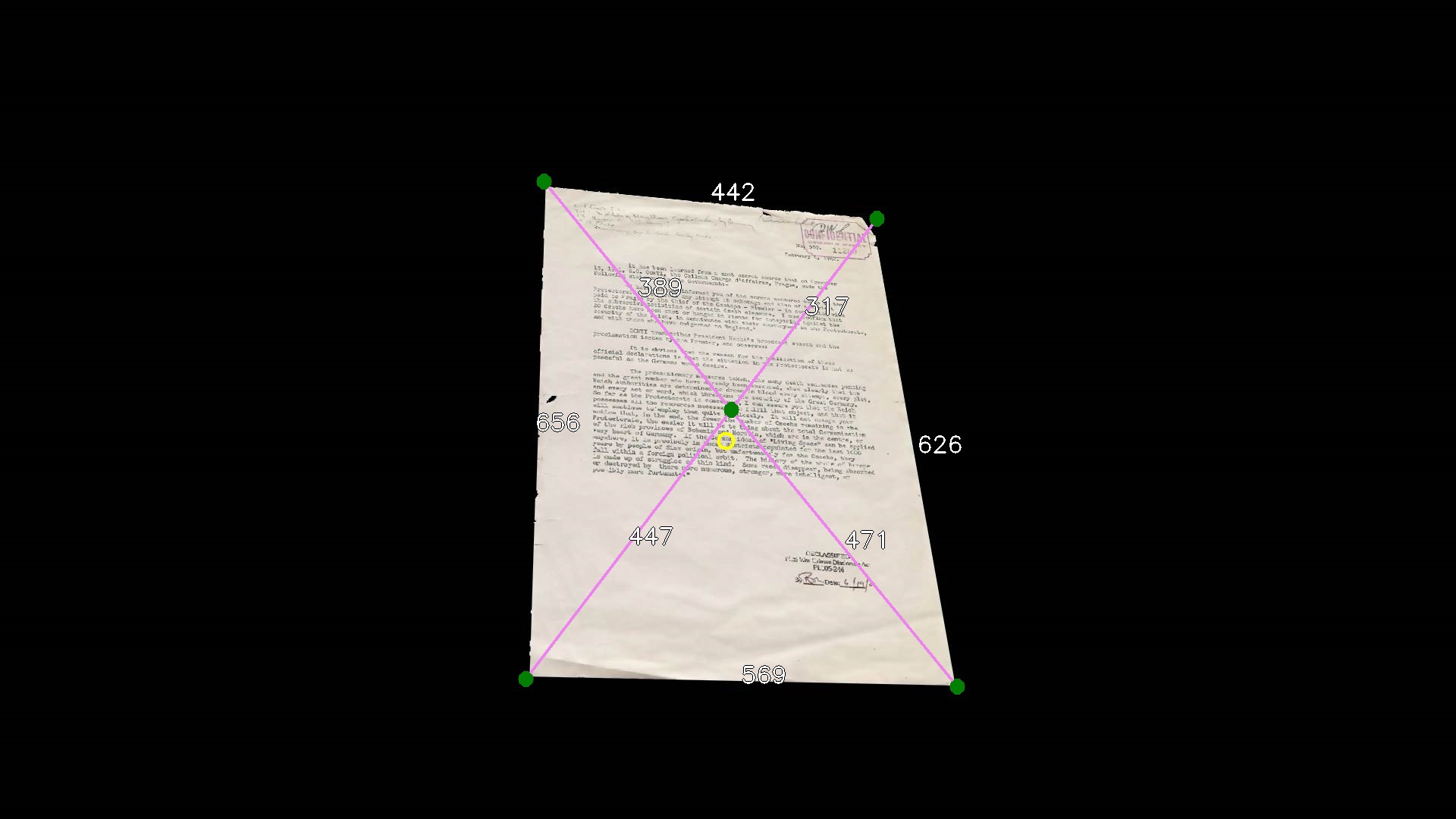

5. With the corner points found, the second subproblem remains: determining the proportions of the rectangle onto which the distorted contour should be mapped. The computation uses the following quantities:

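- deltaX, deltaY – …;

- targetWidth, targetHeight – the width and height of the target rectangle;

- topWidth, bottomWidth, leftHeight, rightHeight – the lengths of the top, bottom, left and right sides of the distorted contour.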
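The following is not the authors' method; it is a naive baseline, assumed here only for comparison, that takes the longer of each pair of opposite sides as the target dimensions. It reuses the names topWidth, bottomWidth, leftHeight, rightHeight, targetWidth and targetHeight in the sense assumed in the list above. Because it ignores foreshortening along the viewing direction, it tends to get the aspect ratio wrong for strongly tilted shots, which is exactly the problem a proper proportion estimate has to solve.

```csharp
using System;

public static class TargetSize
{
    static double Dist((double X, double Y) a, (double X, double Y) b) =>
        Math.Sqrt((a.X - b.X) * (a.X - b.X) + (a.Y - b.Y) * (a.Y - b.Y));

    // Corners in order: top-left, top-right, bottom-right, bottom-left.
    // Naive baseline (assumption, not the article's method): longer of each opposite pair.
    public static (int Width, int Height) Naive(
        (double X, double Y) tl, (double X, double Y) tr,
        (double X, double Y) br, (double X, double Y) bl)
    {
        double topWidth = Dist(tl, tr), bottomWidth = Dist(bl, br);
        double leftHeight = Dist(tl, bl), rightHeight = Dist(tr, br);
        int targetWidth = (int)Math.Round(Math.Max(topWidth, bottomWidth));
        int targetHeight = (int)Math.Round(Math.Max(leftHeight, rightHeight));
        return (targetWidth, targetHeight);
    }
}
```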


Source: https://habr.com/ru/post/223507/