Fight for traffic. How to get a site out from under a Google spam filter (Part Two)

Hello!

It is time to publish the second part of the article - the final, with a happy ending. For those who are not in the subject, in the first part I described in detail how to find out if your site got spam filters from Google and what to do with it, if, after all, filters were applied to your site and traffic from Google fell .

')

Today I will tell you what to do next if all the stages of the first part are successfully completed. Let me remind you that the article is written on the basis of personal experience of pulling out our site rusonyx.ru from under the Google filter.

Suppose that you have already completed all the steps described in the first part of the article:

Total you have:

What remains to be done:

I mentioned Disavow in the first article, but here it will be correct to repeat. Disavow is a Google tool that you can use to reject links. In fact, you are asking Google not to take into account some links when evaluating your site - to make them non-indexable (nofollow). This tool should be used carefully and only if attempts to contact webmasters or to remove substandard links on their own failed.

It is very important to correctly form the file. It needs to be created in txt format. But first you need to prepare the links that you want to reject.

Preparation of references to rejection

Short algorithm:

So the first thing to do is copy a google spreadsheet. We will continue to work only with copies, since we will have to make changes to the table, and the source will be needed to be sent to Google.

Open a copy of the table. At this stage, we are interested in the Disavow column.

In the process of working with links, you assigned each of them certain statuses. So, in the Disavow column, each of the links should have one of the following statuses:

We are interested in the first two.

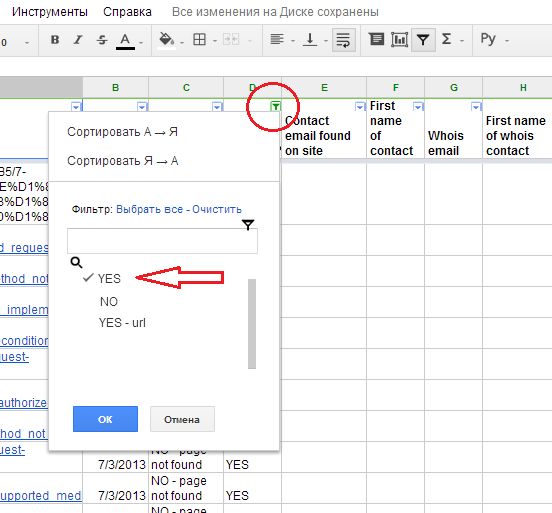

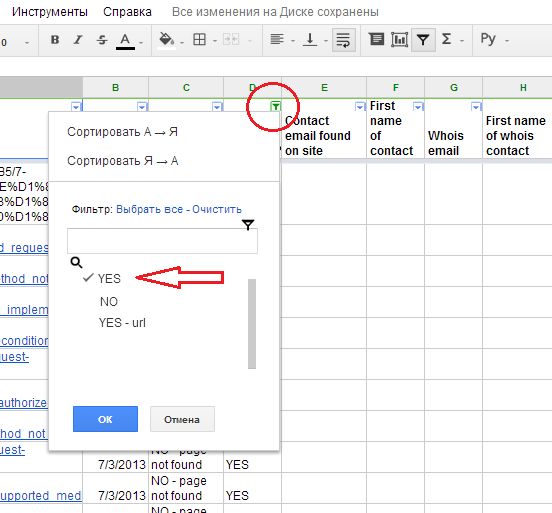

Let's start with the status of YES (YES). This status means that we want to reject the entire domain. Filter the Disavow column for this status.

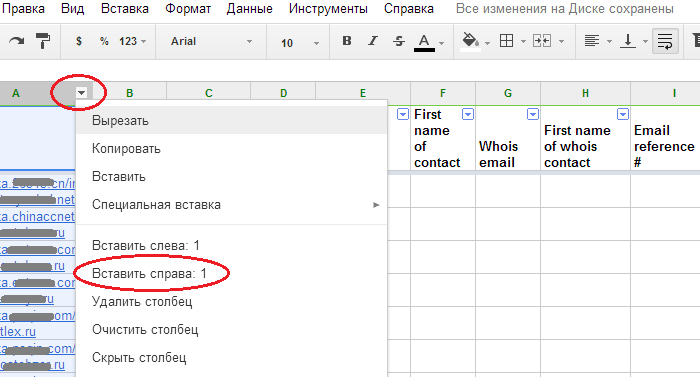

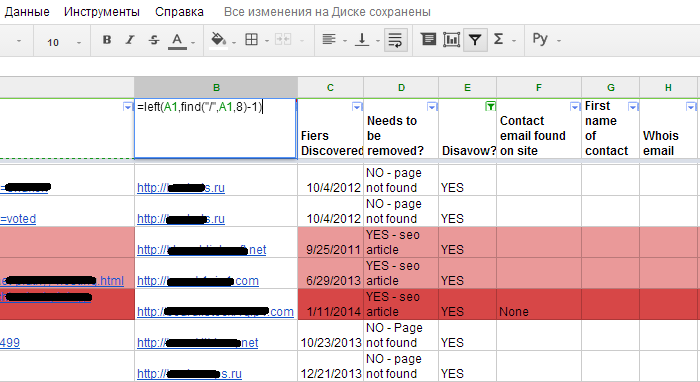

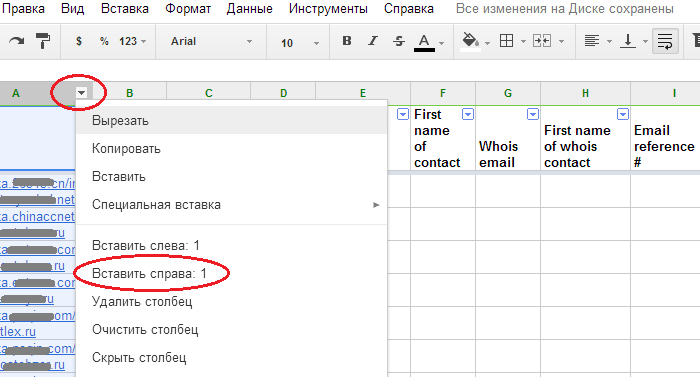

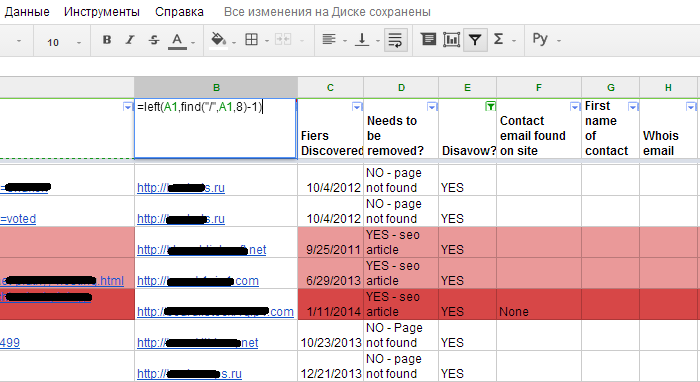

Now you need to remove all unnecessary. To do this, create a new column to the right of the “A” column and in its first cell (B1) enter the formula: = left (A1, find (“/”, A1.8) -1). Apply the formula to the entire column (select the column and CTRL-D).

This formula removes the extra “tail” of the link to the first-level domain. For example, it was domain.ru/12345/321/abc/, and it became - domain.ru.

Now you need to delete “http: //”, “https: //”, “http: // www.”, “Https: // www.” And add the value “domain:” to each domain.

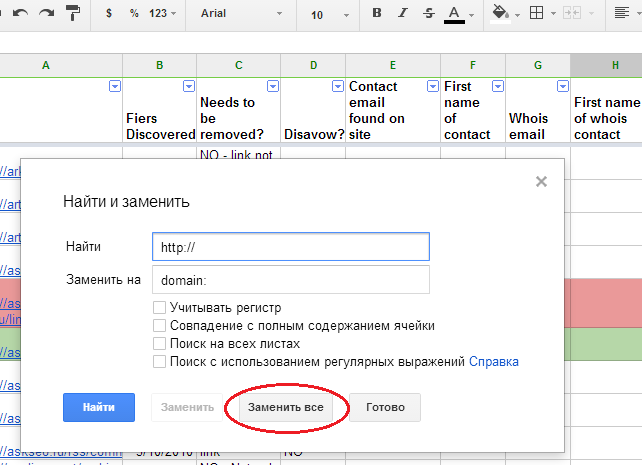

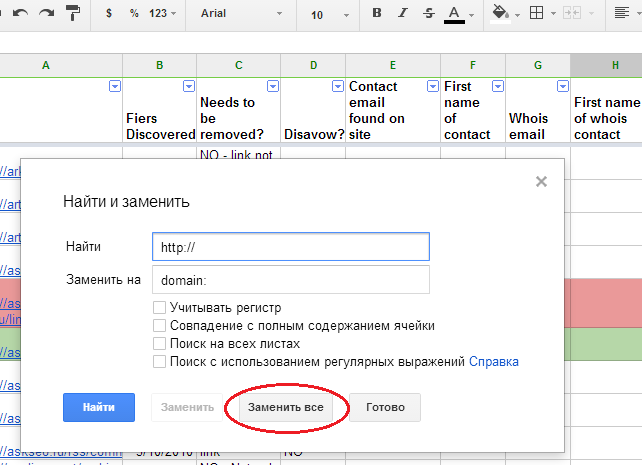

To do this, use the “Find and replace” function in the Google Tables Edit menu. Open "find and replace" and in the first field enter "http: //", and in the line "Replace with" specify the value of "domain:". Next - "Replace all."

Do the same with https: //, http: // www. And https: // www.

As a result, you should get a list of "clean" domains with the value of "domain:".

It turned out almost what we need. It remains to remove repetitions. To do this, sort the resulting list in alphabetical order and create a new column to the right of column “A”. In its first cell, enter the formula: = if (C1 = C2, ”duplicate”, ””). Apply the formula to the whole column (CTRL + D). As a result, if there are duplicate domains in the list, then the word duplicate will appear opposite each such repeat.

Apply the filter to column “B” and uncheck the word duplicate. Thus, you should get a list of unique domains.

Creating a Disavow File

Create a new .txt file and name it Disavow. Copy all the contents from the “C” column and paste into the txt file.

Status YES - url (YES - url)

We still have links that we have marked as YES - url for rejection (YES - url). This status means that we do not want to reject the entire domain, but only a separate link or links on this domain.

Everything is simple here. Create a new copy of the table, filter the Disavow column by the status YES - url (YES - url), copy the links and paste into the .txt file.

File to reject links created. It now remains to send it to Google.

You can send the file through the GoogleWebmasterTools tool. Select your site and click reject links. You’ll see a Google warning that the tool should be used with caution and only when there are good reasons for this. Click the reject links and upload the .txt file you created. If there are any errors in the file, then you will see a message about it. Correct them and download the file again.

The main work has been done and it is time to prepare a request to re-check the site.

A request for rescanning is a letter to Google describing the work done.

The letter should contain links to Google documents created during the work on the ban:

Where to get links

Open one of the above Google documents and in the upper right corner select “Access Settings”. In the pop-up window you will find a link to the document.

Very important! By default, the document is open for personal use only. Therefore, before you copy the link, change the access level to the following: “Any user who has a link can view the item.” This is necessary so that Google employees can view the files that you have prepared for them.

Request example

Below is my text of the request letter to re-verify the site, which I sent to Google. You can take it as a template.

Before sending, double check access to links to documents. Otherwise it will be a shame if Google sends a failure.

To send a file, follow the link . Select your site and click "Measures taken manually." Then copy the request, paste into the input field and send.

Now it remains to wait. The answer from Google will not come right away. Before you receive it, it may take several days, or even weeks. For example, when we sent a request for the first time, we received an answer only after 2 weeks, but the answer to the repeated request came earlier - a week later.

What to expect and how to deal with it?

It is clear that there are only 2 options. And you will either be removed from manual measures or not. If successful, you will receive such a letter:

And in case of failure - is:

If you received a letter of refusal, then first of all, check the recommendations indicated in the letter. The reason for failure may be as follows:

In my case, the letter indicated the first reason. As a recommendation, Google employees provided 2 examples of URL links with poor quality links leading to our site. After checking the links, it turned out that this is really spam, and I didn’t delete them because at the time of working with the links these were not opened, but later they started working again.

What have I done. Created a copy of the main Google spreadsheet and revised all links with the status "NO - Page not found" (NO - Page not found). All links that still did not open, marked for deviation (column Disavow - status YES (YES)).

I reviewed all the opened URL-links, and if I found poor-quality ones, I acted according to the already run-in pattern: find a contact, contact the webmaster, etc. (see the first part ).

I also reviewed all the links in the comments to which, during the first analysis, indicated the status “Possible”.

Yes, and more. I really didn’t want to receive the second letter with refusal :) and therefore re-uploaded the links from the Webmastertools panel, as the links were not initially all downloaded, but only a part. Then I compared the resulting list of links with the main, worked list, deleted duplicate links and worked on those that remained. Then I added these links to the main table, created a new .txt file to reject the links, and sent it to Google. The next day, sent the request itself to re-check the site.

If you carefully read the text of the response from Google, then you will find a paragraph in which it says that the second request will be considered only a few weeks after receiving the refusal. This time you are given to further work with links. So, you are still punished and are sent back to serve the sentence in the form of correctional labor for an “unknown week” period.

Therefore, it is not necessary to send new requests for verification on the same day. If you did everything in 1-2 days, then it is better to postpone and send, for example, in a week.

Fortunately, our “multi-part” story in the fight for traffic ended with a happy ending. March 13, 2014 I received a letter of happiness from Google. From our site removed the measures taken by hand. Hooray! Ban Google, goodbye!

After a couple of days, rusonyx.ru returned to search results for the main key queries. So, for example, by the high-frequency query “vps” and “virtual server” we went to the first page.

The visibility of the site in search engines and revenues from organic issuance increased on average by 1.5 times.

And so now looks like statistics on search queries.

As our experience has shown, Google’s spam filter can be accessed without the use of active SEO-actions (as, for example, this happened to us). Despite the fact that we stopped working with the promotion agency and removed the SEO links long before the filter was applied, our website was good for it. However, for us everything ended well.

If you are overtaken by the same fate, I hope two of my articles (the first part here ) will help you figure out what's what and get out of the Google filter as soon as possible. I wish you all good luck and patience :).

I would be glad if you share your personal experience of going out from under the ban Google. What they were doing? What difficulties arose? How did it all end? Write in the comments.

Thanks to all!

It is time to publish the second and final part of the article, the one with a happy ending. For those new to the topic: in the first part I described in detail how to find out whether your site has been hit by Google's spam filters, and what to do if filters were in fact applied and your traffic from Google dropped.

Today I will explain what to do next, once all the steps from the first part are complete. As a reminder, the article is based on our own experience of getting our site rusonyx.ru out from under a Google filter.

Suppose that you have already completed all the steps described in the first part of the article:

- found the cause;

- worked through the links;

- identified the low-quality ones;

- contacted the webmasters of the sites where those links are located;

- documented every attempt to contact webmasters;

- assigned a status to each link in your table.

In total, you have:

- a Google spreadsheet with the list of links, each with an assigned status;

- a Google document with the source of the sent e-mails, confirming that letters were sent to webmasters;

- a Google document with screenshots of requests sent through feedback forms on the sites or through domain registrars.

What remains to be done:

- Prepare a file for rejecting links (Disavow);

- Send the Disavow file to Google (reject the links via the Disavow tool);

- Write a request to re-check the site (a reconsideration request);

- Send the request to Google.

Step One: Preparing the Disavow File

I mentioned Disavow in the first article, but it is worth repeating here. Disavow is a Google tool for rejecting links. In effect, you are asking Google to ignore certain links when evaluating your site, much as if they carried the nofollow attribute. Use this tool carefully, and only if your attempts to contact webmasters or to remove the low-quality links yourself have failed.

It is very important to build the file correctly. It must be a plain-text .txt file. But first you need to prepare the links that you want to reject.

Preparing the links for disavowal

Short algorithm:

- Create a copy of the Google spreadsheet with the links;

- Filter the Disavow column by the YES status;

- Strip everything unnecessary (http://, https://, www.);

- Add the "domain:" prefix to each entry;

- Create the Disavow.txt file and copy the prepared entries into it;

- Create another copy of the Google spreadsheet;

- Filter the Disavow column by the YES - url status;

- Copy the links and paste them into Disavow.txt;

- Upload Disavow.txt to Google's tool for rejecting links.

So, the first thing to do is copy the Google spreadsheet. From here on we work only with copies, because we will have to change the table, while the original will be needed to send to Google.

Open the copy of the table. At this stage, we are interested in the Disavow column.

While working through the links, you assigned each of them a status. So, in the Disavow column, each link should have one of the following statuses:

- YES

- YES - url

- NO

We are interested in the first two.

Let's start with the YES status. It means that we want to reject the entire domain. Filter the Disavow column by this status.

Now remove everything unnecessary. Create a new column to the right of column "A" and in its first cell (B1) enter the formula: =LEFT(A1, FIND("/", A1, 9) - 1). Apply the formula to the entire column (select the column and press Ctrl+D). The search for "/" starts at position 9 so that it skips the slashes in both "http://" and "https://"; starting at position 8 would stop at the second slash of "https://".

This formula cuts off the link's "tail", leaving only the scheme and the host name. For example, http://domain.ru/12345/321/abc/ becomes http://domain.ru.
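Outside the spreadsheet, the same trimming can be sketched in Python. This is only an illustration and not part of the original workflow: the helper name `strip_path` is hypothetical, and the sketch assumes every link in the table is an absolute http:// or https:// URL.

```python
from urllib.parse import urlsplit


def strip_path(url: str) -> str:
    """Return only the scheme and host, dropping path, query and fragment."""
    parts = urlsplit(url.strip())
    if parts.scheme not in ("http", "https") or not parts.netloc:
        # Assumption: anything else is a malformed row that needs a manual look.
        raise ValueError(f"not an absolute http(s) link: {url!r}")
    return f"{parts.scheme}://{parts.netloc}"


print(strip_path("http://domain.ru/12345/321/abc/"))  # http://domain.ru
```

Unlike the spreadsheet formula, this version also copes with links that have no path at all, such as http://domain.ru.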

Now delete "http://", "https://", "http://www." and "https://www." and add the "domain:" prefix to each domain.

To do this, use "Find and replace" in the Google Sheets Edit menu. Start with the longest prefixes: enter "http://www." in the "Find" field, enter "domain:" in the "Replace with" field, and click "Replace all".

Do the same with "https://www.", and only then with "http://" and "https://". If you replace "http://" first, addresses that begin with "www." turn into entries such as "domain:www.domain.ru" and keep their "www.".

As a result, you should get a list of "clean" domains with the "domain:" prefix.
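For readers who prefer a script to "Find and replace", the conversion of a link into a "domain:" entry can be sketched as follows. The function name `to_domain_entry` and the sample links are made up for illustration; the sketch assumes absolute http(s) links and, like the manual steps, drops a leading "www.".

```python
from urllib.parse import urlsplit


def to_domain_entry(url: str) -> str:
    """Turn a link into a 'domain:' line for the disavow file."""
    host = urlsplit(url.strip()).hostname  # lower-cased host, without port
    if not host:
        raise ValueError(f"cannot find a host in {url!r}")
    if host.startswith("www."):
        host = host[len("www."):]
    return f"domain:{host}"


# Hypothetical sample rows from the Disavow = YES filter.
links = [
    "http://www.domain.ru/12345/321/abc/",
    "https://example.com/page.html",
]
for link in links:
    print(to_domain_entry(link))
```

Because the host is taken from the parsed URL, the order in which prefixes are stripped no longer matters.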

That is almost what we need. It remains to remove duplicates. Sort the resulting list alphabetically and create a new column to the right of column "A" (the domain list shifts to column "C"). In its first cell (B1), enter the formula: =IF(C1=C2, "duplicate", ""). Apply the formula to the whole column (Ctrl+D). Every domain that is repeated on the next row is then marked with the word "duplicate".

Apply a filter to column "B" and uncheck the value "duplicate". You are left with a list of unique domains.
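The same de-duplication can be sketched in a couple of lines of Python. The function name `unique_in_order` is hypothetical; the sketch keeps the first occurrence of each entry and does not need the list to be sorted first.

```python
def unique_in_order(entries):
    """Drop repeated entries, keeping the first occurrence of each."""
    # dict keys are unique and preserve insertion order.
    return list(dict.fromkeys(entries))


entries = ["domain:a.ru", "domain:b.ru", "domain:a.ru"]
print(unique_in_order(entries))  # ['domain:a.ru', 'domain:b.ru']
```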

Creating a Disavow File

Create a new .txt file and name it Disavow. Copy the entire contents of column "C" and paste it into the .txt file.

The YES - url status

We still have the links marked YES - url. This status means that we want to reject not the entire domain, but only an individual link or links on that domain.

Everything is simple here. Create a new copy of the table, filter the Disavow column by the YES - url status, copy the links and paste them into the same .txt file.

The file for rejecting links is ready. It remains to send it to Google.
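As a rough sketch of what the finished file contains, the snippet below assembles the text from the two prepared lists. The function name `build_disavow_text` and the sample entries are invented for illustration; the "#" comment lines are optional and rely on the disavow format treating lines that start with "#" as comments.

```python
def build_disavow_text(domain_entries, url_entries):
    """Assemble the disavow file: whole domains first, then single pages."""
    lines = ["# Whole domains (status YES)"]
    lines += list(domain_entries)
    lines.append("# Individual pages (status YES - url)")
    lines += list(url_entries)
    # One entry per line, with a trailing newline at the end of the file.
    return "\n".join(lines) + "\n"


text = build_disavow_text(
    ["domain:domain.ru"],                  # hypothetical domain entry
    ["http://example.com/bad-page.html"],  # hypothetical single link
)
print(text)
```

To save the result, the text can be written with `pathlib.Path("Disavow.txt").write_text(text, encoding="utf-8")`.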

Step Two: Send the Disavow File to Google

You can send the file through Google Webmaster Tools. Select your site and click "Disavow links". You will see a warning from Google that the tool should be used with caution and only when there are good reasons for it. Click "Disavow links" again and upload the .txt file you created. If the file contains errors, you will see a message about them. Correct them and upload the file again.
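To catch obvious mistakes before uploading, the file can be checked locally first. The sketch below is not Google's validator; the rules it applies (each line is a comment starting with "#", a "domain:" entry without a path, or an absolute http(s) link) are my assumptions about the format, and the name `find_problems` is hypothetical.

```python
from urllib.parse import urlsplit


def find_problems(text: str):
    """Return (line_number, line) pairs that do not look like valid entries."""
    problems = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments are skipped
        if line.startswith("domain:"):
            host = line[len("domain:"):]
            # A domain entry should be a bare host: no scheme, path or spaces.
            ok = bool(host) and "/" not in host and " " not in host
        else:
            parts = urlsplit(line)
            ok = parts.scheme in ("http", "https") and bool(parts.netloc)
        if not ok:
            problems.append((number, raw))
    return problems


sample = (
    "# checked by hand\n"
    "domain:domain.ru\n"
    "http://example.com/bad.html\n"
    "domain:http://x.ru/\n"
    "www.example.com/page\n"
)
for number, line in find_problems(sample):
    print(f"line {number}: {line}")
```

In this sample, lines 4 and 5 are reported: the first still carries a scheme after "domain:", and the second has no scheme at all.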

Step Three: Preparing the reconsideration request

The main work is done, and it is time to prepare a request to re-check the site.

A reconsideration request is a letter to Google describing the work you have done.

The letter should contain links to the Google documents created while working on the ban:

- Link to the main Google spreadsheet;

- Link to the Google document "Screenshots of requests sent from contact forms";

- Link to the Google document "Source of the sent letters".

Where to get links

Open one of the Google documents listed above and select "Access settings" in the upper right corner. The pop-up window contains a link to the document.

Very important! By default, the document is accessible only to you. Therefore, before copying the link, change the access level to "Anyone with the link can view". This is necessary so that Google employees can open the files you have prepared for them.

Request example

Below is the text of the reconsideration request that I sent to Google. You can use it as a template.

Good day!

Thank you for taking the time to review our reconsideration request for the site _________. On (date) we received a notice that some of the links leading to our site __________ do not comply with the quality guidelines. We took note of your recommendations. Over several months, we studied the links and removed the low-quality ones that violate the guidelines.

Below are links to documents in Google Docs that confirm the work done.

Table with external links

https://docs.google.com/spreadsheet/ccc…

In red, we marked links that should be removed but whose webmasters we were unable to reach for one reason or another. In green, we marked links that were successfully removed or to which the nofollow attribute was applied.

What we have done to remove low-quality links

For all such links, we sent letters to the webmasters or site owners at an e-mail address that we could find on the website or in the whois database. In addition, we sent requests through the feedback form on the website or through the domain registrar's feedback form. We have carefully documented all our attempts to contact webmasters or owners.

Document with the source of the sent letters:

https://docs.google.com/document/d/1X...

In this document you will find the source of the letters that we sent to the webmasters and, in some cases, the original letters with their replies (in the table of external links, see column "I", "E-mail reference").

Screenshots document:

https://docs.google.com/document/d/1j...

In this document you will find screenshots of requests sent through feedback forms that we found on the websites or through domain registrars' feedback forms (in the table of external links, see column "K", "Contact form reference").

Links to disavow (Disavow)

We would also like to draw your attention to the fact that on (date) we submitted a Disavow.txt file in which we ask you to disregard every link and domain listed in it.

Thank you for taking the time to review our request.

We look forward to your response and sincerely hope for a positive decision regarding our site.

Respectfully,

Step Four: Submitting the Request

Before sending, double-check that the documents behind the links are accessible. It would be a shame to get a refusal from Google just because of that.

To send the request, follow the link. Select your site and click "Manual actions". Then copy the text of the request, paste it into the input field and send it.

What then?

Now all that remains is to wait. Google's answer will not come right away; it may take several days or even weeks. For example, when we sent the request for the first time, the answer came only after two weeks, while the answer to the second request came sooner, after one week.

What to expect and how to deal with it?

There are only two possible outcomes: the manual action against your site is either lifted or it is not. If successful, you will receive a letter like this:

And in case of refusal, like this:

If the answer is a refusal

If you received a refusal, first of all check the recommendations given in the letter. The reasons for refusal may be as follows:

- Not all low-quality links have been removed;

- You have not provided enough evidence that you did everything possible to contact webmasters and remove the "bad" links;

- Something is wrong with your Disavow.txt file.

In my case, the letter gave the first reason. As a recommendation, Google employees provided two example URLs of pages with low-quality links leading to our site. After checking them, it turned out that they really were spam. I had not dealt with them because they did not open at the time I was working through the links, but later they started working again.

Here is what I did. I created a copy of the main Google spreadsheet and rechecked all links with the status "NO - Page not found". All links that still did not open I marked for disavowal (Disavow column, status YES).
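Rechecking a long list of "Page not found" links by hand is tedious, so here is a rough sketch of how it could be automated. It is not what was done in the article: the function names are hypothetical, and treating any response with a status below 400 as "opens" is my assumption. A page that opens still has to be reviewed by eye.

```python
import http.client
import urllib.request


def link_opens(url: str, timeout: float = 10.0) -> bool:
    """Return True if the page answers with a non-error HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (OSError, ValueError, http.client.HTTPException):
        # URLError/HTTPError and timeouts are OSError subclasses;
        # ValueError covers malformed URLs.
        return False


def split_by_availability(urls, check=link_opens):
    """Separate links that open now from links that still do not."""
    alive, dead = [], []
    for url in urls:
        (alive if check(url) else dead).append(url)
    return alive, dead
```

Links in the second list are candidates for the YES status; links in the first list go back into manual review. The `check` parameter exists so that the network call can be replaced, for example in a dry run.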

I reviewed all the links that now opened, and where I found low-quality ones, I followed the established routine: find a contact, write to the webmaster, and so on (see the first part).

I also reviewed all the links whose comments, during the first analysis, carried the status "Possible".

One more thing. I really did not want to receive a second refusal :), so I downloaded the links from the Webmaster Tools panel again, because the first download had contained only part of them. Then I compared the new list with the main, already processed list, dropped the links that appeared in both, and worked through the ones that remained. After that I added these links to the main table, created a new .txt file for rejecting links, and sent it to Google. The next day I sent the reconsideration request itself.
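Comparing a fresh export with the already processed list is easy to script. The sketch below is only an illustration: the function name `new_links` is hypothetical, and it assumes links are compared as exact strings after trimming whitespace.

```python
def new_links(fresh_export, already_processed):
    """Return links from the fresh export that are not in the processed list."""
    seen = {link.strip() for link in already_processed}
    result = []
    for link in fresh_export:
        link = link.strip()
        if link and link not in seen:
            seen.add(link)  # also drops repeats inside the export itself
            result.append(link)
    return result


processed = ["http://a.example/1", "http://b.example/2"]
fresh = ["http://b.example/2", "http://c.example/3", "http://c.example/3 "]
print(new_links(fresh, processed))  # ['http://c.example/3']
```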

How soon to send a new request for rescan?

If you read Google's response carefully, you will find a paragraph saying that a second request will be considered only a few weeks after the refusal. This time is given to you for further work on the links. So you are still being punished and are sent back to serve your sentence, corrective labor for an unspecified number of weeks.

Therefore, there is no point in sending a new request on the same day. If you finished everything in 1-2 days, it is better to wait and send it, for example, a week later.

Happy ending

Fortunately, our "multi-part" story of the fight for traffic has a happy ending. On March 13, 2014, I received the long-awaited letter from Google: the manual action had been lifted from our site. Hooray! Goodbye, Google ban!

A couple of days later, rusonyx.ru returned to the search results for its main key queries. For example, for the high-frequency queries "vps" and "virtual server" we reached the first page.

The site's visibility in search engines and revenue from organic search grew by about 1.5 times on average.

And this is what the search query statistics look like now.

Conclusion

As our experience has shown, you can end up under Google's spam filter even without any active SEO work (which is exactly what happened to us). Although we had stopped working with the promotion agency and removed the SEO links long before the filter was applied, our site was still hit by it. Still, everything ended well for us.

If the same fate befalls you, I hope my two articles (the first part is here) will help you figure out what is what and get out from under the Google filter as quickly as possible. I wish you all good luck and patience :).

I would be glad if you shared your own experience of getting out from under a Google ban. What did you do? What difficulties did you run into? How did it all end? Write in the comments.

Thank you all!

Source: https://habr.com/ru/post/223455/
