Integration of VR tools in Unity3d

Time passes and everything changes, including games, or rather the approach to making them. There are now newfangled devices such as the Oculus Rift and Sony Morpheus virtual reality headsets, motion controllers such as the Razer Hydra and Sixense STEM, and many other tools that help you immerse yourself in virtual reality. This article walks through, with screenshots, the integration of the Oculus Rift and the Razer Hydra into Unity3d.

As the title suggests, we have:

- Oculus Rift

- Razer Hydra

- Unity3d 4.3.4f

Foreword

I'll start with the story of the Razer Hydra controller. It was released in 2011 at what can already be called a reasonable price, and it reported not only the tilt and deflection of each joystick, but also the coordinates of each of them in space. At the time, hardly any games were designed around the Hydra's capabilities, and many people forgot about it. Then the Oculus Rift was shown, which lets you plunge into a previously unexplored virtual reality. For a fuller experience you need at least to control the movement of your hands, and this is where the forgotten Razer Hydra comes to the rescue.


Preparation

I'll start with installing the drivers, and again with the Hydra. First, it is worth understanding that Razer has already forgotten about the device: the driver has not been updated for a long time and Razer does not provide an SDK. Sixense, the company that actually developed the technology, does. To get the latest driver, go to their forum, select the latest version of Motion Creator, then download and install it.

With the Oculus Rift it is easier: it does not need drivers, but you do need to download the latest version of the SDK. You can download it via the link, but you have to register first.

Integration

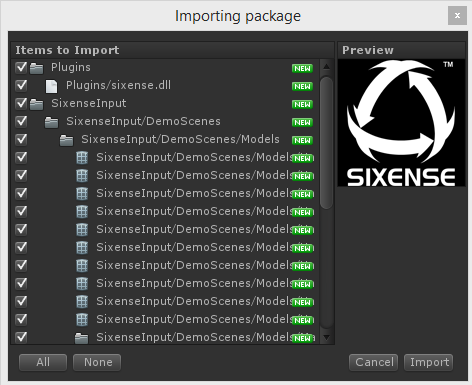

Now that all the software is installed, we need to make our devices work in Unity3d. By now-established tradition, I will start with the Razer Hydra. Launch Unity3d, open your project or create a new one, go to the Asset Store (hotkey Ctrl + 9), find SixenseUnityPlugin, then download and import it.

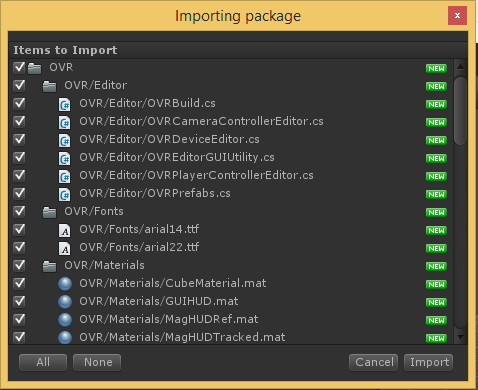

Now let's import the Oculus SDK. To do this, drag the file called OculusUnityIntegration.unitypackage into the project from the following folder (the braces stand for the parts of the path that depend on your installation):

{SDK folder}\ovr_unity_{ }_lib\OculusUnityIntegration

In the project we should have the following files:

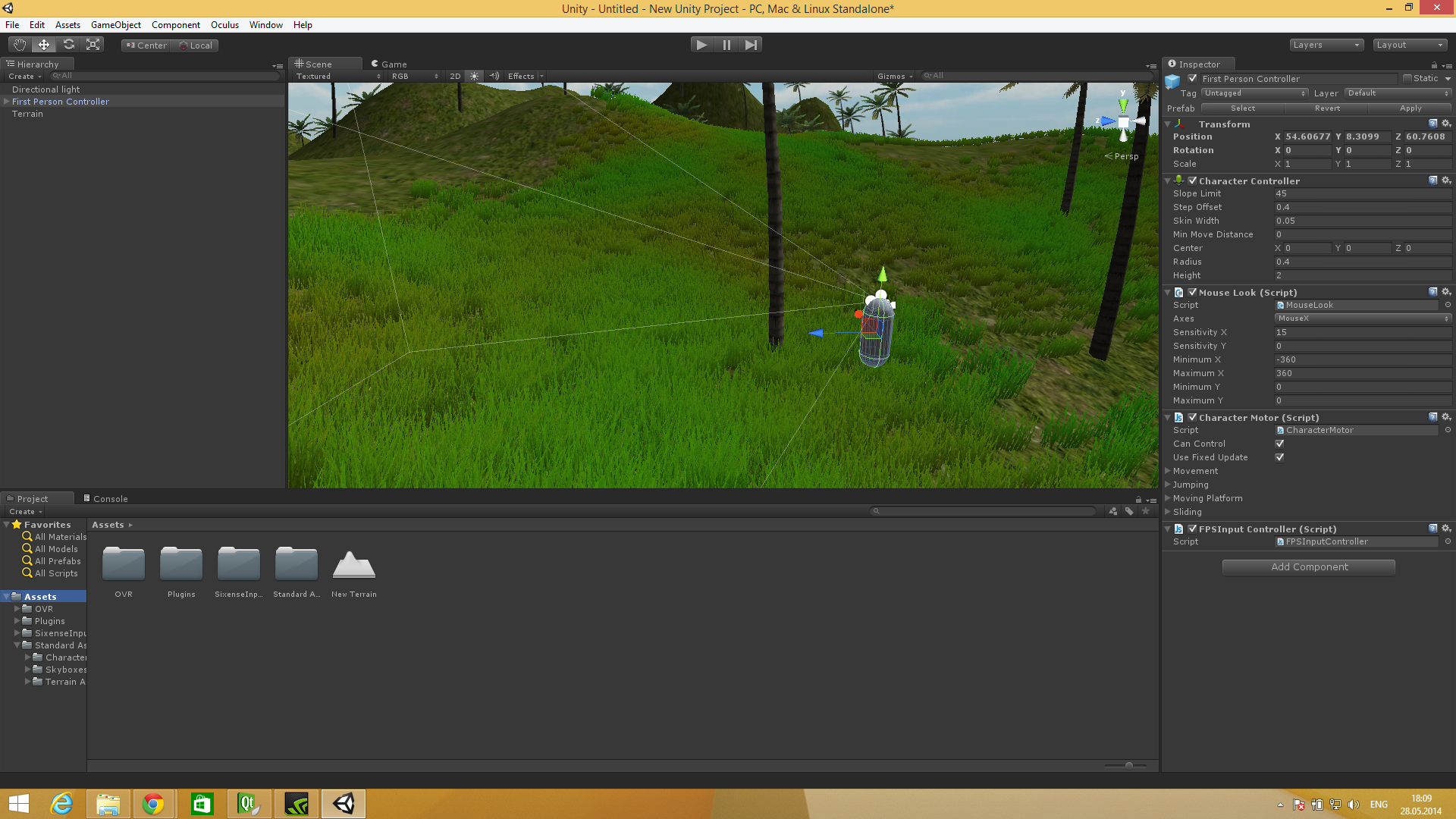

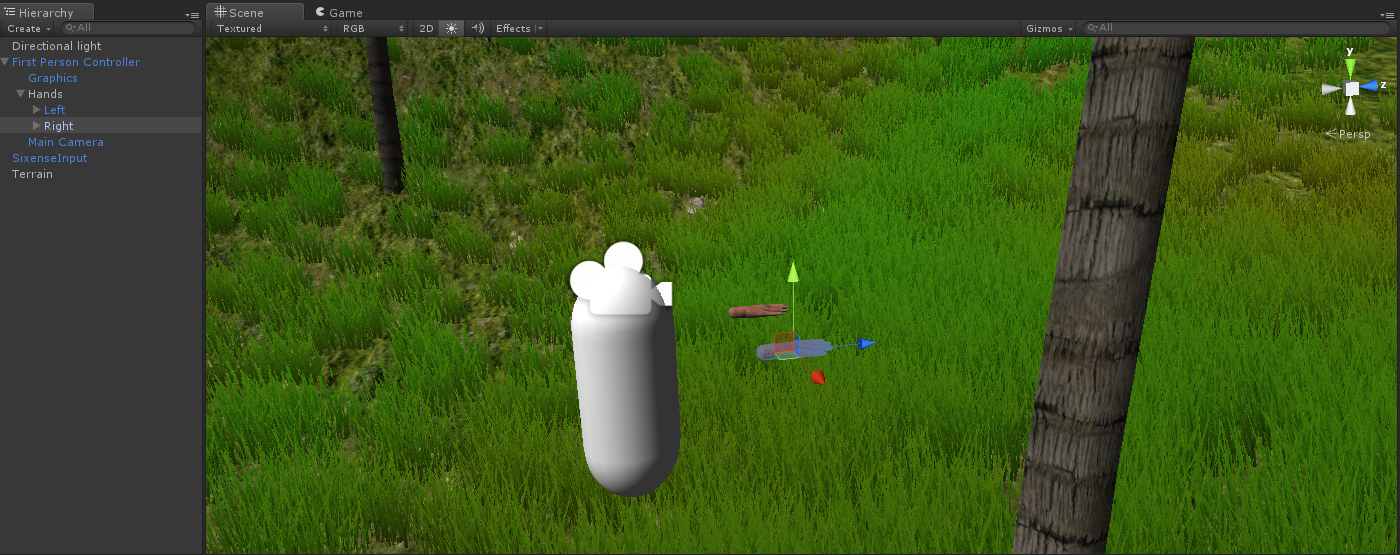

OK, that's done; now let's try it all in action. To do this, create a scene and, to begin with, place a Terrain and a First Person Controller in it. I sketched a small scene to try out the Hydra first:

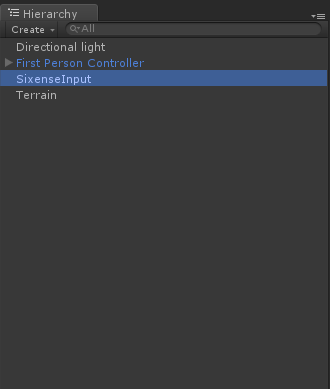

For the Hydra itself to work, you also need to add the SixenseInput object from the /SixenseInput folder of the project to the scene.
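Forgetting this object is an easy mistake, and the only symptom is that the hands never move. Below is a minimal sketch of a startup check. It assumes that the SixenseInput object carries a MonoBehaviour component of the same name; the class name SixenseInputCheck is made up for this example and is not part of the plugin.

```csharp
using UnityEngine;

// Hypothetical helper (not part of the Sixense plugin): warns at startup
// when the scene contains no SixenseInput object.
public class SixenseInputCheck : MonoBehaviour
{
    void Start()
    {
        // Assumption: the SixenseInput object has a component named SixenseInput.
        Object input = FindObjectOfType(typeof(SixenseInput));
        if (input == null)
        {
            Debug.LogWarning("No SixenseInput object in the scene: Hydra data will not be updated.");
        }
    }
}
```

The script can be attached to any object in the scene; it only writes to the Console and changes nothing else.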

Now we need to add hands to the scene. You can find them in SixenseInput/DemoScenes/Models; the object is called Hand. Since this is just a tutorial, I will ignore the fact that it is only a right hand =). So that the hands do not get lost, I suggest putting them into an empty object and attaching it to the FirstPersonController object in the hierarchy. We get something like this:

Now we need a script to handle the values coming from the Hydra. Create a C# script, name it HandsController and put this code into it:

HandsController.cs

    using UnityEngine;
    using System.Collections;

    public class HandsController : MonoBehaviour
    {
        // Scales the raw Hydra position down to scene units.
        float m_sensitivity = 0.003f;

        public GameObject leftHand;
        public GameObject rightHand;

        void Update()
        {
            // Controller 0 drives the left hand, controller 1 the right hand.
            leftHand.transform.localPosition = SixenseInput.Controllers[0].Position * m_sensitivity;
            rightHand.transform.localPosition = SixenseInput.Controllers[1].Position * m_sensitivity;

            leftHand.transform.localRotation = SixenseInput.Controllers[0].Rotation;
            rightHand.transform.localRotation = SixenseInput.Controllers[1].Rotation;
        }
    }

Attach this script to the MainCamera inside FirstPersonController, assign the hands to the corresponding fields and see what we have got. I am attaching a video that demonstrates the result.

Great, now it is the Oculus Rift's turn. There are no particular problems with it: we just need to carefully replace FirstPersonController with OVRPlayerController from OVR/Prefabs. Also, do not forget to add our HandsController.cs to OVRCameraController and to assign the hands again. Here is how it should look:
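One caveat about HandsController: indexing SixenseInput.Controllers with 0 and 1 does not by itself say which controller is held in which hand, and the script fails if a controller is missing. The sketch below is a more defensive variant. It assumes that the plugin version you imported exposes SixenseInput.GetController, the SixenseHands enum and Controller.Enabled, so check those names against your copy; the class name GuardedHandsController is made up for this example.

```csharp
using UnityEngine;

// Hypothetical variant of HandsController (not from the original article).
// Assumptions: SixenseInput.GetController, SixenseHands and Controller.Enabled
// exist in the imported plugin version; verify before relying on them.
public class GuardedHandsController : MonoBehaviour
{
    public float sensitivity = 0.003f; // same scale factor as in the article
    public GameObject leftHand;
    public GameObject rightHand;

    void Update()
    {
        Apply(SixenseInput.GetController(SixenseHands.LEFT), leftHand);
        Apply(SixenseInput.GetController(SixenseHands.RIGHT), rightHand);
    }

    // Moves one hand object; does nothing for a missing or disabled controller.
    void Apply(SixenseInput.Controller controller, GameObject hand)
    {
        if (controller == null || !controller.Enabled || hand == null)
        {
            return;
        }
        hand.transform.localPosition = controller.Position * sensitivity;
        hand.transform.localRotation = controller.Rotation;
    }
}
```

It is used the same way as HandsController: attach it to the camera object and assign both hands in the Inspector.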

After all this we can test our creation, but we cannot walk yet, so let's fix that. Create a MoveController.cs script and put this code into it:

MoveController.cs

    using UnityEngine;
    using System.Collections;

    public class MoveController : MonoBehaviour
    {
        void Update()
        {
            // Joystick axes of controller 1, scaled by a speed factor of 5.
            float X = SixenseInput.Controllers[1].JoystickX * 5;
            float Y = SixenseInput.Controllers[1].JoystickY * 5;

            // Make the movement independent of the frame rate.
            X *= Time.deltaTime;
            Y *= Time.deltaTime;

            // Move along the object's local X and Z axes.
            transform.Translate(X, 0, Y);
        }
    }

Assign this script to OVRPlayerController and try it out. I apologize for the low frame rate of the video:

Summary

As a result, we got a small playable scene that lets you judge whether these newfangled devices are worth using in your projects. I think that if you make games like this, you need to think through every little detail. I have nothing more to add; thank you for your attention.

Source: https://habr.com/ru/post/223295/
