Joint experiment of Yandex.Mail and Nginx teams: does SPDY really speed up the Internet?

We at Yandex.Mail, together with the Nginx team, ran a study to settle once and for all how much SPDY speeds up the Internet, and why.

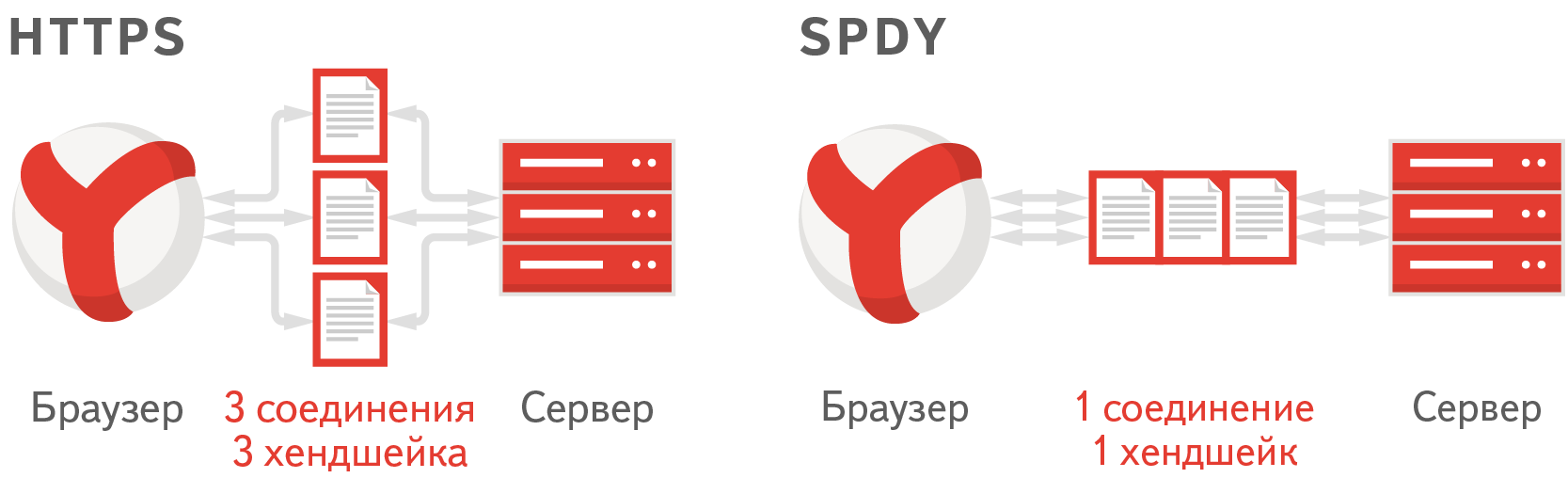

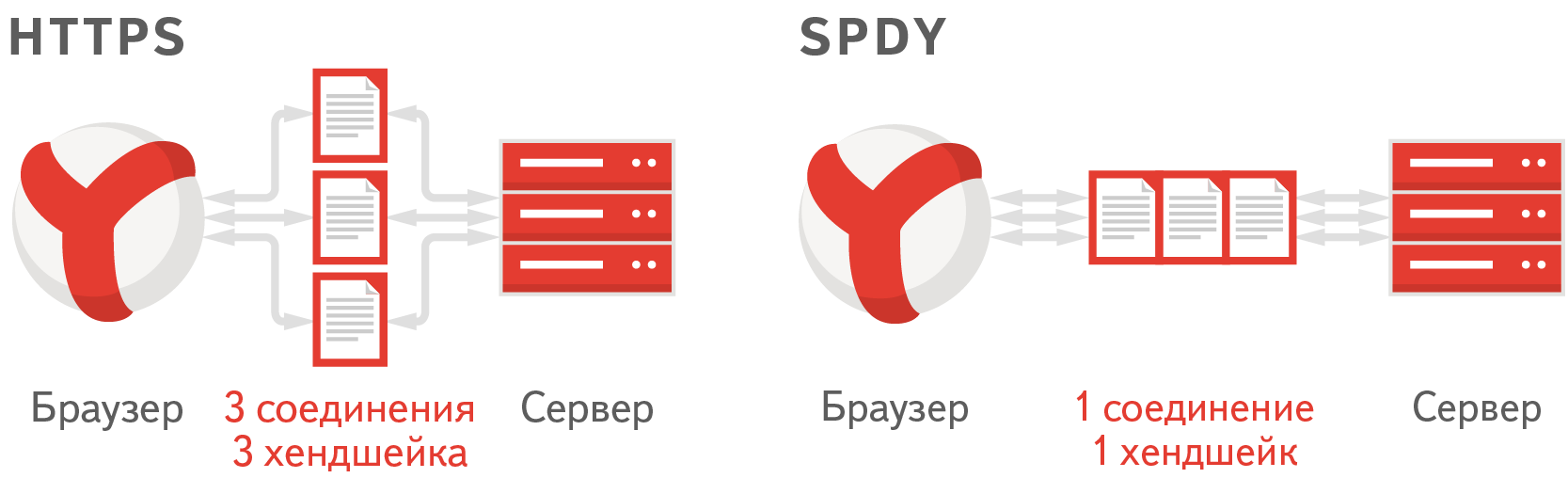

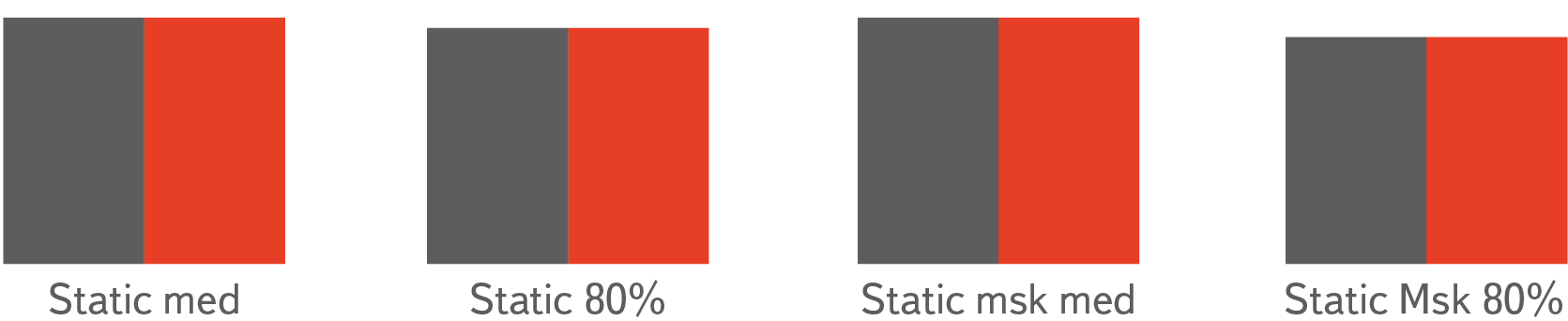

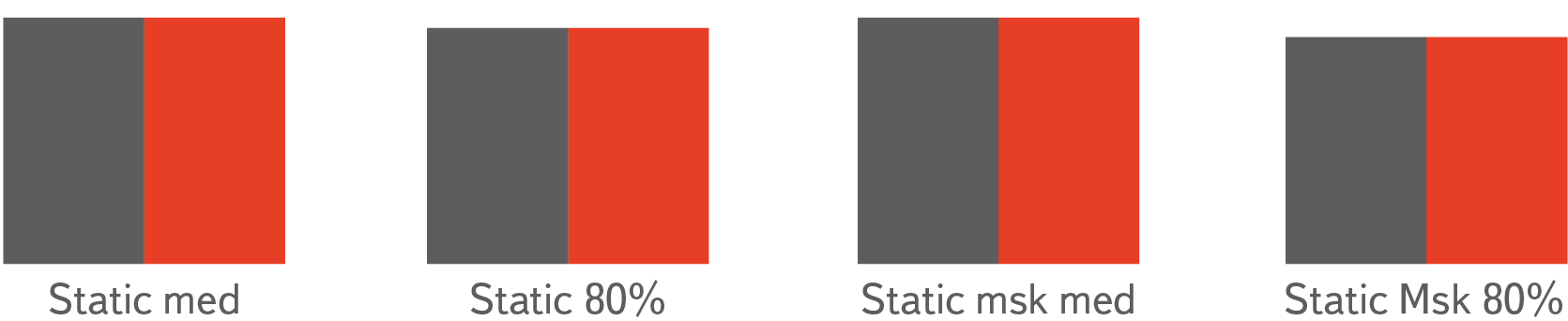

You have surely heard of SPDY. In 2011, several Google developers published a draft of a new protocol designed to replace plain HTTP. Its main differences were response multiplexing, header compression, and traffic prioritization. The first few versions were not entirely successful, but by 2012 the specification had settled, the first alternative (non-Google) implementations had appeared, browser support had reached 80%, and a stable version of nginx with SPDY support had been released.

We realized that the protocol was turning from a promising prospect into a mature, polished solution, and began the full implementation cycle. We started, of course, with testing. We would have liked to take the praise published in the blogosphere on faith, but that is not an option in projects with millions of users. We needed confirmation that SPDY really delivers a speedup.

There is a lot of interesting research around SPDY, including research by Google itself. The company that authored the protocol showed that in its case SPDY speeds up loading by 40%. Opera also studied the protocol. But these studies included neither the measurement methodology nor examples of the pages on which such impressive results were achieved.

SPDY: a look inside

The SPDY protocol is fairly complex and hard to describe in words, so let's try to show how it works visually.

Imagine a web page that includes several JavaScript and CSS files. The browser opens several connections to the server and starts downloading these files simultaneously. If HTTPS is enabled on the server (and Yandex.Mail, as you know, runs over HTTPS), each connection requires an SSL handshake: the browser and the server must exchange the keys they will use to encrypt the transmitted data. Both sides spend time and CPU resources on this.

SPDY is designed to cut this time: only one connection to the server is needed, so only one handshake is needed. To download static files, the browser establishes a connection and immediately, without waiting for responses, sends requests for all the resources it needs.

The server then begins sending these files one after another.

HTTPS

| Connect + TLS handshake | Connect + TLS handshake | Connect + TLS handshake |
|---|---|---|
| `> GET /bootstrap.css`<br>`> ...`<br>`< HTTP/1.1 200 OK`<br>`< ...`<br>`< body {margin: 0;}` | `> GET /jquery.min.js`<br>`> ...`<br>`< HTTP/1.1 200 OK`<br>`< ...`<br>`< (function (window, undefined) {`<br>`< ...` | `> GET /logo.png`<br>`> ...`<br>`< HTTP/1.1 200 OK`<br>`< ...`<br>`< [data]`<br>`< ...` |

HTTPS + SPDY

| Connect + TLS handshake |
|---|
| `> GET /bootstrap.css`<br>`> GET /jquery.js`<br>`> GET /logo.png`<br>`< [bootstrap.css content]`<br>`< ...`<br>`< [jquery.js content]`<br>`< ...`<br>`< [logo.png content]` |
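The difference between the two diagrams can be reduced to a count of TLS handshakes. The Python sketch below is only an illustration of that counting argument: the function name is hypothetical, and the limit of 6 parallel connections per host is an assumed typical browser default, not a figure from our measurements.

```python
def tls_handshakes(num_files: int, max_parallel_connections: int, multiplexed: bool) -> int:
    """Count the TLS handshakes needed to fetch num_files static files from one host."""
    if num_files <= 0:
        return 0
    if multiplexed:
        # SPDY: every request shares a single connection, so one handshake is enough.
        return 1
    # Plain HTTPS: the browser opens up to max_parallel_connections connections,
    # and each of them needs its own handshake.
    return min(num_files, max_parallel_connections)


# Three files, as in the tables above (6 connections per host is an assumption).
print(tls_handshakes(3, 6, multiplexed=False))
print(tls_handshakes(3, 6, multiplexed=True))
```

The sketch counts handshakes only. It says nothing about how long each one takes, which depends on the network.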

Speeding up the first load

Yandex.Mail uses a lot of static content: in minified form, about 500 megabytes of resources in total across all themes and all pages. These are CSS and JS files and images. Because so much static content is needed, the first load is not lightning fast.

We serve this static content to users from almost 200 servers located in Moscow and on a CDN across Russia and abroad. We use Nginx as the server because we believe it is the fastest and most reliable of the existing web servers for serving static files. Last year the Nginx team announced its implementation of SPDY version 2 as a module, and in early 2013 we began trying to serve static content with Nginx and this module.

Current optimizations

We have cared about static loading speed for a long time and have already done a lot to make users download as little static content as possible. First, all JS scripts and CSS are minified at build time. We also keep image sizes under control and try not to exceed the amount of static content the Mail actually needs.

Second, we use a technique known as static freezing. The idea is this: if a file's contents have not changed, users should not have to download it again. So we make sure its path stays the same from version to version. When the layout is built, this path is derived from a hash of the file's contents. We use Borschik to generate these paths.
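To make the freezing idea concrete, here is a minimal Python sketch of content-addressed paths. It is not how Borschik works internally: the SHA-1 hash, the `/freeze/` prefix, and the function name `frozen_path` are all assumptions chosen for illustration.

```python
import hashlib


def frozen_path(content: bytes, extension: str, prefix: str = "/freeze/") -> str:
    """Build a path that depends only on the file contents.

    The hash algorithm and the prefix are illustrative assumptions,
    not the scheme Borschik actually uses.
    """
    digest = hashlib.sha1(content).hexdigest()
    return f"{prefix}{digest}.{extension}"


css_v1 = b"body {margin: 0;}"
css_v2 = b"body {margin: 0; padding: 0;}"

# Unchanged contents give the same path, so the browser cache stays valid.
print(frozen_path(css_v1, "css") == frozen_path(css_v1, "css"))
# Changed contents give a new path, so the browser fetches the new file.
print(frozen_path(css_v1, "css") == frozen_path(css_v2, "css"))
```

Because the path changes only when the contents change, such files can be cached for a very long time without the risk of serving a stale version.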

How we measured load times

First, we decided to measure on our test machines how much faster we would get by simply enabling SPDY in the config file. We deployed the static content to a single physical machine, inaccessible to users, running Ubuntu 12.04. The machine had 12 cores and 20 GB of RAM. All static content was placed on a RAM disk.

Judging by these measurements, we sped up the Mail very noticeably, by roughly as much as Google did in its measurements. But in-house we could not fully emulate all the real-world conditions of loading the Mail: network problems, packet loss, TTL, and the like. Therefore, to find out whether we would slow loading down for anyone, we decided to run an experiment on our users.

We randomly selected 10% of all users and divided them into two groups: the first group was served static content over SPDY, and the second served as the control group.

For the first group, static content was loaded from the experiment.yandex.st domain, and for the second, from meth.yandex.st. The control group was needed because any Mail user may already have the static content cached. If users were moved to a different domain, they would have to download the static content again, while those outside the experiment would not, and the comparison would be invalid.
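A split like this can be sketched in a few lines of Python. The article does not say how users were actually selected, so everything here is an assumption: hashing a user ID with MD5 to get a stable bucket, the even/odd split, and the names `assign_group` and `STATIC_DOMAINS`. Only the two domain names come from the text above.

```python
import hashlib
from typing import Optional

EXPERIMENT_SHARE_PERCENT = 10  # share of users taking part, as in the text

# Domains from the article; the mapping structure itself is hypothetical.
STATIC_DOMAINS = {
    "spdy": "experiment.yandex.st",
    "control": "meth.yandex.st",
}


def assign_group(user_id: str) -> Optional[str]:
    """Return 'spdy', 'control', or None for users outside the experiment.

    Hashing the ID keeps the assignment stable between visits
    (an assumed approach, not the documented one).
    """
    bucket = int(hashlib.md5(user_id.encode("utf-8")).hexdigest(), 16) % 100
    if bucket >= EXPERIMENT_SHARE_PERCENT:
        return None
    return "spdy" if bucket % 2 == 0 else "control"


group = assign_group("user-12345")
print(group, STATIC_DOMAINS.get(group))
```

A deterministic assignment matters here for the same reason the control group does: a user who switched domains between visits would re-download the static content and distort the timings.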

Metrics

All modern browsers support the Navigation Timing API. This is a browser JavaScript API that exposes various data about load times. With it, we can determine to the millisecond when the user navigated to the Mail page and the static content began to load. Our client side knows the moment when all the necessary static files have loaded. In our scripts we subtract the first moment from the second to get the time taken to fully load all static content, and send this figure to the server.

Besides download speed, the number of failed static downloads matters to us. We check two things on the client:

1. Did the static file arrive at all? For example, the server may have replied that there is no such file. We report each such failed load to the server and plot their number.

2. Did the file arrive at the size it should have? This type of error deserves more detail. We checked how browsers behave when the connection is lost while a file is being transferred from the server to the client. It turned out that Firefox, IE, and the old Opera report status 200 and hand over all the partially received content. These browsers do not verify that they received exactly as much data as the Content-Length header indicates. Worse, IE and Opera also cache this truncated content, so as long as the cache entry lives, the file cannot be re-delivered correctly. Firefox, fortunately, re-fetches the data from the server on the next request. A bug describing this misbehavior is filed in the Mozilla tracker.
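The two checks can be expressed as a small classification function. This Python sketch only illustrates the logic; the real checks run in the browser, and how the client learns the expected size is not described here, so the `expected_length` parameter and the label names are assumptions.

```python
def classify_download(status: int, expected_length: int, body: bytes) -> str:
    """Classify a static download as 'ok', 'not_loaded', or 'truncated'.

    expected_length is assumed to be known to the client in advance,
    for example recorded at build time (an assumption for this sketch).
    """
    if status != 200:
        # Check 1: the file did not arrive at all (e.g. the server said 404).
        return "not_loaded"
    if len(body) != expected_length:
        # Check 2: status 200, but the connection dropped mid-transfer.
        return "truncated"
    return "ok"


print(classify_download(404, 17, b""))
print(classify_download(200, 17, b"body {mar"))
print(classify_download(200, 17, b"body {margin: 0;}"))
```

The second branch is the important one: a status of 200 alone does not prove the file is complete, which is exactly the browser behavior described above.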

Measurement results

From the collected figures we calculated the mean static load time, the median, and the 80th percentile. Unfortunately, the speedup in our measurements did not exceed the statistical error.

The average load time decreased by 0.6%. In other words, despite all of Google's assurances, SPDY by itself cannot simply speed up any site by those wild 40-50%.
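For readers who want to run the same kind of analysis on their own data, here is a minimal Python sketch of the metrics named above. The sample numbers are invented for illustration and are not our measurements. The nearest-rank percentile method and all function names are assumptions, since the exact method used in the study is not stated.

```python
import math
import statistics


def load_time_ms(navigation_start_ms: int, static_loaded_ms: int) -> int:
    """Time from navigation start until all static files are loaded."""
    return static_loaded_ms - navigation_start_ms


def percentile(values, p):
    """Nearest-rank percentile (one of several common definitions)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(load_times_ms):
    """Mean, median, and 80th percentile of a list of load times."""
    return {
        "mean": statistics.mean(load_times_ms),
        "median": statistics.median(load_times_ms),
        "p80": percentile(load_times_ms, 80),
    }


def relative_speedup_percent(control_mean, experiment_mean):
    """By how many percent the experiment group is faster than the control group."""
    return (control_mean - experiment_mean) / control_mean * 100


# Invented sample data, for illustration only.
sample = [100, 200, 300, 400, 500]
print(summarize(sample))
```

Comparing the median and the 80th percentile alongside the mean helps, because a few very slow connections can shift the mean without reflecting the typical user.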

Opera 12.16 and Content-Length

We tried enabling SPDY/2 on mail.yandex.ru. After switching it on, we saw a lot of errors in the logs and had to turn it off. Opera has an unpleasant bug that is unlikely to be fixed, since support for that version has ended: when sending POST requests, Opera sends an invalid Content-Length header. The problem is described here: trac.nginx.org/nginx/ticket/337

If you have many visitors on old Opera versions (12.*) and use POST requests, we do not recommend enabling SPDY.

UPD: The SPDY developer points out in the comments that starting with nginx 1.5.10, version 3 of the protocol is implemented, so old Opera versions will no longer use SPDY when talking to it, and the Opera bug becomes irrelevant.

Findings

After our research, we concluded that SPDY on its own will not speed anything up for us, and that optimizing static loading, reducing its size, and caching it well have a far more noticeable effect, by orders of magnitude, on interface speed.

However, SPDY brought an unexpected benefit. Because many clients stopped opening a connection for each file, the number of connections dropped noticeably, which means our processors are less loaded. So there is a saving after all.

Here is how enabling SPDY looked on the graph of open connections on our servers.

We found no difference in the number of static loading errors. This means that enabling SPDY in Nginx does not cause problems in browsers, even in those that do not support it.

We reverse-engineered the SPDY implementation in nginx and made sure it works exactly as intended. In testing, we also tried serving static content through Apache with the SPDY module enabled and took the same measurements on synthetic requests. There, too, the speedup turned out to be very small.

As a result, we enabled SPDY on all of yandex.st. It may not have given us a 100,500% speedup, but it saved some electricity thanks to the lower CPU load :)

Another reason for the lack of speedup is that Nginx itself is an incredibly fast server. SPDY was originally implemented as a module for Apache, which, as you know, needs a lot of resources to maintain each connection. So saving connections gives Apache a noticeable performance gain. In Nginx, about 2.5M of memory is enough to maintain 10,000 inactive HTTP keep-alive connections (source: nginx.org/ru).

Unfortunately, we could not find out how Google measured the SPDY speedup in its research. So our results should not be read as a claim that this protocol speeds nothing up. Our findings come from studying one special case: using SPDY to serve static content.

Source: https://habr.com/ru/post/222951/