How GZIP compression works

In the life of every man, a moment comes when traffic grows and a

In the life of every man, a moment comes when traffic grows and a Errors are possible in the text (I did the proofreading several times, but still suddenly), so I apologize in advance and ask you to inform me about all the problems through personal messages if some part of the translation seems to be incorrect.

Even in the modern world, with a high-speed Internet connection and unlimited data storages, data compression is still relevant, especially for mobile devices and countries with a slow Internet connection. This post describes a de facto lossless compression method for compressing textual data on websites: GZIP.

')

Gzip compression

GZIP provides lossless compression, in other words, the original data can be fully restored when unpacking. It is based on the DEFLATE algorithm, which uses a combination of the LZ77 algorithm and the Huffman algorithm.

LZ77 algorithm

The LZ77 algorithm replaces repeated data entries with “links”. Those. if in the available data some chain of elements occurs more than once, then all its subsequent entries are replaced by “links” to its first instance. The algorithm is perfectly reviewed by horror_x and is described here . Each such link has two values: offset and length.

Let's look at an example:

Original text: "ServerGrove, the PHP hosting company, provides hosting solutions for PHP projects" (81 bytes)

LZ77: “ServerGrove, the PHP hosting company, p <3.32> ides <9.26> solutions for <5.52> <3.35> jects” (73 bytes, assuming that each reference is 3 bytes)

As you can see, the words “hosting” and “PHP” are repeated, so the second time the substring is found, it will be replaced with a link. There are other coincidences, such as "er", but since this is insignificant (in this case, “er” is missing in other words) , the original text remains.

Huffman coding

Huffman coding is a variable length coding method that assigns shorter codes to more frequent “characters”. The problem with variable code length is usually that we need a way to find out when the code has ended and a new one started to decrypt it.

Huffman coding solves this problem by creating a prefix code where no codeword is the prefix of another. This can be better understood by example:

> Original text: ServerGrove

ASCII codification: "01010011 01100101 01110010 01110110 01100101 01110010 01000111 01110010 01101111 01110110 01100101" (88 bits)

ASCII is a fixed-length character encoding system, so the letter “e”, which is repeated three times and is also the most frequently encountered letter in English, is the same size as the letter “G”, which appears only once. Using this statistical information, Huffman can create the most optimized system.

Huffman: "1110 00 01 10 00 01 1111 01 110 10 00" (27 bits)

The Huffman method allows us to get shorter codes for “e”, “r” and “v”, while “S” and “G” are longer. The explanations of how to use the Huffman method are beyond the scope of this post, but if you are interested, I recommend you to read the excellent video on Computerphile (or an article on Harbe ) .

DEFLATE as an algorithm used in GZIP compression is a combination of both of these algorithms.

Is gzip the best compression method?

The answer is no. There are other methods that give higher compression rates, but there are several good reasons to use this.

Firstly, even though GZIP is not the best compression method, it provides a good compromise between speed and compression ratio. Compression and decompression of a GZIP occur quickly and the compression ratio is high.

Secondly, it is not easy to implement a new global data compression method that everyone can use. Browsers will need an update, which is now much easier due to auto-update. However, browsers are not the only problem. Chromium tried to add support for BZIP2, a better method based on the Burrows-Wheeler transformation, but had to be abandoned because Some intermediate proxy servers distorted data because could not recognize the bzip2 headers and tried to process gzip content. A bug report is available here .

Gzip + http

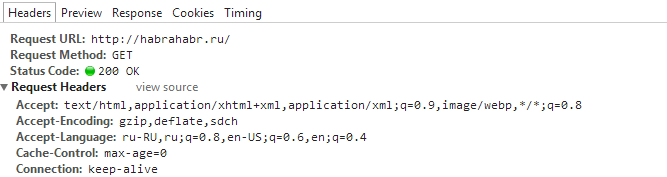

The process of obtaining compressed content between the client (browser) and the server is quite simple. If the browser has support for GZIP / DEFLATE, it lets the server understand this through the “Accept-Encoding” header. Then, the server can choose to send the content in compressed or original form.

Implementation

The DEFLATE specification provides some freedom for developers to implement an algorithm using various approaches, as long as the resulting stream is compatible with the specification.

GNU GZIP

The GNU implementation is the most common and was designed to be a replacement for the archiving utility, free from proprietary algorithms. To compress a file using the GNU GZIP utility:

$ gzip -c file.txt> file.txt.gz

There are 9 compression levels, from “1” (the fastest with the lowest compression ratio) to “9” (the slowest with the best compression ratio). By default, "6" is used. If you need maximum compression at the expense of using more memory and time, use the "-9" (or "-best") flag:

$ gzip -9 -c file.txt> file.txt.gz

7-zip

7-zip implements the DELFATE algorithm differently and usually archives with a higher compression ratio. To maximize file compression:

7z a -mx9 file.txt.gz file.txt

7-zip is also available for Windows and provides an implementation for other compression methods such as 7z, xz, bzip2, zip, and others.

Zopfli

Zopfli is ideal for one-time compression, for example in situations where a file is once compressed and reusable. It is 100 times slower, but compression is 5% better than others. Habrapost .

Enable GZIP

Apache

The mod_deflate module provides support for GZIP, so that the server’s response is compressed on the fly before it is transmitted to the client via the network. To enable text file compression, you need to add .htaccess lines:

AddOutputFilterByType DEFLATE text / plain

AddOutputFilterByType DEFLATE text / html

AddOutputFilterByType DEFLATE text / xml

AddOutputFilterByType DEFLATE text / css

AddOutputFilterByType DEFLATE application / xml

AddOutputFilterByType DEFLATE application / xhtml + xml

AddOutputFilterByType DEFLATE application / rss + xml

AddOutputFilterByType DEFLATE application / javascript

AddOutputFilterByType DEFLATE application / x-javascript

There are several known bugs in some versions of browsers, for this reason it is recommended * to also add:

BrowserMatch ^ Mozilla / 4 gzip-only-text / html* this decision is currently no longer relevant, like the above browsers, so this information can be taken for informational purposes.

BrowserMatch ^ Mozilla / 4 \ .0 [678] no-gzip

BrowserMatch \ bMSIE! No-gzip! Gzip-only-text / html

Header append Vary User-Agent

In addition, you can use pre-compressed files instead of compressing them every time. This is especially useful for files that do not change with every request, such as CSS and JavaScript, which can be compressed using slow algorithms. For this:

RewriteEngine On

AddEncoding gzip .gz

RewriteCond% {HTTP: Accept-encoding} gzip

RewriteCond% {REQUEST_FILENAME} .gz -f

RewriteRule ^ (. *) $ $ 1.gz [QSA, L]

This makes Apache understand that files with the .gz extension must be compressed (line 2), you need to check the availability of the browser accepting gzip (line 3), and if the compressed file exists (line 4), we add .gz for the requested file.

Nginx

The ngx_http_gzip_module module allows you to compress files using GZIP on the fly, while the ngx_http_gzip_static_module module allows you to send pre-compressed files with the “.gz” extension instead of the usual ones.

An example configuration looks like this:

gzip on;

gzip_min_length 1000;

gzip_types text / plain application / xml;

Gzip + php

Although it is usually not recommended to compress data using PHP, since it is rather slow, it can be done using the zlib module. For example, we use the maximum compression on the jQuery.min library:

$originalFile = __DIR__ . '/jquery-1.11.0.min.js'; $gzipFile = __DIR__ . '/jquery-1.11.0.min.js.gz'; $originalData = file_get_contents($originalFile); $gzipData = gzencode($originalData, 9); file_put_contents($gzipFile, $gzipData); var_dump(filesize($originalFile)); // int(96380) var_dump(filesize($gzipFile)); // int(33305) Instead of output (translator's note)

Despite the fact that itching to add the author’s own explanations of the algorithms, statistics and the results of comparison tests to the author’s article, the translation was carried out practically without interference from the translator. The article was translated with the permission of the author and the ServerGrove portal.

Source: https://habr.com/ru/post/221849/

All Articles