Fast web application - network trepanation

Psychology is an interesting and sometimes useful science. Numerous studies show that when a web page takes more than 300 ms to display, the user gets distracted from the site and starts thinking: “what the hell is this?”. So ACCELERATING a web project to psychologically imperceptible values can JUST keep users around longer. That is why businesses are willing to spend money on speed: $80M to cut latency by only 1 ms.

However, in order to speed up a modern web project, you will have to start korushki and thoroughly delve into this topic - therefore, a basic knowledge of network protocols is welcome. Knowing the principles, you can effortlessly speed up your web system by hundreds of milliseconds in just a few approaches. Well, ready to save hundreds of millions? Pour coffee.

This is a very hot topic - how to satisfy the site user - and usabilityists will most likely make me drink a Molotov cocktail, have a snack with a grenade without checks and have time to shout out before the explosion: “I carry heresy”. Therefore, I want to go on the other side. It is widely known that the delay in displaying a page is more than 0.3 seconds - it makes the user notice this and ... wake up from the process of communication with the site. And the delay in the display is more than a second - to think about the topic: “what am I doing here at all? What are they torturing me for and forcing me to wait? ”

')

Therefore, we will give the yuzabelists their “usability”, while we ourselves will tackle a practical task - how not to disturb the “dream” of the client and facilitate his work with the site as long as possible, without distractions to the “brake”.

Well, of course you are. Otherwise, you would hardly have started reading the post. If it is serious, then there is a problem - for the issue of speed is divided into 2 weakly connected both technologically and socially parts: the frontend and the backend. And they often forget about the third key component - the network.

To begin with, we recall that the first sites in the early nineties were ... a set of static pages. And HTML was simple, clear and concise: first text, then text and resources. Bacchanalia began with the dynamic generation of web pages and the proliferation of java, perl and at the present time - this is already a galaxy of technologies, including php.

In order to reduce the impact of this race on the viability of the network, HTTP / 1.0 is taken in after 1996, and HTTP / 1.1 in 1999 after 3 years. Finally, they finally agreed that you don’t need to drive TCP handshakes from ~ 2/3 light speeds ( fiber) there-vessels for each request, establishing a new connection, and it is more correct to open a TCP connection once and work through it.

Here, little has changed in the last 40 years. Well, maybe a “parody” on the relational theory was added under the name NoSQL - which gives both advantages and disadvantages. Although, as practice shows, there seems to be more business benefit from it (but also sleepless nights searching for the answer to the question: "who deprived the integrity data and under what pretext" - rather became more).

Everything is clear in terms of speed:

We will no longer talk about overclocking the “application” - many books and articles have been written about it and everything is rather linear and simple.

The main thing is that the application is transparent and you can measure the speed of the request through various components of the application. If it is not - then you can not read, it will not help.

How to achieve this? Paths are known:

If you have a lot of logs and you get confused in them - aggregate the data, see percentiles and distribution. Simple and straightforward. Found a request for more than 0.3 seconds - start debriefing and so on until the bitter end.

Moving out. Web server. Here, too, little has changed much, but it can only be a crutches - through the installation of a reverse proxy web server in front of the web server (fascgi server). Yes, it certainly helps:

But the question remains - why didn’t immediately begin to do apache “correctly” and sometimes you have to install web servers with a train.

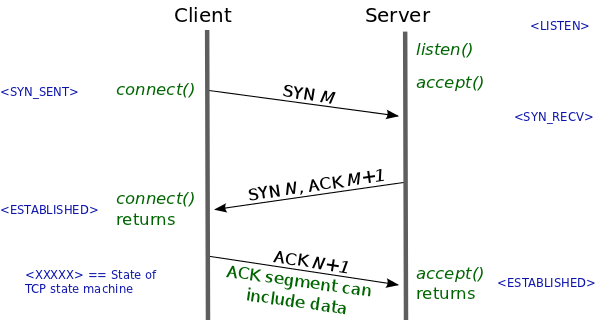

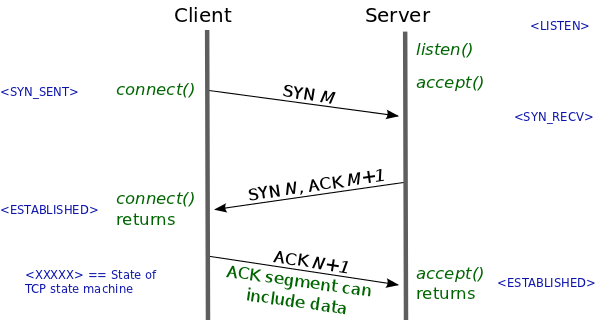

Establishing a TCP connection takes 1 RTT. Print the chart and hang it in front of you. The key to understanding the appearance of brakes is here.

This value correlates fairly closely with the location of your user relative to the web server (yes, there is the speed of light, there is the speed of light propagation in the material, there is routing) and it can take (especially considering the last mile provider) - tens and hundreds of milliseconds, which of course is a lot . And the trouble is, if this connection is established for each request, which was distributed in HTTP / 1.0.

For this, by and large, HTTP 1.1 was started and HTTP 2.0 (in the person of spdy ) is being developed in this direction. IETF with Google is currently trying to do everything to squeeze the most out of the current network architecture - without breaking it. And this can be done ... well, yes, as efficiently as possible using TCP connections, using their bandwidth as closely as possible through multiplexing , recovery from packet loss, etc.

Therefore, be sure to check the use of persistent connections on the web servers and in the application.

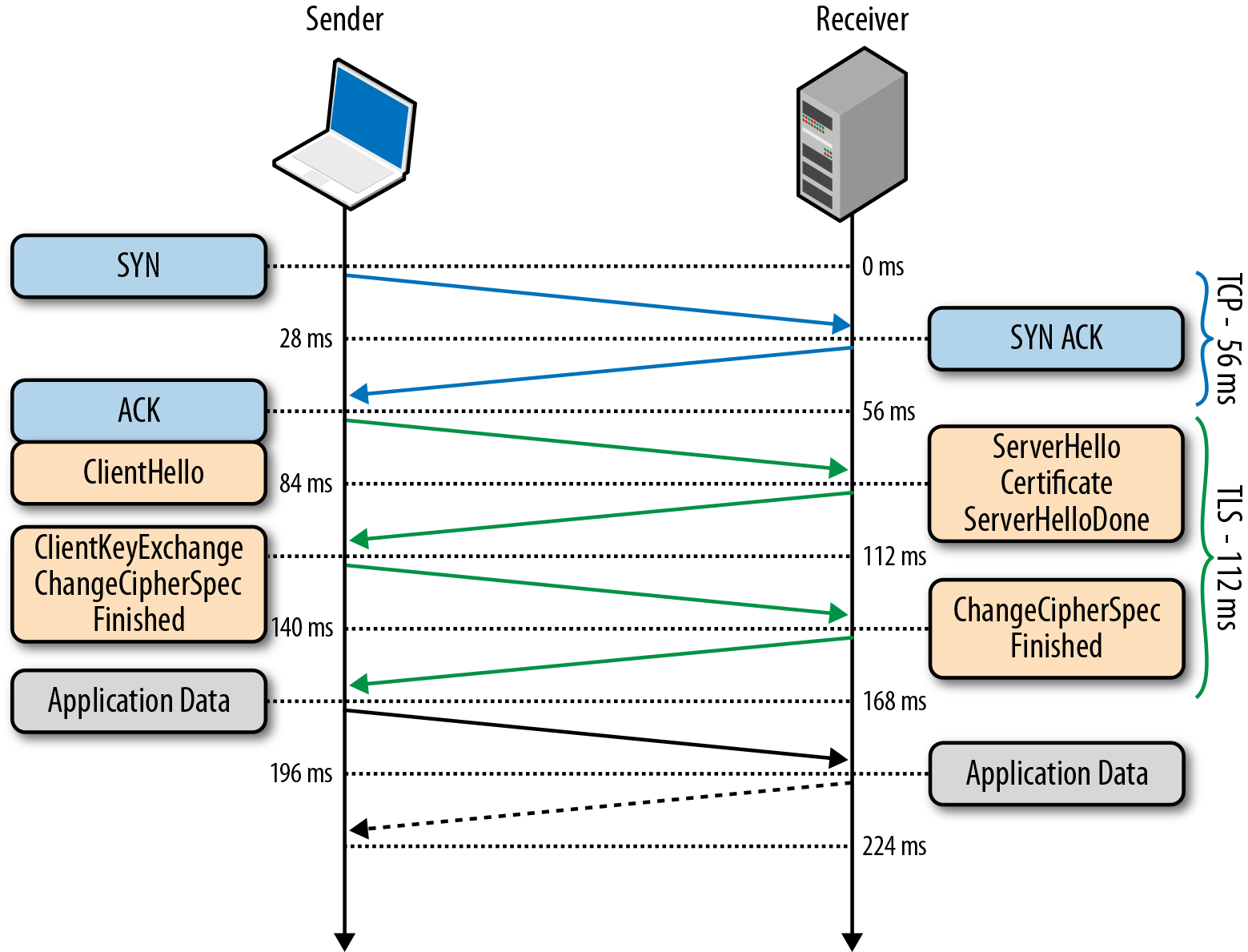

Without TLS , which originally originated in the depths of Netscape Communications as SSL, there is nowhere in the modern world. And although, they say, the Last “hole” in this protocol made many people turn gray much earlier than the term - there is practically no alternative.

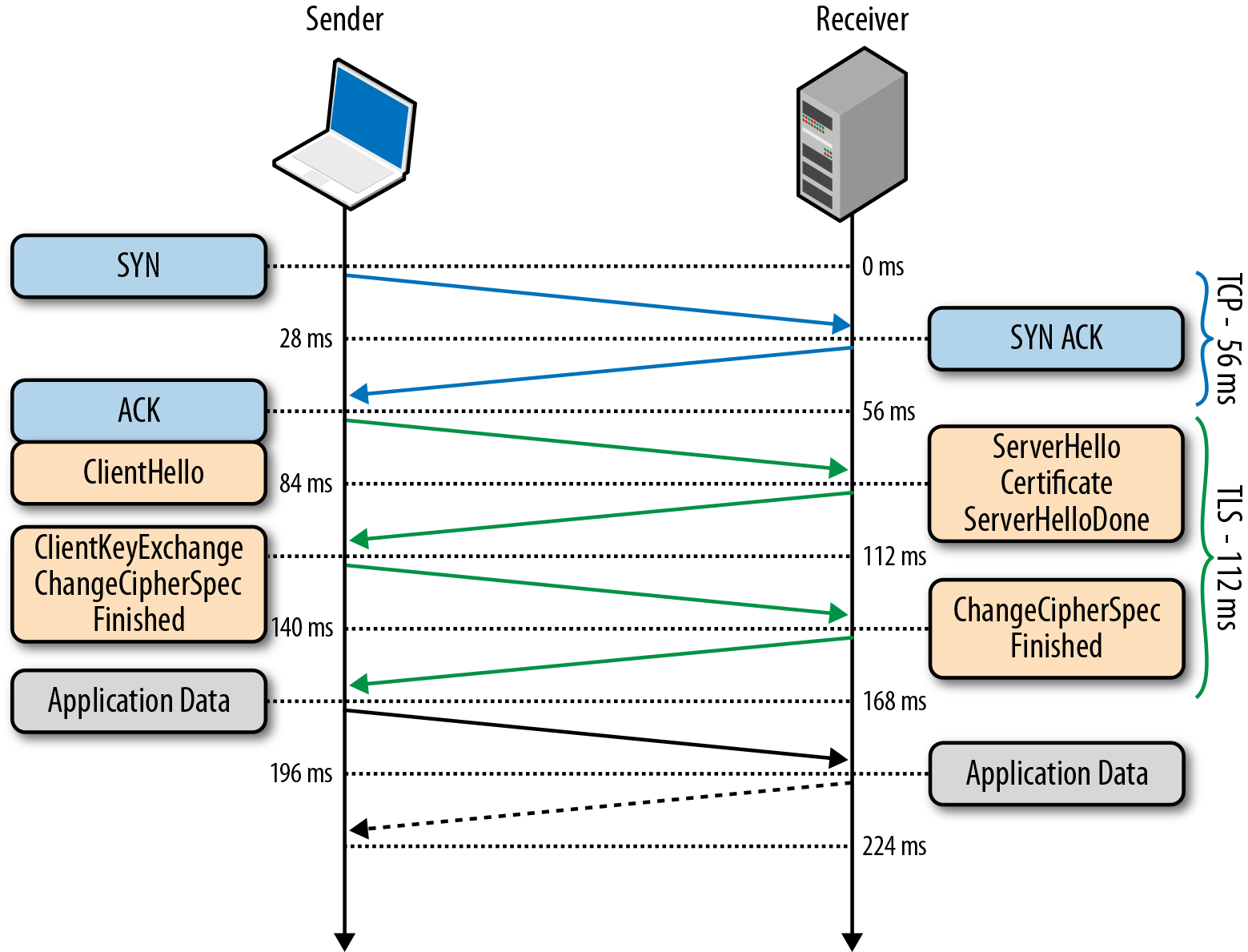

But for some reason, not everyone remembers that TLS worsens the “aftertaste” - adding 1-2 RTTs in addition to 1 RTT connection via TCP. In nginx, in general, the default TLS session cache is off - which adds an extra RTT.

Therefore, make sure that the TLS sessions are cached on a mandatory basis - and so we will save another 1 RTT (and one RTT still remains, unfortunately, as a security fee).

About the backend, probably all. It will be more difficult, but more interesting.

You can often hear - we have 50 Mbit / s, 100 Mbit / s, 4G will give even more ... But you can rarely see the understanding that bandwidth is not particularly important for a typical web application (unless you download files) - more important is lantency, t. to. many small requests are made for different connections and the TCP window just does not have time to swing.

And of course, the farther the client is from the web server, the longer. But it happens that it is impossible otherwise or difficult. That is why they came up with:

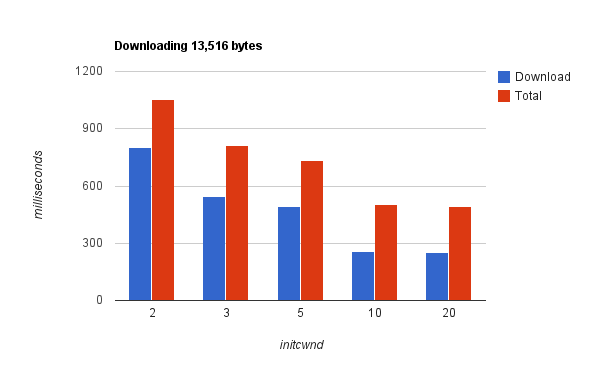

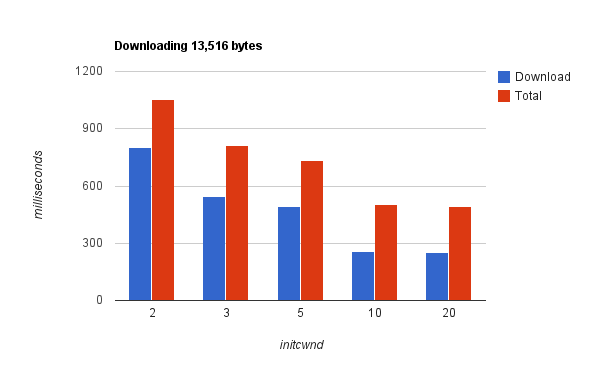

What else can you do ... increase TCP's initial congestion window - yes, it often helps, because A webpage is given in one set of packages without confirmation. Try it.

Turn on the browser debugger, look at the loading time of the web page and think about latency and how to reduce it.

Remember that the TCP connection window must be accelerated first. If the web page loads less than a second - the window may not have time to increase. The average network bandwidth in the world is slightly higher than 3 Mbps. Conclusion - transfer through one established connection as much as possible, "warming up" it.

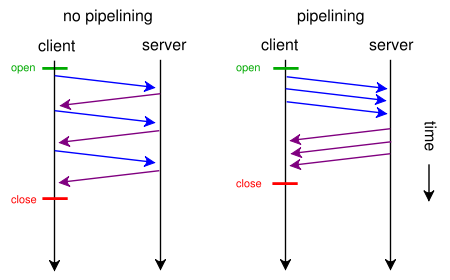

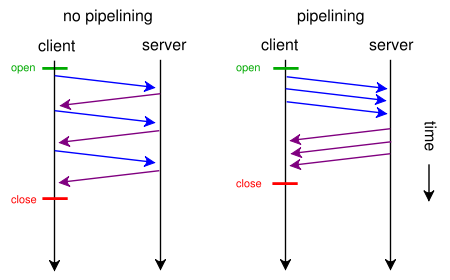

Here, of course, multiplexing of HTTP resources within a single TCP connection can help: the transfer of several resources alternately in both the request and the response. And even this technique was included in the standard, but they were under-written and, as a result, it did not take off (it was removed in the chrome not so long ago). Therefore, you can still try spdy, wait for HTTP 2.0, or use pipelining - but not from the browser, but from the application directly.

But what about the very popular domain sharding technique - when the browser / application overcomes the limit of> = 6 connections per domain, opening more>> = 6 or more connections to fictitious domains: img1.mysite.ru, img2.mysite.ru ...? It's fun, because from the point of view of HTTP / 1.1, this will most likely speed you up, but from the point of view of HTTP / 2.0 it is an anti-pattern, since Multiplexing HTTP traffic over a TCP connection can provide better bandwidth.

So for now - shardim domains and waiting for HTTP / 2.0 to not do this anymore. And of course - it is better to measure specifically for your web application and make an informed choice.

About known things like rendering speed of a web page and the size of images and JavaScript, the order of loading resources, etc. - writing is not interesting. Subject beaten and killed. If brief and inaccurate - cache the resources on the side of the web browser, but ... with your head. Caching 10MB js-file and parsing it inside the browser on each web page - we understand where it will lead. We turn on the browser debugger, pour coffee and by the end of the day - there are trends. Throw a plan and implement. Simple and transparent.

Much sharper pitfalls can be hidden behind the fairly new and rapidly developing network capabilities of a web browser. Let's talk about them:

Initially, the browser was perceived as a client application for displaying HTML markup. But every year it was transformed into a control center of a galaxy of technologies - as a result, the HTTP server and the web application behind it are now perceived only as an auxiliary component inside the browser. An interesting technological shift in emphasis.

Moreover, with the advent of WebRTC, a television studio built into the browser, and browser networking tools with the outside world, the issue of performance has smoothly shifted from the server infrastructure to the browser. If this internal kitchen at the client will slow down - no one will remember the php on the web server or join the database.

Let's disassemble this opaque monolith for parts.

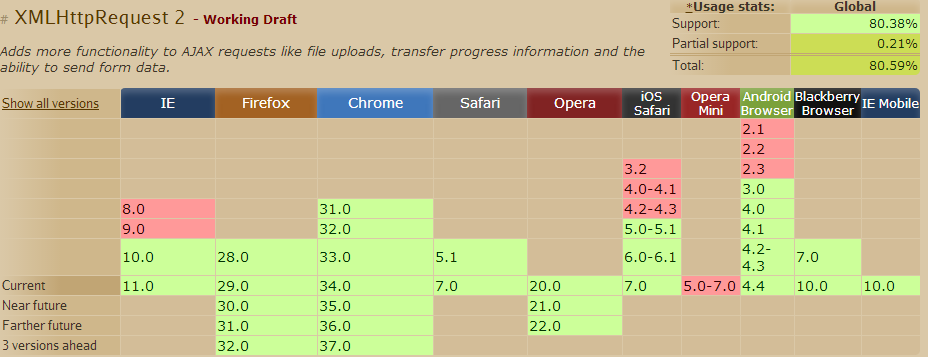

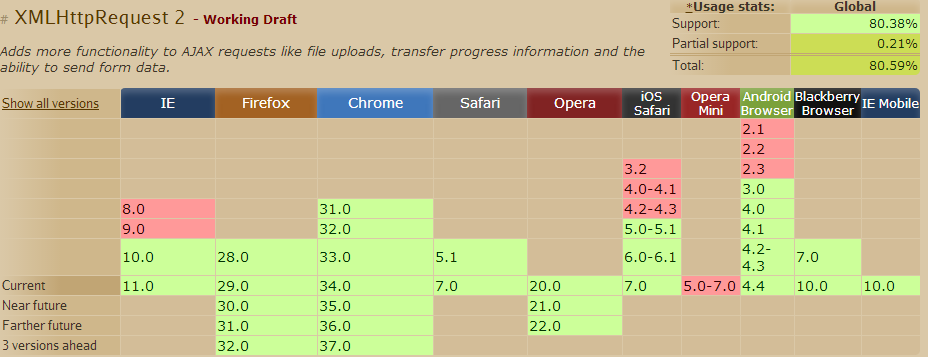

This is the well-known AJAX - the browser's ability to access external resources via HTTP. With the advent of CORS - the perfect "chaos" began. Now, to determine the cause of braking, you need to climb all the resources and watch logs everywhere.

Seriously, the technology undoubtedly exploded the capabilities of the browser, turning it into a powerful platform for dynamic information rendering. It makes no sense to write about it, the topic is known to many. However, I will describe the limitations:

Nevertheless, the technology is very popular and it is easy to make it transparent in terms of monitoring speed.

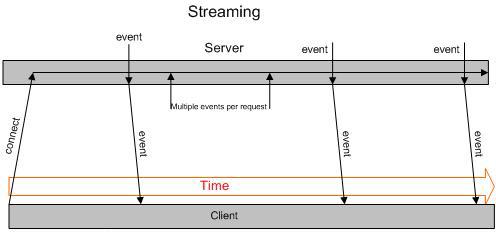

How to make a web chat? Yes, you need to somehow transfer from the server to the browser information about the changes. Directly via HTTP - can not, can not. Only: request and reply. It’s people who decided: make a request and wait for an answer, second, 30 seconds, a minute. If something has come, give back and break the connection.

Yes, a lot of antipatterns, crutches - but the technology is very widespread and always works. But, since You are responsible for the speed - know, the load on the servers with this approach is very high, and can be compared with the load from the main attendance of the web project. And if updates from the server to browsers are often distributed, then it can exceed the main load by several times!

What to do?

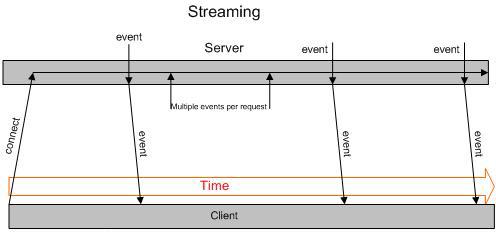

This opens a TCP connection to the web server, does not close, and the server writes different info to UTF-8 to it. True binary data cannot be transmitted optimally without Base64 (+ 33% increase in size), but as a control channel in one direction is an excellent solution. True in IE - not supported (see the paragraph above, which works everywhere).

The advantages of technology are that it:

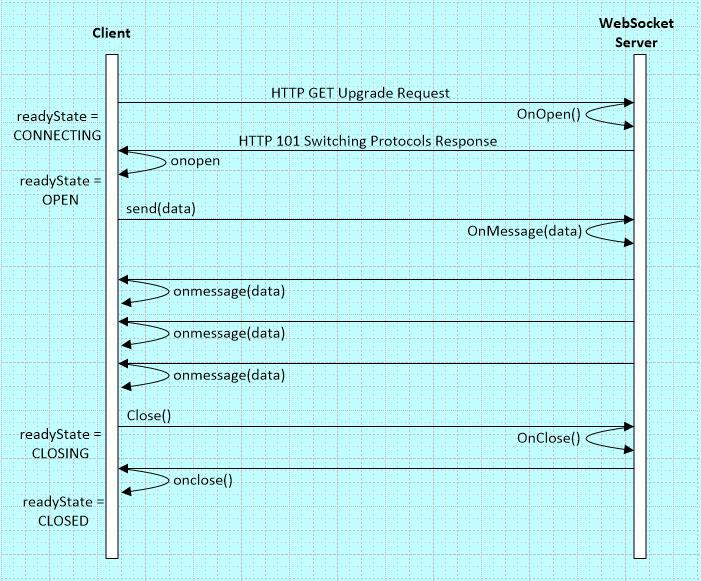

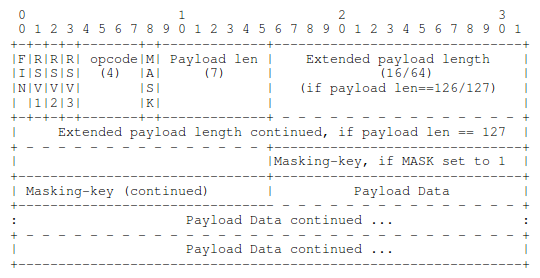

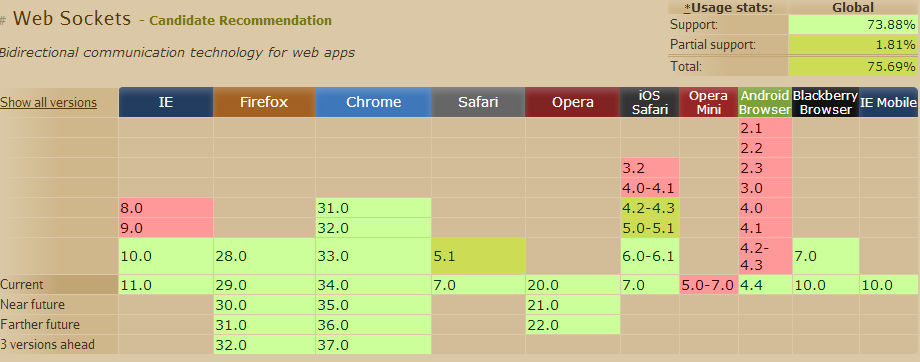

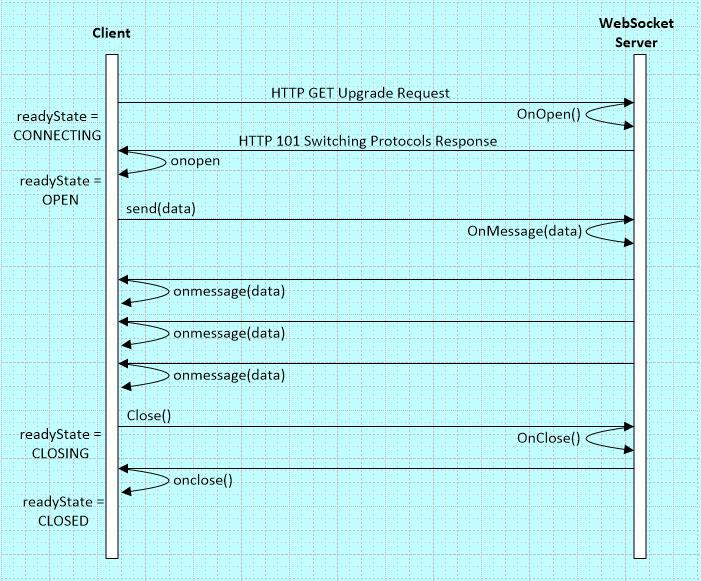

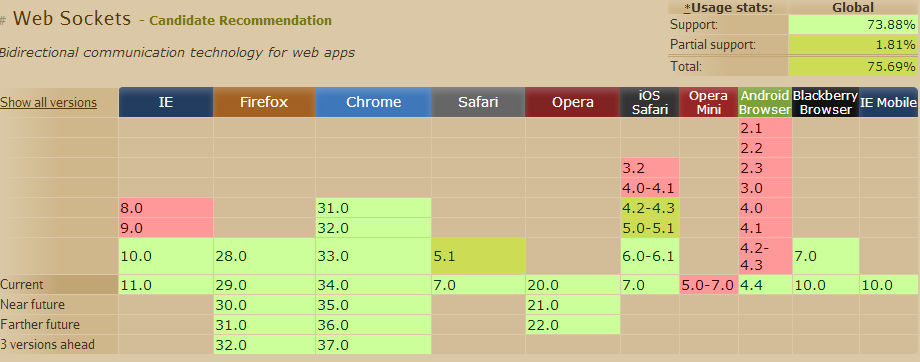

For the system administrator, this is not even a beast, but rather a night necromorph . In a “smart” way through HTTP 1.1 Upgrade, the browser changes the “type” of the HTTP connection and it remains open.

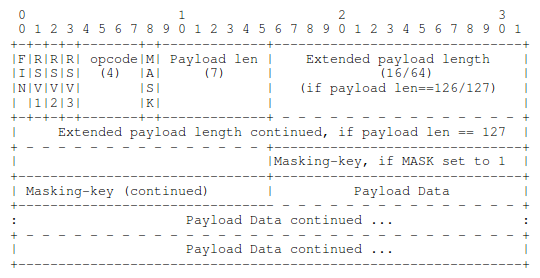

Then on the connection in the BOTH (!) Side, you can begin to transfer data, decorated in messages (frames). Messages are not only with information, but also checklists, incl. such as "PING", "PONG". The first impression was that they invented the bicycle again, again TCP based on TCP.

From the point of view of the developer, of course this is convenient; a duplex channel appears between the browser and the web application on the server. You want streaming, you want messages. But:

How to track Web Sockets performance? Very good question left especially for a snack. On the client side, the WireShark packet sniffer, on the server side and with TLS enabled, we solve the problem by patching the modules for nginx, but apparently there is a simpler solution.

The main thing is to understand how Web Sockets are built from the inside, and you already know this and control over speed will be provided.

So what is better: XMLHttpRequest, Long Polling, Server-Sent Events or Web Sockets? Success - in a competent combination of these technologies. For example, you can manage an application through WebSockets, and load resources using in-built caching via AJAX.

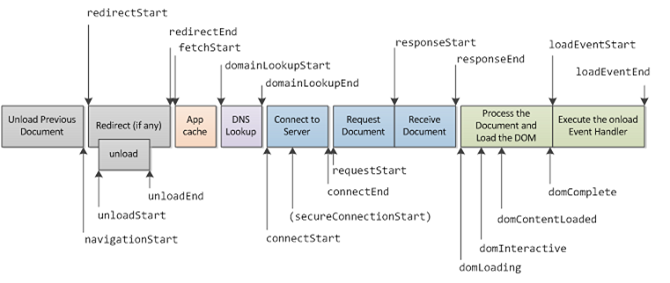

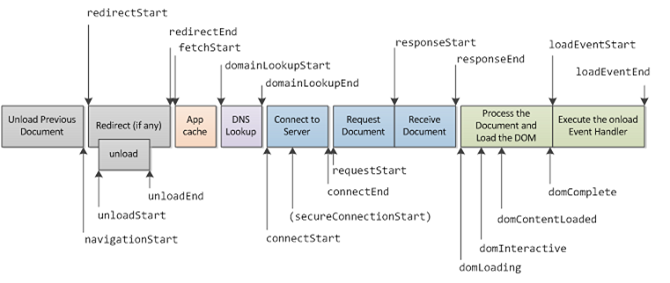

Learn to measure and respond to set points overshoot. Process web application logs, deal with slow queries in them. The speed on the client side was also possible to measure thanks to the Navigation timing API - we collect performance data in browsers, send them using JavaScript to the cloud, aggregate to pinba and react to deviations. Very useful API, use necessarily.

As a result, you will find yourself surrounded by a monitoring system like nagios , with a dozen or so automatic tests, which show that your web system’s speed is fine. And in the case of triggers - the team is going and a decision is made. Cases can be, for example, such:

etc.

We walked through the main components of a modern web application. They learned about HTTP 2.0 trends, control points, which are important to understand and learn to measure in order to ensure the speed of a web application's response, if possible, below 0.3 sec. They looked into the essence of the modern network technologies used in browsers, outlined their strengths and bottlenecks.

Understand that to understand the network, its speed, latency and bandwidth - it is important. And that bandwidth is not always important.

It became clear that now it’s not enough to “protune” a web server and database. You need to understand the bunch of network technologies used by the browser, know them from the inside and measure them effectively. Therefore, the TCP traffic sniffer should now become your right hand, and monitoring key performance indicators in server logs with your left foot.

You can try to solve the problem of processing the client's request for "0.3 sec" in different ways. The main thing is to determine the metrics, automatically collect them and act if they are exceeded - get to the root in each case. In our product, we solved the problem of ensuring the lowest possible latency due to an integrated caching technology that combines static and dynamic site technologies.

In conclusion, we invite you to visit our technology conference, which will be held soon, on May 23. Good luck and success in the difficult task of ensuring the performance of web projects!

However, to speed up a modern web project you will have to start from the basics and dig into the topic thoroughly, so a basic knowledge of network protocols is welcome. Once you know the principles, you can easily speed up your web system by hundreds of milliseconds in just a few passes. Well, ready to save hundreds of millions? Pour yourself a coffee.

Aftertaste

This is a very hot topic (how to please the site's user), and usability experts will most likely make me drink a Molotov cocktail, chase it with a grenade with the pin pulled, and leave me just enough time to shout before the explosion: “I'm talking heresy”. So I will come at it from the other side. It is widely known that a page display delay of more than 0.3 seconds makes the user notice it and ... wake up from their interaction with the site. And a delay of more than a second makes them wonder: “what am I even doing here? Why are they torturing me and making me wait?”


So we will leave “usability” to the usability experts and tackle a practical task ourselves: how not to disturb the client's “dream” and keep their work with the site smooth for as long as possible, without distracting “slowdowns”.

Who is responsible for speed

Well, you are, of course. Otherwise you would hardly have started reading this post. Seriously, though, there is a problem: speed is split between two parts, the frontend and the backend, which are only weakly connected both technologically and socially. And people often forget the third key component: the network.

Just HTML

To begin with, recall that the first sites in the early nineties were ... a set of static pages. And HTML was simple, clear and concise: first text, then text and resources. The bacchanalia began with dynamic page generation and the spread of Java and Perl; today it is a whole galaxy of technologies, including PHP.

To limit the impact of this race on the viability of the network, HTTP/1.0 was adopted in 1996, and HTTP/1.1 three years later, in 1999. At last it was agreed that there is no need to drive TCP handshakes back and forth at ~2/3 of the speed of light (in fiber) for every request by opening a new connection. It is more correct to open a TCP connection once and work through it.

Backend

Application

Here, little has changed in the last 40 years. Well, perhaps a “parody” of relational theory was added under the name NoSQL, which brings both advantages and disadvantages. As practice shows, it seems to bring more business benefit. But the sleepless nights spent answering the question “who broke data integrity, and on what pretext” have, if anything, become more frequent.

- The application and/or web server (PHP, Java, Perl, Python, Ruby, etc.) accepts the client request

- The application queries the database and receives the data

- The application generates HTML

- The application and/or web server sends the data to the client

Everything is clear in terms of speed:

- optimal application code, no loops running for seconds

- optimal data in the database, indexing, denormalization

- database caching

We will not dwell on speeding up the “application”: many books and articles have been written about it, and everything there is fairly linear and simple.

The main thing is that the application is transparent, so you can measure how long a request spends in each of its components. If it is not, you can stop reading here; nothing below will help.

How to achieve this? The paths are well known:

- Standard request logging (nginx, apache, php-fpm)

- Slow database query logging (an option in MySQL)

- Tools that pinpoint bottlenecks along a request's path. For PHP, these are xhprof and pinba.

- Built-in tools inside the web application, for example, a separate trace module.
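A “separate trace module” does not have to be big. Below is a minimal sketch, assuming a hypothetical `RequestTrace` class that is not part of any framework. A context manager records how long each stage of one request takes, so the slowest component becomes visible. The `time.sleep` calls stand in for real database and rendering work.

```python
import time
from contextlib import contextmanager


class RequestTrace:
    """Hypothetical per-request tracer: wall-clock time spent in each stage."""

    def __init__(self):
        self.stages = {}  # stage name -> accumulated seconds

    @contextmanager
    def stage(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Accumulate, so a stage entered several times is summed up.
            elapsed = time.perf_counter() - start
            self.stages[name] = self.stages.get(name, 0.0) + elapsed

    def report(self):
        """(stage, milliseconds) pairs, slowest stage first."""
        return sorted(
            ((name, round(sec * 1000, 1)) for name, sec in self.stages.items()),
            key=lambda item: item[1],
            reverse=True,
        )


trace = RequestTrace()
with trace.stage("db"):
    time.sleep(0.05)   # stand-in for a database query
with trace.stage("render"):
    time.sleep(0.005)  # stand-in for HTML generation
print(trace.report())  # the "db" stage comes first
```

In a real application the report would be written to the request log, or sent to a collector such as pinba, so it can be aggregated like any other log line.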

If you have a lot of logs and get lost in them, aggregate the data and look at percentiles and the distribution. Simple and straightforward. Found a request that takes more than 0.3 seconds? Start the investigation and keep going to the bitter end.
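Aggregating log timings into percentiles takes only a few lines. Below is a minimal sketch with these assumptions:

- The response times have already been parsed out of the logs into milliseconds.
- It uses the nearest-rank percentile definition. Other definitions interpolate and give slightly different numbers.
- The 300 ms threshold is the budget discussed above.
- `percentile` and `summarize` are illustrative names.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile, p in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def summarize(samples_ms, threshold_ms=300):
    """Key percentiles plus the share of requests slower than the threshold."""
    slow = sum(1 for s in samples_ms if s > threshold_ms)
    return {
        "p50": percentile(samples_ms, 50),
        "p90": percentile(samples_ms, 90),
        "p99": percentile(samples_ms, 99),
        "slow_share": slow / len(samples_ms),
    }


# Response times (ms) parsed from an access log; example values.
times = [120, 80, 95, 310, 105, 90, 450, 100, 85, 110]
print(summarize(times))
# {'p50': 100, 'p90': 310, 'p99': 450, 'slow_share': 0.2}
```

The mean of this sample is 154.5 ms, comfortably under budget, yet every fifth request is slow. That is why percentiles, not averages, are worth watching.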

Web server

Moving on: the web server. Here, too, not much has changed, except for a crutch: installing a reverse proxy web server in front of the web server (FastCGI server). Yes, it certainly helps:

- to keep significantly more client connections open (thanks to? .. yes, a different architecture of the caching proxy; for nginx this means multiplexing sockets across a small number of processes, with little memory per connection)

- to serve static resources more efficiently, directly from disk, without passing them through application code

But the question remains: why wasn't apache built “correctly” from the start, so that you sometimes have to chain web servers in a train?

Persistent connections

Establishing a TCP connection takes 1 RTT (round-trip time). Print this fact and hang it in front of you: the key to understanding where slowdowns come from lies here.

This value correlates closely with where your user is relative to the web server. Yes, there is the speed of light, the speed of light in the medium, and there is routing. It can take tens or even hundreds of milliseconds (especially with the last-mile provider), which is a lot. And the trouble starts when a connection is established for every request, which was common with HTTP/1.0.
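The physical floor under RTT is easy to estimate. Below is a rough sketch under these assumptions:

- Light in fiber travels at about 2/3 of its vacuum speed, as noted above.
- The cable runs in a perfectly straight line.
- The city distances are approximate straight-line values. Real routes, routing and the last mile add a lot on top.

```python
SPEED_OF_LIGHT_KM_S = 299_792  # in vacuum
FIBER_FACTOR = 2 / 3           # light in fiber: roughly 2/3 of c


def min_rtt_ms(distance_km):
    """Physical lower bound on the round-trip time over fiber. Ignores routing,
    queuing and the last mile, so real RTTs are noticeably higher."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_S * FIBER_FACTOR)
    return 2 * one_way_s * 1000


# Approximate straight-line distances, for illustration only.
for route, km in [("same city", 50), ("Moscow - Novosibirsk", 2_800), ("Moscow - New York", 7_500)]:
    print(f"{route}: at least {min_rtt_ms(km):.1f} ms per round trip")
```

For ~7,500 km this gives about 75 ms per round trip before a single byte of the page is sent. The TCP handshake alone spends one such round trip.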

This is, by and large, why HTTP/1.1 was created, and HTTP/2.0 (in the form of SPDY) is being developed in the same direction. The IETF together with Google is currently doing everything it can to squeeze the most out of the current network architecture without breaking it. And that can be done ... well, yes, by using TCP connections as efficiently as possible. That means utilizing their bandwidth as fully as possible through multiplexing, recovery from packet loss, etc.

So be sure to check that persistent connections are used on the web servers and in the application.
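One way to check whether a client really keeps connections alive is to count how many TCP connections the server has to accept. Below is a minimal sketch using only Python's standard library (`http.server`, `http.client`) against a throwaway local server. `CountingHandler` and `count_connections` are hypothetical helpers. Note that `http.server` only keeps a connection open when the handler speaks HTTP/1.1 and sends a `Content-Length`.

```python
import http.client
import http.server
import threading


class CountingHandler(http.server.BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # without this, http.server closes after every response
    connections = 0                # TCP connections accepted so far
    lock = threading.Lock()

    def handle(self):
        # handle() runs once per TCP connection and loops over its requests.
        with CountingHandler.lock:
            CountingHandler.connections += 1
        super().handle()

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))  # needed for keep-alive
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


def count_connections(requests, reuse):
    """Send `requests` GETs to a throwaway local server and return how many
    TCP connections it had to accept."""
    CountingHandler.connections = 0
    server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), CountingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    try:
        conn = http.client.HTTPConnection(host, port)
        for _ in range(requests):
            if not reuse:  # emulate a client without keep-alive
                conn.close()
                conn = http.client.HTTPConnection(host, port)
            conn.request("GET", "/")
            conn.getresponse().read()  # read the whole body so the socket can be reused
        conn.close()
    finally:
        server.shutdown()
        server.server_close()
    return CountingHandler.connections


persistent = count_connections(5, reuse=True)
fresh = count_connections(5, reuse=False)
print("persistent:", persistent, "new each time:", fresh)  # persistent: 1 new each time: 5
```

In production, the same question is answered by the keep-alive settings of the web server and of the HTTP client library your application uses.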

TLS

In the modern world there is no getting around TLS, which originated in the depths of Netscape Communications as SSL. And although, they say, the latest “hole” in this protocol made many people go gray prematurely, there is practically no alternative.

But for some reason not everyone remembers that TLS spoils the “aftertaste”: it adds 1-2 RTTs on top of the 1 RTT needed for the TCP connection. And nginx does not enable the TLS session cache by default, which costs an extra RTT on repeat connections.

So make sure TLS sessions are cached, without fail. That saves another 1 RTT (one RTT still remains, unfortunately, as the price of security).
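The RTT arithmetic above fits into a tiny model. Below is a rough sketch using the figures from the text: TCP handshake 1 RTT, a full TLS handshake 2 RTTs, a resumed session 1 RTT, plus one RTT for the request itself. It ignores DNS, server processing time and packet loss. `time_to_first_byte_ms` is an illustrative name.

```python
def time_to_first_byte_ms(rtt_ms, tls_rtts, new_connection=True):
    """Round trips before the first byte of the response arrives:
    TCP handshake (1) + TLS handshake (tls_rtts) + the request itself (1).
    On an already open connection only the request round trip remains."""
    setup_rtts = (1 + tls_rtts) if new_connection else 0
    return (setup_rtts + 1) * rtt_ms


RTT_MS = 100  # example: a distant client
print("full TLS handshake:   ", time_to_first_byte_ms(RTT_MS, tls_rtts=2))  # 400
print("resumed TLS session:  ", time_to_first_byte_ms(RTT_MS, tls_rtts=1))  # 300
print("persistent connection:", time_to_first_byte_ms(RTT_MS, tls_rtts=0, new_connection=False))  # 100
```

Session caching turns 400 ms into 300 ms, while reusing an already open connection brings the same request down to 100 ms.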

That is probably all about the backend. What comes next is harder, but more interesting.

Network. Network distance and bandwidth

You often hear: we have 50 Mbit/s, 100 Mbit/s, and 4G will give even more... But you rarely see the understanding that bandwidth is not particularly important for a typical web application (unless it serves file downloads). Latency matters more. Many small requests are made over different connections, and the TCP window simply does not have time to grow.
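Why latency beats bandwidth for a page made of many small resources can be shown with a deliberately crude model. Resources are fetched one after another over an open connection, and each costs one round trip plus its transfer time. The numbers (20 resources of 20 KB, 100 ms RTT) are assumptions for illustration. Real browsers fetch in parallel, and TCP slow start makes short transfers slower still.

```python
def sequential_load_ms(requests, bytes_each, rtt_ms, bandwidth_mbit):
    """Crude model: resources fetched one after another over an open
    connection; each costs one round trip plus its transfer time."""
    transfer_ms = bytes_each * 8 / (bandwidth_mbit * 1000)  # 1 Mbit/s = 1000 bits per ms
    return requests * (rtt_ms + transfer_ms)


base = sequential_load_ms(20, 20_000, rtt_ms=100, bandwidth_mbit=10)
faster_link = sequential_load_ms(20, 20_000, rtt_ms=100, bandwidth_mbit=100)
closer_server = sequential_load_ms(20, 20_000, rtt_ms=20, bandwidth_mbit=10)
print(f"base: {base:.0f} ms")                       # 2320 ms
print(f"10x bandwidth: {faster_link:.0f} ms")       # 2032 ms
print(f"5x lower latency: {closer_server:.0f} ms")  # 720 ms
```

In this model a tenfold faster link saves only about 12%, while a server five times closer saves about 70%.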

And of course, the farther the client is from the web server, the longer everything takes. But sometimes it cannot be otherwise, or moving is hard. That is why people came up with:

- CDN

- Dynamic proxying (a CDN in reverse). You put, for example, nginx in the region; it keeps persistent connections open to the web server and terminates SSL. Clear why? Establishing connections from the client to the web proxy becomes many times faster (the handshakes fly). After that, an already warmed-up TCP connection to the web server is used.
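The gain from a regional proxy follows from the same RTT counting. Below is a rough sketch under these assumptions:

- TCP plus a full TLS handshake (3 RTTs) terminate at the proxy.
- The proxy already holds a warm, open connection to the origin.
- The 10 ms and 150 ms RTTs are made-up example values.
- The function names are illustrative.

```python
def direct_ms(rtt_far):
    """TCP (1) + full TLS (2) + request (1), all over the long path."""
    return 4 * rtt_far


def via_regional_proxy_ms(rtt_near, rtt_far):
    """Handshakes (TCP 1 + TLS 2) end at the nearby proxy; the request then
    travels client -> proxy -> origin over the proxy's already open ("warm")
    connection to the origin: one short plus one long round trip."""
    handshakes = 3 * rtt_near
    request = rtt_near + rtt_far
    return handshakes + request


print(direct_ms(150))                  # 600
print(via_regional_proxy_ms(10, 150))  # 190
```

With these numbers the first response arrives after 190 ms instead of 600 ms.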

What else can you do... increase TCP's initial congestion window. Yes, it often helps, because a small web page can then be sent in a single flight of packets without waiting for acknowledgements. Try it.
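The effect of the initial congestion window can be estimated with an idealized slow-start model. The sketch below assumes no packet loss, no receiver-window limit and a 1460-byte MSS. `round_trips_to_deliver` is a hypothetical helper. For reference, RFC 6928 raised the recommended initial window to 10 segments. On Linux the value can be set per route with the `initcwnd` option of `ip route`.

```python
def round_trips_to_deliver(page_bytes, initcwnd_segments, mss=1460):
    """Idealized TCP slow start: `cwnd` segments may be sent per round trip,
    and cwnd doubles after every round trip (no loss, no delayed ACKs)."""
    segments_left = -(-page_bytes // mss)  # ceiling division
    cwnd = initcwnd_segments
    rounds = 0
    while segments_left > 0:
        segments_left -= cwnd
        cwnd *= 2
        rounds += 1
    return rounds


for page in (14_000, 100_000):
    print(page, "bytes:",
          round_trips_to_deliver(page, 3), "RTTs with initcwnd 3,",
          round_trips_to_deliver(page, 10), "with initcwnd 10")
```

With an initial window of 3 segments, a 14 KB page needs 3 round trips; with 10 it fits into the first one. This is also why a connection that has already “warmed up” delivers the next resource faster.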

Turn on the browser debugger, look at the page load time, and think about latency and how to reduce it.

Bandwidth

Remember that the TCP congestion window has to ramp up first. If a web page loads in less than a second, the window may not have time to grow. The average network bandwidth in the world is slightly above 3 Mbps. Conclusion: send as much as possible over one established connection, “warming it up”.

Here, of course, multiplexing HTTP resources within a single TCP connection could help: transferring several resources interleaved, in both requests and responses. HTTP/1.1 even standardized a step in this direction, pipelining (sending several requests without waiting for the responses). But it was under-specified and, as a result, never took off (Chrome removed it not long ago). So you can try SPDY, wait for HTTP/2.0, or use pipelining, not from the browser but directly from the application.
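Pipelining “from the application” can be sketched with a plain socket. You write several HTTP/1.1 requests back to back, then read the responses, which the protocol requires to come back in request order. So one slow response still holds up the rest, one reason browsers gave up on it. Below is a minimal sketch with Python's standard library and a throwaway local server. `PathHandler`, `read_body` and `pipelined_get` are hypothetical names. The response parser only handles `Content-Length` bodies, not chunked encoding.

```python
import http.server
import socket
import threading


class PathHandler(http.server.BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        body = self.path.encode()  # echo the path so the response order is visible
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass


def read_body(stream):
    """Read one HTTP/1.1 response (status line, headers, Content-Length body)."""
    stream.readline()  # status line, e.g. b"HTTP/1.1 200 OK\r\n"
    length = 0
    while True:
        line = stream.readline()
        if line in (b"\r\n", b""):
            break
        name, _, value = line.decode("latin-1").partition(":")
        if name.strip().lower() == "content-length":
            length = int(value)
    return stream.read(length)


def pipelined_get(host, port, paths):
    """Write every request at once over one TCP connection, then read the
    responses, which HTTP/1.1 requires to arrive in request order."""
    raw = ""
    for i, path in enumerate(paths):
        raw += f"GET {path} HTTP/1.1\r\nHost: {host}\r\n"
        if i == len(paths) - 1:
            raw += "Connection: close\r\n"  # let the server close after the last one
        raw += "\r\n"
    with socket.create_connection((host, port)) as sock:
        sock.sendall(raw.encode("ascii"))  # no waiting between requests
        with sock.makefile("rb") as stream:
            return [read_body(stream) for _ in paths]


server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), PathHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
bodies = pipelined_get("127.0.0.1", server.server_address[1], ["/a.css", "/b.js", "/c.png"])
server.shutdown()
server.server_close()
print(bodies)  # [b'/a.css', b'/b.js', b'/c.png']
```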

Domain sharding

But what about the very popular domain sharding technique? Here the browser/application works around the limit of about 6 connections per domain by opening 6 or more additional connections to fictitious domains: img1.mysite.ru, img2.mysite.ru ... It is amusing: from the HTTP/1.1 point of view it will most likely speed you up. From the HTTP/2.0 point of view it is an anti-pattern, since multiplexing HTTP traffic over a single TCP connection can make better use of bandwidth.

So for now we shard domains and wait for HTTP/2.0 so that we no longer have to. And of course, it is better to measure for your particular web application and make an informed choice.
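Two details decide whether sharding pays off:

- how many “waves” of parallel requests the page needs;
- whether each resource always maps to the same shard (otherwise the browser cache is wasted).

The sketch below assumes the limit of 6 connections per host and the example hostnames img1.mysite.ru, img2.mysite.ru from the text. Picking the shard by CRC32 of the path is an illustrative choice, not a standard, and the helper names are hypothetical.

```python
import math
import zlib


def shard_host(path, shards, pattern="img{}.mysite.ru"):
    """Stable mapping of a resource path to one of `shards` hostnames, so the
    same image is always requested from the same host and stays cached."""
    return pattern.format(zlib.crc32(path.encode("utf-8")) % shards + 1)


def request_waves(resources, shards, per_host_limit=6):
    """Idealized number of sequential 'waves' of parallel requests when the
    browser opens at most per_host_limit connections per hostname."""
    return math.ceil(resources / (per_host_limit * shards))


print(shard_host("/img/logo.png", shards=3))                     # always the same host for this path
print(request_waves(60, shards=1), request_waves(60, shards=3))  # 10 4
```

For 60 resources, one host needs 10 waves and three shards need 4. But each extra hostname costs its own DNS lookup and TCP/TLS handshakes, which is why measuring matters.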

Frontend

Writing about well-known things like page rendering speed, the size of images and JavaScript, the order in which resources load, etc. is not interesting: the subject has been beaten to death. In brief and roughly: cache resources on the browser side, but ... use your head. Cache a 10 MB JS file and parse it in the browser on every page, and we all understand where that leads. Turn on the browser debugger, pour a coffee, and by the end of the day the trends are visible. Sketch a plan and implement it. Simple and transparent.

Much sharper pitfalls can hide behind the fairly new and rapidly evolving network capabilities of the web browser. Let's talk about them:

- XMLHttpRequest

- Long polling

- Server-Sent Events

- Web sockets

The browser as an operating system

Initially, the browser was seen as a client application for displaying HTML markup. But year after year it turned into the control center of a galaxy of technologies. Now the HTTP server and the web application behind it are perceived as merely an auxiliary component of the browser. An interesting technological shift in emphasis.

Moreover, WebRTC (a TV studio built into the browser) and the browser's tools for networking with the outside world have arrived. With them, the performance question has smoothly shifted from the server infrastructure to the browser. If this internal kitchen slows down on the client side, nobody will remember the PHP on the web server or the JOIN in the database.

Let's take this opaque monolith apart.

XMLHttpRequest

This is the well-known AJAX: the browser's ability to access external resources over HTTP. With the arrival of CORS, perfect “chaos” began: now, to find the cause of a slowdown, you have to go through all the resources and check the logs everywhere.

Seriously, the technology undoubtedly blew the browser's capabilities wide open, turning it into a powerful platform for rendering information dynamically. There is no point writing about it in detail; the topic is familiar to many. However, I will describe the limitations:

- again, the lack of multiplexing across several “channels” makes it inefficient and prevents it from fully using the bandwidth of a TCP connection

- there is no proper streaming support (open a connection, keep it hanging, wait), so all that remains is to poll the server and see what it replied

Nevertheless, the technology is very popular, and it is easy to make it transparent for speed monitoring.

Long polling

How do you build a web chat? You need to somehow deliver information about changes from the server to the browser. Directly over HTTP you can't, you just can't: there is only request and response. So people came up with this: make a request and wait for the answer for a second, 30 seconds, a minute. If something arrives, send it back and close the connection.
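On the server, the heart of long polling is “hold the request until there is news or the timeout expires”. Below is a minimal sketch, assuming an in-memory store and Python threads. `MessageBox` and its methods are hypothetical. A real endpoint would wrap `poll` in an HTTP handler and return the result as the response body.

```python
import threading


class MessageBox:
    """Hypothetical in-memory message store behind a long-polling chat endpoint."""

    def __init__(self):
        self._messages = []  # list of (id, text); ids start at 1
        self._changed = threading.Condition()

    def publish(self, text):
        with self._changed:
            self._messages.append((len(self._messages) + 1, text))
            self._changed.notify_all()  # wake every request currently being held

    def poll(self, last_seen_id, timeout):
        """What a long-poll request handler does: answer at once if there is
        something newer than last_seen_id, otherwise hold the request until a
        message arrives or `timeout` seconds pass (then answer with nothing
        and let the client reconnect)."""
        with self._changed:
            self._changed.wait_for(lambda: len(self._messages) > last_seen_id, timeout)
            return self._messages[last_seen_id:]


box = MessageBox()
threading.Timer(0.1, box.publish, args=("hello",)).start()
first = box.poll(last_seen_id=0, timeout=30)    # returns after ~0.1 s, not 30 s
second = box.poll(last_seen_id=1, timeout=0.2)  # nothing new: returns after 0.2 s
print(first, second)  # [(1, 'hello')] []
```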

Yes, it is full of anti-patterns and crutches, but the technology is very widespread and always works. But since you are responsible for speed, know this: the load on the servers with this approach is very high. It can be comparable to the load from the project's main traffic. And if the server pushes updates to browsers frequently, it can exceed the main load several times over!
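The load estimate can be made concrete. Below is a rough sketch under these assumptions:

- Every client always keeps one poll request open.
- Each update is broadcast to all clients.
- Clients reconnect immediately.
- The figures (10,000 clients, 30 s timeout) are example values.
- `long_poll_rps` is an illustrative name.

```python
def long_poll_rps(clients, timeout_s, updates_per_s):
    """Requests per second reaching the servers. Each client always holds one
    open request, which ends on a timeout or on the next broadcast update;
    the client then immediately reconnects."""
    interval_s = timeout_s
    if updates_per_s > 0:
        interval_s = min(timeout_s, 1 / updates_per_s)
    return clients / interval_s


print(round(long_poll_rps(10_000, timeout_s=30, updates_per_s=0)))    # 333
print(round(long_poll_rps(10_000, timeout_s=30, updates_per_s=1)))    # 10000
print(round(long_poll_rps(10_000, timeout_s=30, updates_per_s=0.2)))  # 2000
```

Idle clients cost about 333 requests per second. With one update per second, the same audience generates 10,000 requests per second on top of the site's regular traffic.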

What to do?

Server-Sent Events

This opens a TCP connection to the web server that is not closed, and the server writes various information to it in UTF-8. True, binary data cannot be transmitted efficiently without Base64 (+33% in size), but as a one-way control channel it is an excellent solution. Also true: IE does not support it (see the previous section for something that works everywhere).

The advantages of the technology:

- it is very simple

- there is no need to reopen the connection to the server after each message
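The wire format of Server-Sent Events is plain text, which is why it is so simple. Below is a minimal sketch of serializing one event in the `text/event-stream` format, as the browser's `EventSource` reads it:

- optional `id:` and `event:` fields;
- one `data:` line per payload line;
- a blank line ending the event.

`sse_event` is a hypothetical helper. The server also has to send `Content-Type: text/event-stream` and keep the response open. The last lines show the Base64 overhead mentioned above.

```python
import base64


def sse_event(data, event=None, event_id=None):
    """Serialize one Server-Sent Events message in text/event-stream format."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")  # lets EventSource resume via Last-Event-ID
    if event is not None:
        lines.append(f"event: {event}")  # custom event type
    for part in data.split("\n"):
        lines.append(f"data: {part}")    # one data: line per payload line
    return "\n".join(lines) + "\n\n"     # a blank line ends the event


print(sse_event("hello\nworld", event="chat", event_id=1), end="")

# Binary data has to be text-encoded first; Base64 turns 3 bytes into 4 characters.
payload = bytes(300)
encoded = base64.b64encode(payload).decode("ascii")
print(len(payload), "->", len(encoded))  # 300 -> 400
```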

Web sockets

For the system administrator, this is not even a beast, but rather a nocturnal necromorph. In a “clever” way, via the HTTP/1.1 Upgrade mechanism, the browser changes the “type” of the HTTP connection, and the connection stays open.
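The Upgrade handshake itself is small:

1. The browser sends `Upgrade: websocket`, `Connection: Upgrade` and a random `Sec-WebSocket-Key`.
2. The server answers `101 Switching Protocols` with a `Sec-WebSocket-Accept` derived from that key.

Below is a sketch of that derivation as RFC 6455 defines it: SHA-1 of the key plus a fixed GUID, Base64-encoded. It is checked against the example pair from the RFC. `websocket_accept` is a hypothetical name.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for the opening handshake.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def websocket_accept(sec_websocket_key):
    """Sec-WebSocket-Accept value for the server's 101 Switching Protocols reply."""
    digest = hashlib.sha1((sec_websocket_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


# Browser request (abridged):         Server response:
#   GET /chat HTTP/1.1                  HTTP/1.1 101 Switching Protocols
#   Upgrade: websocket                  Upgrade: websocket
#   Connection: Upgrade                 Connection: Upgrade
#   Sec-WebSocket-Key: <key>            Sec-WebSocket-Accept: <accept>
#   Sec-WebSocket-Version: 13
print(websocket_accept("dGhlIHNhbXBsZSBub25jZQ=="))  # s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
```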

After that, data can be sent in BOTH (!) directions over the connection, packaged into messages (frames). Frames carry not only data but also control messages, such as “PING” and “PONG”. My first impression was that they had reinvented the bicycle: TCP on top of TCP, again.
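Here is what those frames look like on the wire. Below is a minimal encoder for a single unfragmented frame per RFC 6455: FIN bit, opcode, length and an optional 4-byte mask. `encode_frame` is a hypothetical helper. Control frames such as PING/PONG must additionally keep their payload within 125 bytes, which this sketch does not check. The expected outputs are the examples from section 5.7 of the RFC.

```python
import struct

OPCODES = {"text": 0x1, "binary": 0x2, "close": 0x8, "ping": 0x9, "pong": 0xA}


def encode_frame(payload, opcode="text", mask_key=None):
    """Build one unfragmented WebSocket frame (RFC 6455, section 5.2).
    Clients must mask their frames (a real client would pass os.urandom(4));
    servers send frames unmasked."""
    header = bytearray([0x80 | OPCODES[opcode]])  # FIN = 1, then the opcode
    mask_bit = 0x80 if mask_key is not None else 0
    length = len(payload)
    if length < 126:
        header.append(mask_bit | length)     # 7-bit length
    elif length < 1 << 16:
        header.append(mask_bit | 126)
        header += struct.pack("!H", length)  # 16-bit extended length
    else:
        header.append(mask_bit | 127)
        header += struct.pack("!Q", length)  # 64-bit extended length
    if mask_key is None:
        return bytes(header) + payload
    masked = bytes(b ^ mask_key[i % 4] for i, b in enumerate(payload))
    return bytes(header) + mask_key + masked


print(encode_frame(b"Hello").hex())                                      # 810548656c6c6f
print(encode_frame(b"Hello", mask_key=bytes.fromhex("37fa213d")).hex())  # 818537fa213d7f9f4d5158
print(encode_frame(b"Hello", opcode="ping").hex())                       # 890548656c6c6f
```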

From the developer's point of view this is, of course, convenient: a duplex channel appears between the browser and the web application on the server. Streaming or messages, whatever you like. But:

- HTTP caching does not apply, since everything goes over a binary framing protocol

- compression is not supported, you have to implement it yourself

- eerie glitches and delays when working without TLS, caused by outdated proxy servers

- no multiplexing, so the bandwidth of each connection is used inefficiently

- the server ends up with many hanging direct TCP connections from browsers, each doing something “nasty to the database”

How do you track WebSocket performance? A very good question, saved especially for dessert. On the client side, use the Wireshark packet sniffer. On the server side, with TLS enabled, we solve the problem by patching nginx modules, but apparently there is a simpler solution.

The main thing is to understand how WebSockets are built inside. You now know that, so you will be able to keep speed under control.

So which is better: XMLHttpRequest, Long Polling, Server-Sent Events or WebSockets? Success lies in a competent combination of these technologies. For example, you can control the application over WebSockets and load resources via AJAX, taking advantage of the built-in caching.

What now?

Learn to measure and react when thresholds are exceeded. Process the web application's logs and deal with the slow requests in them. Client-side speed can now be measured too, thanks to the Navigation Timing API. Collect performance data in browsers, send it to the cloud with JavaScript, aggregate it in pinba and react to deviations. A very useful API; be sure to use it.
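On the collecting side, a beacon of raw Navigation Timing timestamps turns into per-phase durations by simple subtraction. The sketch assumes the browser-side JavaScript sends the `performance.timing` attributes as JSON. The attribute names come from the Navigation Timing API. The phase grouping, the sample values (relative milliseconds instead of real epoch timestamps) and the helper names are illustrative. Sending the beacon and aggregating in pinba are not shown.

```python
# Pairs of performance.timing attributes (Navigation Timing API) whose
# difference gives the duration of one phase, in milliseconds.
PHASES = {
    "dns": ("domainLookupStart", "domainLookupEnd"),
    "connect": ("connectStart", "connectEnd"),  # includes the TLS handshake
    "ttfb": ("requestStart", "responseStart"),  # waiting for the first byte
    "download": ("responseStart", "responseEnd"),
    "dom_ready": ("navigationStart", "domContentLoadedEventEnd"),
    "full_load": ("navigationStart", "loadEventEnd"),
}


def phases(timing):
    """Convert one beacon of raw timestamps into per-phase durations (ms)."""
    return {name: timing[end] - timing[start] for name, (start, end) in PHASES.items()}


def over_budget(timing, budget_ms=300):
    """Names of phases that on their own exceed the budget, sorted."""
    return sorted(name for name, ms in phases(timing).items() if ms > budget_ms)


# Hypothetical beacon; real values are epoch timestamps, shifted here to start at 0.
sample = {
    "navigationStart": 0, "domainLookupStart": 5, "domainLookupEnd": 25,
    "connectStart": 25, "connectEnd": 145, "requestStart": 146,
    "responseStart": 520, "responseEnd": 600,
    "domContentLoadedEventEnd": 900, "loadEventEnd": 1400,
}
print(phases(sample))
print(over_budget(sample))  # ['dom_ready', 'full_load', 'ttfb']
```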

As a result, you will be surrounded by a monitoring system like nagios, with a dozen or so automated checks showing that your web system's speed is fine. And when a trigger fires, the team gets together and makes a decision. The cases might be, for example:

- Slow database query. Solution: query optimization, denormalization in an emergency.

- Slow application code. Solution: algorithm optimization, caching.

- Slow transfer of the page body over the network. Solution (in order of increasing cost): increase the TCP initial cwnd, put a dynamic proxy near the client, move the servers closer.

- Slow delivery of static resources to clients. Solution: a CDN.

- Requests in the browser blocked waiting for a free connection to the server. Solution: domain sharding.

- Long Polling puts more load on the servers than the client visits themselves. Solution: Server-Sent Events, WebSockets.

- Slow, unstable WebSockets. Solution: TLS for them (wss).

etc.

Results

We walked through the main components of a modern web application. We learned about HTTP 2.0 trends and the control points worth understanding and measuring to keep a web application's response time below 0.3 sec where possible. We looked into the essence of the modern network technologies used in browsers and outlined their strengths and bottlenecks.

We saw that understanding the network, its speed, latency and bandwidth, is important. And that bandwidth is not always what matters.

It became clear that it is no longer enough to “tune” the web server and the database. You need to understand the bundle of network technologies the browser uses, know them from the inside and measure them effectively. So a TCP traffic sniffer should now become your right hand, and monitoring key performance indicators in server logs your left foot.

You can approach the problem of processing a client's request within “0.3 sec” in different ways. The main thing is to define the metrics, collect them automatically and act when they are exceeded, getting to the root cause in each case. In our product, we tackled ensuring the lowest possible latency with an integrated caching technology that combines static and dynamic site technologies.

In conclusion, we invite you to our technology conference, to be held soon, on May 23. Good luck and success in the difficult task of ensuring the performance of web projects!

Source: https://habr.com/ru/post/221805/
