J-sort

Pyramid sorting (a sort of a bunch ) is a classic algorithm that any programmer should probably know. The good old “pyramid” is remarkable in that, regardless of the data set, it has the same complexity in time (and very decent) - O ( n log n ). There are no better or degenerate cases for it.

Since the invention of the method (and this year the algorithm is celebrating its half-century anniversary) there have been many eagerly drastically optimizing the process of laying the sorting heaps. Ternary pyramidal sorting, smooth sorting, Cartesian sorting - this is an incomplete list of innovations. The listed algorithms, while testing, are ahead of the original in absolute speed, who is 12 and 25% ahead, still revolve around O ( n log n ) in the assessment of time complexity. Moreover, these methods are very sophisticatedly implemented.

A humble laborer of the University of Manitoba, Jason Morrison, also suggested his vision of a pyramidal sorting. In this case, the method in some cases approaches the speed O ( n ).

')

Heapsort

For those who are not in the subject, briefly outline how the pyramidal sorting works.

An array can be sorted by building and rebuilding a sorting tree based on it, known as a binary heap or simply a pyramid .

What is a sorting tree? This is a tree that has any parent more (or less, depending on which way it is sorting) than its descendants.

How to make a sorting tree out of ordinary wood? It is very simple - you need to move from descendants up to the parents, and if the descendant is larger than the parent, then switch places. If such an exchange has occurred, a parent descended one level must be compared with the descendants below - maybe there will also be a reason for the exchange there.

Transforming the unsorted part of the array into a sorting tree, as a result, the largest element “pops up” to the root. We swap the maximum with the last key of the unsorted subarray. The structure will cease to be a sorting tree, but as moral compensation, its unsorted part will become less by one node. The whole procedure is again applicable to this unsorted part, that is, we transform it into a sorting tree with subsequent rearrangement of the found maximum to the end. And so we act until the unsorted part shrinks down to a single element.

The approach, to be sure, is witty, but at the same time specialists at the algorithms note a whole bunch of flaws in sorting, such as: instability, randomness of sampling, insensitivity to almost ordered arrays, etc. The unimprovable speed O ( n log n ) confuses everyone shown by sorting absolutely with any sets of input data.

Outstanding computer science minds offer various brain-bearing ideas ( ternary pyramids , Leonardo numbers , Cartesian trees ) with which you can improve the algorithm. Jason Morrison ( J ason Morrison, the sort is named after the author) suggested the opposite - to optimize, you need not to complicate, but simplify as much as possible.

Jsort

A Canadian scientist came to the conclusion that rebuilding a pile for each element is expensive. So is it necessary to array an array of n elements to radically shovel n times?

If for the array to build only a couple of heaps (descending and ascending), then it will significantly order it in both directions.

First you need to build a non-increasing heap . As a result, smaller elements float to the upper nodes of the pyramid (which will correspond to the left half of the array), the smallest element will generally be at the root. The higher the elements are in the sorting tree, the more ordered the corresponding part of the array will be. Elements closer to the leaves of the tree (they will correspond to the second half of the array) will have a less orderly appearance, since they did not compare with each other, but were simply pushed aside by the movement of their parents.

To restore relative order on the right side of the array, you should build a bunch again, in the opposite of the first one. First, this heap should be non-decreasing (we now want to deal with large-value keys). Secondly, it should be “mirrored” to the array, that is, its root should correspond not to the first, but to the last element and build a tree, going through the array from the end to the beginning.

Having built such a twin pyramid, we get a largely ordered array. Completes the sorting inserts . This algorithm has a very modest average time complexity O ( n 2 ), but it works wonders on arrays that are already almost sorted. Their sorting inserts click as nuts.

Time complexity

Let's try to evaluate with the most favorable scenario. A single pyramid building is O ( n ). Heap we have imposed two, so we get O ( 2n ). An almost ordered array sorting inserts can sort for record O ( n ). The overall complexity is O ( 3n ), which is the same as O ( n ).

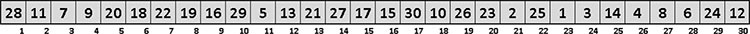

However, in practice, everything looks more modest. Take, for example, an unsorted array containing numbers from 1 to 30 :

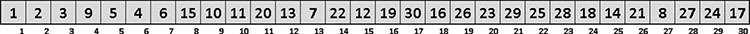

Construct the usual non-increasing heap:

The first third of the massif took a quite nice form, in the middle - who is in the forest for firewood, the end of the array is not impressive either.

We now build a “mirror” non-decreasing heap:

The end of the array noticeably prettier. In the middle, there are some rudiments of orderliness, but it doesn’t look as glamorous as the left and right sectors. In principle, the array is quite ripe to feed it sorting inserts.

Notice the key with a value of 20. Why is this item stuck in the first third of the array? Tritely unlucky - before building a “mirror” non-decreasing heap, all parents went upward along the branch by value (at the root is now 17 , but this key will sink in the left half of the tree and give way to 30 ). Therefore, in the pyramid he was not destined to rise at least a step.

The longer the array, the more often such degenerate nodes will arise. So, in the middle lane of long arrays sorting inserts will have to work hard. The array for processing supplied to it will not be nearly sorted, but rather almost almost sorted. On very long lists, the time complexity of the Insertion Sort will, of course, not be its average / worst O ( n 2 ), but it will be far to O ( n ).

By the way

There is another sorting algorithm with a very similar name - J sort , which was developed by J Cohn ( J ohn Cohen). This is also a hybrid algorithm, used to handle doubly linked lists. Combines thread-like sorting ( Strand sort ) and sorting by shuffling ( Shuffle sort ). But that's another story.

Algorithm Characteristics

| Title | Jsort (J-sort) | |

|---|---|---|

| Author | Jason Morrison | |

| Class | Hybrid sorting (heap + inserts) | |

| Resilience | Unstable | |

| Comparisons | there is | |

| Time complexity | the best | O (n) |

| average | ? | |

| the worst | O (n 2 ) | |

| Memory difficulty | Total | O (n) |

| additional data | O (1) | |

Additionally

Heap sort in java

/** * * - JWJ Williams RW Floyd * * SortAlgorithm.java, Thu Oct 27 10:32:35 1994 * * @author Jason Harrison@cs.ubc.ca * @version 1.0, 23 Jun 1995 */ class HeapSortAlgorithm extends SortAlgorithm { // void sort(int a[]) throws Exception { int N = a.length; // // . for (int k = N / 2; k > 0; k--) downheap(a, k, N); // // do { // ... int T = a[0]; a[0] = a[N - 1]; a[N - 1] = T; //... // N = N - 1; downheap(a, 1, N); } while (N > 1); // } // void downheap(int a[], int k, int N) throws Exception { // // int T = a[k - 1]; // while (k <= N / 2) { int j = k + k;// // , // // if ((j < N) && (a[j - 1] < a[j])) j++; // ( ) ... if (T >= a[j - 1]) { //... break; } else { // ... //... a[k - 1] = a[j - 1]; k = j; } } // //( , ) a[k - 1] = T; } } YouTube version of Heap sort animation

Jsort java

/** * J- (JSort). * - (Jason Morrison) * <http://www.scs.carleton.ca/~morrison> * * JSortAlgorithm.java * * - * @author Patrick Morin */ class JSortAlgorithm extends SortAlgorithm { // // void reheap (int a[], int length, int i) throws Exception { // boolean done = false; // // int T = a[i]; int parent = i; int child = 2 * (i + 1) - 1; // , // ( - ) // // - . while ((child < length) && (!done)) { // if (child < length - 1) { // if (a[child] >= a[child + 1]) { child += 1; } } // ? if (T < a[child]) { // done = true; // . // // } else { a[parent] = a[child]; parent = child; child = 2 * (parent + 1) - 1; } } //, // //( ) a[parent] = T; } // // void invreheap (int a[], int length, int i) throws Exception { // boolean done = false; // // int T = a[length - 1 - i]; int parent = i; int child = 2 * (i + 1) - 1; // , // ( - ) // // - . while ((child < length) && (!done)) { // if (child < length - 1) { // if (a[length - 1 - child] <= a[length - 1 - (child + 1)]) { child += 1; } } // ? if (T > a[length - 1 - child]) { // done = true; } else { // . // // a[length - 1 - parent] = a[length - 1 - child]; parent = child; child = 2 * (parent + 1) - 1; } } //, // //( ) a[length - 1 - parent] = T; } // void sort(int a[]) throws Exception { // // // for (int i = a.length-1; i >= 0; i--) reheap (a, a.length, i); // // // for (int i = a.length - 1; i >= 0; i--) invreheap (a, a.length, i); // // for (int j = 1; j < a.length; j++) { int T = a[j]; int i = j - 1; while (i >= 0 && a[i] > T) { a[i + 1] = a[i]; i -= 1; } a[i + 1] = T; } } } YouTube version of Jsort animation

Links

Jsort on Wikipedia

Jason Morrison Page at the University of Manitoba

Jason Morrison's LinkedIn page

Source: https://habr.com/ru/post/221095/

All Articles