How music search works. A lecture at Yandex

Music search is usually understood as the ability to answer text queries about music. The search engine has to understand, for example, that “Friday” is not always a day of the week, or find a song from the words “you want sweet oranges”. But the tasks of music search are not limited to this. Sometimes you need to recognize a song that a user sang, or one that is playing in a café. You can also find what compositions have in common in order to recommend music that suits the user's taste. Elena Kornilina and Yevgeny Krofto told students of the Small ShAD how this is done and what difficulties arise.

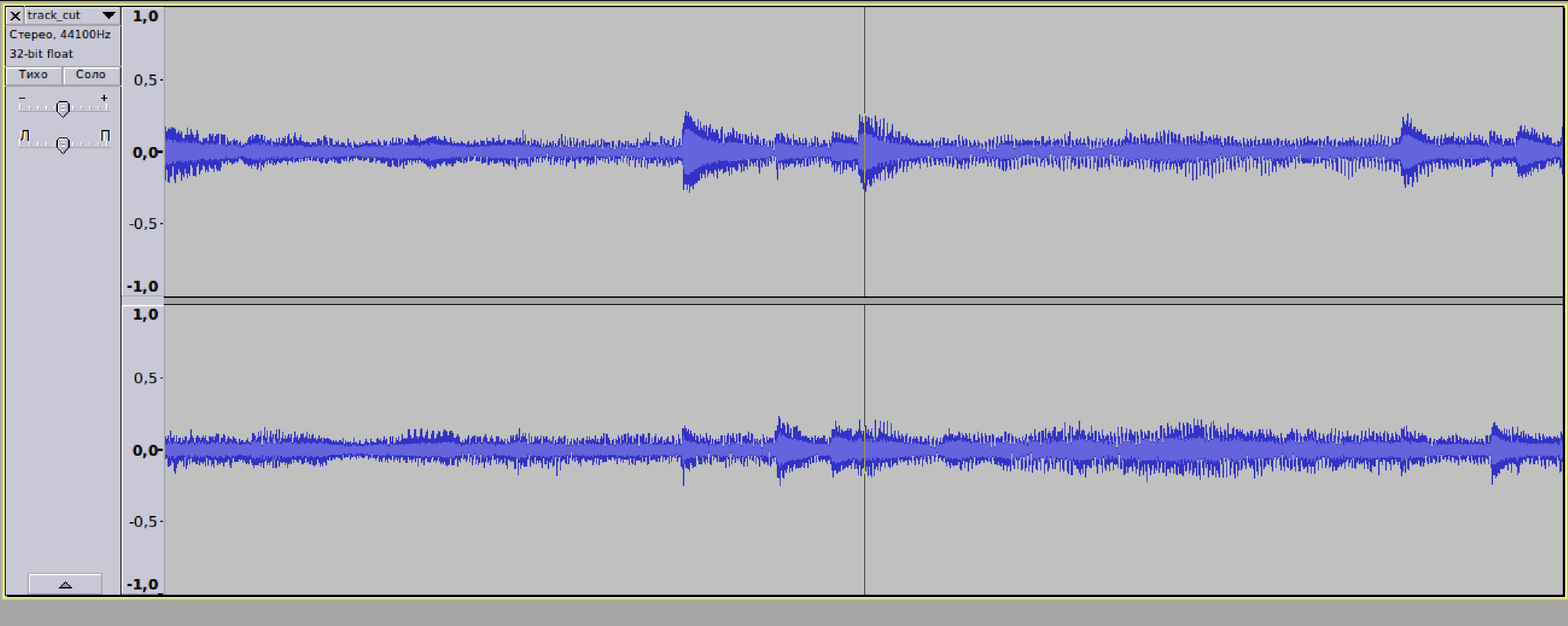

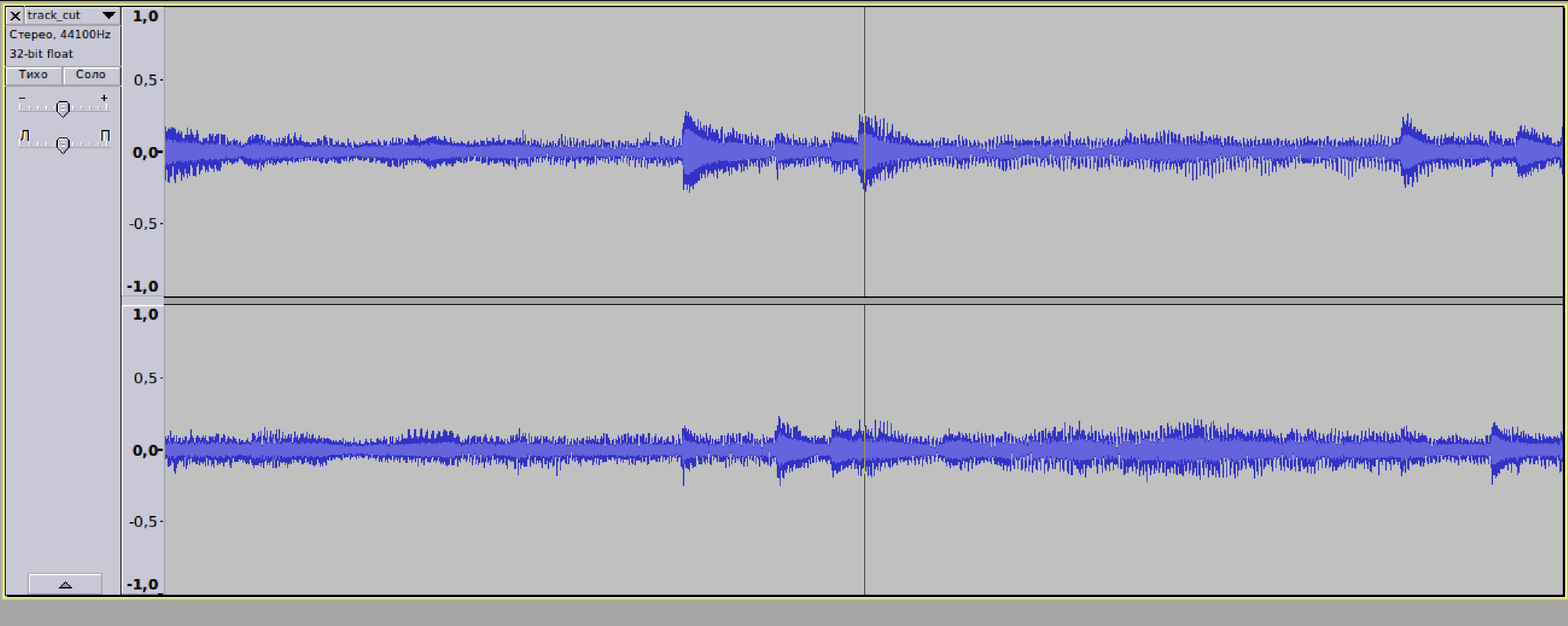

How text-based music search works

According to some estimates, about thirty million different music tracks exist in the world today. These figures come from various databases in which tracks are listed and catalogued. In music search you work with familiar objects: artists, albums, and tracks. To begin with, let's look at how music queries are distinguished from non-music ones. First of all, of course, by keywords in the query. Markers of musical intent can be words that clearly show the user is looking for music, for example “listen” or “who sings the song”, or vaguer ones such as “download” or “online”. At the same time, even in the seemingly simple case where the query contains part of the name of the desired track, artist, or album, ambiguity can cause problems.
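As a rough illustration of keyword-based detection, the sketch below scores a query by the musical markers it contains. The marker phrases come from the text; the weights and the noisy-OR combination are assumptions made for this example, not a description of how Yandex actually weighs them.

```python
import re

# Marker phrases from the lecture. The weights are hypothetical;
# a real system would learn them from query and click logs.
MARKERS = {
    "listen": 0.9,     # clear markers of musical intent
    "who sings": 0.9,
    "download": 0.3,   # blurry markers: often musical, but not always
    "online": 0.2,
}


def music_intent_score(query: str) -> float:
    """Return a 0..1 score combining matched markers with a noisy-OR."""
    q = query.lower()
    miss = 1.0  # probability that no matched marker indicates music
    for phrase, weight in MARKERS.items():
        if re.search(r"\b" + re.escape(phrase) + r"\b", q):
            miss *= 1.0 - weight
    return 1.0 - miss


print(round(music_intent_score("listen aquarium"), 2))         # 0.9
print(round(music_intent_score("download friday online"), 2))  # 0.44
print(music_intent_score("friday weather"))                     # 0.0
```

A query with no markers, such as [friday], scores zero even though it may be a band name, which is exactly the ambiguity that keyword markers alone cannot resolve.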


For example, take the names of five Russian artists:

- Friday

- Pizza

- Kino (“Cinema”)

- 30.02

- Aquarium

When a user enters such a query, it is not immediately clear what exactly they want to find. Therefore, the probability of each possible user intent is estimated, and results from several vertical searches are shown on the results page.
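One simple way to turn estimated intent probabilities into a mixed results page is to show every vertical whose probability clears a threshold, most likely first. The vertical names, probabilities, threshold, and block limit below are hypothetical.

```python
def choose_verticals(intents: dict[str, float], threshold: float = 0.15,
                     max_blocks: int = 3) -> list[str]:
    """Pick vertical result blocks for the page, ordered by intent probability."""
    ranked = sorted(intents.items(), key=lambda item: item[1], reverse=True)
    return [name for name, prob in ranked if prob >= threshold][:max_blocks]


# Hypothetical intent estimate for the query [aquarium]:
# a band, a general web topic, or a place on the map.
intents = {"web": 0.45, "music": 0.40, "maps": 0.10, "video": 0.05}
print(choose_verticals(intents))  # ['web', 'music']
```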

On the other hand, artists like to distort words in their own names and in track and album titles. Because of this, many of the linguistic query expansions used in search simply fail. For example:

- TI NAKAYA ODNA00 (Dorn)

- Sk8 (Nerves)

- Crawled up a dapoddai (pull)

- N1nt3nd0

- Oxxxymiron

- dom!no

- P!nk

- Sk8er Boi (Avril Lavigne)
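Because stylized spellings defeat ordinary linguistic processing, a search index can store an extra normalized key next to each original name. The sketch below uses a hypothetical substitution table for "leet"-style characters; it is one possible heuristic, not the method used in the lecture.

```python
import re

# Hypothetical substitution table for "leet"-style characters.
LEET = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a",
                      "5": "s", "7": "t", "!": "i", "@": "a", "$": "s"})


def normalize_title(title: str) -> str:
    """Build an extra index key for a stylized artist or track name."""
    s = title.lower().translate(LEET)
    s = re.sub(r"(.)\1{2,}", r"\1", s)  # collapse runs of 3+ identical chars: "xxx" -> "x"
    s = re.sub(r"[^\w\s]", " ", s)      # drop any remaining punctuation
    return " ".join(s.split())          # collapse whitespace


for name in ["N1nt3nd0", "P!nk", "Oxxxymiron", "dom!no"]:
    print(name, "->", normalize_title(name))
# N1nt3nd0 -> nintendo
# P!nk -> pink
# Oxxxymiron -> oxymiron
# dom!no -> domino
```

The mapping also damages names that legitimately contain digits: "30.02" becomes "eo o2". That is why such a key should be indexed alongside the original spelling, never instead of it.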

There are also many artists with similar or even identical names. Suppose the query [agilera] comes in. At first glance everything is clear: the user is looking for Christina Aguilera. But there is still some chance that the user needed a completely different artist, Paco Aguilera.
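A minimal sketch of this kind of disambiguation: compare the query with each word of every artist name using `difflib.SequenceMatcher` from the Python standard library, keep close matches, and weight them by popularity. The catalogue, play counts, and similarity threshold are hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical catalogue: artist name -> play count.
PLAYS = {"Christina Aguilera": 970_000, "Paco Aguilera": 30_000, "Shakira": 1_500_000}


def name_similarity(query: str, name: str) -> float:
    """Best similarity between the query and any single word of the name."""
    q = query.lower()
    return max(SequenceMatcher(None, q, word).ratio() for word in name.lower().split())


def rank_artists(query: str, min_similarity: float = 0.8) -> list[tuple[str, float]]:
    """Return matching artists with scores normalized to sum to 1."""
    scores = {}
    for name, plays in PLAYS.items():
        sim = name_similarity(query, name)
        if sim >= min_similarity:
            scores[name] = sim * plays  # similarity weighted by popularity
    total = sum(scores.values())
    if total == 0:
        return []
    return sorted(((name, s / total) for name, s in scores.items()),
                  key=lambda item: item[1], reverse=True)


for name, prob in rank_artists("agilera"):
    print(f"{name}: {prob:.2f}")
# Christina Aguilera: 0.97
# Paco Aguilera: 0.03
```

Paco Aguilera keeps a small but nonzero share, so a results page can still offer him lower down instead of discarding him.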

Joint performances by two or more artists are very common. For example, the song “Can't Remember to Forget You” should be attributed to both of its performers, Shakira and Rihanna. Accordingly, the database must allow several performers to be attached to one track.
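In a catalogue schema this means a many-to-many link between tracks and artists rather than a single artist field. A minimal sketch with hypothetical class and field names:

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Artist:
    artist_id: int
    name: str


@dataclass
class Track:
    track_id: int
    title: str
    # A list rather than a single field: a track may have several performers.
    artists: list[Artist] = field(default_factory=list)

    def credit(self) -> str:
        """Display string such as 'Shakira, Rihanna'."""
        return ", ".join(a.name for a in self.artists)


def tracks_by_artist(tracks: list[Track], artist_id: int) -> list[Track]:
    """A joint track must be found from the page of every one of its performers."""
    return [t for t in tracks if any(a.artist_id == artist_id for a in t.artists)]


shakira, rihanna = Artist(1, "Shakira"), Artist(2, "Rihanna")
song = Track(10, "Can't Remember to Forget You", [shakira, rihanna])
print(song.credit())                                   # Shakira, Rihanna
print([t.title for t in tracks_by_artist([song], 2)])  # ["Can't Remember to Forget You"]
```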

Another feature is regionality. Different regions may have artists with the same name, so the search has to take into account the region the query came from and show the artist who is most popular there. For example, there are artists named Zara in both Russia and Turkey, and their audiences practically do not overlap.
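A sketch of region-aware resolution, assuming a hypothetical table of per-region play counts: pick the candidate most popular in the user's region and fall back to overall popularity when there is no regional data. The IDs and numbers are invented for illustration.

```python
from typing import Optional

# Hypothetical candidates for one ambiguous artist name.
CANDIDATES = {"zara": ["zara_ru", "zara_tr"]}

# Hypothetical play counts: (artist_id, region) -> plays.
PLAYS = {
    ("zara_ru", "RU"): 120_000, ("zara_ru", "TR"): 800,
    ("zara_tr", "RU"): 1_500, ("zara_tr", "TR"): 200_000,
}


def total_plays(artist_id: str) -> int:
    return sum(n for (aid, _), n in PLAYS.items() if aid == artist_id)


def resolve_artist(name: str, region: str) -> Optional[str]:
    """Prefer the artist most played in the region; break ties by total plays."""
    ids = CANDIDATES.get(name.lower(), [])
    if not ids:
        return None
    return max(ids, key=lambda aid: (PLAYS.get((aid, region), 0), total_plays(aid)))


print(resolve_artist("Zara", "RU"))  # zara_ru
print(resolve_artist("Zara", "TR"))  # zara_tr
print(resolve_artist("Zara", "DE"))  # zara_tr (no regional data, so overall popularity decides)
```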

Track titles are not simple either. Cover versions, in which one artist records their own interpretation of another artist's track, are very common, and a cover may even be more popular than the original. For example, for the query [saw the night], the version by Zdob și Zdub is in most cases more relevant than the original by the band “Kino”. The situation is similar with remixes.

It is very important to be able to find tracks by a quotation from the lyrics. Often the user does not remember, or never knew, either the track title or the artist's name, only a line from the song. However, keep in mind that some common phrases occur in many different tracks at once.

To solve this problem, several methods are used together. First, the user's query history is taken into account: which genres and artists they prefer. Second, the popularity of the track and the artist plays an important role: how often people click a particular link in the search results and how long they listen to the track.
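These signals can be combined with a text-match score in many ways. The sketch below uses a hypothetical linear blend: an exact phrase match in the lyrics scores 1.0, and the final score mixes that with track popularity and the user's genre preferences. The weights, tracks, and lyrics are placeholders, not real data.

```python
import re
from dataclasses import dataclass


@dataclass
class Track:
    title: str
    genre: str
    lyrics: str
    popularity: float  # hypothetical 0..1 signal from clicks and listening time


def words(text: str) -> list[str]:
    return re.findall(r"\w+", text.lower())


def text_match(query: str, lyrics: str) -> float:
    """1.0 for an exact phrase match, otherwise the share of query words present."""
    q, lyr = words(query), words(lyrics)
    if not q:
        return 0.0
    if f" {' '.join(q)} " in f" {' '.join(lyr)} ":
        return 1.0
    present = set(lyr)
    return sum(w in present for w in q) / len(q)


def rank(query: str, tracks: list[Track], user_genres: dict[str, float]) -> list[str]:
    """Order matching tracks by text match, popularity, and the user's genre history."""
    scored = []
    for t in tracks:
        match = text_match(query, t.lyrics)
        if match < 0.5:
            continue  # the line is not really in this song
        boost = 0.5 + 0.3 * t.popularity + 0.2 * user_genres.get(t.genre, 0.0)
        scored.append((match * boost, t.title))
    return [title for _, title in sorted(scored, reverse=True)]


tracks = [
    Track("Track A", "pop", "and I love you so", 0.9),
    Track("Track B", "rock", "I love you tonight", 0.4),
    Track("Track C", "jazz", "blue moon", 1.0),
]
print(rank("I love you", tracks, {}))             # ['Track A', 'Track B']
print(rank("I love you", tracks, {"rock": 1.0}))  # ['Track B', 'Track A']
```

Without history the more popular track wins; for a user who mostly listens to rock, the same common phrase resolves to the rock track.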

Translation difficulties

Different countries often have their own established spellings of the names of performers and composers. The user may search for any of these variants, and different spellings may also occur in the database. The name of Pyotr Ilyich Tchaikovsky has about 140 spellings. Here are just some of them:

- Petr Ilyich Chaikovsky

- Peter Ilych Tchaikovsky

- Pyotr Ilyich Tchaikovsky

- Pyotr Il'ic Ciaikovsky

- PI Tchaikovski

- Pyotr Il'yich Tchaïkovsky

- Piotr I. Tchaikovsky

- Pyotr İlyiç Çaykovski

- Peter Iljitsch Tschaikowski

- Pjotr Iljitsch Tschaikowski
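Part of this variation can be absorbed by folding names to a common form (lowercase, no diacritics, no punctuation); the rest needs an explicit alias table. The sketch below uses Python's standard `unicodedata` module; the canonical ID `composer:tchaikovsky` is hypothetical, and the alias list is taken from the examples above.

```python
import unicodedata
from typing import Optional


def fold(name: str) -> str:
    """Lowercase, strip diacritics and punctuation, collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", name.lower())
    no_marks = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    kept = "".join(ch for ch in no_marks if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


VARIANTS = [
    "Petr Ilyich Chaikovsky", "Peter Ilych Tchaikovsky", "Pyotr Ilyich Tchaikovsky",
    "Pyotr Il'ic Ciaikovsky", "PI Tchaikovski", "Pyotr Il'yich Tchaïkovsky",
    "Piotr I. Tchaikovsky", "Pyotr İlyiç Çaykovski", "Peter Iljitsch Tschaikowski",
    "Pjotr Iljitsch Tschaikowski",
]
# Hypothetical alias table: folded spelling -> canonical entity ID.
ALIASES = {fold(v): "composer:tchaikovsky" for v in VARIANTS}


def resolve(query: str) -> Optional[str]:
    return ALIASES.get(fold(query))


print(fold("Pyotr Il'yich Tchaïkovsky"))  # pyotr ilyich tchaikovsky
print(resolve("PYOTR ILYIC CAYKOVSKI"))   # composer:tchaikovsky
```

Folding alone cannot map "Tschaikowski" to "Tchaikovsky", which is why the alias table is still needed. NFKD folding also strips the breve from Cyrillic "й" (turning it into "и"); that is harmless only if the same function is applied to both indexed names and queries.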

By the way, when we listed the main objects of music search, we did not mention composers. In most cases this object really does not play an important role, but classical music is an exception. There the situation is almost the opposite: you have to rely on the author of the work, and the performer is secondary, although it cannot be ignored either. Album and track titles in classical music also have their own peculiarities.

Audio analysis

Besides track metadata and lyrics, music search can also use data obtained by analyzing the audio signal directly. This makes it possible to solve several problems at once:

- Recognizing music from a fragment recorded with a microphone;

- Recognizing singing;

- Searching for fuzzy duplicates;

- Searching for cover versions and remixes;

- Extracting the melody from a polyphonic signal;

- Music classification;

- Auto-tagging;

- Searching for similar tracks / recommendations.

A digital audio signal can be represented as an image of a sound wave:

If we increase the level of detail of this image, i.e. stretch it along the time axis, sooner or later we will see points placed at regular intervals. These points are the moments at which the amplitude of the sound wave is measured:

The shorter the intervals between the points, the higher the sampling rate of the signal, and the wider the range of frequencies that can be encoded this way.
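The relation between sampling rate and frequency range is the Nyquist limit: a signal sampled at rate f_s can represent frequencies only up to f_s / 2. Above that limit, different tones produce identical samples (aliasing). The sketch below checks both facts with the standard library; the specific frequencies are arbitrary.

```python
import math


def sample_sine(freq_hz: float, sample_rate: int, n: int) -> list[float]:
    """Measure a sine wave's amplitude at n regularly spaced moments."""
    return [math.sin(2 * math.pi * freq_hz * k / sample_rate) for k in range(n)]


def nyquist(sample_rate: int) -> float:
    """Highest frequency representable at this sampling rate."""
    return sample_rate / 2


print(nyquist(44_100))  # 22050.0 (CD-quality audio)

# At 8 kHz, a 9 kHz tone yields the same samples as a 1 kHz tone:
low = sample_sine(1_000, 8_000, 16)
high = sample_sine(9_000, 8_000, 16)
print(all(abs(a - b) < 1e-9 for a, b in zip(low, high)))  # True
```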

We see that the amplitude of the oscillations changes over time and correlates with the loudness of the sound, while the oscillation frequency is directly related to pitch. How do we get information about frequency, i.e. convert the signal from the time domain to the frequency domain? This is where the Fourier transform comes to the rescue. It decomposes a periodic function into a sum of harmonics with different frequencies, and the coefficients of these terms tell us how strongly each frequency is present in the signal. However, we want to obtain the spectrum of the signal without losing its time component. For this we use the windowed (short-time) Fourier transform: we divide the audio signal into short segments, called frames, and instead of a single spectrum we get a set of spectra, one for each frame. Putting them together gives something like this:
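A minimal pure-Python sketch of the windowed Fourier transform: split the signal into overlapping frames, multiply each by a Hann window, and take the magnitude of its discrete Fourier transform. The frame size, hop, and window choice are assumptions for illustration; real systems use an FFT (for example `numpy.fft.rfft`) instead of this O(n²) loop.

```python
import cmath
import math


def hann(n: int) -> list[float]:
    """Hann window: tapers frame edges to reduce spectral leakage."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def dft_magnitudes(frame: list[float]) -> list[float]:
    """Magnitudes of DFT bins 0..n/2; bin k is frequency k * sample_rate / n."""
    n = len(frame)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)))
            for k in range(n // 2 + 1)]


def stft(signal: list[float], frame_size: int = 256, hop: int = 128) -> list[list[float]]:
    """Spectrogram as a list of columns: result[t][k] = amplitude of bin k in frame t."""
    window = hann(frame_size)
    columns = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = [s * w for s, w in zip(signal[start:start + frame_size], window)]
        columns.append(dft_magnitudes(frame))
    return columns


sample_rate = 8_000
tone = [math.sin(2 * math.pi * 1_000 * t / sample_rate) for t in range(2_048)]
spec = stft(tone)
peaks = [max(range(len(col)), key=col.__getitem__) for col in spec]
print(len(spec), set(peaks))  # 15 {32}  (1000 Hz * 256 / 8000 Hz = bin 32)
```

Each column of `spec` is one frame's spectrum, so plotting the columns side by side, with color for amplitude, gives the spectrogram.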

Time is shown on the horizontal axis and frequency on the vertical axis. Color indicates amplitude, i.e. how much signal power there is at a particular moment in a particular frequency band.

Feature classification

Most of the analysis methods used in music search work with spectrograms like this. Before moving on, we need to figure out which spectrogram features may be useful to us. Features can be classified in two ways. First, by time scale:

- Frame-level: features that describe one column of the spectrogram matrix.

- Segment-level: features that combine several frames.

- Global-level: features that describe the entire track.
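One common way to move between these time scales is to summarize a frame-level feature with statistics over groups of frames. The sketch below uses non-overlapping segments and mean / standard deviation; the segment length and the choice of statistics are assumptions, and practical systems often use overlapping windows and richer summaries.

```python
import statistics


def segment_level(frame_values: list[float], frames_per_segment: int) -> list[float]:
    """Average a frame-level feature over consecutive, non-overlapping groups of frames."""
    return [statistics.fmean(frame_values[i:i + frames_per_segment])
            for i in range(0, len(frame_values), frames_per_segment)]


def global_level(frame_values: list[float]) -> dict[str, float]:
    """Describe the whole track with summary statistics of a frame-level feature."""
    return {"mean": statistics.fmean(frame_values),
            "std": statistics.pstdev(frame_values)}


energy_per_frame = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]  # illustrative frame-level values
print(segment_level(energy_per_frame, 3))       # [2.0, 5.0]
print(global_level(energy_per_frame)["mean"])   # 3.5
```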

Second, features can be classified by level of representation, i.e. by how high-level the abstractions and concepts describing them are.

- Low-level:

  - Zero Crossing Rate: helps distinguish music from speech;

  - Short-time energy: reflects how the signal's energy changes over time;

  - Spectral Centroid: the center of mass of the spectrum;

  - Spectral Bandwidth: the spread of the spectrum around its center of mass;

  - Spectral Flatness Measure: characterizes how flat ("smooth") the spectrum is, which helps distinguish noise-like signals from signals with a pronounced tone.

- Mid-level:

  - Beat tracker;

  - Pitch Histogram;

  - Rhythm Patterns.

- High-level:

  - Music genres;

  - Mood: cheerful, sad, aggressive, calm;

  - Vocal / Instrumental;

  - Perceived tempo of the music (slow, medium, fast);

  - Gender of the vocalist.
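The low-level features above have short standard definitions. The sketch below implements common textbook variants in plain Python; exact definitions differ between libraries (normalization, windowing, which spectrum is used), so treat these as illustrative rather than as the formulas used in the lecture.

```python
import math


def zero_crossing_rate(frame: list[float]) -> float:
    """Share of adjacent sample pairs whose signs differ (high for noisy or unvoiced sound)."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def short_time_energy(frame: list[float]) -> float:
    """Mean squared amplitude within one frame."""
    return sum(x * x for x in frame) / len(frame)


def spectral_centroid(mags: list[float], freqs: list[float]) -> float:
    """Magnitude-weighted mean frequency: the spectrum's center of mass."""
    total = sum(mags)
    return sum(f * m for f, m in zip(freqs, mags)) / total if total else 0.0


def spectral_bandwidth(mags: list[float], freqs: list[float]) -> float:
    """Magnitude-weighted standard deviation of frequency around the centroid."""
    total = sum(mags)
    if not total:
        return 0.0
    c = spectral_centroid(mags, freqs)
    return math.sqrt(sum(m * (f - c) ** 2 for f, m in zip(freqs, mags)) / total)


def spectral_flatness(power: list[float], eps: float = 1e-12) -> float:
    """Geometric over arithmetic mean of the power spectrum: ~1 for noise, ~0 for a pure tone."""
    geometric = math.exp(sum(math.log(p + eps) for p in power) / len(power))
    arithmetic = sum(power) / len(power)
    return geometric / (arithmetic + eps)


freqs = [0.0, 100.0, 200.0]
print(zero_crossing_rate([1.0, -1.0, 1.0, -1.0]))  # 1.0
print(spectral_centroid([1.0, 0.0, 1.0], freqs))    # 100.0
print(spectral_bandwidth([1.0, 0.0, 1.0], freqs))   # 100.0
print(spectral_flatness([1.0] * 4) > 0.99, spectral_flatness([1.0, 0.0, 0.0, 0.0]) < 0.01)  # True True
```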

After watching the lecture to the end, you will learn exactly how these features help solve music search problems, and what computer vision and machine learning have to do with it.

Source: https://habr.com/ru/post/220917/
