How to Write a DPAA Driver

This article is intended for a narrow circle of developers who are hooked on Freescale QorIQ processors. It is a brief retelling of the main points of the documentation on the DPAA network architecture.

DPAA (Data Path Acceleration Architecture) is a proprietary architecture developed by Freescale and built into its latest processor series. Its main purpose is to offload the routine work of processing network traffic from the processor cores. We have developed drivers for this architecture on the p30xx / p4080 processor series and are ready to share our experience.

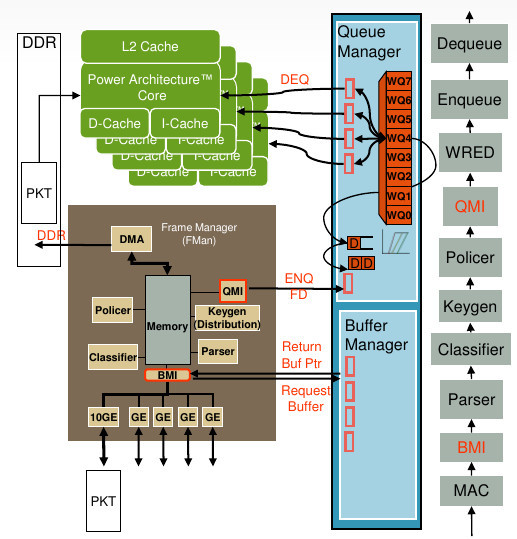

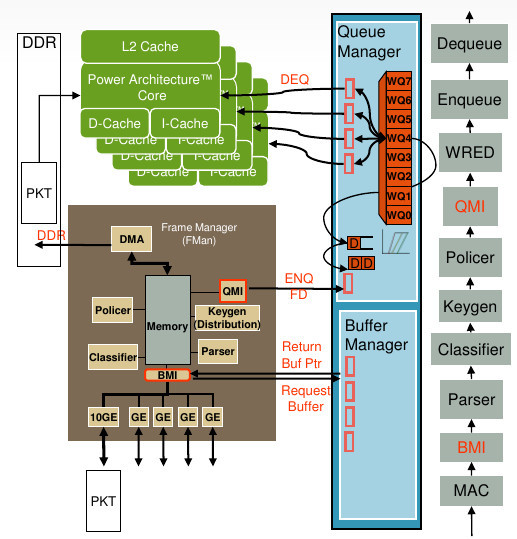

DPAA can operate in two modes: normal mode and simplified mode. The simplified mode is usually used by bootloaders and by drivers whose developers did not have the time, or were not able, to master the normal mode. It limits the maximum processing speed to 100 Mbps and rules out hardware acceleration of data processing. In normal mode we get five gigabit ports of the datapath three-speed Ethernet controller (DTSEC), one 10 Gigabit Ethernet media access controller (10GEC), and a total bandwidth of 12 Gbit/s. The figure shows the block diagram of the p3040 processor, with DPAA circled in red.

According to Freescale representatives, DPAA is simple. It is so simple that Freescale's own Linux driver is still under development to this day, and its total source code has grown past 2 megabytes, although that figure includes the test modules. We could not use that driver for our task, but we peeked at it from time to time, because the documentation, written the way it is, could not answer all our questions about the DPAA initialization order.

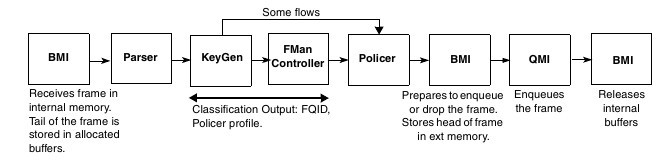

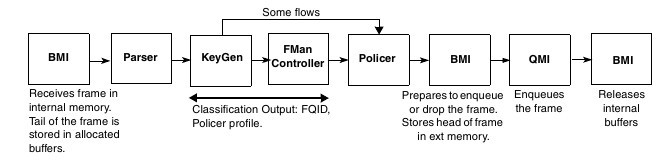

Information flow

The basic unit of information for a network driver is the packet. DPAA stores packet data in buffers. Buffers are combined into frames, each described by a frame descriptor (FD); frames are combined into frame queues (FQ); frame queues are combined into work queues (WQ); work queues are combined into channels; and channels are attached to portals. This is not your e1000.

This hierarchy is needed to preserve packet order, to prioritize packet reception and transmission, to sort packets by protocol, and for other goodies. The last item, by the way, gives us a theoretical chance to get rid of packet parsing on the processor.
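To make the nesting easier to picture, here is a minimal containment model in C. It only mirrors the ownership chain described above; every struct and field name is an assumption made for illustration, and none of them reflects the real hardware descriptor layouts.

```c
#include <assert.h>
#include <stdint.h>

/* Illustrative containment model only: names and fields are assumptions
 * and do not mirror the hardware descriptor layouts. */
struct portal      { unsigned cpu; };                 /* owned by one core */
struct channel     { struct portal *portal; };        /* attached to a portal */
struct work_queue  { struct channel *channel; uint8_t priority; };
struct frame_queue { uint32_t fqid; struct work_queue *wq; };
struct frame       { uint64_t buf_phys; uint32_t len; struct frame_queue *fq; };

/* Walk the chain upwards: which core ends up servicing this frame? */
static unsigned frame_target_cpu(const struct frame *f)
{
    assert(f && f->fq && f->fq->wq);
    assert(f->fq->wq->channel && f->fq->wq->channel->portal);
    return f->fq->wq->channel->portal->cpu;
}
```

In this model, choosing the frame queue for a frame already decides its priority (through the work queue) and the core that will see it (through the channel and portal).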

Buffer Manager (BMan) and Queue Manager (QMan)

The buffer manager and the queue manager are DPAA subsystems that provide buffer pooling and inbound / outbound queue services. They are part of DPAA, but they do not have to serve network interfaces only: they can be loaded with other tasks as well.

Portals

Each manager has portals: a mechanism for sharing the manager's resources, designed to work with individual processor cores. To avoid synchronization problems, each processor core gets at least one portal of its own. The queue manager also distinguishes between direct portals and software portals. This is an internal concept of the queue manager; to configure DPAA it is enough to understand that software portals are connected to the processor cores, while direct portals are connected to the frame manager and its components. Each portal is configured through a set of registers split into two parts, cached and non-cached, which must be mapped with the corresponding memory attributes onto an aligned address range.

Communication with managers consists of issuing commands and processing responses.

Control commands

Commands are built in a similar way for QMan and BMan. For each type of command there is a ring buffer (the command queue) whose size is fixed and is a power of two. For example, the “give buffer” command has a queue of 2 command slots, and the “take buffer” command has 8. Each command ring has a consumer, which processes commands, and a producer, which issues them. For the “send packet” command, for example, the driver is the producer and QMan is the consumer. There are three ways to control the processing of a command queue: by a validity bit, or by begin / end pointers located either in the cached or in the non-cached area of the manager's registers. To my taste, the validity bit is the better-implemented option. It works as follows: the queue consumer treats a change of the validity bit inside a command as the signal to process it. The bit has changed, so the command is new.
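The validity-bit scheme can be sketched as a small software model. This is not the real portal layout: the slot format, the bit position, and all names are assumptions. The idea it illustrates is that the expected value of the bit flips on every lap around the ring, so the consumer can detect a fresh command by looking at the slot alone.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical model of a command ring; names, bit position and slot
 * layout are illustrative, not the real QMan/BMan format. */
#define RING_SLOTS 8u      /* fixed size, a power of two */
#define VALID_BIT  0x80u   /* assumed position of the validity bit */

struct cmd {
    uint8_t verb;          /* bit 7: validity bit, bits 0-6: opcode */
    uint8_t payload[31];   /* pads the command to 32 bytes */
};

struct ring {
    struct cmd slot[RING_SLOTS];
    unsigned prod;         /* free-running producer counter */
    unsigned cons;         /* free-running consumer counter */
};

static void ring_init(struct ring *r) { memset(r, 0, sizeof(*r)); }

/* Validity-bit value that marks a fresh command on the current lap. */
static uint8_t lap_vb(unsigned counter)
{
    return ((counter / RING_SLOTS) & 1u) ? 0u : VALID_BIT;
}

/* Producer side (the driver). Returns 0, or -1 if the ring is full.
 * The model peeks at r->cons; hardware exposes a consumer index instead. */
static int ring_produce(struct ring *r, uint8_t opcode)
{
    if (r->prod - r->cons == RING_SLOTS)
        return -1;
    struct cmd *c = &r->slot[r->prod & (RING_SLOTS - 1u)];
    memset(c->payload, 0, sizeof(c->payload));
    c->verb = (uint8_t)((opcode & 0x7fu) | lap_vb(r->prod));
    r->prod++;
    return 0;
}

/* Consumer side: looks only at the validity bit of its next slot.
 * Returns the opcode, or -1 if no new command is present. */
static int ring_consume(struct ring *r)
{
    const struct cmd *c = &r->slot[r->cons & (RING_SLOTS - 1u)];
    if ((c->verb & VALID_BIT) != lap_vb(r->cons))
        return -1;
    r->cons++;
    return c->verb & 0x7f;
}
```

Because the polarity alternates per lap, slots never need to be cleared after consumption: a stale command from the previous lap carries the wrong bit value and is ignored.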

Commands and their responses live in the cached memory area, and their size is a multiple of the local bus width, 32 bytes. This is convenient: the data does not have to be copied and can be used in place. The main thing is to keep the cache in sync. Writing a command is therefore a sequence of actions: invalidate the cache line, fill in the fields, issue a barrier, set the validity bit, and flush the cache line to the bus. Before reading a response, remember to invalidate the cache line again.
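The ordering of those steps is what matters, so the sketch below records it. The cache and barrier functions are host-side stubs that only log their call; on the target they would be replaced by the platform's real cache-management and barrier primitives. All names here are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum step { STEP_INVALIDATE, STEP_FILL, STEP_BARRIER, STEP_SET_VALID, STEP_FLUSH };

static enum step trace_log[8];
static unsigned trace_len;
static void note(enum step s) { if (trace_len < 8) trace_log[trace_len++] = s; }

/* Host stubs (assumptions): a real driver would issue cache and barrier
 * instructions here instead of just logging the call. */
static void cache_invalidate(void *line) { (void)line; note(STEP_INVALIDATE); }
static void write_barrier(void)          { note(STEP_BARRIER); }
static void cache_flush(void *line)      { (void)line; note(STEP_FLUSH); }

struct cmd32 { uint8_t verb; uint8_t body[31]; };   /* one 32-byte command */

static void cmd_commit(struct cmd32 *slot, uint8_t opcode, uint8_t valid_bit,
                       const void *body, size_t len)
{
    assert(len <= sizeof(slot->body));
    cache_invalidate(slot);                    /* 1. drop any stale copy */
    memcpy(slot->body, body, len);             /* 2. fill everything but verb */
    note(STEP_FILL);
    write_barrier();                           /* 3. body must land before verb */
    slot->verb = (uint8_t)(opcode | valid_bit);/* 4. publish the command */
    note(STEP_SET_VALID);
    cache_flush(slot);                         /* 5. push the line to the bus */
}
```

Writing the verb byte last, after the barrier, is the design point: the consumer can never observe a valid command whose body is only half written.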

Buffer Manager (BMan)

In the comics kindly provided by Freescale, the buffer manager is drawn as a paper tray for office equipment. You load paper of one size into each tray, and the device takes it as needed.

This is a very rough analogy. In reality the buffer manager manages only pointers: the physical addresses of memory areas of equal size. Strictly speaking, BMan does not care what the 32-bit values it stores mean, but for use within DPAA they must be physical addresses. The operating system allocates buffers for network packets from main memory and hands their physical addresses to the buffer manager, specifying the number of the pool they will belong to. The maximum number of pools is fixed at 0x3f, and all buffers within one pool are the same size. The number of buffers (pointers) the pools can hold depends on the amount of memory we allocate for them during BMan initialization.

The use of buffers is governed by a gentlemen's agreement between the operating system and the other processor components on the internal bus. A buffer must be requested from the buffer manager before use and released after use. Releasing a buffer is no different from adding it to the pool initially. Communication with the buffer manager therefore boils down to two commands: release a buffer and acquire a buffer. The command format allows up to 8 buffers to be handled per command.
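A software model of one buffer pool shows why seeding and freeing are the same operation. The capacity, the LIFO order, and the function names are assumptions of this sketch; only the limit of 8 buffers per command comes from the text.

```c
#include <assert.h>
#include <stdint.h>

#define POOL_CAPACITY 64u  /* illustrative; really set by the memory given to BMan */
#define BUFS_PER_CMD  8u   /* one command carries at most 8 buffers */

struct buf_pool {
    uint64_t addr[POOL_CAPACITY];  /* physical addresses, opaque to the pool */
    unsigned count;
};

/* "Release": used both to seed the pool and to give buffers back.
 * Returns how many buffers this single command accepted. */
static unsigned pool_release(struct buf_pool *p, const uint64_t *bufs, unsigned n)
{
    unsigned done = 0;
    assert(p && bufs);
    if (n > BUFS_PER_CMD)
        n = BUFS_PER_CMD;
    while (done < n && p->count < POOL_CAPACITY)
        p->addr[p->count++] = bufs[done++];
    return done;
}

/* "Acquire": returns how many buffers were actually handed out. */
static unsigned pool_acquire(struct buf_pool *p, uint64_t *bufs, unsigned n)
{
    unsigned done = 0;
    assert(p && bufs);
    if (n > BUFS_PER_CMD)
        n = BUFS_PER_CMD;
    while (done < n && p->count > 0)
        bufs[done++] = p->addr[--p->count];
    return done;
}
```

A caller that needs to seed more than 8 buffers simply loops over `pool_release` until everything has been accepted.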

The buffer manager can also be asked to raise an interrupt when a pool is running out of buffers; this is configured with a threshold and a hysteresis value.
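The text does not spell out the register semantics, so the following is only one plausible reading of "threshold and hysteresis": the pool enters a depleted state when the free count drops below one level and leaves it only after climbing back to a higher level. Field and function names are invented for the sketch.

```c
#include <assert.h>
#include <stdbool.h>

/* Assumed two-level scheme; not taken from the BMan register description. */
struct depletion {
    unsigned enter_below;  /* become depleted when free count < this */
    unsigned exit_at;      /* recover when free count >= this (> enter_below) */
    bool     depleted;
};

/* Feed the current free-buffer count. Returns true when the state changed,
 * which is the moment an interrupt would be raised. */
static bool depletion_update(struct depletion *d, unsigned free_bufs)
{
    bool was = d->depleted;
    assert(d->exit_at > d->enter_below);
    if (!d->depleted && free_bufs < d->enter_below)
        d->depleted = true;
    else if (d->depleted && free_bufs >= d->exit_at)
        d->depleted = false;
    return d->depleted != was;
}
```

The gap between the two levels keeps a pool that hovers around the threshold from generating a storm of interrupts.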

Queue Manager (QMan)

The main tasks of the queue manager are to distribute load across the processor cores, to configure queues, and to generate interrupts on queue events.

It is the queue manager that raises an interrupt when packets arrive on any of the DPAA network interfaces. Why is it done this way? If we had 5 separate network cards on separate interrupts, how many interrupt handlers would we need? Five. With DPAA only one handler is required, and it can run on every processor core. DPAA supports 5 network ports whose traffic can be made available to all processor cores. The queue manager distributes the load across the cores according to the configured scheduling algorithm.

Data processing subsystems

DPAA includes several subsystems for processing data (network traffic). Our driver does not support them at the moment, so here is only a brief look at their main features.

The SEC encryption accelerator encrypts and decrypts packets. It knows the IPsec, 802.1AE, and SSL / TLS protocols; the RSA, DES, and AES encryption algorithms; the SHA-*, MD5, and HMAC hash algorithms; and also RC4 / ARC4, CRC32, and more.

The PME pattern-matching accelerator processes regular expressions and applies them to network traffic at 9 Gbit/s. Up to 32K patterns of up to 128 bits each can be matched simultaneously. The result of matching is a “coloring” of frames that other DPAA subsystems can use.

These components can be stages of a network traffic processing pipeline and can operate in parallel.

Frame Manager (FMan)

The frame manager (FMan) is the control center of DPAA. It interacts with the queue manager, the buffer manager, DTSEC, and the data processing subsystems; it recognizes packets and sorts them by protocol; and it decides where each packet goes next, depending on the settings. The p4080 and higher processors have two frame manager instances. Example of a packet flow pattern:

To realize the full potential of DPAA, you have to dig into the network stack. I did not have the source code of the network stack, so the driver was tested on an experimental BSD stack. For that reason (and because support for them is not implemented) we do not consider using all these subsystems here. Even so, the BSD stack had to be slightly modified to get adequate performance.

Ports

The sources and destinations of data in the frame manager are ports. Port roles are fixed: there are 1 / 2.5 Gb receive ports, 1 / 2.5 Gb transmit ports, 10 Gb receive ports, 10 Gb transmit ports, and internal ports. Internal ports are meant for processing data that is not tied to the DPAA network interfaces.

DPAA Initialization

BMan initialization requires allocating memory for the buffer pointers. The start address of this memory and its size must be a multiple of 2^n. The physical address of the allocated region is written to the BMan registers.

QMan initialization requires memory for the pointers to frame descriptors and frame data; its start address and size must also be a multiple of 2^n.

FMan initialization sets the initial parameters of the QMan / BMan interface, DMA, and other components, and configures the path packets take by setting the appropriate destination id (Next Invoked Action / NIA).

Initializing DTSEC, the MAC, and the PHY poses no difficulties and is quite standard for network drivers.

Finally, we need to initialize the portals, the interrupts for all managers (the MPIC has already been configured in advance), the buffers in BMan, and the queues in QMan.
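The memory constraint for BMan and QMan is easy to get wrong, so a small check before programming the registers can help. The sketch reads "a multiple of 2^n" as "the size is a power of two and the base address is aligned to that size"; that reading is an assumption, and the helper names are made up.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

static bool is_pow2(uint64_t x)
{
    return x != 0 && (x & (x - 1)) == 0;
}

/* Assumed rule: size is a power of two and base is aligned to size. */
static bool region_ok(uint64_t base, uint64_t size)
{
    return is_pow2(size) && (base & (size - 1)) == 0;
}

/* Smallest power of two >= x, or 0 if it does not fit in 64 bits. */
static uint64_t round_up_pow2(uint64_t x)
{
    uint64_t p = 1;
    if (x > (UINT64_C(1) << 63))
        return 0;
    while (p < x)
        p <<= 1;
    return p;
}
```

A driver could round the requested size up with `round_up_pow2`, allocate physically contiguous memory with that alignment, and verify the result with `region_ok` before writing the base address to the manager.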

Size and number of buffers for network traffic

In theory, up to 6 buffer types (sizes) can be created per port. This is useful if we want to save memory: why should an ICMP packet occupy a 2 KB buffer? Let's create a pool of 128-byte buffers! Why split jumbo frames? Create a small pool of 10 KB buffers! And a large pool of 1.6 KB buffers.

In practice, after a packet is processed each buffer has to be returned to its own pool. For that you need to compute something and store it somewhere; I am lazy, memory is cheap, and less code means fewer errors. So we got by with a single pool of 2 KB buffers shared by all ports.
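For comparison, the multi-pool variant that we decided against boils down to a "smallest pool that fits" lookup plus remembering the pool id with every buffer. The pool ids and the exact sizes below are illustrative assumptions (1664 stands in for "1.6 KB").

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

struct pool_cfg { int id; uint32_t buf_size; };

/* Must be sorted by ascending buffer size. Values are illustrative. */
static const struct pool_cfg pools[] = {
    { 1,   128 },   /* small control traffic such as ICMP */
    { 2,  1664 },   /* ordinary Ethernet frames */
    { 3, 10240 },   /* jumbo frames */
};

/* Returns the id of the smallest pool whose buffers fit the frame,
 * or -1 if the frame is larger than any configured buffer. */
static int pick_pool(uint32_t frame_len)
{
    for (size_t i = 0; i < sizeof(pools) / sizeof(pools[0]); i++)
        if (frame_len <= pools[i].buf_size)
            return pools[i].id;
    return -1;
}
```

The returned id is exactly the extra piece of state that has to travel with the buffer so it can be released to the right pool later; a single shared pool makes that bookkeeping unnecessary.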

Number of queues (FQ) per network interface

In the minimal configuration, receiving packets requires one queue for incoming packets, one queue for errors, and one or more internal queues. Sending packets requires one queue for receiving errors, one queue for receiving confirmations, and one or more queues for outgoing packets. For sending, we use as many queues as there are slots in the send command ring: 8.

Interestingly, the Freescale driver uses BMan to hand out queue numbers. One of its buffer pools stores the numbers from 0 to 0x1ff, which are the numbers of free queues; a number is requested from the pool whenever a queue is initialized.

Queue Settings

The queue parameters must specify the queue type and the channel and work queue it belongs to. Two interesting parameters are the contextA and contextB fields. The contextB field can hold any number, for example a pointer to a structure with the data needed to process packets from this queue. This value is available in the interrupt handler. The contextA field can hold a physical address whose data will be loaded into the cache, straight into L2, before the interrupt arrives. The data size is a multiple of 32 bytes and is specified in the parameters.
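Here is a minimal sketch of the contextB idea. It assumes the field is 32 bits wide, so instead of a raw pointer (which would not fit on a 64-bit host) it stores an index into a table of per-queue contexts; on a 32-bit target the pointer itself could be stored. The descriptor struct and all names are hypothetical, not the real FQ descriptor.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_RX_CTX 4u

struct rx_ctx  { unsigned port; unsigned long packets; };

/* Hypothetical software view of queue parameters. */
struct fq_desc { uint32_t fqid; uint8_t channel; uint8_t wq; uint32_t context_b; };

static struct rx_ctx ctx_table[MAX_RX_CTX];

/* At queue setup: remember which context belongs to this queue. */
static void fq_bind(struct fq_desc *fq, uint32_t ctx_index)
{
    assert(ctx_index < MAX_RX_CTX);
    fq->context_b = ctx_index;
}

/* At dequeue time the value comes back with the frame. */
static struct rx_ctx *ctx_from_context_b(uint32_t context_b)
{
    return context_b < MAX_RX_CTX ? &ctx_table[context_b] : NULL;
}

static int on_dequeue(uint32_t context_b)
{
    struct rx_ctx *ctx = ctx_from_context_b(context_b);
    if (!ctx)
        return -1;
    ctx->packets++;        /* no lookup by queue number was needed */
    return 0;
}
```

The benefit is that the handler goes from a dequeued frame to its per-queue state in constant time, without searching by queue number.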

Reception process

The packet passes through the PHY, MAC, and DTSEC and arrives at a frame manager port. The frame manager selects a suitable buffer size for the packet and requests the buffer from BMan through the BMI interface. What happens to the packet next depends on the settings: it can be decrypted, filtered, or sorted. In the simplest case we just hand it over to QMan. The queue manager places it in the appropriate queue and channel and raises an interrupt. This process is illustrated in the Freescale presentation:

All the interrupt handler has to do is release the packet-processing semaphore. Process packets in the context of an interrupt handler in a real-time OS?! You should not do that in a conventional OS either. So the interrupt handler wakes a thread that reads the queue and processes the buffers. Of the four buffer formats I used two: single (1 packet, 1 buffer) and fragmented (S/G), which is nothing new for network drivers. That said, the Freescale driver gained S/G buffer support only in January of this year. As the network stack finishes with packets, their buffers are returned to the buffer manager, into the pool from which FMan took them.
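The split between the handler and the worker can be modeled on a host without any RTOS. In this single-threaded sketch an atomic counter stands in for the semaphore and a small array stands in for the QMan dequeue ring; a real driver would block the worker thread on an OS semaphore instead of polling. All names are hypothetical.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdint.h>

#define RXQ_SLOTS 16u

static uint32_t rxq[RXQ_SLOTS];      /* stand-in for the dequeue ring */
static unsigned rxq_head, rxq_tail;  /* free-running indices */
static atomic_uint rx_pending;       /* stand-in for the semaphore */
static unsigned long processed_bytes;

/* Interrupt handler: signal only, no packet processing here. */
static void rx_isr(void)
{
    atomic_fetch_add(&rx_pending, 1);
}

/* What the hardware side would do: queue a frame, then interrupt. */
static int hw_enqueue(uint32_t frame_len)
{
    if (rxq_head - rxq_tail == RXQ_SLOTS)
        return -1;
    rxq[rxq_head % RXQ_SLOTS] = frame_len;
    rxq_head++;
    rx_isr();
    return 0;
}

/* Worker body: consume all pending signals at once, then drain the queue.
 * Returns the number of frames handled. */
static unsigned rx_worker_poll(void)
{
    unsigned handled = 0;
    if (atomic_exchange(&rx_pending, 0) == 0)
        return 0;
    while (rxq_tail != rxq_head) {
        processed_bytes += rxq[rxq_tail % RXQ_SLOTS];
        rxq_tail++;
        handled++;
    }
    return handled;
}
```

Draining the whole queue per wake-up means several interrupts that arrive close together cost only one pass of the worker.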

Transmission process

We build an S/G list in the required format from the packet and put it in a QMan queue. The rest of the process mirrors reception, and the driver takes no further part in it.
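Building the S/G list from a fragmented packet can be sketched as follows. The entry layout (address, length, a "final" marker on the last entry) is a simplified assumption rather than the exact hardware format, and `struct frag` is a stand-in for whatever fragment chain the network stack provides.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

struct frag     { uint64_t phys; uint32_t len; };              /* from the stack */
struct sg_entry { uint64_t addr; uint32_t len; bool final; };  /* simplified */

/* Fills `table` from the fragment chain, skipping empty fragments.
 * Returns the number of entries used, or -1 if the packet is empty
 * or the table is too small. The total length is stored in *total. */
static int sg_build(const struct frag *frags, size_t n,
                    struct sg_entry *table, size_t cap, uint32_t *total)
{
    size_t used = 0;
    uint32_t sum = 0;
    assert(frags && table && total);
    for (size_t i = 0; i < n; i++) {
        if (frags[i].len == 0)
            continue;
        if (used == cap)
            return -1;
        table[used].addr  = frags[i].phys;
        table[used].len   = frags[i].len;
        table[used].final = false;
        sum += frags[i].len;
        used++;
    }
    if (used == 0)
        return -1;
    table[used - 1].final = true;   /* mark the end of the list */
    *total = sum;
    return (int)used;
}
```

The frame descriptor that is enqueued would then point at the table itself and carry the total length, so the hardware can walk the entries on its own.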

Conclusion

Of course, this describes only part of the DPAA initialization process, just enough to use it, cynically, as a plain 5-port Ethernet adapter. I hope development will not stop here and that an exciting journey into the jungle of hardware-accelerated network traffic processing lies ahead.

Source: https://habr.com/ru/post/220529/
