Zabbix + Iostat: monitoring the disk subsystem

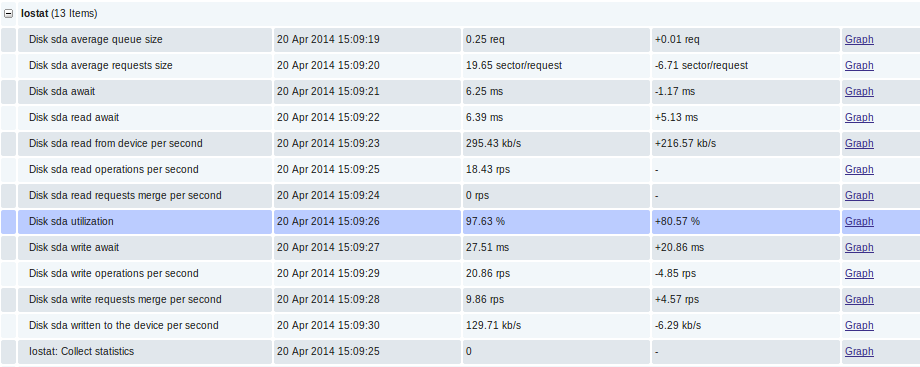

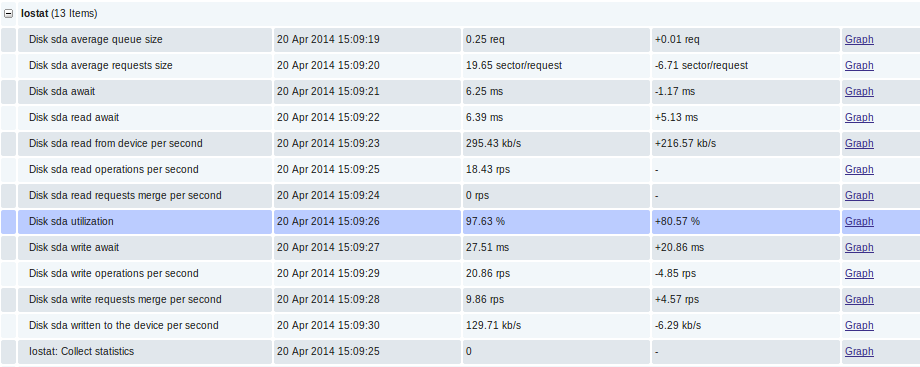

Why?

The disk subsystem is one of the most important parts of a server, and a great deal depends on how heavily it is loaded: how fast content is delivered, for example, or how quickly a database responds. This matters most for mail servers, file servers, and database servers. Disk performance indicators therefore need to be monitored. With performance graphs of the disk subsystem, we can decide to add capacity long before trouble strikes. It is also useful to compare the graphs from release to release to see how the developers' work affects the load.

The rest of this article describes the monitoring and how to configure it.

Dependencies:

Monitoring is implemented with the Zabbix agent and two utilities: awk and iostat (from the sysstat package). awk ships with most distributions by default, whereas iostat has to be installed as part of the sysstat package (thanks to Sebastien Godard and his co-authors).

Known limitations:

Monitoring requires sysstat 9.1.2 or later, because that release introduced a very important change: "Added r_await and w_await fields to iostat's extended statistics". Be careful here: some distributions, CentOS for example, ship an older, "stable" version of sysstat with fewer features.
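One way to check this requirement without comparing version numbers is to look for the two columns directly in the header of iostat's extended output. The following is a minimal sketch under that assumption; `has_await_fields` is a hypothetical helper name and is not part of the repository.

```shell
#!/bin/sh
# Hypothetical helper (not part of the repository): succeeds when the
# text read from stdin contains a line with both r_await and w_await,
# as the header of extended iostat output does on new enough sysstat.
has_await_fields() {
    awk '
        /r_await/ && /w_await/ { found = 1 }
        END { exit !found }
    '
}

# Possible use on a monitored host:
#   iostat -dx 1 1 | has_await_fields && echo "r_await/w_await available"
```

If the check fails, the installed sysstat most likely predates 9.1.2 and needs to be upgraded before the template can show read and write latency.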

As for the Zabbix version, it makes no real difference: everything works on both 2.0 and 2.2. It does not work on 1.8, because low-level discovery is used.

Features (purely subjective, in decreasing order of usefulness):

- Low-level discovery (hereafter simply LLD) for automatic detection of block devices on the monitored host;

- block device utilization in %: a convenient metric for tracking the overall load on the device;

- latency, or responsiveness: both overall latency and separate read and write latency are available;

- queue size (in requests) and average request size (in sectors): these show the nature of the load and how congested the device is;

- current read and write speed on the device, in easy-to-read kilobytes;

- the number of read and write requests (per second) merged while queued for execution;

- IOPS: the number of read and write operations per second;

- average request service time (svctm). It is deprecated; the developers have long promised to remove it but have not got around to it yet.

As you can see, every metric that iostat reports is available here (if you are unfamiliar with the utility, I strongly recommend reading man iostat).
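To make the metrics above more concrete, here is a minimal sketch of how one value could be pulled out of iostat's extended output by column name. It is not the repository's iostat-parse.sh; the function name `iostat_metric` and its argument order are assumptions made for illustration.

```shell
#!/bin/sh
# Sketch only, not the repository script: prints the value of one column
# for one device from `iostat -dx` output supplied on stdin.
# Usage: iostat_metric DEVICE COLUMN
iostat_metric() {
    awk -v dev="$1" -v col="$2" '
        # Header line: remember which field holds the requested column.
        $1 ~ /^Device/ { idx = 0; for (i = 2; i <= NF; i++) if ($i == col) idx = i; next }
        # Data line for the requested device: keep the most recent value.
        $1 == dev && idx { value = $idx }
        END { if (value == "") exit 1; print value }
    '
}
```

Run as `iostat -dx 1 2 | iostat_metric sda r_await`, it would print the read latency from the last report; the first report iostat prints covers the time since boot, which is why the sketch keeps the most recent value. Looking columns up by header name rather than by position keeps the sketch independent of the exact column order.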

Available graphs:

Graphs are drawn per device. LLD detects devices that match the regular expression "(xvd|sd|hd|vd)[a-z]", so if your disks are named differently, you can easily change it. The expression is written this way to detect only whole devices, the parents of partitions, LVM volumes, MD RAID arrays, and so on; in short, so as not to collect too much. But I digress. Here is the list of graphs:

- Disk await: device responsiveness (r_await, w_await);

- Disk merges: merge operations in the queue (rrqm/s, wrqm/s);

- Disk queue: the state of the queue (avgrq-sz, avgqu-sz);

- Disk read and write: current read and write rates on the device (rkB/s, wkB/s);

- Disk utilization: disk utilization and IOPS (%util, r/s, w/s), which lets you track spikes in utilization and see whether they were caused by reads or writes.

Alternatives and differences:

Zabbix has built-in options for similar monitoring: the keys vfs.dev.read and vfs.dev.write. They work well but are less informative than iostat, which also provides metrics such as latency and utilization.

There is also a similar template by Michael Noman. In my opinion, the only real difference is that it is tailored to older versions of iostat, plus some minor syntactic changes.

Where to get it:

The monitoring consists of a configuration file for the agent, two scripts for collecting and retrieving data, and a template for the web interface. All of this is available in the repository on GitHub, so download it by any convenient means (git clone, wget, curl, etc.) to the machines you want to monitor and proceed to the next step.

How to set up:

- iostat.conf: the contents of this file should either be added to the Zabbix agent configuration file, or the file should be placed in the configuration directory specified by the Include option of the main agent configuration. Which one to choose depends on your local policy. I use the second option and keep a separate directory for custom configs.

- scripts/iostat-collect.sh and scripts/iostat-parse.sh: these two working scripts should be copied to /usr/libexec/zabbix-extensions/scripts/. You can use any other location that suits you, but in that case remember to correct the paths in the parameters defined in iostat.conf. Also check that the scripts are executable (mode 755).
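The copy step for the two scripts can be sketched as a small shell function. This is only an illustration: `install_iostat_scripts` is a hypothetical name, and it assumes the repository has already been downloaded into a directory that contains the scripts/ subdirectory.

```shell
#!/bin/sh
# Sketch only: copies the two scripts into a target directory and makes
# them executable. Both directories are supplied by the caller.
# Usage: install_iostat_scripts REPO_DIR TARGET_DIR
install_iostat_scripts() {
    repo_dir=$1
    target_dir=$2
    mkdir -p "$target_dir" || return 1
    for script in iostat-collect.sh iostat-parse.sh; do
        cp "$repo_dir/scripts/$script" "$target_dir/" || return 1
        chmod 755 "$target_dir/$script" || return 1
    done
}

# Example (as root); /path/to/clone is a placeholder for the download location:
#   install_iostat_scripts /path/to/clone /usr/libexec/zabbix-extensions/scripts
```

If a different target directory is used, the paths in iostat.conf have to be adjusted to match, as noted above.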

Now everything is ready. Start the agent, then go to the monitoring server and run the following command (remember to replace agent_ip):

    # zabbix_get -s agent_ip -k iostat.discovery

This checks from the monitoring server that iostat.conf has been loaded and returns data, and at the same time that LLD works. The response is JSON with the names of the detected devices. If no response comes back, something went wrong.
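For orientation, a discovery response of this kind could be produced by a sketch like the one below. It is not the repository's implementation: the function name `discover_disks` and the macro name {#HARDDISK} are assumptions, and the `^` and `$` anchors around the device pattern are my addition so that partitions such as sda1 are skipped.

```shell
#!/bin/sh
# Sketch only: turns device names read from stdin (one per line) into
# Zabbix LLD JSON. The macro name {#HARDDISK} is an assumption and may
# differ from the one used in the repository's template.
discover_disks() {
    grep -E '^(xvd|sd|hd|vd)[a-z]$' | awk '
        BEGIN { printf "{\"data\":[" }
        { printf "%s{\"{#HARDDISK}\":\"%s\"}", (NR > 1 ? "," : ""), $1 }
        END { print "]}" }
    '
}

# Possible use on a monitored host (field 3 of /proc/diskstats is the name):
#   awk '{ print $3 }' /proc/diskstats | discover_disks
```

Comparing the real output of zabbix_get with this shape is a quick way to see whether only whole disks were detected.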

Note also that the Zabbix server may not wait long enough for the agent to finish executing some items (iostat.collect). To fix this, increase the timeout values.
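Both the agent and the server configuration files have a Timeout parameter. Before changing it, it can help to see what is currently in effect. The helper below is a hypothetical sketch for that; the file paths in the usage comment are common package defaults and may differ on your system.

```shell
#!/bin/sh
# Sketch only: prints the last uncommented Timeout value found in a
# Zabbix configuration file, or "not set" if there is none.
# Usage: show_timeout CONFIG_FILE
show_timeout() {
    awk -F= '
        /^[ \t]*Timeout[ \t]*=/ { gsub(/[ \t]/, "", $2); value = $2 }
        END { print (value == "" ? "not set" : value) }
    ' "$1"
}

# Possible use (paths are assumptions):
#   show_timeout /etc/zabbix/zabbix_agentd.conf
#   show_timeout /etc/zabbix/zabbix_server.conf
```

After raising the value in either file, the corresponding daemon has to be restarted for the change to take effect.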

How to configure the web interface:

That leaves the iostat-disk-utilization-template.xml template. Import it through the web interface into the Templates section and assign it to the host. It's simple. Then wait about an hour; this interval is set in the LLD rule (and is configurable). In the meantime you can check Latest Data for the monitored host, in the Iostat section. As soon as values appear there, go to the Graphs section and look at the first data.

And finally, three screenshots of graphs from localhost:

Data in Latest Data:

Latency graphs:

Utilization and IOPS graph:

That's all, thank you for your attention.

And, as tradition dictates, I take this opportunity to say hello to Fedorov Sergey (Alekseevich) :)

Source: https://habr.com/ru/post/220073/
