Tod Bot telepresence: go for coffee without leaving your desk

After an unsuccessful previous post and a forced break, we are back on Habr and continue to cover the Tod Bot robot project. This post is about the feature that completes the robot's functionality: telepresence. The robot can now be controlled from anywhere in the world. Read on to find out how it works and what, in our opinion, a good telepresence interface should look like. And, of course, here is everyone's favorite picture on this topic.

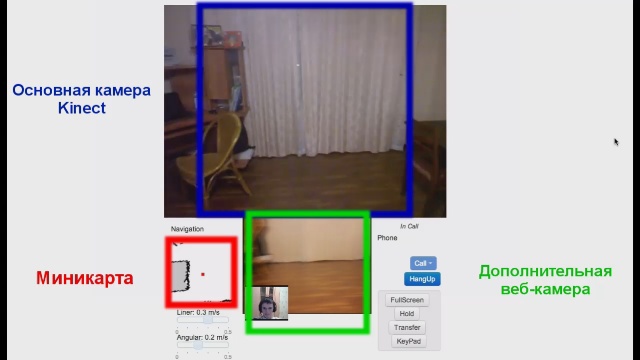

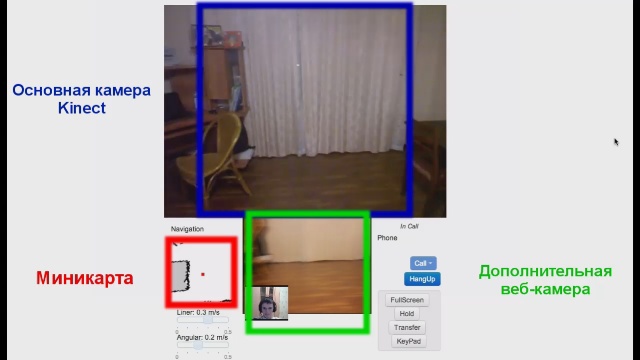

Go

As the wiki tells us, telepresence is a set of technologies that gives you the impression of being in a place other than your physical location. In my opinion, the ideal implementation of telepresence would convey touch, smell, sight, hearing and taste along with the ability to move in space. The point of the technology is thus the ability to act on the remote environment and receive feedback in the form of the sensations the user would experience if actually present there.

To give the user a convincing sense of presence, at a minimum we need technologies that make it possible to:

- Receive and transmit a video stream

- Receive and transmit sound

- Move in space

- Manipulate objects in a remote environment

Our robot implements telepresence through a web interface. This approach has an undeniable advantage: it does not depend on the platform used on the client side.

Video

To capture video on the robot side, we use the Kinect RGB camera and an additional webcam. In our case it is the webcam built into the laptop, but a separate one would work as well. One camera faces forward and shows everything that is happening in front of the robot; the other looks down at our feet, where we can see any objects that could get in the robot's way. The camera images are arranged in the web interface in a way that feels natural to a person. If we want to see what is under our feet, we lower our head; the web interface follows the same principle, and we look at the lower screen.

The return video comes from a webcam on the client side. The picture is shown on the robot's display or on the laptop mounted on the mobile platform. Video streaming is organized over SIP, an open protocol comparable to the one Skype uses, which is widely used in office mini-PBXs and on service websites.

Sound

To capture sound, we use the microphone array built into the Kinect. These microphones are of good quality, which keeps noise to a minimum. The audio stream is transmitted over the same SIP connection.

In the future, we want to make full use of the Kinect microphones and implement stereo sound transmission. This should give the user an even stronger sense of presence.

Moving in space

As for movement, there are two ways to control the robot remotely: manual and autonomous. Since our robot can already navigate on its own, it would be foolish not to use that ability here. Like a human, the robot needs to orient itself in space, and for this it uses a map of the room built in advance from sensor data.

To help the user get oriented in the room, the web interface shows a mini-map in the lower left corner, which is useful in either control mode. The robot is always at the center of the mini-map, and the map itself rotates and shifts as the robot moves. This way we always know exactly where the robot is, and double-clicking the mini-map expands it into the global map.
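A robot-centered mini-map of this kind comes down to a single coordinate transform. The sketch below is only an illustration, not the Tod Bot code: it assumes the robot pose is given as `(x, y, theta)` in map meters and radians, that the robot's heading always points up on the mini-map, and that the mini-map is a square of `size_px` pixels. All names and default values are hypothetical.

```python
import math

def world_to_minimap(px, py, robot_x, robot_y, robot_theta,
                     size_px=200, px_per_m=20.0):
    """Map a world point (meters) to mini-map pixel coordinates.

    Assumption: the robot sits at the center of the mini-map and its
    heading always points up, so the map shifts and rotates around it.
    """
    # Translate so that the robot is at the origin.
    dx = px - robot_x
    dy = py - robot_y
    # Express the point in the robot frame: forward and left components.
    forward = dx * math.cos(robot_theta) + dy * math.sin(robot_theta)
    left = -dx * math.sin(robot_theta) + dy * math.cos(robot_theta)
    # Screen x grows to the right and screen y grows downwards,
    # so "forward" maps to up and "left" maps to the left.
    center = size_px / 2.0
    u = center - left * px_per_m
    v = center - forward * px_per_m
    return u, v
```

With these assumptions, a point one meter straight ahead of the robot lands 20 pixels above the center of the mini-map, whatever the robot's heading on the global map.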

Manual control

This is the real hardcore mode. Here we drive the robot from the keyboard with the arrow keys and are free to go anywhere, obstacles notwithstanding. The result is a kind of first-person racing simulator, except that at the other end of the wire there is a real robot in a real environment. Speed control is also available in the form of two sliders: the first sets the linear speed, the second the robot's rotation speed. Despite its apparent simplicity, manual control is rather difficult, mainly because the operator has no feel for the robot's dimensions.
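One possible way to combine the arrow keys with the two sliders is shown below. This is a minimal sketch under our own assumptions, not the actual Tod Bot code: the key names, the units (m/s and rad/s) and the sign convention (positive angular speed turns the robot left) are all hypothetical.

```python
# Hypothetical key names. Each key contributes a direction for the
# linear speed and a direction for the angular speed.
KEY_DIRECTIONS = {
    "up": (1.0, 0.0),
    "down": (-1.0, 0.0),
    "left": (0.0, 1.0),    # assumed: positive angular speed turns left
    "right": (0.0, -1.0),
}

def velocity_command(pressed_keys, linear_slider, angular_slider):
    """Combine the pressed arrow keys with the two slider values.

    linear_slider is assumed to be in m/s, angular_slider in rad/s.
    Returns (linear, angular); opposite keys cancel each other out
    and unknown keys are ignored.
    """
    linear = 0.0
    angular = 0.0
    for key in pressed_keys:
        lin_dir, ang_dir = KEY_DIRECTIONS.get(key, (0.0, 0.0))
        linear += lin_dir * linear_slider
        angular += ang_dir * angular_slider
    return linear, angular
```

Holding "up" and "left" with the sliders at 0.3 and 1.0 would produce a forward speed of 0.3 combined with a left turn at 1.0, while releasing all keys yields a full stop.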

Autonomous navigation

Autonomous navigation keeps the user's role to a minimum. The user only needs to specify one or more destination points, and the robot will plan a route, avoid obstacles and travel through the points on its own. Visual and audio contact is maintained throughout. If necessary, the user can stop the autonomous movement and switch to manual control. A follow-the-interlocutor function, which we plan to implement, would also fit well into autonomous mode.
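The bookkeeping behind "several destination points plus a stop button" can be sketched as a small queue. This is an illustration only: the class and method names are hypothetical, and in a real system each goal would be handed to whatever navigation component the robot uses rather than simply returned.

```python
from collections import deque

class WaypointMission:
    """Holds the destination points chosen by the operator, in visiting order."""

    def __init__(self, waypoints):
        self._pending = deque(waypoints)
        # With no waypoints there is nothing to do autonomously.
        self.mode = "autonomous" if self._pending else "manual"

    def next_goal(self):
        """Return the next destination, or None when nothing is left to visit."""
        if self.mode != "autonomous" or not self._pending:
            self.mode = "manual"
            return None
        return self._pending.popleft()

    def cancel(self):
        """Operator takes over: drop the remaining goals and go manual."""
        self._pending.clear()
        self.mode = "manual"
```

Calling `cancel()` at any time models the operator stopping the autonomous run: the remaining points are discarded and control falls back to manual mode.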

Although the functionality may seem complete, there is still work to do. We want to turn the web interface into a kind of “control panel” with indicators for the battery level and other sensors vital to the robot, through which the robot can also be set up and calibrated.

Manipulating objects in a remote environment

Not every telepresence robot can boast the ability to manipulate objects. There are several reasons for this, among them the high cost of manipulators, which is sometimes comparable to the cost of the robot itself, and the complexity of the software implementation.

Tod Bot is an exception: it does have an arm. So it is up to the software. The simplest way to control a manipulator is to place sliders on the web interface panel, one for each degree of freedom of the manipulator. There is just one catch: grasping anything with this kind of control is incredibly difficult. I know firsthand, having tried to pick up a box of matches from a table. It took about 5 minutes, and the probability of brushing against or knocking over adjacent objects approaches 100%. You could, of course, practice for a week and improve your results, but the simplest way is clearly not the most convenient one here.

Here is how we see manipulator control through the web interface. The process should be as automated as possible and should not cost the user nerves or effort. The user should only have to select an object in the video stream, and the system will do the rest. We therefore entrusted arm control to the MoveIt software module, which we wrote about earlier. At the moment, the arm can already avoid colliding with surrounding objects as it moves. We will integrate MoveIt with the web interface to achieve satisfactory results in grasping objects, and we already have some success in this direction.

How do we make a web interface with ROS?

As for the software implementation of telepresence, it is worth recalling first that Tod Bot runs on the ROS framework. In ROS, all functionality is distributed among software nodes that communicate with each other through messages published on topics. Accordingly, any new software integrated into the system must take the form of a ROS node.

To implement telepresence we need, on the one hand, a web server that can generate HTML pages and process POST/GET requests and, on the other hand, a way to receive odometry and navigation map data from ROS and to send room patrol and motion control commands.

Based on these requirements, we decided to package all telepresence functionality as a ROS node, to use CherryPy, a minimalist Python web framework, as the web server, and to store the data in Redis, a NoSQL store, in a simple key-value format. As the SIP client we used sipML5, an HTML5 client that makes audio/video calls directly in the browser.

How does it all work together? The operator's web browser sends data to the robot's web server through AJAX requests. The telepresence node's Python script processes the data from the web server and passes it to other ROS nodes, which execute the commands on the robot. Map data, odometry and the Kinect video stream travel the same way in the opposite direction, from the telepresence node to the operator, where they are rendered in an HTML5 Canvas. In parallel, the audio/video stream is transmitted between the sipML5 clients of the operator and the robot. Incidentally, we have no complaints about the quality of the free SIP services that connect the SIP clients. The only thing you need is a reasonably fast Internet connection.
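The command path from browser to robot can be illustrated with a deliberately simplified sketch. It uses only the Python standard library: a plain dict stands in for the Redis key-value store, and ordinary functions stand in for the CherryPy request handler and the ROS node. The key name `cmd_vel`, the payload fields and the function names are all assumptions made for the example, not details of the Tod Bot code.

```python
import json

# Assumption: a plain dict stands in for the Redis key-value store.
store = {}

def handle_drive_request(body):
    """Web-server side: parse an AJAX payload and store the latest command."""
    data = json.loads(body)
    command = {
        "linear": float(data.get("linear", 0.0)),
        "angular": float(data.get("angular", 0.0)),
    }
    # Values are stored as JSON strings, as they would be in a key-value store.
    store["cmd_vel"] = json.dumps(command)
    return {"status": "ok"}

def read_latest_command():
    """ROS-node side: fetch the latest command, defaulting to a full stop."""
    raw = store.get("cmd_vel")
    if raw is None:
        return {"linear": 0.0, "angular": 0.0}
    return json.loads(raw)
```

Decoupling the two sides through a shared store means the web handler never has to call into ROS directly; the node side simply reads the most recent command whenever it is ready to act on it.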

We would also like to hear your opinion. What should a reference telepresence system look like?

Source: https://habr.com/ru/post/219991/