Backend without the hassle: a miracle or the future?

Hello!

Friends, I hardly need to tell you how a backend gets built for server and client-server applications; you know it yourselves. In an ideal world, everything starts with designing the architecture. Then we choose a hosting platform and estimate how many machines we need, virtual and physical. Next comes standing up the environment for development and testing. Everything ready? Then we write code, make the first commit, and pull the code onto the server from the repository. We open the console or browser, check that it works, and off we go. So far it is all simple, but what happens next?

Over time the architecture inevitably grows: new services and new servers appear, and it is time to think about scalability. Once there is more than one server, it would be nice to collect all the logs in one place, and thoughts of a log aggregator come to mind.

And when something, God forbid, goes down, the thought of monitoring follows immediately. Sounds familiar, doesn't it? My friends and I have had our fill of this. And when you work in a team, other related problems appear.

You might think we have never heard of aws, jelastic, heroku, digitalocean, puppet / chef, travis, git-hooks, zabbix, datadog, loggly ... I assure you that is not the case. We tried to make friends with each of these systems. More precisely, we set each of them up for our own needs, but never got the effect we wanted. Some pitfall always turned up, and there was always some part of the work we wished were automated.

After living in this world for quite a while, we thought: "Well, we are developers, let's do something about it." We assessed the problems that accompanied us at each stage of creating and developing a project, wrote them down on a separate page, and turned them into features of a future service.

Two months later, the service was born: lastbackend.com

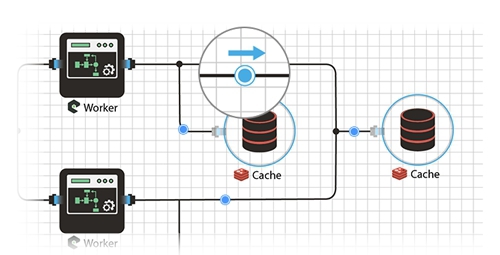

Design

We started with the very first problem in building a server system: drawing a visual scheme of the project. I don't know about you, but I find it far more engaging to see which element is connected to which and how data flows than to read a list of servers and configured environments in a wiki or a Google Doc. But judge for yourself.

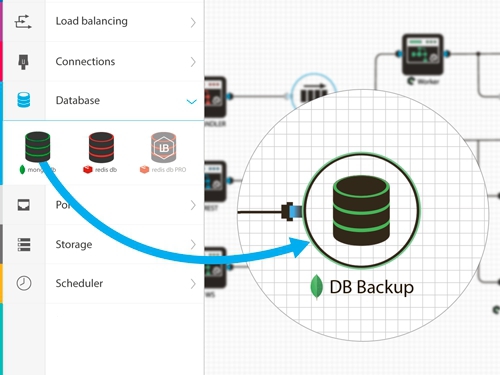

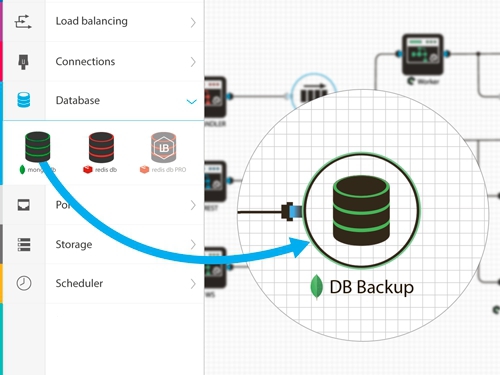

Designing a scheme is simple and intuitive: the list of backend elements is on the left, the workspace is on the right. You just drag and drop. Need to let a node.js element connect to mongodb? Just draw a connection between them.

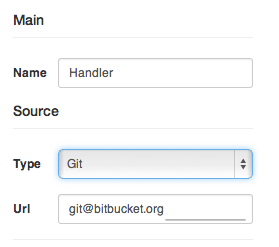

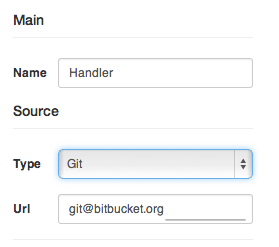
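To make the idea a bit more concrete, a scheme like this can be pictured as a small directed graph: elements are nodes, and every connection drawn on the workspace is an edge. The sketch below is only an illustration of that idea in Python. The `Scheme` class and its methods are hypothetical names made up for this example, not the service's actual data model.

```python
from dataclasses import dataclass, field


@dataclass
class Scheme:
    """A project scheme: named elements plus directed connections between them."""
    elements: dict[str, str] = field(default_factory=dict)  # name -> element type
    connections: set[tuple[str, str]] = field(default_factory=set)

    def add_element(self, name: str, kind: str) -> None:
        self.elements[name] = kind

    def connect(self, source: str, target: str) -> None:
        # Both ends must already be on the workspace.
        for name in (source, target):
            if name not in self.elements:
                raise KeyError(f"unknown element: {name}")
        self.connections.add((source, target))

    def targets_of(self, source: str) -> list[str]:
        """Elements that `source` is allowed to connect to, sorted by name."""
        return sorted(t for s, t in self.connections if s == source)


scheme = Scheme()
scheme.add_element("api", "node.js")
scheme.add_element("db", "mongodb")
scheme.connect("api", "db")  # the node.js element may now talk to mongodb
```

Keeping connections as directed pairs means "api may reach db" does not automatically imply the reverse, which matches how a connection is drawn from one element to another.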

Configuration and scaling

Each element in the system is unique and has to be configured, and auto-scaling can be enabled for it if needed. If the element is a load balancer, there is a place to specify the rules for choosing an upstream. If the element has source code, we specify its repository, environment variables, and dependencies. The system then downloads, installs, and launches it on its own.
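As a rough illustration of what such settings might look like, here is a minimal Python sketch. Everything in it is an assumption made for the example: the field names, the placeholder repository URL, and the "longest path prefix wins" rule for picking an upstream are hypothetical, not the service's real configuration format.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ElementConfig:
    """Settings for an element built from source code (hypothetical fields)."""
    repository: str
    branch: str = "master"
    env: dict[str, str] = field(default_factory=dict)
    dependencies: list[str] = field(default_factory=list)
    autoscale: bool = False


@dataclass
class UpstreamRule:
    """Send requests whose path starts with `path_prefix` to `upstream`."""
    path_prefix: str
    upstream: str


def choose_upstream(rules: list[UpstreamRule], path: str) -> Optional[str]:
    # The longest matching prefix wins, so "/api" beats "/" for "/api/users".
    matches = [r for r in rules if path.startswith(r.path_prefix)]
    if not matches:
        return None
    return max(matches, key=lambda r: len(r.path_prefix)).upstream


api = ElementConfig(
    repository="https://example.com/team/api.git",  # placeholder URL
    env={"NODE_ENV": "production"},
    dependencies=["db"],
    autoscale=True,
)
rules = [UpstreamRule("/", "web"), UpstreamRule("/api", "api")]
```

With these rules, a request for `/api/users` would go to the `api` element and everything else to `web`.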

And of course we thought about auto-deployment too: when the source code in a given branch changes, the element is updated quietly and quickly. We tried to make everything convenient, because we use the service ourselves and are the first to see all its flaws.

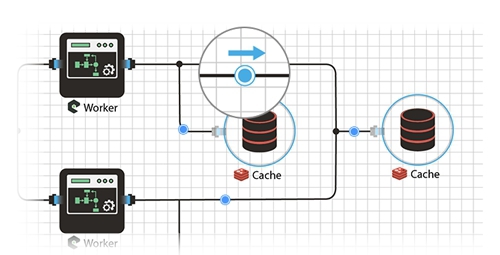
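The auto-deployment decision described above boils down to a simple check: did the push touch the branch that an element is tracking? A minimal Python sketch of that check follows. The function names and the shape of the `tracked` mapping are hypothetical; the only real-world detail assumed is that git names branch refs as `refs/heads/<branch>`.

```python
from typing import Optional


def branch_from_ref(ref: str) -> Optional[str]:
    """Return the branch name for a git ref like 'refs/heads/master', else None."""
    prefix = "refs/heads/"
    return ref[len(prefix):] if ref.startswith(prefix) else None


def elements_to_redeploy(
    tracked: dict[str, tuple[str, str]], repository: str, ref: str
) -> list[str]:
    """Names of elements that track `repository` on the branch `ref` points to.

    `tracked` maps element name -> (repository, branch).
    """
    branch = branch_from_ref(ref)
    if branch is None:  # tag pushes and other refs never trigger a deploy
        return []
    return sorted(
        name
        for name, (repo, tracked_branch) in tracked.items()
        if repo == repository and tracked_branch == branch
    )


tracked = {
    "api": ("team/api", "master"),
    "api-staging": ("team/api", "develop"),
    "web": ("team/web", "master"),
}
to_update = elements_to_redeploy(tracked, "team/api", "refs/heads/master")
```

Here only `api` would be updated; a push to `develop` would touch `api-staging` instead, and a tag push would touch nothing.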

Deployment

And here comes the most interesting part. When the scheme is ready and configured, deploying it takes a few seconds. Sometimes it takes longer, and sometimes it is practically instant. It all depends on the types of elements and their source code. We mostly program in node.js, and our schemes deploy in a couple of seconds. The most exciting moment is watching all the configured elements on the scheme "come to life" as the indicators light up to show each element's current state.

I forgot to mention that each element can run on a different host or in a different data center. For example, to balance traffic between countries, one element can be launched in one country, another in a second, and the database can sit somewhere in between. The example is admittedly not the best, but I think the capability is quite useful, especially if you know how and where to apply it.

That is basically the main part, which lets you stand up the server side of a project quickly, neatly, and without problems. But we immediately remembered the problems that come next, namely:

Log aggregation

Each element sends its logs to a single log store, where you can view, analyze, search, and filter them. There is no longer any need to connect over ssh and grep for some buried piece of information, or just to analyze the data.
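To show what "one store instead of ssh plus grep" means in practice, here is a small Python sketch that merges per-element logs into a single timeline and searches it. It is an illustration only: `LogRecord`, `aggregate`, and `search` are hypothetical names, and the sample records are made up.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple


class LogRecord(NamedTuple):
    timestamp: float  # seconds since the epoch
    element: str
    message: str


def aggregate(*streams: Iterable[LogRecord]) -> Iterator[LogRecord]:
    """Merge per-element streams (each already sorted by time) into one timeline."""
    return heapq.merge(*streams, key=lambda record: record.timestamp)


def search(records: Iterable[LogRecord], text: str) -> list[LogRecord]:
    """Case-insensitive substring search, the rough equivalent of `grep -i`."""
    needle = text.lower()
    return [r for r in records if needle in r.message.lower()]


api_logs = [
    LogRecord(1.0, "api", "server started"),
    LogRecord(3.0, "api", "ERROR: db timeout"),
]
db_logs = [
    LogRecord(2.0, "db", "accepting connections"),
    LogRecord(4.0, "db", "error: disk full"),
]

timeline = list(aggregate(api_logs, db_logs))
errors = search(timeline, "error")
```

Because every record carries the name of its element, one search over the merged timeline finds the `api` timeout and the `db` disk error together, in the order they happened.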

Monitoring and alert system

Naturally, we too want to go fishing or simply relax, knowing that if something goes wrong we will be told about it. So we are building instant notifications for all failures. Now there is no need to worry about missing something important.
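The core of such an alert can be sketched as comparing two snapshots of element states and reporting only the elements that have just failed. The Python below is a simplified illustration. The state names (`"running"`, `"stopped"`) and the `detect_failures` function are assumptions for the example, not the service's real monitoring API.

```python
def detect_failures(previous: dict[str, str], current: dict[str, str]) -> list[str]:
    """Return alert messages for elements that just left the 'running' state."""
    alerts = []
    for name in sorted(current):
        # Elements seen for the first time are treated as previously running.
        was_running = previous.get(name, "running") == "running"
        if was_running and current[name] != "running":
            alerts.append(f"{name}: {current[name]}")
    return alerts


before = {"api": "running", "db": "running"}
after = {"api": "running", "db": "stopped"}
alerts = detect_failures(before, after)
```

Alerting only on the transition, rather than on every check while an element stays down, keeps one failure from turning into a flood of identical notifications.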

Summary

Those are basically the main problems that we have tried, and are still trying, to solve with our service, to make life at least a little easier for fellow developers.

Naturally, I could not list every feature; I tried to pick only the main ones. In the comments I can answer questions and respond to suggestions in more detail.

Thank you for your attention. First of all, I would very much like to read your opinions, answer your questions, and note down your advice and wishes, as well as give everyone access to the closed beta of the service, which according to preliminary plans will begin during the May holidays.

You can get an invitation to the beta right at this link. On the day testing opens, you will receive an email with your access details.

PS

Also, friends, if there is interest, we can start a series of technical articles on the technology stack we use, namely node.js, mongodb, redis, sockets, angular, svg, etc.

Source: https://habr.com/ru/post/219579/
