Write Cache and DRBD: Why It Pays to Know What Happens Under the Hood

Background

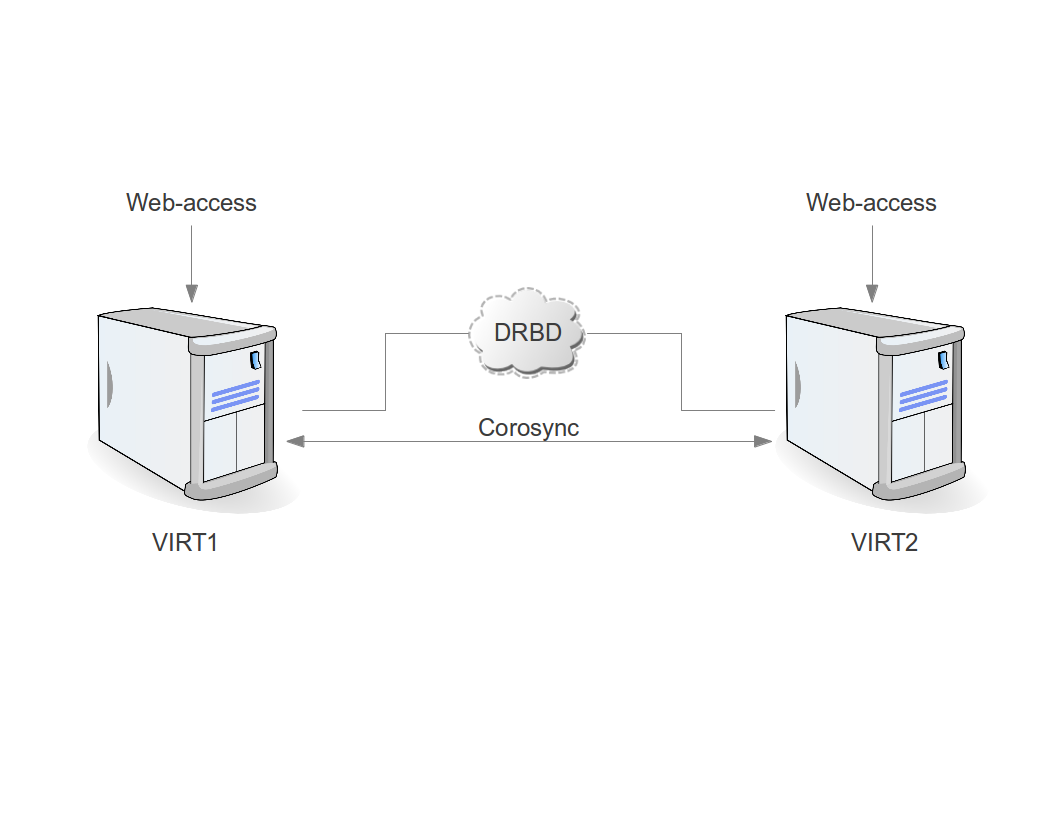

There is an elegant way to build a reliable low-cost cluster: DRBD + Proxmox VE. The page describing it appeared on the Proxmox project's wiki on September 11, 2009, and it was created by the company's CEO, Martin Maurer.

Since then the solution has become very popular, and nobody suspected that it hides a pitfall. The documentation does not mention it, and those who ran into the consequences (for example, a virtual machine crashing during online migration from one host to another) put it down to chance. Some blamed the hardware, some Proxmox, and some the drivers inside the virtual machine. Naturally, you expect DRBD to report its own problems, and you subconsciously trust what it does. You check /proc/drbd, see the line “cs:Connected ro:Primary/Primary ds:UpToDate/UpToDate”, and go on believing that DRBD has nothing to do with it.

Once, while carefully reading the DRBD documentation, I found a recommendation to verify DRBD regularly for integrity, that is, to check that the two nodes hold identical data. Well, why not, I thought; an extra check won't hurt. And so began a story that lasted a whole year.


It turned out that new out-of-sync blocks appeared in DRBD every week. That is, the data on the two servers differed. But why?!
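To watch for this without reading /proc/drbd by eye, the status can be parsed. Below is a minimal sketch, assuming the DRBD 8.x /proc/drbd layout, where the second line of each resource carries an `oos` (out-of-sync) counter. The sample text and its numbers are invented for illustration, and `parse_drbd_status` and `healthy_but_diverged` are hypothetical helper names.

```python
import re

# Hypothetical sample in the DRBD 8.x /proc/drbd layout; the numbers are made up.
SAMPLE = """\
version: 8.x (hypothetical banner line)
 0: cs:Connected ro:Primary/Primary ds:UpToDate/UpToDate C r-----
    ns:1024 nr:2048 dw:512 dr:256 al:3 bm:1 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:128
"""

def parse_drbd_status(text):
    """Return {minor: {field: value}} for every resource found in the text."""
    resources = {}
    current = None
    for line in text.splitlines():
        head = re.match(r"\s*(\d+):\s+(.*)", line)
        if head:
            # A line such as " 0: cs:Connected ..." starts a new resource.
            current = resources.setdefault(int(head.group(1)), {})
            line = head.group(2)
        if current is None:
            continue  # banner lines before the first resource
        for key, value in re.findall(r"(\w+):(\S+)", line):
            current[key] = value
    return resources

def healthy_but_diverged(fields):
    """True when the status line looks fine but the oos counter is non-zero."""
    looks_fine = (fields.get("cs") == "Connected"
                  and fields.get("ds") == "UpToDate/UpToDate")
    return looks_fine and int(fields.get("oos", "0")) > 0

status = parse_drbd_status(SAMPLE)
for minor, fields in status.items():
    if healthy_but_diverged(fields):
        print(f"drbd{minor}: status looks fine, but oos={fields['oos']}")
```

On a real node the text would come from reading /proc/drbd instead of `SAMPLE`. Note that the counter only reflects what DRBD already knows about, so it becomes useful after a verification run.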

Replacing the hardware, updating DRBD, and disabling offload and bonding did not help; new blocks kept appearing. Analyzing exactly which blocks were out of sync gave the following picture:

- often - the swap partition of a Linux virtual machine

- rarely - an NTFS partition of a Windows virtual machine

- never - ext4 in a Linux virtual machine

That was “interesting”, but it did not answer the question of why and how this could happen. I described all my findings on the DRBD users mailing list (http://www.gossamer-threads.com/lists/drbd/users/25227), and eventually I received the answer I had been waiting for. Lars Ellenberg, one of the main DRBD developers, explained what was happening and how to check it.

How a write to DRBD works

The general case:

1) DRBD receives a request from the OS to write data from a buffer;

2) DRBD writes the data to the local block device;

3) DRBD sends the data to the second node over the network;

4) DRBD receives confirmation from the local block device that the write has completed;

5) DRBD receives confirmation from the second node that the write has completed;

6) DRBD confirms to the OS that the write has completed.

Now imagine that the data in the buffer changes unexpectedly, and we get:

1) DRBD receives a request from the OS to write data from a buffer;

2) DRBD writes the data to the local block device;

2.5) the data in the buffer changes;

3) DRBD sends the data to the second node over the network;

4) DRBD receives confirmation from the local block device that the write has completed;

5) DRBD receives confirmation from the second node that the write has completed;

6) DRBD confirms to the OS that the write has completed.

And we immediately get out-of-sync blocks.
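The two sequences above can be replayed in a toy model. This is only a sketch of the ordering problem, not DRBD code: the "disks" are plain bytearrays, and `replicated_write` and `scribble` are hypothetical names.

```python
def replicated_write(buffer, local_disk, peer_disk, modify_between=None):
    """Model of the steps above: one shared buffer is read twice."""
    local_disk[:] = buffer            # step 2: the local write reads the buffer now
    if modify_between is not None:
        modify_between(buffer)        # step 2.5: the upper layer touches the buffer
    peer_disk[:] = buffer             # step 3: the network send reads it later
    return local_disk == peer_disk    # True means both nodes hold identical data

def scribble(buf):
    """Stand-in for an application that reuses its buffer too early."""
    buf[0:1] = b"B"

local, peer = bytearray(4), bytearray(4)

# General case: nobody touches the buffer, both copies match.
clean = replicated_write(bytearray(b"AAAA"), local, peer)

# Case with step 2.5: the copies diverge, although every write was "confirmed".
dirty = replicated_write(bytearray(b"AAAA"), local, peer, modify_between=scribble)

print(clean, dirty, bytes(local), bytes(peer))
```

Nothing in the model fails or reports an error, which matches the experience described above: both writes complete successfully and the nodes simply end up with different data.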

How can this happen? Very simply. The application may use the buffer for write caching (in our case the application is the virtual machine process). The application can then update the data in the buffer without waiting for confirmation that the data has been written to the physical device or controller, and with DRBD this is unacceptable.

The most annoying part is that you don't even need a power failure to lose data. Enabling the write cache is enough.

How to check? Enable data-integrity-alg in the DRBD settings and wait. If you see something like "buffer modified by upper layers during write", this is it. Be careful: if DRBD runs in dual-primary mode, enabling data-integrity-alg will immediately give you a split brain (the documentation does not mention this).
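The idea behind such a check can be sketched as follows. This is a simplified model, not DRBD's implementation: it assumes the sender computes a digest before sending and again afterwards, while the receiver verifies the payload against the digest it was sent. The function name, the returned keys and the choice of SHA-1 are assumptions made for illustration.

```python
import hashlib

def send_with_digest(buffer, modify_in_flight=None, alg="sha1"):
    """Model of an integrity-checked send from a buffer the caller may still touch."""
    digest_sent = hashlib.new(alg, buffer).digest()   # digest taken before sending
    if modify_in_flight is not None:
        modify_in_flight(buffer)                      # upper layer changes the buffer
    payload = bytes(buffer)                           # what actually goes on the wire
    digest_after = hashlib.new(alg, buffer).digest()  # sender re-checks its buffer
    return {
        # Receiver side: does the payload match the digest that came with it?
        "receiver_ok": hashlib.new(alg, payload).digest() == digest_sent,
        # Sender side: did the buffer change while the write was in flight?
        "buffer_modified": digest_sent != digest_after,
    }

def flip_first_byte(buf):
    buf[0] ^= 0xFF

quiet = send_with_digest(bytearray(b"stable data"))
racy = send_with_digest(bytearray(b"stable data"), modify_in_flight=flip_first_byte)
print(quiet, racy)
```

In the racy case the receiver sees a digest mismatch and the sender can tell that its own buffer changed, which is the situation the log message quoted above points to.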

Write caching in QEMU/KVM

By default, virtual machines in Proxmox VE use cache=none for virtual disks. Although “none” seems to say “we cache nothing”, that is not quite true. Here “none” means that the host cache is not used, but the write cache of the block device still is. This is where the out-of-sync blocks in DRBD come from.

Only two modes can be considered reliable with DRBD: directsync and writethrough. The first does no caching at all: it always reads directly from the block device (which may be a RAID controller) and writes to it, always waiting for the write to be confirmed. The second uses the host cache for reads.
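The cache modes can be summarized as a small table in code. The sketch below breaks each QEMU cache mode into two properties (whether the host page cache is used and whether writes are reported complete before they are flushed) and treats a mode as reliable with DRBD only when the write cache is off, which is this article's criterion. The helper names and the /dev/drbd0 path are hypothetical.

```python
# QEMU cache modes split into the two properties that matter here.
CACHE_MODES = {
    "writeback":    {"host_page_cache": True,  "write_cache": True},
    "none":         {"host_page_cache": False, "write_cache": True},
    "writethrough": {"host_page_cache": True,  "write_cache": False},
    "directsync":   {"host_page_cache": False, "write_cache": False},
    "unsafe":       {"host_page_cache": True,  "write_cache": True},
}

def reliable_with_drbd(mode):
    """The article's criterion: no write cache between the guest and DRBD."""
    return not CACHE_MODES[mode]["write_cache"]

def drive_option(path, mode):
    """Build the value for QEMU's -drive option, refusing write-cached modes."""
    if not reliable_with_drbd(mode):
        raise ValueError(f"cache={mode} keeps a write cache; see the text above")
    return f"file={path},cache={mode}"

reliable = sorted(m for m in CACHE_MODES if reliable_with_drbd(m))
print(reliable)
print(drive_option("/dev/drbd0", "directsync"))  # hypothetical device path
```

In Proxmox VE the mode is normally chosen in the virtual disk settings rather than on a hand-written command line, so `drive_option` only illustrates which values pass the check.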

As a result, the virtualization system can become catastrophically slow unless you use a hardware RAID controller with a write cache and a BBU. With RAID and a BBU, the virtual machine receives the write confirmation as soon as the data lands in the controller cache.

Why there is no out-of-sync on ext4

The cache=directsync and cache=writethrough modes are the most reliable because with them we do not need to care what happens inside the virtual machine. But they are not the only option, which matters when RAID performance is insufficient or there is no RAID at all.

You can ensure that data has actually been written not only at the level of the virtual machine process but also inside the VM. This is where the concept of a barrier comes in: the file system itself knows when it must flush data from the cache to the physical device and wait for the operation to complete. And man mount tells us that "The ext4 filesystem enables write barriers by default". That is why we never see out-of-sync blocks in areas where the file system uses barriers.
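Since everything then depends on barriers staying enabled inside the guest, it may be worth checking that nobody has switched them off. Below is a minimal sketch that inspects ext4 mount options for `nobarrier` or `barrier=0`. The sample lines follow the /proc/mounts layout but are invented, the helper names are hypothetical, and how a particular kernel displays these options is an assumption.

```python
def barriers_enabled(mount_options):
    """ext4 enables barriers by default; a later option overrides an earlier one."""
    enabled = True
    for opt in mount_options.split(","):
        if opt in ("nobarrier", "barrier=0"):
            enabled = False
        elif opt in ("barrier", "barrier=1"):
            enabled = True
    return enabled

def ext4_without_barriers(mounts_text):
    """Return mount points of ext4 file systems that have barriers disabled."""
    result = []
    for line in mounts_text.splitlines():
        fields = line.split()
        # /proc/mounts layout: device, mount point, fs type, options, dump, pass
        if len(fields) >= 4 and fields[2] == "ext4" and not barriers_enabled(fields[3]):
            result.append(fields[1])
    return result

# Hypothetical sample in the /proc/mounts layout.
SAMPLE_MOUNTS = """\
/dev/vda1 / ext4 rw,relatime 0 0
/dev/vdb1 /data ext4 rw,relatime,nobarrier 0 0
/dev/vdc1 /win ntfs rw 0 0
"""

print(ext4_without_barriers(SAMPLE_MOUNTS))
```

Inside a guest the text would come from reading /proc/mounts. Any mount point the function reports is a candidate for the kind of out-of-sync blocks described above.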

Useful links:

- Proxmox and DRBD: pve.proxmox.com/wiki/DRBD

- Out of sync: www.gossamer-threads.com/lists/drbd/users/25227

- More about out of sync: forum.proxmox.com/threads/18259-KVM-on-top-of-DRBD-and-out-of-sync-long-term-investigation-results

- Description of the cache parameter for kvm / qemu: www.suse.com/documentation/sles11/book_kvm/data/sect1_1_chapter_book_kvm.html

- More about the cache: www.ilsistemista.net/index.php/virtualization/23-kvm-storage-performance-and-cache-settings-on-red-hat-enterprise-linux-62.html?start=2

Source: https://habr.com/ru/post/219295/
