PCIe SSDs: varieties and future

The varieties and prospects of conventional SSDs were covered in the previous article, and hard drives in the one before it.

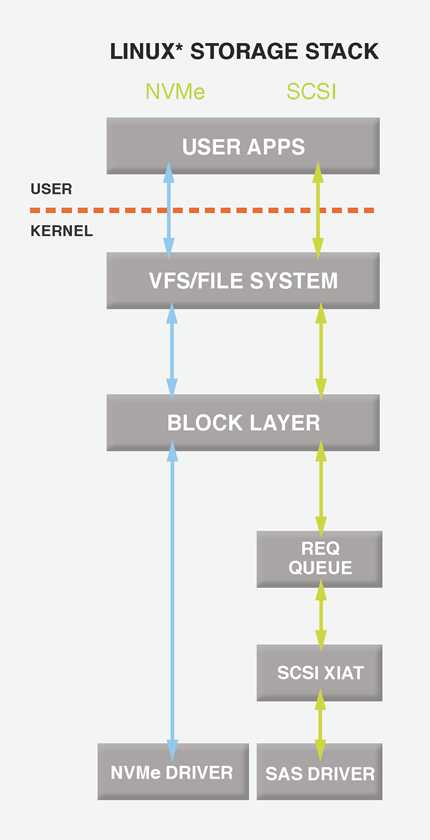

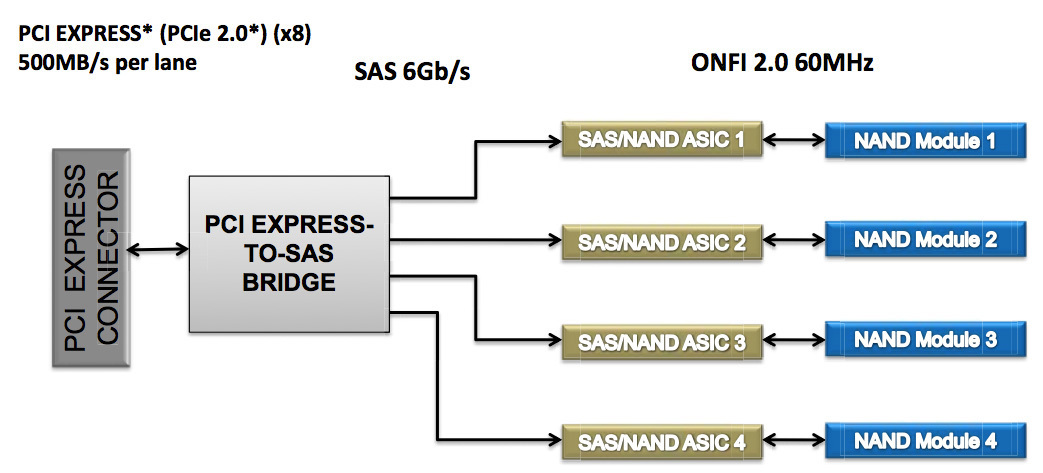

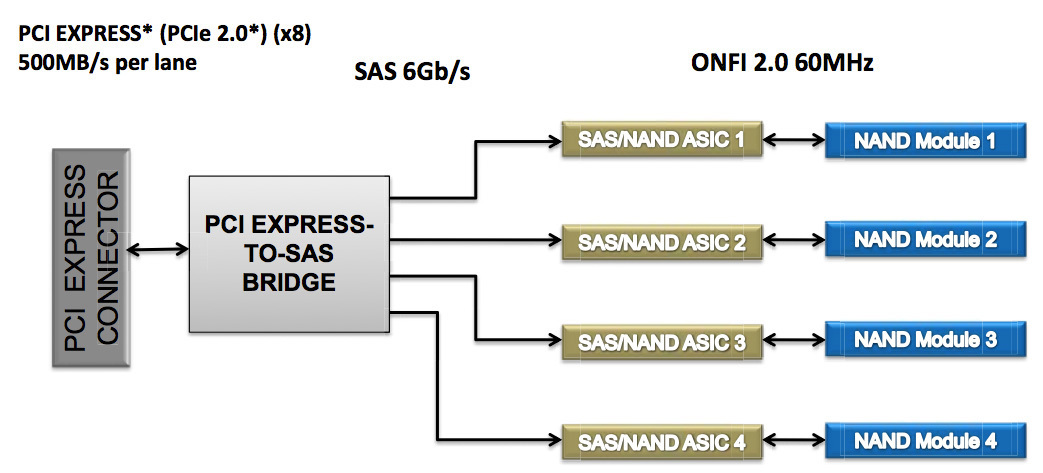

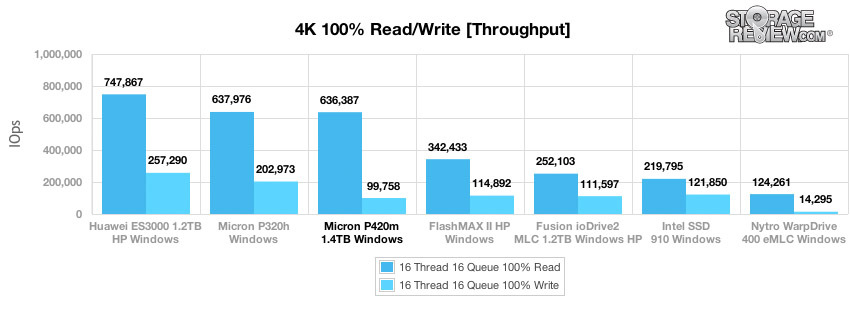

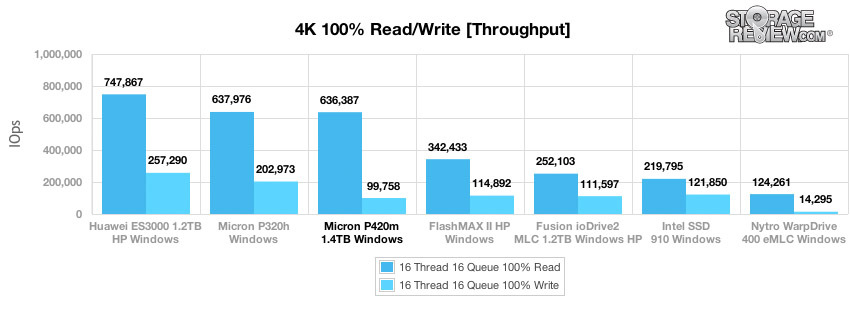

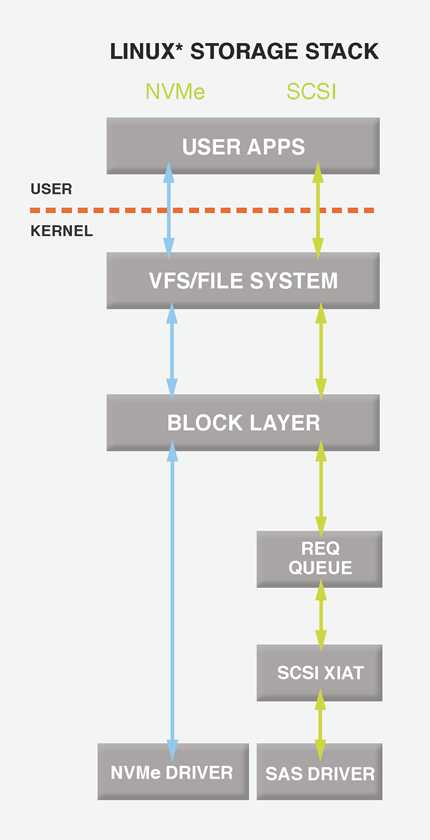

This is how SCSI is eliminated

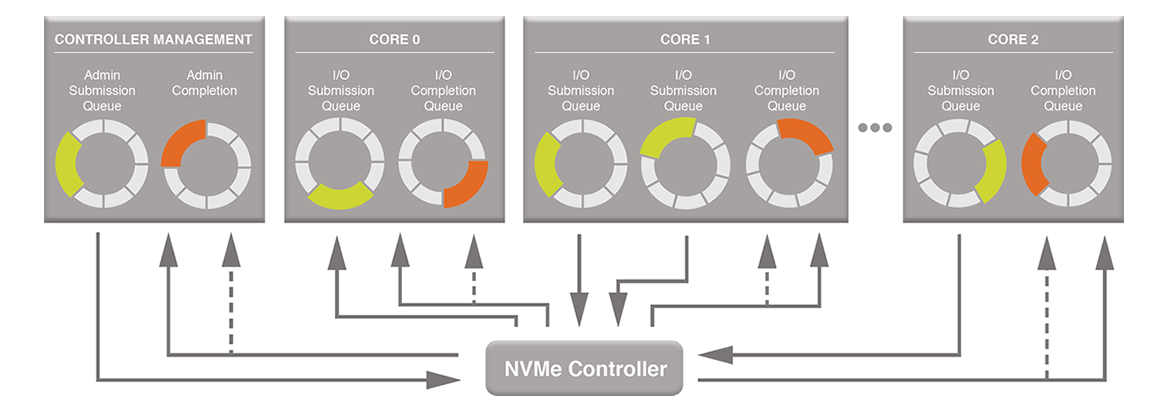

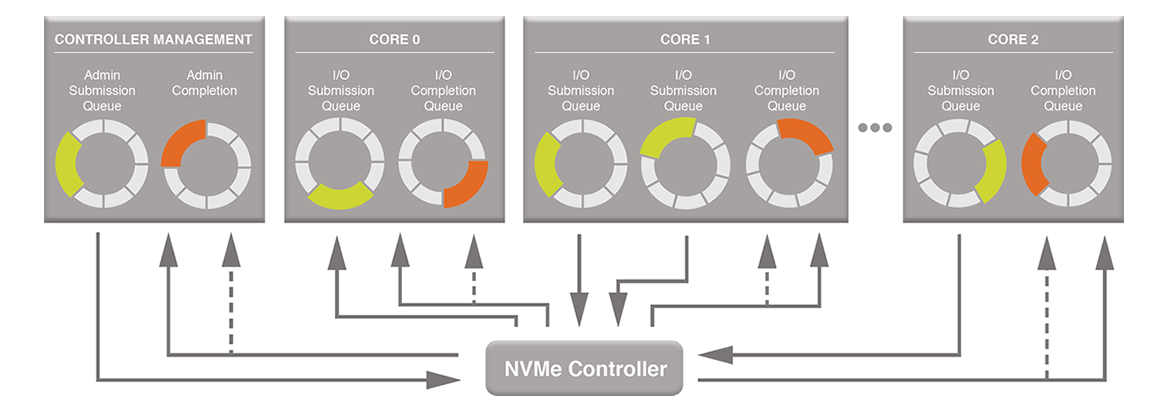

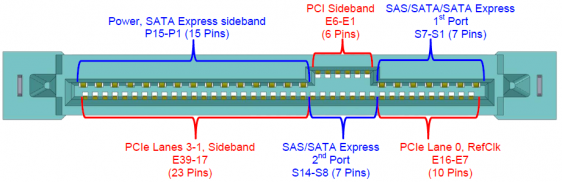

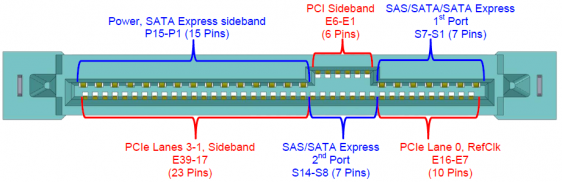

SAS connector with additional contacts

Traditional SAS/SATA interfaces are familiar, mature, and convenient in everyday use. A large infrastructure has grown around them: disks, controllers, expander compatibility, well-established cabling. The price is the overhead of the SCSI and SATA stacks and the latency of the interface controllers. The ongoing push for more IOPS and lower latency means removing unnecessary links and placing drives as close to the processor as possible. So what do you do if the performance of a SAS/SATA SSD is not enough and you want something even faster?

Choose PCIe!

As usual, approaches have diverged.

The simplest cards (as in the photo above) use ordinary SATA drives connected to a PCIe controller. They offer no advantage over plain SATA SSDs, since everything still goes through the same AHCI stack. We will not consider this variety here; it is intended for consumer devices.

More sophisticated options use a SAS bridge with flash memory controllers attached to it.

In effect, the second variety is an array of SAS SSDs that the OS combines into a single volume. The clear advantage of this approach is that it uses the standard SCSI stack: it works everywhere, causes few complaints, and has been refined over decades. Density is also high, because there is no 2.5" form-factor limit and a lot of flash fits on the card; capacity reaches 3.2 TB. Typical representatives are the Intel 910, LSI Nytro WarpDrive, and Nytro XP: very fast cards with low CPU usage. The inherent drawback remains the translation through every layer of the driver and the SCSI stack, which adds latency that one would like to eliminate.

To get rid of the unnecessary SCSI stack, which was designed long before anyone imagined flash in mass-market drives, some developers created drives with specialized controllers that connect PCIe directly to the NAND controller. Such cards are made by Fusion-io, Virident, Huawei, and Micron.

Pros:

- Low latency: the best figure today is around three microseconds.

- High performance: up to 3.2/2.8 GB/s read/write in large blocks and 770K/630K IOPS read/write in 4K blocks.

- No SCSI stack, and I/O optimized for NAND (for example, Fusion-io).

Cons:

- High CPU utilization (less of an issue now that 16-core processors are available).

- No hot swapping: in general, PCIe cards do not allow it.

- Drivers are unique to each controller.
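The throughput and IOPS figures in the Pros list can be cross-checked against each other. The sketch below is only arithmetic on the quoted vendor numbers; it assumes that "4K blocks" means 4 KiB and reports decimal gigabytes per second.

```python
# Peak figures quoted in the Pros list (vendor numbers, not measurements).
BLOCK_BYTES = 4 * 1024      # assumption: "4K blocks" means 4 KiB
READ_IOPS = 770_000
WRITE_IOPS = 630_000


def iops_to_gb_per_s(iops: int, block_bytes: int = BLOCK_BYTES) -> float:
    """Bandwidth implied by an IOPS figure, in decimal GB/s."""
    return iops * block_bytes / 1e9


read_gbs = iops_to_gb_per_s(READ_IOPS)
write_gbs = iops_to_gb_per_s(WRITE_IOPS)
print(f"4K random read : {read_gbs:.2f} GB/s")   # 3.15 GB/s
print(f"4K random write: {write_gbs:.2f} GB/s")  # 2.58 GB/s
```

Under these assumptions, small-block random I/O comes close to the large-block figures of 3.2 and 2.8 GB/s, which suggests such cards are limited by the link and the flash rather than by per-command overhead.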

Because every controller needs its own driver, this promising technology has been slow to spread, so the search for a common solution began.

NVM Express

More than 90 companies joined the NVMHCI Work Group to develop the new NVM Express protocol, which is meant to unlock the potential of SSDs within a single standard. The future has not been forgotten: multi-core optimization lets each thread have its own command queue and interrupt. The advanced error detection and handling suits industrial applications, and self-encrypting drives are supported.

The scalable host controller interface supports up to 64K commands per I/O queue, and there can be up to 64K such queues. NVM Express is built on paired Submission and Completion Queues. Software places commands in a Submission Queue, and the controller places the results in the associated Completion Queue. Several Submission Queues can share one Completion Queue, and all of them reside in host memory.
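The queue mechanism can be illustrated with a toy model. This is a minimal sketch, not a driver: the class and field names (`QueuePair`, `Command`, `Completion`, `doorbell_writes`) are invented for illustration, the controller is simulated in the same process, and real queues are fixed-size ring buffers with head and tail pointers rather than Python deques.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Command:
    cid: int      # command identifier chosen by the host
    opcode: str   # symbolic name such as "read"; not a real opcode value
    lba: int      # starting logical block address


@dataclass
class Completion:
    cid: int      # identifier of the command that finished
    status: int   # 0 means success in this toy model


class QueuePair:
    """One Submission Queue and one Completion Queue, both in host memory."""

    def __init__(self, depth: int):
        self.depth = depth
        self.sq = deque()
        self.cq = deque()
        self.doorbell_writes = 0

    def submit(self, cmd: Command) -> None:
        """Host side: enqueue a command and notify the controller."""
        if len(self.sq) >= self.depth:
            raise RuntimeError("submission queue full")
        self.sq.append(cmd)
        self.doorbell_writes += 1   # stands in for one register write

    def controller_step(self) -> None:
        """Simulated controller: consume one command, post its completion."""
        if self.sq:
            cmd = self.sq.popleft()
            self.cq.append(Completion(cid=cmd.cid, status=0))

    def reap(self) -> list:
        """Host side: collect every completion posted so far."""
        done = list(self.cq)
        self.cq.clear()
        return done


qp = QueuePair(depth=4)
for i in range(3):
    qp.submit(Command(cid=i, opcode="read", lba=i * 8))
while qp.sq:
    qp.controller_step()
completions = qp.reap()
print([c.cid for c in completions])  # [0, 1, 2]
```

The point of the model is that the host and the controller only touch shared queues in memory; the single notification per submitted command is the only register traffic on the issue path.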

Beautiful scheme

The Admin Submission and Completion Queues exist for device management: creating and deleting I/O queues, aborting commands, and so on. Only commands from the Admin Command Set can be placed in these queues.

Host software can create queues up to the controller's limit, but the usual practice is to size them to the server. For example, on a four-core system it is best to create four queue pairs, one per core, and pin processes to cores to avoid locking. This approach gives efficient performance scaling and low latency.
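A minimal sketch of that sizing rule follows. The function name `plan_queue_pairs` and the round-robin fallback for the case where the controller offers fewer queue pairs than there are cores are assumptions made for illustration, not something the article or the specification prescribes.

```python
def plan_queue_pairs(cpu_count: int, controller_max_pairs: int) -> dict:
    """Map each CPU core to the index of the queue pair it should use.

    One pair per core when the controller allows it; otherwise cores
    share pairs round-robin (hypothetical fallback policy).
    """
    if cpu_count < 1 or controller_max_pairs < 1:
        raise ValueError("need at least one core and one queue pair")
    pair_count = min(cpu_count, controller_max_pairs)
    return {cpu: cpu % pair_count for cpu in range(cpu_count)}


# The four-core example from the text: each core gets a private pair.
print(plan_queue_pairs(cpu_count=4, controller_max_pairs=65535))
# {0: 0, 1: 1, 2: 2, 3: 3}

# A controller limited to two pairs forces cores to share.
print(plan_queue_pairs(cpu_count=4, controller_max_pairs=2))
# {0: 0, 1: 1, 2: 0, 3: 1}
```

When every core owns its pair, no lock is needed around submission; as soon as cores share a pair, some synchronization comes back, which is why the one-pair-per-core layout is preferred.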

Main features of NVMe:

- Up to 64K I/O queues with up to 64K commands each.

- A well-developed arbitration mechanism and I/O queue priorities.

- The command set is reduced to 13 commands, not counting management and reservation commands.

- Support for MSI-X and interrupt aggregation.

- A fixed command size of 64 bytes ensures high performance on small-block random operations.

- Support for I/O virtualization, such as SR-IOV.

- A single MMIO register write in the command issue path.

- Supports multiple namespaces.

- Advanced error detection and management.

- Multipath I/O support (including failover) and end-to-end data protection (DIF/DIX).
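To make the 64-byte command size concrete, here is a hedged sketch that packs a read command with Python's `struct` module. The field layout (command dword 0, namespace ID, reserved bytes, metadata pointer, two PRP entries, six command-specific dwords) and the read opcode 0x02 follow my reading of the NVMe 1.x command format and should be checked against the specification; the helper name `build_read_command` is invented for illustration.

```python
import struct

# Assumed little-endian layout of one submission queue entry:
# CDW0 (4) | NSID (4) | reserved (8) | MPTR (8) | PRP1 (8) | PRP2 (8) | CDW10..15 (24)
SQE = struct.Struct("<II8xQQQ6I")

OPCODE_READ = 0x02  # NVM command set read opcode, per the assumed layout


def build_read_command(cid, nsid, start_lba, block_count, prp1, prp2=0):
    """Pack a read command into a 64-byte entry (illustrative only)."""
    if not 0 <= cid <= 0xFFFF:
        raise ValueError("command identifier must fit in 16 bits")
    if not 1 <= block_count <= 0x10000:
        raise ValueError("block count must be between 1 and 65536")
    cdw0 = OPCODE_READ | (cid << 16)          # opcode in bits 7:0, CID in 31:16
    cdw10 = start_lba & 0xFFFFFFFF            # starting LBA, low dword
    cdw11 = (start_lba >> 32) & 0xFFFFFFFF    # starting LBA, high dword
    cdw12 = block_count - 1                   # block count is zero-based
    return SQE.pack(cdw0, nsid, 0, prp1, prp2, cdw10, cdw11, cdw12, 0, 0, 0)


entry = build_read_command(cid=7, nsid=1, start_lba=2048, block_count=8, prp1=0x1000)
print(len(entry))  # 64
```

Everything the controller needs for a small read fits in this one fixed-size record, which is why it can fetch the command parameters in a single memory access.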

Summary of advantages over the traditional AHCI interface:

| | AHCI | NVMe |
|---|---|---|
| Maximum queue depth | 1 command queue; 32 commands per queue | 65536 queues; 65536 commands per queue |
| Uncacheable register accesses (2000 cycles each) | 6 per non-queued command; 9 per queued command | 2 per command |
| MSI-X and interrupt steering | single interrupt; no steering | 2048 MSI-X interrupts |
| Parallelism and multiple threads | requires synchronization lock to issue a command | no locking |
| Efficiency for 4 KB commands | command parameters require two serialized host DRAM fetches | gets command parameters in one 64-byte fetch |
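The register-access row translates directly into per-command overhead. The sketch below multiplies the access counts from the table by the quoted 2000 cycles; the 2.5 GHz clock used to convert cycles into microseconds is an assumption for illustration only.

```python
CYCLES_PER_UNCACHED_ACCESS = 2000   # figure quoted in the table
ASSUMED_CPU_GHZ = 2.5               # assumption, not from the article

ACCESSES_PER_COMMAND = {
    "AHCI, non-queued": 6,
    "AHCI, queued": 9,
    "NVMe": 2,
}


def register_overhead_cycles(accesses: int) -> int:
    """CPU cycles spent on uncacheable register accesses for one command."""
    return accesses * CYCLES_PER_UNCACHED_ACCESS


overhead = {
    name: register_overhead_cycles(count)
    for name, count in ACCESSES_PER_COMMAND.items()
}

for name, cycles in overhead.items():
    microseconds = cycles / (ASSUMED_CPU_GHZ * 1000)
    print(f"{name:17s} {cycles:6d} cycles  ~{microseconds:.1f} us")
```

With these inputs a queued AHCI command costs 18000 cycles of register traffic against 4000 for NVMe, a 4.5x difference before the drive has done any work.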

Windows drivers were initially provided through the OpenFabrics Alliance; starting with Windows Server 2012 R2, NVMe support is built in by Microsoft itself.

Intel's Linux driver was merged in kernel 3.3, and kernel 3.13 added a new block layer for SSDs, developed with help from Fusion-io employees. The new design splits the kernel's internal queues into two levels (per-CPU software queues and hardware submission queues), removing the bottleneck and parallelizing I/O. In release 3.13 only the virtio_blk driver was converted; other drivers are to follow.

Everything seems fine: accelerators with fantastic performance and low latency have found their place in the market. But something is missing. Of course: what is a real enterprise drive without hot swapping? That shortcoming had to be fixed.

For mass adoption it is also desirable to reuse an existing form factor. The choice fell, logically enough, on the 2.5" format, retaining the SAS connector.

A traditional PCIe flash card requires careful handling to avoid damaging its static-sensitive chips. Besides allowing hot swapping, the 2.5" format protects the flash with a casing, which prevents accidents.

NVMe SSD

Naturally, there is a small drawback. As we wrote earlier, flash itself is quite slow, so controllers use many channels to parallelize work. In the 2.5" format space is limited, and it is very hard to match the performance of a full-size PCIe card: not much flash fits.

The Samsung XS1715 promises 740K read IOPS; the Micron P420m and Intel P3700 are noticeably more modest at 430K and 450K, respectively.

Now about us and NVMe

Among our current products, the four-processor ETegro Hyperion RS530 G4 server, based on Intel Xeon E7-4800 v2, can take four NVMe SSDs.

The four drive bays on the left can be either SAS or NVMe, depending on which cables are connected. Close to three million IOPS and close to 12 GB/s of read throughput make a nice addition to 60 cores and six terabytes of RAM :)
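One way to arrive at totals of that order is simple multiplication. The sketch assumes, purely for illustration, that all four bays hold drives in the class of the 740K-IOPS figure quoted earlier and that performance scales linearly; the article does not say which drives the totals are based on.

```python
DRIVE_COUNT = 4
READ_IOPS_PER_DRIVE = 740_000   # assumption: the XS1715 figure quoted earlier
TOTAL_READ_GB_PER_S = 12        # upper bound quoted in the text

total_read_iops = DRIVE_COUNT * READ_IOPS_PER_DRIVE
per_drive_gb_per_s = TOTAL_READ_GB_PER_S / DRIVE_COUNT

print(f"aggregate read IOPS : {total_read_iops:,}")          # 2,960,000
print(f"per-drive throughput: {per_drive_gb_per_s:.1f} GB/s")  # 3.0 GB/s
```

Under those assumptions the aggregate lands just below three million IOPS, and the 12 GB/s ceiling corresponds to about 3 GB/s per drive.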

By the way, the server has four hot-swap PCIe slots, so even current PCIe cards can be replaced on the fly, albeit with some inconvenience.

The next generation of dual-processor Intel-based systems (to be launched in the fall) will also have dedicated bays for NVMe drives.

Source: https://habr.com/ru/post/218853/
