RabbitMQ monitoring in Zabbix and hidden features of the Zabbix item key

Introduction

Faced with the task of monitoring a large number of metrics in a RabbitMQ cluster, I wanted to create a universal parser for JSON data. The task was complicated by the fact that metrics appear and disappear dynamically while the cluster is running, and the developers constantly want to collect or calculate something new. Unfortunately, Zabbix cannot collect data in this form out of the box. It does, however, have a handy feature, zabbix_trapper items, which allows flexible customization. This article describes a non-standard way of using zabbix_trapper items. I did not want to change the script that collects data and sends it to Zabbix every time the developers asked for new metrics. Hence the idea of using the Zabbix item key itself as the instruction for collecting a new metric: the key serves as a command with a predefined syntax, much like the keys of zabbix_agent items.

According to the official Zabbix documentation, an item key has some restrictions on valid characters. After experimenting a bit with creating zabbix trapper keys, I found that, for example, a key of the form:

some.thing.here[one:two:three][foo=x,bar=y]

is created in Zabbix without errors. That is, the restrictions apply only to the part outside the [] brackets, and at least one [a-zA-Z] character must precede the brackets. Since such keys can be created, we can invent our own key syntax and implement quite flexible logic with it. All that remains is to write a handler for the invented syntax that does the main work. Finally, by documenting this handler and publishing the code, the entire Zabbix community would be able to exchange such "pseudo-plugins".
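To make the idea concrete, here is a minimal sketch of the first thing such a handler has to do: split a key into the part before the brackets and the bracketed parameter groups. It assumes brackets are never nested and no parameter contains `]`; `split_key` is a hypothetical helper, not a function from the author's scripts.

```python
import re

# One bracketed group: "[" followed by anything except "]", then "]".
_GROUP = re.compile(r"\[([^\]]*)\]")


def split_key(key: str) -> tuple[str, list[str]]:
    """Split 'prefix[a][b]' into ('prefix', ['a', 'b'])."""
    prefix, sep, _ = key.partition("[")
    if not re.search(r"[A-Za-z]", prefix):
        # Mirrors the observed restriction: at least one letter before the brackets.
        raise ValueError(f"key needs a letter before the brackets: {key!r}")
    groups = _GROUP.findall(key[len(prefix):]) if sep else []
    return prefix, groups


print(split_key("some.thing.here[one:two:three][foo=x,bar=y]"))
# ('some.thing.here', ['one:two:three', 'foo=x,bar=y'])
```

Each group can then be split further (on ":" or ",") according to the syntax that the handler defines.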


Overall, the article turned out a bit difficult to follow, so to grasp the concept better I would advise you to first familiarize yourself with the RabbitMQ API, at least to see what the data looks like and what the API provides (see the official site).

References to the code are given at the end of the article.

How it works

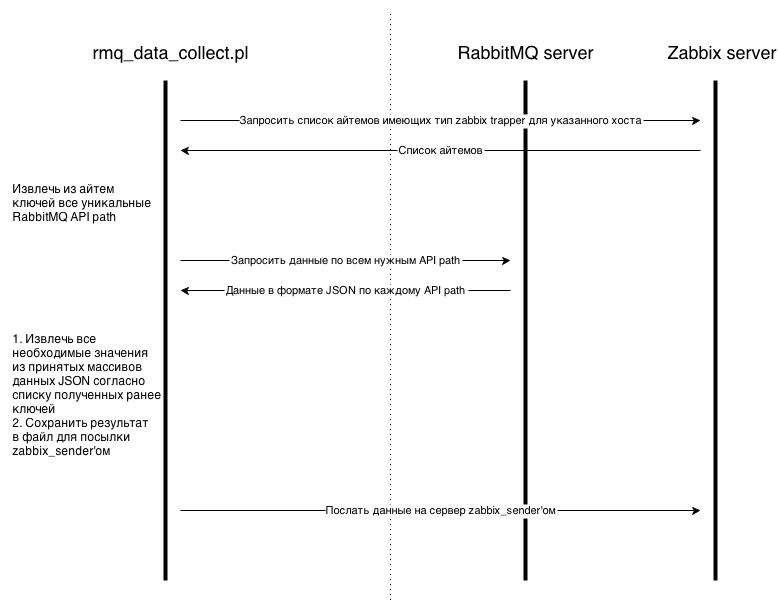

First, we create items of the zabbix_trapper type in the Zabbix frontend according to the developed syntax (described below). Next, we run the handler (rmq_data_collect.pl, hereafter the collector) from cron at the desired collection frequency, say once a minute. The collector then interacts with the Zabbix server and the RabbitMQ server as shown in the diagram above.

That is, the script performs 3 basic steps:

1) Requests a list of items from Zabbix server that should be collected.

2) Retrieves all the necessary data from RabbitMQ according to the list of items above.

3) Sends all collected data to the corresponding items on the Zabbix server / proxy.
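Step 3 relies on zabbix_sender, and producing its input file can be sketched as one `<host> <key> <value>` line per item. The quoting rule here (a field containing whitespace, double quotes or backslashes is wrapped in double quotes, with `\"` and `\\` escapes) is my reading of the `zabbix_sender -i` input format and is worth checking against the documentation for your Zabbix version; `sender_line` is a hypothetical helper.

```python
def _quote(field: str) -> str:
    """Quote a field if it contains whitespace, double quotes or backslashes."""
    if any(ch.isspace() or ch in '"\\' for ch in field):
        escaped = field.replace("\\", "\\\\").replace('"', '\\"')
        return f'"{escaped}"'
    return field


def sender_line(host: str, key: str, value) -> str:
    """Build one line of a zabbix_sender input file: <host> <key> <value>."""
    return " ".join(_quote(str(part)) for part in (host, key, value))


lines = [
    sender_line("rmq-host", "rmq[aggregated:nodes:count]", 3),
    sender_line("rmq-host", 'rmq[aggregated:queues:count][name="^system-queue1$"]', 1),
]
print("\n".join(lines))
```

Keys with conditions always contain double quotes, so they end up quoted. The resulting file would then be passed to `zabbix_sender -z <server or proxy> -i <file>`.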

For the first step, the handler can interact either via the Zabbix API or directly with the database. In my implementation, it queries the Zabbix proxy database. This approach is more convenient for distributed monitoring with several Zabbix proxy servers. In this case, the script must be installed on the Zabbix proxy server, and the connection details for that proxy's database must be specified in the script configuration.
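A sketch of the first step when talking to the database directly. The query assumes the standard Zabbix schema (tables `hosts` and `items`, column `items.key_`, item type `2` for Zabbix trapper, status `0` for enabled) and is demonstrated against an in-memory SQLite database with heavily simplified versions of those tables, so it illustrates the approach rather than reproducing the author's query. With MySQL or PostgreSQL drivers the placeholder would be `%s` instead of `?`.

```python
import sqlite3

# Assumed Zabbix schema details: items.type = 2 is "Zabbix trapper",
# items.status = 0 is "enabled". The table definitions below are simplified.
QUERY = """
SELECT h.host, i.key_
FROM items i
JOIN hosts h ON h.hostid = i.hostid
WHERE i.type = 2
  AND i.status = 0
  AND h.host LIKE ?
ORDER BY h.host, i.key_
"""


def trapper_items(conn: sqlite3.Connection, host_part: str) -> list[tuple[str, str]]:
    """Return (host, key) pairs of enabled trapper items on hosts containing host_part."""
    return conn.execute(QUERY, (f"%{host_part}%",)).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE hosts (hostid INTEGER PRIMARY KEY, host TEXT);
CREATE TABLE items (itemid INTEGER PRIMARY KEY, hostid INTEGER, type INTEGER,
                    status INTEGER, key_ TEXT);
INSERT INTO hosts VALUES (1, 'rmq-host'), (2, 'web-01');
INSERT INTO items VALUES
  (10, 1, 2, 0, 'rmq[aggregated:nodes:count]'),
  (11, 1, 0, 0, 'system.cpu.load'),
  (12, 2, 2, 0, 'other.trap');
""")
print(trapper_items(conn, "rmq-host"))
# [('rmq-host', 'rmq[aggregated:nodes:count]')]
```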

In addition to the handler, we will also look at the discoverer, which is used for low-level discovery. Next, we will cover the current implementation of RabbitMQ monitoring, the theory, and configuration examples.

What is implemented

The RabbitMQ API documentation describes 9 API URLs, plus federation-links, which is added to RabbitMQ by a separate plugin. There may be others. In the current implementation of my scripts, the following API paths can be monitored:

- nodes

- connections

- queues

- bindings

- federation-links

This was enough for my tasks; if other API paths are needed, add them to map_rmq_elements (see the comments in the code).
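The role of map_rmq_elements can be illustrated with a hypothetical table that maps each supported API path to the field identifying an element (exchange for federation-links, destination for bindings, name otherwise, as described in the key syntax section). The URL layout `http://<host>:15672/api/<path>` assumes the management plugin on its default port; the real structure of map_rmq_elements in the author's code may differ.

```python
# Hypothetical equivalent of map_rmq_elements: API path -> identifying field.
RMQ_ELEMENTS = {
    "nodes": "name",
    "connections": "name",
    "queues": "name",
    "bindings": "destination",
    "federation-links": "exchange",
}


def api_url(host: str, api_path: str, port: int = 15672) -> str:
    """Build the management API URL for a supported path."""
    if api_path not in RMQ_ELEMENTS:
        raise ValueError(f"unsupported API path: {api_path}")
    return f"http://{host}:{port}/api/{api_path}"


print(api_url("rmq-host1", "federation-links"))
# http://rmq-host1:15672/api/federation-links
```

With a table like this, supporting a new API path comes down to adding one entry.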

Installing and configuring the scripts

To monitor RabbitMQ, you need to install and configure 2 scripts (the collector and the discoverer) plus the ZabbixProxyDB.pm module. The scripts can be installed either on a Zabbix server or on a proxy, depending on your Zabbix configuration.

Collector

rmq_data_collect.pl - used to process Zabbix keys and collect data from RabbitMQ.

Usage

It takes one input parameter.

$1 is the full name of the RabbitMQ host in Zabbix if RabbitMQ is not running as a cluster. If RabbitMQ runs as a cluster, $1 is the common part of the host names in the cluster, i.e. the host names in the cluster must follow a specific naming rule. For example, host names in a cluster:

- rmq-host1

- rmq-host2

- rmq-host3

In this case, $1 should be "rmq-host". The script requests from the Zabbix server / proxy a list of all hosts whose names contain "rmq-host", then goes through this list, requesting the necessary data from the RabbitMQ API. After the first successful response from any of the hosts, the data is collected and written to a file for sending by zabbix_sender. At the time of writing, the code has a flaw: the case where more than one host of the RabbitMQ cluster does not respond is not handled by the current implementation. Getting the host list with an SQL query to the database is, so far, the only way.
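The "first successful response wins" loop over the cluster hosts can be sketched as follows. The fetch function is injected so that the sketch runs without a live cluster; in a real collector it would perform the HTTP request to the management API. `first_response` and `fake_fetch` are hypothetical names, and treating every `OSError` (which includes connection, timeout and urllib errors) as "host did not respond" is an assumption.

```python
from typing import Callable, Iterable


def first_response(hosts: Iterable[str], fetch: Callable[[str], object]):
    """Return (host, data) from the first host whose fetch succeeds."""
    errors = {}
    for host in hosts:
        try:
            return host, fetch(host)
        except OSError as exc:  # connection refused, timeout, urllib errors, ...
            errors[host] = exc
    raise RuntimeError(f"no RabbitMQ host responded: {sorted(errors)}")


def fake_fetch(host: str):
    # Simulates rmq-host1 being down and rmq-host2 answering.
    if host == "rmq-host1":
        raise ConnectionRefusedError(host)
    return [{"name": f"rabbit@{host}", "running": True}]


print(first_response(["rmq-host1", "rmq-host2", "rmq-host3"], fake_fetch))
```

Because all cluster nodes serve the same cluster-wide data through the management API, one successful answer is enough.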

The collector must be registered in crontab with a frequency equal to the desired frequency of data collection from RabbitMQ. The list of required modules can be found in the script itself.

Discoverer

rmq_data_discover.pl - used for low-level discovery (LLD) in Zabbix.

Usage

It has 3 required input parameters:

$1 is the full name of the RabbitMQ host in Zabbix if RabbitMQ is not running as a cluster. For a cluster, the principle is the same as for the collector. After the first successful response, iteration over the list stops and the message for low-level discovery is composed.

$2 - a regexp used to select metrics while the script runs. Not to be confused with the regexp filter on the Zabbix side in the LLD rule settings. Having both levels of filtering is convenient in some cases.

$3 - the RabbitMQ API path, any of the supported ones (see the section What is implemented).
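Parameter $2 acts as a script-side prefilter before Zabbix applies its own LLD filter. A minimal sketch, assuming the regexp is matched against each element's identifying field (the article does not state which field the author's script uses); Python's `re.search` stands in for the Perl regexp, and `prefilter` is a hypothetical name.

```python
import re


def prefilter(elements: list[dict], pattern: str, id_field: str = "name") -> list[dict]:
    """Keep only elements whose identifying field matches the regexp."""
    rx = re.compile(pattern)
    return [e for e in elements if rx.search(str(e.get(id_field, "")))]


queues = [{"name": "orders"}, {"name": "orders.retry"}, {"name": "audit"}]
print([q["name"] for q in prefilter(queues, r"^orders")])
# ['orders', 'orders.retry']
```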

The script must be installed in the externalscripts folder specified in the Zabbix proxy / server configuration. Examples of setting up LLD rules are given at the end of the article.

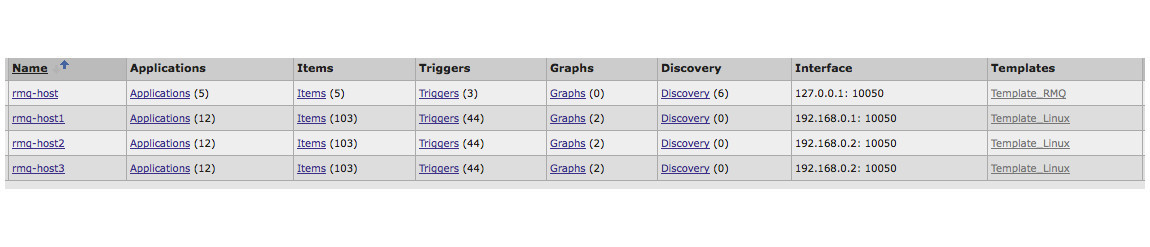

Zabbix hosts configuration example

There is a RabbitMQ cluster consisting of three hosts. The hosts themselves are monitored individually by Zabbix agents with the Template_Linux template, which contains the standard metrics for CPU, memory, etc. A separate host "rmq-host" has been created for the cluster metrics. The host name for the whole cluster is the common part of the host names in the cluster. This is a prerequisite in the current implementation; otherwise the selection from the database will not work correctly.

Key Syntax

Now let's talk about the key syntax I developed for RabbitMQ. As mentioned above, the items in Zabbix must be of type zabbix trapper.

For RabbitMQ monitoring there are two types of items, simple and aggregated, with slightly different syntax. Simple items are used to select the values of individual parameters. Aggregated items select an array of values matching a given condition and aggregate them. Conditions are optional.

Simple values

Syntax: <path.to.value.inside.json>[$type:$api_path:$element_name]

<path.to.value.inside.json> - The path to the value inside each element of the array.

$type - either a VHOST name or "general". With a VHOST name, values are searched for within that VHOST; "general" is the keyword for values that do not belong to a specific VHOST.

$api_path - the RabbitMQ API path, any of the supported ones (see the section What is implemented).

$element_name - the unique identifier of the array element at the given $api_path: for federation-links it is exchange, for bindings it is destination, and for the rest it is name.
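A sketch of how a simple key might be resolved against the array already fetched from $api_path. It assumes that $type is compared with each element's `vhost` field ("general" meaning no vhost check), that $element_name may itself contain colons (node names such as `rabbit@host` do not, but it costs nothing), and that the dotted path walks nested JSON objects. `resolve_simple` is a hypothetical name; the identifier table repeats the rules listed above.

```python
import re

ID_FIELD = {"bindings": "destination", "federation-links": "exchange"}  # others: "name"


def resolve_simple(key: str, data: list[dict]):
    """Resolve 'path.to.value[type:api_path:element]' against the API array."""
    m = re.fullmatch(r"([^\[]+)\[([^\]]*)\]", key)
    if not m:
        raise ValueError(f"not a simple key: {key!r}")
    path, params = m.groups()
    vtype, api_path, element = params.split(":", 2)
    id_field = ID_FIELD.get(api_path, "name")
    for item in data:
        if item.get(id_field) != element:
            continue
        if vtype != "general" and item.get("vhost") != vtype:
            continue
        value = item
        for part in path.split("."):
            value = value[part]  # KeyError if the path does not exist
        return value
    return None  # element not found, e.g. the queue has disappeared


queues = [
    {"name": "orders", "vhost": "/", "message_stats": {"ack": 42}},
    {"name": "orders", "vhost": "shop", "message_stats": {"ack": 7}},
]
print(resolve_simple("message_stats.ack[shop:queues:orders]", queues))  # 7
```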

Aggregated Values

The general syntax is: <path.to.value.inside.json|rmq>[aggregated:$api_path:$func][$conditions]

aggregated - a keyword that tells the collector (rmq_data_collect.pl) to parse the key as an aggregated value.

$api_path - the API path, any of the supported ones (see the section What is implemented).

$func - two functions are implemented: sum and count.

$conditions - an optional parameter; if it is set, the aggregation takes into account only those elements of the data array that match it. The syntax of conditions is: [condition1="cond1",condition2="cond2",condition3="cond3",...]. Quotes are required. Each condition value is a Perl regexp.
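The conditions part can be parsed with a single regular expression. This sketch assumes that condition values never contain a double quote and that all conditions must hold at once; Python's `re` module stands in for Perl regexps (the two dialects agree for simple patterns such as `^direct$` or `\s`). `parse_conditions` and `matches` are hypothetical names.

```python
import re


def parse_conditions(text: str) -> dict[str, str]:
    """Parse 'field1="re1",field2="re2"' into {'field1': 're1', 'field2': 're2'}."""
    return dict(re.findall(r'(\w+)\s*=\s*"([^"]*)"', text))


def matches(element: dict, conditions: dict[str, str]) -> bool:
    """True if every condition regexp is found in the element's field."""
    return all(
        re.search(pattern, str(element.get(field, ""))) is not None
        for field, pattern in conditions.items()
    )


conds = parse_conditions(r'type="^direct$",protocol="^Direct\s0-9-1$"')
connection = {"type": "direct", "protocol": "Direct 0-9-1"}
print(conds, matches(connection, conds))
```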

Sum function

Syntax: <path.to.value.inside.json>[aggregated:$api_path:sum][$conditions]

The sum function sums the values located at the path <path.to.value.inside.json> inside each element of the array obtained from $api_path that matches $conditions.

<path.to.value.inside.json> - the path to the value inside each element of the array obtained from the RabbitMQ API path.

Count function

Syntax: rmq[aggregated:$api_path:count][$conditions]

The count function counts the number of elements in the array obtained from $ api_path that match the condition.

rmq - a mandatory word that is not actually used (any set of letters will do). It is needed because of a Zabbix restriction on zabbix_trapper item keys: a key cannot begin with a square bracket.
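Both aggregate functions can be sketched on top of an already fetched array. Here the conditions are passed as an already parsed field-to-regexp mapping, all of which must match (an assumption), and JSON booleans such as `"running": true` count as 1, consistent with the note in the examples that follow. `aggregate` is a hypothetical name, not the author's function.

```python
import re
from typing import Optional


def _get(element: dict, path: str):
    """Walk a dotted path such as 'message_stats.ack'; None if any part is missing."""
    value = element
    for part in path.split("."):
        if not isinstance(value, dict) or part not in value:
            return None
        value = value[part]
    return value


def aggregate(data: list[dict], func: str, path: str = "",
              conditions: Optional[dict[str, str]] = None):
    """Evaluate sum or count over an API array, keeping elements that match every condition."""
    conditions = conditions or {}
    selected = [
        e for e in data
        if all(re.search(rx, str(e.get(field, ""))) for field, rx in conditions.items())
    ]
    if func == "count":
        return len(selected)
    if func == "sum":
        values = (_get(e, path) for e in selected)
        # bool is a subclass of int, so "running": true contributes 1.
        return sum(v for v in values if isinstance(v, (int, float)))
    raise ValueError(f"unsupported function: {func}")


nodes = [
    {"name": "rabbit@rmq-host1", "running": True},
    {"name": "rabbit@rmq-host2", "running": False},
    {"name": "rabbit@rmq-host3", "running": True},
]
print(aggregate(nodes, "sum", "running"), aggregate(nodes, "count"))  # 2 3
```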

Examples

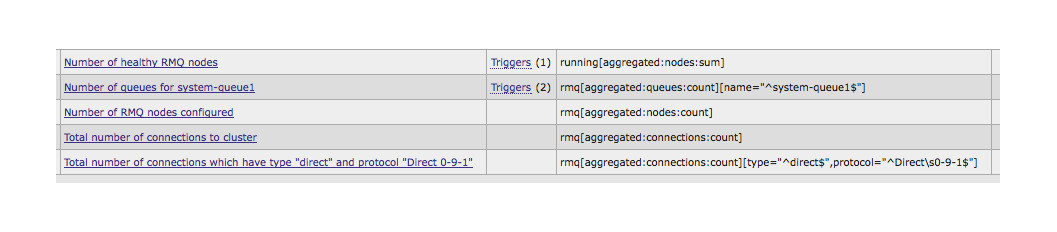

Aggregated values

1) Sums the running field over the elements of the nodes array. Note: when a RabbitMQ node is up, running returns 1, so the result is the number of running nodes.

2) Counts the number of elements in the queues array where name = "^system-queue1$". Since the condition value is always treated as a regexp, you must anchor the beginning and end of the line (^ and $) so that other names that also fit the regexp are not counted by mistake. The result is the number of queues named system-queue1.

3) Counts the total number of elements in the nodes array, i.e. the number of configured nodes in the cluster.

4) Counts the total number of elements in the connections array, i.e. the current number of connections to the cluster.

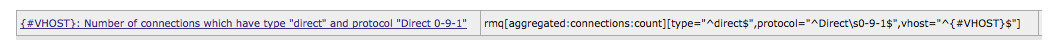

5) Counts the number of elements in the connections array for which type = "^direct$" and protocol = "^Direct\s0-9-1$".
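Why the anchors in example 2 matter: a condition value is a regexp that is searched for anywhere in the field, so an unanchored pattern also matches longer names. Python's `re.search` stands in here for Perl's `=~` match, which likewise matches anywhere unless anchored; the queue names are made up.

```python
import re

names = ["system-queue1", "system-queue10", "old-system-queue1"]
unanchored = [n for n in names if re.search("system-queue1", n)]
anchored = [n for n in names if re.search("^system-queue1$", n)]
print(unanchored)  # ['system-queue1', 'system-queue10', 'old-system-queue1']
print(anchored)    # ['system-queue1']
```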

Examples of simple values are given further on, in the LLD section, since it is not convenient to set them statically: most queues constantly appear and disappear.

Low level discovery

For a large RabbitMQ cluster configuration, it is reasonable to use Zabbix low-level discovery. The usage of rmq_data_discover.pl is described above. Here I will give examples and the values returned by the script.

The values returned by the script that can be used in LLD:

Connections

"{#VHOST}" => $vhost,

"{#NAME}" => $name,

"{#NODE}" => $node,

Nodes

"{#NODENAME}" => $name,

Bindings

"{#SOURCE}" => $queueSource,

"{#VHOST}" => $vhost,

"{#DESTINATION}" => $queueDest,

"{#THRESHOLD}" => $threshold,

Note: all elements with an empty source are ignored.

Queues

"{#VHOST}" => $vhost,

"{#QUEUE}" => $queueName,

Federations

"{#VHOST}" => $vhost,

"{#EXCHANGE}" => $name
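For reference, the discoverer's output has to follow the Zabbix LLD JSON format: an object whose `data` array holds one macro-to-value map per discovered entity (the classic format; newer Zabbix versions also accept a bare array). A sketch for queues and bindings, skipping bindings with an empty source as noted above; `{#THRESHOLD}` is omitted because the article does not say where its value comes from, and the function names are hypothetical.

```python
import json


def lld_queues(queues: list[dict]) -> str:
    """LLD JSON with {#VHOST} and {#QUEUE} for every queue."""
    data = [{"{#VHOST}": q["vhost"], "{#QUEUE}": q["name"]} for q in queues]
    return json.dumps({"data": data})


def lld_bindings(bindings: list[dict]) -> str:
    """LLD JSON for bindings; elements with an empty source are ignored."""
    data = [
        {"{#SOURCE}": b["source"], "{#VHOST}": b["vhost"], "{#DESTINATION}": b["destination"]}
        for b in bindings
        if b.get("source")
    ]
    return json.dumps({"data": data})


bindings = [
    {"source": "", "vhost": "/", "destination": "orders"},  # default exchange
    {"source": "events", "vhost": "/", "destination": "orders"},
]
print(lld_bindings(bindings))
```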

LLD examples

Examples of discovery rules for each API path:

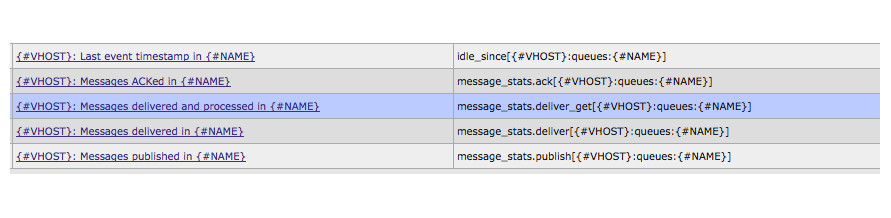

Examples of item prototypes

For the queues API path, we can collect statistics on processed messages without worrying about how many queues there are.

1) The value of the idle_since field. This is the only field that is post-processed inside rmq_data_collect.pl. As a result, we get the timestamp since which the queue has been idle.

2) The ack value inside the message_stats element.

3) The remaining values work with message_stats in the same way as item 2.
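The idle_since post-processing amounts to turning a date string into a Unix timestamp that Zabbix can store as a number. This sketch assumes the `YYYY-MM-DD HH:MM:SS` format in UTC used by RabbitMQ releases of that time; newer releases may format the field differently, and the function name is hypothetical.

```python
import calendar
import time


def idle_since_to_timestamp(value: str) -> int:
    """Convert 'YYYY-MM-DD HH:MM:SS' (assumed UTC) to a Unix timestamp."""
    return calendar.timegm(time.strptime(value, "%Y-%m-%d %H:%M:%S"))


print(idle_since_to_timestamp("2014-04-08 13:45:23"))
```

`calendar.timegm` is used instead of `time.mktime` so that the result does not depend on the collector host's local time zone.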

Example for connections

The item counts the number of elements in the connections array with the given type and protocol for each {#VHOST}.

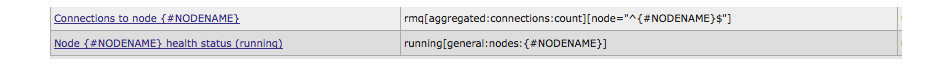

Example for nodes

1) Counts the number of connections to each node.

2) Returns the value of the running field for each element of the nodes array. The result is the health status of each node.
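Item 1 amounts to grouping the connections array by its `node` field. In Zabbix this would be one prototype per discovered node, for instance something along the lines of `rmq[aggregated:connections:count][node="^{#NODENAME}$"]` (an illustration built from the syntax above, not copied from the screenshot); computing all counts in one pass, as here, is just an equivalent way to see the result. The connection records are made up.

```python
from collections import Counter


def connections_per_node(connections: list[dict]) -> Counter:
    """Count elements of the connections array grouped by their 'node' field."""
    return Counter(c["node"] for c in connections if "node" in c)


connections = [
    {"name": "10.0.0.5:51000 -> 10.0.0.1:5672", "node": "rabbit@rmq-host1"},
    {"name": "10.0.0.6:51001 -> 10.0.0.1:5672", "node": "rabbit@rmq-host1"},
    {"name": "10.0.0.7:51002 -> 10.0.0.2:5672", "node": "rabbit@rmq-host2"},
]
print(connections_per_node(connections))
```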

Summary

I hope it did not turn out too confusing. If anything is unclear, I will answer all questions in the comments.

The advantage of creating custom keys specialized for a particular piece of software is obvious: there is no need to change the code of Zabbix itself. We can already create such plugins, document them, and share ready-made solutions online. Taking the idea of custom keys in Zabbix further, ideally I would like to see it become a built-in feature. With such a plugin in place, adding a new RabbitMQ metric only requires creating the corresponding item, just as with zabbix_agent.

Script code here: github.com/mfocuz/zabbix_plugins

Source: https://habr.com/ru/post/218741/
