Portrait of a Habr tutorial

We live in an age when unnecessary things are our only necessities. Oscar Wilde, The Picture of Dorian Gray

Have you ever wondered how an ordinary post on Habr (the "ordinary powder"™ of posts) differs from a tutorial? How can this difference be measured? Are there any regularities here, and can the label be predicted from them?

In this article we discuss exploratory data analysis, or EDA for short, as applied to Habrahabr articles, paying special attention to tutorials. EDA is first of all a detailed study of the data, and it is necessary for understanding what we are working with. An important part of it is collecting and cleaning the data and choosing what data to collect. A distinctive feature of the method is its reliance on visualization and on the search for important characteristics and trends.

Exploratory data analysis is the first step in studying and understanding data. Without it we can walk into the numerous traps described in the article "How to Lie with Statistics".


What a typical Habr tutorial looks like

As a simple demonstration, consider the simplest picture of three parameters: views, favorites, and rating (number of pluses), for three classes: all articles together, regular posts (non-tutorials), and tutorials.

Even in such a simplified picture, the difference between the classes is noticeable. Intuition and common sense tell us that tutorials are, on average, added to favorites more often, but intuition tells us neither how much more often nor that tutorials gain fewer pluses and views. These and many other interesting questions are discussed further in the article.

Article structure

- What a typical Habr tutorial looks like

- Collecting the data

- Habr data

- Exploring the tutorials

- Examining interesting examples

- Predicting the tutorial label

- How to make the data set better

- Conclusion

- Further reading

Collecting the data

One of the most important properties of research and experiment is reproducibility and transparency. It is therefore very important to provide all the source materials that come with the work: the data, the algorithm for collecting it, the computation algorithm, the implementation, the visualization, and the resulting characteristics. All code, data, and scripts for analysis and visualization are attached to this article and are available through github. Separate links are provided for graphs and scripts, and the most important and interesting parts of the code are also available in the article as drop-down text ("spoilers").

This makes it possible to check the authenticity of the data, the visualization, and the correctness of the calculations. For example, the histogram image at the beginning of the article was produced by the histograms_into.R script on the dataset all.csv (described below).

Let's begin with a high-level description of the algorithm for collecting Habr articles.

We simply go through each link and parse the page.

One possible implementation of enumerating articles by id (as well as collecting articles from the tops) is shown here. The whole algorithm consists of three components: enumerating article pages (outlined above), parsing a page (processPage), and the class that records a Habr article (habra-article).

Implementation of article enumeration in Python

    # Python 2 code, as in the original article
    from __future__ import print_function
    import time
    from habraPageParser import HabraPageParser
    from article import HabraArticle


    class HabraPageGenerator:

        @staticmethod
        def generatePages(rooturl):
            # Walk the paginated listing: rooturl, rooturl + "page2", ..., "page100"
            articles = []
            suffix = "page"
            for i in range(1, 101):
                if i > 1:
                    url = rooturl + suffix + str(i)
                else:
                    url = rooturl
                print(url)
                pageArticles = HabraPageParser.parse(url)
                if pageArticles is not None:
                    articles = articles + pageArticles
                else:
                    break
            return articles

        @staticmethod
        def generateTops():
            # Collect the all-time, monthly, and weekly tops
            WEEK_URL = 'http://habrahabr.ru/posts/top/weekly/'
            MONTH_URL = 'http://habrahabr.ru/posts/top/monthly/'
            ALLTIME_URL = 'http://habrahabr.ru/posts/top/alltime/'
            articles = []
            articles = articles + HabraPageGenerator.generatePages(ALLTIME_URL)
            articles = articles + HabraPageGenerator.generatePages(MONTH_URL)
            articles = articles + HabraPageGenerator.generatePages(WEEK_URL)
            return articles

        @staticmethod
        def generateDataset(dataset_name):
            # Try every post id in the range and record the pages that are live
            FIRST_TUTORIAL = 152563
            LAST_INDEX = 219000
            BASE_URL = 'http://habrahabr.ru/post/'
            logname = "log-test-alive.txt"
            logfile = open(logname, "w")
            datafile = HabraArticle.init_file(dataset_name)
            print("generate all pages", file=logfile)
            print(time.strftime("%H:%M:%S"), file=logfile)
            logfile.flush()
            for postIndex in range(FIRST_TUTORIAL, LAST_INDEX):
                url = BASE_URL + str(postIndex)
                print("test: "+url, file=logfile)
                try:
                    article = HabraPageParser.parse(url)
                    if article:
                        print("alive: "+url, file=logfile)
                        assert(len(article) == 1)
                        article[0].write_to_file(datafile)
                except:
                    # Any failure (network, parsing) silently skips this id
                    continue
                logfile.flush()
            logfile.close()
            datafile.close()

Code of habra-article:

Implementation of the HabraArticle class

    # Python 2 code, as in the original article
    from __future__ import print_function


    class HabraArticle:

        def __init__(self, post_id, title, author, score, views, favors, isTutorial):
            self.post_id = post_id
            self.title = title
            self.author = author
            self.score = score
            self.views = views
            self.favors = favors
            # Store the label as 1/0 so that it goes into the CSV as a number
            if isTutorial:
                self.isTutorial = 1
            else:
                self.isTutorial = 0

        def printall(self):
            print("id: ", self.post_id)
            print("title: ", self.title)
            print("author: ", self.author)
            print("score: ", self.score)
            print("views: ", self.views)
            print("favors: ", self.favors)
            print("isTutorial: ", self.isTutorial)

        def get_csv_line(self):
            # All fields except isTutorial are already strings taken from the page
            return self.post_id+","+self.title+","+self.author+","+ self.score+","+self.views+","+self.favors+","+str(self.isTutorial) +"\n"

        @staticmethod
        def printCSVHeader():
            return "id, title, author, score, views, favors, isTutorial"

        @staticmethod
        def init_file(filename):
            # Truncate the file, then reopen it for appending and write the header
            datafile = open(filename, 'w')
            datafile.close()
            datafile = open(filename, 'a')
            print(HabraArticle.printCSVHeader(), file=datafile)
            return datafile

        def write_to_file(self, datafile):
            csv_line = self.get_csv_line()
            datafile.write(csv_line.encode('utf-8'))
            datafile.flush()

Code of the processPage function (uses BeautifulSoup):

Implementation of processPage

    # Python 2 code, as in the original article
    import urllib2
    from bs4 import BeautifulSoup
    import re
    from article import HabraArticle


    class HabraPageParser:

        @staticmethod
        def parse(url):
            try:
                response = urllib2.urlopen(url)
            except urllib2.HTTPError, err:
                if err.code == 404:
                    return None
                else:
                    raise
            html = response.read().decode("utf-8")
            soup = BeautifulSoup(html)
            #print(soup.decode('utf-8'))
            #if the post is closed, return None
            # UTF-8 bytes of the Russian page title shown for a restricted page
            cyrillicPostIsClosed = '\xd0\xa5\xd0\xb0\xd0\xb1\xd1\x80\xd0\xb0\xd1\x85\xd0\xb0\xd0\xb1\xd1\x80 \xe2\x80\x94 \xd0\x94\xd0\xbe\xd1\x81\xd1\x82\xd1\x83\xd0\xbf \xd0\xba \xd1\x81\xd1\x82\xd1\x80\xd0\xb0\xd0\xbd\xd0\xb8\xd1\x86\xd0\xb5 \xd0\xbe\xd0\xb3\xd1\x80\xd0\xb0\xd0\xbd\xd0\xb8\xd1\x87\xd0\xb5\xd0\xbd'
            if soup.title.text == cyrillicPostIsClosed.decode('utf-8'):
                return None
            articles = soup.find_all(class_="post shortcuts_item")
            habraArticles = []
            for article in articles:
                isScoreShown = article.find(class_="mark positive ")
                #if the score is published already, then article is in, otherwise we go on to next one
                if not isScoreShown:
                    continue
                post_id = article["id"]
                author = article.find(class_="author")
                if author:
                    author = author.a.text
                title = article.find(class_="post_title").text
                score = article.find(class_="score").text
                views = article.find(class_="pageviews").text
                favors = article.find(class_="favs_count").text
                tutorial = article.find(class_="flag flag_tutorial")
                #we need to escape the symbols in the title, it might contain commas
                title = re.sub(r',', " comma ", title)
                #if something went wrong skip this article
                if not post_id or not author or not title:
                    return None
                habraArticle = HabraArticle(post_id, title, author, score, views, favors, tutorial)
                habraArticles.append(habraArticle)
            return habraArticles

(obtained by running the scale_id.R script on the first 6.5k points of the alive_test_id.csv dataset)

The graph should be read as follows: take 250 consecutive id values and write them out in a row; if the page is live, mark it red, otherwise blue. The next 250 values go into the next row, and so on.

The measured density of live links since the publication of the first tutorial (September 27, 2012) is 23%. If we assume that an id is issued sequentially for each draft, then about three quarters of Habr articles are either hidden or were never published.

But (!) the real density is most likely underestimated by these measurements. The reason lies in the shortcomings of the collection method: connection problems, page parsing errors, or short-term unavailability of Habrahabr. Manual verification of the data (on all.csv) showed that in a small share of cases, at most 5-10%, pages that actually exist were not processed. Given this error, it is reasonable to assume that the actual density lies in the range of 30 ± 5%. We will work on reducing the error in the next installments.
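The row layout of the graph and the density estimate described above can be sketched in a few lines of Python. This is only an illustration: the function names and the toy list of flags are assumptions, not part of the original scale_id.R script.

```python
def to_rows(alive_flags, width=250):
    """Split a flat list of 0/1 'page is live' flags into rows of `width` ids."""
    return [alive_flags[i:i + width] for i in range(0, len(alive_flags), width)]


def live_density(alive_flags):
    """Share of ids that returned a readable article page."""
    return sum(alive_flags) / len(alive_flags) if alive_flags else 0.0


# Hypothetical toy input: 1 = live page, 0 = hidden, draft, or missing.
flags = [1, 0, 0, 0] * 125           # 500 consecutive ids
rows = to_rows(flags)
print(len(rows), len(rows[0]))       # 2 250
print(live_density(flags))           # 0.25
```

Each inner list corresponds to one row of the picture; a plotting library would then color the cells by flag value.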

Additional data

In addition to enumerating all articles by id (for the specified period), the following data was also collected:

- The best of all time

- Best month

- The best of the week

For this collection, the algorithm described above was used; it walked through the links:

habrahabr.ru/posts/top/alltime/page$i

habrahabr.ru/posts/top/monthly/page$i

habrahabr.ru/posts/top/weekly/page$i

for $i from 1 to 100

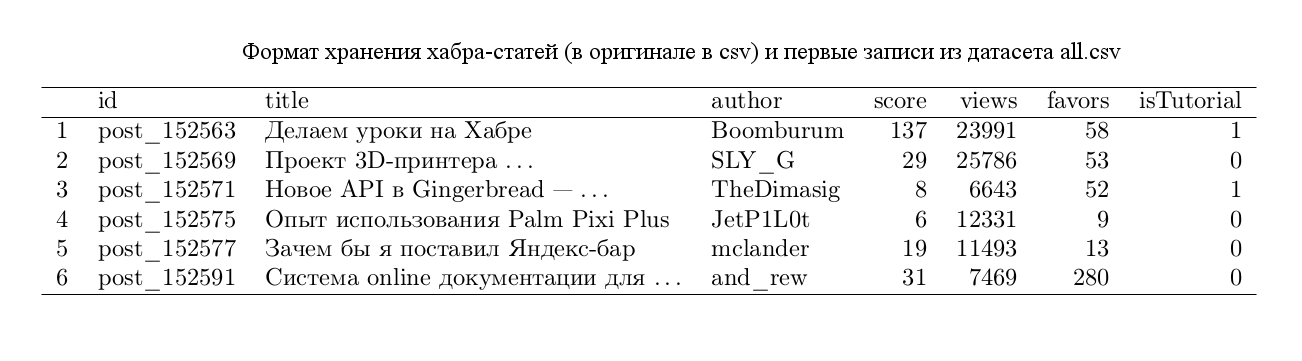

Habr data

The collected data is stored in CSV (comma-separated values) format and has the following form:

In total, the following datasets are available together with the article (downloaded on April 7, 2014):

- All articles: all.csv

- Best of all time: dataset_top_all_time.csv

- Best of the month: habra_dataset_monthly.csv

- Best of the week: habra_dataset_weekly.csv

- Log of live pages by id: alive_test_id.csv

All graphs and results presented in the article are based on the above data. Mostly we will work with the file all.csv.
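As a rough illustration of how such a file could be loaded for analysis, here is a minimal Python 3 sketch. It assumes the header written by HabraArticle.printCSVHeader() and assumes that score, views, and favors parse as plain integers, which may not hold for every row of the real files. The function name load_articles and the sample rows are hypothetical.

```python
import csv
import io


def load_articles(lines):
    """Parse rows in the article's CSV layout into dicts with numeric fields."""
    # skipinitialspace handles the "id, title, author, ..." header format
    reader = csv.DictReader(lines, skipinitialspace=True)
    articles = []
    for row in reader:
        try:
            articles.append({
                "id": row["id"],
                "title": row["title"],
                "author": row["author"],
                "score": int(row["score"]),
                "views": int(row["views"]),
                "favors": int(row["favors"]),
                "isTutorial": int(row["isTutorial"]),
            })
        except (KeyError, TypeError, ValueError):
            # Skip malformed rows instead of guessing their values
            continue
    return articles


# Hypothetical sample in the same layout as the datasets
sample = io.StringIO(
    "id, title, author, score, views, favors, isTutorial\n"
    "post_1,First post,alice,12,3400,56,0\n"
    "post_2,How to do X,bob,8,2100,190,1\n"
    "post_3,Broken row,carol,n/a,10,1,0\n"
)
articles = load_articles(sample)
print(len(articles))   # 2
```

For a real file, the same function can be given an open file object, for example one opened with open("all.csv", encoding="utf-8").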

Exploring the tutorials

Consider the distributions of articles over the main collected parameters, separately for the two classes: tutorials and regular posts (non-tutorials). The Y axis shows the share of articles with the corresponding parameter value on the X axis. We have three main parameters: views, rating (number of pluses), and the number of additions to favorites.

(obtained using the histograms_tutorial_vs_normal.R script on all.csv)

If you have been waiting for a convenient moment to read about Zipf's law, it has arrived. On the right, we see something that closely resembles this distribution (and I think we will see it more than once in the future).

In general, the distributions of votes (pluses) and views for tutorials are shifted to the left relative to those of regular posts and also resemble Zipf's law, although the correspondence here is less obvious. So, on average, regular posts gain more pluses and views. The distribution of favorites for tutorials, on the other hand, is shifted significantly to the right and does not resemble Zipf's law at all. On average, readers add tutorials to their favorites much more actively. Over almost the entire distribution, tutorials exceed regular posts by about a factor of two; here is a short table of quantiles of the two distributions:

The table reads as follows: if the 20% quantile for regular posts is 16, then 20% of all regular posts gain no more than 16 additions to favorites. The median is the 50% quantile: it is 109 for tutorials and 49 for regular posts.
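To make the reading of the quantile table concrete, here is a small Python sketch of a nearest-rank empirical quantile. The choice of the nearest-rank definition is an assumption (R's quantile function interpolates by default, so the numbers can differ slightly), and the list of values is invented for illustration.

```python
import math


def quantile(values, q):
    """Nearest-rank quantile: the smallest value v such that
    at least a share q of the observations is <= v."""
    if not values:
        raise ValueError("quantile of an empty sample is undefined")
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    ordered = sorted(values)
    # round() guards against floating-point noise such as 0.7 * 10 = 7.000000000000001
    rank = math.ceil(round(q * len(ordered), 9))   # 1-based rank
    return ordered[rank - 1]


# Hypothetical numbers of additions to favorites for ten posts
favors = [3, 8, 16, 20, 49, 60, 75, 90, 120, 400]
print(quantile(favors, 0.2))   # 8
print(quantile(favors, 0.5))   # 49
```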

It is also worth considering the joint distribution of the parameters. From the graphs above, we see that favorites play a special role for tutorials, so we will give them special attention in the article.

(obtained using the script joint_favours_score_view.R on all.csv)

The graph above shows the general trend in the data: for tutorials, one plus corresponds on average to several additions to favorites. The median ratio of favorites to pluses is 2.6 times higher for tutorials than for regular posts, and the median number of favorites per view is 2.7 times higher than for regular posts.
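One way to compute such a comparison is sketched below in Python. It assumes that the quoted figures are medians of per-article ratios (favorites per plus, favorites per view), compared between the two classes; the original R script may define them differently. Function names and toy rows are hypothetical.

```python
from statistics import median


def median_ratio(articles, numerator, denominator):
    """Median of numerator/denominator over articles with a positive denominator."""
    ratios = [a[numerator] / a[denominator]
              for a in articles if a[denominator] > 0]
    return median(ratios)


def tutorial_vs_regular(articles, numerator, denominator):
    """How many times the median ratio of tutorials exceeds that of regular posts."""
    tutorials = [a for a in articles if a["isTutorial"] == 1]
    regular = [a for a in articles if a["isTutorial"] == 0]
    return (median_ratio(tutorials, numerator, denominator)
            / median_ratio(regular, numerator, denominator))


# Hypothetical toy data
toy = [
    {"favors": 100, "score": 10, "isTutorial": 1},
    {"favors": 60, "score": 20, "isTutorial": 1},
    {"favors": 90, "score": 15, "isTutorial": 1},
    {"favors": 20, "score": 10, "isTutorial": 0},
    {"favors": 30, "score": 10, "isTutorial": 0},
    {"favors": 40, "score": 40, "isTutorial": 0},
]
print(tutorial_vs_regular(toy, "favors", "score"))   # 3.0
```

Posts with a zero or negative score are excluded here to avoid division by zero; that filtering is a design choice of the sketch, not something stated in the article.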

This lets us outline the region of interest where most of the unlabeled tutorials are probably located: the blue dots near the upper boundary of the red area. We can form queries against our data and test this guess. It may also be possible to derive rules that automatically "correct" the label or remind authors about it.

(The distributions are cut off in the graphs; a very small number of points fall outside the displayed limits. Including these points would stretch the scale and make the remaining points practically indistinguishable.)

Examining interesting examples

In this part we look at some typical examples found in the data. They will help us better understand potential patterns in the articles and their properties.

Many favorites, few pluses

Query:

Query in R

query1 <- subset(data, favors > 1000 & score < 50 & isTutorial == FALSE)

Result:

As we can see, the first article is a pure tutorial without a label, and the second one can also claim to be a tutorial. The graphs above show the following trend: in all distributions, tutorials on average gain fewer pluses and many more favorites than regular articles. Combined with the fact that a large number of tutorials for some reason carry no label, this suggests that an article with few pluses and many additions to favorites has a good chance of being a tutorial.

Ten times more favorites than pluses (and more than 25 pluses)

Query:

Query in R

query2 <- subset(data, favors > 10*score & score > 25)

Result:

This is a slightly more complicated version of the previous query: we are looking for articles where favorites exceed pluses by a factor of ten and the number of pluses is greater than 25. Under these conditions we find articles that a large number of people have saved, which may indicate that the article will be useful in the future and is therefore a good candidate for a tutorial.

The drawback of this query is that half of the tutorials gain 18 pluses or fewer, so this rule cuts off a large number of potential tutorials.

The queries are in the script queries.R; the dataset is all.csv.
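For readers who prefer Python, the two R queries above can be expressed as simple list filters. This is an illustrative port rather than the author's code; it assumes the articles are dicts with integer fields favors, score, and isTutorial, and the sample rows are invented.

```python
def many_favorites_few_pluses(articles):
    """Port of query1: favors > 1000, score < 50, not labeled as a tutorial."""
    return [a for a in articles
            if a["favors"] > 1000 and a["score"] < 50 and a["isTutorial"] == 0]


def favorites_ten_times_pluses(articles):
    """Port of query2: favors > 10 * score and score > 25."""
    return [a for a in articles
            if a["favors"] > 10 * a["score"] and a["score"] > 25]


# Hypothetical rows
data = [
    {"id": "a1", "favors": 1500, "score": 30, "isTutorial": 0},
    {"id": "a2", "favors": 1200, "score": 40, "isTutorial": 1},
    {"id": "a3", "favors": 100, "score": 60, "isTutorial": 0},
    {"id": "a4", "favors": 300, "score": 20, "isTutorial": 0},
]
print([a["id"] for a in many_favorites_few_pluses(data)])    # ['a1']
print([a["id"] for a in favorites_ten_times_pluses(data)])   # ['a1', 'a2']
```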

Predicting the tutorial label

The tutorial label of an article corresponds to the binary attribute isTutorial. So we can formulate the task of determining the tutorial label from the parameters score, views, and favourites as a search for some predicate f (a function that returns 1 or 0) such that for the entire data set f(score, views, favourites) = isTutorial.

(the author admits that he is simplifying everything here and is actively waving his hands, but this should give the general reader an idea of what has to be done)

In fact, classical machine learning methods (such as SVM, Random Forest, recursive partitioning trees, etc.) will not give good results on the collected data, for the following reasons:

- An extremely poor feature space: only three parameters, which do not separate tutorials from regular posts well (more on this below)

- A significant number of articles that are not marked as tutorials but in fact are tutorials. See the first query and the article "Setting up a Nginx + LAMP server at home": it is a classic tutorial, yet it is marked as a regular post!

- The subjectivity of the label itself: its presence or absence is largely determined by the author's opinion alone

What can we do in this situation? From the existing data we can try to derive some sufficient conditions and check how well they hold on that data. If the rules match the data, then by induction we can create a rule that finds and marks tutorials without a label. Simple, interpretable, and tasteful.

For example, the following rule (favorites at least ten times the score and at least 1% of the views) agrees well with the data and would allow some labels to be revised (given for the sake of example only)

and the first entries in the result:

Query in R

query3 <- subset(data, favors >= 10*score & favors >= views/100)

As you can see, although most of the entries have no tutorial label, the articles actually are tutorials (despite the small score values in the first 6 entries; note, however, that more than half of the tutorials have fewer than 18 pluses). Thus we can carry out so-called co-training: from a small amount of labeled data we derive rules that let us label the remaining data and create conditions for applying classical machine learning methods.

But no matter how clever the learning algorithms we use, the main problem in classifying tutorials is the proper construction of the feature space, i.e. mapping an article to a vector of numerical or nominal variables (a nominal variable takes one of a finite number of values; for example, a color can be blue, red, or green).
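The labeling step described above can be sketched in Python as follows. The sketch reads the rule from query3 as two conditions joined by AND, which is an assumption about the author's intent, and the field name suggestedTutorial and the sample rows are hypothetical.

```python
def looks_like_tutorial(article):
    """Rule from query3, read as: favors >= 10 * score AND favors >= views / 100."""
    return (article["favors"] >= 10 * article["score"]
            and article["favors"] >= article["views"] / 100)


def suggest_labels(articles):
    """Return copies of the articles with a 'suggestedTutorial' flag.

    Existing labels are kept; unlabeled posts matching the rule are flagged."""
    suggested = []
    for a in articles:
        flagged = a["isTutorial"] == 1 or looks_like_tutorial(a)
        suggested.append(dict(a, suggestedTutorial=int(flagged)))
    return suggested


# Hypothetical rows
posts = [
    {"id": "x", "favors": 200, "score": 15, "views": 15000, "isTutorial": 0},
    {"id": "y", "favors": 50, "score": 20, "views": 4000, "isTutorial": 0},
    {"id": "z", "favors": 30, "score": 10, "views": 9000, "isTutorial": 1},
]
print([p["suggestedTutorial"] for p in suggest_labels(posts)])   # [1, 0, 1]
```

The enlarged label set could then serve as training data for a conventional classifier, with the caveat that errors of the rule propagate into the labels.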

How to make the data set better

The collected data set is far from ideal, so it is best to start criticizing it ourselves before others do. Certainly, views, rating, and favorites alone cannot unambiguously predict whether an article is a tutorial. However, we need a rule, or rather a classifier, that works reasonably accurately. To get there, consider a few typical features of articles that may be useful.

Consider the first example:

What is striking is that many tutorials contain images, often in large quantities. Images can also be found in regular posts, but in combination with other parameters this can be a valuable attribute. It is also worth keeping in mind that some hubs are thematically much better suited for tutorials than others; perhaps this should be taken into account as well.

Consider the second example:

Here the most important things are the presence of code and structure. It is natural to assume that these factors discriminate well between the classes, so in principle they can be included in the model. We can also introduce a parameter such as the presence of tutorials among similar posts.

Hypothetically, this would give us a new dataset (and a new parameter space for classification) that could separate the classes of articles better.

The feature space for classification can actually be huge: the number of comments, the presence of a video, keywords in the title, and many others. The choice of this space is key to building a successful classifier.
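As an illustration of mapping an article to a feature vector, the following Python sketch counts a few of the attributes mentioned above using only the standard library. The tag choices (img, code, iframe, video), the keyword list, and all names are assumptions made for the example; the real Habr markup may require different selectors.

```python
from html.parser import HTMLParser


class FeatureCounter(HTMLParser):
    """Counts tags that may hint at tutorial-like content."""

    def __init__(self):
        super().__init__()
        self.counts = {"img": 0, "code": 0, "iframe": 0, "video": 0}

    def handle_starttag(self, tag, attrs):
        # Tag names arrive lowercased; self-closing tags also end up here
        if tag in self.counts:
            self.counts[tag] += 1


def extract_features(title, body_html, keywords=("how to", "guide", "tutorial")):
    """Map an article (title and HTML body) to a small dict of features."""
    counter = FeatureCounter()
    counter.feed(body_html)
    counter.close()
    lowered = title.lower()
    return {
        "n_images": counter.counts["img"],
        "n_code_blocks": counter.counts["code"],
        "has_video": int(counter.counts["iframe"] + counter.counts["video"] > 0),
        "title_has_keyword": int(any(k in lowered for k in keywords)),
    }


# Hypothetical article fragment
html = '<p>Intro</p><img src="a.png"><img src="b.png"/><code>x = 1</code>'
features = extract_features("How to set up Nginx", html)
print(features)
```

Such a dict can be joined with score, views, and favors to form the extended parameter space discussed above.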

Why use only a recent slice of articles

Why not use all the available Habr articles for the estimate? Wouldn't that increase the accuracy of the classifier? Why do we take only a recent slice? The width of the window does need to be chosen wisely, and this may require additional analysis.

But it is impossible to sample from all articles over the entire life of the resource, for the following reason: the resource is constantly evolving, and the characteristics of the articles change accordingly. If we look at the very first articles, e.g. 171, 2120, 18709, we will see that their characteristics differ significantly, and they should no longer be included in a representative sample of current articles, because we do not expect such parameters in new articles on average. This is largely because the audience has changed, the articles themselves have changed, and the channels for distributing articles on the Internet have changed.

Conclusion

We reviewed and analyzed the most basic parameters of articles. The idea that the "tutorial" label can be predicted automatically showed us how to extend the data set and in which direction to look. We also examined the main differences between regular posts and tutorials in terms of views, favorites, and rating. We found that the main difference is a substantially larger number of additions to favorites, and we gave numerical estimates of this difference.

Only 2.8% of the articles in the all-time top are labeled as tutorials, while tutorials make up 9.1% of all articles published since the label was introduced (September 27, 2012). A possible reason is that a large share of the material reached the top before the label appeared, or that the label was not yet widely used right after its introduction. This hypothesis is supported by the fact that the share of tutorials in the weekly and monthly tops practically does not differ from their share among all posts (8.1% for the month and 7.8% for the week; relativeFractionOfTutorials.R).

Perhaps, with the extended data set, we will be able to predict the label quite effectively (using various machine learning methods) and tell the author: "You may have forgotten the tutorial label." This task is interesting primarily because it would make it possible to build a complete list of interesting tutorials that can be sorted or evaluated by parameters other than pluses, e.g. by the number of people who added an article to their favorites.

Further reading

If the topic of data analysis seems interesting, below is a list of useful material.

- Udacity - Exploratory Data Analysis and Data Science

- Caltech - Learning from Data

- Coursera - Data Science Track (just started!)

- If you live in St. Petersburg, you can take courses at DMLabs

- If you live in Moscow, then you probably already heard about the SHAD

Source: https://habr.com/ru/post/218607/
