Software for editing and assembling non-linear interactive cinema

Hello colleagues.

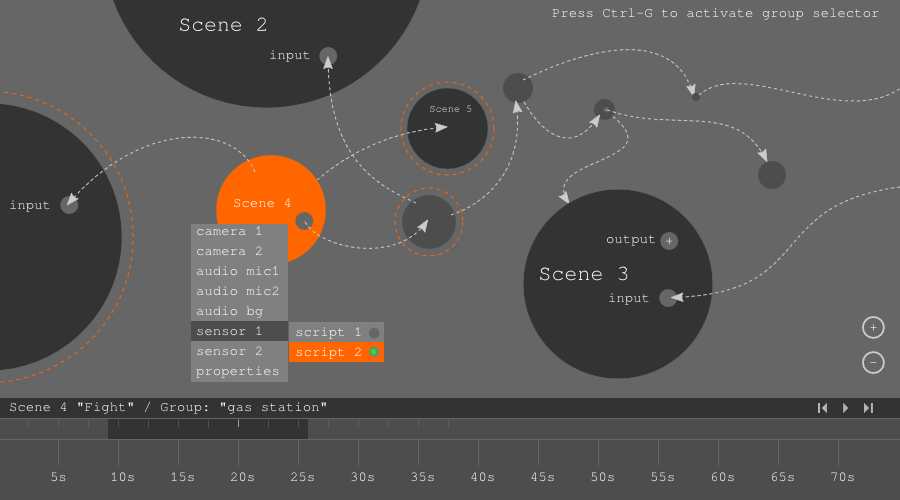

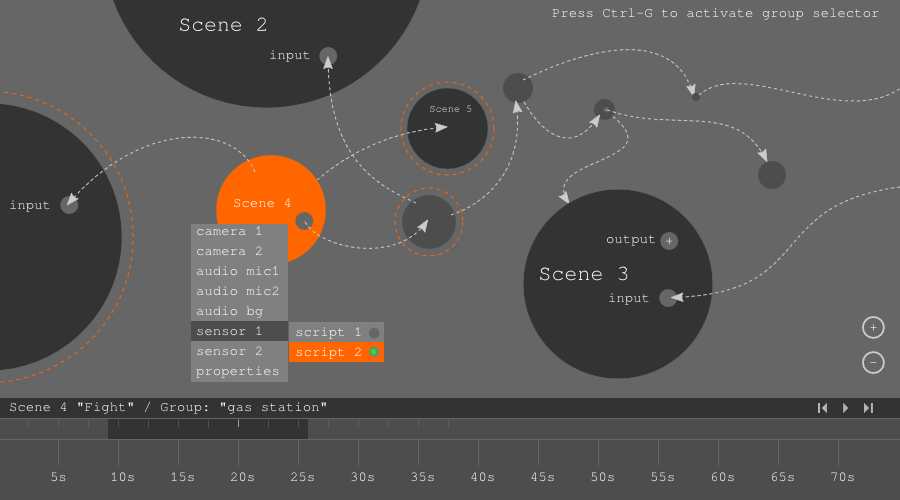

Lead image 1. Interface for working with nodes (scenes). Linking scenes and binding events.

I have long wanted to make an interactive film. Not in the sense of Činčera’s Kinoautomat, and not a “quest”-type game with an explicit cinematic framing and an extensive narrative (press 3 if you want the heroine to stay, or 4 to let her go). Let me try to explain what I mean.


Imagine a location, or a set of locations, in which parallel action unfolds. Now imagine that you can move around a map of these locations and observe different events. That is, in a sense this is interactive parallel montage. Beyond simple switching and beyond a free plot outline (or a set of parallel plot lines), the structure of the film also provides additional connections, which are defined by the author and which themselves serve as an expressive means alongside the mise-en-scène, the editing rhythm, the lighting design, the sound, the plot itself (plots, in our case), and so on.

I decided to approach this thoroughly, and here is what I plan to do. I want to build an editing tool for creating interactive non-linear films. The tool will be released under an open source license. Below I briefly outline the main features I intend to implement.

TOOLKIT FOR EDITING INTERACTIVE NONLINEAR FILM

Editing module:

Pay attention to two essential aspects:

1. In this variant, the film may lack the concept of general timing, for example, if the connection graph of all nodes (microscene) is closed (see sketches). Those. all the plots can be looped, for example, or linked in some more sophisticated way.

2. It is not that the viewer presses the buttons or even has any special additional action in order to move around the space of the film. Instead, the movement is tied to external sources and embedded in the logic and topology of the links between the microscenes. Those. for example, eye movement or movement sensors or some additional factors thought up by the author can influence which scenes will be “mounted”. In this case, of course, all the locations and all the chronological logic should also be available for a direct transition. The latter can be implemented at the level of "meta-interface" for access to scenes. Those. some conventional volume "map" of the film.

Module classification and selection of source materials:

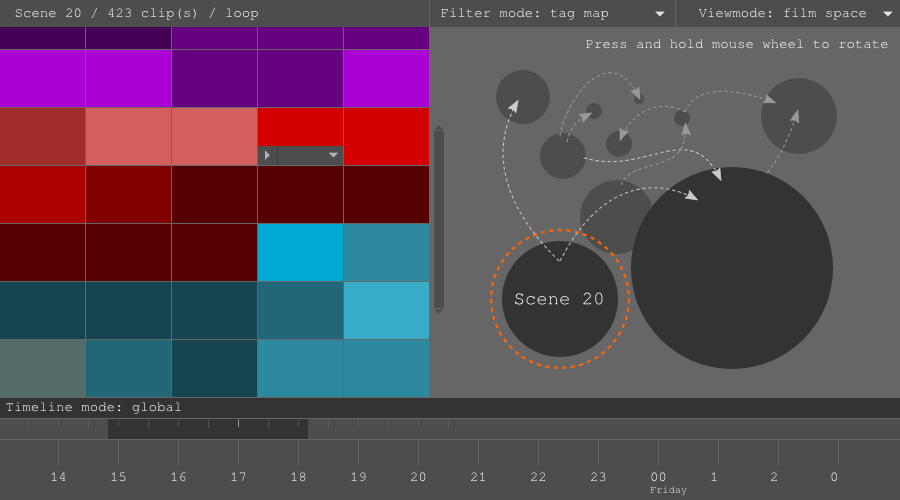

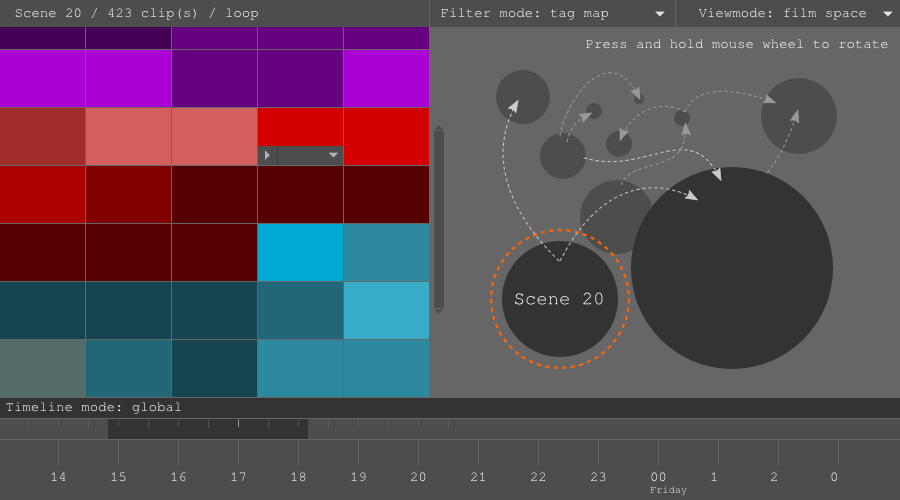

Sketch 2. Interface for working with source media material.

Since interactive cinema implies a deliberately larger amount of complexly structured source material, the presence of such a special module is likely to be required.

a) the possibility of splitting into frames in relation to:

● locations

● filming date or period

● chronological sequence

b) integration into scenes according to the same parameters

c) the ability to set additional algorithmic parameters

● by color dominant

● sound environment (sound level)

● by additional parameters (possibly recorded sensor data, for example)

● by moving the light in the frame

● by motion in the frame

d) simple ability to tag frames and scenes by additional parameters manually

And, obviously, for all this you need some kind of "player". As a first approximation, I think it could be ready for installation software, providing a web interface and providing multi-streaming + utility to run standalone with a config for setting up a media environment.

SCOPE OF APPLICATION

First of all, it should be immediately noted that this is an experimental idea. In this regard, I would like to focus not so much on the supposed artistic merits and demerits, but on technical and semantic implementation.

Obviously, such a venture is unsuitable for demonstration in the cinema. The most promising format is, apparently, individual or club viewing. And here, in some perspective, all the power of modern means of “immersion in virtual reality” can be involved. Therefore, in particular, I have included in the description the possibility of using all kinds of non-standard sources and media.

Actually I would like to hear the views of the community and discuss.

And if it suddenly happens that among the readers there is still someone from the coders of film-video experimenters who wants to take part in the venture, I will no doubt be glad of any cooperation. The project will be implemented under an open source GPL or similar license, open source. Estimated developer framework: Qt, C ++, cross-platform (Linux, Mac, Win).

KDPV 1. Interface for working with nodes (scenes). Linking and linking events.

I have a long-standing idea to make an interactive movie. Not in the sense of Chinocher’s Kinoautomat and not in the type of “quest” with explicit cinematic outline and extensive narrative (press 3 if you want the heroine to stay, or 4 to let the heroine go) . I'll try to tell you what I mean.

')

Imagine that you have a certain location or set of locations in which a parallel action unfolds. Now imagine that you can navigate within a certain location map and observe different events. Those. this is in some sense an interactive parallel installation. At the same time, apart from simple switching and apart from the free plot canvas (or a set of parallel plot lines), additional connections are provided in the film structure, which are set by the author and which themselves are an additional expressive means along with the stage scene, the editing rhythm, the lighting solution, the sound, the plot itself (plots in our case), etc.

In general, I decided to approach this fundamentally and this is what I think in this connection to undertake. I want to make a editing tool for creating an interactive non-linear movie. This tool will be under an open source license. Below I will try to briefly outline the principal opportunities that I am going to realize.

TOOLS FOR INSTALLATION OF INTERACTIVE NONLINEAR MOVIE

Mounting module:

- editing microscenes in the usual linear manner

- the ability to include non-standard media in scenes (for example, data from sensors)

- multi-timeline (multiple independent timelines for each microscene, the ability to link two or more timelines and to align parallel events)

- the ability to combine independent timelines and microscenes

- linking microscenes into a graph (i.e., the ability to define the scene-linking logic for branching)

- branching on bindings or events coming from non-standard media

- meta-cuts (forced editing effects when switching from one microscene to another, so that you can, for example, make a multi-frame)

- looped editing

- algorithmic switching of sources (cameras, sound sources, sensors) within a single scene (i.e., editing branches inside a microscene)
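The linking options in the list above can be pictured as a directed graph whose edges are optionally bound to events. What follows is only a minimal sketch of that idea, written in Python for brevity rather than in the C++/Qt planned for the project. The names `Microscene`, `SceneGraph`, `next_scene` and the event `gaze_at_window` are invented for illustration and are not part of any existing design.

```python
from dataclasses import dataclass, field


@dataclass
class Microscene:
    name: str
    # Outgoing links: event name -> target scene name.
    # The key None stands for the default (plain linear) link.
    links: dict = field(default_factory=dict)


class SceneGraph:
    def __init__(self):
        self.scenes = {}

    def add_scene(self, name):
        self.scenes[name] = Microscene(name)

    def link(self, source, target, event=None):
        if source not in self.scenes or target not in self.scenes:
            raise KeyError("both scenes must be added before linking")
        self.scenes[source].links[event] = target

    def next_scene(self, current, event=None):
        links = self.scenes[current].links
        # Prefer a link bound to the event, otherwise fall back to the default link.
        return links.get(event, links.get(None))


# Hypothetical three-scene film.
graph = SceneGraph()
for name in ("kitchen", "corridor", "street"):
    graph.add_scene(name)
graph.link("kitchen", "corridor")                        # linear continuation
graph.link("kitchen", "street", event="gaze_at_window")  # branch on a sensor event
graph.link("corridor", "kitchen")                        # closes a loop
```

In this sketch an unbound link plays the role of ordinary linear editing, an event-bound link is a branch, and the link from `corridor` back to `kitchen` forms a loop.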

Note two essential aspects:

1. In this approach the film may have no overall running time, for example if the graph connecting all nodes (microscenes) is closed (see sketches). That is, all plot lines can be looped, or linked in some more sophisticated way.

2. The viewer is not expected to press buttons or perform any special action in order to move through the space of the film. Instead, movement is tied to external sources and built into the logic and topology of the links between microscenes. For example, eye movement, motion sensors or other factors devised by the author can influence which scenes get “edited in”. Naturally, all locations and the whole chronology should also be reachable by direct transition. The latter can be implemented as a "meta-interface" for accessing scenes, i.e. a kind of notional three-dimensional "map" of the film.
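The first point can be made concrete: a film has a finite maximum running time only when no loop is reachable in its scene graph. Below is a rough sketch in Python, assuming each microscene has a fixed duration in seconds and the links are given as a plain dictionary. The function name, scene names and durations are all hypothetical.

```python
def total_running_time(durations, links, start):
    """Longest possible running time from `start`, or None if a loop makes it unbounded."""
    IN_PROGRESS, DONE = 1, 2
    state = {}
    longest = {}

    def visit(scene):
        if state.get(scene) == IN_PROGRESS:
            return None  # we came back to a scene still being explored: a loop
        if state.get(scene) == DONE:
            return longest[scene]
        state[scene] = IN_PROGRESS
        best_tail = 0.0
        for target in links.get(scene, []):
            tail = visit(target)
            if tail is None:
                return None
            best_tail = max(best_tail, tail)
        state[scene] = DONE
        longest[scene] = durations[scene] + best_tail
        return longest[scene]

    return visit(start)


durations = {"a": 10.0, "b": 20.0, "c": 5.0}
open_film = {"a": ["b", "c"], "b": ["c"]}   # branches, but no loops
closed_film = {"a": ["b"], "b": ["a"]}      # two scenes looping forever

open_time = total_running_time(durations, open_film, "a")
closed_time = total_running_time(durations, closed_film, "a")
```

For the open graph the longest path is `a`, `b`, `c`, so `open_time` is 35.0 seconds. For the closed graph the function returns `None`, which is the case where the notion of overall timing disappears.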

Module classification and selection of source materials:

Sketch 2. Interface for working with source media material.

Since interactive cinema inevitably involves a much larger volume of intricately structured source material, a dedicated module of this kind will most likely be needed.

a) the ability to split material into shots by:

● location

● filming date or period

● chronological sequence

b) grouping into scenes by the same parameters

c) the ability to define additional, algorithmically derived parameters:

● dominant color

● sound environment (sound level)

● additional parameters (for example, recorded sensor data)

● movement of light in the frame

● motion in the frame

d) a simple way to tag shots and scenes manually with additional parameters
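The classification criteria above could map onto a small record per shot plus a filter function. This is a sketch only, in Python for brevity. The `Shot` fields, the `select` function, the `sound_level` metric and the sample files and dates are assumptions made up for the example, not a proposed schema.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Shot:
    file: str
    location: str
    filmed_on: date
    story_order: int                              # position in the chronology
    metrics: dict = field(default_factory=dict)   # algorithmically derived values
    tags: set = field(default_factory=set)        # manual tags


def select(shots, location=None, tag=None, metric=None, at_least=0.0):
    """Filter shots by the given criteria and return them in chronological order."""
    result = []
    for shot in shots:
        if location is not None and shot.location != location:
            continue
        if tag is not None and tag not in shot.tags:
            continue
        if metric is not None and shot.metrics.get(metric, 0.0) < at_least:
            continue
        result.append(shot)
    return sorted(result, key=lambda s: s.story_order)


# Hypothetical library of three shots.
library = [
    Shot("k1.mov", "kitchen", date(2014, 3, 1), 2, {"sound_level": 0.7}, {"argument"}),
    Shot("k2.mov", "kitchen", date(2014, 3, 1), 1, {"sound_level": 0.2}, set()),
    Shot("s1.mov", "street", date(2014, 3, 2), 3, {"sound_level": 0.9}, {"argument"}),
]

loud_kitchen = select(library, location="kitchen", metric="sound_level", at_least=0.5)
```

Keeping derived metrics and manual tags in separate fields mirrors the split between points c) and d): the former would be filled in by analysis passes, the latter by the editor.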

And, obviously, all this needs some kind of "player". As a first approximation, I think it could be ready-to-install software that provides a web interface and multi-streaming, plus a utility for running standalone with a config file that sets up the media environment.
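To give a feel for what such a config might contain, here is a purely illustrative sketch in Python that loads and sanity-checks a JSON file. The format, every key name (`film`, `web_port`, `streams`, `sensors`) and the file name `film.graph` are assumptions invented for this example; nothing about the real player's configuration has been decided.

```python
import json

# Hypothetical set of top-level keys the player would expect.
REQUIRED_KEYS = ("film", "web_port", "streams", "sensors")


def load_config(text):
    """Parse a JSON config and check that the expected sections are present."""
    config = json.loads(text)
    missing = [key for key in REQUIRED_KEYS if key not in config]
    if missing:
        raise ValueError("missing config keys: " + ", ".join(missing))
    if not config["streams"]:
        raise ValueError("at least one output stream is required")
    return config


EXAMPLE = """
{
  "film": "film.graph",
  "web_port": 8080,
  "streams": [
    {"name": "main_screen", "kind": "video"},
    {"name": "room_audio", "kind": "audio"}
  ],
  "sensors": [
    {"name": "eye_tracker", "events": ["gaze_at_window"]}
  ]
}
"""

config = load_config(EXAMPLE)
```

The idea is that one file describes both the outputs (several parallel streams) and the inputs (sensors whose events can drive scene switching), so the same film can be set up for different rooms or devices.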

SCOPE OF APPLICATION

First of all, I should note right away that this is an experimental idea. I would therefore like to focus not so much on its supposed artistic merits and shortcomings as on the technical and semantic implementation.

Obviously, such a venture is unsuitable for screening in a cinema. The most promising format appears to be individual or club viewing. And here, in the longer term, the full power of modern “virtual reality immersion” technology could be brought in. That is why, in particular, I have included in the description the possibility of using all kinds of non-standard sources and media.

I would very much like to hear the community's views and discuss them.

And if it turns out that among the readers there is a coder or film/video experimenter who wants to take part in the venture, I will certainly be glad of any cooperation. The project will be released under the GPL or a similar open source license. Planned development stack: Qt, C++, cross-platform (Linux, Mac, Win).

Source: https://habr.com/ru/post/217999/
