SSDs: varieties and prospects

Everyone remembers when the first truly mass-market SSDs appeared: enthusiasm, rapid growth, impressive performance in the tens of thousands of IOPS. Almost an idyll.

For servers (we do not consider desktop computers here), this was a huge step forward: magnetic media had long been the bottleneck in building high-performance solutions. Several cabinets full of disks delivering two or three thousand IOPS in total were considered normal, and suddenly a single drive offered a hundredfold or greater performance increase (compared to a 15K RPM SAS drive).
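To see where the "hundredfold" figure comes from, here is a rough estimate of a spindle's random IOPS from its rotational speed. It is a back-of-the-envelope sketch, not a benchmark: the 3.5 ms average seek time is an assumed typical value for a 15K RPM SAS drive, transfer time is ignored, and the 30K IOPS SSD figure is simply a round number.

```python
def spindle_random_iops(rpm: float, avg_seek_ms: float) -> float:
    """Rough random IOPS of one spindle: 1 / (average seek + average rotational latency)."""
    # On average the head waits half a revolution for the target sector to arrive.
    rotational_latency_ms = 0.5 * 60_000 / rpm
    service_time_ms = avg_seek_ms + rotational_latency_ms
    return 1000 / service_time_ms


# Assumed figures: ~3.5 ms average seek for a 15K RPM SAS drive, 30K IOPS for an SSD.
hdd_iops = spindle_random_iops(rpm=15_000, avg_seek_ms=3.5)
ssd_iops = 30_000
print(f"15K SAS: ~{hdd_iops:.0f} IOPS; SSD advantage: ~{ssd_iops / hdd_iops:.0f}x")
```

Under these assumptions one spindle manages roughly 180 random IOPS, which is consistent with whole cabinets of disks adding up to only a few thousand.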

There was a sea of optimism, but in reality things turned out to be far from smooth.


There were compatibility issues, endurance problems (when drives from the cheapest consumer lines ended up in servers), and performance degradation; the question of TRIM support in RAID controllers is still being raised.

SSD technology developed in stages. At first, everyone worked on sequential throughput to reach the interface limit. With SATA II this happened almost immediately; saturating SATA III took some time. The next step was random-access performance, and here too substantial gains were achieved.
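Reaching the interface limit means hitting its payload ceiling. SATA II and SATA III signal at 3 and 6 Gbit/s and use 8b/10b encoding, so only 8 of every 10 bits on the wire carry data. A small sketch of that arithmetic; protocol overhead, which lowers real-world figures further, is not modeled.

```python
def usable_bandwidth_mb_s(line_rate_gbit_s: float) -> float:
    """Payload bandwidth of a link using 8b/10b encoding (8 data bits per 10 line bits)."""
    payload_bits_per_s = line_rate_gbit_s * 1e9 * 8 / 10
    return payload_bits_per_s / 8 / 1e6  # bits -> bytes -> decimal MB/s


for name, rate in [("SATA II", 3.0), ("SATA III", 6.0)]:
    print(f"{name}: {usable_bandwidth_mb_s(rate):.0f} MB/s before protocol overhead")
```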

The next issue to draw attention was performance consistency:

Taken from the AnandTech review.

The average is, of course, high, but the swings from 30K IOPS down to a couple dozen IOPS are severe, whereas a spindle delivers its performance steadily.
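One simple way to put a number on such swings is the largest deviation of any sample from the window average. The sketch below uses that definition (our assumption; reviews may define spread differently), and the per-second IOPS samples are made up for illustration.

```python
from statistics import mean


def relative_spread(samples: list[float]) -> float:
    """Largest deviation of any sample from the window average, as a fraction of that average."""
    avg = mean(samples)
    return max(abs(s - avg) for s in samples) / avg


# Made-up per-second IOPS samples from the tail of a long random-write test.
consistent = [31_000, 33_500, 29_800, 32_200, 30_500]
erratic = [30_000, 25, 28_000, 40, 29_500]

for name, window in [("consistent", consistent), ("erratic", erratic)]:
    spread = relative_spread(window)
    print(f"{name}: max deviation {spread:.0%} of the average, max/min {max(window) / min(window):.0f}x")
```

The erratic drive has a respectable average, yet individual seconds deviate from it by almost 100%, which is exactly what a RAID controller or latency-sensitive application feels.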

The first to make a loud point of this was Intel, with its DC S3700 line.

Taken from the AnandTech review.

If you zoom into the right side of the graph, the spread stays within 20%. Why does this matter?

- A drive behaves much more predictably in a RAID array: the controller's job is much easier when all members of the array have roughly the same performance. Very few people would think of building an array from a mix of 7.2K and 15K disks, and an array of SSDs whose instantaneous performance varies a hundredfold is even worse.

- Applications that need to read or write random data quickly and consistently behave more predictably.

SAS drives based on SLC (Single Level Cell) memory appeared quite a long time ago, with astronomical prices and almost unlimited endurance. Naturally, they were designed to work in storage systems, where dual-port access is a mandatory requirement for a drive. Over time, more affordable products based on eMLC memory appeared. Their endurance is lower, of course, but still very impressive thanks to a large reserve of memory that is inaccessible to the user.
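That reserve is commonly expressed as over-provisioning: spare flash relative to user-visible capacity. A minimal sketch of the calculation; the 256 GiB raw / 240 GB and 256 GiB raw / 200 GB configurations are hypothetical, not the specifications of any particular drive.

```python
GIB = 2**30  # binary gigabyte, used here for raw NAND capacity
GB = 10**9   # decimal gigabyte, used here for advertised user capacity


def over_provisioning_pct(raw_bytes: int, user_bytes: int) -> float:
    """Spare flash as a percentage of user-visible capacity."""
    return (raw_bytes - user_bytes) / user_bytes * 100


# Hypothetical configurations built on the same 256 GiB of raw NAND.
for user_gb in (240, 200):
    print(f"{user_gb} GB usable: {over_provisioning_pct(256 * GIB, user_gb * GB):.1f}% spare")
```

Exposing less of the same raw flash to the user is what buys the extra endurance, at the cost of a higher price per usable gigabyte.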

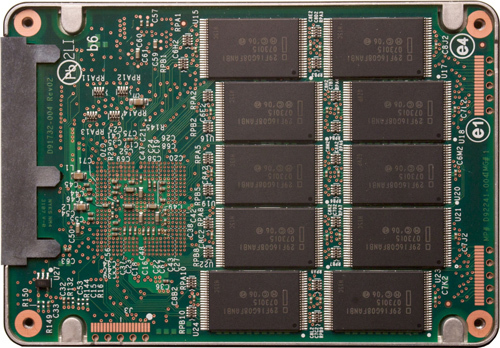

An example of a modern SAS SSD drive

Because these drives were designed for enterprise systems from the start, their performance consistency was excellent right away. Since hard-drive testing approaches hardly apply to SSDs, the Storage Networking Industry Association (SNIA) industry consortium developed the Solid State Storage Performance Test Specification (PTS). Its main feature is that the drive is first “preconditioned”: the goal is to fill all of the available flash, because a smart controller writes data not only within the user-visible capacity but spreads it across all available memory. To obtain results that reflect a drive in a real environment after long continuous operation, the synthetic test must deny it access to “fresh” memory that has never held data. Only then does the actual testing begin:

Random read

Random write

On random writes, 12G SAS shows a significant advantage, but the CPU load for processing the I/O stream increases twofold or more.
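The preconditioning procedure described above ends once the drive reaches steady state. The sketch below is modeled on our understanding of the SNIA PTS criteria: a window of five consecutive test rounds counts as steady when the range of measured values stays within 20% of the window average and the rise or fall of a least-squares line fitted through the window stays within 10% of that average. Treat the thresholds as assumptions and consult the specification for the authoritative definition; the per-round IOPS values are invented.

```python
from statistics import mean
from typing import Optional


def is_steady_state(window: list[float], max_range: float = 0.20, max_fit_range: float = 0.10) -> bool:
    """Steady-state test modeled on the SNIA PTS criteria (thresholds are assumptions)."""
    avg = mean(window)
    # 1) Data excursion: max - min of the measured values relative to their average.
    if max(window) - min(window) > max_range * avg:
        return False
    # 2) Least-squares line through (round index, value); its rise or fall across
    #    the window must also stay small relative to the average.
    xs = list(range(len(window)))
    x_avg = mean(xs)
    slope = sum((x - x_avg) * (y - avg) for x, y in zip(xs, window)) / sum(
        (x - x_avg) ** 2 for x in xs
    )
    return abs(slope) * (len(window) - 1) <= max_fit_range * avg


def first_steady_round(rounds: list[float], window_size: int = 5) -> Optional[int]:
    """Number of completed rounds when the last `window_size` rounds first look steady."""
    for end in range(window_size, len(rounds) + 1):
        if is_steady_state(rounds[end - window_size:end]):
            return end
    return None


# Invented IOPS per test round: fast while fresh flash lasts, then settling down.
rounds = [80_000, 62_000, 41_000, 30_000, 27_000, 26_000, 25_500, 25_800, 25_300, 25_600]
print(first_steady_round(rounds))  # 9
```

The slope check matters: the window ending at round 8 already passes the 20% range test but is still trending downward, so measurement would start too early without it.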

The current state of the SAS/SATA SSD market

Drives fall into several groups, each of which is successfully used for particular tasks.

- Consumer drives for read-oriented tasks. An extremely popular option at large Russian internet companies that use software RAID. This group also includes drives such as the Toshiba HG5d, which are positioned for entry-level enterprise workloads (they are excellent for OS installation or read-mostly tasks). They last a long time under such loads and cost little; what more could you want?

- Enterprise drives rated for 1-3 full drive writes per day. Positioned for storage with a small share of writes, read-intensive workloads, or read caching. They work well with RAID controllers; some are built for storage systems and have a SAS interface; the drive cache is always protected by capacitors. Only slightly more expensive than the first group.

- Drives rated for 10 full drive writes per day. A universal workhorse in servers (where SATA drives are primarily used) and in storage systems. Noticeably more expensive than the first group.

- Drives rated for 25 full drive writes per day. The fastest and most expensive; the large amount of reserve memory drives up the price per gigabyte of usable capacity.
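Choosing between these groups starts with estimating how many full drive writes per day a workload actually generates. A rough sketch: the class ratings mirror the list above, while the 1 DWPD ceiling for the first group and the 1.5x safety margin are our own assumptions, and `pick_class` is a hypothetical helper.

```python
from typing import Optional

# Endurance classes from the list above; the 1 DWPD ceiling for the first
# (read-oriented) group is our assumption, the others are the quoted ratings.
ENDURANCE_CLASSES = [
    (1.0, "read-oriented"),
    (3.0, "enterprise, 1-3 DWPD"),
    (10.0, "10 DWPD"),
    (25.0, "25 DWPD"),
]


def required_dwpd(daily_writes_gb: float, capacity_gb: float) -> float:
    """Full drive writes per day that the workload generates."""
    return daily_writes_gb / capacity_gb


def pick_class(daily_writes_gb: float, capacity_gb: float, margin: float = 1.5) -> Optional[str]:
    """Cheapest class whose rating covers the workload plus a hypothetical safety margin."""
    need = required_dwpd(daily_writes_gb, capacity_gb) * margin
    for rating, name in ENDURANCE_CLASSES:
        if need <= rating:
            return name
    return None  # beyond every class listed above


print(pick_class(daily_writes_gb=200, capacity_gb=800))   # read-oriented
print(pick_class(daily_writes_gb=2000, capacity_gb=800))  # 10 DWPD
```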

Now let's talk about SSDs in unusual form factors: unlike magnetic platters, flash can be packaged however you like.

SATA SSD in DIMM format

Thanks to the growing capacity of memory modules and Intel's and AMD's efforts to increase the number of modules supported per processor, few servers have every slot on the board populated.

In our experience, even 16 memory modules in a server are not very common, while the RS130/230 G4 models offer 24 slots.

Lots and lots of memory

When such a large part of a platform's capabilities sits idle, it is genuinely frustrating.

What can be done about it?

Empty slots can hold SSDs!

For example, these:

SSD in DIMM format

We have currently validated several such drives, with capacities of up to 200GB on SLC memory and 480GB on MLC/eMLC.

Technically, this is a conventional SSD based on the SandForce SF-2281 controller, familiar from many 2.5" drives and quite popular in inexpensive drives for read-dominated tasks (the first group above). The interface is standard SATA; only power is drawn from the memory slot. It uses Toshiba flash (MLC NAND, Toggle Mode 2.0, 19nm) TH58TEG8DDJBA8C rated for 3K P/E cycles, with a total capacity of 256 gigabytes. The specified bit error rate (BER) is less than 1 in 10^17 bits read (what this means in practice was discussed in our previous article on hard drives).

View of the controller
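A BER of 1 in 10^17 is easier to grasp as an amount of data. The arithmetic below converts it into the volume read per expected error and the expected number of errors from reading the full 256 GB once; it assumes errors are independent and uses decimal units.

```python
BER = 1e-17  # fewer than 1 error per 10^17 bits read (the quoted spec)


def expected_read_errors(bytes_read: float, ber: float = BER) -> float:
    """Expected number of bit errors when reading `bytes_read` bytes, assuming independent errors."""
    return bytes_read * 8 * ber


bytes_per_error = 1 / BER / 8
print(f"Data read per expected error: {bytes_per_error / 1e15:.1f} PB")
print(f"Expected errors from reading the full 256 GB once: {expected_read_errors(256e9):.1e}")
```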

Installation is simple and convenient: insert the drive into a memory slot (which supplies its power) and run a cable to a SATA port:

View in the server

Original solutions

These SSDs use a regular SATA connector, and not every board has many of them: our RS130 G4, for example, has only two. If needed, a cable can be made that connects four SSDs through one mini-SAS or mini-SAS HD connector.

mini-SAS cable

This approach lets you build various interesting products, for example:

32 SSDs in a 1U chassis

That is probably all for SSDs with standard SAS/SATA interfaces. In the next article we will look at PCIe SSDs and their future; for now, a few words on how SSD write endurance is determined.

Write endurance

For home use, few people care about a drive's write endurance, but for more serious tasks it can be critical. The metric that has become standard is drive writes per day (DWPD): total terabytes written (TBW) divided by the drive's capacity and by the number of days in its service period (usually 5 years). The best SATA drives are rated at 10 DWPD, and the best SAS SSDs reach up to 45 DWPD.
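Since DWPD is TBW normalized by capacity and by the number of days in the service period, converting between the two is simple arithmetic. A sketch assuming 365-day years and decimal terabytes; the 400 GB drive is a hypothetical example.

```python
DAYS_PER_YEAR = 365


def tbw_from_dwpd(dwpd: float, capacity_tb: float, years: float = 5) -> float:
    """Total terabytes written over the service period for a given DWPD rating."""
    return dwpd * capacity_tb * DAYS_PER_YEAR * years


def dwpd_from_tbw(tbw: float, capacity_tb: float, years: float = 5) -> float:
    """DWPD = TBW / (capacity x days in the service period)."""
    return tbw / (capacity_tb * DAYS_PER_YEAR * years)


# Hypothetical 400 GB drive rated at 10 DWPD for 5 years.
tbw = tbw_from_dwpd(10, capacity_tb=0.4)
print(f"TBW: {tbw:.0f} TB")  # 7300 TB
print(f"DWPD: {dwpd_from_tbw(tbw, capacity_tb=0.4):.1f}")
```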

How is this magic number determined? We need to dig into flash memory theory.

The key property of flash is that a cell must be erased before data can be written (programmed) to it. Unfortunately, a single cell cannot be erased on its own: erasure works on whole blocks (erase blocks), the minimum erasable unit, each made up of several pages. A page is the minimum unit of memory that can be read or written in a single read/write operation.

This is where the concept of a program/erase (P/E) cycle comes from: writing data to one or more pages of a block and erasing the block, in either order.
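The page/block asymmetry can be illustrated with a toy model: pages are programmed one at a time, a programmed page cannot be overwritten in place, and only a whole block can be erased. `FlashBlock` is a hypothetical class for illustration, not a real controller or driver API, and here one P/E cycle is counted per block erase.

```python
class FlashBlock:
    """Toy NAND erase block: pages are programmed one at a time,
    but can only be erased together, as a whole block."""

    def __init__(self, pages: int = 64) -> None:
        self.pages = [None] * pages  # None marks an erased page
        self.erase_count = 0         # one P/E cycle counted per block erase

    def program(self, page: int, data: bytes) -> None:
        if self.pages[page] is not None:
            # Flash cannot overwrite in place: the page must be erased first,
            # and erasing is only possible for the entire block.
            raise ValueError(f"page {page} is already programmed; erase the block first")
        self.pages[page] = data

    def erase(self) -> None:
        self.pages = [None] * len(self.pages)
        self.erase_count += 1


block = FlashBlock()
block.program(0, b"old data")
try:
    block.program(0, b"new data")  # rejected: no in-place overwrite
except ValueError as err:
    print(err)
block.erase()                      # wipes all 64 pages, not just page 0
block.program(0, b"new data")
print(block.erase_count)           # 1
```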

The write amplification factor (WAF) follows logically from this: the amount of data actually written to flash divided by the amount of data the host sent for writing.

What affects the WAF?

The nature of the workload:

- sequential or random;

- large or small blocks;

- whether data is aligned to block boundaries;

- the type of data (especially for SSDs that compress data).

For example, if the host sends 4KB for writing and 16KB (one block) is written to flash, WAF = 4.

One block of flash memory

Here is a single NAND block of 64 pages. Suppose each page is 2KB (four 512-byte sectors), for a total of 256 sectors per block. All pages in the block hold valid data. Now suppose the host overwrites only a few sectors in this block.

Rewriting Pages

To rewrite 8 sectors, the controller has to:

- Read the entire block into RAM.

- Modify the data in pages 1, 2 and 3.

- Erase the block in NAND.

- Write the block back from RAM to NAND.

In total, 256 sectors are erased and rewritten to change only 8, so WAF is already 32. These are the horrors of small writes and unoptimized flash-management algorithms; when large, block-sized writes are used, WAF equals one.
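The read-modify-write arithmetic above can be reproduced in a few lines with the same geometry (512-byte sectors, 2KB pages, 64 pages per block). This models a deliberately naive controller that rewrites every touched block in full; real controllers remap pages instead, so treat it as an illustration of why small and unaligned writes hurt rather than a model of any actual drive.

```python
SECTORS_PER_PAGE = 4    # 2 KB pages of 512-byte sectors, as in the example
PAGES_PER_BLOCK = 64
SECTORS_PER_BLOCK = SECTORS_PER_PAGE * PAGES_PER_BLOCK  # 256


def rmw_sectors_written(first_sector: int, count: int) -> int:
    """Sectors physically rewritten by a naive read-modify-write controller:
    every erase block touched by the host write is erased and rewritten in full."""
    first_block = first_sector // SECTORS_PER_BLOCK
    last_block = (first_sector + count - 1) // SECTORS_PER_BLOCK
    return (last_block - first_block + 1) * SECTORS_PER_BLOCK


def waf(first_sector: int, count: int) -> float:
    """Write amplification: data written to flash / data sent by the host."""
    return rmw_sectors_written(first_sector, count) / count


print(waf(6, 8))       # 8 sectors spanning pages 1-3 of one block: 32.0
print(waf(0, 1024))    # large write aligned to block boundaries: 1.0
print(waf(128, 1024))  # same size, misaligned by half a block: 1.25
```

The last line ties back to the alignment factor listed earlier: the same amount of data costs an extra block rewrite when it straddles block boundaries.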

JEDEC (an industry consortium for microelectronics standards) identified a number of factors that affect the service life of SSDs and derived the relationship f(TBW) = (TBW × 2 × WAF) / C, where f(TBW) is the number of P/E cycles consumed, C is the drive's flash capacity, and the multiplier 2 is introduced so that flash wear does not compromise data storage reliability.

Rearranged, the total TBW is:

$$\text{TBW} = \frac{\text{Flash capacity} \times \text{P/E cycles}}{2 \times \text{WAF}}$$

As a result, the endurance of each SSD depends on its workload type, which has to be assessed case by case. Sequential writing is the simplest case; for random operations, the reserve NAND capacity that is hidden from the user also has a strong influence.

Take a drive with 256GB of flash rated for 3K P/E cycles per cell. With sequential writes (WAF = 1), TBW = 256GB × 3000 / 2 = 384TB, which over 5 years works out to about 0.8, i.e. roughly 1 DWPD.

For the JEDEC enterprise workload, WAF is about 5, which gives about 77TB TBW, or roughly 0.2 DWPD over 5 years.
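Both worked examples follow directly from the JEDEC TBW formula above. A short sketch that evaluates it and converts the result to DWPD, assuming 365-day years and decimal units; the exact figures come out slightly below the rounded values.

```python
def tbw_tb(flash_capacity_gb: float, pe_cycles: int, waf: float) -> float:
    """TBW = flash capacity x P/E cycles / (2 x WAF), converted from GB to TB."""
    return flash_capacity_gb * pe_cycles / (2 * waf) / 1000


def dwpd(tbw: float, capacity_gb: float, years: float = 5) -> float:
    """Drive writes per day: TBW spread over capacity and the service period."""
    return tbw * 1000 / (capacity_gb * 365 * years)


for label, factor in [("sequential", 1), ("JEDEC enterprise", 5)]:
    tbw = tbw_tb(256, 3000, factor)
    print(f"{label}: TBW {tbw:.1f} TB, {dwpd(tbw, 256):.2f} DWPD")
```

The factor-of-five gap between the two results is exactly the WAF, which is why the workload matters as much as the P/E rating of the flash.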

Source: https://habr.com/ru/post/217735/
