Testing the “fast MongoDB”: TokuMX under close-to-real conditions

In the latest episode of Radio T we mentioned TokuMX, a “High-Performance MongoDB Distribution”. The product sounds interesting: 9-fold data compression together with a 20-fold speedup and transaction support. At the same time, moving from MongoDB to TokuMX is quite simple and, to an outside observer, completely transparent. Such magic looked more than attractive to me, so I decided to check how well it all works in practice.

I currently run several MongoDB systems of different sizes, both in AWS and in private data centers. My usage pattern consists of rare but massive writes (once a day), somewhat more frequent massive reads (various kinds of analysis), and constant reads serving data to the front end. I have no particular problems with MongoDB apart from disk space, which I estimate will run out in about 6 months. Data is never deleted from our system, so eventually it will no longer fit on the disks. In AWS, MongoDB runs on relatively expensive EBS volumes with guaranteed IOPS. I was already planning the conventional fix for the lack of disk space (moving old data to a separate MongoDB instance in a cheap configuration), but then TokuMX caught my eye with its promise of 9-fold compression, which would postpone my problem for the next 4 years. In addition, in MongoDB a write can only be rolled back by the client, and it would be nice to move that to the server level.

If you are wondering exactly how the TokuMX magic works, see their website. I will not explain here what it is or how to configure it; I will only share the results of some superficial testing. My tests make no claim to scientific rigor; their main purpose is to show what would happen in my real systems if I switched from MongoDB to TokuMX.

Transition transparency:


Everything is fine here. None of my tests that cover work with MongoDB (about 200 of them) had any problems: everything that worked with MongoDB works with TokuMX. Integration tests did not reveal any problems either, i.e. in my case client systems can be redirected to the TokuMX addresses and they will keep working without noticing the substitution. I did not test hybrid operation with TokuMX in the same replica set, but I suspect that this would work as well.

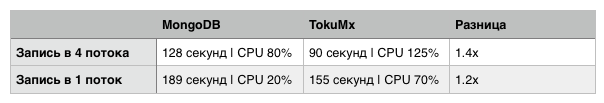

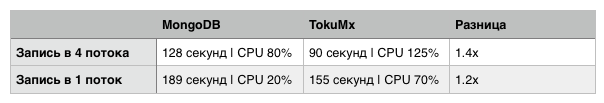

Write testing:

The tests were run on 2 identical virtual machines, each with 2 processors, a 20 GB disk and 1 GB of RAM. The host computer is an MBPR with an i7, an SSD and 16 GB of RAM. The write test loads the trade candles for one day, 1.4M candles in total. The average record size is 270 bytes. There are 3 additional indexes (one simple, 2 composite).
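To give a sense of scale, the raw size of one day's write load can be estimated from the two figures above. This is only a back-of-the-envelope sketch: the function name is made up for illustration, and the estimate covers the document payload alone, without the three additional indexes or any storage overhead.

```python
# Figures taken from the test description above.
CANDLES_PER_DAY = 1_400_000
AVG_RECORD_BYTES = 270


def raw_payload_bytes(records: int, avg_bytes: int) -> int:
    """Raw document payload only; indexes and storage overhead are not included."""
    return records * avg_bytes


daily = raw_payload_bytes(CANDLES_PER_DAY, AVG_RECORD_BYTES)
print(f"{daily} bytes = {daily / 10**6:.0f} MB = {daily / 2**20:.1f} MiB")
```

That comes to roughly 378 MB of raw payload per daily load, before indexes.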

As you can see, there is a difference, and TokuMX really is faster. Not by the promised factor of 20, of course, but not bad either. It does put a significantly higher load on the processor, but that is to be expected because of compression.

The size of data plus indexes in TokuMX was also smaller than in MongoDB, but only by a factor of 1.6.

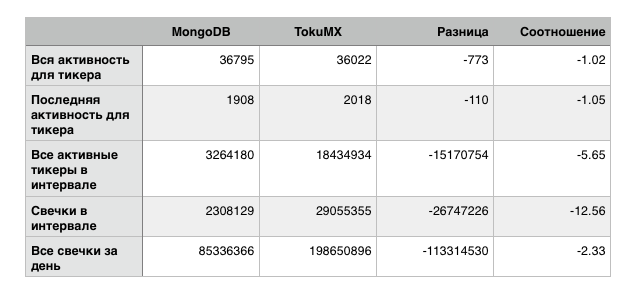

Read testing:

Reads were tested in a mode close to real use. All quick queries (by ticker) were run repeatedly for a random sample of 200 tickers, 10,000 queries in total, and the result was averaged. Interval (time range) queries were run for 10 random intervals, also repeatedly. The same queries were sent to MongoDB and TokuMX in the same order. The main goal of this test was to create activity as close as possible to the real thing. Times are given in microseconds.
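The procedure for the quick queries can be sketched roughly as follows. This is not the actual test code: `build_query_plan`, `mean_latency_us` and the in-memory stand-in for the database are hypothetical names invented for illustration. A real run would pass in a function that calls the database driver, and would run the same plan against each server.

```python
import random
import statistics
import time
from typing import Callable, List, Sequence


def build_query_plan(tickers: Sequence[str], sample_size: int = 200,
                     total_queries: int = 10_000, seed: int = 1) -> List[str]:
    """Pick a random ticker sample and repeat it until total_queries is reached."""
    rng = random.Random(seed)  # fixed seed so every server gets the same plan
    sample = rng.sample(list(tickers), sample_size)
    return [sample[i % sample_size] for i in range(total_queries)]


def mean_latency_us(run_query: Callable[[str], object], plan: Sequence[str]) -> float:
    """Run every query in the plan and return the mean latency in microseconds."""
    latencies = []
    for ticker in plan:
        start = time.perf_counter()
        run_query(ticker)
        latencies.append((time.perf_counter() - start) * 1_000_000)
    return statistics.mean(latencies)


if __name__ == "__main__":
    # Stand-in data and query function (assumption); not a real database call.
    tickers = [f"T{i:04d}" for i in range(1000)]
    fake_store = {t: [t] * 10 for t in tickers}
    plan = build_query_plan(tickers)
    print(f"{mean_latency_us(fake_store.get, plan):.2f} us per query")
```

Building the plan once with a fixed seed and replaying it is what keeps the comparison fair: both servers receive identical queries in identical order.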

Not believing my eyes, I ran these tests many times, and a similar result (with small fluctuations) came back consistently. In all my runs MongoDB beat TokuMX by a factor of 1.5 to 3.

Suspecting that TokuMX simply needs more CPU, I arranged an unequal fight in which a TokuMX virtual machine with 4 processors competed against MongoDB with two. The result is somewhat better, but MongoDB remains faster even under these unequal conditions (by a factor of 1.4 on average). The only test in which TokuMX beat MongoDB (almost 2 times) is the last one: all candles for the day.

Thinking about how else to test all this, I reduced the amount of RAM for both contestants so that the data set no longer fits in memory. Here are the results:

Overall, this result looks even worse, and a 12-fold difference does not inspire much optimism. However, in the first two tests TokuMX is noticeably closer to MongoDB, and in my case the overall result will probably be comparable, since I have many times more of these “improved” queries than of the ones that sag heavily.

While testing with little memory I came across a strange TokuMX behavior: if the configured cache size does not fit in the available RAM, TokuMX simply truncates the response, and the client side is of course thoroughly confused by this and starts complaining loudly that the data ended earlier than expected.

One more thing: MongoDB was used “out of the box” in all these tests. For TokuMX I made a concession in the last test: I enabled direct IO and set the cache size as recommended in their manual.

Findings:

My preliminary conclusion: what TokuMX says about itself, namely “20 times faster and 9 times smaller out of the box,” is not entirely true in my case. Virtually all read operations were slower (sometimes much slower) in TokuMX, and the frighteningly silent truncation of responses is not encouraging either. For me, transaction support, a 1.4-fold write speedup (there is no database-level lock, only a document-level one) and a 1.6-fold gain in data size are not worth the loss in performance across all read operations.

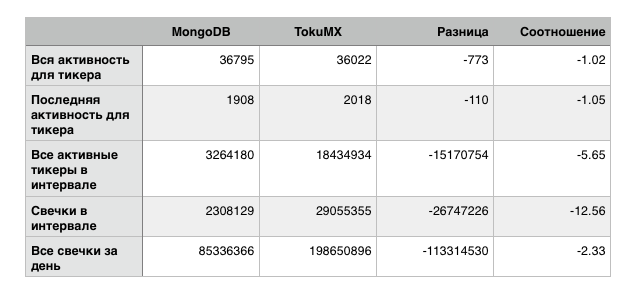

Source: https://habr.com/ru/post/217617/
