Neurointegrum Media Fiction

Hi, Habr readers. In this post I want to tell you about the project I have been working on for the last four months.

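It is a technology-driven media performance created at the New Stage of the Alexandrinsky Theater. The main characters of the play are the emotional state and the thoughts of the performer. To begin with, here is the official description from the social networks:

Neurointegrum is a pilot project in the genre of science art, which is rare on today's domestic stage, and it uses and interprets the achievements of modern technology. The performance, created under the direction of the well-known St. Petersburg media artist Yuri Didevich, explores one of the key themes of turn-of-the-century culture: the interaction of man and machine. The attempt to establish a cultural dialogue between them is the overarching plot of Neurointegrum. The performance uses the biopotentials of the performer's brain (EEG, electroencephalogram) to control the algorithms behind the audiovisual setting.

Neurointegrum is the first theatrical project realized at the Media Center of the New Stage of the Alexandrinsky Theater.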

If you are interested, read on below the cut. Exclusively for Habr readers, there will be a few photos of the workflow.

The essence

The Neurointegrum media concept raises the question of how the human, the computer, and the interface interact, and what the scope of each of them is.

The sound and image on stage are generated in real time by software written specifically for the performance. They define a dynamic coordinate system, relative to which events, coincidences, dynamics, and dramaturgy are considered. The controlling element of the performance is the emotional state of the performer, who controls the sound and the visuals through an exchange of information and energy with the computer. Because the performer reacts to audiovisual events whose source she herself is, a biofeedback loop arises.

What Neurointegrum is made of

The performer's emotional state is read with the Emotiv EPOC Research Edition neural interface. Its SDK provides parameters of the emotional state, such as excitement and frustration, and can also be trained to recognize several mental commands, which can then be assigned to control any parameter of the system. You can find examples online, such as a disabled person steering a wheelchair by thought alone. In the photo below, performer Sasha Rumyantseva makes a 3D cube move during one of the training sessions. The screen also shows graphs of the brain's electrical potentials, recorded in real time.

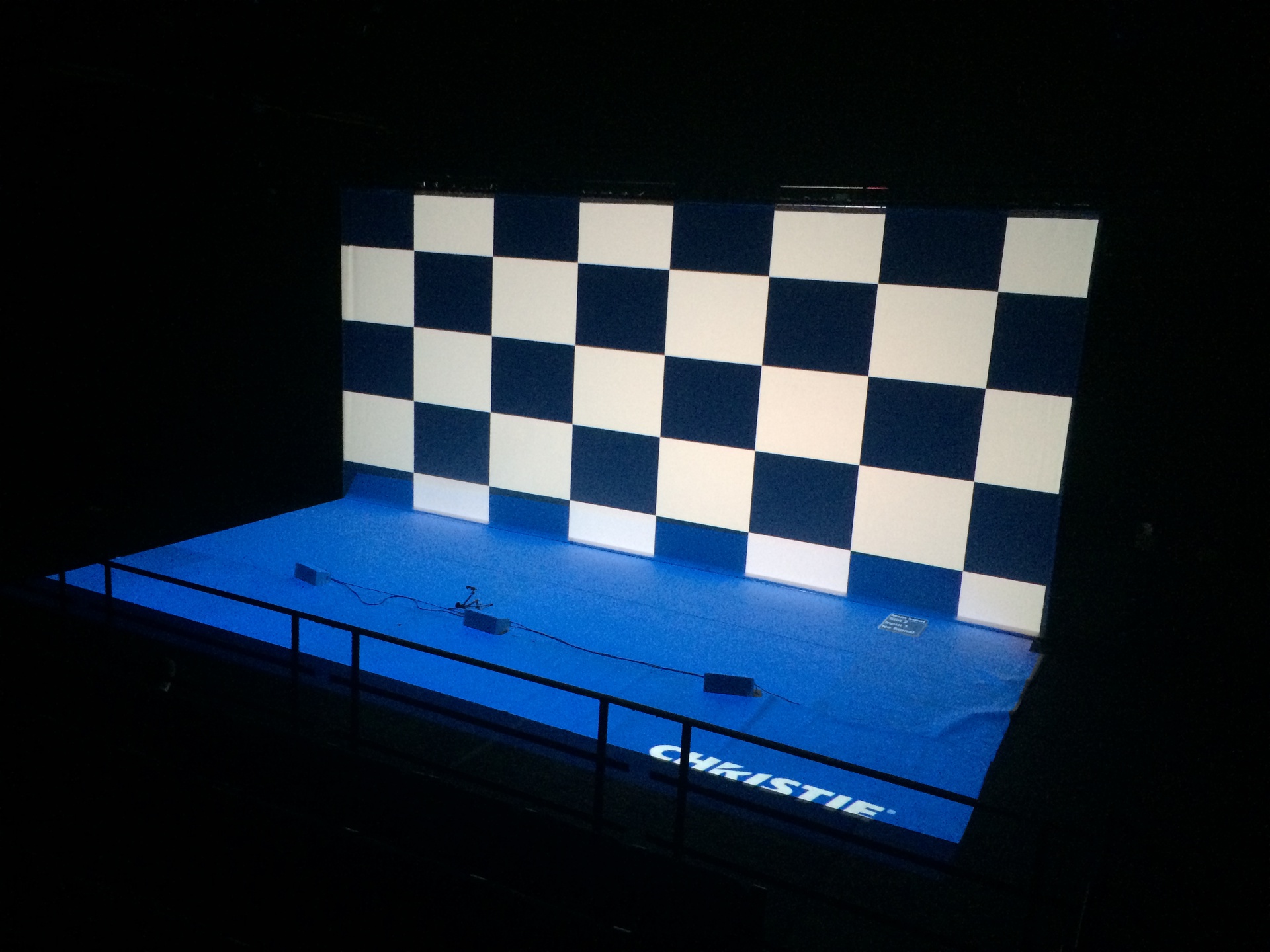

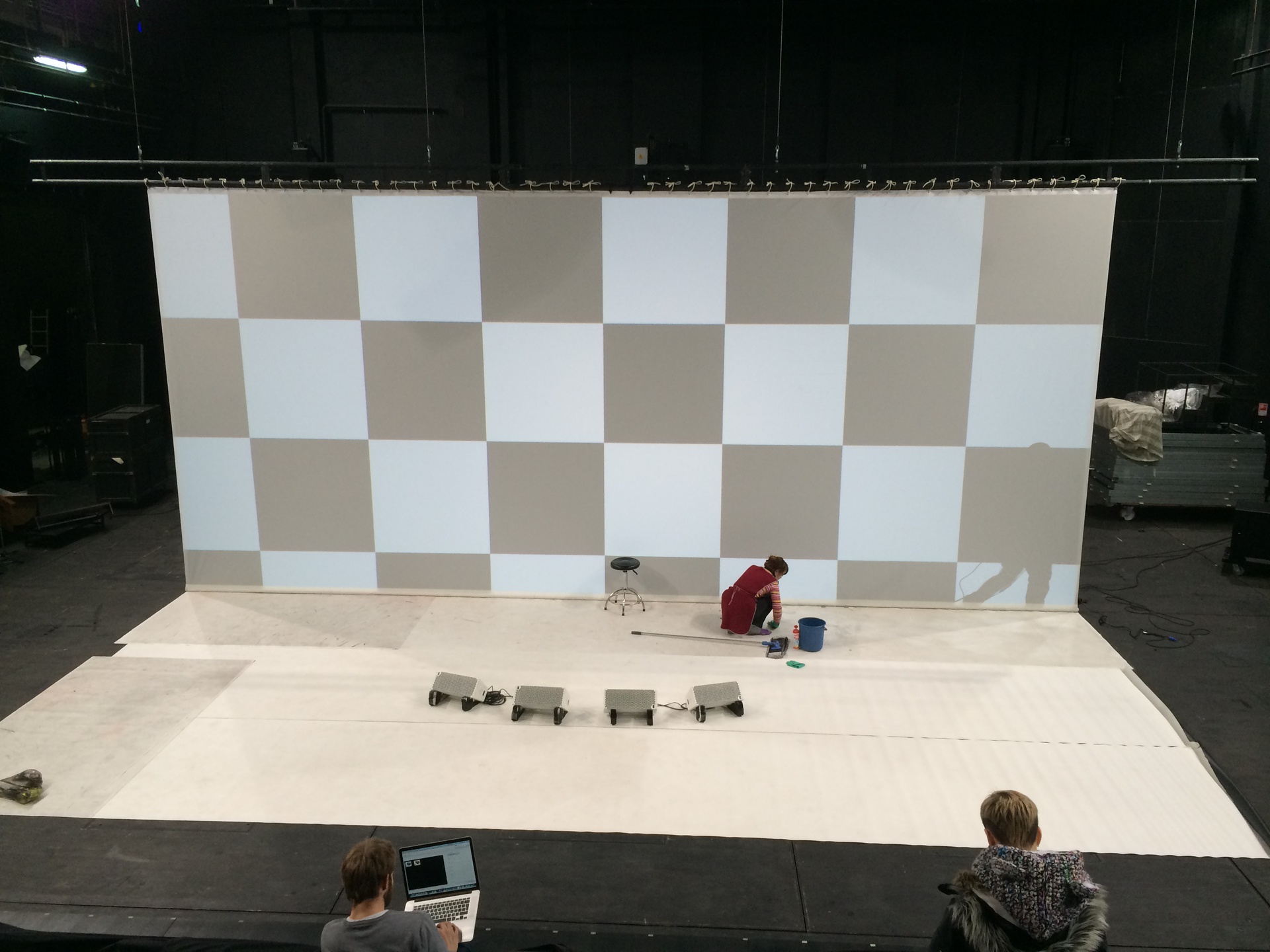

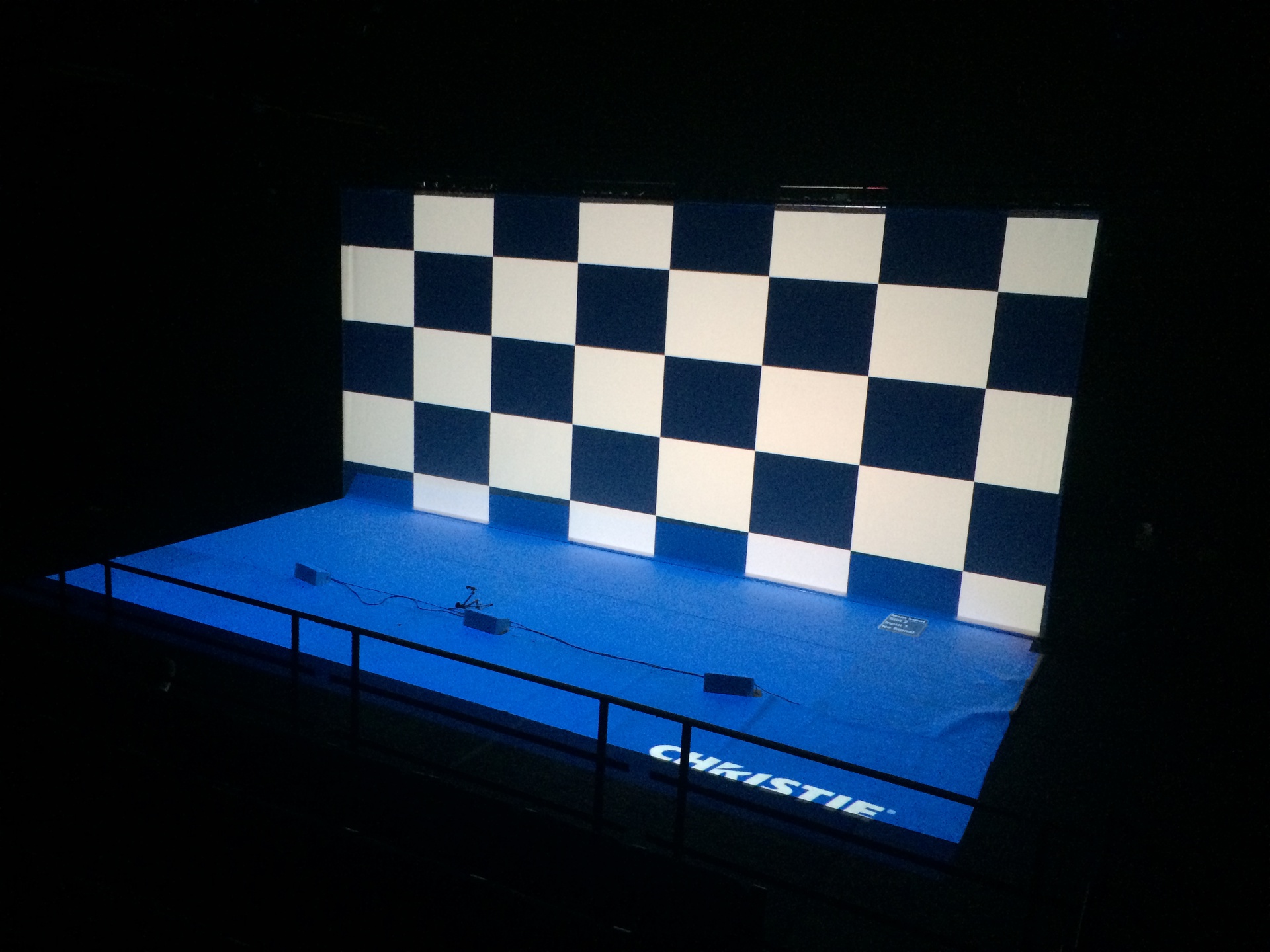

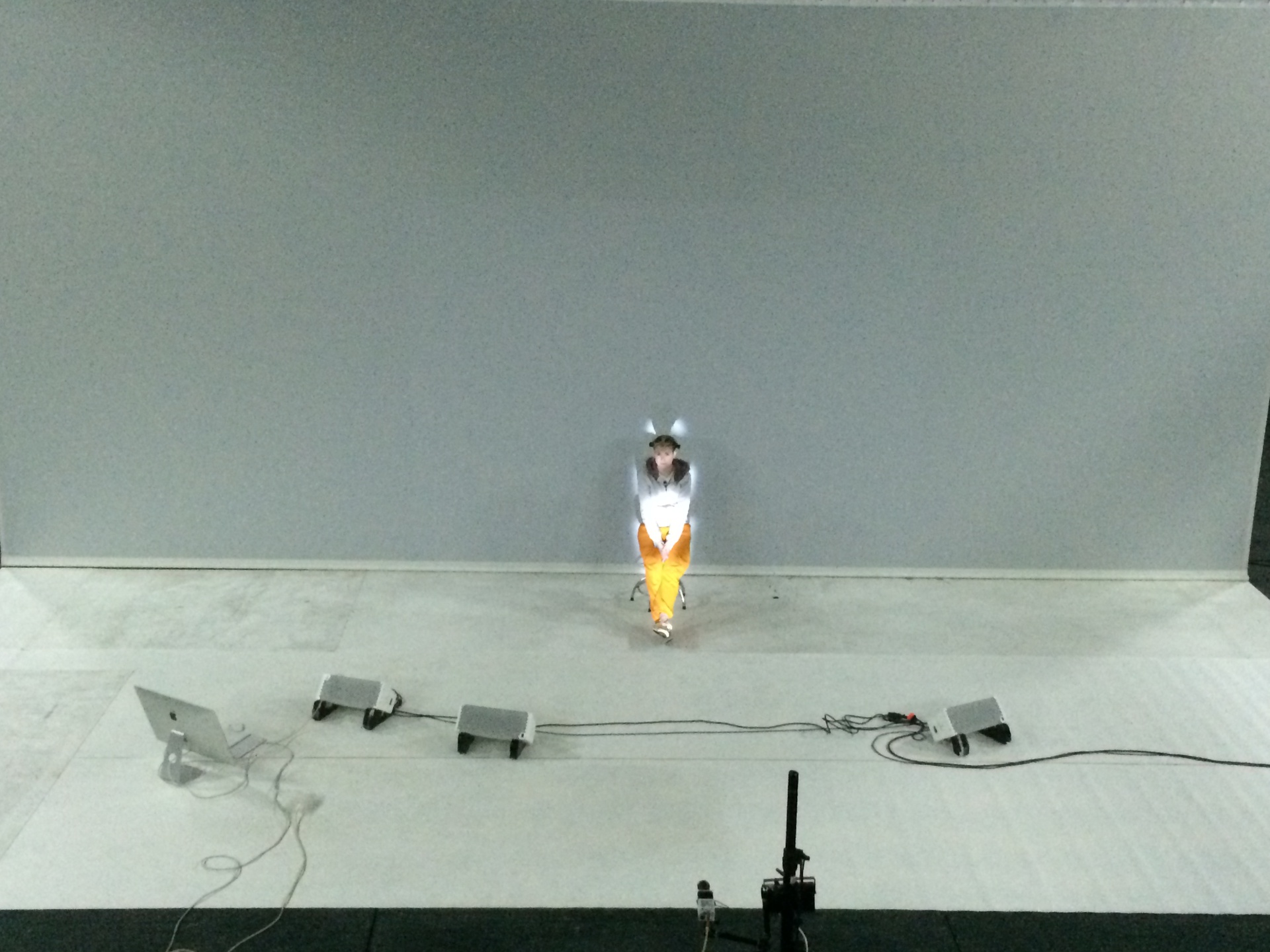

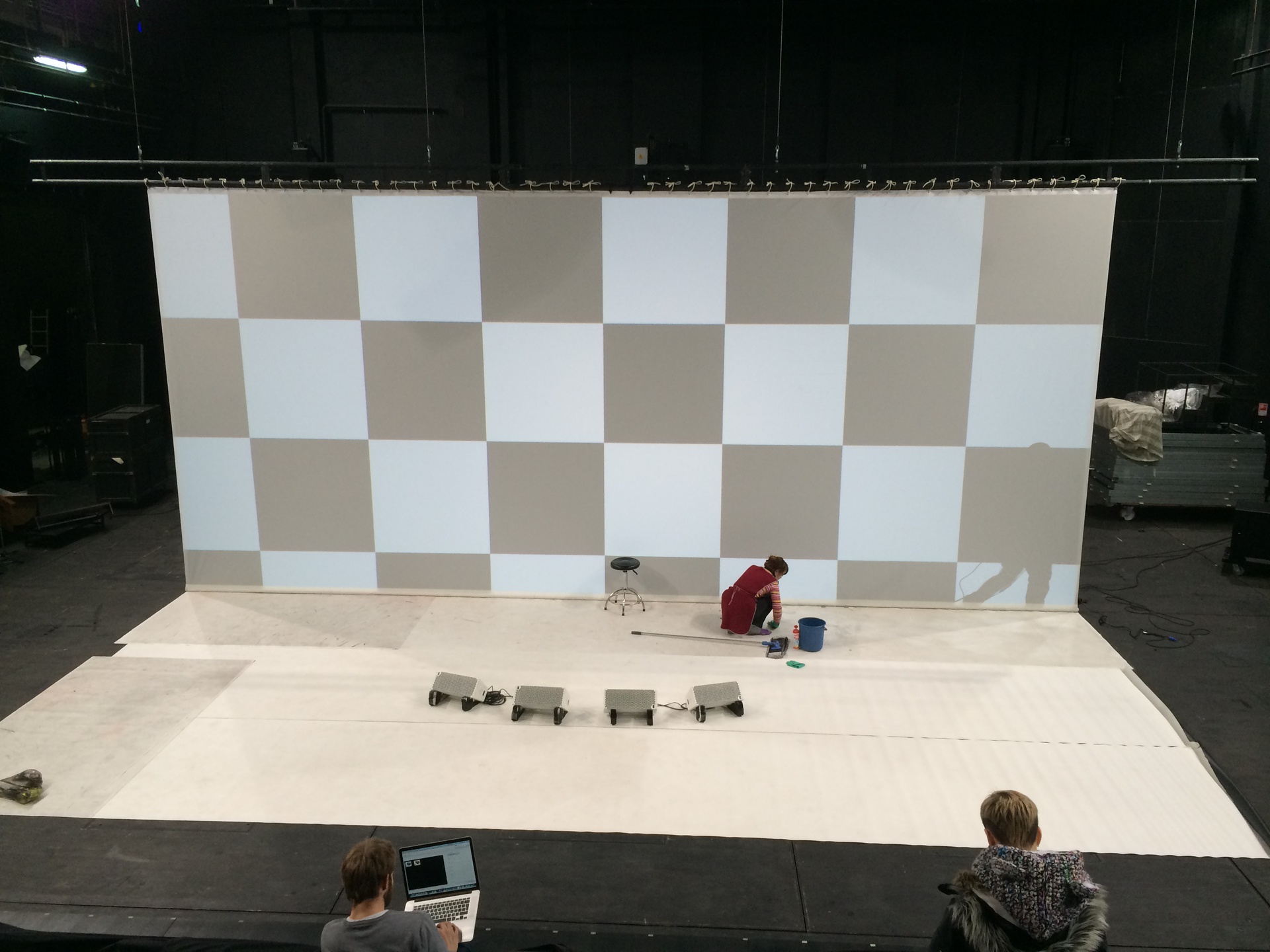
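To make the idea of assigning an emotional parameter to a system parameter a little more concrete, here is a minimal sketch. It assumes the headset delivers a reading such as excitement normalized to 0..1 (an assumption on my part; no Emotiv SDK calls are shown), smooths it with an exponential moving average so the output does not jitter, and maps it to an output range. The class name `SmoothedParam`, the `alpha` factor, and the range are hypothetical and are not taken from the show's code.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper, not part of any Emotiv API: turns a noisy 0..1 reading
// (for example, excitement) into a smoothed control value in [outMin, outMax].
class SmoothedParam {
public:
    SmoothedParam(float alpha, float outMin, float outMax)
        : alpha_(alpha), outMin_(outMin), outMax_(outMax) {
        assert(alpha > 0.0f && alpha <= 1.0f);  // 1.0 means no smoothing at all
    }

    // Feed one raw reading; returns the smoothed value mapped to the output range.
    float update(float raw) {
        raw = std::max(0.0f, std::min(1.0f, raw));  // guard against out-of-range input
        if (!primed_) {
            state_ = raw;                           // first sample initializes the filter
            primed_ = true;
        } else {
            state_ += alpha_ * (raw - state_);      // exponential moving average step
        }
        return outMin_ + state_ * (outMax_ - outMin_);
    }

private:
    float alpha_;
    float outMin_;
    float outMax_;
    float state_ = 0.0f;
    bool primed_ = false;
};
```

A smaller `alpha` gives a calmer but slower response, which is the usual trade-off when a physiological signal drives something visible on stage.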

The stage setup consists of two screens measuring 10 x 4.5 meters for projected graphics (did I already mention that we use no pre-recorded video and generate the graphics in real time?), a separate projection onto the performer's silhouette, 14 sound channels, 2 subwoofers, an IR camera, a Kinect, and a Clay Paky A.leda Wash K20 lighting fixture, which in one of the scenes is controlled interactively by Sasha's head. All of this is run from four computers: two PCs generate the projections (onto the floor, the rear-projection screen, and Sasha's silhouette), one PC (or rather, an iMac with Windows installed) handles the neural interface and the Kinect, and one Mac generates the sound. And yes, the sound is also generated in real time, including the surround parts.

I'll tell you a little more detail about the technical side. Graphics software was written in C ++ using the OpenFrameworks framework. For tasks related to highlighting the silhouette of the performer, OpenCV is used, with which the image from the IR camera is processed. Below is a photo where you can see an analog camera and IR illuminator:

Below you can see how the silhouette is lit. The uneven illumination (the upper body is not highlighted) is because Sasha's "home" clothes reflect IR radiation poorly. For the performance itself, of course, a special costume was made from a fabric that we, the programmers, approved.
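The simplest form of silhouette extraction from an IR frame is a brightness threshold, and it also shows why poorly reflecting clothes are a problem. The sketch below is plain C++ rather than the show's OpenCV code. It assumes an 8-bit grayscale frame in which the performer appears brighter than the background, and it follows the same rule as a binary threshold (brighter than the threshold becomes 255, everything else 0). The function names and the threshold value are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Binary silhouette mask from an 8-bit grayscale IR frame:
// pixels brighter than `thresh` become 255, all others become 0.
std::vector<std::uint8_t> silhouetteMask(const std::vector<std::uint8_t>& gray,
                                         std::uint8_t thresh) {
    std::vector<std::uint8_t> mask(gray.size());
    for (std::size_t i = 0; i < gray.size(); ++i) {
        mask[i] = gray[i] > thresh ? 255 : 0;
    }
    return mask;
}

// Fraction of mask pixels that belong to the silhouette (0.0 for an empty mask).
// A sudden drop in this value hints that part of the body reflects too little IR.
double coverage(const std::vector<std::uint8_t>& mask) {
    if (mask.empty()) return 0.0;
    std::size_t lit = 0;
    for (std::uint8_t v : mask) {
        if (v != 0) ++lit;
    }
    return static_cast<double>(lit) / static_cast<double>(mask.size());
}
```

With dark, IR-absorbing clothes the upper-body pixels fall below the threshold and drop out of the mask, which matches the uneven illumination described above.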

Kinect is used to recognize some gestures:

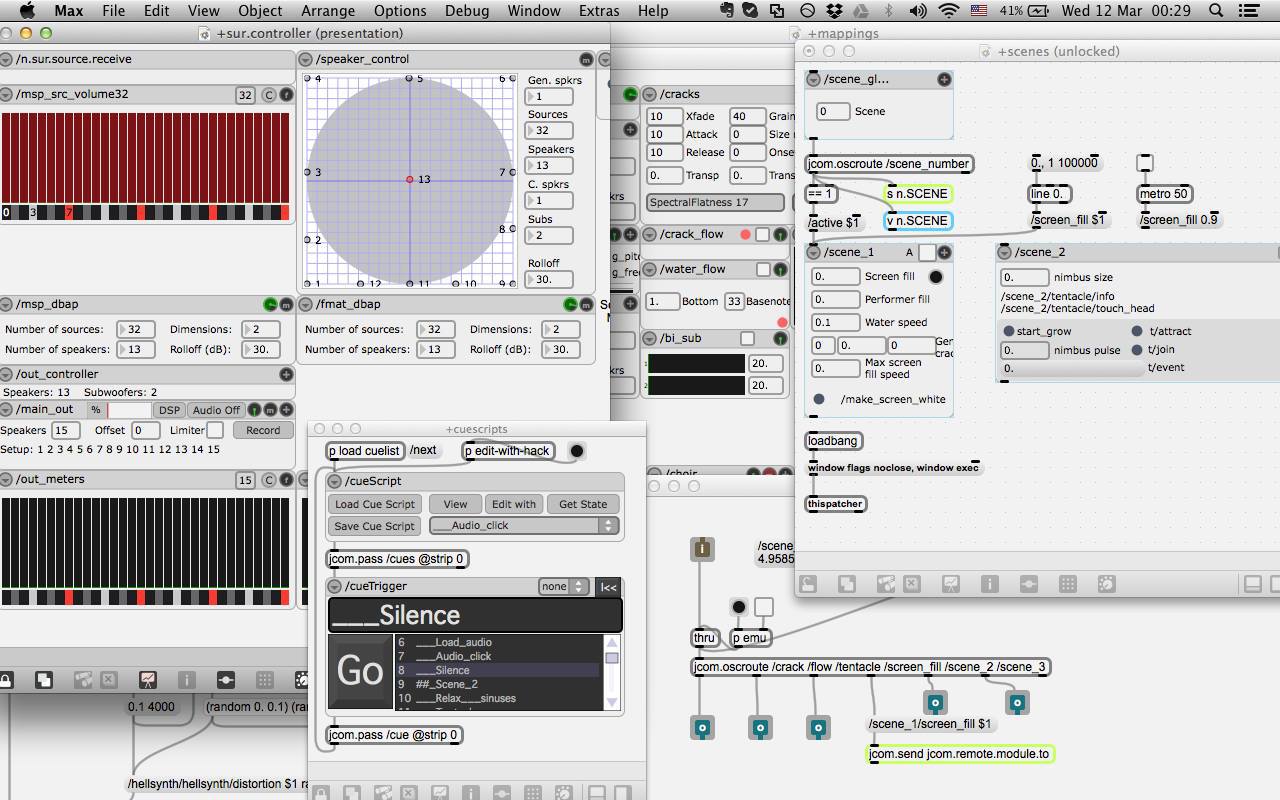

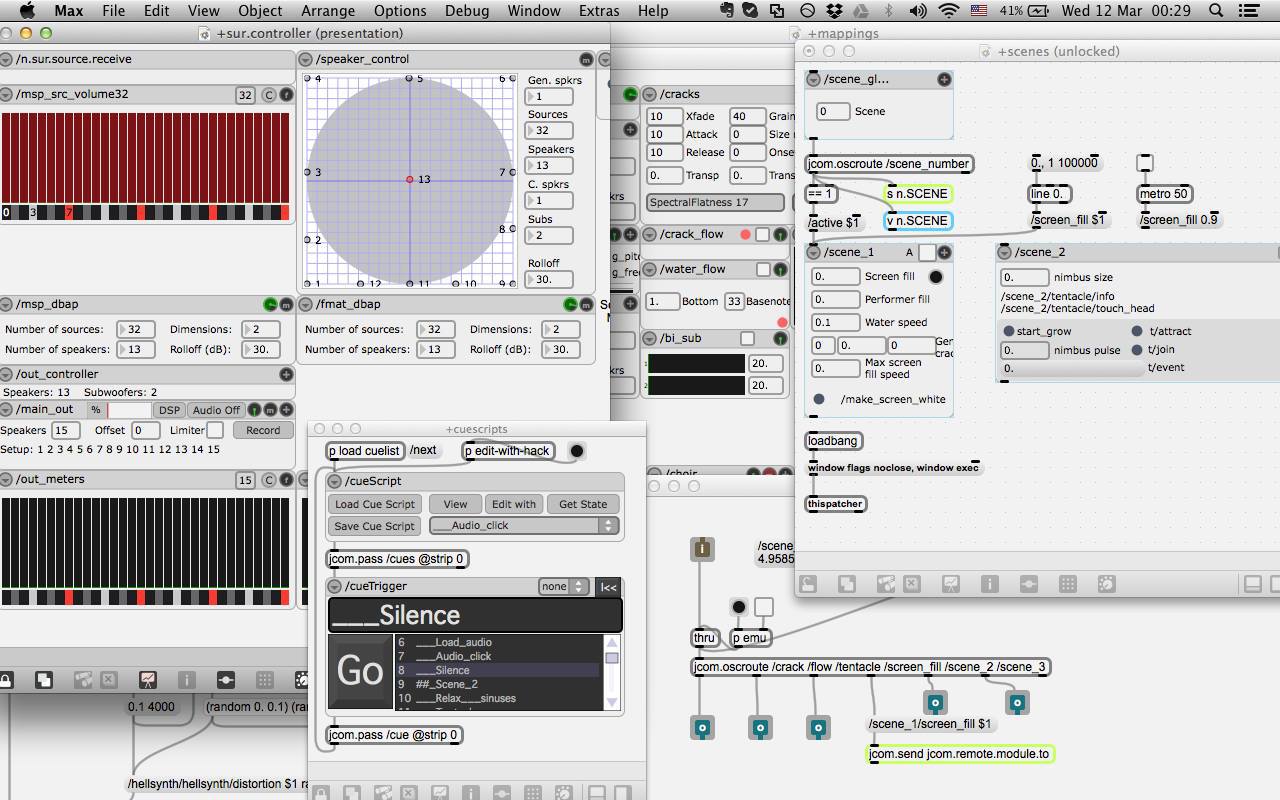

Now on to the sound. The sound software is built in Max/MSP, a visual programming environment for creating interactive audiovisual applications. The history of Max/MSP begins in the late 1980s at IRCAM, the renowned Paris Institute for Research and Coordination in Acoustics/Music (I wrote a review of this environment a long time ago). The Neurointegrum sound patch looks like this:

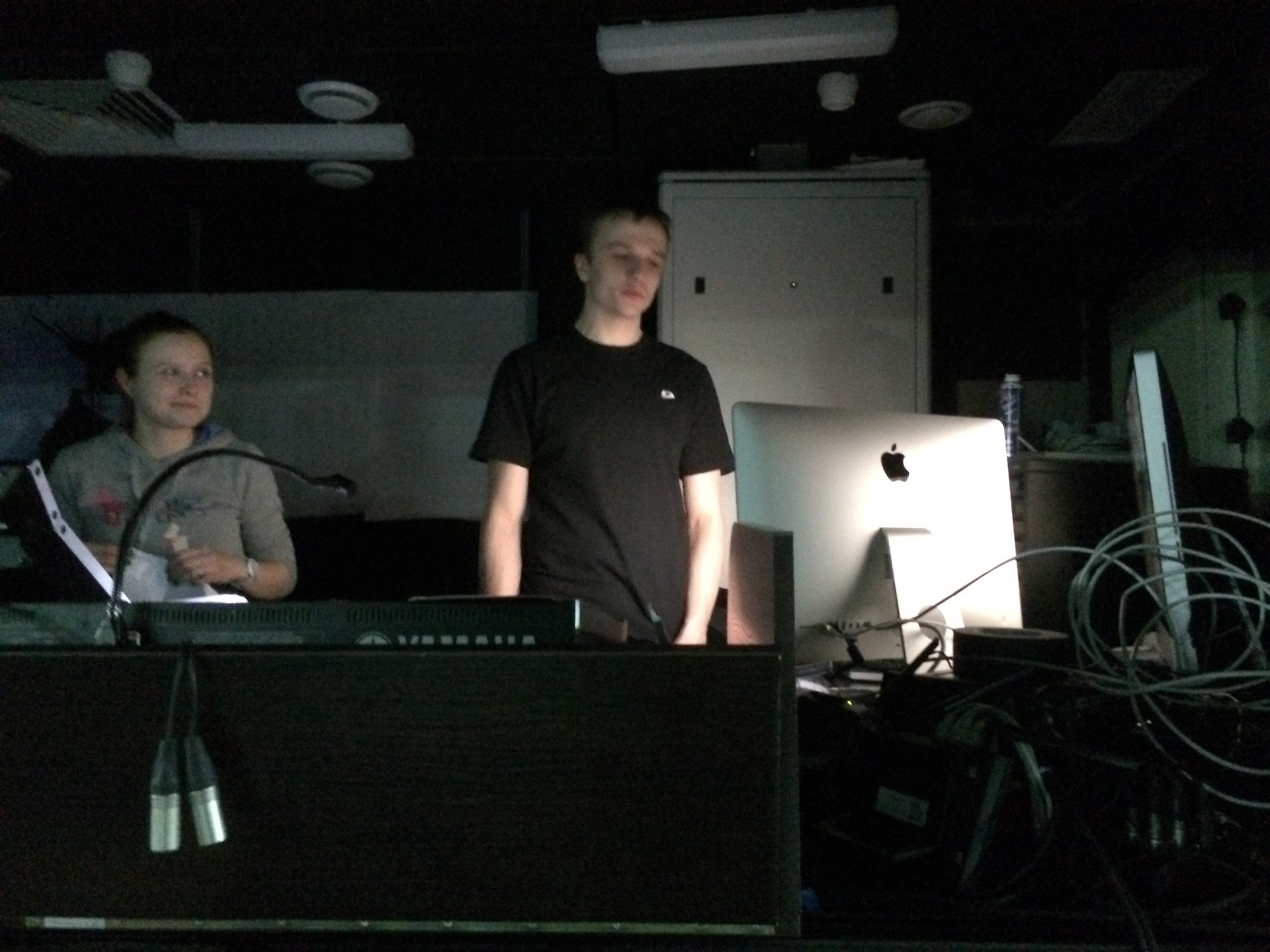

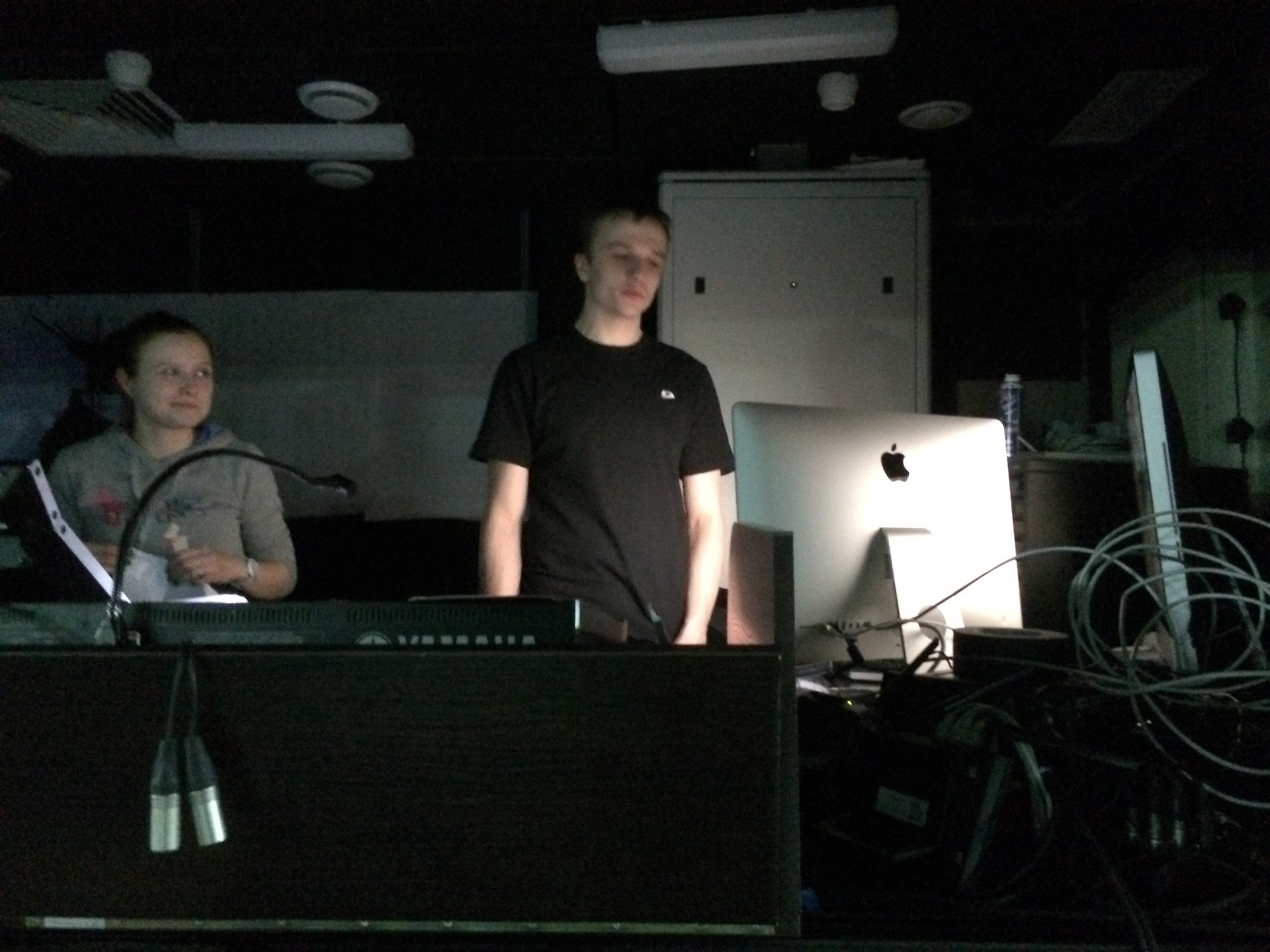

From this patch, the sound goes over an optical link through an RME MADIface to a Yamaha M7CL mixing console. The next photo shows me and Masha, the Alexandrinsky's very smart sound engineer:

As you may have noticed in one of the previous photos, there are also speakers in the center of the stage. This "trick" goes back to the Acousmonium, a system developed in the 1970s by students of Pierre Schaeffer at the GRM center in Paris (by the way, a workshop by Thomas Gorbach, the author of the Austrian Acousmonium, is planned at the Alexandrinsky's media studio in late June). It creates the impression that the sound moves toward the center of the stage.

Besides being fundamentally spatial, the sound in the performance is also interactive. No Ableton and no audio players. To achieve this, the graphics program continuously sends information about what is currently being drawn, and each graphic element is sonified on that basis. For example, to let you in on a secret, a large number of similar graphic objects are drawn in the first scene. For each of them, its position and growth percentage are sent over the network, and this is used to sonify each element separately and place it at the corresponding point in the spatial sound image. The sound in turn affects the performer's state, which then affects the graphics.
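To illustrate how a per-object position and growth value can become per-speaker levels, here is a rough sketch of distance-based amplitude panning. In the show the spatialization lives in the Max/MSP patch, so this C++ is only an illustration of the idea. The `ObjectState` fields, the normalized 2D stage coordinates, the speaker layout, and the panning law itself are all assumptions of mine, and the network transport is not shown.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point {
    float x;
    float y;
};

// Hypothetical per-object message sent by the graphics program.
struct ObjectState {
    int id;
    Point pos;     // position in normalized stage coordinates (assumed)
    float growth;  // growth as a fraction, 0..1 (assumed scaling)
};

// Distance-based amplitude panning: nearer speakers get more signal.
// Gains are normalized so that the sum of their squares equals growth^2,
// which keeps the perceived loudness independent of the object's position.
std::vector<float> speakerGains(const ObjectState& obj,
                                const std::vector<Point>& speakers) {
    assert(!speakers.empty());
    const float eps = 1e-3f;  // avoids division by zero when an object sits on a speaker
    std::vector<float> gains(speakers.size());
    float power = 0.0f;
    for (std::size_t i = 0; i < speakers.size(); ++i) {
        const float dx = obj.pos.x - speakers[i].x;
        const float dy = obj.pos.y - speakers[i].y;
        gains[i] = 1.0f / (std::sqrt(dx * dx + dy * dy) + eps);
        power += gains[i] * gains[i];
    }
    const float level = std::max(0.0f, std::min(1.0f, obj.growth));
    const float scale = level / std::sqrt(power);
    for (float& g : gains) {
        g *= scale;
    }
    return gains;
}
```

An object halfway between two speakers gets equal gains on both, and an object that has not started growing yet stays silent.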

When and where

The event starts at 19:30 and consists of three parts: 1) a film about the making of the performance, 2) the performance itself, and 3) an open discussion where you can ask the authors any questions.

In addition, before each show, at 18:00 in the foyer of the New Stage, there will be a new lecture on technological art. As far as I know, admission to the lectures is free.

The next shows take place on April 3, 4, and 5 at Fontanka River Embankment 49a, St. Petersburg.

It is already confirmed that on the 3rd Lyubov Bugayeva will give a lecture titled "Neurocinema and Neuroliterature". You can sign up for the event via the link.

Please note: the performance includes loud sound and stroboscopic effects, so it is not recommended for people with epilepsy.

The performance's groups on social networks: vk, facebook.

Process photos

A few photos of the process, for Habr:

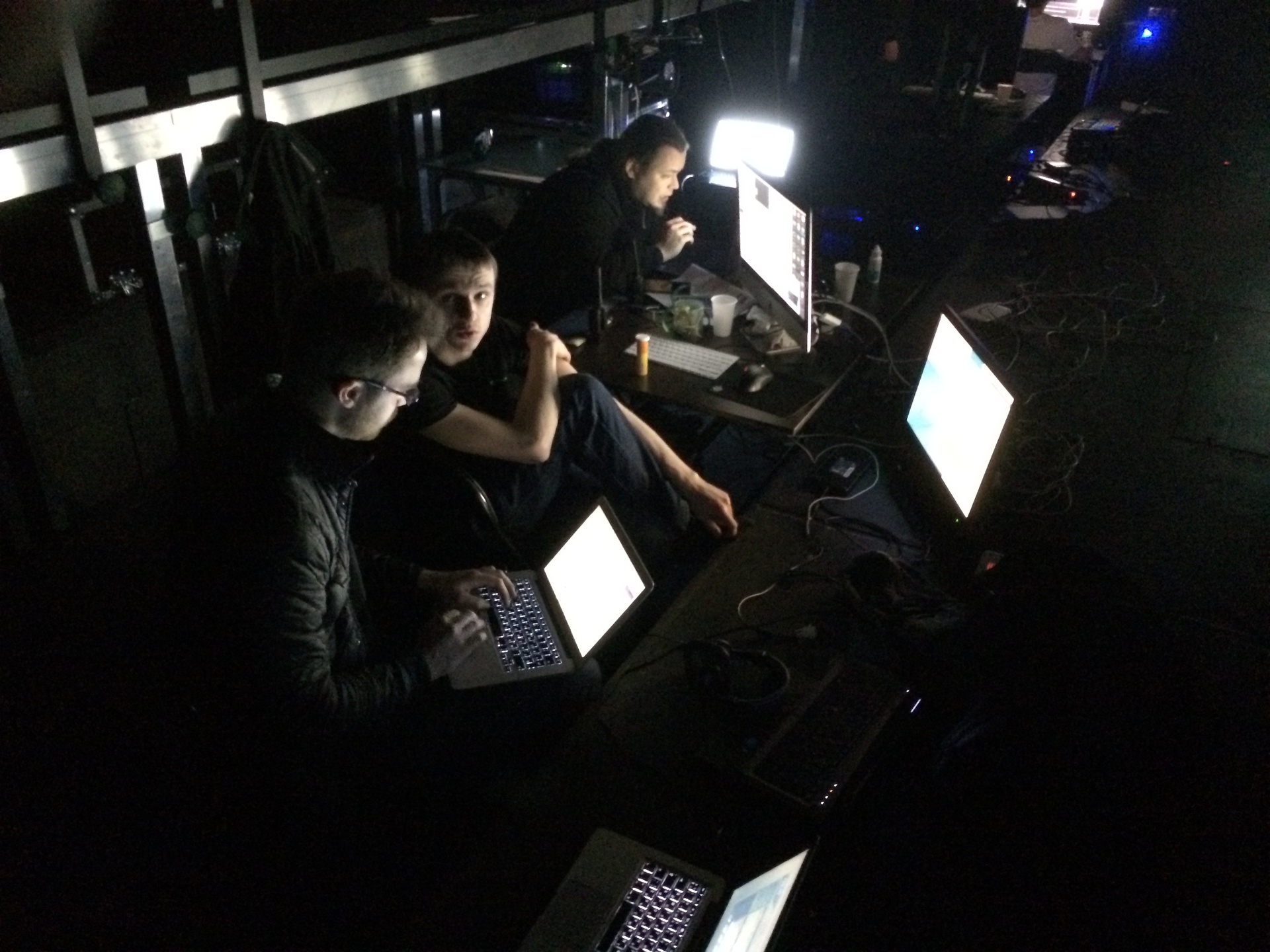

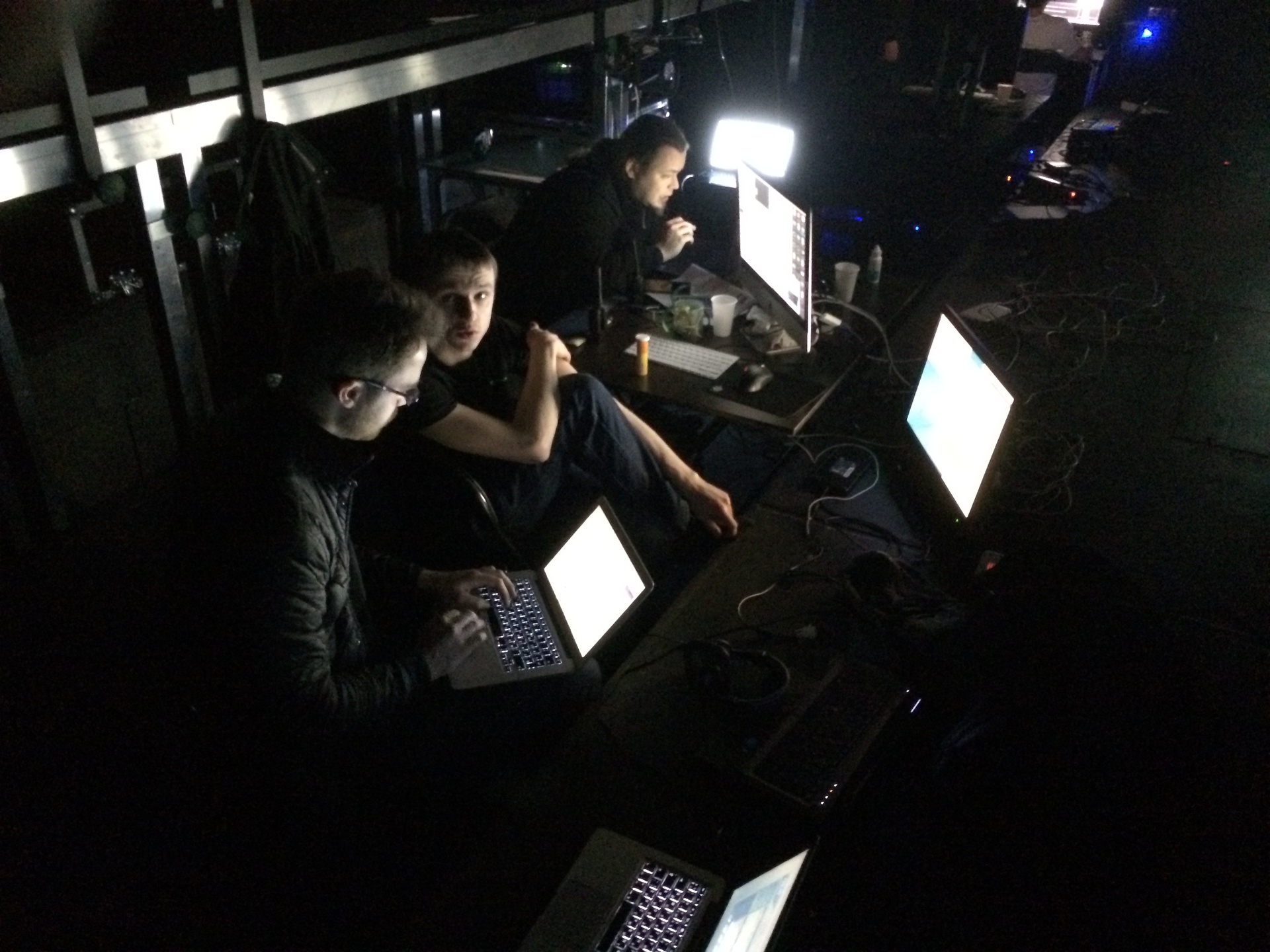

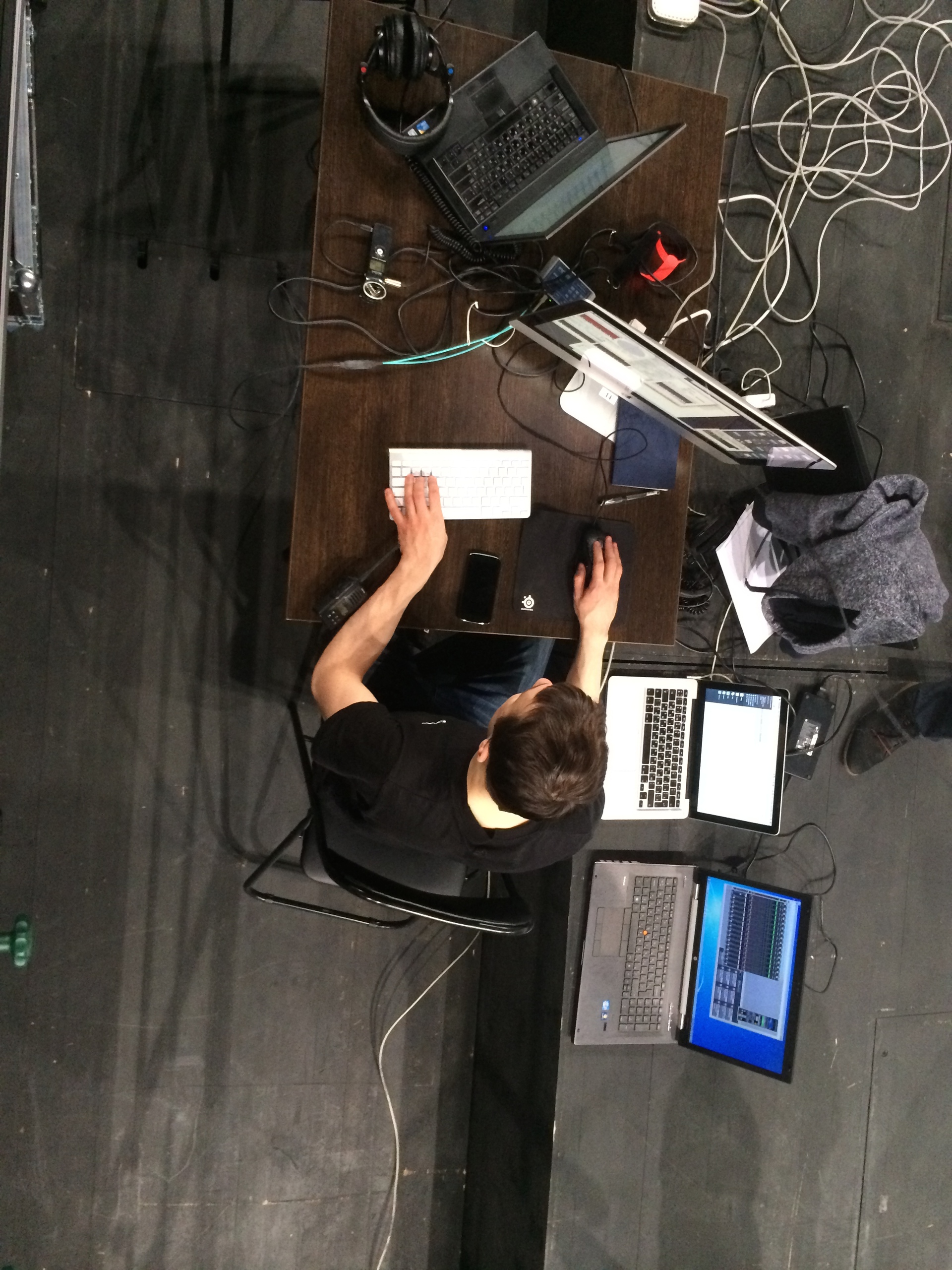

On the left is my friend, a computer network specialist, setting up the network so that the lighting fixtures can be controlled programmatically over the Art-Net protocol:

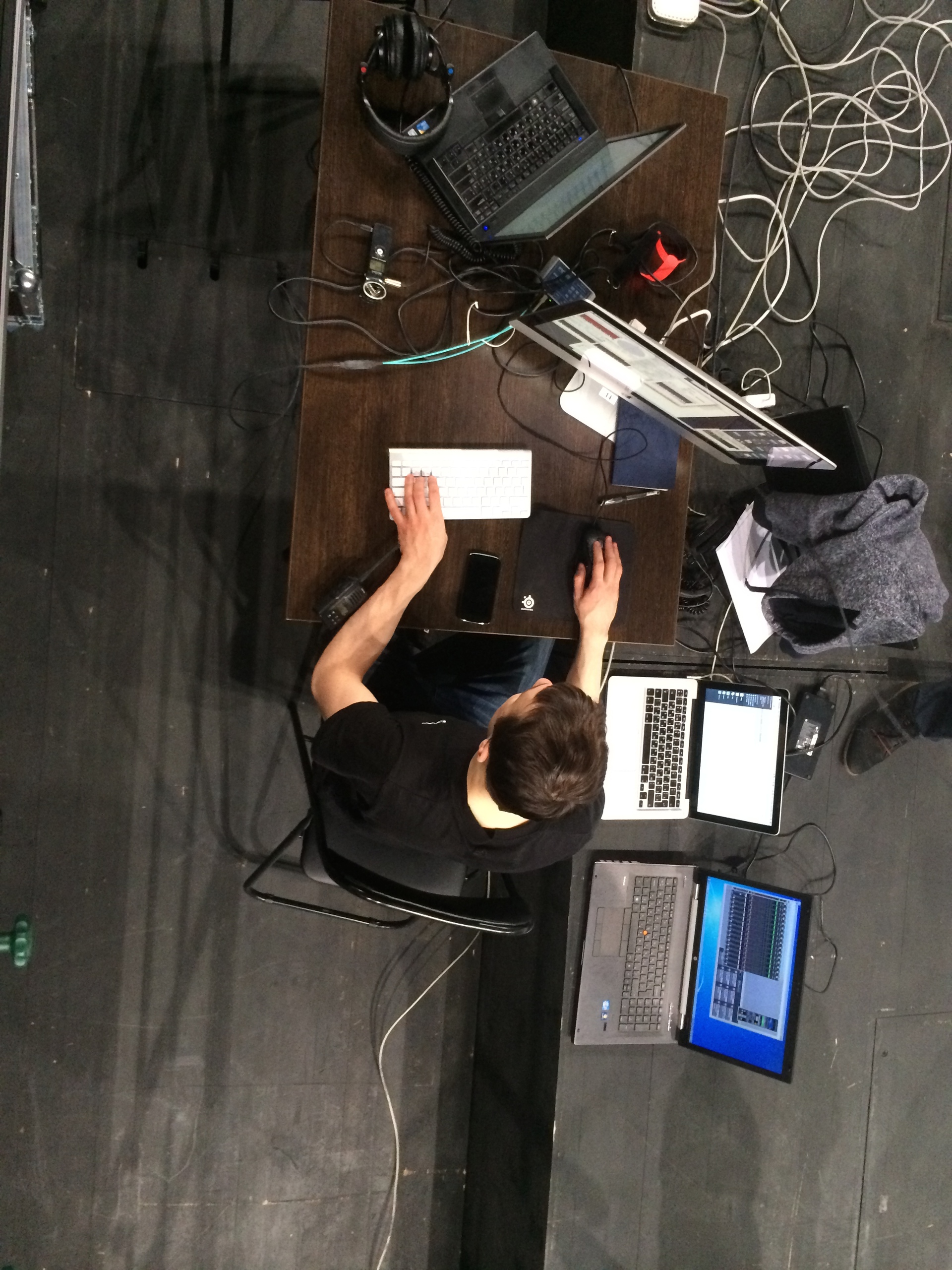
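For readers who have not met Art-Net: it carries DMX512 channel data in UDP packets, normally on port 6454. The sketch below only builds the bytes of an ArtDmx packet as I understand the published packet layout. The function name `makeArtDmx` is hypothetical, sending the packet is not shown, and the channel values in the usage note are placeholders rather than the actual channel map of the fixture.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Builds the bytes of an ArtDmx packet (Art-Net opcode 0x5000).
// `universe` is the 15-bit port address, `dmx` holds 1..512 channel values.
std::vector<std::uint8_t> makeArtDmx(std::uint16_t universe,
                                     std::uint8_t sequence,
                                     const std::vector<std::uint8_t>& dmx) {
    assert(!dmx.empty() && dmx.size() <= 512);
    std::vector<std::uint8_t> channels = dmx;
    if (channels.size() % 2 != 0) {
        channels.push_back(0);  // the data length field must be an even number
    }
    const std::size_t len = channels.size();

    std::vector<std::uint8_t> p = {'A', 'r', 't', '-', 'N', 'e', 't', 0};  // packet ID
    p.push_back(0x00);                                            // opcode, low byte first
    p.push_back(0x50);                                            // opcode, high byte
    p.push_back(0);                                               // protocol version, high byte
    p.push_back(14);                                              // protocol version, low byte
    p.push_back(sequence);                                        // 0 disables resequencing
    p.push_back(0);                                               // physical input port (informational)
    p.push_back(static_cast<std::uint8_t>(universe & 0xFF));         // SubUni: low 8 bits
    p.push_back(static_cast<std::uint8_t>((universe >> 8) & 0x7F));  // Net: high 7 bits
    p.push_back(static_cast<std::uint8_t>(len >> 8));             // data length, high byte
    p.push_back(static_cast<std::uint8_t>(len & 0xFF));           // data length, low byte
    p.insert(p.end(), channels.begin(), channels.end());
    return p;
}
```

For example, `makeArtDmx(1, 0, {255, 0, 128})` yields an 18-byte header followed by four data bytes, because the three channel values are padded to an even length.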

Below are Lesha Oleynikov and me. We have taken off our slippers and are warming our feet on the radiator:

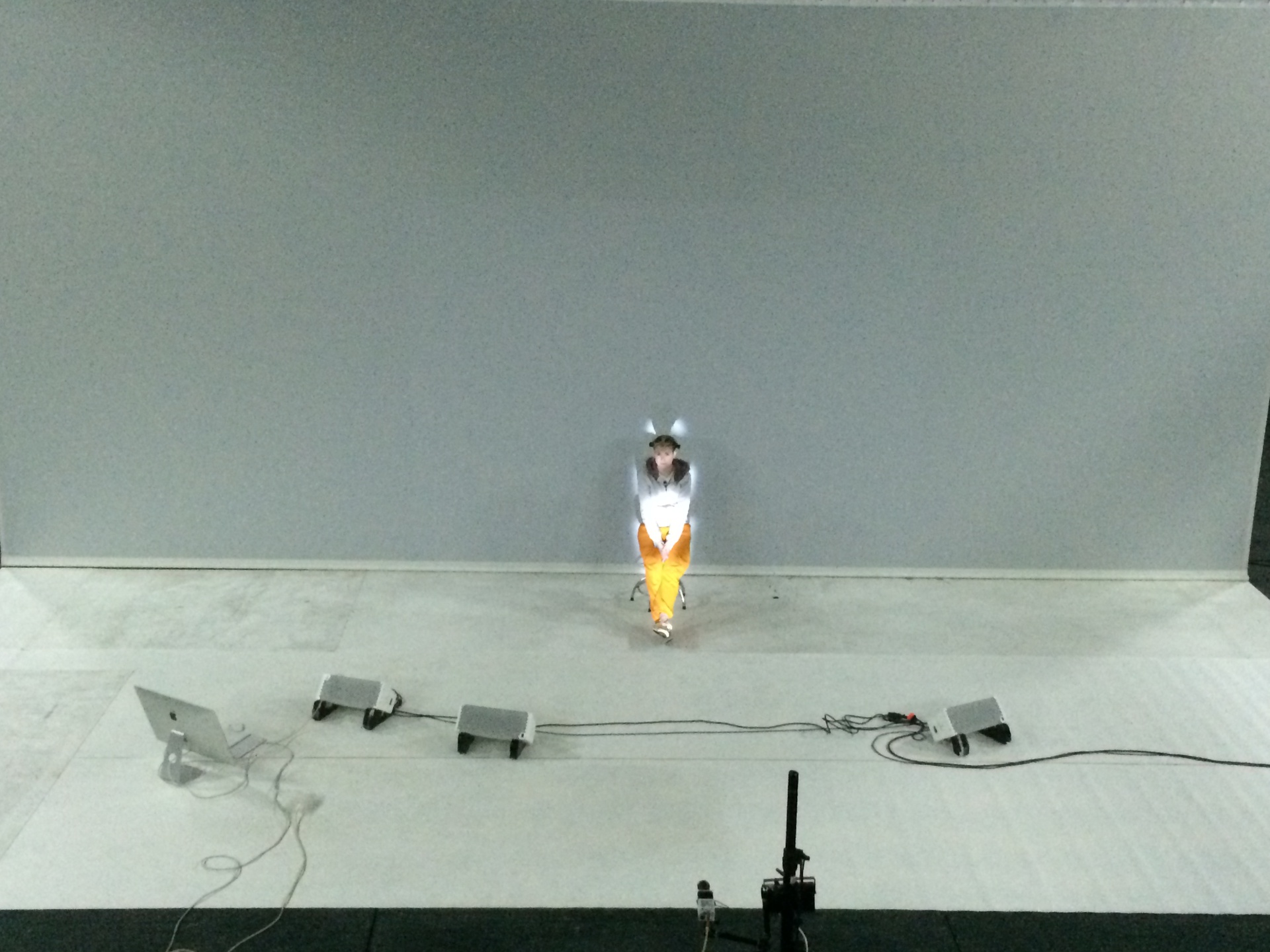

Source: https://habr.com/ru/post/217565/
