Video processing algorithms for the TI DM368 processor

With this article we begin a series on Habr about the TI DM368 and DM369 video processors and about developing algorithms for them.

We will go through the main blocks that process the video stream on its way from the sensor to the network "broadcaster", and then discuss in more detail the autoexposure, white balance and autofocus algorithms (3A), gamma correction, extended dynamic range (HDR or WDR) and, finally, motion detection and the analytics built on top of it.

The example images were taken with a SONY IMX136 sensor; the algorithm has also been tested on Aptina MT9P006, AR0331 and MT9M034 sensors.

The main players in the market for IP video surveillance processors are three companies: TI, Ambarella and HiSilicon. Each of them has chips in several price ranges. The most popular today are chips that can encode an FHD (1920x1080) stream at up to 30 frames per second, DM368 (TI), A5 (Ambarella) and Hi3516 (HiSilicon), priced from $10 to $20, and more powerful chips with up to 60 frames per second, DM385 (TI), A7 (Ambarella) and Hi3517 (HiSilicon), priced from $20 to $40. Within each class the chips have roughly the same characteristics.

TI is the easiest company to work with, since it is fairly open and all hardware documentation is available on its website. To get the software and the complete reference design, it is enough to buy a camera from Appro for $1000 and get started. To obtain documentation from HiSilicon you must sign an NDA, and support plus the reference design cost $5000. Ambarella was the most expensive: documentation and support cost $25,400.

So, back to the DM368:

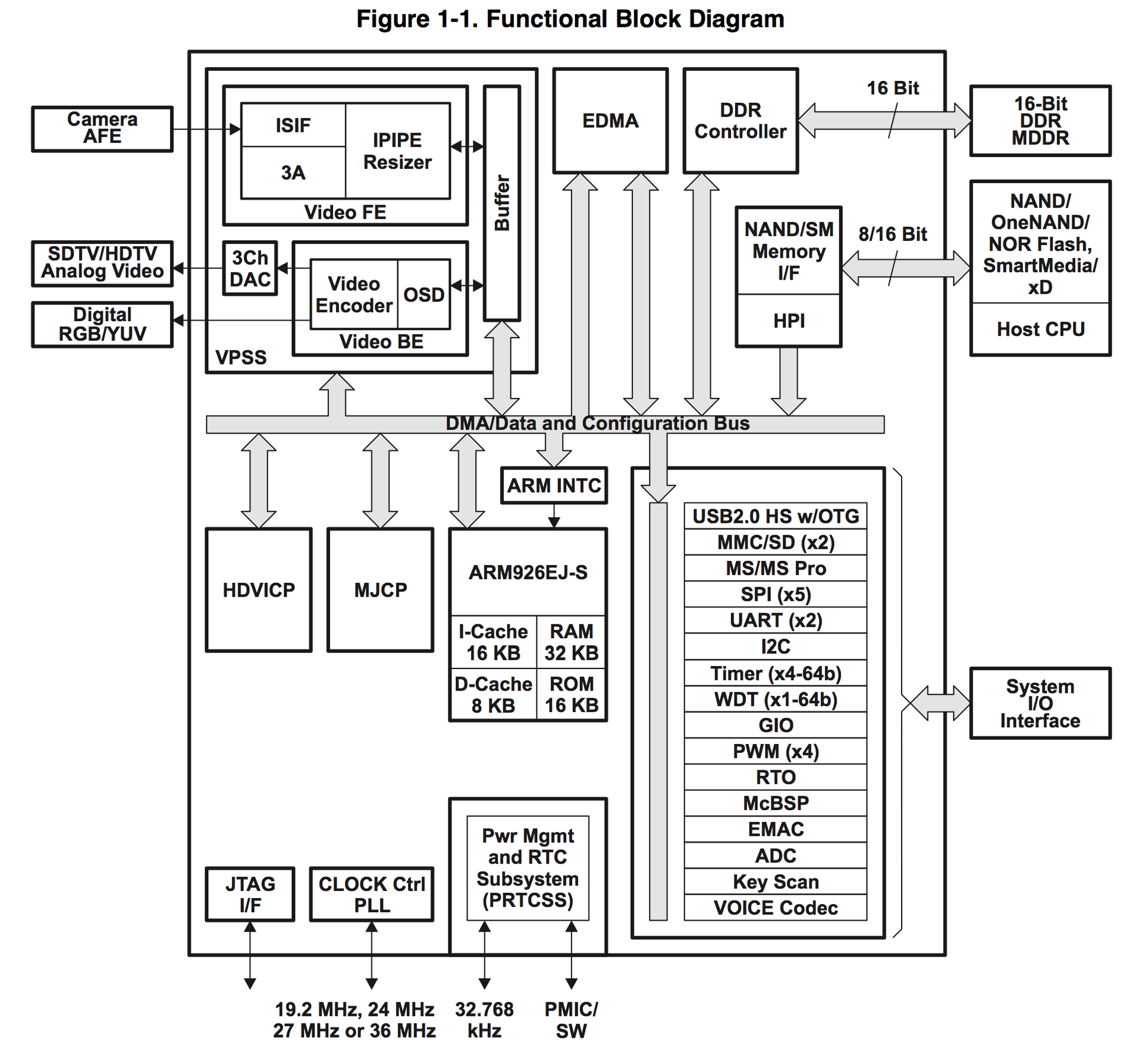

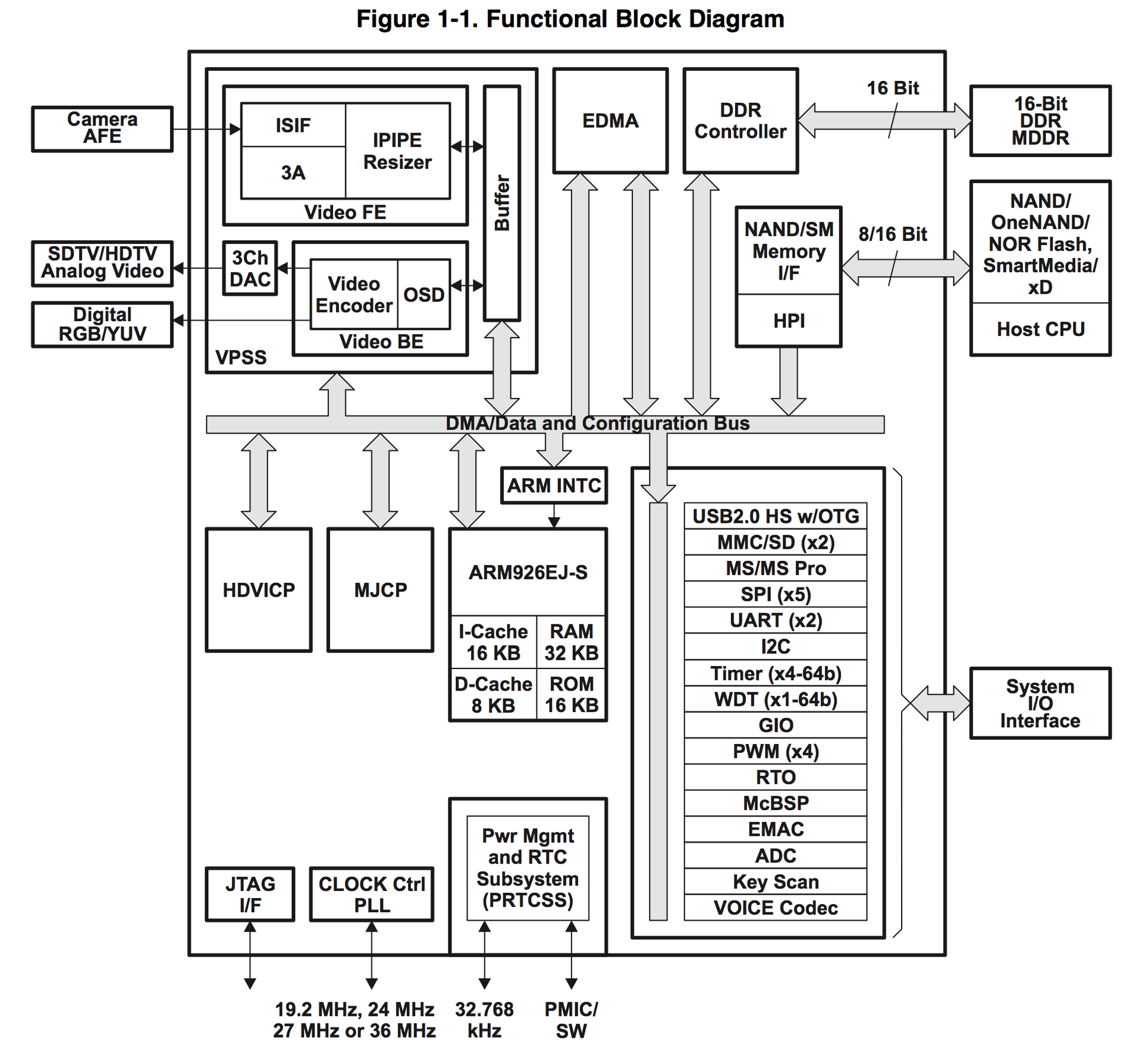

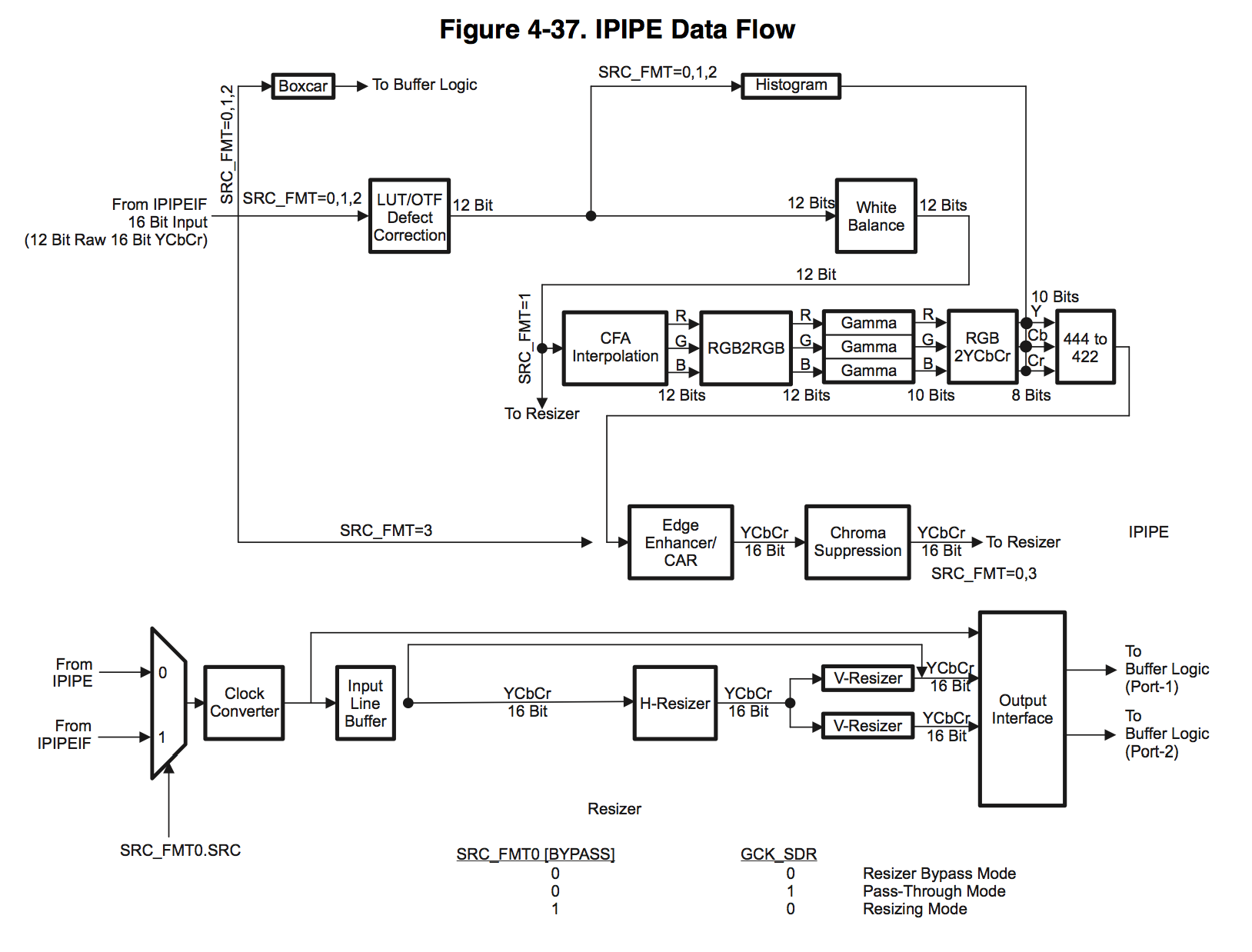

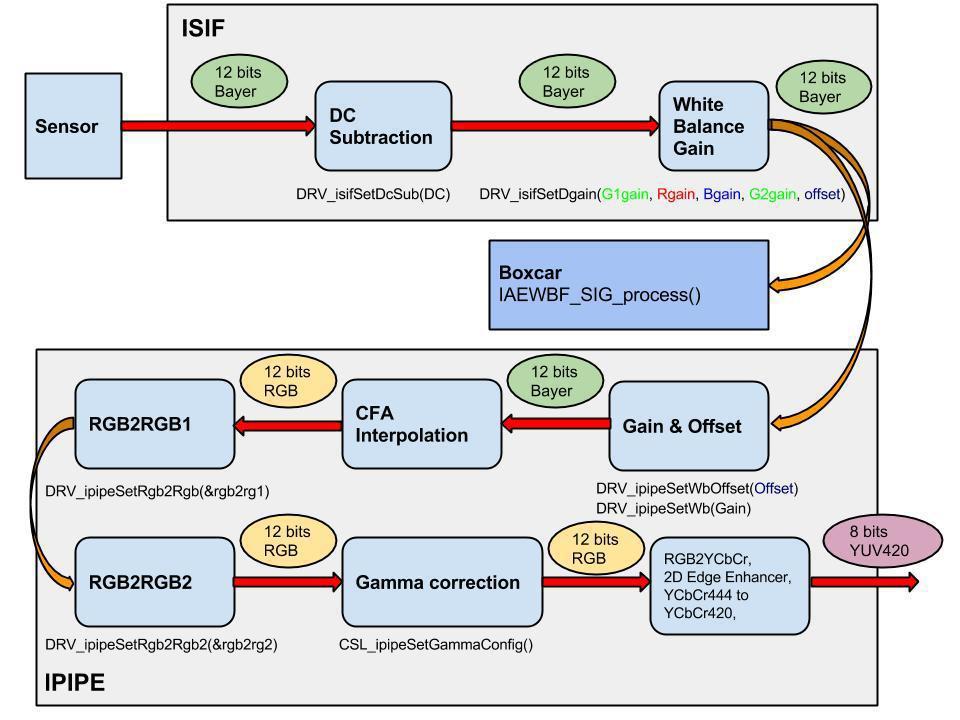

As the diagram shows, the processor has everything needed for an IP camera, and not only for one. Video is handled by the Video Processing Subsystem (VPSS), which in turn consists of two blocks: Video FE (Front End) and Video BE (Back End). Video FE is responsible for capturing and processing the video signal, and Video BE for encoding and output to various devices. Hardware support for the 3A algorithms is located in the Video FE module.

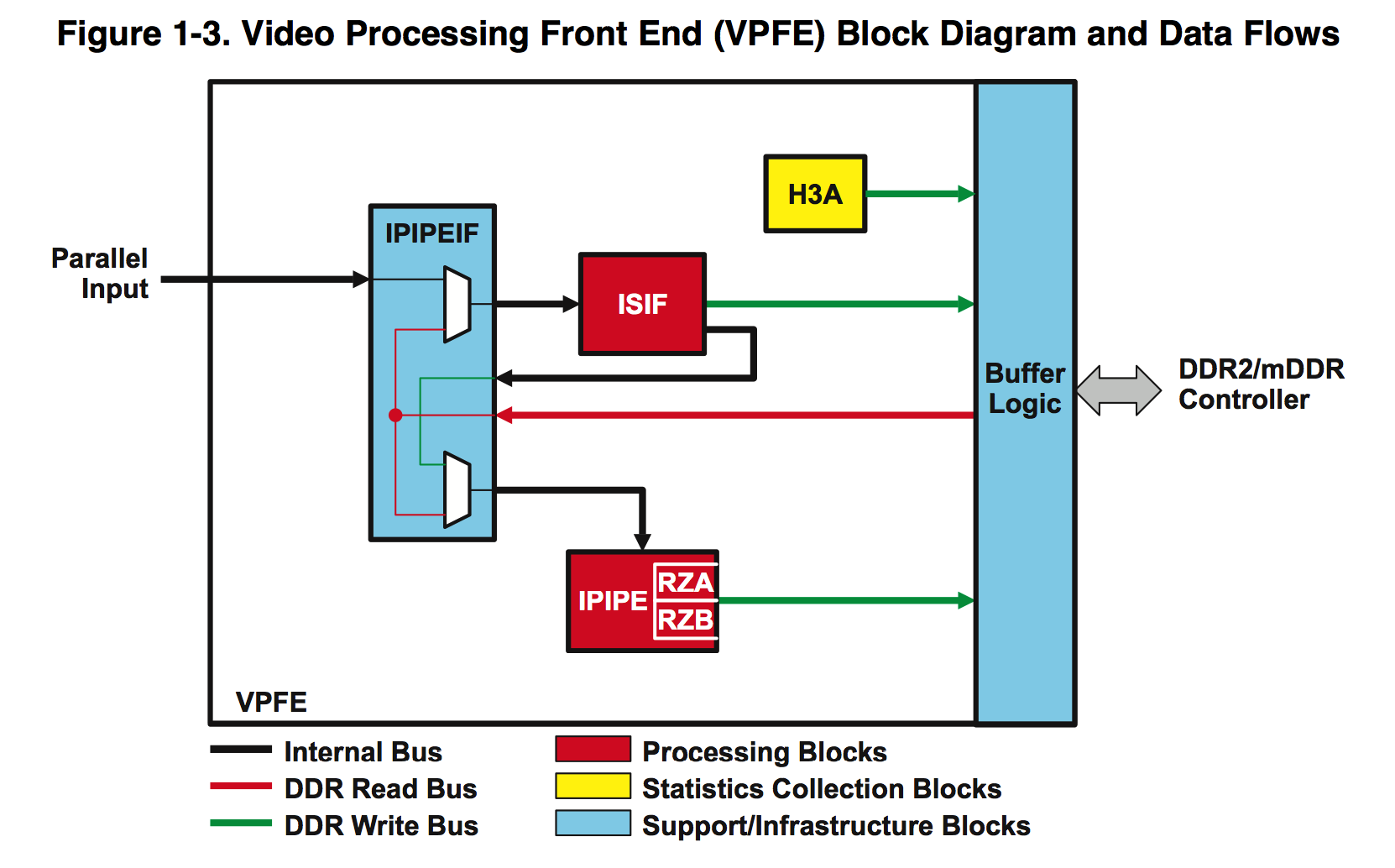

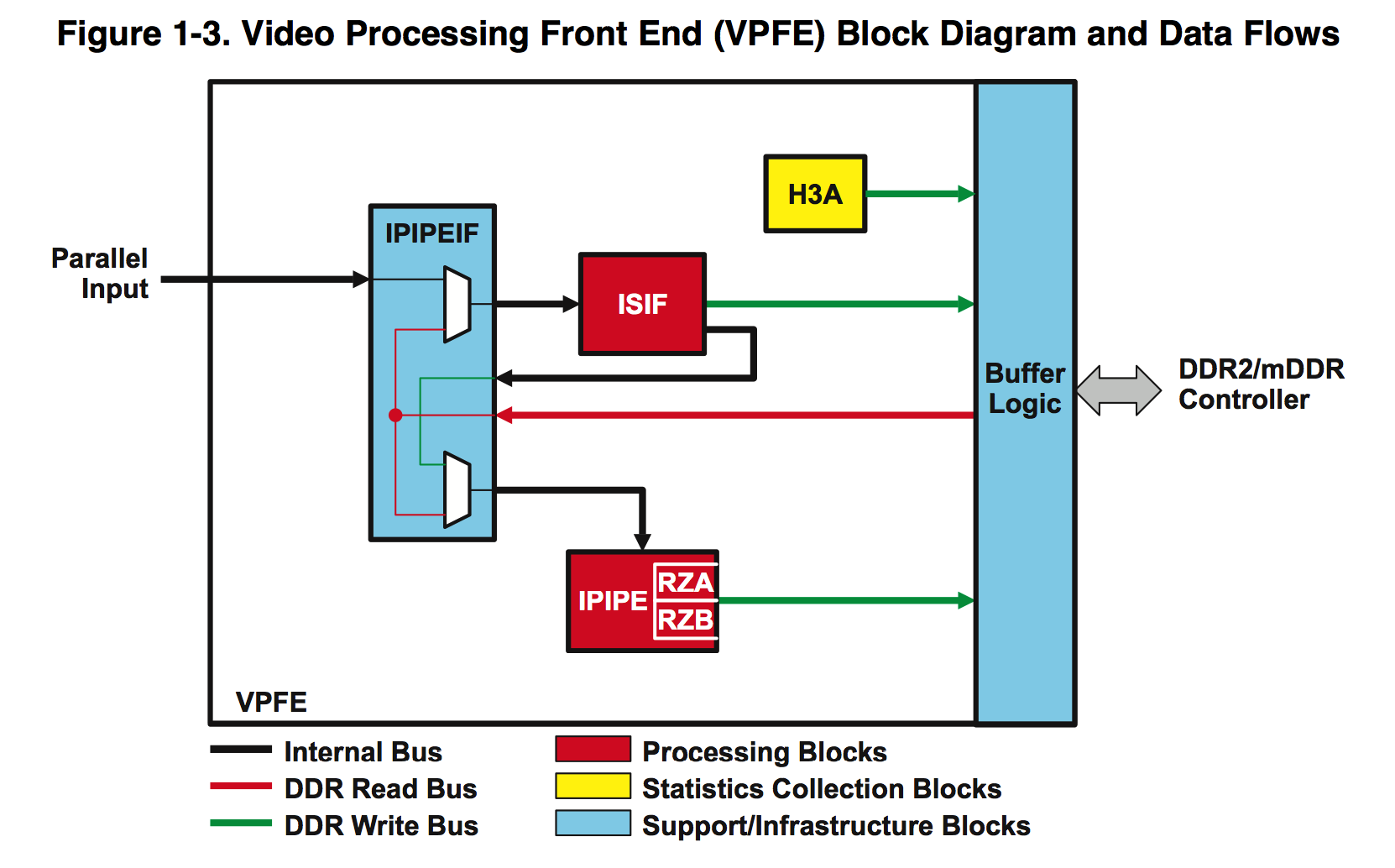

Let's look at it in more detail:


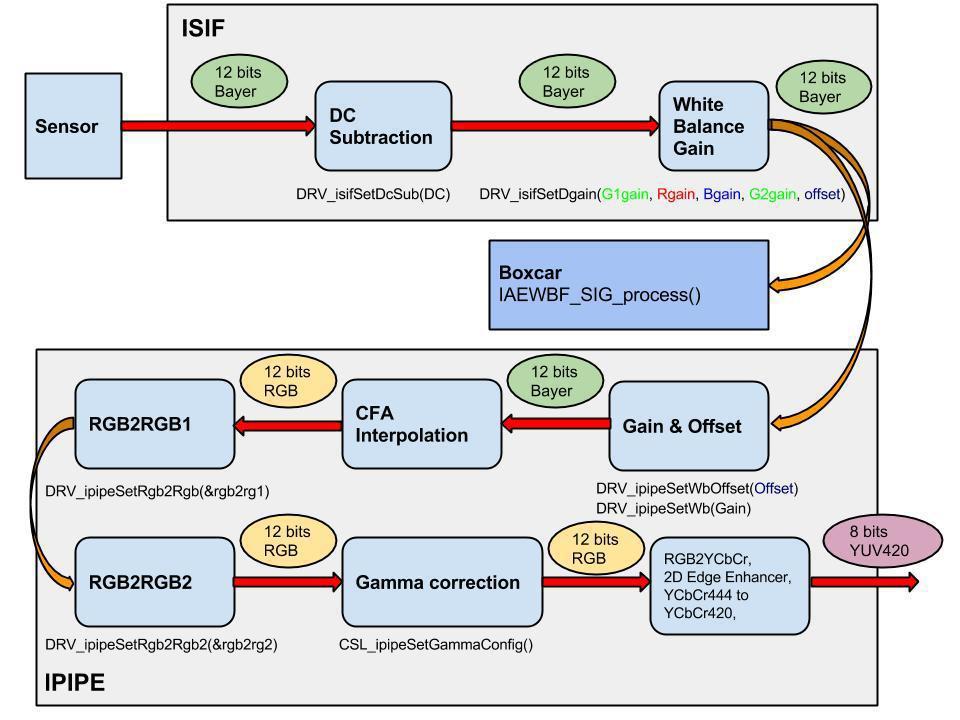

The IPIPEIF (Image Pipe Interface) input receives a raw 12-bit Bayer image from the sensor. This unit does little useful work by itself; it is mainly used to synchronize ISIF and IPIPE. It can also subtract a dark frame stored in memory from each new frame, but we do not use that feature.

The Image Sensor Interface (ISIF) can convert Bayer data to various formats, multiply, subtract and so on; see the documentation for the full list of capabilities. From this block, our algorithms use:

1. A subtractor of a constant value from all colors. In total darkness our two sensors output not 0 but a constant: 176 for SONY and 172 for Aptina. This must be taken into account for correct color balance, especially in the dark.

2. Gain and Offset, which we use for white balance. Each color can have its own multiplication factor from 0 to 7 + 511/512, in the format shared by all the hardware multipliers: OUT = (IN * G) >> 9, where IN is the input color value and G is the multiplier register, from 0 to 4095.
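To make the fixed-point format concrete, here is a minimal Python sketch of the two ISIF operations above. It is an illustration, not TI's implementation: the helper names `gain_to_reg` and `isif_pixel` are hypothetical, and the order (dark level first, then gain) and the clipping to 12 bits are our assumptions. Only the 176/172 dark levels and the `OUT = IN * G >> 9` format come from the text.

```python
# Sketch of ISIF dark-level subtraction and per-color gain (hypothetical helpers).
# Assumptions: 12-bit raw data, dark level subtracted before the gain,
# result saturated to 12 bits.

MAX_12BIT = 4095      # largest 12-bit sample value
GAIN_FRAC_BITS = 9    # G / 512, i.e. 9 fractional bits
ISIF_GAIN_MAX = 4095  # 7 + 511/512 in this format


def gain_to_reg(gain: float, reg_max: int = ISIF_GAIN_MAX) -> int:
    """Convert a floating-point gain into a register value with 9 fractional bits."""
    reg = round(gain * (1 << GAIN_FRAC_BITS))
    return max(0, min(reg, reg_max))


def isif_pixel(raw: int, black_level: int, gain_reg: int) -> int:
    """Subtract the sensor's dark level, then apply OUT = IN * G >> 9."""
    value = max(raw - black_level, 0)              # never wrap below zero in the dark
    value = (value * gain_reg) >> GAIN_FRAC_BITS   # fixed-point multiply
    return min(value, MAX_12BIT)                   # saturate to the 12-bit range


# Example: SONY dark level 176, red gain 1.5
red_gain = gain_to_reg(1.5)                 # 768
print(isif_pixel(1176, 176, red_gain))      # (1000 * 768) >> 9 = 1500
```

This also shows why the dark level matters: without the subtraction, a black pixel reading 176 with a red gain of 1.5 would become 264 while an unamplified green stayed at 176, tinting dark areas.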

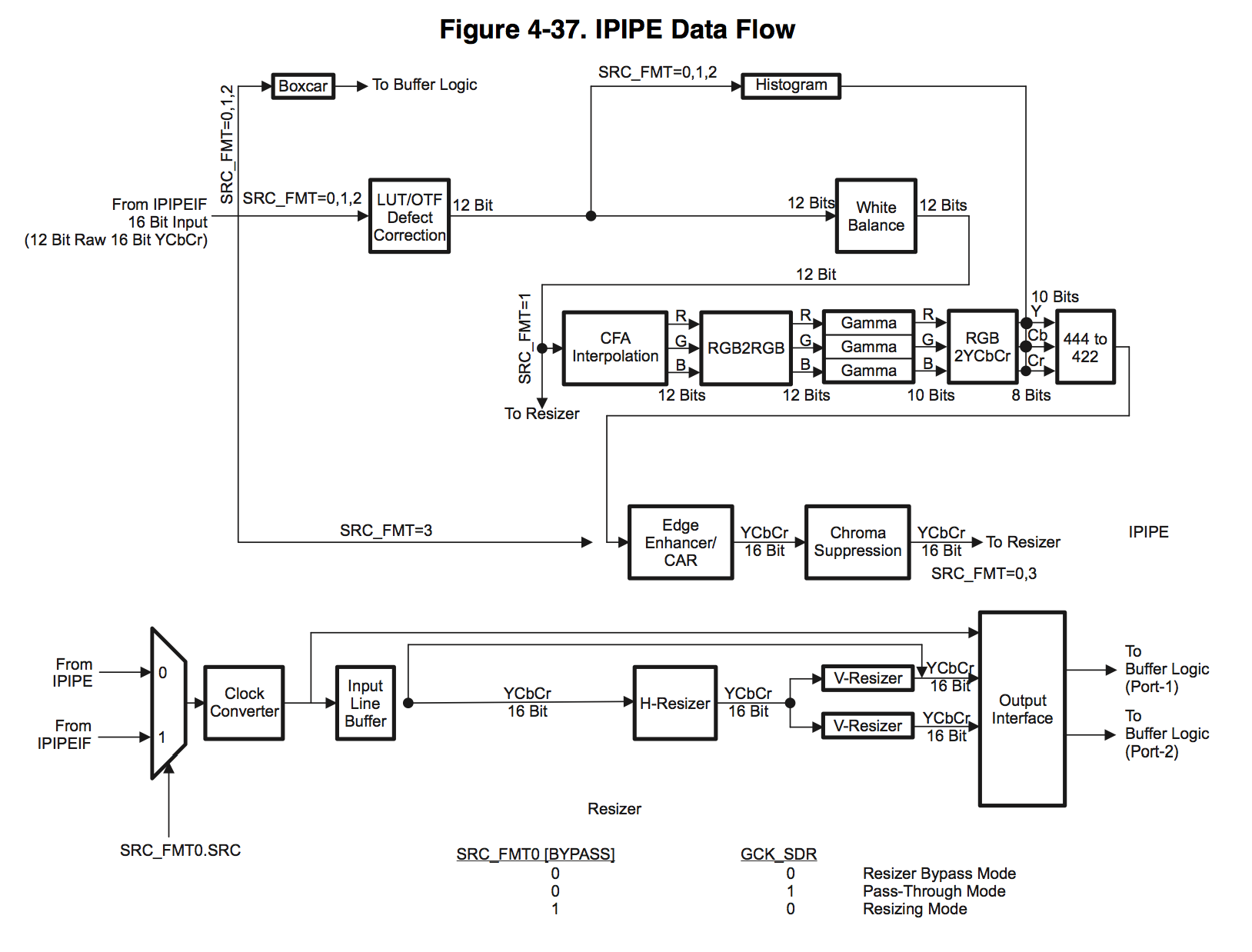

We use the next block, Image Pipe (IPIPE), for everything else.

Let's go through it in order:

1. Defect Correction can fix "broken" pixels, but it must know their coordinates in advance. To get them, each sensor has to be calibrated in the dark, which greatly complicates production. Sensors with defective pixels are rare, and when one does turn up, it is easier to replace it.

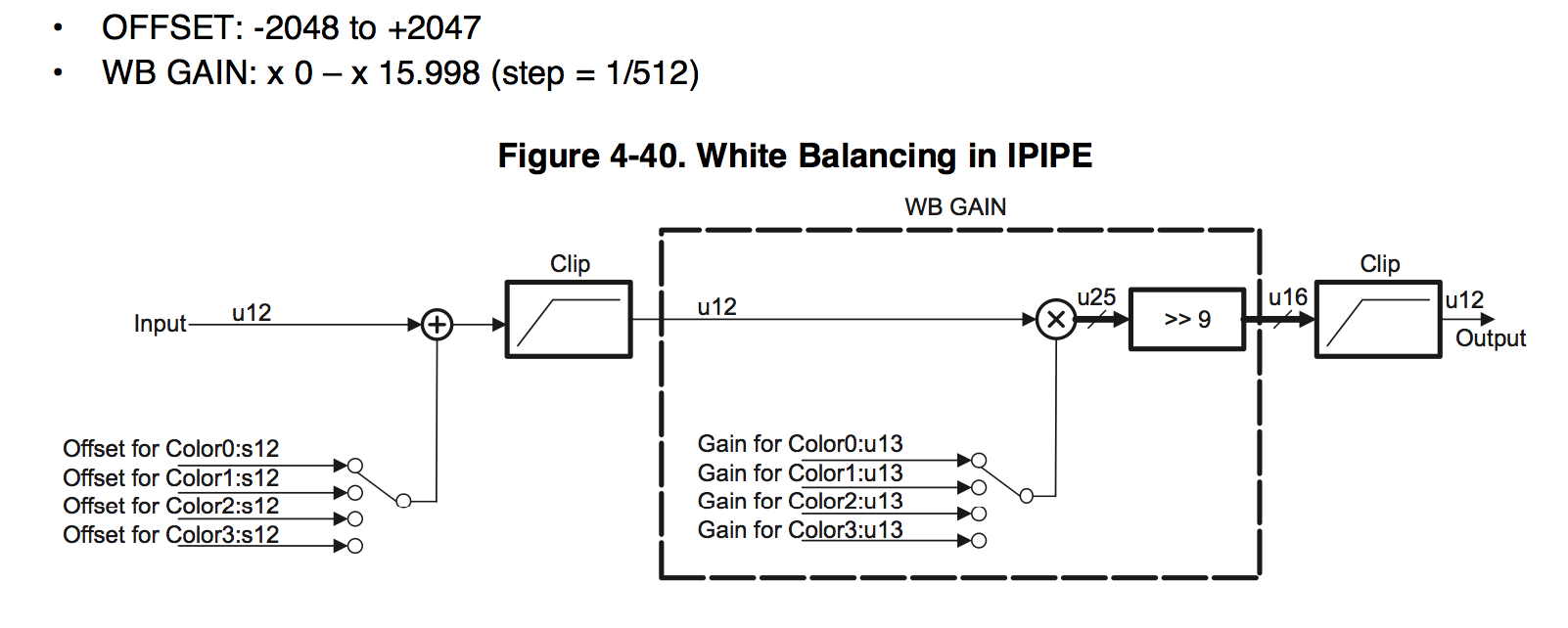

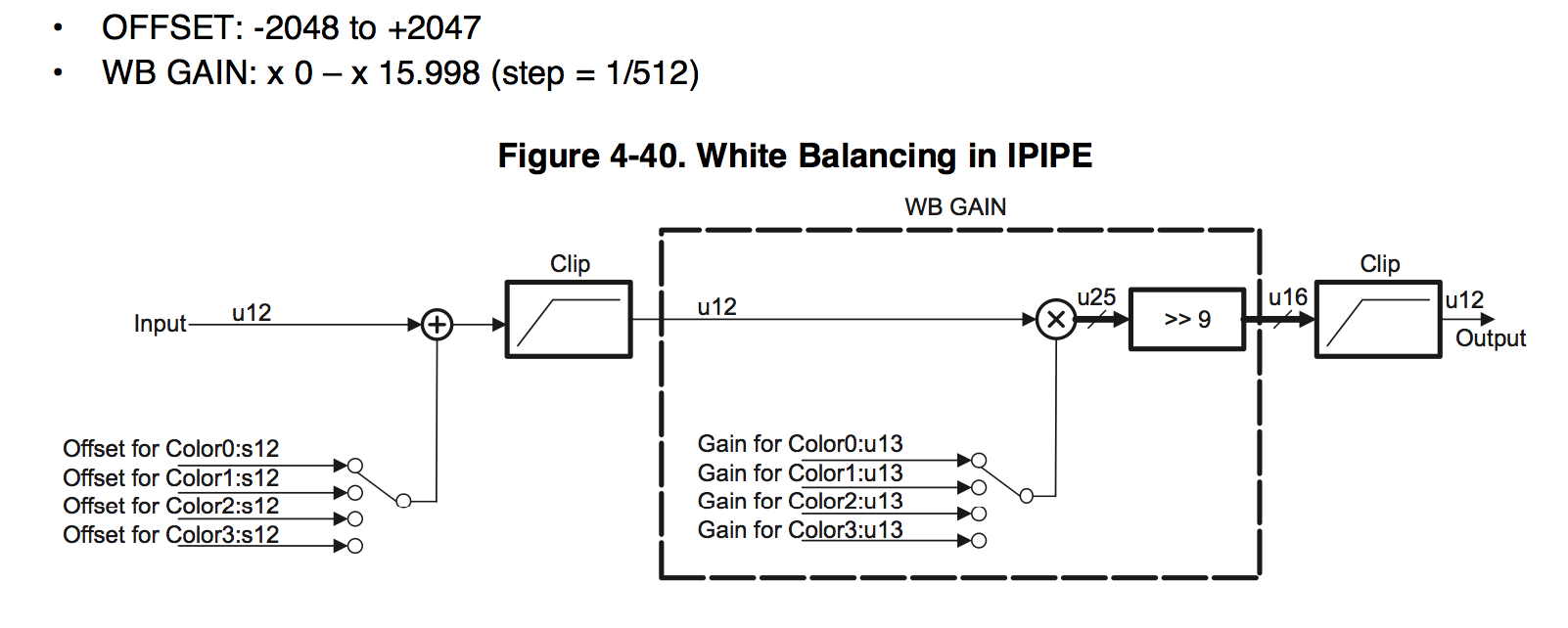

2. The next block, White Balance, consists of 3 multipliers and 3 subtractors, one per color. We use it as a global gain and a threshold subtractor, identical for all colors.

The format is the same as in the ISIF block, only the multiplication range is twice as large.

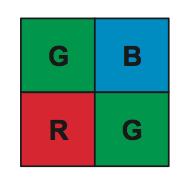
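Assuming the doubled range comes from one extra integer bit in the register while keeping the same 9 fractional bits (our reading, not confirmed by the text), the two gain ranges work out as:

$$G_{\mathrm{ISIF}} \in [0,\,4095] \;\Rightarrow\; \frac{G_{\mathrm{ISIF}}}{512} \in \left[0,\; 7 + \tfrac{511}{512}\right] \approx [0,\; 7.998]$$

$$G_{\mathrm{IPIPE}} \in [0,\,8191] \;\Rightarrow\; \frac{G_{\mathrm{IPIPE}}}{512} \in \left[0,\; 15 + \tfrac{511}{512}\right] \approx [0,\; 15.998]$$

Because the two stages are applied one after the other, their gains multiply: a per-color ISIF gain of 1.5 followed by a global IPIPE gain of 2 gives a total gain of 3, ignoring rounding.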

3. CFA Interpolation converts the Bayer image to RGB.

We have not been able to find out which interpolation algorithm it uses.

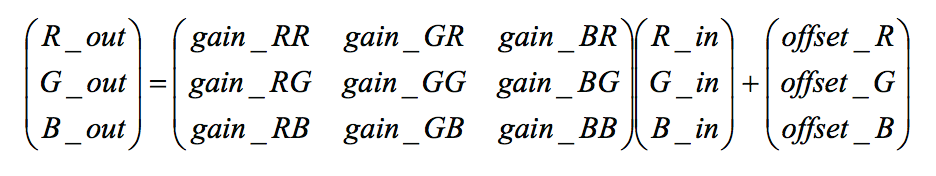

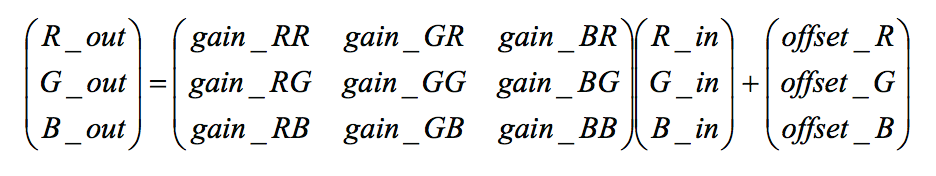
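Since the DM368's own interpolation algorithm is unknown, the sketch below uses plain bilinear interpolation instead, only to show what "CFA interpolation" means. The RGGB pixel order, the list-of-rows image format and the function names are assumptions; it requires an image of at least 2x2 pixels.

```python
# Bilinear CFA interpolation sketch (a stand-in: the DM368's algorithm is unknown).
# Assumptions: RGGB Bayer order, image at least 2x2, raw given as a list of rows.

def cfa_color(y: int, x: int) -> str:
    """Return the filter color at (y, x) for an RGGB mosaic."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"


def demosaic_bilinear(raw):
    """Give every pixel R, G and B by averaging same-colored samples in its 3x3 neighborhood."""
    h, w = len(raw), len(raw[0])
    rgb = []
    for y in range(h):
        row = []
        for x in range(w):
            sums = {"R": 0, "G": 0, "B": 0}
            counts = {"R": 0, "G": 0, "B": 0}
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:   # skip samples outside the frame
                        c = cfa_color(yy, xx)
                        sums[c] += raw[yy][xx]
                        counts[c] += 1
            own = cfa_color(y, x)
            # Keep the measured value for the pixel's own color, interpolate the other two.
            pixel = tuple(
                raw[y][x] if c == own else sums[c] // counts[c]
                for c in "RGB"
            )
            row.append(pixel)
        rgb.append(row)
    return rgb
```

Inside the frame this reduces to the textbook bilinear scheme: at a red site, green is the mean of the four horizontal and vertical neighbors and blue the mean of the four diagonal ones.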

4. The RGB2RGB blending module passes each RGB pixel through a matrix:

where each gain can range from -8 to +7.996 in steps of 1/256 ≈ 0.004, and each offset from -4096 to 4095.

It turned out that there are two of these blocks, one after the other, and our algorithms use both. Manufacturers provide such a table for each of their sensors. Honestly, I do not fully understand how it works and how the colors can be mixed, but the picture really does get better, as you will soon see for yourself.

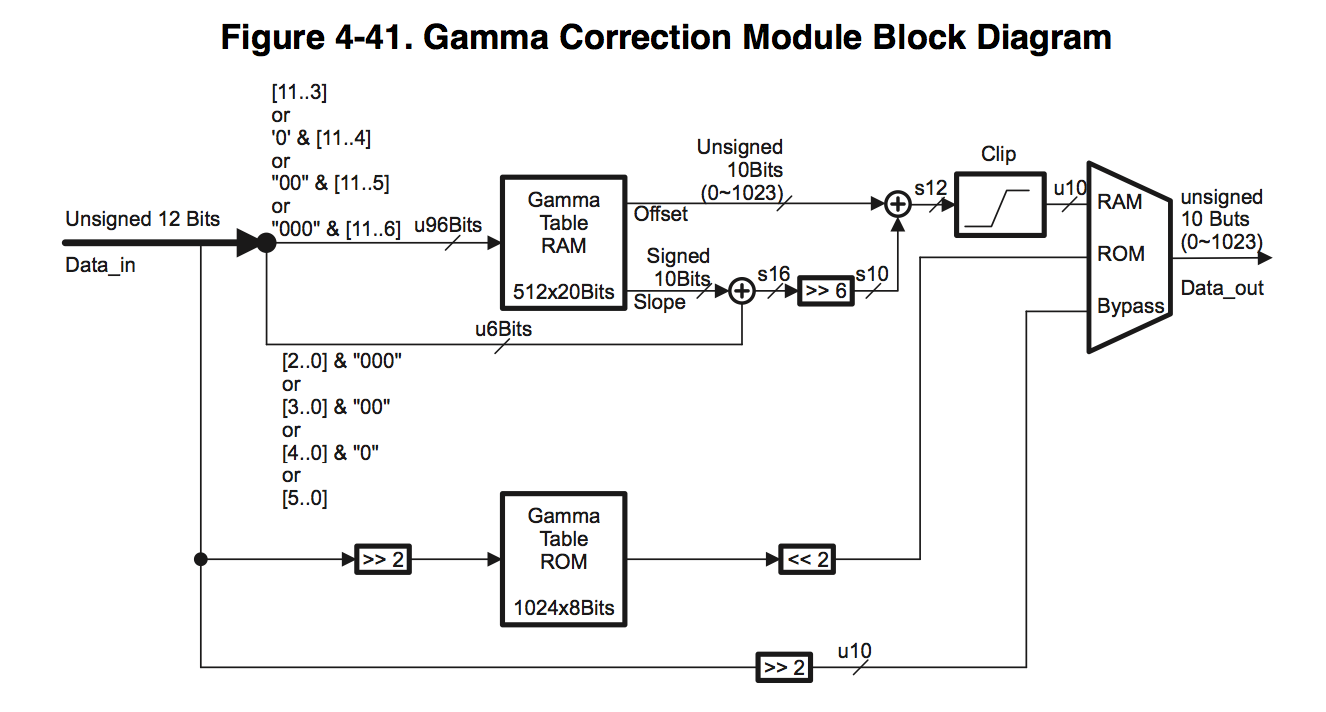

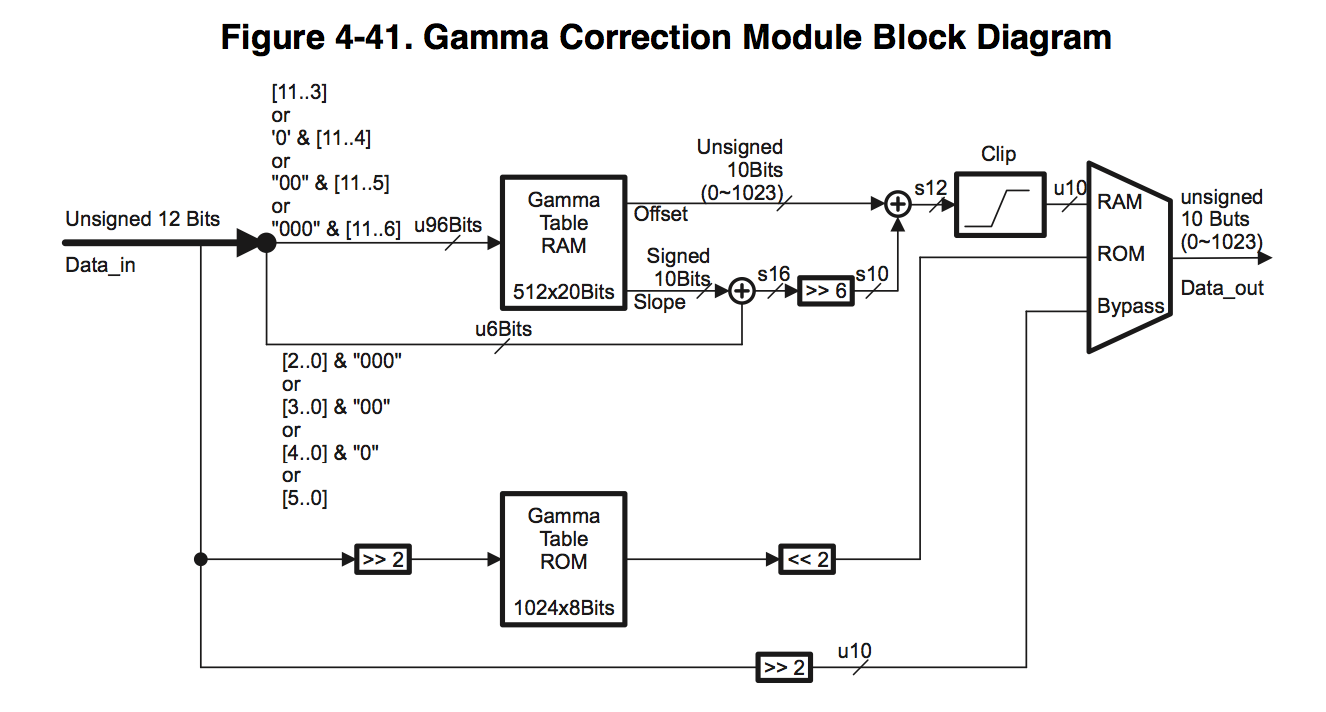

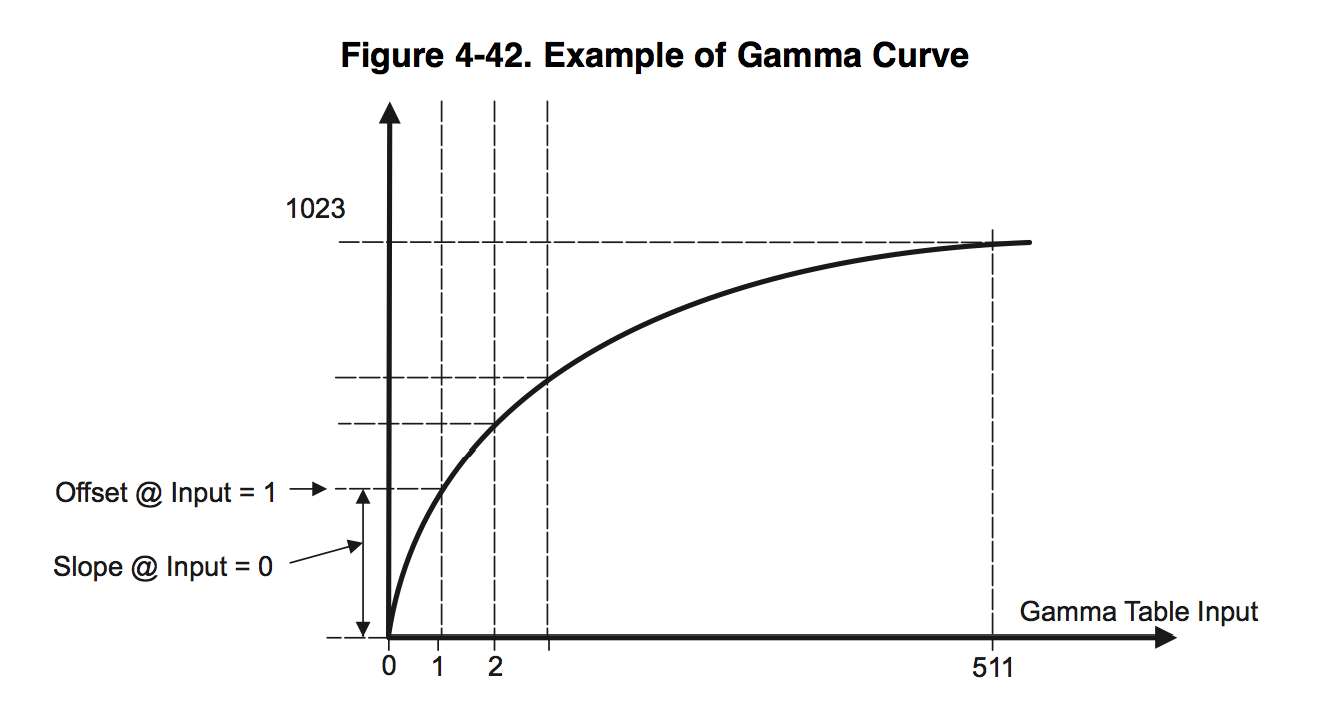
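A minimal sketch of one RGB2RGB stage may help with the "how do colors mix" question. Each output channel is a weighted sum of all three inputs plus an offset. The off-diagonal weights are what mix the colors: because a sensor's color filters overlap spectrally, some green light leaks into the red channel, and a negative coefficient subtracts it back out. We assume gains are stored in 1/256 units (so -2048..2047 covers -8..+7.996) and that the output is clipped to 12 bits; `coef_to_reg` and `rgb2rgb` are hypothetical names.

```python
# Sketch of one RGB2RGB stage in fixed point (hypothetical helper names).
# Assumptions: gains in 1/256 units (-2048..2047 -> -8..+7.996),
# offsets -4096..4095, output clipped to 12 bits.

def coef_to_reg(gain: float) -> int:
    """Quantize a matrix coefficient to the 1/256 step and the -8..+7.996 range."""
    return max(-2048, min(round(gain * 256), 2047))


def rgb2rgb(pixel, matrix, offsets, max_val=4095):
    """Each output channel is a weighted sum of all three inputs plus an offset."""
    out = []
    for row, offset in zip(matrix, offsets):
        acc = sum(coef * value for coef, value in zip(row, pixel))
        value = (acc >> 8) + offset               # drop the 8 fractional bits
        out.append(max(0, min(value, max_val)))   # clip to the valid range
    return tuple(out)


identity = [[256, 0, 0], [0, 256, 0], [0, 0, 256]]
# Red output = 1.5 * R - 0.5 * G, the other channels unchanged.
mixing = [[coef_to_reg(1.5), coef_to_reg(-0.5), 0], [0, 256, 0], [0, 0, 256]]
print(rgb2rgb((1000, 500, 0), mixing, (0, 0, 0)))   # (1250, 500, 0)
```

Two such stages in a row act like a single matrix equal to their product, apart from offsets, intermediate rounding and clipping.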

5. The gamma correction block consists of three LUTs (look-up tables), one per color.

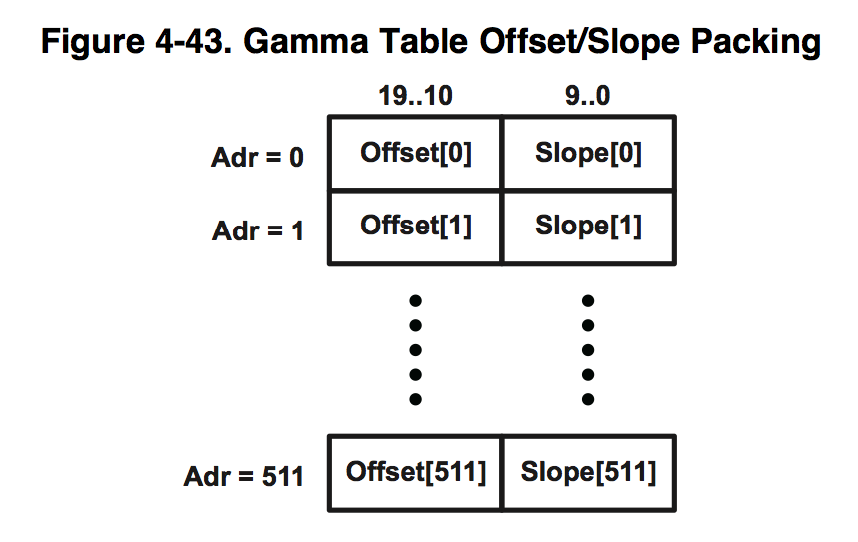

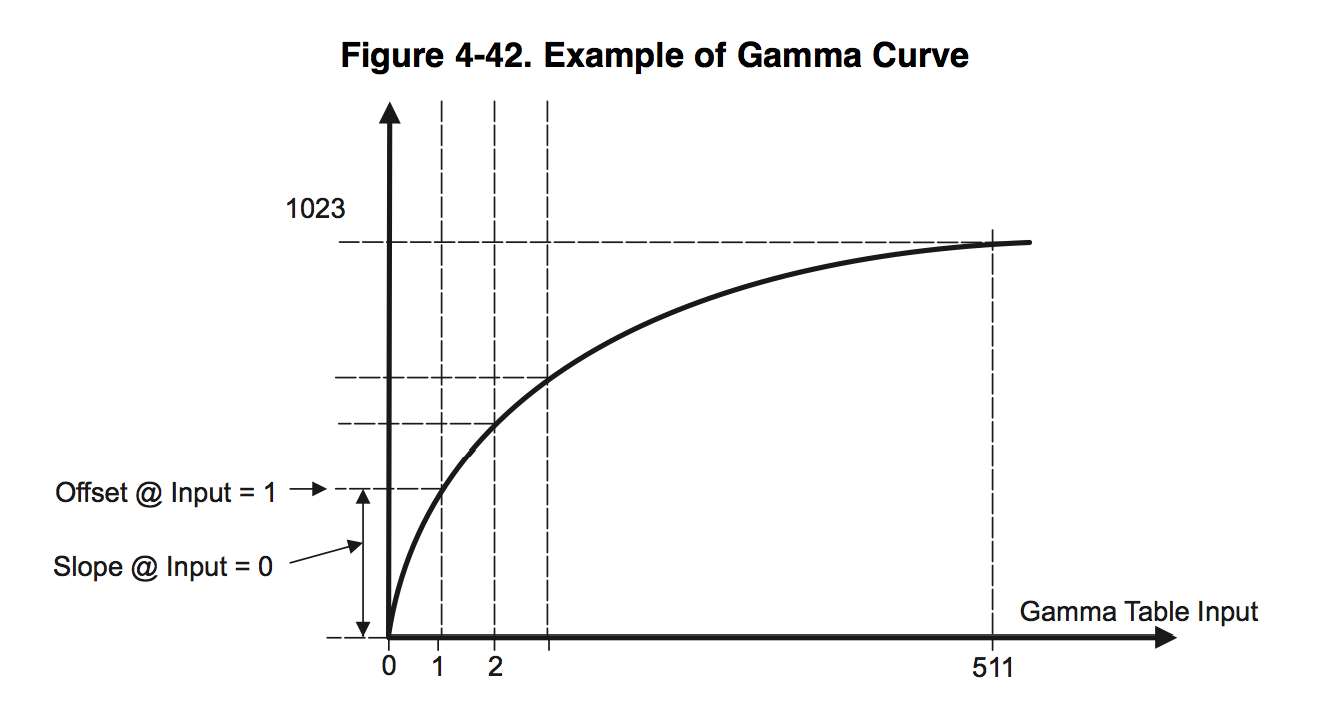

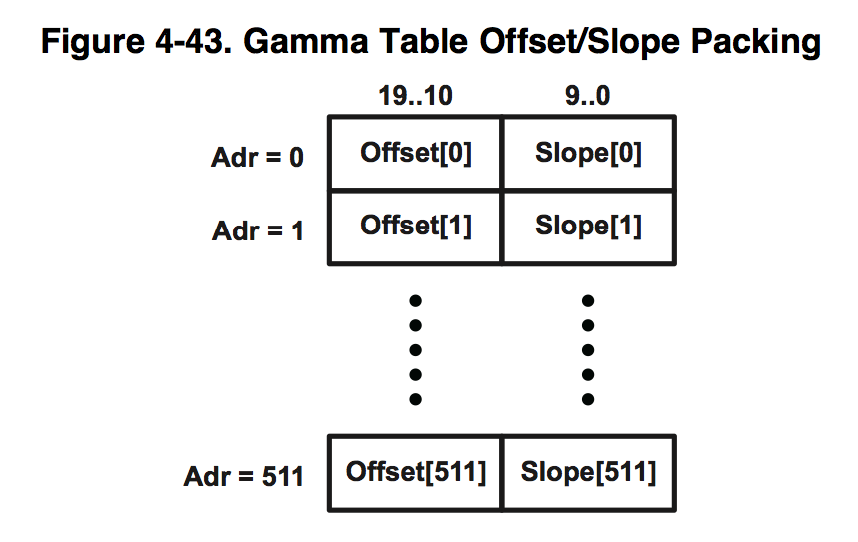

The table size can be chosen from 64 to 512 entries; we use 512. Each table entry consists of two 10-bit words, Offset and Slope.

The 12-bit input IN is split into two parts: HIGH = IN >> 3 (the upper 9 bits) and LOW = IN & 7 (the lower 3 bits). The output is computed as OUT = (Offset[HIGH] << 3) + (Slope[HIGH] >> 3) * LOW. In other words, the gamma curve is approximated by a piecewise linear function.
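The sketch below evaluates one LUT channel with exactly the formula quoted above and fills a table for it. The builder, the pure power-law curve (gamma 2.2, no linear toe) and the choice of a 0..4095 output scale are our assumptions; check the exact register bit alignment against the IPIPE documentation before relying on it. One handy sanity check of the formula: the table Offset[k] = k, Slope[k] = 8 reproduces the input exactly.

```python
# Sketch of one gamma LUT channel, evaluated with the formula quoted above.
# Assumptions: 512-entry table, 12-bit input; the builder and the pure
# power-law curve (gamma 2.2, output on the same 0..4095 scale) are ours.

def gamma_lookup(value: int, offset, slope) -> int:
    high = value >> 3          # upper 9 bits: segment index 0..511
    low = value & 7            # lower 3 bits: position inside the segment
    return (offset[high] << 3) + (slope[high] >> 3) * low


def build_gamma_table(gamma: float = 2.2, in_max: int = 4095):
    """Fill Offset/Slope so the formula interpolates linearly between knots."""
    def curve(x):
        return in_max * (x / in_max) ** (1.0 / gamma)

    # One knot per segment start (plus the end point), in units of 8 output codes.
    knots = [round(curve(min(8 * k, in_max)) / 8) for k in range(513)]
    offset = knots[:512]
    # Slope >> 3 must equal the step between neighboring knots.
    slope = [(knots[k + 1] - knots[k]) << 3 for k in range(512)]
    return offset, slope


offset, slope = build_gamma_table()
# For this curve, both words stay below 1024, so they fit the 10-bit fields.
print(max(offset) < 1024, max(slope) < 1024)
```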

6. The RGB2YCbCr unit converts from the RGB color space to YCbCr.

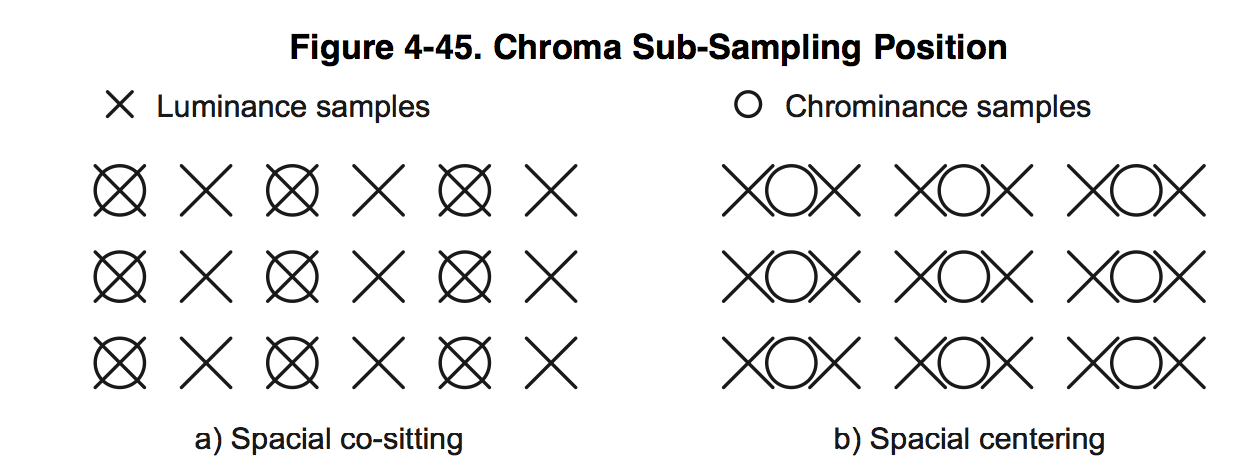

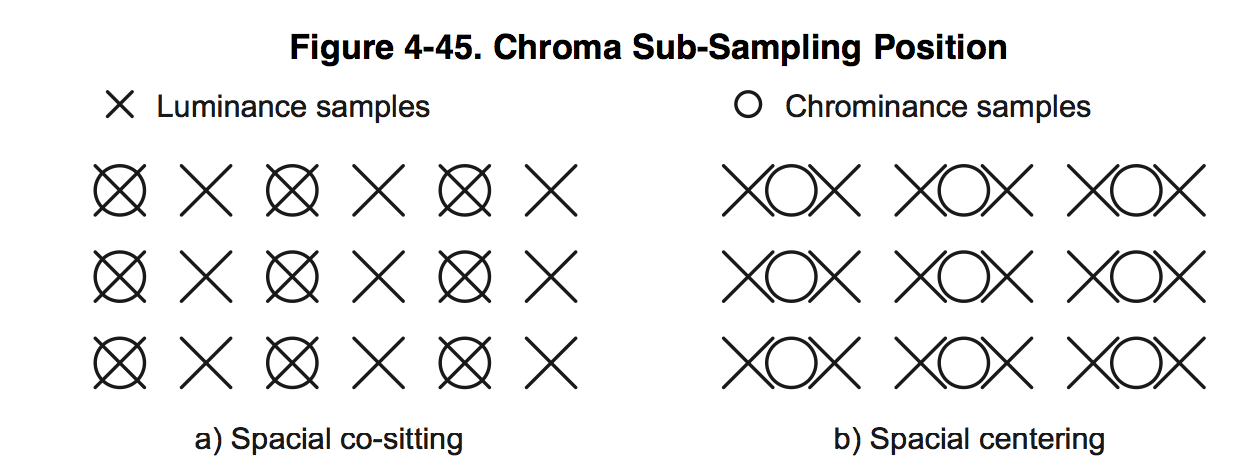
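As an example of what this conversion computes, here is a sketch using the common full-range BT.601 (JPEG/JFIF) coefficients on 8-bit values. These coefficients are our assumption; the matrix the DM368 is actually configured with may differ.

```python
# RGB -> YCbCr sketch using full-range BT.601 (JPEG/JFIF) coefficients.
# Assumption: 8-bit input and output; the DM368 may be configured differently.

def clamp8(v: float) -> int:
    """Round and clip to the 0..255 range."""
    return max(0, min(round(v), 255))


def rgb_to_ycbcr(r: int, g: int, b: int):
    y = 0.299 * r + 0.587 * g + 0.114 * b                # luma: weighted brightness
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b     # blue-difference chroma
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b     # red-difference chroma
    return clamp8(y), clamp8(cb), clamp8(cr)


print(rgb_to_ycbcr(255, 255, 255))   # (255, 128, 128): gray has neutral chroma
```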

7. The 4:2:2 conversion module halves the Cb and Cr color components horizontally.
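In 4:4:4 every pixel carries three samples (Y, Cb, Cr). After halving chroma horizontally, each pair of pixels carries two Y samples plus one Cb and one Cr, that is, two samples per pixel on average. A minimal sketch, assuming each output chroma sample is the rounded mean of two neighbors (the hardware may filter or simply drop samples instead); `halve_horizontally` is a hypothetical name.

```python
# 4:4:4 -> 4:2:2 sketch: luma is untouched, each chroma plane is halved horizontally.
# Assumption: each output sample is the rounded mean of two neighbors.

def halve_horizontally(plane):
    """Average horizontally adjacent pairs; an odd last column is kept as is."""
    out = []
    for row in plane:
        new_row = []
        for x in range(0, len(row), 2):
            pair = row[x:x + 2]
            # Rounded integer mean of one or two samples.
            new_row.append((sum(pair) + len(pair) // 2) // len(pair))
        out.append(new_row)
    return out


print(halve_horizontally([[10, 20, 30, 40]]))   # [[15, 35]]
```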

8. 2D Edge Enhancer is a two-dimensional 5x5 filter that increases contrast at edges in the image. It deserves a separate article, so here I will only show examples of its work:

2D EE disabled:

2D EE enabled:

It is a very useful block.
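TI's 2D EE algorithm is not described here, so the sketch below substitutes a classic unsharp mask with a 5x5 box blur. It only illustrates how a 5x5 filter can raise edge contrast: it adds back the difference between each pixel and its local mean, which overshoots on both sides of an edge. The 8-bit luma format, the edge replication, the `amount` parameter and the function names are assumptions.

```python
# Unsharp-mask sketch of a 5x5 edge enhancer (a stand-in, not TI's 2D EE).
# Assumptions: 8-bit luma as a list of rows, 5x5 box blur, edges replicated.

def box_blur_5x5(img):
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            total = 0
            for dy in range(-2, 3):
                for dx in range(-2, 3):
                    yy = min(max(y + dy, 0), h - 1)   # replicate border pixels
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
            row.append(total // 25)
        out.append(row)
    return out


def enhance_edges(img, amount=1.0):
    """Add back the high-frequency part: out = Y + amount * (Y - blur(Y))."""
    blurred = box_blur_5x5(img)
    return [
        [max(0, min(round(p + amount * (p - b)), 255)) for p, b in zip(row, brow)]
        for row, brow in zip(img, blurred)
    ]


step = [[50] * 5 + [200] * 5 for _ in range(5)]
print(enhance_edges(step)[2])   # darker just left of the edge, brighter just right
```

Flat areas pass through unchanged, because there the pixel equals its local mean.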

9. The Resizer scales the picture down vertically and horizontally for the second and third streams, and also converts YCbCr 4:2:2 to YCbCr 4:2:0 for the encoder.
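The 4:2:2 to 4:2:0 step halves chroma vertically as well. For an 8-bit 1920x1080 frame, that leaves two 960x540 chroma planes, so the frame takes 1920 * 1080 * 1.5 = 3,110,400 bytes. A minimal sketch, assuming the Resizer averages vertical pairs (it may use a different filter); `halve_vertically` is a hypothetical name.

```python
# 4:2:2 -> 4:2:0 sketch: halve a chroma plane vertically.
# Assumption: each output sample is the rounded mean of two vertically adjacent rows.

def halve_vertically(plane):
    """Average vertically adjacent rows; an odd last row is kept as is."""
    out = []
    for y in range(0, len(plane), 2):
        rows = plane[y:y + 2]
        n = len(rows)
        # zip(*rows) walks the columns of the one or two rows taken.
        out.append([(sum(col) + n // 2) // n for col in zip(*rows)])
    return out


print(halve_vertically([[10, 20], [30, 41]]))   # [[20, 31]]
```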

Separate from the main video processing path is the H3A statistics block, which all the algorithms rely on: the exposure, gains and offsets are set from the data it provides.

1. Statistics for exposure and color balance are collected by dividing the image into cells, up to 56 horizontally and 128 vertically. For each cell you can get the sum of all pixels of each color, as well as the minimum and maximum values.

2. The autofocus block also divides the picture into cells, up to 12 horizontally and 128 vertically, and returns the maximum focus value for each cell.
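The AE/AWB statistics can be pictured as follows for a single color plane. This is a sketch, not the H3A's register interface: `cell_stats` is a hypothetical name, and we assume the plane divides evenly into cells. The per-cell sum divided by the cell's pixel count gives the mean brightness that an auto-exposure loop typically compares with a target; ratios of the per-color sums are the usual input for white balance.

```python
# Sketch of H3A-style AE/AWB statistics for one color plane.
# Assumptions: the plane divides evenly into cells_x x cells_y cells
# (up to 56 x 128 on the DM368 per the text); the helper name is hypothetical.

def cell_stats(plane, cells_x, cells_y):
    """Return a cells_y x cells_x grid of (sum, min, max) tuples."""
    h, w = len(plane), len(plane[0])
    ch, cw = h // cells_y, w // cells_x          # cell size in pixels
    grid = []
    for cy in range(cells_y):
        row = []
        for cx in range(cells_x):
            values = [
                plane[y][x]
                for y in range(cy * ch, (cy + 1) * ch)
                for x in range(cx * cw, (cx + 1) * cw)
            ]
            row.append((sum(values), min(values), max(values)))
        grid.append(row)
    return grid


plane = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
print(cell_stats(plane, 2, 2)[0][0])   # (14, 1, 6): pixels 1, 2, 5, 6
```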

How this focus value is computed, however, is not explained. Studying the software did not clarify much: we only learned that it is some kind of digital filter with adjustable coefficients and thresholds. That did not help much, and autofocus did not work stably.
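For intuition about what such a focus value measures, here is a contrast-based sketch: run a high-pass filter along each row and keep the largest absolute response in each cell. A sharp edge produces a strong response and a blurred one a weak response, so autofocus typically moves the lens to maximize it. The `[-1, 2, -1]` kernel, the absolute value and the even cell split are our assumptions, not the H3A's actual filter.

```python
# Focus-value sketch: per-cell maximum of a horizontal high-pass response.
# Assumptions: the [-1, 2, -1] kernel, absolute value and even cell split are ours;
# the real H3A filter has configurable coefficients and thresholds.

KERNEL = (-1, 2, -1)


def focus_values(plane, cells_x, cells_y):
    h, w = len(plane), len(plane[0])
    ch, cw = h // cells_y, w // cells_x
    grid = [[0] * cells_x for _ in range(cells_y)]
    for y in range(cells_y * ch):
        for x in range(1, cells_x * cw - 1):      # skip the outermost columns
            response = abs(sum(k * plane[y][x + i - 1] for i, k in enumerate(KERNEL)))
            cy, cx = y // ch, x // cw
            grid[cy][cx] = max(grid[cy][cx], response)
    return grid


sharp = [[0] * 4 + [100] * 4 for _ in range(2)]
blurred = [[0, 0, 0, 25, 50, 75, 100, 100] for _ in range(2)]
print(focus_values(sharp, 1, 1), focus_values(blurred, 1, 1))   # [[100]] [[25]]
```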

So why did we take this on, and what was wrong with the existing algorithms? Their main problem is that they do not use the full dynamic range.

An example of the existing algorithm in cloudy weather:

In the mornings and evenings the white balance usually drifts:

And here is an example with our algorithm:

To make everything work well with different sensors, we decided not to patch a pile of other people's software but to write our own from scratch. Another goal was to make the algorithm as easy as possible to port to other platforms. Luckily, the DM368 has a very useful hardware unit, the so-called Boxcar, which is nothing more than an averager over 8x8 or 16x16 blocks.

In 16x16 mode, then, the Boxcar outputs a raw image reduced 16 times along each axis: for a 1920x1080 input, the statistics are 120x68.
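The 120x68 figure follows from 1920 / 16 = 120 and 1080 / 16 = 67.5. The 68 rows suggest that the last, partial row of blocks is still reported, which this sketch models by averaging partial tiles over the pixels they contain. That edge handling, and the names `boxcar` and `boxcar_shape`, are our assumptions. Either way, 120 * 68 = 8160 values replace 2,073,600 pixels, about 254 times less data for the 3A algorithms.

```python
# Boxcar sketch: average a raw plane over block x block tiles.
# Assumption: partial tiles at the right/bottom edge are averaged over the
# pixels they contain, which is one way to get 68 rows from 1080 / 16 = 67.5.

def boxcar_shape(width, height, block=16):
    """Output size in blocks, rounding partial tiles up (ceiling division)."""
    return -(-width // block), -(-height // block)


def boxcar(plane, block=16):
    h, w = len(plane), len(plane[0])
    out_w, out_h = boxcar_shape(w, h, block)
    out = []
    for by in range(out_h):
        row = []
        for bx in range(out_w):
            tile = [
                plane[y][x]
                for y in range(by * block, min((by + 1) * block, h))
                for x in range(bx * block, min((bx + 1) * block, w))
            ]
            row.append(sum(tile) // len(tile))   # integer mean of the tile
        out.append(row)
    return out


print(boxcar_shape(1920, 1080))   # (120, 68)
```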

We used this block as our source of statistics.

Let us draw a block diagram of how our algorithm works and then move on to the code.

Live streams from our cameras and others can be viewed here.

To be continued…

Source: https://habr.com/ru/post/217317/
