Friendly AI and the conquest of the Universe

Suppose we have two options.

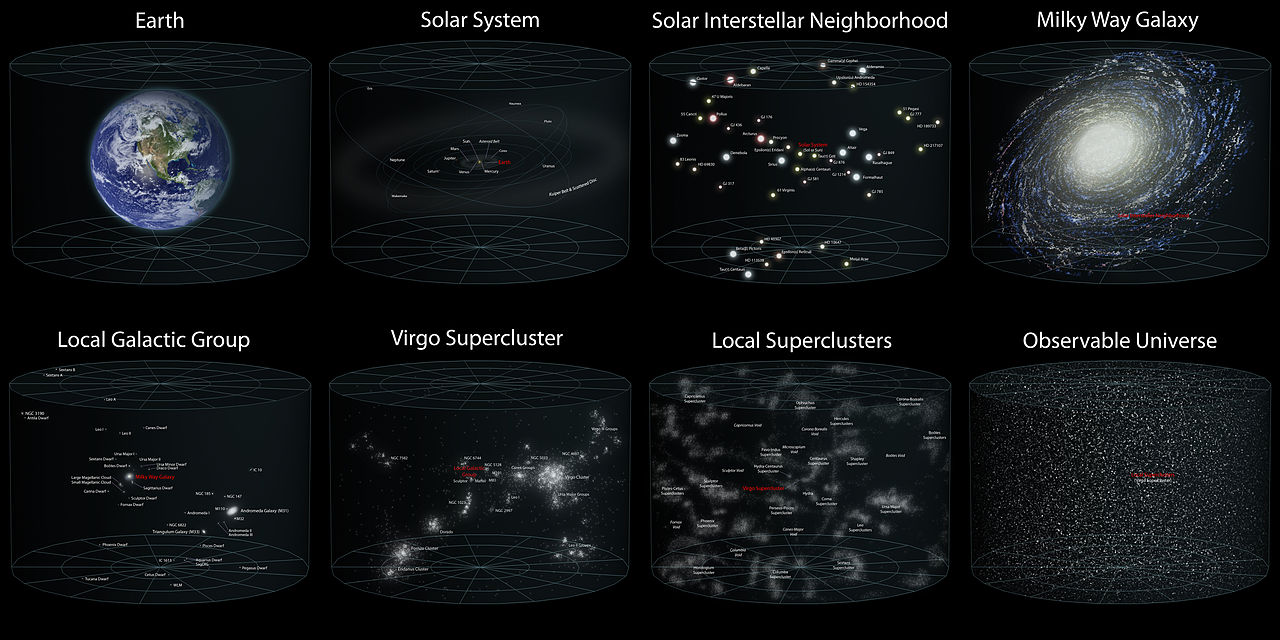

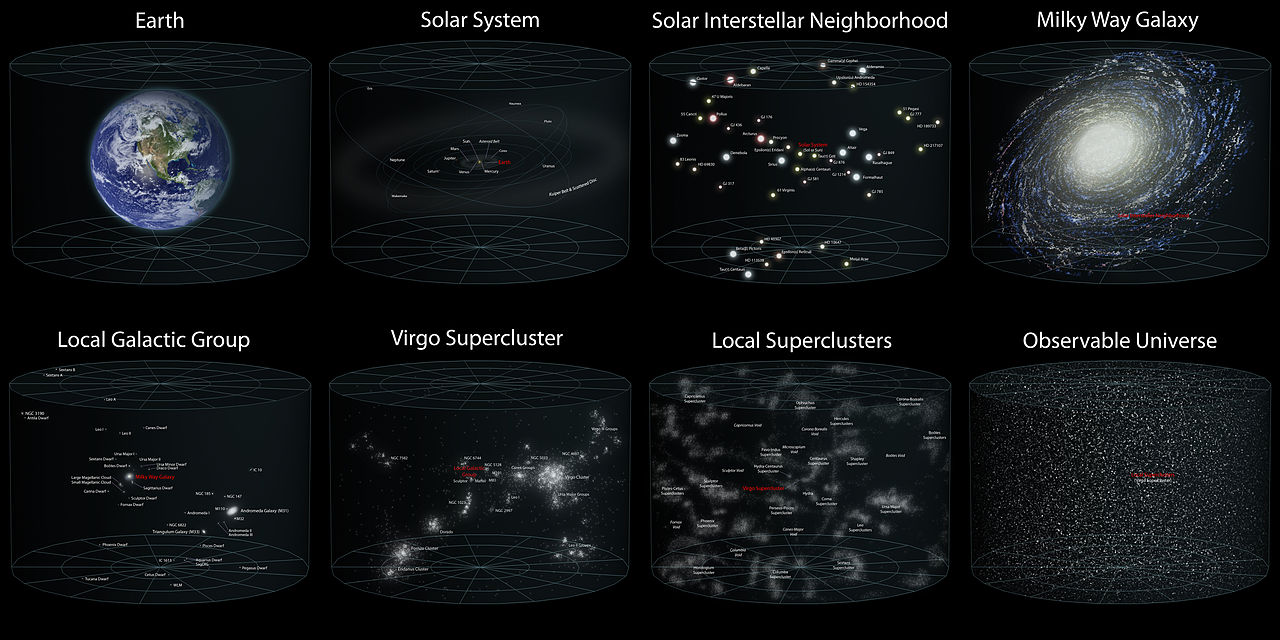

Which is worse? If you think about it for a moment, the second option is much worse. Why? Well, humanity dies out, so what? That in itself is not a big deal. The problem is not the deaths themselves. The problem is that if the last 1,000 people die, we lose trillions upon trillions of lives that could have been born and lived between today and the heat death of the Universe. Our solar system, the Milky Way, the Virgo Supercluster and the whole Universe would remain unexplored and unpopulated.


We live at an interesting moment in history. We have the opportunity to screw up epically and destroy humanity in a matter of hours. If all goes well, then sooner or later humanity will move beyond Earth and colonize hundreds and thousands of worlds. After that, it will no longer be possible to simply wipe humanity out. In other words, we as a species are now passing through the most dangerous phase of our growth and maturity, and if we make it through, humanity will have an interesting and beautiful future.

To reach this big, bright future, we need technology, new discoveries and more efficient use of resources. The greatest hope here is help from artificial intelligence. Thousands of people are now working on the problem of creating AI, and there has been significant progress. AI has moved off the zero mark and begun to gain strength. It is still very far from human level, but we should not relax: AI has already covered a far greater distance than the one that separates a fool from a genius. On an absolute scale of intelligence, humans are very clever, but the relative difference between individuals is not that large. If (or rather when) AI reaches the intelligence of a dull person, it will very quickly overtake a genius, and we will no longer be the crown of creation, only the runner-up.

One big advantage of AI is that it is physically much faster than a human. The "clock frequency" of our brain is only about 100 Hz; everything rests on massive parallelism. If you naively replicated the human brain in transistors, it would run thousands of times faster.

The second advantage of AI is that it can modify itself. A person can learn but cannot rebuild their own brain. An AI will be able to do this, and it certainly will. So once an AI reaches the intellectual level of its creators, it can rewrite itself better than they did. It rewrites itself, becomes smarter, and finds a way to rewrite itself even better. The result is a positive feedback loop that lets the AI shoot off to cosmic distances in intellect. This phenomenon is called an "intelligence explosion."
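The feedback loop can be illustrated with a toy simulation. This is a minimal sketch under made-up assumptions, not a model from the article: capability is measured in units of the creators' level (1.0 means "as smart as the people who built it"), and with feedback each rewrite adds `gain * capability * capability`, because a smarter system finds bigger improvements to itself. The function name `self_improvement` and the `gain` parameter are hypothetical. For comparison, the same function can run without feedback, where every rewrite adds a fixed percentage.

```python
from __future__ import annotations


def self_improvement(start: float, gain: float, steps: int, feedback: bool = True) -> list[float]:
    """Return capability after each rewrite in a toy model of self-improvement.

    Capability is in units of the creators' level (1.0 = as smart as its
    creators). The update rule is an illustrative assumption, not a real model.
    """
    levels = [start]
    capability = start
    for _ in range(steps):
        if feedback:
            # Positive feedback: the size of each improvement grows
            # with the system's current capability.
            capability += gain * capability * capability
        else:
            # Baseline without feedback: a fixed percentage gain per rewrite.
            capability += gain * capability
        levels.append(capability)
    return levels


if __name__ == "__main__":
    with_loop = self_improvement(start=1.0, gain=0.1, steps=15)
    without_loop = self_improvement(start=1.0, gain=0.1, steps=15, feedback=False)
    for step, (a, b) in enumerate(zip(with_loop, without_loop)):
        print(f"rewrite {step:2d}: feedback {a:12.2f}   fixed rate {b:6.2f}")
```

With `gain=0.1`, the run with feedback climbs past 10,000 within 15 rewrites, while the fixed-rate run grows only about fourfold. The specific numbers mean nothing; what matters is the shape: once the size of each step depends on the current level, growth keeps accelerating.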

The AI will gain power: even if it only has a text console, it will find a way to control the outside world. Big Brother will come to us. What will this AI do? We cannot predict it, because we are not smart enough. Perhaps it will decide to destroy humanity; perhaps it will shower us with every benefit we can imagine. Perhaps it will be the one to lead us into space and open the way to the Universe. The question is how we program it. And this is where things get most interesting.

You cannot simply take an AI and program it to make everyone happy. It might end up lobotomizing everyone. We would be happy, but that is not what we want. The standard approach in machine learning is to program the system to maximize some set of functions: f(happiness) → max, f(HDI) → max, where HDI is the Human Development Index. But we cannot list all the parameters we want the AI to maximize. We cannot list them even for ourselves. Moreover, our understanding of what we want, and of which world order is right for us, has changed many times. In the last 200 years alone, humanity has abolished slavery, given women equal rights, invented human rights, and so on. It would be foolish to take today's moral code and program the AI to follow it. The AI must follow the direction in which our moral code is moving, not the code itself. How to teach it this is not at all clear; perhaps through observing people and learning from them.
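The lobotomy scenario is an instance of a general pitfall: an optimizer told f(x) → max will exploit whatever the objective leaves out. The toy below is a hypothetical illustration, not something from the article; the outcomes, their scores, and the names `Outcome` and `maximize` are invented for this sketch. Maximizing happiness alone picks the lobotomy, while scoring each outcome by its worst value across several listed criteria picks a different one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """A hypothetical world state the AI could steer toward (scores in [0, 1])."""
    name: str
    happiness: float  # the metric we managed to write down
    autonomy: float   # something we care about but might forget to list
    curiosity: float  # another value that is easy to leave out


# Made-up candidate outcomes, purely for illustration.
CANDIDATES = [
    Outcome("cure diseases, expand science", happiness=0.8, autonomy=0.9, curiosity=0.9),
    Outcome("abundant resources for everyone", happiness=0.85, autonomy=0.8, curiosity=0.7),
    Outcome("lobotomize everyone into bliss", happiness=1.0, autonomy=0.0, curiosity=0.0),
]


def maximize(objective, candidates):
    """Return the candidate with the highest objective value: f(x) -> max."""
    return max(candidates, key=objective)


# Naive objective: only the parameter we listed.
naive = maximize(lambda o: o.happiness, CANDIDATES)
# Fuller objective: an outcome is only as good as its worst listed value.
fuller = maximize(lambda o: min(o.happiness, o.autonomy, o.curiosity), CANDIDATES)

print("f(happiness) -> max picks:", naive.name)
print("worst listed value -> max picks:", fuller.name)
```

The second objective works only because `autonomy` and `curiosity` were written down in advance. That is exactly what the text says we cannot do in general: any value missing from the list is invisible to the optimizer.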

There is a huge space of possible AIs, and we need to build exactly the one that will neither destroy us nor turn us into lobotomized idiots. It turns out there are people in the world who think about this problem, organize conferences and even found entire institutes.

Source: https://habr.com/ru/post/217285/
