Bot for the arcade. Part 2: connecting OpenCV

Introduction

We continue the series. For image processing, we take the widely used OpenCV library. It is unmanaged (not native to .NET), so we connect it through the OpenCvSharp wrapper.

We need OpenCV to apply various transformations to images, looking for one that separates the background and the shadows from the objects, and the objects from each other. That is the goal we will work toward today.


Topics covered: choosing an image-processing library, choosing a wrapper for working with OpenCV, basic OpenCV functions, selecting moving objects, the HSV color model.

Why OpenCV?

There are other good image-processing libraries; OpenCV is not the only option. When programming for .NET, the Accord.NET library (and its predecessor, AForge.NET) also deserves attention. Both libraries are also free, but, unlike OpenCV, they are managed (native to the .NET platform).

When developing a product for sale rather than “for fun”, I would lean toward managed libraries. Choosing good managed libraries does not hurt performance, but it greatly simplifies deployment, portability, and later maintenance. In startups and “for fun” projects, however, a large community matters more than easier maintenance down the road. And that brings us back to OpenCV, which is much more widely known.

Big community

When choosing a library yourself, it makes sense to favor those with a large, active community. A large number of users means the library is well tested, has few rough edges, comes with varied documentation, and makes it easy to google the questions that come up along the way.

OpenCV fully fits this description. There is a book about it, Learning OpenCV (frankly, I have not read it yet, but I intend to fix that soon), there is its Russian translation at locv.ru (it is not opening for me right now), there is online documentation, and there are plenty of answered questions on stackoverflow.

All of this together gives a quick start, enabling a “Fire! Ready! Aim!” approach (where the library is studied as the work progresses) instead of the classic “Ready! Aim! Fire!” (where considerable time is first spent getting familiar with how the library is organized).

Googling questions

Most of the tasks were solved by quick googling: type the keywords of the question into Google, and the first links already contain the answer. When searching for answers yourself, pay attention to which keywords are commonly used to describe the problem. Google does not care about the elegance or grammatical correctness of the question; it only cares about the keywords it uses to pick the results.

Examples of questions solved this way:

- extract the R component from an image. Query: opencv get single channel, and the first link says that this is done with the Split function

- find the differences between images. Query: opencv difference images, and the example in the first answer says that the absdiff function does this. If you search for compare instead of difference, Google shows completely different pages with more general answers that recommend histogram comparison and the like. This shows how much the choice of keywords matters when searching for an answer.

Choosing .net-wrapper for OpenCV

The library has been chosen; what remains is to make it work with C#. This problem has already been solved for us, and again we only have to choose between the available options. There are two common wrappers: Emgu CV and OpenCvSharp. Emgu CV is older and more established, OpenCvSharp is more modern. I settled on OpenCvSharp, won over by the author's statement that IDisposable is supported. This means the author did not just port the structures and functions one-to-one from C/C++ to C#, but also polished them to make them more convenient to use in idiomatic C# code.

Connecting OpenCvSharp to the project

OpenCvSharp is connected to the project in the standard way, without any special tricks. There is a small tutorial from the author, and OpenCvSharp can also be installed via NuGet.

Basic functions

OpenCV has many functions for working with images. We will dwell only on the basic functions used for the task of selecting objects in an image. OpenCV offers two API styles: C style and C++ style. To keep the code simpler, we will use the C++ style (or rather, its OpenCvSharp counterpart).

There are two main classes: Mat and Cv2. Both live in the OpenCvSharp.CPlusPlus namespace. Mat is the image itself, and Cv2 is a set of operations on images.

Functions:

```csharp
// load an image from a file
var mat = new Mat("test.bmp");
// save an image to a file
mat.ImWrite("out.bmp");
// convert to a bitmap
var bmp = mat.ToBitmap();
// convert from a bitmap (needs OpenCvSharp.Extensions)
var mat2 = new Mat(bmp.ToIplImage(), true);

// show the image in a window and wait for a key press
using (new Window("", mat))
{
    Cv2.WaitKey();
}

// convert the color space (for example, to grayscale)
Cv2.CvtColor(mat, dstMat, ColorConversion.RgbToGray);
// split into channels
Cv2.Split(mat, out mat_channels);
// merge channels back into one image
Cv2.Merge(mat_channels, mat);
// absolute difference of two images
Cv2.Absdiff(mat1, mat2, dstMat);
// threshold: pixels at or below the threshold (50) become 0, pixels above it become 255
Cv2.Threshold(mat, dstMat, 50, 255, OpenCvSharp.ThresholdType.Binary);

// draw a circle, a line, a rectangle outline, and a filled rectangle
mat.Circle(x, y, radius, new Scalar(b, g, r));
mat.Line(x1, y1, x2, y2, new CvScalar(b, g, r));
mat.Rectangle(new Rect(x, y, width, height), new Scalar(b, g, r));
mat.Rectangle(new Rect(x, y, width, height), new Scalar(b, g, r), -1);
// draw text
mat.PutText("test", new OpenCvSharp.CPlusPlus.Point(x, y), FontFace.HersheySimplex, 2, new Scalar(b, g, r));
```

OpenCV also has specialized functions for selecting objects (Structural Analysis and Shape Descriptors, Motion Analysis and Object Tracking, Feature Detection, Object Detection), but I could not squeeze a useful result out of them (I probably do need to read the book after all), so we will leave them for later.

Selection of objects

A simple way to select objects is to come up with a filter that separates the background from the objects and the objects from each other. Unfortunately, the image of the Zuma playing field is very colorful, and a simple brightness threshold does not work. Below are the original image, its grayscale version, and a staircase of different threshold levels. The last image shows that at every level the background merges with the balls: either both are present, or both are absent.

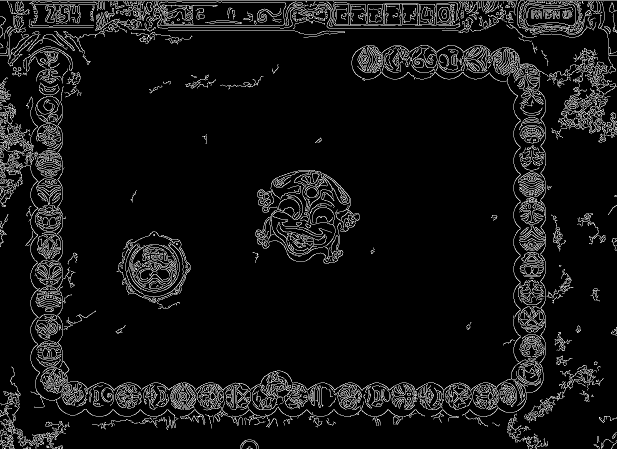
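Why a brightness threshold struggles on a colorful image can be sketched in a few lines of plain C#. This is only an illustration, not the article's code: the class and method names are hypothetical, the pixel colors are made up, and the weights are the standard luma weights used for RGB-to-gray conversion.

```csharp
using System;

static class GrayDemo
{
    // Standard luma weights for RGB -> gray: 0.299 R + 0.587 G + 0.114 B.
    public static byte ToGray(byte r, byte g, byte b)
    {
        return (byte)Math.Round(0.299 * r + 0.587 * g + 0.114 * b);
    }

    // Binary threshold: 255 if the value is above thresh, otherwise 0.
    public static byte Threshold(byte value, byte thresh)
    {
        return value > thresh ? (byte)255 : (byte)0;
    }
}
```

With these definitions, a dark red pixel (200, 0, 0) and a dark green pixel (0, 102, 0) both map to gray level 60, so no threshold value can tell them apart, even though they are clearly different colors.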

The stripes on the balls make selecting them much harder. Here, for example, is how the Canny function, which highlights the outlines of objects, reacts to them.

Using separate color components does not make life any easier.

Selection of moving objects

The idea behind selecting moving objects is simple: two frames are compared, and the pixels that have changed are the objects we are looking for. In practice everything is more complicated, and the devil, as always, is in the details...

Capturing a series of images

To capture a series of images, we add a little code to our bot. The bot keeps a history of the most recent frames and dumps them to disk when the space bar is pressed.

```csharp
var history = new List<Bitmap>();
for (var tick = 0; ; tick++)
{
    var bmp = GetScreenImage(gameScreenRect);
    // newest frame goes first
    history.Insert(0, bmp);
    const int maxHistoryLength = 10;
    if (history.Count > maxHistoryLength)
        history.RemoveRange(maxHistoryLength, history.Count - maxHistoryLength);

    if (Console.KeyAvailable)
    {
        var keyInfo = Console.ReadKey();
        if (keyInfo.Key == ConsoleKey.Spacebar)
        {
            // dump the whole history to disk: 0.png is the newest frame
            for (var i = 0; i < history.Count; ++i)
                history[i].Save(string.Format("{0}.png", i));
        }
        [..]
    }
    [..]
}
```

We launch it, press the key, and voila: we have two frames on hand.

Comparing adjacent frames

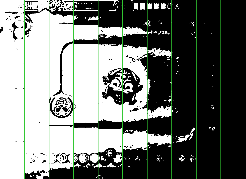

Subtract one image from the other... and, as the saying goes, “horses and men all mixed in a heap”. The balls turned into something “strange” (clearly visible in the full-size fragment), but that is fine: the main thing is that the balls are now separated from the background.
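The frame subtraction described here can be sketched without OpenCV on plain grayscale byte arrays. This is a simplified illustration under my own assumptions (hypothetical class and method names, one byte per pixel), not the code used to produce the images.

```csharp
using System;

static class FrameDiff
{
    // Per-pixel |a - b| for two grayscale frames of equal size.
    public static byte[] AbsDiff(byte[] a, byte[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Frames must have the same size.");
        var dst = new byte[a.Length];
        for (var i = 0; i < a.Length; ++i)
            dst[i] = (byte)Math.Abs(a[i] - b[i]);
        return dst;
    }

    // Pixels whose difference exceeds thresh are marked as changed (255).
    public static byte[] MotionMask(byte[] prev, byte[] cur, byte thresh)
    {
        var diff = AbsDiff(prev, cur);
        var mask = new byte[diff.Length];
        for (var i = 0; i < diff.Length; ++i)
            mask[i] = diff[i] > thresh ? (byte)255 : (byte)0;
        return mask;
    }
}
```

For example, if a bright pixel moves from index 2 to index 3 between `prev = {10, 10, 200, 10}` and `cur = {10, 12, 10, 200}`, then `MotionMask(prev, cur, 50)` marks both the old and the new position. That double marking is probably one reason the difference of two frames looks “strange” for balls that move only slightly.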

Comparison with specially prepared background

Comparing adjacent frames works well for isolated moving objects. If densely packed moving objects have to be separated, comparison with a specially prepared static background works better.

Prepare this background:

Compare:

Much better, but the shadows spoil everything. I tried many ways to get rid of the shadows, but the best result came from the observation that a shadow is, in essence, a change in brightness, and that led me to the HSV color model.

HSV

The HSV color model, like RGB, consists of three channels. But unlike RGB (and CMYK, for that matter), it is not simply a mixture of colors.

- The first channel, H (Hue), is the color tone. To a first approximation, it is the position of the color in the rainbow.

- The second channel, S (Saturation), is the saturation. The lower the value of this channel, the closer the color is to gray; the higher the value, the more pronounced the color. Highly saturated colors are colloquially known as “acid” colors.

- The third channel, V (Value), is the brightness. This is the easiest channel to understand: the stronger the illumination, the higher the value of this channel.

The picture on the right shows how the channels and colors relate to each other. The rainbow around the circle is the H channel. The triangle for a particular color (currently red) shows the change in the S channel, saturation (toward the top right), and in the V channel, brightness (toward the top left).

Classically, H ranges over 0-360, S over 0-100, and V over 0-100. In OpenCV, for 8-bit images, S and V are scaled to 0-255, while the plain conversions halve H to 0-179 so that it fits into one byte (the *_FULL conversion variants stretch H to 0-255 instead).
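The relationship between the three channels and an RGB triple can be made concrete with a small conversion routine. The sketch below is plain C# with hypothetical names; it assumes OpenCV's 8-bit convention in which H is stored as degrees divided by two (0-179) and S and V are scaled to 0-255, and it is not OpenCV's own implementation.

```csharp
using System;

static class HsvDemo
{
    // RGB (0-255 each) -> HSV with H in 0..179 (degrees / 2), S and V in 0..255.
    public static (int H, int S, int V) RgbToHsv8(byte r, byte g, byte b)
    {
        int max = Math.Max(r, Math.Max(g, b));
        int min = Math.Min(r, Math.Min(g, b));
        int delta = max - min;

        // Hue in degrees: which 120-degree sector depends on the largest component.
        double hueDeg = 0;
        if (delta != 0)
        {
            if (max == r) hueDeg = 60.0 * (g - b) / delta;
            else if (max == g) hueDeg = 120 + 60.0 * (b - r) / delta;
            else hueDeg = 240 + 60.0 * (r - g) / delta;
            if (hueDeg < 0) hueDeg += 360;
        }

        // Saturation: distance from gray relative to brightness; V is the largest component.
        int s = max == 0 ? 0 : (int)Math.Round(255.0 * delta / max);
        return ((int)Math.Round(hueDeg / 2) % 180, s, max);
    }
}
```

For pure red (255, 0, 0) this gives H = 0, S = 255, V = 255; for the same red at half brightness (128, 0, 0) it gives H = 0, S = 255, V = 128. Only the V channel changes, which is exactly the property that makes HSV interesting for dealing with shadows.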

The RGB color model is close to how the human eye works. The HSV color model is close to how color is perceived by the brain. Below I deliberately included a series of images showing what happens when each channel is shifted by plus or minus 50 units. They show that even after shifting channels S and V by 100 units (close to half of the range), the image is perceived as almost the same, whereas even a small change in channel H changes the perception greatly and makes the image look “psychedelic”. This is because over many years of evolution the brain has learned to separate more stable data from less stable data.

What does “stable” mean? It is the part of the information that is least affected by external conditions. Take a real object, for example a single-colored ball. It has a color of its own, but the perception of that color varies with external conditions: lighting, air transparency, reflections from neighboring objects, and so on. Accordingly, if the task is to pick the ball out of the surrounding world regardless of external conditions, we should rely on the part of the information that changes little with external conditions and ignore the part that changes the most. The least stable is brightness (channel V): step into the shade and the brightness of the surrounding world changes; clouds cover the sky and the brightness changes again. Saturation (channel S) also changes during the day, or, more precisely, its perception changes: the lower the illumination, the more the rods contribute (black-and-white vision) and the less information comes from the cones (color vision). The color tone (channel H) changes the least and reflects the color of an object most consistently.

Comparison with the background in HSV space

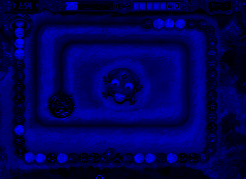

We repeat the subtraction from the static background, but this time after converting to HSV space, and, oh, a miracle! In the H and S channels the balls are clearly separated from the shadows; almost all of the shadows went into the V channel. In the H channel even the “jaggedness” of the balls disappears, but, unfortunately, the yellow balls start to merge with the background. In the S channel the jaggedness remains, but all the balls are clearly visible, and converting to a two-color image (cutting off “garbage” below 25) gives clean circles and removes everything unnecessary.
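The S-channel comparison can be sketched for single pixels in plain C#. The names below are hypothetical, the sample colors are made up, and the shadow is idealized as a uniform darkening of all three components; real shadows will only approximately behave this way. The threshold of 25 is the value mentioned above.

```csharp
using System;

static class SaturationMask
{
    // Saturation on a 0..255 scale: (max - min) / max.
    public static int Saturation(int r, int g, int b)
    {
        int max = Math.Max(r, Math.Max(g, b));
        int min = Math.Min(r, Math.Min(g, b));
        return max == 0 ? 0 : (int)Math.Round(255.0 * (max - min) / max);
    }

    // A pixel counts as an object if its saturation differs from the
    // background's saturation by more than thresh.
    public static bool IsObject(int pixelSaturation, int backgroundSaturation, int thresh = 25)
    {
        return Math.Abs(pixelSaturation - backgroundSaturation) > thresh;
    }
}
```

Take a background pixel (120, 100, 80) with saturation 85. The same pixel in an idealized shadow, (60, 50, 40), still has saturation 85, so `IsObject(85, 85)` is false and the shadow is ignored. A red ball pixel (200, 30, 30) has saturation 217, so `IsObject(217, 85)` is true.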

Summary

The goal set for today has been achieved (the balls are separated from the background and from their shadows), so we can go to sleep with peace of mind.

PS

All images were generated using OpenCV (code below).

```csharp
// Namespaces used: System, System.Drawing, System.Linq, OpenCvSharp, OpenCvSharp.CPlusPlus
var resizeK = 0.2;
var dir = "Example/";

var src = new Mat("0.bmp");
var src_g = new Mat("0.bmp", LoadMode.GrayScale);
var src_1 = new Mat("1.bmp");
var src_1_g = new Mat("1.bmp", LoadMode.GrayScale);
var background = new Mat("background.bmp");
var background_g = new Mat("background.bmp", LoadMode.GrayScale);

// original, grayscale, and threshold staircase
src.Resize(resizeK).ImWrite(dir + "0.png");
src_g.Resize(resizeK).ImWrite(dir + "0 g.png");
src_g.ThresholdStairs().Resize(resizeK).ImWrite(dir + "0 g th.png");

// Canny outlines
var canny = new Mat();
Cv2.Canny(src_g, canny, 50, 200);
canny.Resize(0.5).ImWrite(dir + "0 canny.png");

// separate color components
Mat[] src_channels;
Cv2.Split(src, out src_channels);

for (var i = 0; i < src_channels.Length; ++i)
{
    // zero-filled placeholders for the other channels (a new Mat is not cleared by default)
    var channels = Enumerable.Range(0, src_channels.Length)
        .Select(j => new Mat(src_channels[0].Rows, src_channels[0].Cols, src_channels[0].Type(), new Scalar(0)))
        .ToArray();
    channels[i] = src_channels[i];

    var dst = new Mat();
    Cv2.Merge(channels, dst);

    dst.Resize(resizeK).ImWrite(dir + string.Format("0 ch{0}.png", i));
    src_channels[i].ThresholdStairs().Resize(resizeK).ImWrite(dir + string.Format("0 ch{0} th.png", i));
}

// comparison of adjacent frames
if (true)
{
    src.Resize(0.4).ImWrite(dir + "0.png");
    src_1.Resize(0.4).ImWrite(dir + "1.png");
    background.Resize(0.4).ImWrite(dir + "bg.png");

    var dst_01 = new Mat();
    Cv2.Absdiff(src, src_1, dst_01);
    dst_01.Resize(resizeK).ImWrite(dir + "01.png");
    dst_01.Cut(new Rect(50, src.Height * 4 / 5 - 50, src.Width / 5, src.Height / 5)).ImWrite(dir + "01 part.png");
    dst_01.Cut(new Rect(50, src.Height * 4 / 5 - 50, src.Width / 5, src.Height / 5)).CvtColor(ColorConversion.RgbToGray).ImWrite(dir + "01 g.png");
    dst_01.CvtColor(ColorConversion.RgbToGray).ThresholdStairs().Resize(resizeK).ImWrite(dir + "01 g th.png");

    var dst_01_g = new Mat();
    Cv2.Absdiff(src_g, src_1_g, dst_01_g);
    dst_01_g.Cut(new Rect(50, src.Height * 4 / 5 - 50, src.Width / 5, src.Height / 5)).ImWrite(dir + "0g1g.png");
    dst_01_g.ThresholdStairs().Resize(resizeK).ImWrite(dir + "0g1g th.png");
}

// comparison with the static background
if (true)
{
    var dst_0b = new Mat();
    Cv2.Absdiff(src, background, dst_0b);
    dst_0b.Resize(0.6).ImWrite(dir + "0b.png");

    var dst_0b_g = new Mat();
    Cv2.Absdiff(src_g, background_g, dst_0b_g);
    dst_0b_g.Resize(0.3).ImWrite(dir + "0b g.png");
    dst_0b_g.ThresholdStairs().Resize(0.3).ImWrite(dir + "0b g th.png");
}

// comparison with the background in HSV space
if (true)
{
    var hsv_src = new Mat();
    Cv2.CvtColor(src, hsv_src, ColorConversion.RgbToHsv);

    var hsv_background = new Mat();
    Cv2.CvtColor(background, hsv_background, ColorConversion.RgbToHsv);

    var hsv_background_channels = hsv_background.Split();
    var hsv_src_channels = hsv_src.Split();

    if (true)
    {
        var all = new Mat(src.ToIplImage(), true);
        for (var i = 0; i < hsv_src_channels.Length; ++i)
        {
            hsv_src_channels[i].CvtColor(ColorConversion.GrayToRgb).CopyTo(all, new Rect(i * src.Width / 3, src.Height / 2, src.Width / 3, src.Height / 2));
        }
        src_g.CvtColor(ColorConversion.GrayToRgb).CopyTo(all, new Rect(src.Width / 2, 0, src.Width / 2, src.Height / 2));
        all.Resize(0.3).ImWrite(dir + "all.png");
    }

    foreach (var pair in new[] { "h", "s", "v" }.Select((channel, index) => new { channel, index }))
    {
        var diff = new Mat();
        Cv2.Absdiff(hsv_src_channels[pair.index], hsv_background_channels[pair.index], diff);
        diff.Resize(0.3).With_Title(pair.channel).ImWrite(dir + string.Format("0b {0}.png", pair.channel));
        diff.ThresholdStairs().Resize(0.3).ImWrite(dir + string.Format("0b {0} th.png", pair.channel));

        hsv_src_channels[pair.index].Resize(resizeK).With_Title(pair.channel).ImWrite(dir + string.Format("0 {0}.png", pair.channel));

        // shift one channel by a fixed amount and convert back
        foreach (var d in new[] { -100, -50, 50, 100 })
        {
            var delta = new Mat(hsv_src_channels[pair.index].ToIplImage(), true);
            delta.Rectangle(new Rect(0, 0, delta.Width, delta.Height), new Scalar(Math.Abs(d)), -1);

            var new_channel = new Mat();
            if (d >= 0)
                Cv2.Add(hsv_src_channels[pair.index], delta, new_channel);
            else
                Cv2.Subtract(hsv_src_channels[pair.index], delta, new_channel);

            var new_hsv = new Mat();
            Cv2.Merge(hsv_src_channels.Select((channel, index) => index == pair.index ? new_channel : channel).ToArray(), new_hsv);

            var res = new Mat();
            Cv2.CvtColor(new_hsv, res, ColorConversion.HsvToRgb);
            res.Resize(resizeK).With_Title(string.Format("{0} {1:+#;-#}", pair.channel, d)).ImWrite(dir + string.Format("0 {0}{1}.png", pair.channel, d));
        }
    }
}
```

```csharp
static class OpenCvHlp
{
    public static Scalar ToScalar(this Color color)
    {
        return new Scalar(color.B, color.G, color.R);
    }

    // copies the rect region of src into the same region of dst
    public static void CopyTo(this Mat src, Mat dst, Rect rect)
    {
        // zero-filled mask (a new Mat is not cleared by default)
        var mask = new Mat(src.Rows, src.Cols, MatType.CV_8UC1, new Scalar(0));
        mask.Rectangle(rect, new Scalar(255), -1);
        src.CopyTo(dst, mask);
    }

    public static Mat Absdiff(this Mat src, Mat src2)
    {
        var dst = new Mat();
        Cv2.Absdiff(src, src2, dst);
        return dst;
    }

    public static Mat CvtColor(this Mat src, ColorConversion code)
    {
        var dst = new Mat();
        Cv2.CvtColor(src, dst, code);
        return dst;
    }

    public static Mat Threshold(this Mat src, double thresh, double maxval, ThresholdType type)
    {
        var dst = new Mat();
        Cv2.Threshold(src, dst, thresh, maxval, type);
        return dst;
    }

    // splits the image into vertical strips, each binarized with a higher threshold
    public static Mat ThresholdStairs(this Mat src)
    {
        // zero-filled accumulator (a new Mat is not cleared by default)
        var dst = new Mat(src.Rows, src.Cols, src.Type(), new Scalar(0));

        var partCount = 10;
        var partWidth = src.Width / partCount;

        for (var i = 0; i < partCount; ++i)
        {
            var th_mat = new Mat();
            Cv2.Threshold(src, th_mat, 255 / 10 * (i + 1), 255, ThresholdType.Binary);
            // blank out everything outside strip i
            th_mat.Rectangle(new Rect(0, 0, partWidth * i, src.Height), new Scalar(0), -1);
            th_mat.Rectangle(new Rect(partWidth * (i + 1), 0, src.Width - partWidth * (i + 1), src.Height), new Scalar(0), -1);

            Cv2.Add(dst, th_mat, dst);
        }

        var color_dst = new Mat();
        Cv2.CvtColor(dst, color_dst, ColorConversion.GrayToRgb);
        // strip borders
        for (var i = 0; i < partCount; ++i)
        {
            color_dst.Line(partWidth * i, 0, partWidth * i, src.Height, new CvScalar(50, 200, 50), thickness: 2);
        }
        return color_dst;
    }

    public static Mat With_Title(this Mat mat, string text)
    {
        var res = new Mat(mat.ToIplImage(), true);
        res.Rectangle(new Rect(res.Width / 2 - 10, 30, 20 + text.Length * 15, 25), new Scalar(0), -1);
        res.PutText(text, new OpenCvSharp.CPlusPlus.Point(res.Width / 2, 50), FontFace.HersheyComplex, 0.7, new Scalar(150, 200, 150));
        return res;
    }

    public static Mat Resize(this Mat src, double k)
    {
        var dst = new Mat();
        Cv2.Resize(src, dst, new OpenCvSharp.CPlusPlus.Size((int)(src.Width * k), (int)(src.Height * k)));
        return dst;
    }

    public static Mat Cut(this Mat src, Rect rect)
    {
        return new Mat(src, rect);
    }

    public static Mat[] Split(this Mat hsv_background)
    {
        Mat[] hsv_background_channels;
        Cv2.Split(hsv_background, out hsv_background_channels);
        return hsv_background_channels;
    }
}
```

Bot for DirectX arcade. Part 1: making contact

Bot for the arcade. Part 2: connecting OpenCV

Source: https://habr.com/ru/post/216757/
