We gave the robot an arm and taught it how to use it

When people think of robots, they picture a machine that can do something useful in the physical world, not just talk and move around. Thanks to current technology, it has become much easier to use manipulators in your own projects. This article covers MoveIt, an excellent piece of software for controlling manipulators, and some of the functionality we built on top of it for our service robot Tod.

A small lyrical digression. Many of you, dear Habr readers, may have missed our previous article, which gives a short summary of the work done so far along with some interesting video material. We would like to give it a second chance, because it contains a survey that is important for our project and will help us decide how to develop it further. If you could read the article and vote, it would help us a lot.

And now to the point.

Although adding an arm is a relatively simple task, getting the robot to do something useful with it is much harder. When we use our own arms and hands, reaching for an object seems easy, even when nearby objects get in the way (for example, reaching for the butter at the dinner table without knocking over the glass by your elbow). When we try to program a robot to do the same, however, it turns out to be a complex mathematical problem. Fortunately, Willow Garage has released a new motion planning package called MoveIt, which targets devices with complex kinematics (manipulators, for example) and handles motion control, collision checking, grasping, and perception. This software really deserves attention, and we have spent the past few weeks studying it in depth. One of the nice features of MoveIt is that it does not care whether it is controlling a real robot or a simulated one, so we can save time by testing in the simulator before running on real hardware.


What you need to know about MoveIt

First of all, to use MoveIt successfully, it needs to know what it will be working with. For that you need a URDF model of the robot, or of the manipulator alone. A self-collision matrix is generated from this model. Its purpose is to find pairs of links that can safely be excluded from collision checking, which reduces motion planning time. A pair of links is disabled in the following cases:

- the links are always in collision

- the links are never in collision

- the links are adjacent to each other in the kinematic chain

The sampling density of the self-collision matrix determines how many random robot poses are checked for self-collisions. A higher density requires more computation time, while a lower density makes it more likely that a pair that should not be disabled gets disabled anyway. Collision checking runs in parallel to reduce processing time.
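To make the role of the sampling density more concrete, here is a minimal Python sketch of the idea. It is not MoveIt code: the function `find_disabled_pairs`, the toy one-joint arm, its link names, and the collision rule are all made up for illustration.

```python
import itertools
import math
import random


def find_disabled_pairs(links, adjacent, in_collision, sample_state,
                        num_samples=1000, seed=0):
    """Return {pair: reason} for link pairs that may skip collision checks.

    in_collision(pair, state) and sample_state(rng) are hypothetical
    callbacks supplied by the caller; they stand in for a real collision
    checker and a real random-pose generator.
    """
    rng = random.Random(seed)
    states = [sample_state(rng) for _ in range(num_samples)]
    disabled = {}
    for a, b in itertools.combinations(links, 2):
        pair = frozenset((a, b))
        if pair in adjacent:
            disabled[pair] = "adjacent"
            continue
        hits = sum(in_collision(pair, s) for s in states)
        if hits == num_samples:
            disabled[pair] = "always"   # collided in every sampled pose
        elif hits == 0:
            disabled[pair] = "never"    # collided in no sampled pose
    return disabled


# Toy arm (assumption): the whole state is one elbow angle in radians.
LINKS = ["base", "upper_arm", "forearm", "hand"]
ADJACENT = {frozenset(p) for p in [("base", "upper_arm"),
                                   ("upper_arm", "forearm"),
                                   ("forearm", "hand")]}
BASE_HAND = frozenset(("base", "hand"))


def toy_in_collision(pair, elbow):
    # Only a tightly folded elbow brings the hand into the base.
    return pair == BASE_HAND and elbow > 2.5


def toy_sample(rng):
    return rng.uniform(0.0, math.pi)


dense = find_disabled_pairs(LINKS, ADJACENT, toy_in_collision, toy_sample,
                            num_samples=1000)
sparse = find_disabled_pairs(LINKS, ADJACENT, toy_in_collision, toy_sample,
                             num_samples=1)
print(BASE_HAND in dense, BASE_HAND in sparse)
```

With a single sample, the base/hand pair is necessarily labelled either "always" or "never" and so gets disabled, even though it only collides in some poses. With many samples both outcomes are observed and the pair stays enabled.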

Planning groups in MoveIt semantically describe the different parts of a robot, for example which joints make up an arm (left or right) or a gripper. To define a group, you select all the joints that belong to it, for example all the joints of the right arm.

What goes in

For the MoveIt “magic” to work, it first needs to know the current state of the manipulator, that is, the pose the robot is in right now: whether its arm is behind its back or stretched out in front.

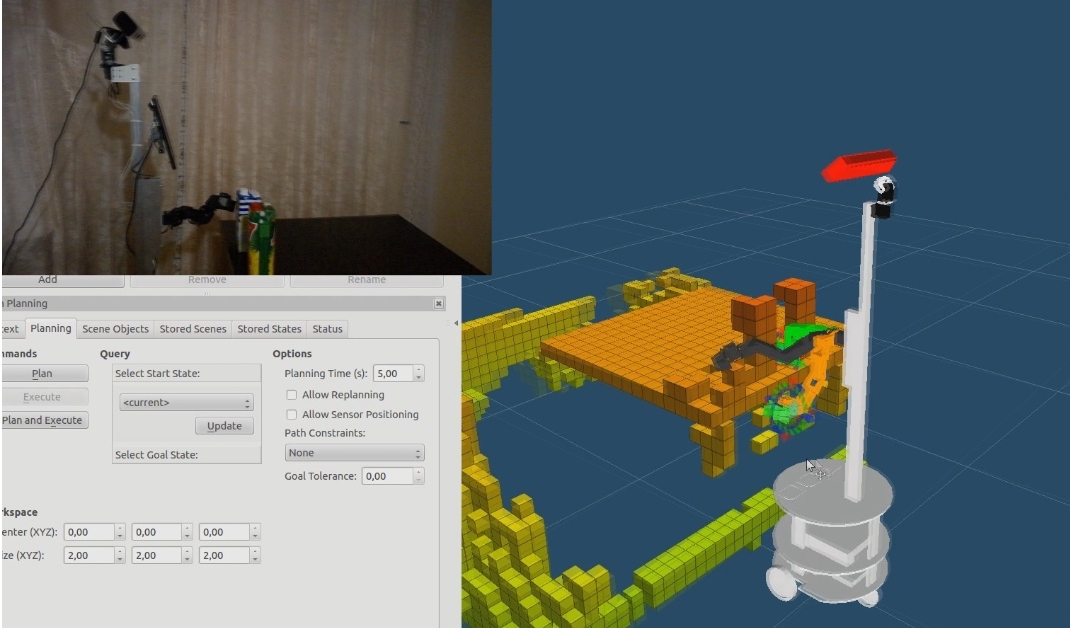

In addition to the current state, MoveIt needs to understand the environment, so that it knows what is around the robot and does not accidentally knock something over. For this we use everyone's favorite Kinect. MoveIt represents the world as a collision matrix, but this is not the matrix mentioned earlier, so please do not confuse the two. Personally, I found the visualization of this matrix in Rviz very entertaining, even mesmerizing at times. I could not tear myself away from it for a long time, and neither could the people I showed it to. The video below shows the world through the eyes of a robot in MoveIt.

The range over which the collision matrix is built is configurable (up to the Kinect's measurement range); we set it to 2.5 meters. At the maximum range you can map an entire room.
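As a rough illustration of what the range limit does, the sketch below drops depth points beyond a maximum range and bins the rest into occupied cells. This is a simplification written for this article, not how MoveIt stores the world internally; the function name, the flat voxel set, and the 0.1 m resolution are assumptions.

```python
import math


def occupied_voxels(points, max_range=2.5, resolution=0.1):
    """Map sensor-frame points (x, y, z in meters) to occupied voxel indices.

    Points farther than max_range from the sensor are ignored, which is
    the effect of limiting the range to 2.5 m as described above.
    """
    voxels = set()
    for x, y, z in points:
        if math.sqrt(x * x + y * y + z * z) > max_range:
            continue  # beyond the configured range, not added to the map
        voxels.add((math.floor(x / resolution),
                    math.floor(y / resolution),
                    math.floor(z / resolution)))
    return voxels


# Made-up sample points: two fall into the same cell, one is too far away.
cloud = [(1.02, 0.0, 0.52), (1.04, 0.0, 0.53),
         (0.55, -0.25, 0.31), (3.0, 0.0, 0.0)]
world = occupied_voxels(cloud)
print(sorted(world))
```

A larger `max_range` covers more of the room at the cost of more cells to store and check against.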

And of course we need a goal, which is what all this was for in the first place. The goal can be a set of coordinates in space, set manually by the user through Rviz or programmatically, either hard-coded or produced by other ROS nodes such as an object recognition node. An important note: the target pose must not conflict with the self-collision matrix, i.e., we cannot make the robot put its hand inside its own body or head.

What comes out

The first thing we see is the computed motion plan for the manipulator, displayed in Rviz via moveit_msgs::DisplayTrajectory messages. This message is used only to visualize the plan.

Setting visualization aside, since we will not always need it, the main output is the moveit_msgs::RobotTrajectory message. That is, when the manipulator is sent to a target pose, we simply get a sequence of moveit_msgs::RobotTrajectory messages addressed to the controller (the controller is responsible for driving the manipulator's active joints).

So what is moveit_msgs::RobotTrajectory? It is simply a sequence of points, each with a timestamp and, optionally, velocities and accelerations. The message lists the joints of the planning group (the groups mentioned above: the right arm, the left arm, and so on) that the motion was planned for.
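The shape of such a trajectory can be sketched with plain Python dataclasses. The field names mirror the joint trajectory part of the ROS message (`joint_names`, `points`, `positions`, `velocities`, `accelerations`, `time_from_start`), but these classes are stand-ins rather than the generated ROS types, and the joint names and the `validate` helper are invented for the example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class JointTrajectoryPoint:
    positions: List[float]
    time_from_start: float  # seconds here; the ROS message uses a duration
    velocities: List[float] = field(default_factory=list)     # optional
    accelerations: List[float] = field(default_factory=list)  # optional


@dataclass
class JointTrajectory:
    joint_names: List[str]
    points: List[JointTrajectoryPoint] = field(default_factory=list)


def validate(traj):
    """Check point sizes against the joint list and that time never goes back."""
    n = len(traj.joint_names)
    last_t = 0.0
    for p in traj.points:
        if len(p.positions) != n:
            return False
        for optional in (p.velocities, p.accelerations):
            if optional and len(optional) != n:
                return False
        if p.time_from_start < last_t:
            return False
        last_t = p.time_from_start
    return True


# Hypothetical two-joint "right_arm" group.
right_arm = JointTrajectory(
    joint_names=["right_shoulder", "right_elbow"],
    points=[
        JointTrajectoryPoint([0.0, 0.0], 0.0),
        JointTrajectoryPoint([0.3, 0.6], 1.0, velocities=[0.3, 0.6]),
        JointTrajectoryPoint([0.5, 1.0], 2.0),
    ],
)
broken = JointTrajectory(
    joint_names=["right_shoulder", "right_elbow"],
    points=[JointTrajectoryPoint([0.1], 0.5)],  # one position for two joints
)
print(validate(right_arm), validate(broken))
```

A controller consuming the message interpolates between consecutive points so that each joint reaches the listed position at the listed time.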

Since the world around the robot can change over time, the environment has to be monitored. If, while the controller is executing a trajectory, the environment changes in a way that could lead to a collision, the system stops execution and starts replanning.
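The stop-and-replan behaviour can be sketched as a simple loop. This is a toy model under stated assumptions, not MoveIt's implementation: the world is a two-lane grid, and `lane_planner`, `obstacles_at`, and `execute_with_replanning` are hypothetical names.

```python
def lane_planner(current, goal_x, obstacles):
    """Toy planner: go straight along lane 0 or lane 1, whichever is free."""
    x = current[0]
    for lane in (0, 1):
        path = [(i, lane) for i in range(x + 1, goal_x + 1)]
        if not any(cell in obstacles for cell in path):
            return path
    return None  # no collision-free path in this toy world


def execute_with_replanning(start, goal_x, plan, obstacles_at, max_replans=5):
    """Follow a plan one waypoint per step; replan if the rest gets blocked.

    obstacles_at(step) returns the set of occupied cells seen at that step.
    Returns (reached, visited, replans).
    """
    current, visited, replans, step = start, [start], 0, 0
    path = plan(current, goal_x, obstacles_at(step))
    while path is not None:
        if not path:
            return True, visited, replans
        if any(cell in obstacles_at(step) for cell in path):
            # The remaining trajectory would collide: stop and replan.
            if replans >= max_replans:
                return False, visited, replans
            replans += 1
            path = plan(current, goal_x, obstacles_at(step))
            continue
        current = path.pop(0)
        visited.append(current)
        step += 1
    return False, visited, replans


def obstacles_at(step):
    # An object is placed at cell (4, 0) after the robot has started moving.
    return set() if step < 2 else {(4, 0)}


reached, visited, replans = execute_with_replanning(
    (0, 0), 5, lane_planner, obstacles_at)
print(reached, replans, visited)
```

In this run the arm starts along lane 0, notices the new obstacle ahead after two steps, and finishes along lane 1 after one replanning pass.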

As a demonstration, we use the task of reaching a target location without letting any part of the robot's arm collide with the table or the objects on it. The video below shows how well MoveIt copes with this task.

To speed up trajectory computation, you can use a custom IKFast kinematics solver generated with OpenRAVE, which we intend to implement in the near future.

In the next post we will show how telepresence is implemented on the robot Tod. Stay tuned to our blog.

Source: https://habr.com/ru/post/216681/
