Denial of Service Attacks: Testing Practice

A summary post

It is worth starting with the fact that lately more and more customers ask not only for penetration testing but also for a check of how well their services, most often websites, withstand denial-of-service attacks. In our practice there has not yet been a single case where a real production site (as opposed to a specially prepared one) did not go down, including sites behind various protection systems. This post summarizes our current experience of (D)DoS testing, by different methods, of very different infrastructures (from banks to typical corporate sites).

DDoS attack visualization

So, once more. The task is to take the resource down by any available means, as if we were the attackers. In 99% of cases the only input we are given is a domain or an IP address.

How does the work go?

To begin with, a testing methodology is chosen and agreed with the customer. The choice is between:

- Attacks on the channel (saturating its maximum throughput);

- Attacks on network services (slow HTTP, DoS exploits for specific web servers, attacks on SSL and other technologies);

- And the coolest and most interesting: attacks on the application level (exploiting the features of the particular application).

The time and the attack power are agreed in advance; at the appointed time everyone joins a Skype chat, and all actions are coordinated there.


And now about each of the methods.

Attack on the channel

Or the head-on attack. The task is to clog the server's channel and make it unavailable to legitimate clients. In reality, attackers use different techniques, from real botnets to attacks through public DNS/NTP servers. What do we do? There was an article on Habr, "Million concurrent connections on Node.js". Using the Amazon cloud is a great idea! We add a few of our traffic-generation tools to the image (or install them on the nodes after launch) and get our own mini botnet with 100 Mbps cards on board. By varying the number of nodes we control the attack power and hit the target from different regions of the world (since Amazon provides servers in different countries), reaching several gigabits per second (most real attacks are under 1 Gbit/s, judging by various statistics). In practice, sometimes the whole rack at the hosting provider drops off, sometimes something else does. The customer takes responsibility for dealing with the hosting provider (warning them that testing will take place, and so on). We have also tested servers behind various protections against such attacks, for example services like CloudFlare.
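The arithmetic behind "number of nodes controls the attack power" is simple and is what gets written into the agreement with the customer. Below is a minimal sketch for sizing an agreed test; the function names and the `utilization` parameter are illustrative assumptions, not part of any tool mentioned above, and real throughput per node will be lower than the nominal card speed.

```python
import math


def aggregate_mbps(nodes: int, nic_mbps: float = 100.0, utilization: float = 1.0) -> float:
    """Nominal total traffic of `nodes` machines, each with a `nic_mbps` card.

    `utilization` (0..1) is an assumed fraction of the card speed that a node
    actually sustains; 1.0 means the nominal figure.
    """
    return nodes * nic_mbps * utilization


def nodes_needed(target_gbps: float, nic_mbps: float = 100.0, utilization: float = 1.0) -> int:
    """Smallest number of nodes whose nominal total reaches `target_gbps`."""
    per_node_mbps = nic_mbps * utilization
    return math.ceil(target_gbps * 1000 / per_node_mbps)


if __name__ == "__main__":
    # How many 100 Mbps nodes does an agreed 2 Gbit/s test need?
    print(nodes_needed(2.0))
    # What does a pool of 30 such nodes give nominally, in Mbit/s?
    print(aggregate_mbps(30))
```

With the nominal 100 Mbps per node, a test of a couple of gigabits needs only a few dozen nodes, which is why the cloud approach is practical.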

How CloudFlare and many other DDoS protection services work

And in practice they really do protect: the site stayed up throughout. But we very rarely come across servers behind such protection. Still, it is worth remembering what happened with attacks through DNS/NTP servers: at such volumes it is not only the DDoS protection service that suffers, but sometimes the traffic exchange points themselves.

Attacks on network services

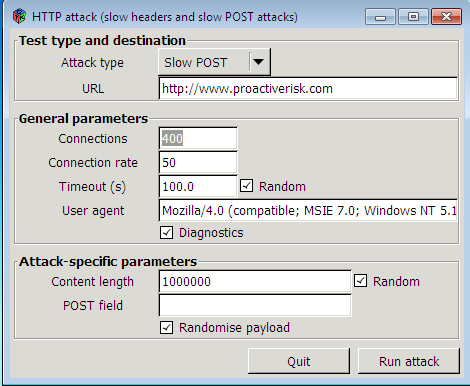

The point is to take down the network service itself (web server, proxy, balancer, or anything else facing outward) by any means. Of course, the first thing that comes to mind is slow HTTP, which has been written about on Habr more than once. I will try to explain the essence in a couple of sentences using Slow POST as an example: we send an HTTP request declaring a large Content-Length and then transmit the body slowly, one byte at a time, dropping the connection at some point and starting a new one. According to the standard, the server must wait until the data has been sent in full (that is, until it has received Content-Length bytes of body), giving up only on timeout. That's it! The server opens a mass of connections, spends resources (most often it runs out of open file descriptors in the system) and goes down. Apache, for example, falls in a second or two. To try it at home, you can download the tool from OWASP.
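On the defending side, the usual counter to Slow POST is to stop waiting unconditionally and to require a minimum body transfer rate. The sketch below only illustrates that decision; the `BodyProgress` type, the `should_drop` function, and the threshold values are assumptions made for this example, not the behaviour of any particular web server.

```python
from dataclasses import dataclass


@dataclass
class BodyProgress:
    content_length: int   # value declared in the Content-Length header
    received: int         # body bytes received so far
    elapsed_s: float      # seconds since the headers were fully read


def should_drop(p: BodyProgress, min_bytes_per_s: float = 500.0, grace_s: float = 10.0) -> bool:
    """Decide whether a connection sending its body too slowly should be closed.

    The thresholds are illustrative: after a grace period, the average rate
    must stay above `min_bytes_per_s` until the declared body has arrived.
    """
    if p.received >= p.content_length:
        return False          # body complete, nothing left to wait for
    if p.elapsed_s <= grace_s:
        return False          # still inside the grace period
    return p.received / p.elapsed_s < min_bytes_per_s


if __name__ == "__main__":
    slow = BodyProgress(content_length=1_000_000, received=30, elapsed_s=30.0)
    normal = BodyProgress(content_length=1_000_000, received=600_000, elapsed_s=30.0)
    print(should_drop(slow), should_drop(normal))
```

A check like this frees the connection slot long before a plain idle timeout would, because a client that keeps trickling single bytes never looks idle.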

OWASP HTTP Post Tool

Following a similar pattern, if the server speaks SSL, a "slow SSL" attack is tried (receiving the SSL certificate very slowly). There is also a flood on the port and, where possible, an exploit for the web server itself. All of this can also be run from the cloud nodes: the server may simply be unable to serve that many connections, even legitimate ones.

By the way, a case from practice. Once the target turned out to have Microsoft Forefront installed, and we tried slow HTTP against it. It successfully repelled the attack, as the logs showed.

TCP and non-TCP packets per second exceeded the system limit. As a result, Forefront TMG has been written in the log.

Forefront TMG.

Forefront TMG flood mitigation.

But later it still went down under the attack on the channel. There are many ways to defend on your own side; it all depends on the attack. By the way, protection methods with example scripts and configs are described in the Russian Wikipedia article on DoS (the English one has only theory).

Attacks on the application level

The point is to find peculiarities of the application and use them to take the server down. No protections, balancers, or external services will save you from this. What do I mean? Various "heavy" requests, such as selecting large amounts of data. One completely legitimate request at a time from a couple of dozen machines, and the site is done. Naturally, such places are easier to find when DoS testing is part of a pentest and there is time to study the application. Some real examples:

- ZIP bombs (a 42 KB archive that unpacks to 4.5 petabytes)

- XML bombs: en.wikipedia.org/wiki/Billion_laughs (for the case where XML entities are processed but external entities cannot be loaded, only internal references)

- As already said, simply an obviously large data selection

- Parameter tampering when selecting data (for example, the page says you can select up to 100 products, and we manually edit the HTTP request to select 999999+ products)

- Search functionality

- Other specific cases, such as Django and password change.
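The parameter tampering case from the list above has a straightforward server-side fix: never trust the requested page size, and clamp it before it reaches the database. A minimal sketch, assuming the value arrives as a raw query-string parameter; `clamp_limit`, `MAX_PAGE_SIZE`, and the default of 20 are illustrative names and values, not taken from any framework.

```python
from typing import Optional

MAX_PAGE_SIZE = 100  # the largest selection the interface is meant to allow


def clamp_limit(raw: Optional[str], default: int = 20, maximum: int = MAX_PAGE_SIZE) -> int:
    """Turn an untrusted `limit` parameter into a safe page size.

    Missing, non-numeric, or non-positive values fall back to `default`;
    anything above `maximum` is cut down to `maximum`.
    """
    try:
        value = int(raw)
    except (TypeError, ValueError):
        return default
    if value < 1:
        return default
    return min(value, maximum)


if __name__ == "__main__":
    # A manually edited request asking for 999999 products still gets 100.
    print(clamp_limit("999999"))
```

The important design choice is that the limit is enforced on the server; a limit that exists only in the page's form controls is exactly what the tester bypasses by editing the request.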

Mostly, of course, all this targets the backend (POST data processing, the database), but the problem is that there is no universal protection against it: admins, programmers, and security people have to analyze the situation and fix it. Sometimes the problem cannot be solved at all without revising the architecture, and then the workarounds begin (and that is if they are possible at all).
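Fixes of this kind are case by case. As one example, an upload handler can refuse archives that would unpack to an unreasonable size before extracting anything. This is a minimal sketch using Python's standard `zipfile` module; the function name and both thresholds are assumptions for illustration. It relies on the sizes declared in the archive headers, which can be forged, and it does not look inside nested archives, so real code should also cap the bytes actually written during extraction.

```python
import io
import zipfile


def archive_looks_safe(data: bytes,
                       max_total_bytes: int = 50 * 1024 * 1024,
                       max_ratio: float = 100.0) -> bool:
    """Check declared sizes in a ZIP archive before extracting it.

    Rejects the archive if the declared uncompressed total exceeds
    `max_total_bytes` or if any member's compression ratio exceeds `max_ratio`.
    """
    total = 0
    with zipfile.ZipFile(io.BytesIO(data)) as zf:
        for info in zf.infolist():
            total += info.file_size
            if total > max_total_bytes:
                return False
            if info.compress_size > 0 and info.file_size / info.compress_size > max_ratio:
                return False
    return True


if __name__ == "__main__":
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", zipfile.ZIP_DEFLATED) as zf:
        zf.writestr("zeros.bin", b"\0" * (5 * 1024 * 1024))  # compresses extremely well
    print(archive_looks_safe(buf.getvalue()))
```

The same pattern (check the declared cost, then enforce a hard cap while doing the work) applies to the other examples too, but each one has to be found and fixed individually.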

Conclusion

Denial-of-service testing can reveal hidden problems in the architecture that external DoS protection services, configs, new iptables rules, and the like cannot save you from, and this type of testing deserves due attention.

Source: https://habr.com/ru/post/216131/
