Natural language highlighting

The idea of such highlighting came to me in connection with the bill on equating computer languages with foreign languages, 416D65726963612043616E20436F646520 (hexadecimal ASCII for "America Can Code"), considered by the US Congress in December 2013. Syntax highlighting has long been standard practice in programming, but at the time of writing, highlighting of natural languages had been the subject of only a couple of short discussions on English-language forums. Yet if automatically highlighting some words can make a text easier to take in visually, why not try?

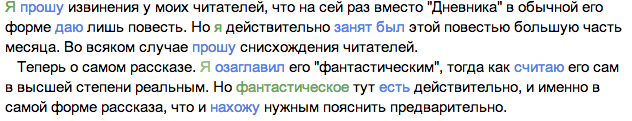

Computer languages are, of course, notable for their strictness, and their constructs are usually highlighted according to the function they perform (operator, number, string, and so on), so the first idea that comes to mind is a similar highlighting of natural text (subject, predicate, and so on). As an example, take the beginning of the “Fantastic Story” by F. M. Dostoevsky, with all subjects marked in green and all predicates in blue:

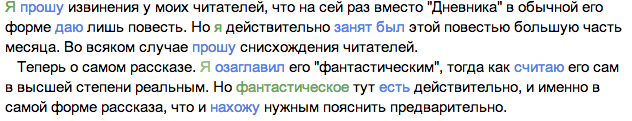

Such markup requires fairly complex text analysis, but in my opinion highlighting should not be complicated: to be comfortable to use, the highlighting algorithm should take about as long as the page takes to load. I decided to settle on a simpler option, frequency highlighting, based on the idea that the more often a word is repeated, the less meaning it carries. Strictly speaking, this is certainly not true, but if you take the Dostoevsky excerpt above and mark in bold the words that are not used again later in the story, you get the following:

Since the choice of highlighted words strongly affects how the text is perceived, it is useful to have either your own solution or one whose algorithm is open. For this reason, the rest of the article is devoted to developing such an extension for Chrome.
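As a quick illustration of the frequency idea before turning to the extension itself, here is a minimal sketch that works on a plain string rather than on a page. The helper names `countWords` and `boldRare`, the Latin-only word pattern, and the threshold of one occurrence are all assumptions made for this example; they are not taken from the extension.

```javascript
// Illustrative sketch only: countWords and boldRare are hypothetical helpers.

// Count how often each lowercased word occurs in the text.
function countWords(text) {
    var counts = Object.create(null); // no inherited keys such as "constructor"
    var words = text.toLowerCase().match(/[a-z]+/g) || [];
    for (var i = 0; i < words.length; i++) {
        counts[words[i]] = (counts[words[i]] || 0) + 1;
    }
    return counts;
}

// Wrap every word that occurs at most maxCount times in ** markers.
function boldRare(text, maxCount) {
    var counts = countWords(text);
    return text.replace(/[A-Za-z]+/g, function (word) {
        return counts[word.toLowerCase()] <= maxCount ? '**' + word + '**' : word;
    });
}

var sample = 'the cat saw the dog and the dog saw the bird';
var highlighted = boldRare(sample, 1);
// highlighted is 'the **cat** saw the dog **and** the dog saw the **bird**'
```

Note that a pure frequency rule also marks function words such as "and" when they happen to be rare in a short text, which is one reason the idea is only an approximation.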

To begin with, we write a function that updates the word frequency dictionary, using a given regular expression to strip unwanted characters from each word:

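```javascript
// MIN_LENGTH (the shortest word worth counting) is defined elsewhere in the extension.
function collect(text, frequences, pattern) {
    var words = text.split(/\s+/); // split the text into words
    for (var j = 0; j < words.length; j++) {
        // lowercase the word and strip unwanted characters
        var current = words[j].toLowerCase().replace(pattern, '');
        if (!current || current.length < MIN_LENGTH) continue;
        // new word: add it to the dictionary; known word: increment its counter
        // (hasOwnProperty keeps inherited keys such as "constructor" out of the way)
        if (!Object.prototype.hasOwnProperty.call(frequences, current)) frequences[current] = 1;
        else frequences[current] += 1;
    }
}
```

Next, we collect statistics on word usage from the DOM: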

```javascript
// Strip every character that is not a letter or a hyphen
// (assumed character class; adjust it to the language of the page).
var pattern = /[^\p{L}-]/gu;
var freq = {};

function stat(element) {
    // skip scripts and style sheets
    if (/(script|style)/i.test(element.tagName)) return;
    // recurse into child nodes
    if (element.nodeType === Node.ELEMENT_NODE && element.childNodes.length > 0)
        for (var i = 0; i < element.childNodes.length; i++)
            stat(element.childNodes[i]);
    // collect words from non-empty text nodes
    if (element.nodeType === Node.TEXT_NODE && (/\S/.test(element.nodeValue))) {
        collect(element.nodeValue, freq, pattern);
    }
}

stat(window.document.getElementsByTagName('html')[0]);
```

Then we drop from the statistics the words that occur more often than we want:

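```javascript
// maxFreq (the highest count a word may have and still be highlighted)
// is defined elsewhere in the extension.
function remove(o, max) {
    var n = {};
    for (var key in o)
        if (o[key] <= max) n[key] = o[key];
    return n;
}

freq = remove(freq, maxFreq);
```

And finally, we highlight all occurrences of the words of interest: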

```javascript
function markup(element, pattern) {
    // skip scripts and style sheets
    if (/(script|style)/i.test(element.tagName)) return;
    if (element.nodeType === Node.ELEMENT_NODE && element.childNodes.length > 0) {
        // Recurse into child elements first, so that the <strong> nodes
        // created below are never visited again.
        for (var c = 0; c < element.childNodes.length; c++)
            if (element.childNodes[c].nodeType === Node.ELEMENT_NODE)
                markup(element.childNodes[c], pattern);

        // count the words in the text nodes directly under this element
        var freq = {};
        for (var i = 0; i < element.childNodes.length; i++)
            if (element.childNodes[i].nodeType === Node.TEXT_NODE &&
                    (/\S/.test(element.childNodes[i].nodeValue)))
                collect(element.childNodes[i].nodeValue, freq, pattern);

        // Build a list of [word, count] pairs, shortest words first.
        // freqRus is not defined in this article; it is assumed here to be
        // the dictionary of words selected for highlighting.
        var efreq = [];
        for (var key in freq)
            if (freqRus[key]) efreq.push([key, freq[key]]);
        efreq.sort(function(a, b) { return a[0].length - b[0].length; });
        if (efreq.length === 0) return;

        // Splitting text nodes makes childNodes grow as we go, so cap the
        // number of iterations as a safeguard against an endless loop.
        var total = 0;
        var max = element.childNodes.length * efreq.length * 2;
        for (var i = 0; i < element.childNodes.length; i++) {
            if (total++ > max) break;
            var node = element.childNodes[i];
            if (node.nodeType !== Node.TEXT_NODE) continue;
            // find the earliest occurrence of any word in this text node
            var minPos = -1, minJ = -1;
            var lower = node.nodeValue.toLowerCase();
            for (var j = 0; j < efreq.length; j++) {
                var pos = lower.indexOf(efreq[j][0]);
                if (pos >= 0 && (minJ === -1 || minPos > pos)) {
                    minPos = pos; minJ = j;
                }
            }
            // found one: cut it out of the text node and wrap it in <strong>
            if (minPos !== -1) {
                var word = efreq[minJ][0];
                var middle = node.splitText(minPos);
                middle.splitText(word.length);
                var spannode = window.document.createElement('strong');
                spannode.appendChild(middle.cloneNode(true));
                element.replaceChild(spannode, middle);
            }
        }
    }
}

markup(window.document.getElementsByTagName('html')[0], pattern);
```

Conclusion

It would be good to take word forms into account and, possibly, to accumulate history (that is, to work not within a single page but to build one frequency dictionary from everything read so far). It would also be nice to be able to influence the dictionary: to pin some words in it and to exclude others permanently.
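The accumulation and dictionary-override ideas could be sketched roughly as follows. This is only an illustration under stated assumptions: `mergeFrequencies`, `selectWords`, the `pinned` and `banned` lists, and the sample counts are hypothetical and do not come from the extension.

```javascript
// Illustrative sketch only: every name and number below is hypothetical.

function hasOwn(obj, key) {
    return Object.prototype.hasOwnProperty.call(obj, key);
}

// Add the counts from one page to a cumulative dictionary.
function mergeFrequencies(cumulative, page) {
    for (var word in page) {
        if (!hasOwn(page, word)) continue;
        var previous = hasOwn(cumulative, word) ? cumulative[word] : 0;
        cumulative[word] = previous + page[word];
    }
    return cumulative;
}

// Choose the words to highlight: rare words plus pinned ones, minus banned ones.
function selectWords(cumulative, max, pinned, banned) {
    var selected = Object.create(null);
    for (var word in cumulative) {
        if (hasOwn(cumulative, word) && cumulative[word] <= max) selected[word] = true;
    }
    pinned.forEach(function (w) { selected[w] = true; });
    banned.forEach(function (w) { delete selected[w]; });
    return Object.keys(selected).sort();
}

var cumulative = {};
mergeFrequencies(cumulative, { whale: 1, the: 40, ship: 2 });
mergeFrequencies(cumulative, { the: 35, ship: 3, harpoon: 1 });
var words = selectWords(cumulative, 2, ['ship'], ['harpoon']);
// words is ['ship', 'whale']
```

Because the cumulative dictionary is a plain object of counts, it could be serialized as JSON and stored between pages; how and where to store it is left open here.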

The complete examples are available on GitHub:

github.com/parsifal-47/nalacol

or, ready to install, as a Chrome extension:

chrome.google.com/webstore/detail/natural-language-colorer/jjcldlhpnolppcclcgdbblbilmealfjd

I will be glad if this article inspires readers to create an interesting algorithm of their own for highlighting natural-language text.

Source: https://habr.com/ru/post/215611/
