Testing on the 1C:Enterprise 8 Platform: The Practical Part

Continuing to popularize testing on the 1C:Enterprise platform, we now turn to the practical use of the testing system.

The system consists of an external data processor and a separate infobase that serves as the back end: it stores the tests and runs them automatically on a schedule.

The system was designed for and used with platform 8.1 and with ordinary (unmanaged) configurations on platform 8.2. To use the external data processor in managed configurations, either its entire interface must be rewritten or the configuration settings must be changed.

Because the back end combines two projects (the test repository and the automatic execution environment), the platform version of the back end must match the platform version of the configuration under test if you want to run automatic tests.

Lots of screenshots ahead.

Once you have settled on the platform version and, if necessary, configured the tested configuration (TC) to run unmanaged external data processors, create the back-end infobase (BE) from the configuration on the same platform version as the TC.

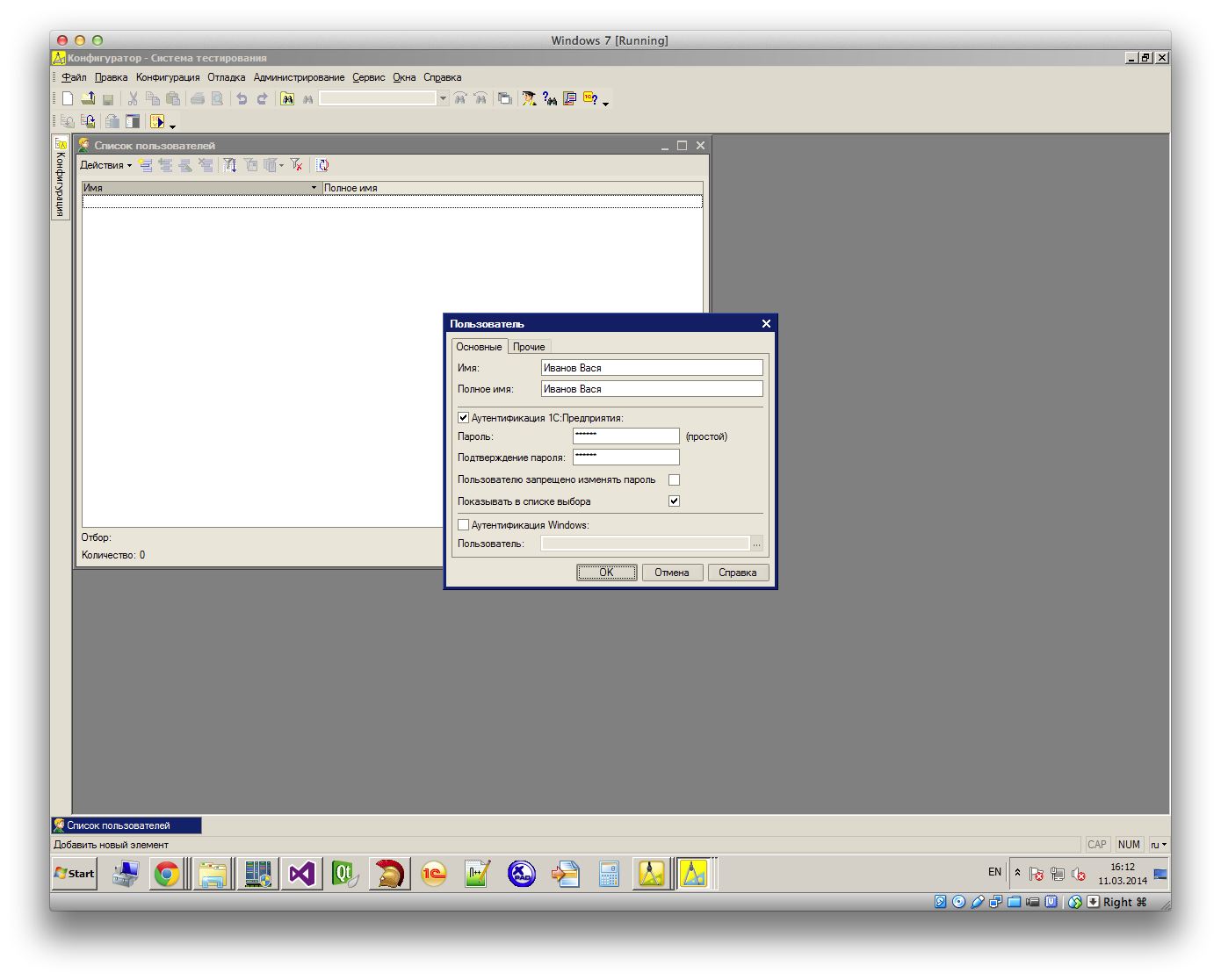

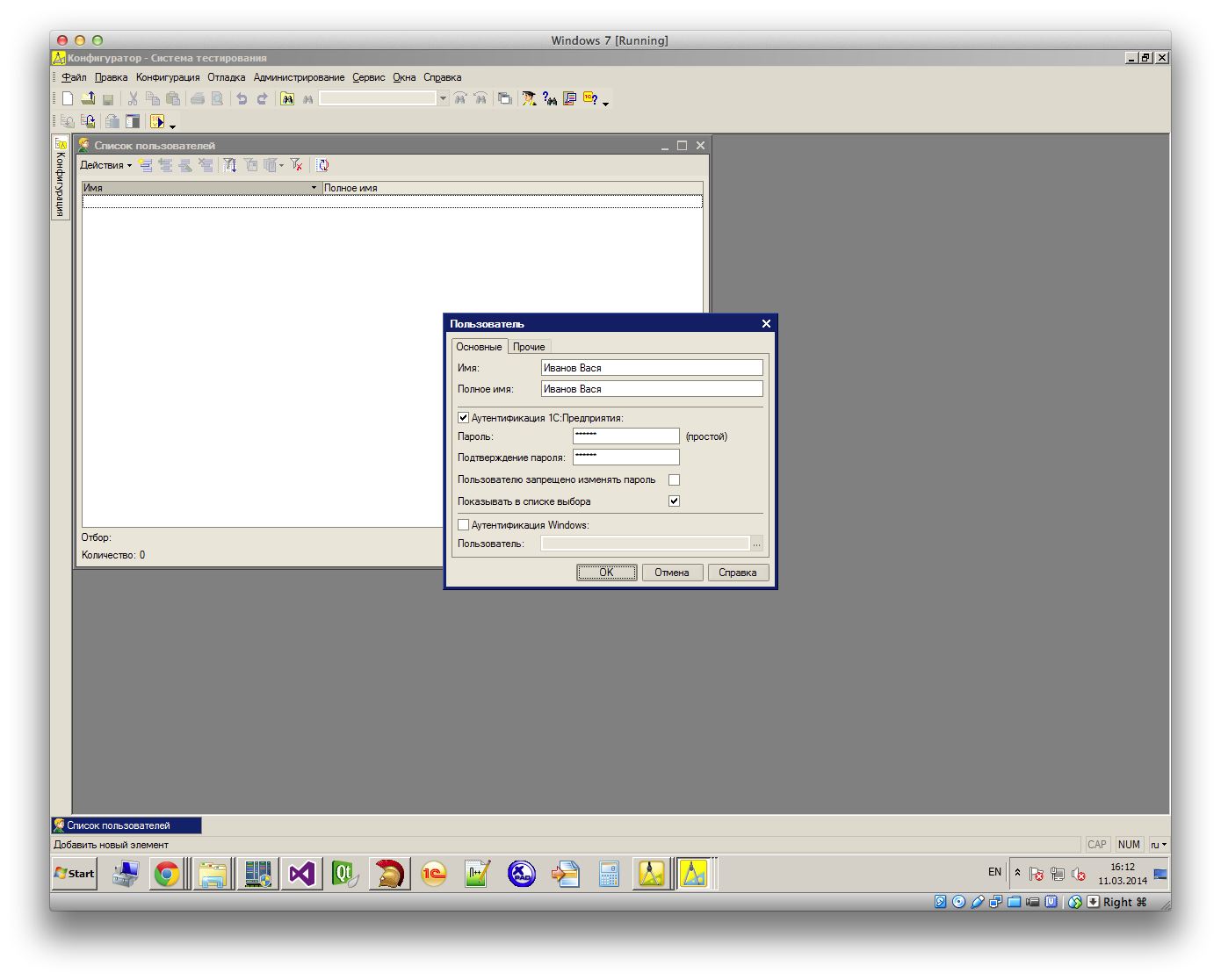

Before you start entering tests, set up the BE infobase. First, create the required users in the configuration.

Grant one of them the Administrator role.

Then start the infobase in Enterprise mode, log in as the administrator, and open the built-in Settings data processor.

Tests for different configurations are stored using the concept of a Project. Unrelated configurations should be tested in separate projects. If a configuration makes a qualitative leap in its development but most tests from the previous version still run without errors, treat it as a new Configuration Version within the same Project.

Add your project.

The fields Connect with IB with tests and Path to test processing are used for automatic testing. We leave them empty for now: to enter tests and run them manually, it is enough for the project to exist in the BE infobase.

Below, you can list the TC versions. This list is checked when a test is started: if the TC version is not in the list, the test will not run. So let's register the current version of your TC. The version is taken from the configuration properties.
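The version check can be pictured with a small sketch. It is written in Python rather than in the 1C built-in language, purely as an illustration; the `Project` class, its fields, the version strings, and `can_run` are hypothetical names and values, not part of the testing system.

```python
from dataclasses import dataclass, field


@dataclass
class Project:
    """Hypothetical stand-in for a project record in the BE infobase."""
    name: str
    versions: set = field(default_factory=set)  # registered TC versions


def can_run(project: Project, tc_version: str) -> bool:
    """A test may start only if the TC version is registered in the project."""
    return tc_version in project.versions


project = Project("Demo")
project.versions.add("1.0.0.1")  # version copied from the configuration properties

print(can_run(project, "1.0.0.1"))  # True
print(can_run(project, "1.0.0.2"))  # False
```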

Add a version.

For each TC version, you can specify connection parameters for the configuration storage where that version is developed. Before starting automatic tests, the system will then try to fetch the current configuration version from that storage, apply it to the database under test, and only then run the selected tests. You can also run shell scripts before and after the tests. We leave all the additional fields empty, save the new version, and save the project.
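A rough sketch of how such a job could be sequenced, in Python for illustration only. The function name, the step callables, and the exact order of the storage update relative to the "before" script are assumptions; the article only states that the update precedes the tests and that scripts can run before and after them.

```python
from typing import Callable, Dict, Optional

Step = Callable[[], None]


def run_scheduled_job(
    run_tests: Callable[[], Dict[str, bool]],
    update_from_storage: Optional[Step] = None,
    before: Optional[Step] = None,
    after: Optional[Step] = None,
) -> Dict[str, bool]:
    """Run one automatic testing job; every optional step may be left empty."""
    if update_from_storage is not None:
        update_from_storage()  # fetch the current version and apply it to the test database
    if before is not None:
        before()  # stands in for the "before" shell script
    try:
        return run_tests()
    finally:
        if after is not None:
            after()  # assumption: the "after" script runs even if the tests raise


calls = []


def fake_tests() -> Dict[str, bool]:
    calls.append("tests")
    return {"Addition": True}


report = run_scheduled_job(
    fake_tests,
    update_from_storage=lambda: calls.append("update"),
    before=lambda: calls.append("before"),
    after=lambda: calls.append("after"),
)
print(calls)   # ['update', 'before', 'tests', 'after']
print(report)  # {'Addition': True}
```

Leaving all optional steps empty, as we do in this walkthrough, reduces the job to just running the tests.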

Click the Next button.

Here we see the settings of the project we created; versions and projects can be added or removed.

Click Next again.

This window lists the configuration users. Here you can set the current user's name and add other users.

Fill in the current user's full name and choose a default project.

You can also tick the checkbox that forces the user to change their password on first login to the test system.

Save the user and click Next.

Add contact information for the current user.

Every e-mail address that is listed and enabled will receive a report on each automatic test run.
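The recipient rule (only addresses that are both listed and enabled get the report) might be sketched as follows. This is Python for illustration, with made-up addresses and a hypothetical `build_report` helper; it only builds the message and does not send it.

```python
from email.message import EmailMessage

# Hypothetical contact records; "enabled" mirrors the checkbox next to each address.
contacts = [
    {"address": "dev@example.com", "enabled": True},
    {"address": "old@example.com", "enabled": False},
    {"address": "qa@example.com", "enabled": True},
]


def build_report(contacts, subject, body):
    """Build a report message addressed to every enabled contact."""
    recipients = [c["address"] for c in contacts if c["enabled"]]
    message = EmailMessage()
    message["Subject"] = subject
    message["To"] = ", ".join(recipients)
    message.set_content(body)
    return message


message = build_report(contacts, "Automatic test run", "Addition: passed")
print(message["To"])  # dev@example.com, qa@example.com
```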

Click Next; a form for filling in constants appears.

It holds the parameters for sending the generated e-mails with automatic testing results.

We skip this step and click Next again.

This window is where you create scheduled tasks for automatic testing of configurations.

For now we leave it unchanged and click Done.

At this point we have one project with one version of the current TC, and everything is ready for adding and running tests.

Start the TC in Enterprise mode and open the external data processor. It is stored in the BE configuration mentioned above under the name Testing and must be saved from the configuration as an external data processor.

Fill in the authorization form and connect to the test database.

The main form for working with tests appears.

On the left is the list of groups into which tests are organized. Add a new group for our new tests.

In the selected group, create a new test. As an example, I created a common module named Tests and added an export function to it:

```
Function Addition(Val Argument1, Val Argument2) Export
    Return Argument1 + Argument2;
EndFunction
```

In the test name I described what is being tested. In the Module field I specified where the tested method lives: the common module Tests.

If I were testing a method located in the object module of a catalog or a document, I would also have to specify a reference to a particular infobase object, because such a method runs in the context of that specific object. Here the context is global, so no reference to a context object is needed.

Next, copy the method signature and paste it into the Method Header field.

Then click the first button on the toolbar above this field, Disassemble the method. As a result, the Parameters and Results fields are filled in.
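What "disassembling" a method header involves can be approximated with a regular expression. The sketch below is Python and is not the tool's actual parser: `parse_method_header` and the shape of its result are assumptions, and it deliberately ignores corner cases such as commas inside default string values.

```python
import re

# Accepts both the English and the Russian keyword variants of the 1C language.
HEADER_RE = re.compile(
    r"^\s*(?P<kind>Function|Procedure|Функция|Процедура)\s+(?P<name>\w+)"
    r"\s*\((?P<params>.*)\)\s*(?P<export>Export|Экспорт)?\s*$",
    re.IGNORECASE,
)


def parse_method_header(header: str) -> dict:
    """Split a method header into its name, kind, export flag and parameters."""
    match = HEADER_RE.match(header)
    if match is None:
        raise ValueError(f"not a method header: {header!r}")
    params = []
    for raw in match["params"].split(","):
        # Drop a default value ("Name = 0"), then look for the Val modifier.
        words = raw.split("=", 1)[0].split()
        if not words:
            continue
        by_value = len(words) == 2 and words[0].lower() in ("val", "знач")
        params.append({"name": words[-1], "by_value": by_value})
    return {
        "name": match["name"],
        "is_function": match["kind"].lower() in ("function", "функция"),
        "exported": match["export"] is not None,
        "params": params,
    }


parsed = parse_method_header("Function Addition(Val Argument1, Val Argument2) Export")
print(parsed["name"])                         # Addition
print([p["name"] for p in parsed["params"]])  # ['Argument1', 'Argument2']
```

A function contributes its return value to the results; parameters passed without `Val` could be treated as additional outputs, since the method can modify them.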

Fill in the input values for the arguments.

Done.

Now run the test by clicking the second button (the green arrow), Calculating the results, and admire the outcome.

The test worked correctly, so we store the result as the reference by clicking the third button, Remember results as a standard.
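This workflow amounts to reference-value (golden master) testing: compute a result once, confirm it by eye, store it, and compare later runs against it. Here is a minimal Python sketch of the idea, with a hypothetical in-memory `ReferenceStore` standing in for the test database:

```python
class ReferenceStore:
    """Hypothetical in-memory replacement for the test database."""

    def __init__(self):
        self._references = {}

    def remember(self, test_name, result):
        """Store a manually verified result as the reference value."""
        self._references[test_name] = result

    def matches(self, test_name, result):
        """Compare a fresh result with the stored reference."""
        if test_name not in self._references:
            raise KeyError(f"no reference saved for {test_name!r}")
        return self._references[test_name] == result


def addition(argument1, argument2):
    return argument1 + argument2


def broken_addition(argument1, argument2):
    return argument1 - argument2  # simulates a regression in the tested method


store = ReferenceStore()
first_result = addition(2, 3)             # "Calculating the results"
store.remember("Addition", first_result)  # "Remember results as a standard"

print(store.matches("Addition", addition(2, 3)))         # True
print(store.matches("Addition", broken_addition(2, 3)))  # False
```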

Next, save the work to the test database.

That is basically it.

The toolbar above the test list has a button that runs all tests in the current group; the toolbar above the method header has buttons for running the test and for viewing the saved reference value.
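Running a whole group can be thought of as a loop over the stored tests that produces a pass/fail report. The Python sketch below is an illustration only: `StoredTest`, `run_group`, and the decision to count an exception as a failure are assumptions about how such a runner might behave.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class StoredTest:
    """Hypothetical record of one test: method, inputs and reference value."""
    name: str
    method: Callable[..., Any]
    arguments: Tuple[Any, ...]
    reference: Any


def run_group(tests: List[StoredTest]) -> Dict[str, bool]:
    """Run every test in the group and report which ones match their reference."""
    report = {}
    for test in tests:
        try:
            report[test.name] = test.method(*test.arguments) == test.reference
        except Exception:
            report[test.name] = False  # assumption: an exception counts as a failure
    return report


group = [
    StoredTest("Addition of integers", lambda a, b: a + b, (2, 3), 5),
    StoredTest("Division by zero", lambda a, b: a / b, (1, 0), 0),
]
print(run_group(group))
# {'Addition of integers': True, 'Division by zero': False}
```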

The remaining fields (Before execution, After execution, executable code, and so on) were described in the previous article.

Feel free to experiment.

The binaries are currently available on GitHub. If anyone feels like improving the system or fixing bugs, please share the results with the community. So far I have no idea how to develop 1C configurations collaboratively with synchronization over the Internet. The 1C configuration storage server is a useless layer on top of file access, and I see no reason to deploy it. Export and import of a configuration to XML is implemented only in 8.3; it may be the only sensible approach, but there are configurations on 8.1 and 8.2 that also need testing. So for now let it be GitHub; if a real need arises, we will think of something.

The code is distributed under the GPLv2 license, so if you use it, publish your changes to the code.

Unrealized dreams:

- It is ridiculous, of course, but the testing configuration itself has no tests. It would be good to start writing them.

- Refactoring. The code is far from perfect; there are kludges and other code smells in places. Code from other developers was merged into the project without a careful review, so there may be surprises.

- Per-project access rights. Right now any user has access to any project; each user should be assigned only the projects they need. Better still would be a full role system: the right to add a test, to run a group of tests, and so on.

- Convert the forms to managed forms. This would make it possible to test managed configurations without switching them to compatibility mode or embedding the external data processor into the configuration. By and large, the whole model for working with tests already lives in the module of the external data processor, and the form modules contain only controller glue code.

- Split the test repository and the automatic testing environment into two configurations. This would allow any platform version to be used for the test repository and let automatic testing run on a different computer. The internal architecture of the two parts is already separated; what remains is to make the actual split and replace the execution environment's direct access to the test storage infobase with a connection to the storage, calling the existing methods through that connection.

- Functional and interface improvements.
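The split between the test repository and the execution environment from the list above could be expressed as a narrow interface that the executor talks to instead of reading the storage directly. This is a Python sketch of the design idea only; `StorageConnection`, `InMemoryStorage`, `execute`, and the record layout are all hypothetical.

```python
from typing import Callable, Dict, List, Protocol


class StorageConnection(Protocol):
    """The only operations the execution environment needs from the repository."""

    def load_tests(self, project: str, version: str) -> List[dict]: ...

    def save_result(self, test_name: str, passed: bool) -> None: ...


class InMemoryStorage:
    """Stand-in for a remote test repository reached through a connection."""

    def __init__(self, tests: List[dict]):
        self._tests = tests
        self.results: Dict[str, bool] = {}

    def load_tests(self, project, version):
        return [t for t in self._tests
                if t["project"] == project and t["version"] == version]

    def save_result(self, test_name, passed):
        self.results[test_name] = passed


def execute(storage: StorageConnection, project: str, version: str,
            methods: Dict[str, Callable]) -> None:
    """Run the stored tests using nothing but the connection interface."""
    for test in storage.load_tests(project, version):
        actual = methods[test["method"]](*test["arguments"])
        storage.save_result(test["name"], actual == test["reference"])


storage = InMemoryStorage([
    {"project": "Demo", "version": "1.0.0.1", "name": "Addition",
     "method": "Addition", "arguments": (2, 3), "reference": 5},
])
execute(storage, "Demo", "1.0.0.1", {"Addition": lambda a, b: a + b})
print(storage.results)  # {'Addition': True}
```

Because the executor depends only on the interface, the repository could run on any platform version or on another machine, which is the point of the proposed split.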

Source: https://habr.com/ru/post/215409/
