Another do-it-yourself NAS, part 1: from whatever was at hand

Abstract

A new post about building a NAS appears roughly every six months, and it usually explains how to install the system by following the documentation. We will complicate the task by tying it to a real project with a limited budget. We will also try to lay down some straw in places where the young sysadmin has not yet walked, to soften the falls, and debunk several industry myths.

This article is not for storage professionals, gamers or other overclockers: for you, colleagues, the whole industry already works. It is for novice sysadmins, UNIX fans and free software enthusiasts. Everyone has accumulated old hardware. Everyone needs to store large volumes of data at home or in the office. But not everyone has easy access to server technologies.

I really hope you will find some useful ideas here and learn from the mistakes of others. Remember: a system is worth not what you paid for the hardware, but the time and effort you put into testing and operating it.

If you do not want to read it all, see the links and conclusions at the end; maybe you will change your mind.


DISCLAIMER

The information is provided AS IS, without any liability for its use by anyone, anywhere, ever. All trademarks mentioned in passing are the property of their respective owners. Some of them need advertising so little that I give them funny names.

Thanks

Respect to Andrei Aleksandrovich Bakhmetyev, engineer and inventor. I am proud that Andrei Aleksandrovich taught me at the institute! I wish him every success in his projects!

Task

So, there is a small startup business that generates about 50GB of files per week and needs to archive them for several years. The files are large (about 10–20 MB each) and cannot be compressed by conventional algorithms. The initial data volume is about 2TB. Very old data can be stored offline and connected on demand.
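A rough projection of how the archive grows makes these requirements concrete. This is a minimal sketch that assumes steady growth of 50GB per week, 52 weeks per year and decimal units (1TB = 1000GB). The function names are illustrative only.

```python
# Rough projection of archive growth for the task described above.
# Assumptions: steady 50 GB/week, 52 weeks/year, decimal units (1 TB = 1000 GB).

INITIAL_GB = 2000      # initial data volume, ~2 TB
WEEKLY_GB = 50         # new files per week
WEEKS_PER_YEAR = 52


def archive_size_gb(years: float) -> float:
    """Projected archive size after the given number of years."""
    return INITIAL_GB + WEEKLY_GB * WEEKS_PER_YEAR * years


def weeks_until_full(usable_gb: float) -> float:
    """Weeks until an array with the given usable capacity fills up."""
    return max(0.0, (usable_gb - INITIAL_GB) / WEEKLY_GB)


if __name__ == "__main__":
    for years in (1, 2, 3):
        print(f"after {years} year(s): ~{archive_size_gb(years) / 1000:.1f} TB")
    # prints 4.6 TB, 7.2 TB and 9.8 TB
```

Under these assumptions, about 10TB of online capacity covers roughly three years of growth.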

The solution must fit a very modest initial budget of 500 euros (summer 2013 prices) and a two-week window for assembly and testing.

For this money we need to build a system that lets a small group work with files over the same local network from different platforms (Windows, Mac OS). It must run for long periods without an on-site sysadmin, protect against failures and provide basic access-rights management.

Traditional approaches

Of course, you can buy network storage: NetApp, QNAP, Synology and other players make it, and they serve even small businesses well. But our 500 euros barely opens the conversation about an empty box, without the disks themselves. If you have 1000-2000 euros, you had better buy a finished product. We will try to pay as much as possible with knowledge and as little as possible with time and money.

UPD (spoiler rev. 2 dated 2014-03-08):

If you build from new hardware rather than from junk

Drawing on this post and its comments, kindly provided by the Habr community, I propose the following algorithm for a simple four-disk system:

- If twice the capacity of the largest available disk model is not enough for your data, stop reading this spoiler (example: with a 4TB model, continue if you need to store 7TB of data; stop if you want to store 10TB)

- Choose a product from the MicroServer line of the famous server manufacturer Harlampy-Pankrat, for example the N36L, N40L or N54L, with four drive bays (the main thing is ECC memory support)

- Be sure to equip the server with error-correcting (ECC) memory at the rate of 1GB for every 1TB of stored data, but no less than 8GB (as FreeNAS recommends; for disks of up to 4TB this works out to exactly 8GB)

- If ECC memory is not an option, stop reading this spoiler immediately and read the post to the end

- Choose the disk manufacturer using a current failure-rate review, for example this one: http://habrahabr.ru/post/209894

- Choose an inexpensive line of SATA disks that must support ERC (read why here: http://habrahabr.ru/post/92701)

- Select the disk capacity (2TB, 3TB or 4TB) keeping in mind that there will be four disks and only half of their total capacity will be available for data (the other half goes to RAID redundancy)

- Before buying, carefully check the compatibility of all components: the number of slots, bays, memory modules and so on. For FreeNAS, the most important thing is that the current FreeBSD kernel supports all the hardware

- Choose a good bootable flash drive after reading the continuation of this post (part 2: good memories)

- Buy, inhale the smell of new hardware, assemble, connect, run; for ZFS, be sure to turn off all hardware RAID

- Create a RAIDZ2 volume from the four disks, always with double redundancy. At around 12TB you risk meeting the evil URE; read about it in this post. If you are not afraid of URE and still build RAIDZ on four disks, check the physical sector size. On modern disks it is 4KB, and each disk then gets a completely ridiculous stripe of about 43KB (a 128KB record split across three data disks). That is not a whole number of sectors, and it will also slow the array down: forums.servethehome.com/hard-drives-solid-state-drives/30-4k-green-5200-7200-questions.html

- Add salt, sugar, pepper, jails, shares, scripts and other sour cream to taste
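The arithmetic behind several of these bullets fits in a few lines. This is an illustration under stated assumptions, not FreeNAS logic:
- four identical disks in RAIDZ2 (two disks' worth of parity);
- the 1GB-per-TB ECC rule of thumb quoted above;
- ZFS's default 128KB record size for the stripe example.

Filesystem overhead is ignored. The per-disk split is a simplification of how RAIDZ actually allocates sectors, and all function names are hypothetical.

```python
import math

# Assumptions: four identical disks, RAIDZ2 (two parity disks),
# "1 GB ECC RAM per 1 TB stored, but at least 8 GB", default 128 KiB ZFS record.
DISKS = 4
PARITY = 2


def usable_tb(disk_tb: float, disks: int = DISKS, parity: int = PARITY) -> float:
    """Usable capacity of a RAIDZ volume, ignoring filesystem overhead."""
    return disk_tb * (disks - parity)


def worth_continuing(data_tb: float, largest_disk_tb: float) -> bool:
    """First bullet: the data must fit into twice the largest disk."""
    return data_tb <= usable_tb(largest_disk_tb)


def ecc_ram_gb(stored_tb: float) -> int:
    """1 GB of ECC RAM per TB stored, never less than 8 GB."""
    return max(8, math.ceil(stored_tb))


def per_disk_share_kib(record_kib: int = 128, data_disks: int = 3) -> float:
    """Naive per-disk share of one record; RAIDZ1 on four disks has 3 data disks."""
    return record_kib / data_disks


if __name__ == "__main__":
    print(worth_continuing(7, 4), worth_continuing(10, 4))  # True False
    print(ecc_ram_gb(usable_tb(4)))                         # 8
    share = per_disk_share_kib()
    print(f"{share:.2f} KiB per disk, whole 4 KiB sectors: {share % 4 == 0}")
```

The last line shows why four-disk RAIDZ1 fits 4KB sectors poorly: about 42.67KiB per disk is not a multiple of 4KiB.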

But what about cloud storage, you ask? At the time of writing, popular cloud storage looks more expensive than we would like for our volumes. For example, storing an unlimited amount of data for 36 months with a well-known service, Drop Boxing, will cost a couple of thousand dollars or more, although you can pay gradually. Of course, there are services like Amazon Glacier (thanks to AM for the hint) or Open Window, but there are two problems. First, they charge not only for storage but also for retrieval, and how do you estimate that in advance? Second, let us not forget that the business sits on a 10Mbps Internet uplink. Moving terabytes around will take effort to manage and will also be very tedious for users.
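A quick back-of-the-envelope check shows why the uplink matters. This sketch assumes the full 10Mbps is available with no protocol overhead, unless an efficiency factor is given. Real transfers would take longer, and the function name is illustrative.

```python
# Time to push data through an Internet uplink.
# Assumption: decimal units; 'efficiency' < 1 models protocol overhead and contention.

SECONDS_PER_DAY = 86_400


def transfer_seconds(data_bytes: float, uplink_mbps: float, efficiency: float = 1.0) -> float:
    """Seconds needed to send data_bytes over an uplink of uplink_mbps megabits/s."""
    bits = data_bytes * 8
    return bits / (uplink_mbps * 1e6 * efficiency)


if __name__ == "__main__":
    initial = transfer_seconds(2e12, 10) / SECONDS_PER_DAY   # initial 2 TB archive
    weekly = transfer_seconds(50e9, 10) / 3600               # one week of new files
    print(f"initial upload: ~{initial:.1f} days, weekly increment: ~{weekly:.1f} hours")
```

That is roughly 18.5 days of saturated uplink for the initial archive and about 11 hours every week for new files, before any retrieval at all.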

Usually in such cases people take an old computer, buy some large disks, install Linux (not necessarily; some manage with Windows 7) and build a RAID5 array. Fine. Everything works well for six months or a year, but one sunny morning the server suddenly disappears from the network without any warning. Of course, the sysadmin has long since moved to another company (staff turnover), and there is no backup (the volumes are too large). The new sysadmin cannot repair the system (now, if the old sysadmin had used Linux dialect ZZZ instead of YYY, there really would be no problems). All these stories repeat the same way for years; only the OS versions change and the data volumes grow.

Industry Myths

RAID5 myth

The most common myth, one I myself believed until recently, is that probability theory makes a second failure in an array practically impossible. It is possible, and how! Let us simulate a real situation: the server has worked for a couple of years, and then a disk in the array fails. Nothing terrible so far. We put in a new disk, and what happens? Right, array reconstruction, i.e. a prolonged maximum load on already worn disks. In such a situation further failures are quite possible, and they do occur.

But that is not all. There is also the manufacturer-specified probability of an unrecoverable read error, which under certain circumstances now almost guarantees that RAID5 will not rebuild after a disk failure.

Terabyte myth

You might assume that all disk manufacturers are novice programmers, but the industry kilobyte is 1000 bytes, strictly according to SI. The other kilobyte, 1024 bytes, has officially been called a kibibyte since 1998 and is denoted KiB. However, that is not all. Every spindle disk leaves the factory with defects already found there, and their number is random, so the actual available capacity "floats". In budget models it floats even within a single batch of identical products, in both directions. In my set of four identical disks with a nominal capacity of 2TB, two turned out to be about 2GB smaller and the other two about 400MB larger than nominal. So a kilobyte, like the sine in wartime, ranges from 999 bytes and 6 bits to an honest 1000 bytes with a little extra. Either the products reach our market in leaky submarines, or the flood is to blame, but the bits go somewhere.
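Both points can be expressed numerically: the difference between the two kilobytes, and what the measured deviations mean per "kilobyte". This is a minimal sketch. The byte counts are the approximate figures from the text, not exact LBA counts, and the function names are illustrative.

```python
# Decimal (SI) vs binary (IEC) units and the "floating" capacity of budget disks.
TB = 10**12   # vendor terabyte
TiB = 2**40   # tebibyte, what many operating systems actually report


def tb_to_tib(tb: float) -> float:
    return tb * TB / TiB


def effective_kilobyte(actual_bytes: float, nominal_bytes: float) -> float:
    """How many bytes one nominal 'kilobyte' is actually worth on this disk."""
    return 1000 * actual_bytes / nominal_bytes


if __name__ == "__main__":
    print(f"2 TB = {tb_to_tib(2):.2f} TiB")                    # 1.82 TiB
    nominal = 2 * TB
    for delta in (-2e9, +400e6):                               # ~2 GB short, ~400 MB extra
        print(f"{effective_kilobyte(nominal + delta, nominal):.1f} bytes per 'kilobyte'")
```

The same conversion explains why 20TB of raw disks shows up as roughly 18TiB, and 10TB as roughly 9TiB.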

Do not underestimate this factor. Suppose the disk replacing a failed one turns out to be even one block smaller than the nominal capacity. Then the degraded RAID cannot, even in theory, be brought back to an optimal state, and we get a headache that could easily have been avoided at the start. Hence more does not mean better; what matters is consistency.

I assume that server hardware manufacturers solve this problem by always keeping a technological reserve and artificially limiting the available capacity in the disk firmware. That way a given part number always yields (within the support period) a disk of exactly the same capacity. This is probably one of the reasons why a Seagate drive under the well-known server brand Harlampy-Pankrat and its "brother" without that brand are not quite the same product. But this is just my guess. Perhaps the storage market leaders have other trump cards as well.

Project risks

In any project it is important to understand the risks, because in the end we are building not for fun but for the success of the business. To achieve Crepsondo harmony (excuse me, business continuity), we will start with a simplified risk model that takes possible failures and their consequences into account.

Hardware

Given the budget, we have no access to server hardware, so we can use only cheap disks and controllers, and that is the territory of spontaneous failures out of the blue. Hardware risks include:
- mechanical wear (spindle disks, fans);
- electrical wear (especially flash memory);
- bugs in disk or controller firmware;
- a poor-quality power supply;
- poor-quality disks;
- a flaky hardware RAID.

A lack of spare parts in stock due to obsolescence can also be considered a risk.

Software

Software risks include the problems of general-purpose operating systems, which tend to degrade over time, recover poorly after power failures and require regular administration. Add to this software RAID reconstruction, bugs in controller drivers, user actions (intentional and unintentional) and malicious code.

Hardware at hand

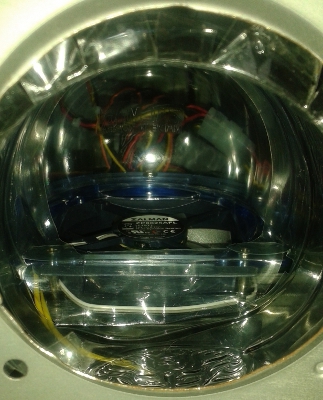

At hand was my old computer from around 2004: a Socket 478 GA-8IPE1000MK motherboard with a Pentium 4 @ 3 GHz CPU and 1 GB of RAM. The case, labeled ZEUS, has:
- as many as six internal 3.5" bays (a lot by today's standards);
- one 3.5" bay for an archaic FDD;
- four 5.25" bays;
- two mounts for cooling fans;
- a 250W power supply.

The ATI RADEON 8500 video card once rendered hits such as Soldiers of Anarchy. Its oil-bearing fan, however, has long howled like the Hound of the Baskervilles (when it manages to spin at all). CPU cooling was handled by a Zalman CNPS5700D-Cu. It pulled hot air off the heatsink and blew it through a quirky duct into the case, from where a second fan had to blow it out again.

One day I got so sick of this whole airfield that I decided to cut it short, literally. I took a power saw and cut a round hole in the case (at the fan grille), then extended the air duct with a piece of a Karma Doma mineral water bottle. I removed the second fan and lowered the speed of the first (CPU) fan with a rheostat.

In this slightly punk form, the whole setup sat sadly on the shelf until now.

Completing my Kunstkamera is a hole in the rear panel of the case, thanks to the case manufacturer's free interpretation of the ATX standard. The I/O blanking plate could not be fitted there without a file, and I gave up trying.

The motherboard's memory controller did not allow changing modules in STANDBY mode (the computer has been turned off with the button, but the power supply is still on). There was even a dedicated LED, labeled RAM_LED, whose job was to warn the sysadmin that the circuit was live:

When RAM_LED is ON, do not install / remove DIMM from socket

Naturally, the controller eventually died. Unless you wiggled the memory in the slot in a certain shamanic way, the motherboard did not see it and beeped resentfully. According to the manual, this beep code could mean either a RAM problem or a power supply problem, which was thoroughly confusing. To complete the picture, the BIOS created some particularly wicked environment when booting from flash drives, so none of the SYSLINUX derivatives would boot at all. For reference, SYSLINUX is practically the only CD/flash drive bootloader for a huge number of Linux variants.

So why am I telling you all this?

Findings:

- Such a computer is completely unsuitable for a server role.

- Tinkering with old hardware is categorically contraindicated for young sysadmins.

Ideas

Replacing the hardware

Of course, the buggy motherboard, worn-out mechanics and old power supply do not fit the Crepsondo philosophy at all (oops, business continuity again, sorry). They must be replaced first and foremost, without further discussion. Crepsondo harmony matters more to us, so let us say goodbye to the old hardware: it has fulfilled its historical mission.

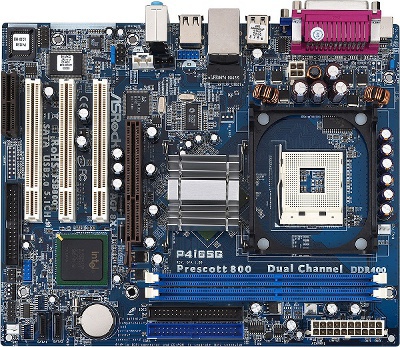

The choice of Socket 478 replacements turned out to be slim: the ASRock P4i65G. It seems a good board, with onboard graphics, three PCI slots, two SATA ports and six USB ports. Hardware monitoring is based on a Winbond W83627, which the lm-sensors package supports. This later proved useful for calibrating the fan rheostat against the CPU temperature reported by the operating system.

Now nothing beeps, and booting from flash drives works fine, which is already pleasing. The onboard 100-megabit network is not enough for a NAS, so a budget D-Link DGE-530T immediately takes one PCI slot, leaving two PCI slots for disk controllers. These usually have up to four ports each, which together with the two onboard ports lets us connect ten drives.

I will say more about the new power supply later; for now I will just note that 250W was enough for my Socket 478 system. So, having mentally budgeted another 200W for spinning up the disks, I immediately agreed to the budget FSP Group ATX-450PNR, rated at 450W, that the store offered me. At first glance I liked its large, low-speed 120mm fan, which should mean less noise (UPD: looking ahead, despite all the tricks, the ATX-450PNR could not cope with the task, and I do not recommend using it; see habrahabr.ru/post/218387).
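The sizing logic above amounts to a simple sum. This sketch reproduces that reasoning only:
- the 250W base figure is the old PSU's rating, not a measured draw;
- the 200W disk allowance is the author's mental estimate, split here over ten disks at an assumed 20W each at spin-up.

Total wattage is itself a simplification. Spindle motors draw mainly from the 12V rail, and that rail has its own rating. The function name is hypothetical.

```python
# Peak power estimate at spin-up, following the reasoning in the text.
# Assumptions: base system ~250 W (old PSU rating), ~20 W per disk at spin-up.

def psu_headroom_w(rating_w: float, base_w: float, disks: int, spinup_w: float) -> float:
    """Remaining PSU capacity (W) when all disks spin up at once; negative means overload."""
    return rating_w - (base_w + disks * spinup_w)


if __name__ == "__main__":
    for disks in (4, 10):
        print(disks, "disks:", psu_headroom_w(450, 250, disks, 20), "W headroom")
```

With all ten bays populated, the estimate leaves no headroom at all, so the per-disk assumption deserves checking against the drive datasheet.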

At the same time, I grabbed a pair of Zalman ZM-F1-FDB fans with trendy fluid-dynamic bearings: one goes on the CPU cooler, and the other cools the first group of disks.

All that remains is to choose the most important part.

Disk subsystem

For network storage, the most important decision is the choice of array mode (RAID). Our budget does not allow server hardware, so we sigh and immediately set aside hardware RAID controllers, SAS and Fibre Channel. Solid-state drives go on the same pile. If we are building a NAS in the kitchen (sorry for the pun), the thorny path leads through the magical world of software RAID on cheap SATA spindle drives. It is much more entertaining, and Crepsondo practice will help us.

Disks

In my subjective opinion, the SATA segment is still more confusing than SAS/FC and more mixed with marketing. In Seagate's spindle drive lineup I saw two rough price tiers that differ by about 40%. The upper tier is positioned for medium-sized businesses, and the lower one for home users and small businesses. What is the danger of using the cheapest disks? According to the subjective estimates of some experts (link), cheap drives fail significantly more often than expensive ones in the first week of operation, and the trend continues through the first year. I cite this table here with caution. I repeat that it is a very approximate subjective assessment by one Internet user and names no specific products:

| Technology | Failures in the first week | Failures in the first year |
|---|---|---|
| Fibre Channel | 1 of 40 | 2 of 40 |
| SAS | 1 of 34 | 2 of 34 |
| SATA, expensive | 1 of 14 | 2-4 of 14 |
| SATA, cheap | 1 of 8 | 2-4 of 8 |

According to the same user, about one or two out of a dozen year-old SATA drives fail in their second year. Of course, all SATA drives behave significantly worse than SAS or Fibre Channel; this is hard to argue with. It is just as hard to argue with the allocated budget, which leaves us little choice.

I chose Seagate as the manufacturer quite intuitively, so I will not describe that process.

UPD:

Since the events described took place in summer 2013, I had not yet read this wonderful post: http://habrahabr.ru/post/209894/. It shows that Seagate is not the best choice, but the reader is now forewarned and forearmed. Thank you, Habr community, you are the best!

Quickly going through store offers, I noticed that budget 4TB disks cost almost 90% more than 2TB ones. In other words, price grew almost linearly with capacity, and the cost per gigabyte stayed nearly the same. Why is this so important? I could not find a PCI controller with guaranteed support for 4TB drives, and there was no chance to experiment. This posed a difficult choice: either limit ourselves to 2TB disks, or abandon the old hardware and move to PCI Express (buying a new computer). Fortunately, the almost linear price curve spared us that decision. Still, I recommend always considering the total cost of the disk subsystem. In a NAS it is decisive, and the benefit of high-capacity disks can outweigh everything else.
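Comparing cost per terabyte, and then the whole subsystem, takes only a few lines. This sketch rests on the following assumptions:
- the 2TB disk price (76 EUR) and the controller price (20 EUR, four ports) come from the bill of materials;
- the 4TB price is simply 1.9 times the 2TB price, as described above;
- disks are mirrored in pairs, and two onboard SATA ports are available.

The 4TB case ignores the controller's 2.2TB limit and is purely hypothetical, as are the function names.

```python
import math

PRICE_2TB = 76.0               # EUR, from the bill of materials
PRICE_4TB = PRICE_2TB * 1.9    # "almost 90% higher", hypothetical figure
CONTROLLER = 20.0              # EUR per 4-port PCI controller
PORTS_PER_CONTROLLER = 4
ONBOARD_PORTS = 2


def eur_per_tb(price: float, capacity_tb: float) -> float:
    return price / capacity_tb


def mirrored_subsystem_eur(usable_tb: float, disk_tb: float, disk_price: float) -> float:
    """Disks (mirrored pairs) plus the extra controllers needed to attach them."""
    disks = 2 * math.ceil(usable_tb / disk_tb)
    controllers = math.ceil(max(0, disks - ONBOARD_PORTS) / PORTS_PER_CONTROLLER)
    return disks * disk_price + controllers * CONTROLLER


if __name__ == "__main__":
    print(f"{eur_per_tb(PRICE_2TB, 2):.1f} vs {eur_per_tb(PRICE_4TB, 4):.1f} EUR/TB")
    for disk_tb, price in ((2, PRICE_2TB), (4, PRICE_4TB)):
        print(f"8 TB usable on {disk_tb}TB disks: {mirrored_subsystem_eur(8, disk_tb, price):.0f} EUR")
```

Under these assumptions, the larger disks pull ahead once controllers and ports are counted. That is the point of looking at the total rather than the price per disk.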

I liked the price of the ST2000DM001. It was the cheapest 2TB option in the Seagate line. It uses the new 4KB sector size and requires proper initialization (aligned formatting) of the file system. Interestingly, ST2000DM001 units come with either two or three platters (the picture shows the two-platter variant).
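In practice, "proper initialization" is mostly about partition alignment. Drives of this class typically report 512-byte logical sectors while writing 4KB physical sectors internally, so a partition should start at a byte offset divisible by 4096. Below is a minimal check, assuming 512-byte logical addressing. The example start sectors are the classic DOS default (63) and the common modern default (2048), and the function name is illustrative.

```python
LOGICAL_SECTOR = 512    # bytes per LBA as reported to the OS (assumed 512e drive)
PHYSICAL_SECTOR = 4096  # bytes per physical sector on the platter


def is_4k_aligned(start_lba: int, logical: int = LOGICAL_SECTOR,
                  physical: int = PHYSICAL_SECTOR) -> bool:
    """True if a partition starting at start_lba begins on a physical-sector boundary."""
    return (start_lba * logical) % physical == 0


if __name__ == "__main__":
    for start in (63, 64, 2048):
        print(start, is_4k_aligned(start))   # 63 False, 64 True, 2048 True
```

On a misaligned partition, many logical writes straddle two physical sectors. The drive then has to read-modify-write both, which is where the slowdown comes from.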

We take four ST2000DM001 drives at 7200 rpm; that is enough to start. Three of them have two platters (larger recess on the housing, third letter of the serial number: E), and the fourth has three platters (smaller recess, third letter of the serial number: F). Drive variant 1CH164, firmware version CC26. Do not forget that we are dealing with cheap SATA drives, so at the very least update the firmware to CC29.

Of course, the ST2000VN000 from the NAS HDD line is better suited to our task. It has ERC, which is very useful in an array, and runs at 5900 rpm. One can expect it to run cooler and last longer, while the speed difference is unlikely to be noticeable in network storage use.

UPD:

When choosing disks for an array, be sure to require ERC support; read about it here: habrahabr.ru/post/92701

Initially I was greatly confused by the ST2000DM001's Power-On Hours rating of 2400h, but for Seagate this is the number of operating hours per year, not the total lifetime. Well, let us hope that about 6.6 hours a day (a bit over a quarter of the time) is enough for a small business. You can put the spindles into STANDBY on a timeout, at the expense of the drive's start-stop mechanics. Whether such savings are justified, time will tell.
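Converting the rating into a daily budget shows what it means for an always-on server. This sketch assumes a 365-day year and uses the 2400h figure quoted above. The function names are illustrative.

```python
HOURS_PER_YEAR = 365 * 24   # 8760, ignoring leap years


def hours_per_day(rated_hours_per_year: float = 2400) -> float:
    return rated_hours_per_year / 365


def overrun_factor(actual_hours_per_year: float = HOURS_PER_YEAR,
                   rated_hours_per_year: float = 2400) -> float:
    """How many times a given operating schedule exceeds the rating."""
    return actual_hours_per_year / rated_hours_per_year


if __name__ == "__main__":
    print(f"{hours_per_day():.1f} h/day")                          # 6.6 h/day
    print(f"24/7 operation: {overrun_factor():.2f}x the rating")   # 3.65x
```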

Controller

The choice of vintage SATA controllers for the PCI bus turned out to be slim. I bought a budget 4-port STLab A-224 based on the Silicon Image SiI3114. This controller does not officially support disks larger than 2.2TB, although a few forum users claim otherwise.
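The 2.2TB figure is exactly what 32-bit sector addressing with 512-byte sectors gives. That this is the cause of the A-224's limit is an assumption here, not something stated above. A minimal sketch with an illustrative function name:

```python
SECTOR = 512  # bytes per logical sector


def max_addressable_bytes(lba_bits: int, sector: int = SECTOR) -> int:
    """Largest capacity reachable with lba_bits-wide sector numbers."""
    return 2**lba_bits * sector


if __name__ == "__main__":
    limit = max_addressable_bytes(32)
    print(limit, f"= {limit / 1e12:.2f} TB")   # 2199023255552 = 2.20 TB
    for disk_tb in (2, 3, 4):
        print(f"{disk_tb} TB disk fits: {disk_tb * 10**12 <= limit}")
```

A 2TB disk fits under this limit, while 3TB and 4TB disks do not, which matches the choice of 2TB disks.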

Since we are working with low-end hardware, it is better not to use hardware RAID. Why?
- The industry does not care much about low-end RAID controllers and leaves insidious bugs in them.
- A software array is easier to monitor, repair and tune.
- Our computer is effectively a RAID controller with a network port.

But I still wish good luck to any daredevil who disagrees with me.

Despite its many flaws, archaic hardware still has one significant advantage. Its drivers have most likely long been built into the kernels of every operating system in the world, and years of use have shaken almost all the bugs out of both the drivers and the controller firmware. I hope this is the case with the A-224, because firmware bugs are very, very dangerous. Shopkeeper, two controllers, please, before they disappear from sale for good!

Total

| Product | Approx. price, EUR | Qty | Cost, EUR |
|---|---|---|---|
| ASRock P4i65G motherboard | 47 | 1 | 47 |
| STLab A-224 controller | 20 | 2 | 40 |
| ST2000DM001 disk | 76 | 4 | 304 |
| D-Link DGE-530T network adapter | 8 | 1 | 8 |
| FSP ATX-450PNR power supply | 32 | 1 | 32 |
| Zalman ZM-F1-FDB fan | 7 | 2 | 14 |
| 8GB USB flash drive | 5 | 1 | 5 |
| Cables, adapters, thermal paste, etc. | 10 | - | 10 |
| TOTAL | | | 460 |
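The total is easy to double-check against the 500 euro budget. A trivial sketch; the prices and quantities are copied from the table, and the item labels are paraphrased.

```python
BUDGET_EUR = 500

# (item, unit price in EUR, quantity), prices and quantities from the table above
BOM = [
    ("ASRock P4i65G motherboard", 47, 1),
    ("STLab A-224 controller", 20, 2),
    ("ST2000DM001 disk", 76, 4),
    ("D-Link DGE-530T network adapter", 8, 1),
    ("ATX-450PNR power supply", 32, 1),
    ("Zalman ZM-F1-FDB fan", 7, 2),
    ("8GB USB flash drive", 5, 1),
    ("Cables, adapters, thermal paste, etc.", 10, 1),
]


def total_eur(bom) -> int:
    return sum(price * qty for _, price, qty in bom)


if __name__ == "__main__":
    total = total_eur(BOM)
    print(f"total {total} EUR, reserve {BUDGET_EUR - total} EUR")  # total 460 EUR, reserve 40 EUR
```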

Let us look at our design again. It is as simple as a railway sleeper; a controller in JBOD mode has little that can fail. The four SATA III disks are about ten years younger than the controller. They deliver 150MB/s on average across the platter, which is more than the entire PCI bus. So they will squeeze all the juice out of the controller, but this is unlikely to be very noticeable over the network. Rebuilding a degraded 2TB mirror will take 8 hours or more, which is a lot but not fatal; with 4TB it would be 16 hours. We have:
- a slow processor and some RAM;
- several USB ports and gigabit networking;
- brand-new mechanics;
- free controller ports and free bays in the case;
- a power reserve.

We are within the hardware budget; now let us deal with the software.
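The bus arithmetic behind these numbers can be sketched as follows. The assumptions are:
- conventional 32-bit/33MHz PCI, with a theoretical peak of about 133MB/s shared by every PCI device, including the DGE-530T network card;
- both mirror members sit on PCI, so every rebuilt byte crosses the bus twice (read from the survivor, written to the new disk);
- there is no other traffic.

Real PCI throughput is below the peak, so these figures are optimistic. The names are illustrative.

```python
PCI_PEAK_MB_S = 4 * 33.33      # 32-bit (4 bytes) x 33.33 MHz, ~133 MB/s theoretical
DISK_MB_S = 150                # average sequential speed of the new disks
GIGABIT_MB_S = 1000 / 8        # 125 MB/s of raw gigabit Ethernet


def mirror_rebuild_hours(disk_tb: float, bus_mb_s: float = PCI_PEAK_MB_S,
                         disk_mb_s: float = DISK_MB_S) -> float:
    """Hours to copy one whole disk onto its replacement over a shared bus."""
    rate = min(disk_mb_s, bus_mb_s / 2)   # each byte crosses the bus twice
    return disk_tb * 1e6 / rate / 3600    # TB -> MB, then seconds -> hours


if __name__ == "__main__":
    print(f"one disk alone ({DISK_MB_S} MB/s) exceeds PCI: {DISK_MB_S > PCI_PEAK_MB_S}")
    print(f"gigabit at full speed would use {GIGABIT_MB_S / PCI_PEAK_MB_S:.0%} of PCI")
    for tb in (2, 4):
        print(f"{tb} TB mirror rebuild: ~{mirror_rebuild_hours(tb):.1f} h")
```

The result lands close to the 8 and 16 hours quoted above. It also shows that network traffic and disk traffic compete for the same bus.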

Software selection

Of the free storage systems, FreeNAS, OpenMediaVault (OMV) and openfiler are mentioned most often. Each has its own distinctive features: for example, OMV has very modest requirements, and openfiler supports remote replication.

Let us look at the FreeNAS project, based on the FreeBSD platform. For those who do not know: "frishka", as FreeBSD is affectionately called, is not Linux. It is a free classic UNIX named after the University of California, Berkeley (BSD stands for Berkeley Software Distribution). By the way, FreeBSD can be considered the grandfather (grandmother?) of modern Mac OS X.

Why FreeNAS? Its main advantage is that it is a free system with an industrial design that boots from a small flash drive, like the famous-brand hypervisor Wash Varyu. What does industrial design mean? FreeNAS mounts the root file system on the flash drive read-only and writes logs to the data storage or to a RAM disk. I did not find such a mode in OMV or openfiler. This not only saves the flash memory from wear, but also protects the server from software failures. FreeNAS is truly implemented as a turnkey box, i.e. a box with a single ON button. According to the Crepsondo paradigm, this is the ideal solution for a business. Recall also the requirement to support Windows and Mac OS client workstations.

FreeNAS's big "trick" is its lightweight jails (

But do not treat FreeNAS as a budget solution. The FreeNAS team focuses on the modern ZFS file system. It is a whole galaxy of technologies, but for us its RAM appetite matters most. For adequate performance with our amount of stored data, 8GB of memory, and hence a 64-bit architecture, is just the starting point (link1, link2). I do not argue: ZFS can be run on i386, even with 1GB of RAM. But despite my efforts, ZFS on the old hardware performed so badly, even in simple linear reads and writes, that I can only consider this option a mock-up, not a solution. On top of that, with four drives ZFS recommends RAIDZ2. The effective capacity is then half (as with RAID1), while CPU and RAM consumption is exorbitant. The question is: how justified is ZFS on just four disks? In theory, a ZFS array rebuilds faster, since it resilvers only the blocks actually in use. But there is one factor that is very unpleasant for us.

The main problem is that ZFS is very sensitive to RAM errors. Here is the conclusion of a study of ZFS resilience to memory errors by Yupu Zhang, Abhishek Rajimwale, Andrea C. Arpaci-Dusseau and Remzi H. Arpaci-Dusseau of the University of Wisconsin-Madison (quoted from End-to-end Data Integrity for File Systems: A ZFS Case Study):

In the last section we showed the robustness of ZFS to disk corruptions. Although ZFS was not specifically designed to tolerate memory corruptions, we still would like to know how ZFS reacts to memory corruptions, ie, whether ZFS can detect and recover from a single bit flip in data and metadata blocks. Our fault injection experiments indicate that ZFS has no precautions for memory corruptions: bad data blocks are returned to the user or written to disk, file system operations fail, and many times the whole system crashes.

How about losing an entire multi-terabyte array because of a RAM error? No, thank you. We are Crepsondo adepts; we see such scenarios well in advance and right through. Our budget is definitely not enough for new error-correcting memory modules (ECC RAM) and a new server motherboard, plus the processor, cooling, chassis, power supply and so on that come with them. So, without further regret, we put ZFS aside. A good technology, but without server hardware it is a time bomb.

Conclusion: if you want an industrial, turnkey-box design, it is FreeNAS. If you build from old junk, it is not ZFS, which leaves UFS on the GEOM framework. The only problem is that FreeNAS recommends at least 2GB of RAM even with UFS, and we do not have that. This is a risk, but our workload will be quite small.

A bit of history

The geom(4) framework is a modular subsystem for processing disk I/O. It was developed around 2003 by the Network Associates (McAfee) security lab for the FreeBSD project, under a contract with DARPA, the alma mater of the entire Internet. So from a certain angle, GEOM can be considered a disk-side relative of the Internet itself, "wired" into the UNIX kernel by antivirus programmers. Quite a cocktail.

It is worth recalling the fate of the FreeNAS project itself, which went through a kind of split personality (or rather a three-way split, if we count the aforementioned OMV on Debian). Without going into details, we end up with two very similar projects: the new FreeNAS and the old one, now called NAS4free for legal reasons.

It seems the new owners of FreeNAS spared no effort on a deep refactoring of the code, which probably explains why some "outdated" features (for example, RAID5) were dropped. In any case, FreeNAS looks like a strong driver of FreeBSD development, with a clear interest in developing ZFS in the FreeBSD kernel. We wish our colleagues good luck.

Comparing FreeNAS with its ancestor branch NAS4free, FreeNAS subjectively looks stronger to me, despite the lack of RAID5. There is a feeling that is hard to put into words: behind the NAS4free web interface you can sense a code smell that calls for deep refactoring. So what does refactoring give you? A simple example: unlike NAS4free, FreeNAS can apply configuration changes without a full system reboot, even when running from a flash drive. It does so even though the root file system is mounted read-only. For me this was a strong argument. In addition, FreeNAS has moved to storing its configuration in the SQLite RDBMS. NAS4free still uses a simple, but not the most reliable, XML format.

RAID5 or not RAID5

UFS and GEOM software RAID arrays are no match for ZFS with RAIDZ; at first glance it looks like a pile of railway sleepers competing with a cable-stayed bridge. Still, GEOM does support the popular modes. However, modern FreeNAS does not allow creating RAID5 volumes. Only the simplest modes, RAID0 (stripe) and RAID1 (mirror), remain for compatibility.

Why is that?

There are probably two reasons for this; let us call them simply mechanical and mathematical (although in spindle disks they are intertwined like wave-particle duality).

Imagine a disk failing and being replaced in a 10TB array after two years of operation. For a whole week (!) the reconstruction will torture the already worn spindles (see "RAID5 myth" above). Under such stress the old disks may not last even three days before finally dropping the array. Then the stress begins for us, and what stress.

You ask: how come, why a week for reconstruction? Let us look at representatives of two generations of Seagate Barracuda (based on materials from http://www.storagereview.com):

| Series | Approx. year | Capacity | Avg. read speed, MB/s | Full read, h:mm | RAID5 rebuild |
|---|---|---|---|---|---|
| 7200.9 | 2005 | 500GB | 50 | ~2:45 | very long |
| 7200.14 | 2012 | 4TB | 150 | ~7:25 | prohibitively long |

While capacity has grown about 8 times, speed has grown only three times. The irony is that the full-read time gives us, a priori, the RAID1 rebuild speed, and even this fastest option will not be so hot on our vintage PCI controller. In RAID5 arrays the speed is largely determined by the processor's computing power. By various estimates it is about a day per TB of data (alas, I cannot give you any links, sorry).
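The "full read" column follows directly from capacity divided by average speed. This small sketch reproduces it, assuming decimal units and a sustained average speed over the whole surface. The function names are illustrative.

```python
def full_read_seconds(capacity_gb: float, avg_mb_s: float) -> float:
    return capacity_gb * 1000 / avg_mb_s


def as_h_mm(seconds: float) -> str:
    minutes = round(seconds / 60)
    return f"{minutes // 60}:{minutes % 60:02d}"


if __name__ == "__main__":
    for name, gb, speed in (("7200.9", 500, 50), ("7200.14", 4000, 150)):
        print(name, as_h_mm(full_read_seconds(gb, speed)))
    print("capacity x", 4000 / 500, "speed x", 150 / 50)
```

The differences of a minute or two from the table are rounding.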

But that is not all, dear reader. Disks have a parameter called the Unrecoverable Read Error Rate, which for modern low-end SATA models is 1 sector per 10^14 (one hundred trillion) bits read. That is, roughly once per 12TB read, a disk says: "Forgive me, master, but I absolutely cannot return this sector; read error." This error rate is built in and declared by the manufacturer. In theory it almost guarantees that a RAID5 array larger than 12TB on cheap disks cannot be reconstructed. In fairness, the URE rate of SAS disks is at least an order of magnitude lower, and their critical volume correspondingly larger. The epitaph for RAID5 was written by Robin Harris in his article Why RAID 5 stops working in 2009.
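The 12TB figure and the rebuild odds follow from a simple model. This sketch assumes errors occur independently at exactly the specified rate of one per 10^14 bits. Real drives may do better than the spec (it is a worst-case figure) or worse (errors cluster), so treat the numbers as illustrative. The 12TB example corresponds to rebuilding a four-disk RAID5 of 4TB drives, which must read the three surviving disks in full. The function names are hypothetical.

```python
import math

URE_PER_BIT = 1e-14   # one unrecoverable read error per 1e14 bits (consumer SATA spec)


def bytes_per_expected_error(rate: float = URE_PER_BIT) -> float:
    return 1 / rate / 8


def p_clean_read(bytes_read: float, rate: float = URE_PER_BIT) -> float:
    """Probability of reading bytes_read without a single URE (independent errors)."""
    bits = bytes_read * 8
    return math.exp(bits * math.log1p(-rate))   # (1 - rate) ** bits, computed stably


if __name__ == "__main__":
    print(f"one URE expected every {bytes_per_expected_error() / 1e12:.1f} TB")   # 12.5 TB
    print(f"RAID5 4x4TB rebuild (12 TB read): {p_clean_read(12e12):.0%} chance of success")
    print(f"2TB mirror rebuild (2 TB read):   {p_clean_read(2e12):.0%} chance of success")
```

The same model shows why a 2TB mirror, which reads only one disk during a rebuild, fares much better.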

Given our hardware, the maximum total capacity of our disks is 20TB (about 18TiB). So, reminding ourselves once again of the path to business continuity through the practices of the Crepsondo philosophy, let us take a breath and pay our last respects to RAID5 together.


So I reject hardware RAID (expensive), ZFS (expensive) and software RAID5 (slow and unreliable). I choose FreeNAS with UFS volumes built on GEOM. They can be repaired simply, reliably and, if need be, like a Kalashnikov rifle. Just what we need.

Add a USB flash drive to boot the system, so that the spindle disks are dedicated entirely to data. We do not want anyone to pull out a boot flash drive sticking out of the case, so we choose a budget flash drive with the smallest dimensions. As it turned out, this was a fatal and rash decision: http://habrahabr.ru/post/214803/

Of the Stripe and Mirror options, I naturally choose Mirror (i.e., RAID1). The resulting disk system is a set of several independent mirror volumes. Each mirror is built from a pair of 2 TB disks (a controller limitation) and is initialized and mounted independently. The maximum amount of data stored online on ten disks will be about 10 TB in five independent volumes (more precisely, 9 TiB).
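How long does that online capacity last? The sketch below plugs in the figures from the task statement (about 2 TB of initial data and about 50 GB of new files per week) and assumes, for simplicity, that every byte of each 2 TB mirror is usable; in reality the reserved "tails" and UFS's reserved free space (8% by default) shorten these numbers somewhat.

```python
# Capacity planning for the mirror set: each mirror of two 2 TB disks holds
# one disk's worth of data. Ignores filesystem overhead and reserved space.

MIRRORS = 5
DISK_TB = 2.0
INITIAL_TB = 2.0       # initial data volume from the task statement
WEEKLY_GB = 50.0       # weekly growth from the task statement

def weeks_until_full(online_tb: float, initial_tb: float, weekly_gb: float) -> float:
    """Weeks until the online volumes are full at a constant growth rate."""
    free_gb = (online_tb - initial_tb) * 1000
    return free_gb / weekly_gb


if __name__ == "__main__":
    online_tb = MIRRORS * DISK_TB
    weeks = weeks_until_full(online_tb, INITIAL_TB, WEEKLY_GB)
    print(f"{online_tb:.0f} TB online lasts ~{weeks:.0f} weeks (~{weeks / 52:.1f} years)")
    per_volume = weeks_until_full(DISK_TB, 0.0, WEEKLY_GB)
    print(f"each fresh 2 TB mirror fills in ~{per_volume:.0f} weeks")
```

With these inputs, the five mirrors hold about three years of growth, and each new mirror added later fills in roughly 40 weeks.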

Although this design may seem a bit clumsy, it is well justified for our data volumes and number of disks: otherwise we would get an indivisible monolith with extremely long rebuild times after a failure.

One more small touch: since we use cheap consumer disks, whose actual capacity varies slightly around the nominal 2 TB, we deliberately make each volume a little smaller than the disk, so that a failed disk can later be replaced with a new one without trouble. Leaving an unused technological "tail" at the end of each disk helps us sleep better.
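One way to size such a tail, sketched below: take the disk's byte count, keep back a fixed percentage, and round down to a 1 MiB boundary so the partition stays aligned. The 1% reserve and the 1 MiB alignment are illustrative choices of ours, not FreeNAS defaults; the point is only that a replacement disk a few megabytes smaller than the original will still fit.

```python
# Choose a data partition size that leaves an unused tail at the end of the
# disk, so a slightly smaller "2 TB" replacement disk can still join the
# mirror. Integer arithmetic avoids floating-point rounding surprises.

MiB = 1024 * 1024

def partition_bytes(disk_bytes: int, reserve_percent: int = 1,
                    align: int = MiB) -> int:
    """Usable partition size: disk minus reserve_percent, rounded down to align."""
    usable = disk_bytes * (100 - reserve_percent) // 100
    return usable // align * align


if __name__ == "__main__":
    nominal = 2_000_000_000_000            # a nominal "2 TB" disk
    size = partition_bytes(nominal)
    print(f"partition: {size} bytes, tail: {(nominal - size) / 10**9:.1f} GB")
```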

On the bandwidth of a car loaded with tape cartridges

From the archival storage point of view, we need not worry about capacity at all: we have a demountable array. Once the online server's volumes No. 1-5 are full, we can manually take the oldest volume, No. 1, offline, pull its disks, install two new 2 TB disks and initialize a new volume, No. 6. The old disks can then be put into a USB enclosure and, whenever the business needs them, connected to the same FreeNAS server without opening the case. They can be mounted read-only. With enough determination, they can even be connected to Windows or a Mac. In any case, remember: an old spinning drive is best not shaken needlessly, or, in its old age, it may start shedding magnetic sand from its head-disk assembly.
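The rotation described above fits in a few lines. The toy model below (class and method names are hypothetical, for illustration only) keeps at most five mirror volumes online; adding a new volume when the slots are full moves the oldest one to the offline shelf.

```python
from collections import deque
from typing import Deque, List

class MirrorArchive:
    """Toy model of the demountable array: at most `slots` mirror volumes
    are online; adding one when all slots are taken retires the oldest."""

    def __init__(self, slots: int = 5) -> None:
        self.slots = slots
        self.online: Deque[int] = deque()   # volume numbers, oldest first
        self.offline: List[int] = []        # volumes moved to USB enclosures
        self.next_no = 1

    def add_volume(self) -> int:
        """Create the next numbered volume, retiring the oldest if needed."""
        if len(self.online) == self.slots:
            self.offline.append(self.online.popleft())
        vol = self.next_no
        self.next_no += 1
        self.online.append(vol)
        return vol


if __name__ == "__main__":
    archive = MirrorArchive(slots=5)
    for _ in range(6):                      # volume No. 6 pushes out No. 1
        archive.add_volume()
    print("online:", list(archive.online), "offline:", archive.offline)
```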

There is another interesting scenario with unionfs: switch the filled volumes to read-only mode and stack them "below" the file system of the "upper" volume, creating the illusion of one continuous disk space. True, unionfs is a tricky piece of software and therefore dangerous, and the setup with read-only lower layers is probably the only one that is more or less well tested.
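To show why read-only lower layers are the "safe" case, here is a conceptual model of a union view, not FreeBSD's unionfs code: reads search the writable upper layer first and then the archived layers from newest to oldest, while writes only ever touch the upper layer. Real unionfs also has to handle deletions (whiteouts) and copy-up of modified files, which this sketch deliberately omits.

```python
from typing import Dict, List, Optional

class UnionView:
    """Conceptual union mount: one writable upper layer over read-only
    lower layers (dicts stand in for file systems: path -> contents)."""

    def __init__(self, upper: Dict[str, bytes], lowers: List[Dict[str, bytes]]) -> None:
        self.upper = upper
        self.lowers = lowers                 # ordered newest to oldest

    def read(self, path: str) -> Optional[bytes]:
        """Return the topmost version of a file, or None if absent."""
        for layer in [self.upper, *self.lowers]:
            if path in layer:
                return layer[path]
        return None

    def write(self, path: str, data: bytes) -> None:
        """Writes land in the upper layer; archived volumes stay untouched."""
        self.upper[path] = data


if __name__ == "__main__":
    vol1 = {"2013/a.dat": b"old"}
    view = UnionView({}, [vol1])
    view.write("2013/a.dat", b"new")
    print(view.read("2013/a.dat"), vol1["2013/a.dat"])
```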

That's it: archive capacity is now limited only by the size of the cabinet or box where the old disks are kept. And if that box is also moved from place to place, its bandwidth goes right off the scale.
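In the spirit of the classic "station wagon full of tapes" quip behind this section's title, the sketch below computes the effective bandwidth of carrying disks by hand. The box of ten 2 TB disks and the one-hour trip are hypothetical numbers picked for illustration.

```python
# "Sneakernet" bandwidth: data physically carried divided by travel time.

def sneakernet_gbit_s(disks: int, tb_per_disk: float, hours: float) -> float:
    """Effective bandwidth in Gbit/s (decimal units)."""
    bits = disks * tb_per_disk * 1e12 * 8
    return bits / (hours * 3600) / 1e9


if __name__ == "__main__":
    # Hypothetical: ten retired 2 TB disks, one-hour drive across town.
    print(f"{sneakernet_gbit_s(10, 2.0, 1.0):.1f} Gbit/s")
```

That is over 40 Gbit/s, far beyond our gigabit network card, at the price of latency measured in hours.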

Case engineering

Let's give some thought to primary cooling, since our 7200 rpm disks will run warm. We find a spot in the case for a fan blowing across the 3.5" bays and, with almost surgical precision, mount our Zalman ZM-F1-FDB fan on anti-vibration bands that have to be threaded through thin slits in the case. Curse these consumer cases with their gaps and slots...

This brought an old joke to mind.

The soldier is asked: "Why do you see so badly?" He replies: "Well, there is one eye surgery, but they do it through the anus, and I will not let a single guy in there" ...

A garish green plastic Karma House mineral water bottle sticking out of the back of a case had long been a familiar sight. So we take apart the CNPS5700D-Cu cooler, bring its duct along and head to the grocery store. Trying out different mineral water bottles one after another, we find that the diameter of the two-liter Aqua Ping bottle matches the round part of the CNPS5700D-Cu duct perfectly (could they be made at the same plant?).

We thank the Stuck School company for such a happy coincidence and, after a couple more hours with various sharp objects, end up with an intricately shaped section of air duct made of transparent plastic.

We fit a new 80 mm ZM-F1-FDB fan into the cooler: its fluid dynamic bearing has a comparable service life but is quieter than ringing ball bearings. At the last moment, of course, it turns out that the hole in the case is half a centimeter higher than needed, so we add a petal skirt made of adhesive tape, an idea borrowed from the designers of thrust-vectoring fighter jets.

Our creation really does look like a vectoring nozzle, though it no longer looks quite so punk.

Finally, the time has come to deal with the very spot where, a decade ago, I failed to unravel the Great Chinese Engineering Plan. Let me remind you that this is about the ATX rear connector panel (I/O shield) bundled with the motherboard, or more precisely, about the impossibility of installing it in its opening in the case.

It turns out that the puzzle is solved entirely with pliers: simply unbend the profile around the perimeter, centimeter by centimeter. The shield then sits perfectly on the connectors, and the bumps end up inside the case, where they do not offend our engineering aesthetics.

To avoid a spaghetti effect, the SATA cables are tied to each other with cable ties, because there is no place for pasta in a server case. We label the cables with twisted-pair markers. The fan rheostat is fixed to the case on an unused motherboard standoff, which turned out to be very convenient. The old disks stay in the case for now to help calibrate the airflow, but we will get rid of them soon.

Again guided by thermal considerations, each mirror is assembled from disks with at least one bay between them, i.e. the disks of one array are not bay neighbors and do not heat each other, especially during long rebuilds. The disks are also labeled, at least with the volume number. UPD: it is better to label each disk with its serial number, printed on a thermal label printer or, failing that, written on a strip of paper under clear adhesive tape. Once there are more than two disks, this can be very useful during emergency repairs.
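One possible bay layout that satisfies the "at least one bay between mirror members" rule is sketched below: pair bay k with bay k + n/2, so the members of each mirror are half a cage apart and every bay between them belongs to another mirror. This is our illustrative scheme, numbering bays from 0 top to bottom; the text does not say which exact pairing was used.

```python
from typing import List, Tuple

def mirror_bays(n_bays: int) -> List[Tuple[int, int]]:
    """Pair bay k with bay k + n_bays // 2. For n_bays >= 4 the two
    members of every mirror have at least one other bay between them."""
    if n_bays % 2 or n_bays < 4:
        raise ValueError("need an even number of bays, at least 4")
    half = n_bays // 2
    return [(k, k + half) for k in range(half)]


if __name__ == "__main__":
    for vol, (a, b) in enumerate(mirror_bays(10), start=1):
        print(f"volume {vol}: bays {a} and {b}")
```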

All that remains is to power up, measure the temperatures and adjust the fan rheostats under load.
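Disk temperatures can be read from SMART attribute 194 (Temperature_Celsius), which `smartctl -A /dev/<disk>` from smartmontools prints as a table row. The sketch below parses one such row; the sample line is illustrative (spacing and the raw-value suffix vary between drive models), and the 45 °C limit is a hypothetical threshold of ours, not a vendor specification. Running `smartctl` itself is left out to keep the example self-contained.

```python
from typing import Optional

# Illustrative row in the `smartctl -A` layout:
# ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE
SAMPLE = ("194 Temperature_Celsius     0x0022   036   040   000    "
          "Old_age   Always       -       36 (0 18 0 0 0)")

def temperature_c(smart_attr_line: str) -> Optional[int]:
    """Return the raw temperature from an attribute-194 row, else None."""
    fields = smart_attr_line.split()
    if len(fields) >= 10 and fields[0] == "194":
        return int(fields[9])                # first token of RAW_VALUE
    return None

def too_hot(temp_c: int, limit_c: int = 45) -> bool:
    """Hypothetical alarm threshold for tuning the fan rheostats."""
    return temp_c > limit_c


if __name__ == "__main__":
    t = temperature_c(SAMPLE)
    print(t, "too hot" if t is not None and too_hot(t) else "ok")
```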

Power

Reviews of the FSP Group ATX-450PNR power supply are rather positive, but they name as its drawbacks (reference1, reference2) its boiler-grade efficiency and archaic minimalist design (no power factor correction). The advantage is

The spin-up power of four ST2000DM001 drives is expected to be about 2.5 A × 4 × 12 V = 120 W, which, together with a Pentium 4 system without a discrete graphics card, should stay within 250 W with some margin.
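The power budget above is easy to recompute for a different number of disks. The sketch below uses the text's figures (2.5 A of 12 V spin-up current per drive, a 250 W system estimate) and the unit's nominal 450 W rating; note that the figure that actually matters at spin-up is the 12 V rail rating on the PSU label, which we do not have here.

```python
# Spin-up power budget. Per-drive current and the 250 W system estimate come
# from the text; 450 W is the unit's nominal total rating, not its 12 V rail.

def spinup_watts(disks: int, amps_12v: float = 2.5, volts: float = 12.0) -> float:
    """Peak 12 V power drawn while all drives spin up simultaneously."""
    return disks * amps_12v * volts

disks_w = spinup_watts(4)                     # 120 W for four drives
system_budget_w = 250.0                       # estimate incl. CPU and board
psu_rating_w = 450.0                          # nominal rating of ATX-450PNR
headroom_w = psu_rating_w - system_budget_w   # spare capacity


if __name__ == "__main__":
    print(f"4 drives: {disks_w:.0f} W, 10 drives: {spinup_watts(10):.0f} W")
    print(f"headroom over the 250 W estimate: {headroom_w:.0f} W")
```

Scaling to all ten bays pushes spin-up alone to 300 W, which is one reason staggered spin-up, where the controller supports it, is worth checking.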

Notably, I could not find this power supply among the products on FSP Group's Taiwanese website, while stores in Russia were clearly not short of it. I suspected this was a specially cheapened OEM version for the CIS market, stripped of everything possible at the cost of low efficiency. After all, our country has long winters and a surplus of electricity, which we happily turn into the cozy warmth of homes and offices with inefficient appliances.

In short, despite its boiler-grade efficiency, our unit still delivers about 200 W more than required, which is good news. But there is a catch, which we will cover in the following parts of our story...

Findings

- The disproportionate growth of storage capacity has all but buried time-tested technologies such as RAID5.

- In the fight to shorten array rebuild times, new high-tech file systems are winning, but they are only practical on expensive hardware (because they call for ECC memory).

- Building a server out of junk was and remains risky; in such a situation, rational simplicity bordering on the primitive wins (like a demountable array of mirrors).

- Archaic hardware: vintage technology in new "industrial design" packaging.

To be continued

Coming in the next parts: real-world operating experience, failures, another round of case engineering and further system tuning.

UPD:

All parts of the "Another do-it-yourself NAS" series:

Part 1: from what was

Part 2: good memories (flash memory for booting FreeNAS and other embedded OSes)

Part 3: adventures in the old tower

Part 4: the ghost of Chernobyl

Links

End-to-End Data Integrity for File Systems: A ZFS Case Study, by Yupu Zhang, Abhishek Rajimwale, Andrea C. Arpaci-Dusseau, Remzi H. Arpaci-Dusseau (Computer Sciences Department, University of Wisconsin-Madison)

www.netapp.com

www.qnap.com

www.synology.com

www.openmediavault.org

www.openfiler.com

aws.amazon.com/glacier

www.freebsd.org

www.linux.org

sqlite.org

www.freenas.org

www.nas4free.org

forums.freenas.org/threads/what-number-of-drives-are-allowed-in-a-raidz-config.158/#post-38835

www.zdnet.com/blog/storage/why-raid-5-stops-working-in-2009/162

www.wikipedia.org/wiki/ZFS

wiki.freebsd.org/ZFSTuningGuide

doc.freenas.org/index.php/Hardware_Recommendations

hardforum.com/showthread.php?t=1689724

www.wikipedia.org/wiki/GEOM

www.freebsd.org/cgi/man.cgi?query=geom&sektion=4

www.wikipedia.org/wiki/Unix_File_System

www.asrock.com/mb/overview.asp?Model=P4i65G

www.lm-sensors.org/ticket/1865

www.fsp-power.ru/product/atx_450pnr

www.fsp-group.com.tw

article.techlabs.by/print/36_29785.html

www.wasp.kz/articles.php?article_id=465

www.computerhope.com/beep.htm

www.gigabyte.com/products/product-page.aspx?pid=1648

www.zalman.com/eng/product/Product_Read.php?Idx=266

www.zalman.com/eng/product/Product_Read.php?Idx=410

www.dlink.com/us/en/home-solutions/connect/adapters/dge-530t-dge-530t-32-bit-10-100-1000-base-t-pci-adapter

Source: https://habr.com/ru/post/214707/
