FineReader 12: what is new in the interface, and why it is hard to turn achievements into percentages

We built, built and, finally, built!

As the title suggests, we have recently released a new version. So, below the cut, we will try to explain in plain language what FineReader 12 is good for, so that anyone interested can decide whether to run to the online store for the new version right now or to wait quietly for a couple of years for lucky number thirteen.

About the interface

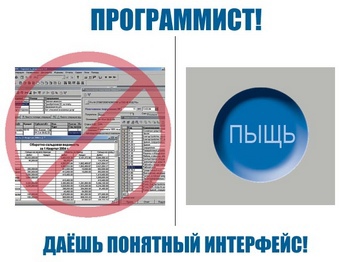

Let's start with what is sure to catch the eye of users of older versions: the interface. No, we have not reached the ideal shown in the picture on the left, but we are moving towards it steadily. The next steps in that direction are features known internally as “non-modality” and “quick opening”.


If you feed a k-page document to FineReader 11 (or, of course, to any older version), it will think for n seconds before you can do anything useful by hand. For all of those n seconds our technologies are in charge and refuse to share access to the pages with the user.

In version 12, to begin with, the user sees the added pages immediately. Most importantly, the ability to edit blocks or to do special manual image preprocessing, when the need arises (it usually arises when the document is bad or, to exaggerate, terrible), appears instantly. Moreover, recognition can already be running at that time. In short, almost all of our mechanisms can work alongside the user, so that the user does not lose time waiting. The peaks still unconquered on the way to full non-modality are the processes we call document synthesis and export. Their peculiarity is that they process the document as a whole rather than page by page, so they cannot run in parallel with the user. However, these processes usually take less than 10% of the total time, and they happen at the final stages, when the user has most likely already done everything he wanted.
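The split between page-by-page stages and whole-document stages can be illustrated with a minimal sketch. This is not how FineReader is implemented; `recognize_page`, `synthesize` and `process_document` are hypothetical names, and the “recognition” is a placeholder.

```python
from concurrent.futures import ThreadPoolExecutor


def recognize_page(page: str) -> str:
    """Hypothetical stand-in for per-page recognition."""
    return page.upper()


def synthesize(recognized_pages: list[str]) -> str:
    """Hypothetical whole-document stage: it needs every page at once."""
    return "\n".join(recognized_pages)


def process_document(pages: list[str]) -> str:
    with ThreadPoolExecutor() as pool:
        # Each page is submitted independently, so work on page 1 can
        # start while the user is still editing blocks on page 7.
        futures = [pool.submit(recognize_page, page) for page in pages]
        # Synthesis cannot begin until every page result is available.
        recognized = [future.result() for future in futures]
    return synthesize(recognized)


print(process_document(["page one", "page two"]))
```

The per-page tasks are independent of each other, while `synthesize` is a barrier: that is the reason a stage like this cannot overlap with the user's page-level work.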

In addition, the new version supports a quoting scenario. It assumes that the user does not need the whole document as a file (or files), but only individual pieces of it to copy somewhere else. Honestly, if your usual work involves quoting one or two paragraphs, I would recommend our Screenshot Reader (by the way, it is not only sold separately but also comes with FineReader as a bonus). However, if you need to pull 15 specific paragraphs out of a 100-page document, the new feature will come in very handy. The tangible advantage in this scenario is that FineReader detects blocks quite decently, so all that remains is to find the right page and click Copy on the target block. Unless you have a Pentium 3 rather than something fresher, this will most likely take no more than a second.

Help is now kept online. The product, as aram_pakhchanian rightly noted, is packed with features, and this obviously obliges the help to be accurate and up to date. Being online is the perfect way out: today we are testing the embedding of a pre-translation, and tomorrow every user already has up-to-date information.

In addition, we now have an auto-update mechanism. Of course, you will not have to download the whole distribution, only a patch (a few megabytes). FineReader will do everything itself, except that it will first ask whether the user wants to update.

I work in testing, so I have been looking at the product for a long time and, to be honest, I don't even know what to add: I have got used to its new look and new features. So here is a review on 3dnews, and I hand the microphone to 57ded, who will tell you why recognition quality, starting from 98%, has been growing by 30-40% per product cycle for many years and still has not reached 200%.

About technology

With the new, or well-forgotten old, image processing functions everything is clear. On the one hand, we have finally implemented the removal of color stamps from office documents, as in the picture on the right, which, IMHO, is quite decent and matches the current state of science and technology.

On the other hand, a thick layer of mothball dust was blown off the functions for restoring white balance and other “visual improvement” of the image, first introduced back in 2007 as part of FineReader 8. In addition, better handling of documents with tables and diagrams was announced. As for diagrams, I note that, most likely through some misunderstanding or reluctance to dig into the details, “diagrams” here was taken to include documents with pictures where the picture contains a screenshot; that is exactly the problem we deliberately worked on.

As the FineReader announcement says (we are not going to give the link; this really is no place for announcements), improvements of “up to” 33% were achieved on diagrams with screenshots and “up to” 40% on tables. No self-respecting skeptic will let these numbers pass, so we will explain where they came from. Captain Obvious suggests that recognition accuracy is usually measured by comparing the output of the OCR program with a reference, “the way it should be”. If we measure the accuracy of text recognition when it is already known exactly where the text is located (a task that captivates many people with its beauty and begs for the full power of classification theory), then measuring how close the result is to the original poses no problem at all.
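Comparing recognized text with a reference can be sketched as follows. The post does not say which metric is used, so this is only an assumption: character accuracy based on Levenshtein edit distance, a common choice for OCR evaluation. The function names are illustrative.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimal insertions, deletions, substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def char_accuracy(recognized: str, reference: str) -> float:
    """Share of reference characters that were recognized correctly."""
    if not reference:
        return 1.0 if not recognized else 0.0
    errors = edit_distance(recognized, reference)
    return max(0.0, 1.0 - errors / len(reference))


# One wrong character out of ten.
print(char_accuracy("FineRcader", "FineReader"))
```

The important property is that the reference is unambiguous: once the text location is fixed, there is exactly one correct string to compare against.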

For the task of detecting text zones, measuring the result is not so easy: for example, a text and its heading can be combined into one block or split into two text blocks, and both are fine. Many people in the world now need to measure the quality of a text zone detector, and everyone measures it a little differently, but the general idea is very simple. The reference stores all the tables, texts and pictures on the page. We check that the recognized tables and pictures are in place and that the text lies inside text zones. Then, in various cunning ways, we forbid various bad things. For example, you cannot merge two columns into one block (the reading order is lost), you cannot split consecutive lines apart or tear the initial letter off the text (for the same reason), and you should not merge black text on a white background and white text on a black background into one block.

Now, to measure accuracy on tables and on diagrams with screenshots, very little remains: count how many tables the previous version failed to find, compare that with the number of tables the current version failed on, take the previous version's count of missed tables as 100%, and get the desired figure... Oops... again, it is not that simple.
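The arithmetic behind such a figure is a relative reduction in errors, with the previous version's errors taken as 100%. A small sketch with made-up numbers (none of them come from the post):

```python
def relative_improvement(missed_before: int, missed_after: int) -> float:
    """Share of the previous version's misses that the new version fixed."""
    if missed_before <= 0:
        raise ValueError("the previous version must have missed something")
    return (missed_before - missed_after) / missed_before


def accuracy_after(accuracy_before: float, improvement: float) -> float:
    """New accuracy when the error rate shrinks by `improvement`."""
    error_before = 1.0 - accuracy_before
    return 1.0 - error_before * (1.0 - improvement)


# Hypothetical: 30 tables missed before, 20 missed now.
print(relative_improvement(30, 20))
# Hypothetical: 98% accuracy with 40% fewer errors.
print(accuracy_after(0.98, 0.40))
```

Read this way, a 40% improvement applied to 98% accuracy removes 40% of the remaining 2% of errors, which is why repeated improvements of this size never add up to more than 100%.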

First, a table must not just be found, it must also be divided into cells. When the cell borders are not drawn in any way, that is no easy task. But, as we have already mentioned, we made significant progress here in the previous version, so in the current one we could afford to move “by inertia”. By the way, measuring the quality of dividing tables into cells is a fairly simple task. As a rule, you can say firmly whether there is a cell border at a given place or not, so to assess FineReader's quality you count the missing cell borders and add the number of redundant ones.

In fact, losing the border between two columns of a table should count as a more significant error than randomly splitting one cell in the table header, so we count the borders of each cell separately: that is, the weight of a vertical separator equals the number of cells it divides.
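One way to get this weighting for free is to store borders as per-cell segments rather than as whole separators. The sketch below is an illustration under that assumption; the `Segment` encoding and the function names are hypothetical, not FineReader's internal representation.

```python
# A segment is the piece of a border next to one cell:
# (orientation, row, column), e.g. ("v", 2, 0) is the vertical border
# to the right of the cell in row 2, column 0.
Segment = tuple[str, int, int]


def vertical_separator(col: int, rows: range) -> set[Segment]:
    """A full-height separator is one segment per row it passes through."""
    return {("v", row, col) for row in rows}


def border_error(reference: set[Segment], found: set[Segment]) -> int:
    """Missing segments plus redundant segments."""
    missing = reference - found
    redundant = found - reference
    return len(missing) + len(redundant)


# Hypothetical 5-row, 2-column table: a single vertical separator.
reference = vertical_separator(col=0, rows=range(5))

lost_separator = set()                        # the column border was not found
split_header = reference | {("v", 0, 1)}      # one extra split in a header cell

print(border_error(reference, lost_separator))  # 5
print(border_error(reference, split_header))    # 1
```

Because a separator contributes one segment per cell it divides, losing a whole column border costs as many points as the table has rows, while a stray split in one header cell costs a single point.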

Secondly, it is quite difficult to label a sufficiently large database of images. Let us explain where the difficulty lies. Suppose we label one page of some document. At best it holds one table, maybe a couple of pictures, and quite a lot of text. For measuring recognition accuracy we get several thousand characters and hundreds of words, but for the table-finding task we get, well, just one table. There is hardly any point in whining further about how difficult this is; you have already understood everything yourself.

Thirdly (did you seriously believe that the snags had already run out?), in some cases humanity cannot say for sure whether it is looking at a table or at text. Say, as in the picture on the right.

In such cases we take the judgment of Solomon: “this way is right, and that way is right too.”

Applying the witchcraft described above, we came to the conclusion that on our database ...

“Stop!” any opponent will rightly protest. “Using the training database as the test one is beyond good and evil!!!” Well, we have to agree. Indeed, comparative testing should be carried out on a completely new database of freshly downloaded or freshly scanned images, so that there is a guarantee that the programmers did not tune the program to them. But here we recall that labeling such a database is not cheap. So far, the solution is as follows:

- We adapted the database for honest testing as best we could;

- Measuring the improvement figures precisely will not work, so we write “improved by one third” or “improved by two fifths”, which in marketing materials turns into 30-33-40% (sometimes it seems to me that their authors are competing in the frequency of using the word "). Hence our shy “up to”.

- On the other hand, since the database is small, we can run a “subjective” test: look through these couple of hundred images with our own eyes and say which version did better on them. The figures above were confirmed subjectively, which strengthens our confidence that they are true.

In general, we note that the very task of finding text, tables and pictures in a document cannot be neatly formulated “mathematically”, so the recently started series of posts about artificial intelligence is very relevant to it.

On that note, allow me to take my bow and, at parting, invite you to try the trial version of our wonderful product.

Source: https://habr.com/ru/post/214681/
