The logic of thinking. Part 2. Factors

In the previous part, we described the simplest properties of formal neurons. We said that the threshold adder reproduces the nature of a single spike more accurately, while the linear adder lets us simulate a neuron response consisting of a series of pulses. We showed that the value at the output of the linear adder can be compared with the frequency of the spikes generated by a real neuron. Now we look at the basic properties that such formal neurons possess.

Hebb Filter

From here on, we will often refer to neural network models. In principle, almost all the basic concepts of neural network theory are directly related to the structure of the real brain. People, faced with particular tasks, have invented many interesting neural network structures. Evolution, trying out all possible neural mechanisms, kept everything that turned out to be useful to it. It is not surprising that for very many human-invented models one can find clear biological prototypes. Our narrative does not aim at a detailed description of neural network theory, so we will touch only on the most general points needed to describe the main ideas. For a deeper understanding, I highly recommend turning to the specialized literature. For me, the best textbook on neural networks is Simon Haykin's "Neural Networks: A Complete Course" (Haykin, 2006).


At the core of many neural network models is the well-known Hebbian learning rule. It was proposed by the psychologist Donald Hebb in 1949 (Hebb, 1949). In a slightly loose interpretation, its meaning is very simple: connections between neurons that are activated together should be strengthened, and connections between neurons that fire independently should weaken.

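The output state of the linear adder can be written as:

$$y = \sum_{j=1}^{m} w_j\, x_j$$

where x_j are the signals at its m inputs and w_j are the corresponding synaptic weights.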

If we initialize the weights with small values and present different images at the input, nothing prevents us from trying to train this neuron according to Hebb's rule:

$$w_j(n+1) = w_j(n) + \eta\, x_j(n)\, y(n)$$

where n is a discrete time step and η is the learning rate parameter.

This procedure increases the weights of the inputs that receive a signal x_j(n). The increase is larger the more active the response y(n) of the neuron being trained. If there is no response, there is no learning.

Such weights would grow without limit, so normalization can be used to stabilize them. For example, we can divide by the length of the vector of "new" synaptic weights:

$$w_j(n+1) = \frac{w_j(n) + \eta\, y(n)\, x_j(n)}{\sqrt{\sum_{i=1}^{m}\left(w_i(n) + \eta\, y(n)\, x_i(n)\right)^2}}$$

With this training, the weights are redistributed among the synapses. The redistribution is easiest to understand if you follow the weight changes in two steps. First, when the neuron is active, the synapses that receive a signal get an increment, while the weights of synapses without a signal stay unchanged. Then the overall normalization reduces the weights of all synapses. As a result, synapses without signals lose relative to their previous values, and synapses with signals share the lost weight among themselves.
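The two-step redistribution can be seen in a single normalized Hebbian update. This is a minimal NumPy sketch, assuming a linear neuron and Euclidean normalization as described above. The input pattern and the value of η are made up. The active synapses gain, the silent ones lose, and the weight vector keeps unit length.

```python
import numpy as np

def normalized_hebb_step(w, x, eta):
    """One Hebbian step followed by division by the length of the new weight vector."""
    y = w @ x                               # response of the linear adder
    w_new = w + eta * y * x                 # step 1: synapses receiving a signal get an increment
    return w_new / np.linalg.norm(w_new)    # step 2: normalization shrinks all weights

w = np.full(4, 0.5)                         # unit-length start: four equal weights
x = np.array([1.0, 1.0, 0.0, 0.0])          # signal arrives only at the first two synapses
w_next = normalized_hebb_step(w, x, eta=0.1)
print(np.round(w_next, 4))                  # -> [0.5432 0.5432 0.4527 0.4527]
```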

Hebb's rule is, in essence, an implementation of gradient descent on an error surface. We make the neuron adjust to the incoming signals, each time shifting its weights opposite to the error gradient, that is, along the antigradient. For gradient descent to reach a local extremum without overshooting it, the step must be small enough. In Hebbian learning this is ensured by the smallness of the parameter η.

Because the learning rate parameter is small, the previous formula can be rewritten as a series in η:

$$w_j(n+1) = w_j(n) + \eta\, y(n)\left[x_j(n) - y(n)\, w_j(n)\right] + O(\eta^2)$$

If we discard the terms of second order and above, we get Oja's learning rule (Oja, 1982):

$$w_j(n+1) = w_j(n) + \eta\, y(n)\, x_j(n) - \eta\, y^2(n)\, w_j(n)$$

The positive term is responsible for Hebbian learning, and the negative one for overall stability. Writing the rule in this form shows how such learning could be implemented in an analog medium without explicit computation, using only positive and negative connections.
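The step from the normalized rule to Oja's rule can be checked directly. The derivation below assumes, as is standard here, that the weight vector already has unit length, ‖w(n)‖ = 1, so that Σ_j w_j(n) x_j(n) = y(n).

```latex
\begin{aligned}
\Bigl\|\mathbf{w}(n) + \eta\, y(n)\,\mathbf{x}(n)\Bigr\|^2
  &= \sum_{j} w_j^2(n) + 2\eta\, y(n) \sum_{j} w_j(n)\, x_j(n) + \eta^2 y^2(n) \sum_{j} x_j^2(n) \\
  &= 1 + 2\eta\, y^2(n) + O(\eta^2), \\
\Bigl\|\mathbf{w}(n) + \eta\, y(n)\,\mathbf{x}(n)\Bigr\|^{-1}
  &= 1 - \eta\, y^2(n) + O(\eta^2), \\
w_j(n+1) &= \bigl(w_j(n) + \eta\, y(n)\, x_j(n)\bigr)\bigl(1 - \eta\, y^2(n) + O(\eta^2)\bigr) \\
  &= w_j(n) + \eta\, y(n)\, x_j(n) - \eta\, y^2(n)\, w_j(n) + O(\eta^2).
\end{aligned}
```

Dropping O(η²) leaves exactly two terms: the Hebbian increment and the stabilizing decay.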

This extremely simple learning rule has a remarkable property. If we gradually reduce the learning rate, the synaptic weights of the trained neuron converge to values at which its output corresponds to the first principal component. This is the same component we would get by applying principal component analysis to the same data. This construction is called the Hebb filter.
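Here is a minimal NumPy sketch of the Hebb filter trained with Oja's rule. The data set, initial weights and learning-rate schedule η(n) = η₀/(1 + n/1000) are illustrative assumptions, not values from the text. The data are centered, so the learned weight vector can be compared with the leading eigenvector of the covariance matrix computed directly.

```python
import numpy as np

def oja_first_component(X, eta0=0.005, epochs=5, seed=0):
    """Train one linear neuron with Oja's rule on the rows of X (assumed zero-mean)."""
    rng = np.random.default_rng(seed)
    n, m = X.shape
    w = rng.normal(scale=0.1, size=m)          # small random initial weights
    t = 0
    for _ in range(epochs):
        for x in X[rng.permutation(n)]:
            eta = eta0 / (1.0 + t / 1000.0)    # learning rate decreases over time
            y = w @ x                          # output of the linear adder
            w += eta * (y * x - y * y * w)     # Hebbian term minus stabilizing term
            t += 1
    return w

# Synthetic zero-mean data with a known dominant direction.
rng = np.random.default_rng(1)
Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))   # random orthogonal basis
X = (rng.normal(size=(2000, 3)) * np.array([2.0, 1.0, 0.5])) @ Q.T
X -= X.mean(axis=0)

w = oja_first_component(X)
eigvals, eigvecs = np.linalg.eigh(X.T @ X / len(X))
u1 = eigvecs[:, -1]                            # eigh sorts eigenvalues in ascending order
cosine = abs(w @ u1) / np.linalg.norm(w)
print(f"|cos(w, u1)| = {cosine:.4f}, |w| = {np.linalg.norm(w):.4f}")
```

The sign of an eigenvector is arbitrary, which is why the comparison uses the absolute value of the cosine.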

For example, suppose we present a pixel image to the neuron, so that each synapse of the neuron is assigned one image pixel. We feed only two images to the neuron: a vertical line and a horizontal line, both passing through the center. One learning step is one image: a single line, either horizontal or vertical. If these images were averaged, we would get a cross. But the result of training will not resemble the average. It will be one of the lines, namely the one that occurs more often among the presented images. The neuron picks out neither the average nor the intersection, but the points that most often occur together. With more complex images the result may be less obvious, but it will always be the principal component.
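The line example can be simulated directly. The following assumptions are made for illustration:

- the 5×5 grid size,
- the 70/30 split between vertical and horizontal lines,
- the learning-rate schedule.

Raw pixel values are fed to the neuron without centering, so Oja's rule picks out the dominant eigenvector of E[xxᵀ]. For these two images that eigenvector is concentrated on the more frequent line, with only a small residual weight on the other.

```python
import numpy as np

SIZE = 5                                     # hypothetical 5x5 "retina"
center = SIZE // 2
vertical = np.zeros((SIZE, SIZE))
vertical[:, center] = 1.0                    # vertical line through the center
horizontal = np.zeros((SIZE, SIZE))
horizontal[center, :] = 1.0                  # horizontal line through the center

rng = np.random.default_rng(0)
w = rng.uniform(0.0, 0.1, size=SIZE * SIZE)  # one synapse per pixel, small initial weights
for t in range(20000):
    # One learning step = one image; the vertical line is shown 70% of the time.
    x = (vertical if rng.random() < 0.7 else horizontal).ravel()
    eta = 0.02 / (1.0 + t / 2000.0)
    y = w @ x
    w += eta * y * (x - y * w)               # Oja's rule

weights = w.reshape(SIZE, SIZE)
print(np.round(weights, 2))
```

For these two images, the eigenvector calculation predicts the horizontal arm ends up with about one seventh of the weight of the vertical arm. Pixels that never light up decay to zero.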

Training makes the neuron's weights single out (filter) a certain image. When a new signal arrives, the more closely it matches the weight pattern, the stronger the neuron's response. A trained neuron can be called a detector neuron, and the image described by its weights is called its characteristic stimulus.

Principal components

The idea of the principal component method is simple and ingenious. Suppose we have a sequence of events. We describe each of them through its effect on the sensors with which we perceive the world. Let's say we have m sensors describing m features. Then every event is described by an m-dimensional vector x = (x_1, ..., x_m), where each component x_j is the value of the j-th feature. Together, these values form a random variable X. We can depict the events as points in m-dimensional space, where the observed features serve as the axes.

Averaging the values x_j gives the mathematical expectation of the random variable X, denoted E(X). If we center the data so that E(X) = 0, the cloud of points will be concentrated around the origin.

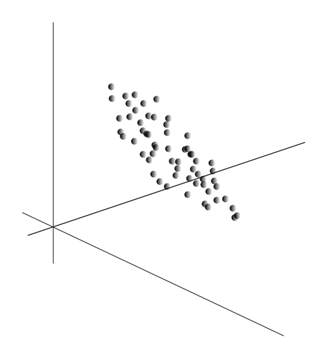

This cloud may be elongated in some direction. By trying all possible directions, we can find the one along which the variance of the data is maximal.

This direction corresponds to the first principal component. The principal component itself is defined by a unit vector that starts at the origin and points along this direction.
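The "try all directions" idea can be applied literally in two dimensions. The data cloud, its 30-degree orientation and the 1-degree grid of candidate directions are all assumptions. For each unit vector the sketch computes the variance of the projections and keeps the maximum.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical 2-D cloud, stretched along the direction at 30 degrees.
theta = np.deg2rad(30.0)
rotation = np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta),  np.cos(theta)]])
X = (rng.normal(size=(2000, 2)) * np.array([3.0, 1.0])) @ rotation.T
X -= X.mean(axis=0)                                   # center so that E(X) = 0

# Try directions on a 1-degree grid (a direction and its opposite are the same axis).
angles = np.deg2rad(np.arange(0, 180))
directions = np.stack([np.cos(angles), np.sin(angles)], axis=1)  # one unit vector per row
variances = ((X @ directions.T) ** 2).mean(axis=0)   # variance of the projections
first_pc = directions[np.argmax(variances)]
print("best angle:", np.rad2deg(angles[np.argmax(variances)]), "degrees")
```

In many dimensions such exhaustive search quickly becomes impractical. In practice, the components are therefore computed from the covariance matrix instead.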

Next, we can find another direction, perpendicular to the first component, along which the variance is the largest among all perpendicular directions. Having found it, we get the second component. We can continue the search, each time requiring the new direction to be perpendicular to all the components already found. If the original coordinates were linearly independent, we can do this m times, until we run out of dimensions. This gives us m mutually orthogonal components u_1, ..., u_m, sorted by the share of data variance they explain.

Naturally, the principal components obtained this way reflect the internal regularities of our data. But there are simpler characteristics that also describe the essence of these regularities.

Suppose we have n events in all. Each event i is described by a vector x_i with components:

$$\mathbf{x}_i = (x_{i1}, x_{i2}, \ldots, x_{im})$$

For each feature j, we can write down how it manifested itself in each of the events:

$$X_j = (x_{1j}, x_{2j}, \ldots, x_{nj})$$

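For any two features used in the description, we can calculate a value showing the degree of their joint manifestation. This value is called the covariance:

$$\operatorname{cov}(X_j, X_k) = \frac{1}{n}\sum_{i=1}^{n}\left(x_{ij} - \bar{x}_j\right)\left(x_{ik} - \bar{x}_k\right)$$

where x̄_j is the mean value of feature j.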

It shows how closely the deviations of one feature from its mean coincide with similar deviations of another feature. If the mean values of the features are zero, the covariance takes the form:

$$\operatorname{cov}(X_j, X_k) = \frac{1}{n}\sum_{i=1}^{n} x_{ij}\, x_{ik}$$

If we normalize the covariance by the standard deviations of the features, we obtain the linear correlation coefficient, also called the Pearson correlation coefficient:

$$r_{jk} = \frac{\operatorname{cov}(X_j, X_k)}{\sigma_j\, \sigma_k}$$

The correlation coefficient has a remarkable property: it takes values from -1 to 1. A value of 1 means a direct (positive) linear relationship between the two quantities, and -1 means an inverse linear relationship.
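The covariance and Pearson formulas translate directly into code. The features below are synthetic and hypothetical. NumPy's own `np.cov` (with `bias=True` for the 1/n normalization) and `np.corrcoef` compute the same quantities and can be used as a cross-check.

```python
import numpy as np

def covariance(a, b):
    """Mean product of deviations from the means (1/n normalization, as in the formula)."""
    return np.mean((a - a.mean()) * (b - b.mean()))

def pearson(a, b):
    """Covariance divided by the product of standard deviations (ddof=0 to match 1/n)."""
    return covariance(a, b) / (a.std() * b.std())

rng = np.random.default_rng(0)
feature_a = rng.normal(170.0, 10.0, size=1000)                # hypothetical feature
feature_b = 0.9 * feature_a + rng.normal(0.0, 5.0, size=1000)  # partly driven by feature_a
unrelated = rng.normal(size=1000)                              # independent feature

print(round(pearson(feature_a, feature_b), 3))
print(round(pearson(feature_a, unrelated), 3))
print(pearson(feature_a, -2.0 * feature_a + 7.0))  # exact inverse linear relation
```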

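From all the pairwise covariances of the features, we can build the covariance matrix:

$$\Sigma = \begin{pmatrix} \operatorname{cov}(X_1, X_1) & \cdots & \operatorname{cov}(X_1, X_m) \\ \vdots & \ddots & \vdots \\ \operatorname{cov}(X_m, X_1) & \cdots & \operatorname{cov}(X_m, X_m) \end{pmatrix}$$

As is easy to verify, for centered data this matrix is the mathematical expectation of the product XXᵀ:

$$\Sigma = E\left[X X^{T}\right]$$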

From here on, we assume that our data are standardized, that is, centered and scaled to unit variance. In this case, the correlation matrix R coincides with the covariance matrix Σ.

It turns out that the principal components u_k satisfy:

$$R\,\mathbf{u}_k = \lambda_k \mathbf{u}_k$$

That is, the principal components, also called factors, are the eigenvectors of the correlation matrix R, with corresponding eigenvalues λ_k. The larger the eigenvalue, the greater the share of variance explained by that factor.
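In code, the factors are found by an eigendecomposition of the correlation matrix. In this sketch the data (four features mixed from two hidden sources plus noise) and the mixing coefficients are made up. `np.linalg.eigh` is used because R is symmetric. Each eigenvalue divided by their sum gives the share of variance explained by the corresponding factor.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical data: four observed features driven by two hidden sources plus noise.
sources = rng.normal(size=(3000, 2))
mixing = np.array([[1.0, 0.8, 0.0, 0.3],
                   [0.0, 0.5, 1.0, 0.7]])
X = sources @ mixing + 0.3 * rng.normal(size=(3000, 4))

# Standardize (zero mean, unit variance), so the covariance matrix equals the correlation matrix R.
Xs = (X - X.mean(axis=0)) / X.std(axis=0)
R = Xs.T @ Xs / len(Xs)

eigvals, U = np.linalg.eigh(R)             # R is symmetric; eigh returns ascending eigenvalues
order = np.argsort(eigvals)[::-1]          # sort factors by explained variance, largest first
eigvals, U = eigvals[order], U[:, order]   # column k of U is the factor u_k
explained = eigvals / eigvals.sum()        # share of total variance explained by each factor
print(np.round(explained, 3))
```

With two underlying sources, the first two factors should carry most of the variance. The remaining two mostly describe noise.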

Knowing all the principal components u_1, ..., u_m, we can write the projections of each event x_i, which is a realization of X, onto the principal components:

$$z_{ik} = \mathbf{u}_k^{T}\mathbf{x}_i, \quad k = 1, \ldots, m$$

Thus, all the original events can be represented in new coordinates, the coordinates of the principal components:

$$\mathbf{z}_i = (z_{i1}, z_{i2}, \ldots, z_{im})$$
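The change of coordinates is a single matrix product. In this sketch the three-feature data are synthetic and standardized, as assumed in the text. In the new coordinates the features become uncorrelated: the covariance matrix turns diagonal, with the eigenvalues on the diagonal. Because the matrix of components is orthogonal, the original events can be recovered exactly.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical correlated three-feature data, standardized as assumed in the text.
X = rng.normal(size=(2000, 3)) @ np.array([[2.0, 0.5, 0.0],
                                            [0.0, 1.0, 0.3],
                                            [0.0, 0.0, 0.5]])
Xs = (X - X.mean(axis=0)) / X.std(axis=0)
R = Xs.T @ Xs / len(Xs)
eigvals, U = np.linalg.eigh(R)
order = np.argsort(eigvals)[::-1]
eigvals, U = eigvals[order], U[:, order]

Z = Xs @ U                 # row i: z_ik = u_k^T x_i for every factor k
cov_Z = Z.T @ Z / len(Z)   # covariance matrix in the new coordinates
X_back = Z @ U.T           # U is orthogonal, so the events are recovered exactly
print(np.round(cov_Z, 6))
```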

Strictly speaking, finding the principal components is a different procedure from finding a basis of factors and then rotating it to make the factors easier to interpret. But since these procedures are ideologically close and give similar results, we will call both of them factor analysis.

Behind this fairly simple procedure lies a very deep meaning. If the original feature space is an observable space, then the factors are features that describe properties of the surrounding world. Yet in general (unless they coincide with observed features) they are hidden entities. That is, the formal procedure of factor analysis lets us move from observable phenomena to the discovery of phenomena that are not directly visible but nevertheless exist in the world around us.

We can assume that our brain actively uses factor extraction as one of its procedures for understanding the surrounding world. By extracting factors, we gain the ability to build new descriptions of what happens to us. These new descriptions are based on how strongly the phenomena corresponding to the extracted factors manifest themselves in events.

Let me explain the essence of factors at an everyday level. Suppose you are a personnel manager. Many people come to you, and for each of them you fill out a form, recording various observable data about the visitor. After reviewing your notes, you may find that some columns are related. For example, men's haircuts will on average be shorter than women's. You are likely to meet bald people only among men, and only women will wear lipstick. If factor analysis is applied to these personal data, gender will turn out to be one of the factors that explains several patterns at once. But factor analysis finds all the factors that explain the correlations in the data set. So besides the gender factor, which we can observe, there will be other factors, including implicit, unobservable ones. And if gender is explicitly recorded in the questionnaire, another important factor will remain between the lines. Suppose you assess people's ability to express their thoughts and their career success, and look at their diploma grades and similar marks. You will conclude that there is a general assessment of a person's intelligence. It is not explicitly recorded in the questionnaire, yet it explains many of its items. This assessment of intelligence is a hidden factor, a principal component with high explanatory power. We do not observe this component directly, but we record features that correlate with it. With life experience, we can subconsciously form an idea of a conversation partner's intelligence from individual features. The procedure our brain uses in this case is, in fact, factor analysis. By observing how certain phenomena manifest themselves together, the brain uses a formal procedure to extract factors. These factors reflect the stable statistical regularities inherent in the world around us.
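The personnel-manager example can be imitated with synthetic data. Everything in this sketch is made up:

- a hidden variable plays the role of the unrecorded "intelligence" factor;
- four observed columns each depend on it with hypothetical loadings plus independent noise.

Factor analysis (here, simply the first principal component of the correlation matrix) never sees the hidden variable, yet its scores track it closely.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 5000
hidden = rng.normal(size=n)                    # the hidden factor: never recorded in the form
loadings = np.array([0.8, 0.7, 0.6, 0.75])     # hypothetical: how strongly each column depends on it
observed = hidden[:, None] * loadings + 0.6 * rng.normal(size=(n, 4))  # e.g. four questionnaire scores

Xs = (observed - observed.mean(axis=0)) / observed.std(axis=0)
R = Xs.T @ Xs / n
eigvals, eigvecs = np.linalg.eigh(R)
u1 = eigvecs[:, -1]                            # factor with the largest eigenvalue
factor_score = Xs @ u1                         # each person's coordinate on that factor
recovered = abs(np.corrcoef(factor_score, hidden)[0, 1])
best_single = max(abs(np.corrcoef(Xs[:, j], hidden)[0, 1]) for j in range(4))
print(f"first factor vs hidden: {recovered:.3f}, best single column: {best_single:.3f}")
```

The combined factor score is more tightly linked to the hidden variable than any single observed column. This mirrors the intuition that many weak signs together give a better impression than one.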

Extracting a set of factors

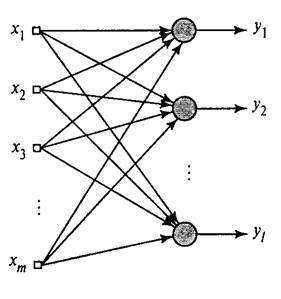

We have shown how the Hebb filter extracts the first principal component. It turns out that neural networks can easily obtain not only the first component but all the others as well. This can be done, for example, as follows. Suppose we have m input features. Take l linear neurons, where l ≤ m.

Generalized Hebbian algorithm (Haykin, 2006)

We train the first neuron as a Hebb filter, so that it extracts the first principal component. But each subsequent neuron is trained on the signal from which the influence of all previous components has been removed.

The activity of the neurons at step n is defined as

$$y_j(n) = \sum_{i=1}^{m} w_{ji}(n)\, x_i(n)$$

and the correction to the synaptic weights as

$$\Delta w_{ji}(n) = \eta\, y_j(n)\left[x_i(n) - \sum_{k=1}^{j} w_{ki}(n)\, y_k(n)\right]$$

where i runs from 1 to m and j from 1 to l.

For every neuron, this looks like learning similar to the Hebb filter. The only difference is that each subsequent neuron does not see the entire signal, only the part that the previous neurons did not see. This principle is called re-estimation. In effect, we reconstruct the original signal from a limited set of components. The next neuron then sees only the remainder: the difference between the original signal and the reconstructed one. This algorithm is called the generalized Hebbian algorithm.
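The update can be written compactly in matrix form. Let W hold one neuron per row. Then ΔW = η(y xᵀ − LT[y yᵀ] W), where LT[·] keeps the lower triangle including the diagonal, so neuron j subtracts only the reconstruction by neurons 1 to j. This matrix form is the usual way of writing the generalized Hebbian algorithm (also known as Sanger's rule). The data, learning-rate schedule and initialization below are assumptions for illustration.

```python
import numpy as np

def generalized_hebbian(X, n_components, eta0=0.002, epochs=5, seed=0):
    """Generalized Hebbian algorithm on the rows of X (assumed zero-mean).

    Row j of W holds the synaptic weights of neuron j.
    """
    rng = np.random.default_rng(seed)
    n, m = X.shape
    W = rng.normal(scale=0.1, size=(n_components, m))  # small random initial weights
    t = 0
    for _ in range(epochs):
        for x in X[rng.permutation(n)]:
            eta = eta0 / (1.0 + t / 5000.0)            # slowly decreasing learning rate
            y = W @ x                                  # activities of all neurons
            # Lower triangle of y y^T: neuron j removes only what neurons 1..j reconstruct.
            W += eta * (np.outer(y, x) - np.tril(np.outer(y, y)) @ W)
            t += 1
    return W

rng = np.random.default_rng(2)
Q, _ = np.linalg.qr(rng.normal(size=(5, 5)))           # random orthogonal basis
X = (rng.normal(size=(3000, 5)) * np.array([3.0, 2.0, 1.0, 0.5, 0.3])) @ Q.T
X -= X.mean(axis=0)

W = generalized_hebbian(X, n_components=2)
_, eigvecs = np.linalg.eigh(X.T @ X / len(X))
top = eigvecs[:, ::-1][:, :2]                          # two leading eigenvectors, largest first
cosines = np.abs(np.sum(W.T * top, axis=0)) / np.linalg.norm(W, axis=1)
print(np.round(cosines, 4))
```

Each neuron should line up with its own eigenvector, in order of decreasing variance. That is exactly the ordering the sequential re-estimation imposes.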

A drawback of the generalized Hebbian algorithm is that it is too "computational" in nature. The neurons must be ordered, and their activities must be computed strictly one after another. This does not fit well with the principles of the cerebral cortex. There, each neuron interacts with the others yet works autonomously, and no pronounced "central processor" dictates the overall sequence of events. From these considerations, the so-called decorrelation algorithms look somewhat more attractive.

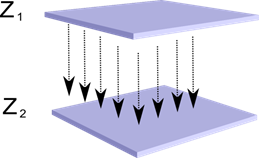

Imagine that we have two layers of neurons, Z1 and Z2. The activity of the neurons of the first layer forms a certain pattern, which is projected along axons onto the next layer.

Projection of one layer onto another

Now imagine that each neuron of the second layer has synaptic connections with all axons from the first layer that fall within a certain neighborhood of this neuron (figure below). The axons that fall into this region form the neuron's receptive field. The receptive field of a neuron is the fragment of the overall activity that it can observe. Everything else simply does not exist for this neuron.

In addition to the receptive field, we introduce a somewhat smaller region, which we call the suppression zone. We connect each neuron with its neighbors that fall into this zone. Such connections are called side connections or, in the terminology accepted in biology, lateral connections. We make the lateral connections inhibitory, that is, they lower the activity of neurons. The logic of their operation is that an active neuron inhibits the activity of all the neurons in its suppression zone.

Excitatory and inhibitory connections can be made with every axon or neuron within the respective region. Alternatively, they can be assigned randomly, for example densely near a certain center, with the density of connections decreasing exponentially with distance from it. Continuous filling is simpler to model. A random distribution is anatomically closer to how connections are organized in the real cortex.
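The two wiring options can be sketched as boolean masks over a square grid of incoming axons. The function name `connection_mask` and all parameters (grid size, radius, length scale of the exponential profile exp(−d/λ)) are hypothetical choices for illustration.

```python
import numpy as np

def connection_mask(grid_size, center, radius=None, length_scale=None, rng=None):
    """Which axons on a grid_size x grid_size layer a neuron at `center` connects to.

    Two hypothetical options matching the text:
    - radius: connect to every axon within the radius (continuous filling);
    - length_scale: connect at random with probability exp(-distance / length_scale).
    """
    rows, cols = np.indices((grid_size, grid_size))
    distance = np.hypot(rows - center[0], cols - center[1])
    if radius is not None:
        return distance <= radius
    probability = np.exp(-distance / length_scale)   # dense near the center, sparse far away
    return rng.random((grid_size, grid_size)) < probability

rng = np.random.default_rng(0)
full = connection_mask(31, (15, 15), radius=5)
sparse = connection_mask(31, (15, 15), length_scale=3.0, rng=rng)
print(full.sum(), sparse.sum())
```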

The activity function of a neuron can be written as:

$$y_j = \sum_{i \in R_j} w_{ji}\, x_i + \sum_{k \in L_j} a_{kj}\, y_k$$

The symbols are:

- y_j is the total activity of the selected neuron;
- R_j is the set of axons that fall into its receptive field;
- L_j is the set of neurons in whose suppression zone it falls;
- a_{kj} is the strength of the corresponding lateral inhibition, which takes negative values.

This activity function is recursive, since the activities of the neurons depend on one another. As a result, in practice it is computed iteratively.
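Because each activity depends on the others, the formula can be solved by fixed-point iteration. We start from the feed-forward drive and repeatedly add the lateral inhibition computed from the current activities. The sketch makes these assumptions:

- linear neurons;
- a one-dimensional row of six neurons with made-up receptive fields;
- inhibition of strength −0.3 between immediate neighbors.

The iteration converges here because the inhibition is weak enough: the spectral radius of the lateral matrix is below 1. The result can be checked against solving (I − A)y = Wx directly.

```python
import numpy as np

def settle_activity(W, A, x, n_iter=200, tol=1e-12):
    """Iterate y = W x + A y until the activities stop changing.

    W[j, i]: feed-forward weight from axon i to neuron j (zero outside the receptive field).
    A[j, k]: lateral weight from neuron k to neuron j, i.e. a_kj (negative = inhibitory).
    """
    drive = W @ x                  # contribution of the receptive field
    y = drive.copy()
    for _ in range(n_iter):
        y_new = drive + A @ y      # add lateral inhibition from the current activities
        if np.max(np.abs(y_new - y)) < tol:
            return y_new
        y = y_new
    return y

rng = np.random.default_rng(0)
n_neurons, n_axons = 6, 12
W = rng.uniform(0.0, 1.0, size=(n_neurons, n_axons))
for j in range(n_neurons):                         # hypothetical receptive fields of 6 axons
    mask = np.zeros(n_axons, dtype=bool)
    mask[max(0, 2 * j - 2): 2 * j + 4] = True
    W[j, ~mask] = 0.0
A = np.zeros((n_neurons, n_neurons))
for j in range(n_neurons):                         # suppression zone: immediate neighbors
    for k in (j - 1, j + 1):
        if 0 <= k < n_neurons:
            A[j, k] = -0.3
x = rng.uniform(0.0, 1.0, size=n_axons)
y = settle_activity(W, A, x)
print(np.round(y, 3))
```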

Synaptic weights are learned in the same way as in the Hebb filter:

$$\Delta w_{ji} = \eta\, y_j \left(x_i - y_j\, w_{ji}\right)$$

Lateral weights are trained according to an anti-Hebbian rule, which increases inhibition between "similar" neurons:

$$\Delta a_{kj} = -\eta \left(y_k\, y_j + y_j^2\, a_{kj}\right)$$

The idea of this construction is as follows. Hebbian learning should drive the neuron's weights toward values corresponding to the first principal factor of the input data. But a neuron can learn toward any factor only while it is active. When a neuron begins to extract a factor and respond to it, it starts to block the activity of the neurons in its suppression zone. If several neurons compete for activation, the strongest one wins and suppresses all the others. The remaining neurons can learn only at moments when no highly active neighbors are nearby. This produces decorrelation. Within a region whose size is set by the suppression zone, each neuron begins to extract its own factor, orthogonal to the rest. This algorithm is called the adaptive principal component extraction (APEX) algorithm (Kung S., Diamantaras K. I., 1990).
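Here is a compact sketch of APEX-style learning with the update rules given above. It assumes the hierarchical lateral wiring of Kung and Diamantaras, in which neuron j receives lateral input only from neurons 1 to j−1. This ordered wiring simplifies the symmetric suppression zones described in the text. The data and learning-rate schedule are assumptions.

```python
import numpy as np

def apex(X, n_components, eta0=0.002, epochs=5, seed=0):
    """APEX-style learning on the rows of X (assumed zero-mean).

    W[j]: feed-forward weights of neuron j; A[j, k] (k < j): lateral weight a_kj.
    """
    rng = np.random.default_rng(seed)
    n, m = X.shape
    W = rng.normal(scale=0.1, size=(n_components, m))
    A = np.zeros((n_components, n_components))
    t = 0
    for _ in range(epochs):
        for x in X[rng.permutation(n)]:
            eta = eta0 / (1.0 + t / 5000.0)
            y = np.zeros(n_components)
            for j in range(n_components):
                y[j] = W[j] @ x + A[j, :j] @ y[:j]                       # feed-forward + lateral input
                W[j] += eta * (y[j] * x - y[j] ** 2 * W[j])              # Hebbian (Oja-type) rule
                A[j, :j] -= eta * (y[j] * y[:j] + y[j] ** 2 * A[j, :j])  # anti-Hebbian rule
            t += 1
    return W, A

rng = np.random.default_rng(3)
Q, _ = np.linalg.qr(rng.normal(size=(5, 5)))
X = (rng.normal(size=(3000, 5)) * np.array([3.0, 2.0, 1.0, 0.5, 0.3])) @ Q.T
X -= X.mean(axis=0)

W, A = apex(X, n_components=2)
_, eigvecs = np.linalg.eigh(X.T @ X / len(X))
top = eigvecs[:, ::-1][:, :2]
cosines = np.abs(np.sum(W.T * top, axis=0)) / np.linalg.norm(W, axis=1)
print(np.round(cosines, 4), np.round(A[1, 0], 3))
```

Once the second neuron has decorrelated from the first, the lateral weight itself tends to shrink toward zero. Its job is done through the learned feed-forward weights.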

The idea of lateral inhibition is similar in spirit to the "winner takes all" principle, well known from various models, which also decorrelates the region within which the winner is sought. This principle is used, for example, in Fukushima's neocognitron and in Kohonen's self-organizing maps. It is also used in training Jeff Hawkins's well-known hierarchical temporal memory.

The winner can be determined simply by comparing the activities of the neurons. But such a brute-force search, though easy to implement on a computer, does not quite match the analogy with the real cortex. Suppose we want to do everything at the level of neuron interactions, without external algorithms. Then the same result can be achieved if, besides laterally inhibiting its neighbors, each neuron has positive feedback that excites it. This technique for finding the winner is used, for example, in Grossberg's adaptive resonance networks.
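Here is a sketch of finding the winner purely through neuron interactions. It uses a MAXNET-style iteration, a standard textbook winner-take-all network chosen here for illustration; it is not Grossberg's adaptive resonance circuitry. Each neuron excites itself with weight 1 and inhibits every other neuron with weight −ε. The value of ε and the input activities are assumptions.

```python
import numpy as np

def maxnet(activity, epsilon=None, max_iter=1000):
    """Winner-take-all through self-excitation (weight 1) and mutual inhibition (weight -epsilon).

    With a unique maximum and 0 < epsilon < 1/len(activity), only the
    strongest neuron is left active.
    """
    y = np.asarray(activity, dtype=float).copy()
    if epsilon is None:
        epsilon = 0.5 / len(y)                 # safely below 1/N
    for _ in range(max_iter):
        inhibition = epsilon * (y.sum() - y)   # input from all *other* neurons
        y = np.maximum(0.0, y - inhibition)    # self-excitation keeps each neuron's own activity
        if np.count_nonzero(y) <= 1:
            break
    return y

final = maxnet([0.5, 0.9, 0.3, 0.7])
print(final)
```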

If the design of the neural network allows it, the "winner takes all" rule is very convenient. Finding the maximum activity is much easier than iteratively recomputing activities with mutual inhibition taken into account.

It's time to finish this part. It turned out long, but I really did not want to break up the narrative in the middle. Don't be surprised by the title picture: for me it was associated both with artificial intelligence and with the principal factor.

Continuation

References

Part 1. Neuron

Alexey Redozubov (2014)

Source: https://habr.com/ru/post/214241/
