The logic of thinking. Part 1. Neuron

About a year and a half ago I posted a series of video lectures on Habr presenting my view of how the brain works and of the possible ways to create artificial intelligence. Since then we have made significant progress. Some things we have come to understand more deeply, and some we have managed to simulate on a computer. Pleasingly, like-minded people have appeared and are actively taking part in the project.

In this series of articles I plan to describe the concept of intelligence we are currently working on and to demonstrate some solutions that are fundamentally new to the field of brain modeling. To keep the narrative clear and consistent, it will contain not only a description of new ideas but also an account of how the brain works in general. Some things, especially at the beginning, may seem simple and well known, but I would advise against skipping them, since they largely determine how convincing the overall argument is.


General idea of the brain

Nerve cells, or neurons, together with the fibers that carry their signals, form the nervous system. In vertebrates, most neurons are concentrated in the cranial cavity and the spinal canal. This is called the central nervous system. Accordingly, its components are the brain and the spinal cord.

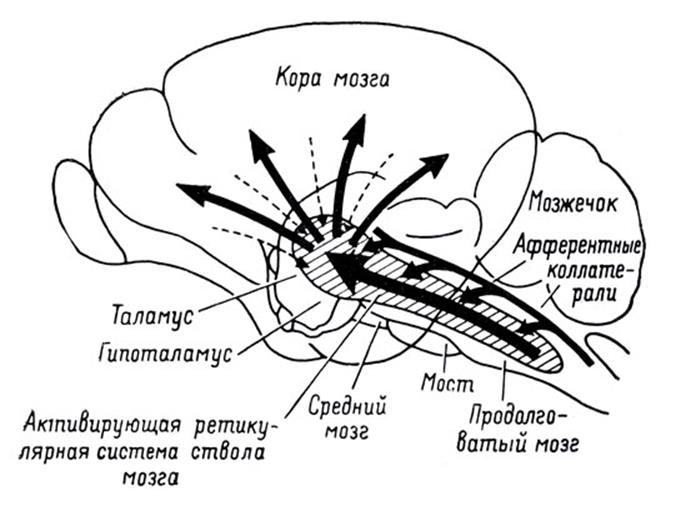

The spinal cord collects signals from most body receptors and transmits them to the brain. Through the structures of the thalamus, they are distributed and projected onto the cortex of the cerebral hemispheres.

Projection of information onto the cortex

In addition to the cerebral hemispheres, the cerebellum also processes information; it is, in effect, a small independent brain. The cerebellum provides fine motor skills and the coordination of all movements.

Sight, hearing, and smell provide the brain with a stream of information about the outside world. Each component of this stream, following its own path, is also projected onto the cortex. The cortex is a layer of gray matter 1.3 to 4.5 mm thick that forms the outer surface of the brain. Thanks to the convolutions formed by its folds, the cortex is packed so that it occupies one third of the area it would when flattened. The total area of the cortex of one hemisphere is approximately 7000 cm².

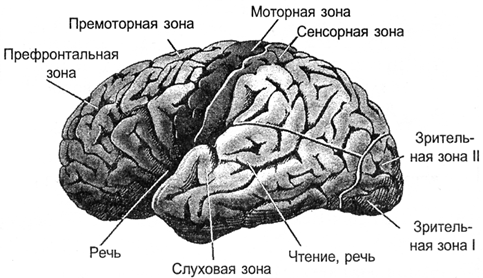

As a result, all signals are projected onto the cortex. The projection is carried out by bundles of nerve fibers that are distributed over limited areas of the cortex. An area onto which either external information or information from other parts of the brain is projected forms a zone of the cortex. Each zone has its own specialization, depending on which signals arrive at it. There are the motor zone, the sensory zone, Broca's and Wernicke's areas, the visual zones, the occipital lobe, and so on: about a hundred different zones in total.

Zones of the cortex

In the vertical direction, the cortex can be divided into six layers. These layers have no clear boundaries and are defined by the predominance of one cell type or another. In different zones of the cortex these layers may be more or less pronounced. In general, though, the cortex is fairly uniform, and it is assumed that its different zones function according to the same principles.

Layers of the cortex

Signals enter the cortex along afferent fibers. They arrive at layers III and IV of the cortex, where they are distributed among the neurons near the point where the afferent fiber terminates. Most neurons have axonal connections only within their own zone of the cortex. But some neurons have axons that extend beyond it. Along these efferent fibers, signals either leave the brain, for example toward the executive organs, or are projected onto other parts of the cortex of the same or the opposite hemisphere. Depending on the direction of signal transmission, efferent fibers can be divided into:

- association fibers that connect parts of the cortex within one hemisphere;

- commissural fibers that connect the cortex of the two hemispheres;

- projection fibers that connect the cortex with the nuclei of the lower parts of the central nervous system.

If we take the direction perpendicular to the surface of the cortex, we find that the neurons located along it respond to similar stimuli. Such vertically arranged groups of neurons are called cortical columns.

You can picture the cerebral cortex as a large canvas cut into separate zones. The pattern of neuronal activity in each zone encodes certain information. Bundles of nerve fibers, formed by axons that extend beyond their own zone of the cortex, form a system of projection connections. Certain information is projected onto each zone. Moreover, several information streams, coming from zones of both the same and the opposite hemisphere, can arrive at one zone simultaneously. Each information stream is like a picture drawn by the activity of the axons in a nerve bundle. The functioning of an individual zone of the cortex consists of receiving a set of projections, memorizing information, processing it, forming its own pattern of activity, and projecting the resulting information onward.

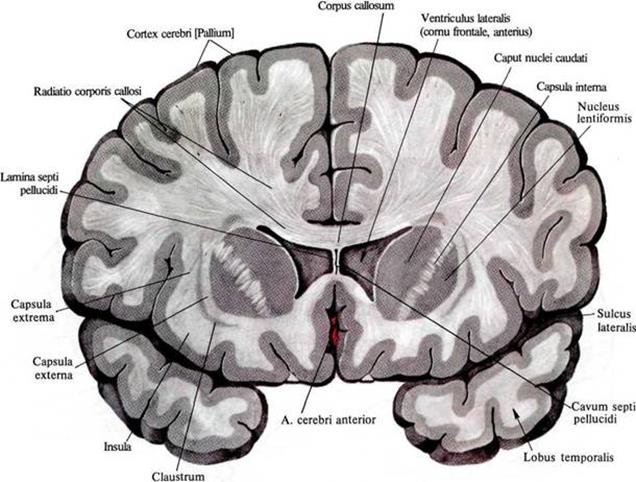

A significant part of the brain's volume is white matter. It is formed by the axons of neurons, which create these projection pathways. In the figure below, white matter is visible as the light filling between the cortex and the internal structures of the brain.

The distribution of white matter in the frontal section of the brain

Using diffusion spectrum MRI, it has become possible to trace the direction of individual fibers and build a three-dimensional model of the connectivity of the cortical zones (connectomics, the Connectome project).

The structure of the connections is well illustrated by the figures below (Van J. Wedeen, Douglas L. Rosene, Ruopeng Wang, Guangping Dai, Farzad Mortazavi, Patric Hagmann, Jon H. Kaas, Wen-Yih I. Tseng, 2012).

View from the left hemisphere

Back view

Right view

Incidentally, the asymmetry between the projection pathways of the left and right hemispheres is clearly visible in the back view. This asymmetry largely determines the differences in the functions that the hemispheres acquire as they learn.

Neuron

The basic element of the brain is the neuron. Naturally, modeling the brain with neural networks begins with the question of how a neuron works.

The operation of a real neuron is based on chemical processes. At rest, there is a potential difference between the inside and the outside of the neuron: a membrane potential of about 75 millivolts. It is produced by special protein molecules that work as sodium-potassium pumps. Using the energy of the nucleotide ATP, these pumps drive potassium ions into the cell and sodium ions out of it. Since the protein acts as an ATPase, that is, an enzyme that hydrolyzes ATP, it is called “sodium-potassium ATPase”. As a result, the neuron becomes a charged capacitor, negative inside and positive outside.

Neuron Scheme (Mariana Ruiz Villarreal)

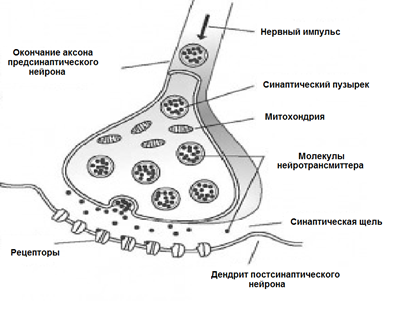

The surface of the neuron is covered with branching processes called dendrites. The axon terminals of other neurons adjoin the dendrites. The points where they connect are called synapses. Through synaptic interaction, a neuron can respond to incoming signals and, under certain circumstances, generate its own impulse, called a spike.

Signal transmission at synapses relies on substances called neurotransmitters. When a nerve impulse traveling along the axon reaches the synapse, it triggers the release, from special vesicles, of the neurotransmitter molecules characteristic of that synapse. On the membrane of the receiving neuron there are protein molecules called receptors, which interact with the neurotransmitters.

Chemical synapse

Receptors located in the synaptic cleft are ionotropic. The name reflects the fact that they are also ion channels, capable of passing ions. Neurotransmitters act on the receptors so that their ion channels open. The membrane is then either depolarized or hyperpolarized, depending on which channels are affected and, accordingly, on the type of synapse. In excitatory synapses, channels open that let cations into the cell, and the membrane depolarizes. In inhibitory synapses, channels that conduct anions open, which leads to hyperpolarization of the membrane.

Under certain circumstances, synapses can change their sensitivity; this is called synaptic plasticity. As a result, the synapses of a single neuron acquire differing sensitivity to external signals.

Many signals arrive at a neuron's synapses simultaneously. Inhibitory synapses pull the membrane potential toward the accumulation of charge inside the cell. Excitatory synapses, on the contrary, tend to discharge the neuron (figure below).

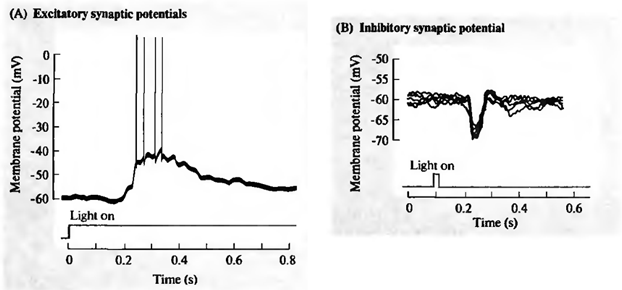

Excitation (A) and inhibition (B) of the retinal ganglion cell (Nicholls J., Martin R., Wallace B., Fuchs P., 2003)

When the total activity exceeds the initiation threshold, a discharge occurs, called an action potential or spike. A spike is a sharp depolarization of the neuron's membrane, which generates an electrical impulse. The whole process of impulse generation takes about 1 millisecond. Neither the duration nor the amplitude of the impulse depends on how strong the causes that triggered it were (figure below).

Recording of the action potential of a ganglion cell (Nicholls J., Martin R., Wallace B., Fuchs P., 2003)

After a spike, ion pumps provide reuptake of the neurotransmitter and clear the synaptic cleft. During the refractory period that follows the spike, the neuron cannot generate new impulses. The duration of this period determines the maximum firing frequency the neuron is capable of.

Spikes that arise as a result of activity at the synapses are called evoked. The frequency of evoked spikes encodes how well the incoming signal matches the sensitivity settings of the neuron's synapses. When the incoming signals fall exactly on the sensitive synapses that excite the neuron, and the signals arriving at inhibitory synapses do not interfere, the neuron's response is maximal. The pattern described by such signals is called the stimulus characteristic of the neuron.
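The summation of inputs, the threshold, and the refractory period described above can be sketched with a leaky integrate-and-fire unit. This is a deliberately simplified stand-in, not the mechanism of a real neuron: the function name `simulate_lif`, the weights, and all numerical parameters (threshold, leak time constant, 2 ms refractory period) are assumptions chosen for illustration.

```python
def simulate_lif(inputs, weights, duration_ms=1000.0, dt=0.1,
                 tau_ms=10.0, threshold=1.0, refractory_ms=2.0):
    """Count spikes of a leaky integrate-and-fire unit driven by constant inputs.

    Positive weights play the role of excitatory synapses, negative weights
    the role of inhibitory ones. All parameter values are illustrative.
    """
    drive = sum(w * x for w, x in zip(weights, inputs))  # net synaptic drive
    v = 0.0                # membrane state relative to rest
    refractory_left = 0.0  # time remaining in the refractory period
    spikes = 0
    for _ in range(int(duration_ms / dt)):
        if refractory_left > 0.0:
            refractory_left -= dt  # no integration and no spikes while refractory
            continue
        v += dt * (drive - v) / tau_ms  # leaky integration toward the drive
        if v >= threshold:
            spikes += 1
            v = 0.0  # reset after the spike
            refractory_left = refractory_ms
    return spikes


# Same excitatory input, with and without an opposing inhibitory input.
silenced = simulate_lif([1.0, 1.0], [2.0, -1.5])
moderate = simulate_lif([1.0, 1.0], [2.0, 0.0])
strong = simulate_lif([1.0, 1.0], [5.0, 0.0])
```

In this sketch a stronger excitatory drive gives a higher spike count, an inhibitory input can silence the unit entirely, and the refractory period caps the count at one spike per 2 ms.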

Of course, the picture of how neurons work should not be oversimplified. Between some neurons, information can be transmitted not only by spikes but also through channels that connect their intracellular contents and pass the electrical potential directly. Such propagation is called graded, and the connection itself is called an electrical synapse. Dendrites are divided, by their distance from the neuron body, into proximal (near) and distal (far). Distal dendrites can form sections that work as semi-autonomous elements. Besides the synaptic pathways of excitation, there are extrasynaptic mechanisms that cause metabotropic spikes. Besides evoked activity, there is also spontaneous activity. Finally, the neurons of the brain are surrounded by glial cells, which also significantly influence the processes taking place.

The long path of evolution has created many mechanisms that the brain uses in its work. Some of them can be understood in isolation; the meaning of others becomes clear only when fairly complex interactions are considered. Therefore, the description of the neuron above should not be taken as exhaustive. To move on to deeper models, we first need to understand the "basic" properties of neurons.

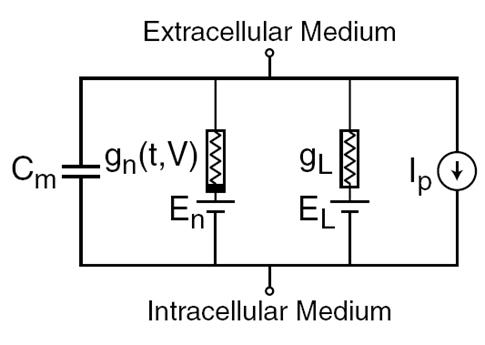

In 1952, Alan Lloyd Hodgkin and Andrew Huxley described the electrical mechanisms that govern the generation and transmission of the nerve signal in the squid giant axon (Hodgkin, 1952), for which they were awarded the Nobel Prize in Physiology or Medicine in 1963. The Hodgkin-Huxley model describes the behavior of a neuron with a system of ordinary differential equations. These equations correspond to an autowave process in an active medium. They take into account many components, each of which has a biophysical counterpart in a real cell (figure below). Ion pumps correspond to a current source $I_p$. The inner lipid layer of the cell membrane forms a capacitor with capacitance $C_m$. The ion channels of synaptic receptors provide an electrical conductance $g_n$, which depends on the incoming signals, varying with time $t$, and on the overall membrane potential $V$. The leakage current through the membrane pores is produced by the conductance $g_L$. Ions move through the ion channels under the action of electrochemical gradients, which correspond to voltage sources with electromotive forces $E_n$ and $E_L$.

The main components of the Hodgkin-Huxley model

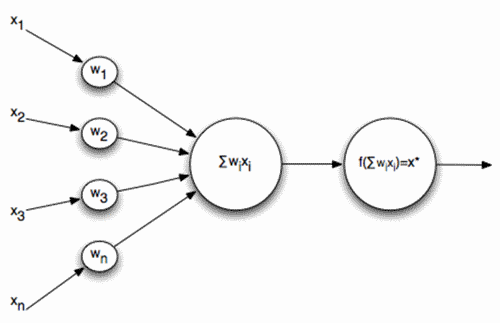

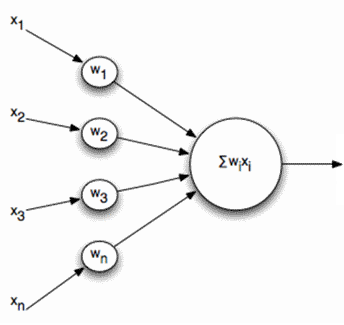
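To see equations of this kind produce spikes, here is a minimal numerical sketch. Note that it uses the classic textbook parameterization of the squid axon, with sodium, potassium, and leak conductances and a constant injected current `i_ext`, rather than the synaptic conductances and pump current source described above. The function names, the forward Euler integration, and the choice of 0 mV as the spike-detection level are assumptions made for illustration.

```python
import math

# Classic squid-axon parameters (textbook values; units: mV, ms, uF/cm^2, mS/cm^2).
C_M = 1.0
G_NA, G_K, G_L = 120.0, 36.0, 0.3
E_NA, E_K, E_L = 50.0, -77.0, -54.387


def _rate(x, scale):
    """Return x / (1 - exp(-x / scale)), handling the removable singularity at x = 0."""
    if abs(x) < 1e-7:
        return scale
    return x / (1.0 - math.exp(-x / scale))


def gate_rates(v):
    """Opening (alpha) and closing (beta) rates for the m, h, n gating variables."""
    a_m = 0.1 * _rate(v + 40.0, 10.0)
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    a_n = 0.01 * _rate(v + 55.0, 10.0)
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return (a_m, b_m), (a_h, b_h), (a_n, b_n)


def count_spikes(i_ext, duration_ms=100.0, dt=0.01):
    """Integrate with forward Euler and count upward crossings of 0 mV."""
    v = -65.0
    # Start each gate at its steady state for the resting potential.
    m, h, n = (a / (a + b) for a, b in gate_rates(v))
    spikes = 0
    for _ in range(int(duration_ms / dt)):
        (a_m, b_m), (a_h, b_h), (a_n, b_n) = gate_rates(v)
        i_ion = (G_NA * m**3 * h * (v - E_NA)
                 + G_K * n**4 * (v - E_K)
                 + G_L * (v - E_L))
        v_new = v + dt * (i_ext - i_ion) / C_M
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        n += dt * (a_n * (1.0 - n) - b_n * n)
        if v < 0.0 <= v_new:
            spikes += 1
        v = v_new
    return spikes


at_rest = count_spikes(0.0)   # no injected current
driven = count_spikes(10.0)   # constant current of 10 uA/cm^2
```

Without injected current the model stays at its resting potential, while a sufficiently strong constant current makes it fire repeatedly.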

Naturally, when building neural networks one wants to simplify the neuron model, keeping only its most essential properties. The best-known and most popular simplified model is the McCulloch-Pitts artificial neuron, developed in the early 1940s (J. McCulloch, W. Pitts, 1956).

McCulloch-Pitts Formal Neuron

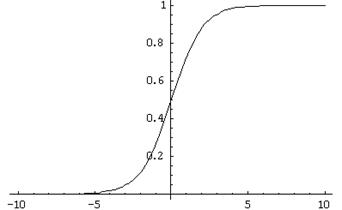

Signals arrive at the inputs of such a neuron. A weighted sum of these signals is computed. Then a nonlinear activation function, for example a sigmoid, is applied to this linear combination. The logistic function is often used as the sigmoid:

Logistic function

In this case, the activity of the formal neuron is written as the logistic function of the weighted sum of its inputs.
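In commonly used notation (the symbols $x_i$ for the inputs, $w_i$ for the synaptic weights, $s$ for the argument, and $y$ for the output are assumed here for illustration), the logistic function and the resulting activity of the formal neuron can be written as:

$$
\sigma(s) = \frac{1}{1 + e^{-s}}, \qquad
y = \sigma\!\left(\sum_{i} w_i x_i\right) = \frac{1}{1 + e^{-\sum_{i} w_i x_i}}
$$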

As a result, such a neuron becomes a threshold adder. With a sufficiently steep threshold function, the neuron's output is either 0 or 1. The weighted sum of the input signal and the neuron's weights is a convolution of two patterns: the pattern of the input signal and the pattern described by the neuron's weights. The closer the match between these patterns, the higher the result of the convolution. In other words, the neuron essentially measures how similar the incoming signal is to the pattern recorded on its synapses. When the value of the convolution exceeds a certain level and the threshold function switches to one, this can be interpreted as the neuron's decisive statement that it has recognized the presented pattern.

Real neurons do resemble McCulloch-Pitts neurons in some ways. The amplitude of their spikes does not depend on which signals at the synapses caused them. A spike is either there or it is not. But real neurons respond to a stimulus not with a single impulse but with a train of impulses. The more precisely the neuron's characteristic pattern is recognized, the higher the impulse frequency. This means that if we build a neural network from such threshold adders, then for a static input signal it will produce some output, but that output will be far from reproducing how real neurons work. To bring the neural network closer to its biological prototype, we would need to simulate its operation in dynamics, taking temporal parameters into account and reproducing the frequency properties of the signals.

But there is another way. For example, we can pick a generalized characteristic of a neuron's activity that corresponds to the frequency of its impulses, that is, the number of spikes over a certain period of time. With this description, a neuron can be treated as a simple linear adder.
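The threshold adder can be sketched in a few lines. The `steepness` and `threshold` parameters, the function name `formal_neuron`, and the example patterns are assumptions introduced for illustration; they show how a steeper logistic curve pushes the output toward 0 or 1.

```python
import math


def formal_neuron(inputs, weights, steepness=1.0, threshold=0.0):
    """Logistic activation applied to the weighted sum of the inputs.

    `steepness` and `threshold` are illustrative parameters: a large
    steepness makes the logistic curve approach a 0/1 step at `threshold`.
    """
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1.0 / (1.0 + math.exp(-steepness * (weighted_sum - threshold)))


# A pattern "recorded" on the synapses and two candidate input signals.
weights = [1.0, -1.0, 1.0, -1.0]
matching = [1.0, 0.0, 1.0, 0.0]    # active exactly where the weights are positive
mismatched = [0.0, 1.0, 0.0, 1.0]  # active exactly where the weights are negative

soft_match = formal_neuron(matching, weights)
soft_miss = formal_neuron(mismatched, weights)
hard_match = formal_neuron(matching, weights, steepness=50.0)
hard_miss = formal_neuron(mismatched, weights, steepness=50.0)
```

With the default steepness the two outputs lie on either side of 0.5; with a steep curve they are practically 1 and 0, which is the "recognized" or "not recognized" reading described above.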

Linear adder

The outputs, and accordingly the inputs, of such neurons are no longer dichotomous (0 or 1) but are expressed as a scalar value. The activation function is then simply the weighted sum of the inputs.

A linear adder should not be seen as something fundamentally different from a spiking neuron; it simply lets us move to longer time intervals when modeling or describing. And although the spiking description is more accurate, in many cases the transition to a linear adder is justified by the great simplification of the model. Moreover, some important properties that are hard to discern in a spiking neuron are quite obvious for a linear adder.
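In assumed notation, with $x_i$ the input values, $w_i$ the synaptic weights, and $y$ the output, the linear adder can be written as:

$$
y = \sum_{i} w_i x_i
$$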
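The move from individual spikes to a rate description can be sketched as follows. The spike times, weights, and function names are hypothetical values chosen for illustration: each spike train is first reduced to a firing rate over a time window, and the linear adder then works with those scalar rates.

```python
def firing_rate(spike_times_ms, window_ms):
    """Average firing rate in spikes per second over a window starting at 0 ms."""
    count = sum(1 for t in spike_times_ms if 0.0 <= t < window_ms)
    return 1000.0 * count / window_ms


def linear_adder(input_rates, weights):
    """Output activity as the weighted sum of the input activities."""
    return sum(w * r for w, r in zip(weights, input_rates))


# Two hypothetical input spike trains recorded over 100 ms.
train_a = [5.0, 25.0, 45.0, 65.0, 85.0]  # 5 spikes
train_b = [10.0, 60.0]                   # 2 spikes
rates = [firing_rate(train_a, 100.0), firing_rate(train_b, 100.0)]
output = linear_adder(rates, weights=[0.5, -1.0])
```

The timing of individual spikes within the window no longer matters here; only their count does, which is what allows the model to work on longer time intervals.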


Alexey Redozubov (2014)

Source: https://habr.com/ru/post/214109/
