How to store the Olympics?

These days the media live and breathe nothing but the Olympics. Rather than break with this trend, and to take the opportunity to look at how data is stored at an event of this scale, let me reflect a little on how technology is making its way into sports and sports into technology.

According to some reports, the Sochi Olympics became a major event not only for athletes and fans but also for the Russian IT community, because they showed how the latest IT industry trends can be applied to a sporting event.

What is it all about?

To deploy computing capacity quickly, the organizers chose the Microsoft Windows Azure cloud, which runs on Hyper-V hypervisors in Microsoft data centers, and automated accounting, logistics and budgeting with Microsoft Dynamics AX. On this platform they brought up distributed video and audio streaming systems, a variety of web portals, databases and other applications supporting this event of planetary scale. In addition to the cloud capacity, a local network of data centers was built specifically for the Olympics.

Main problem

This entire infrastructure generates a huge amount of data: just imagine how many kinds of statistics, documents, reports, medical records, and video and audio content are produced in the short span of the Games! That adds up to petabytes of information. Now imagine that all this content must be available 24/7 during the Games and then kept as archives or backups for a very long time: the information is later used for various kinds of analytics, and all financial and logistics reports must be retained for years under the applicable legislation. Yes, I am hinting that all this data has to be stored for a long time, efficiently, and ideally cheaply!

Yet once the Games are over, this data becomes static almost instantly, so expensive solutions designed for modifying existing content and for fast access are no longer a good fit.

A storage system for such “lazy data” must meet three requirements:

- Data availability - data must be available at any time for analytics or, in the case of backups, for recovery;

- Storage scalability - it should be easy both to increase and to decrease storage capacity in any increment (a disk, a server);

- Storage cost - I want to store data on inexpensive, easily replaceable media.

To these three core requirements of the global “Big Data” storage problem I would add one more important aspect: ease of deploying, monitoring and managing the storage. As a concrete example, let me briefly describe how Acronis Storage tackles these tasks.

Store data reliably

To store data reliably, Acronis uses a proprietary technology that achieves redundancy with special error-correcting codes, while checksums guarantee the integrity of the stored data.

Some redundancy is unavoidable, but the underlying algorithm lets you organize the storage system in several configurations:
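Acronis does not disclose its codes, so what follows is only a minimal Python sketch of the general idea, assuming the simplest possible error-correcting scheme: a single XOR parity chunk, with SHA-256 standing in for the checksum. Data is split into `k` chunks plus one parity chunk; on read, any chunk whose checksum no longer matches is treated as lost and rebuilt from the others. The function names `encode` and `decode` are hypothetical.

```python
from __future__ import annotations

import hashlib
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> list[tuple[bytes, str]]:
    """Split data into k equal chunks plus one XOR parity chunk.

    Each chunk is returned with its SHA-256 checksum; the parity chunk is last.
    """
    size = -(-len(data) // k)               # ceiling division
    padded = data.ljust(size * k, b"\0")    # zero-pad to k equal-size chunks
    chunks = [padded[i * size:(i + 1) * size] for i in range(k)]
    parity = reduce(xor_bytes, chunks)      # XOR of all data chunks
    return [(c, hashlib.sha256(c).hexdigest()) for c in chunks + [parity]]


def decode(stored: list[tuple[bytes | None, str]], length: int) -> bytes:
    """Rebuild the original data, tolerating one missing or corrupted chunk."""
    # A chunk is usable only if it is present and its checksum still matches.
    good = [c if c is not None and hashlib.sha256(c).hexdigest() == digest else None
            for c, digest in stored]
    lost = [i for i, c in enumerate(good) if c is None]
    if len(lost) > 1:
        raise ValueError("more than one chunk lost: single parity cannot recover")
    if lost:
        # XOR of all surviving chunks (data and parity) equals the lost chunk.
        good[lost[0]] = reduce(xor_bytes, [c for c in good if c is not None])
    return b"".join(good[:-1])[:length]     # drop parity, then the padding


if __name__ == "__main__":
    payload = b"Sochi 2014 results archive"
    stored = encode(payload, k=3)
    stored[1] = (b"bit rot!!", stored[1][1])  # corrupt one chunk in place
    assert decode(stored, len(payload)) == payload
```

A single parity chunk survives exactly one lost or corrupted chunk; tolerating two or three failures, as in the configurations listed next, requires more parity chunks and a stronger code such as Reed-Solomon.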

- Minimum redundancy - you need at least 4 storage servers, and you can lose one server without losing data;

- Normal redundancy - you need at least 9 storage servers, and you can lose 2 storage servers without losing data;

- Maximum redundancy - you need at least 13 storage servers, and you can lose 3 of them without serious consequences.

The disk-space overhead is 50% in the first configuration, 40% in the second and 43% in the third. For comparison, storage built on HDFS carries a 200% overhead, because by default Hadoop keeps three copies of every block, i.e. two extra copies of the source data.
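The article does not say how these configurations are built. One layout that reproduces all of the quoted figures, offered here purely as an assumption, is `k` data chunks plus `m` parity chunks per stripe (2+1, 5+2 and 7+3): the space overhead is then m/k, and the minimum server count works out to k + 2m, i.e. enough servers for one stripe plus `m` spares to rebuild onto. This small Python sketch does that arithmetic and compares it with HDFS-style 3x replication; the `MODES` table and helper names are hypothetical.

```python
from fractions import Fraction

# Hypothetical (k data chunks, m parity chunks) per mode. NOT confirmed by
# Acronis: chosen only because m/k reproduces the overheads quoted above.
MODES = {
    "minimum": (2, 1),
    "normal": (5, 2),
    "maximum": (7, 3),
}


def ec_overhead(k: int, m: int) -> Fraction:
    """Extra space for k data + m parity chunks, as a fraction of the source data."""
    return Fraction(m, k)


def replication_overhead(replicas: int) -> Fraction:
    """Extra space when every block is stored `replicas` times (HDFS default: 3)."""
    return Fraction(replicas - 1)


def min_servers(k: int, m: int) -> int:
    """Assumed rule: k + m servers hold a stripe, plus m spares to rebuild onto."""
    return k + 2 * m


if __name__ == "__main__":
    for name, (k, m) in MODES.items():
        print(f"{name:8} servers>={min_servers(k, m):2} "
              f"tolerates {m} failure(s), overhead {float(ec_overhead(k, m)):.0%}")
    print(f"HDFS x3  overhead {float(replication_overhead(3)):.0%}")
```

Running it prints overheads of 50%, 40% and 43% with minimum server counts of 4, 9 and 13, and 200% for HDFS, matching the numbers in the text; whether Acronis actually uses these stripe widths is not stated in the source.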

Scale the system

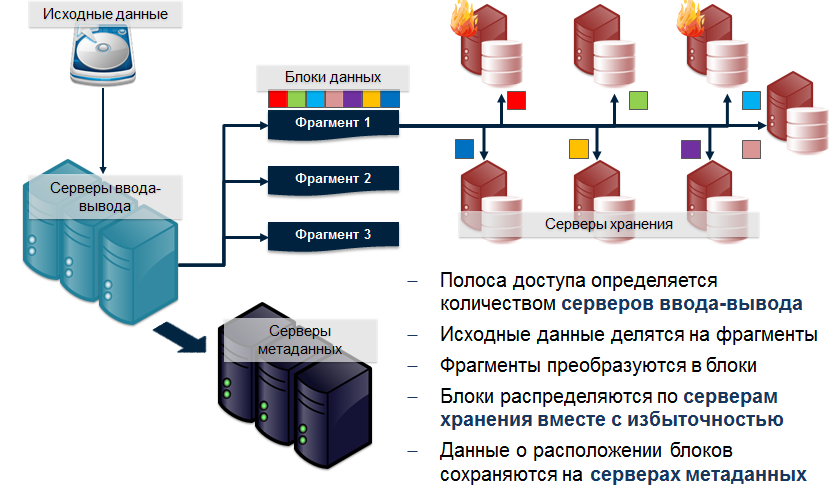

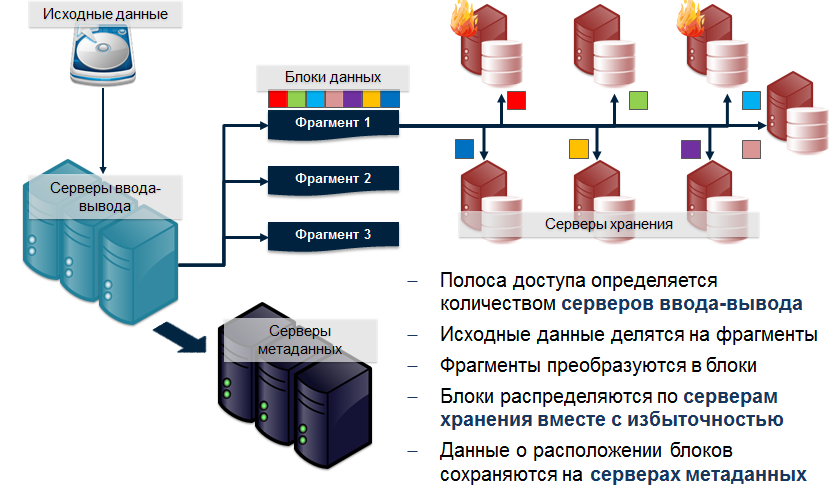

As for scalability, you can add and remove storage servers without risking data loss, and the system rebalances the load on its own. The node, the unit by which Acronis Storage scales and gains reliability, runs on any standard server hardware running Linux. A node can host any combination of the following components:

- Metadata Service (MDS) - monitors the distribution of data across storage servers and ensures data integrity.

- I/O service (FES) - distributes data across storage servers and performs read-write operations.

- Storage Server (STS) - stores blocks (chunks) of data.
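To make the division of labor between the three roles concrete, here is a toy, in-memory Python model. Everything in it is hypothetical (class names, least-loaded placement, chunk naming) and it does not reflect how Acronis Storage is implemented: the MDS decides and records which servers hold each chunk's pieces, the FES cuts files into chunks and writes the pieces, and each STS simply stores them. A planned decommission migrates a server's pieces to servers that do not already hold part of the same chunk, so no chunk loses redundancy.

```python
from __future__ import annotations


class StorageServer:
    """STS (toy): keeps chunk pieces in memory, keyed by (chunk_id, piece_no)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.pieces: dict[tuple[str, int], bytes] = {}


class MetadataService:
    """MDS (toy): decides and records which server holds every piece."""

    def __init__(self) -> None:
        self.servers: dict[str, StorageServer] = {}
        self.location: dict[tuple[str, int], str] = {}

    def add_server(self, server: StorageServer) -> None:
        self.servers[server.name] = server

    def _least_loaded(self, exclude: set[str]) -> list[StorageServer]:
        candidates = [s for s in self.servers.values() if s.name not in exclude]
        return sorted(candidates, key=lambda s: len(s.pieces))

    def place(self, chunk_id: str, n_pieces: int) -> list[StorageServer]:
        """Choose n distinct servers (least loaded first) for one chunk."""
        chosen = self._least_loaded(exclude=set())[:n_pieces]
        if len(chosen) < n_pieces:
            raise ValueError("not enough storage servers for this redundancy level")
        for piece_no, server in enumerate(chosen):
            self.location[(chunk_id, piece_no)] = server.name
        return chosen

    def decommission(self, name: str) -> None:
        """Planned removal: move each piece to a server lacking that chunk."""
        leaving = self.servers.pop(name)
        for (chunk_id, piece_no), data in leaving.pieces.items():
            holders = {srv for (c, _), srv in self.location.items() if c == chunk_id}
            targets = self._least_loaded(exclude=holders)
            if not targets:
                raise ValueError(f"no server left to hold a piece of {chunk_id}")
            targets[0].pieces[(chunk_id, piece_no)] = data
            self.location[(chunk_id, piece_no)] = targets[0].name


class IOService:
    """FES (toy): cuts files into chunks and writes/reads pieces via the MDS."""

    def __init__(self, mds: MetadataService, chunk_size: int, n_pieces: int) -> None:
        self.mds = mds
        self.chunk_size = chunk_size
        self.n_pieces = n_pieces
        self.files: dict[str, list[str]] = {}  # file_id -> ordered chunk ids

    def write(self, file_id: str, data: bytes) -> None:
        chunk_ids = []
        for offset in range(0, len(data), self.chunk_size):
            chunk_id = f"{file_id}@{offset}"
            chunk = data[offset:offset + self.chunk_size]
            for piece_no, server in enumerate(self.mds.place(chunk_id, self.n_pieces)):
                # Simplification: each piece is a full copy of the chunk; a real
                # system would store erasure-coded fragments instead.
                server.pieces[(chunk_id, piece_no)] = chunk
            chunk_ids.append(chunk_id)
        self.files[file_id] = chunk_ids

    def read(self, file_id: str) -> bytes:
        out = []
        for chunk_id in self.files[file_id]:
            server = self.mds.servers[self.mds.location[(chunk_id, 0)]]
            out.append(server.pieces[(chunk_id, 0)])
        return b"".join(out)


if __name__ == "__main__":
    mds = MetadataService()
    for n in range(4):
        mds.add_server(StorageServer(f"sts{n}"))
    fes = IOService(mds, chunk_size=4, n_pieces=3)
    fes.write("report", b"medal table 2014")
    mds.add_server(StorageServer("sts4"))  # scale out
    mds.decommission("sts0")               # scale in without losing data
    assert fes.read("report") == b"medal table 2014"
```

In this toy, a newly added server fills up only through new writes and migrations; the article says Acronis Storage rebalances the load by itself, which a fuller model would do by proactively moving existing pieces onto new servers.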

Architectural solutions

Acronis Storage was designed to store data not only efficiently but also cheaply, so this software storage system can be deployed on the most basic (not necessarily brand-name) servers with hard drives attached directly to them. The system combines the hard drives of all servers into a single pool and exposes it over NFS or through a FUSE client, while providing a high-speed cache.

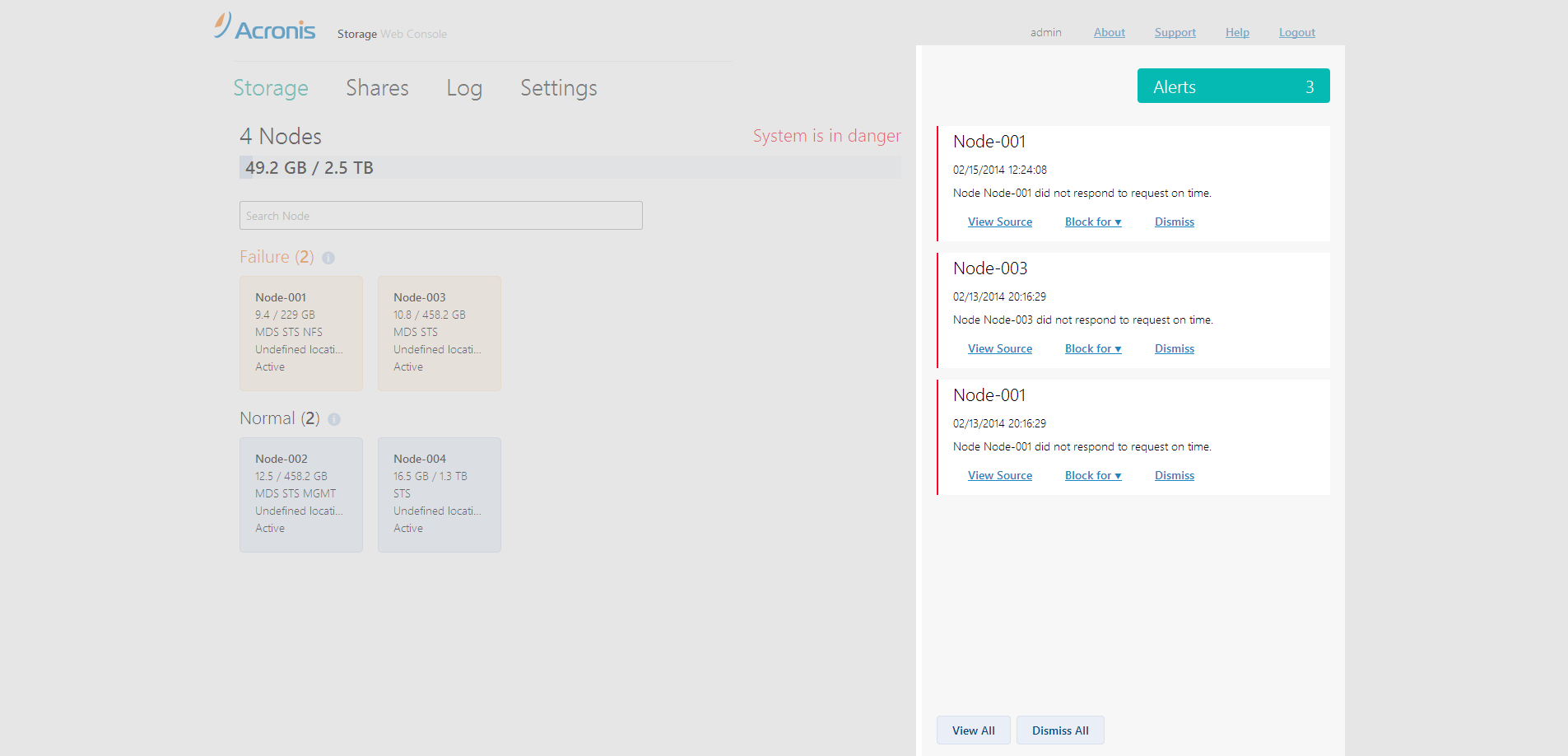

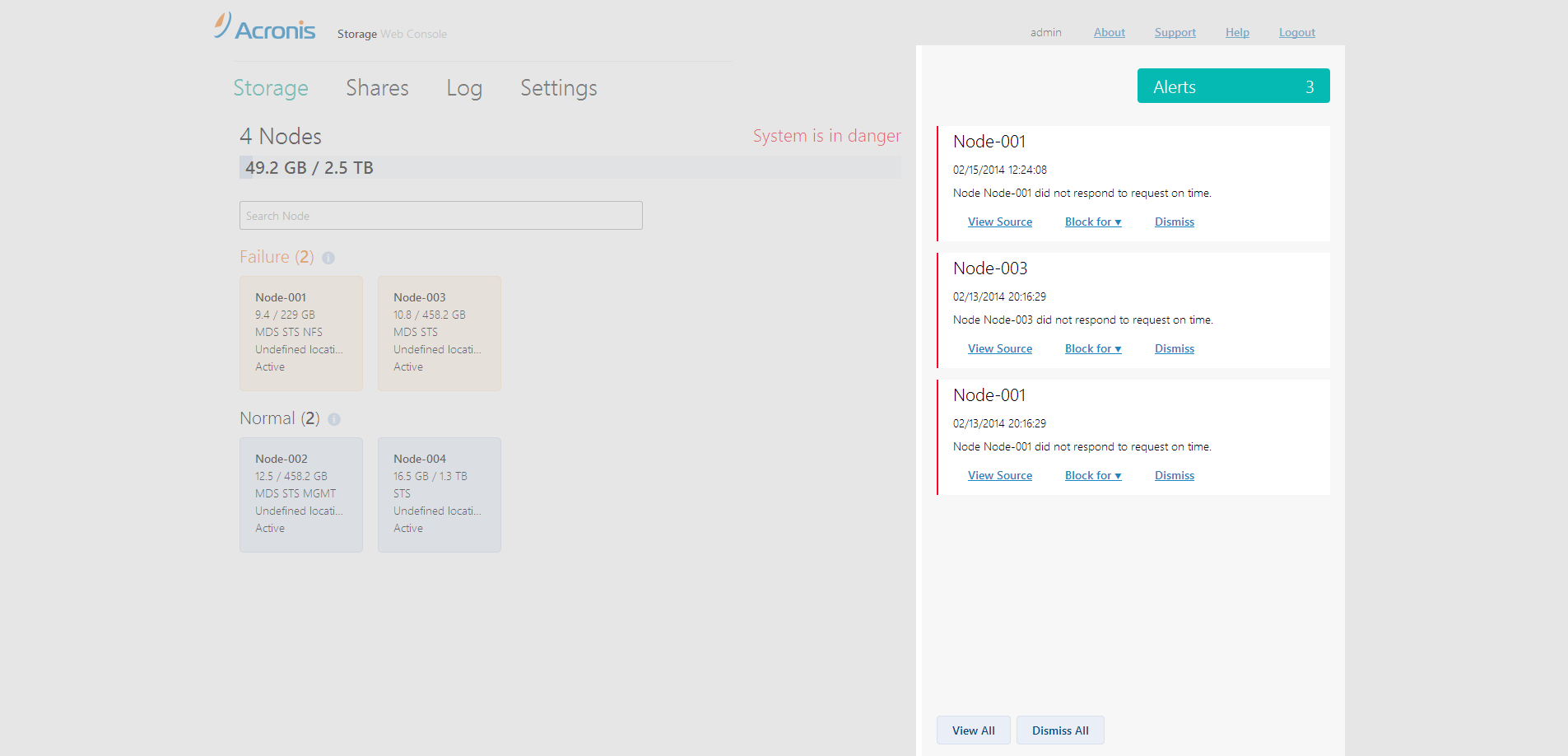

Installation is painless as well. Acronis Storage is deployed from a bootable ISO image that brings up the main node; all the other nodes then boot over PXE. After that, it takes a few minutes to assign server roles (MDS/FES/STS) in the web interface and to create and configure NFS shares, and the system is ready to use.
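In Acronis Storage the roles are assigned in the web interface, and nothing below reflects its actual interface or configuration format. As a hedged illustration of what a role plan has to satisfy, this hypothetical Python check takes a node-to-roles mapping (a node may combine roles, as described above) and verifies that there are enough STS nodes for the chosen redundancy mode, using the minimum server counts quoted in the article. It additionally assumes that at least one node must run MDS and one must run FES.

```python
from __future__ import annotations

# Minimum storage-server counts per redundancy mode, as quoted in the article.
MIN_STS = {"minimum": 4, "normal": 9, "maximum": 13}
VALID_ROLES = {"MDS", "FES", "STS"}


def check_role_plan(plan: dict[str, set[str]], mode: str) -> list[str]:
    """Return the problems found in a node -> roles plan; an empty list means OK."""
    problems = []
    for node, roles in plan.items():
        unknown = roles - VALID_ROLES
        if unknown:
            problems.append(f"{node}: unknown role(s) {sorted(unknown)}")
    # Count how many nodes run each role (one node may run several roles).
    count = {r: sum(r in roles for roles in plan.values()) for r in VALID_ROLES}
    # Assumption: the cluster needs at least one metadata and one I/O service.
    for role in ("MDS", "FES"):
        if count[role] == 0:
            problems.append(f"no node runs {role}")
    if count["STS"] < MIN_STS[mode]:
        problems.append(f"{mode} redundancy needs >= {MIN_STS[mode]} STS nodes, "
                        f"plan has {count['STS']}")
    return problems


if __name__ == "__main__":
    plan = {f"node{i}": {"STS"} for i in range(4)}
    plan["node0"] |= {"MDS", "FES"}  # the first node also runs MDS and FES
    print(check_role_plan(plan, "minimum"))  # []
    print(check_role_plan(plan, "normal"))   # one problem: only 4 STS nodes
```

The same four-node plan passes for minimum redundancy but is rejected for normal redundancy, which the article says needs at least 9 storage servers.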

In conclusion, Acronis Storage places no limit on the size of stored data, and its combination of characteristics makes it a unique offering on the market. Its developers built it specifically for reliable, flexible storage of “lazy data” (backups, logs, documents, audio, video, medical data) in the cheapest and most efficient way.

The strongest evidence of this is that Acronis itself has been using this proprietary technology since 2009 in its data centers around the world to provide cloud storage for users' backups.

Source: https://habr.com/ru/post/213949/
