40G Ethernet performance with an Intel ONS-based switch

Today there are quite a few interconnects available, each claiming to be useful and necessary: traditional Ethernet at 1G, 10G and 40G; InfiniBand QDR (40G) and FDR (56G); Fibre Channel at 8G and 16G, with 32G promised.

All of them promise happiness and insist they are indispensable in everyday life. How do you make sense of this, what should you choose, and where are the pitfalls?


How we tested:

We tested the gigabit Ethernet found in every server, 40G Ethernet, and QDR and FDR InfiniBand; 10G did not take part. We should say up front that we consider FC a fading interface, since convergence is the current trend. FC 32G is not yet available, and 16G is not in the high-speed league.

To find the limits, we measured minimum latency and maximum throughput, which is convenient to do with HPC tools. At the same time, this let us check how suitable 40G Ethernet is for HPC applications.

Colleagues from the Central Control Center provided invaluable help and ran the field tests; one of the Intel ONS-based switches was handed over to them for testing.

By the way, we are still handing out the switch to the most interesting projects.

Hardware:

- 40G Ethernet switch

- Network adapter: Mellanox ConnectX-3 VPI adapter card; single-port QSFP; FDR IB (56 Gb/s) and 40GigE; PCIe 3.0 x8, 8 GT/s

- Dual-processor servers with Intel® Xeon® E5-2680 v2

- OFED-3.5 (drivers and low-level libraries for Mellanox)

- ConnectX® EN 10 and 40 Gigabit Linux Driver

Software:

- Performance was measured with the synthetic PingPong test from the Intel MPI Benchmarks v3.2.3 suite.

- MPI library: S-MPI v1.1.1, a clone of Open MPI.

While preparing, we ran into a quirk of the Mellanox adapters: they have to be switched to Ethernet mode explicitly. For some reason the advertised automatic detection of the connected network type does not work; perhaps this can be fixed.
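A minimal sketch of forcing the port into Ethernet mode. It assumes that the mlx4_core driver used with ConnectX-3 exposes a per-port sysfs attribute (`mlx4_port1`, accepting `ib`, `eth` or `auto`) under the adapter's PCI device directory. The PCI address in the usage line is a hypothetical placeholder; look up the real one with `lspci`. The `sysfs_root` parameter exists only so the function can be exercised without the hardware.

```python
from pathlib import Path

VALID_TYPES = {"ib", "eth", "auto"}

def set_mlx4_port_type(pci_addr: str, port: int, link_type: str,
                       sysfs_root: str = "/sys") -> Path:
    """Write the desired link type to the (assumed) mlx4 per-port sysfs attribute.

    Needs root on a real system; returns the path that was written.
    """
    if link_type not in VALID_TYPES:
        raise ValueError(f"unsupported link type: {link_type!r}")
    attr = Path(sysfs_root, "bus/pci/devices", pci_addr, f"mlx4_port{port}")
    if not attr.exists():
        raise FileNotFoundError(f"{attr} not found (not an mlx4 device?)")
    # The driver parses the value the same way as `echo eth > ...`, trailing newline included.
    attr.write_text(link_type + "\n")
    return attr
```

Usage, with the hypothetical address: `set_mlx4_port_type("0000:05:00.0", 1, "eth")`. Repeat for port 2 on dual-port cards.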

As is our tradition, we judge performance by the latency for 4-byte messages and the bandwidth for 4 MB messages.
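In a ping-pong test one rank sends a message and the other sends it straight back. Latency is therefore conventionally reported as half the round-trip time, and bandwidth as message size divided by that one-way time. A minimal sketch of that arithmetic follows. It assumes decimal megabytes (10^6 bytes); benchmark suites differ on this, so check which megabyte your IMB version reports.

```python
from typing import Tuple

def pingpong_metrics(msg_bytes: int, total_seconds: float, repetitions: int,
                     mb: int = 10**6) -> Tuple[float, float]:
    """Return (one-way latency in microseconds, bandwidth in MB/s).

    total_seconds covers all repetitions; each repetition is one full
    round trip (send + echo), so the one-way time is half of it.
    """
    one_way = total_seconds / (2 * repetitions)
    latency_us = one_way * 1e6
    bandwidth_mb_s = msg_bytes / one_way / mb
    return latency_us, bandwidth_mb_s

# 4-byte messages: 1000 round trips in 3.8 ms give a 1.9 us one-way latency.
lat, _ = pingpong_metrics(4, 3.8e-3, 1000)
# 4 MB messages: 100 round trips in 0.2 s give 1 ms one way.
_, bw = pingpong_metrics(4_000_000, 0.2, 100)
print(f"{lat:.2f} us, {bw:.0f} MB/s")
```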

First experiments:

We start with plain Ethernet mode, which in our terminology is the tcp fabric: the MPI library transmits messages through the socket interface. The driver, switch, and MPI all use their default configurations.

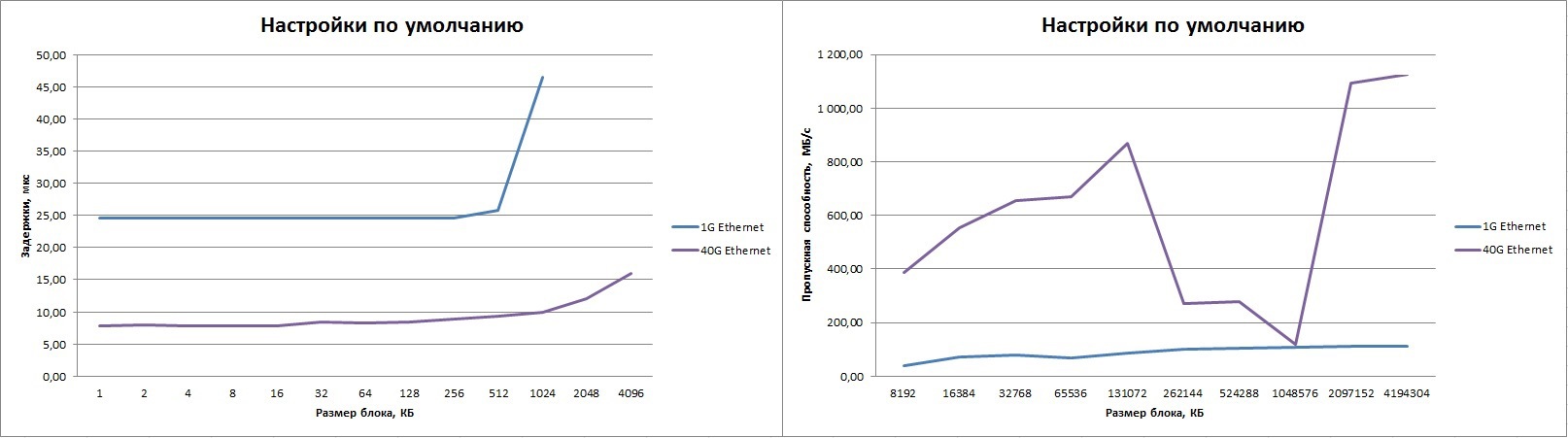

The table on the left shows the results for 1G Ethernet, the one on the right those for 40G (with our switch). There are “dips” in bandwidth for 256K-1M messages, and the peak bandwidth is what you would expect from 10G Ethernet. Clearly the software needs tuning. Latency is, of course, very high, but you should not expect much when going through the standard TCP stack. To give it its due, it is three times better than on 1G Ethernet.

1G Ethernet (eth0):

mpirun -n 2 -debug-mpi 4 -host host01,host02 -nets tcp --mca btl_tcp_if_include eth0 IMB-MPI1 PingPong

40G Ethernet (eth4):

mpirun -n 2 -debug-mpi 4 -host host01,host02 -nets tcp --mca btl_tcp_if_include eth4 IMB-MPI1 PingPong


We continue:

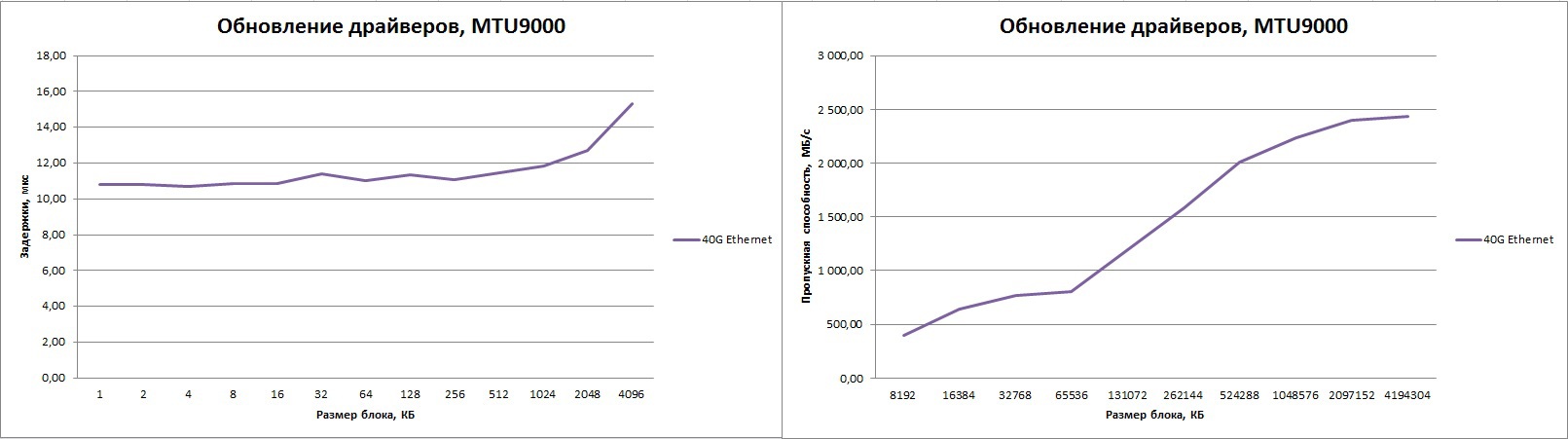

We update the driver, taking the current one from the Mellanox website.

To reduce the “dip” in the 256K-1M range, we increase the buffer sizes through the MPI settings:

export S_MPI_BTL_TCP_SNDBUF=2097152

export S_MPI_BTL_TCP_RCVBUF=2097152

mpirun -n 2 -debug-mpi 4 -host host1,host2 -nets tcp --mca btl_tcp_if_include eth4 IMB-MPI1 PingPong

The result is a 1.5x improvement and smaller dips, but it is still not enough, and latency on small messages has even grown.
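If S-MPI behaves like Open MPI here (an assumption), these variables become `SO_SNDBUF`/`SO_RCVBUF` requests on the TCP sockets, as Open MPI's `btl_tcp_sndbuf`/`btl_tcp_rcvbuf` parameters do. On Linux the kernel silently caps such requests at `net.core.wmem_max`/`net.core.rmem_max` and reports roughly double the granted size to cover bookkeeping overhead. It is therefore worth checking what a socket actually gets. A small sketch:

```python
import socket
from pathlib import Path

REQUESTED = 2097152  # 2 MiB, the value used for S_MPI_BTL_TCP_SNDBUF/RCVBUF above

def read_sysctl(name: str):
    """Return an integer sysctl from /proc/sys, or None if it is unavailable."""
    path = Path("/proc/sys", *name.split("."))
    try:
        return int(path.read_text().split()[0])
    except (OSError, ValueError):
        return None

def effective_buffers(requested: int = REQUESTED):
    """Request buffers on a fresh TCP socket and read back what the kernel granted."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, requested)
        s.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, requested)
        snd = s.getsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF)
        rcv = s.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
    return snd, rcv

snd, rcv = effective_buffers()
print("wmem_max:", read_sysctl("net.core.wmem_max"),
      "rmem_max:", read_sysctl("net.core.rmem_max"))
print("granted SO_SNDBUF:", snd, "SO_RCVBUF:", rcv)
```

If the granted values are far below twice the requested 2097152, raising `net.core.wmem_max` and `net.core.rmem_max` with `sysctl` is the usual next step before the MPI setting can take full effect.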

Naturally, to improve bandwidth on high-speed Ethernet interfaces it makes sense to use jumbo frames. We configure the switch and the adapters for MTU 9000 and get significantly higher bandwidth, but it still tops out at around 20 Gbit/s.


Latency on small messages grows again.
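Why jumbo frames help with bandwidth: a 4 MB message has to be cut into MTU-sized packets, and much of the TCP cost is paid per packet. The back-of-the-envelope sketch below assumes IPv4 and TCP headers without options (40 bytes) in each frame. It also assumes 38 bytes of per-frame Ethernet overhead on the wire: header, FCS, preamble, and inter-frame gap.

```python
import math

IP_TCP_HEADERS = 40      # IPv4 (20) + TCP (20), no options: an assumption
ETH_WIRE_OVERHEAD = 38   # header 14 + FCS 4 + preamble 8 + inter-frame gap 12

def frames_per_message(msg_bytes: int, mtu: int) -> int:
    """Number of frames needed to carry msg_bytes of TCP payload at a given MTU."""
    return math.ceil(msg_bytes / (mtu - IP_TCP_HEADERS))

def wire_efficiency(mtu: int) -> float:
    """Fraction of wire time carrying TCP payload for full-size frames."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETH_WIRE_OVERHEAD)

msg = 4 * 1024 * 1024
for mtu in (1500, 9000):
    print(f"MTU {mtu}: {frames_per_message(msg, mtu)} frames, "
          f"{wire_efficiency(mtu):.1%} payload efficiency")
```

Payload efficiency improves by only a few percent, while the packet count drops roughly sixfold. Jumbo frames therefore mainly relieve per-packet processing on the hosts. They do not help small-message latency, because a 4-byte message fits in a single frame either way.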

We tried “tweaking” other parameters but saw no fundamental improvement. In the end we decided that we simply “don't know how to cook them”.

Where to look next?

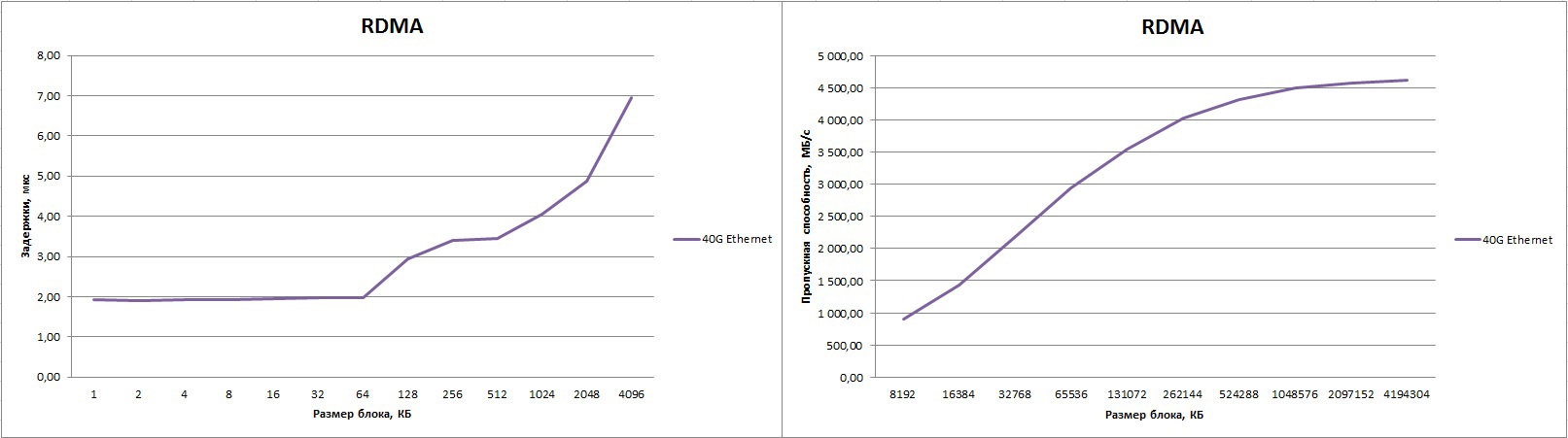

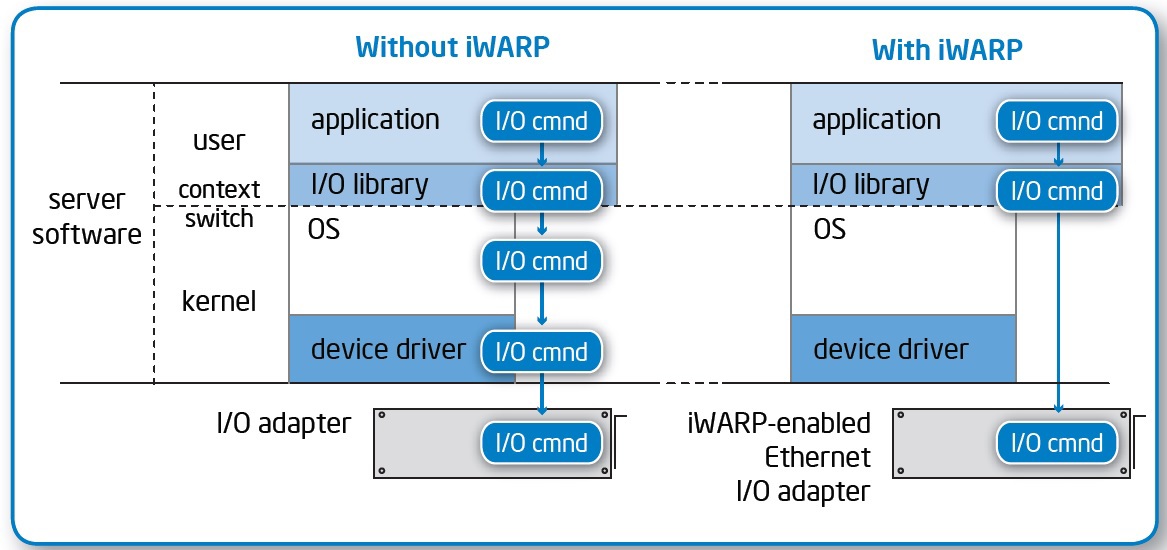

Clearly, if you are after performance, you have to bypass the TCP stack. The obvious solution is to try RDMA.

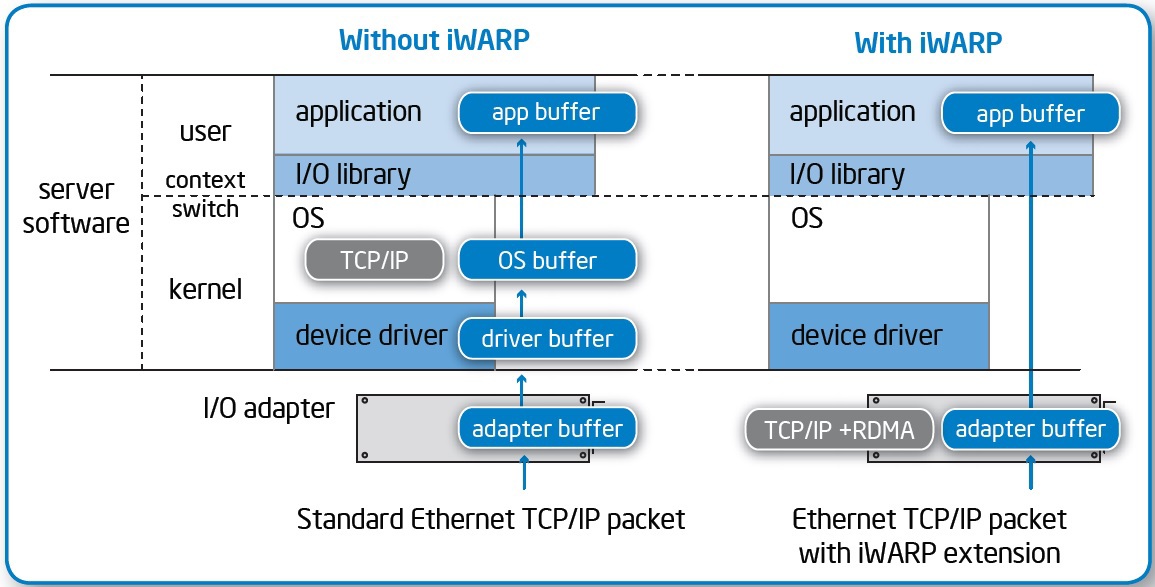

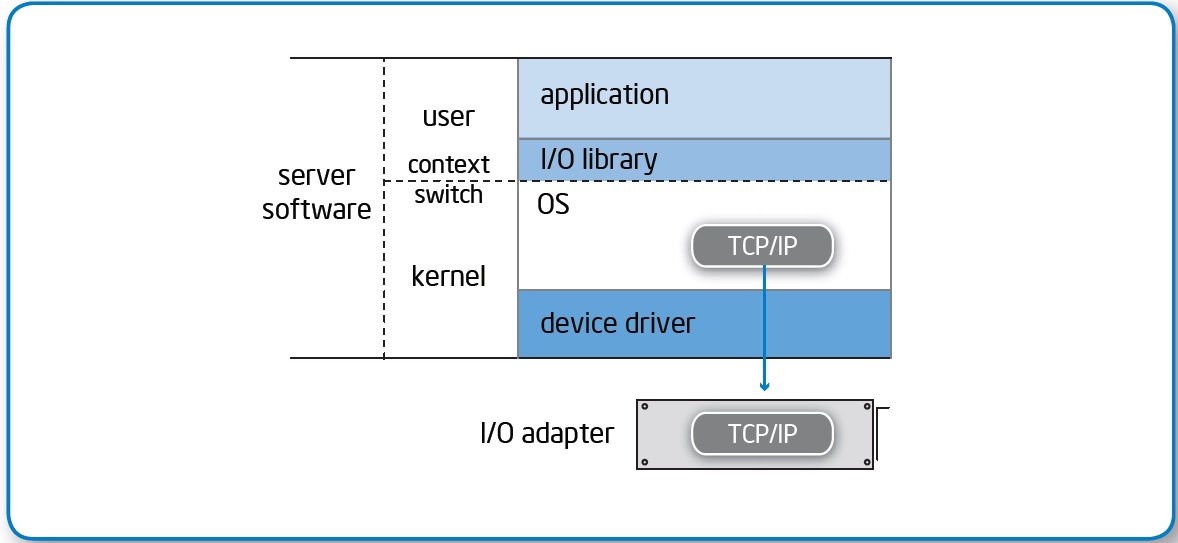

The essence of the technology:

- Data is delivered directly to user space, without switching the application context between kernel and user mode.

- Data from the adapter is placed directly into application memory, without intermediate buffers.

- Network controllers handle the transport layer without involving the processor.

One of the consequences is a significant reduction in access latency.

There are two competing standards, the Internet Wide Area RDMA Protocol (iWARP) and RDMA over Converged Ethernet (RoCE). Their rivalry, and their supporters' attempts to sink each other, deserve a separate holy war and a lengthy article of their own. The pictures show iWARP, but the principle is the same.

Mellanox adapters support RDMA over Converged Ethernet (RoCE); we use OFED-3.5.2.

The result: great numbers. With jumbo frames, latency is 1.9 μs and bandwidth is 4613.88 MB/s (92.2% of the peak!). With the default MTU, bandwidth is lower, about 4300 MB/s.

mpirun -n 2 -debug-mpi 4 -host host1,host2 --mca btl openib,self --mca btl_openib_cpc_include rdmacm IMB-MPI1

In the PingPong test, latency can be brought down to 1.4 µs by placing the processes on CPUs “close” to the network adapter. How much practical value this has in real life is another question. Below is a small Ethernet vs InfiniBand comparison table. In principle, this “trick” works for any interconnect, so for some fabrics the table shows a range of values.

| | 1G Ethernet (TCP) | 40G Ethernet (TCP) | 40G Ethernet (RDMA) | InfiniBand QDR | InfiniBand FDR |
|---|---|---|---|---|---|
| Latency, µs | 24.5 | 7.8 | 1.4-1.9 | 1.5 | 0.86-1.4 |
| Bandwidth, MB/s | 112 | 1126 | 4300-4613 | 3400 | 5300-6000 |

The 40G and FDR figures vary with how close the cores running the processes are to the cores serving the network adapter. For some reason, this effect was barely noticeable on QDR.
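On Linux, one way to find the cores “close” to the adapter is the `local_cpulist` attribute that the kernel exposes for PCI devices, reachable through the network interface. The sketch below assumes the 40G port is `eth4`, as in the commands above. The resulting list can be handed to `taskset -c`, `numactl`, or the MPI library's own process-binding options; those options are not shown because they differ between implementations.

```python
from pathlib import Path
from typing import List

def parse_cpulist(text: str) -> List[int]:
    """Expand a kernel cpulist string such as '0-9,20-29' into CPU numbers."""
    cpus: List[int] = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus

def nic_local_cpus(ifname: str, sysfs_root: str = "/sys") -> List[int]:
    """CPUs the kernel reports as local to the PCI device behind ifname."""
    path = Path(sysfs_root, "class/net", ifname, "device/local_cpulist")
    return parse_cpulist(path.read_text())

# eth4 is the 40G port in the commands above; adjust for your system.
if Path("/sys/class/net/eth4/device/local_cpulist").exists():
    print("CPUs local to eth4:", nic_local_cpus("eth4"))
```

Within Python itself, `os.sched_setaffinity(0, cpus)` would pin the calling process to that list on Linux.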

40G Ethernet significantly outperformed IB QDR in bandwidth but, unsurprisingly, did not catch up with IB FDR in absolute numbers. In efficiency, however, 40G Ethernet leads.
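The efficiency claim can be checked roughly by dividing the measured bandwidth by each link's peak data rate. The sketch below makes these assumptions:

- 1G and 40G Ethernet run at their nominal data rates.
- QDR InfiniBand runs at 32 Gbit/s (4 lanes at 10 Gbaud with 8b/10b encoding).
- FDR runs at about 54.5 Gbit/s (4 lanes at 14.0625 Gbaud with 64b/66b encoding).
- A megabyte is 10^6 bytes.
- The best value from the table above is used for each link.

PCIe limits are ignored.

```python
# Peak data rates in Gbit/s after line encoding; these are assumptions, see above.
PEAK_GBIT = {
    "1G Ethernet (TCP)": 1.0,
    "40G Ethernet (TCP)": 40.0,
    "40G Ethernet (RDMA)": 40.0,
    "InfiniBand QDR": 4 * 10.0 * 8 / 10,      # 4 lanes, 10 Gbaud, 8b/10b
    "InfiniBand FDR": 4 * 14.0625 * 64 / 66,  # 4 lanes, 14.0625 Gbaud, 64b/66b
}

# Best measured bandwidth from the table, MB/s.
MEASURED_MB_S = {
    "1G Ethernet (TCP)": 112,
    "40G Ethernet (TCP)": 1126,
    "40G Ethernet (RDMA)": 4613.88,
    "InfiniBand QDR": 3400,
    "InfiniBand FDR": 6000,
}

def efficiency(link: str) -> float:
    """Measured bandwidth as a fraction of the link's peak data rate."""
    peak_mb_s = PEAK_GBIT[link] * 1e9 / 8 / 1e6
    return MEASURED_MB_S[link] / peak_mb_s

for link in MEASURED_MB_S:
    print(f"{link:22s} {efficiency(link):6.1%}")
```

Under these assumptions, 40G Ethernet with RDMA reaches about 92% of its peak, compared with about 88% for FDR and 85% for QDR.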

What follows from this?

The triumph of converged Ethernet technology!

It is no accident that Microsoft is actively promoting SMB Direct, which relies on RDMA, and that RDMA support has been built into NFS as well.

For block access, there is iSCSI offload on network controllers and the iSCSI Extensions for RDMA (iSER) protocol; aesthetes can try FCoE :-)

When using such switches:

You can build many interesting high-performance solutions.

For example, a software-defined storage system such as the FS200 G3 with a 40G interface, serving a server farm with 10G adapters.

With this approach there is no need to build a dedicated data network. This saves considerably both on a second set of switches and on deployment time, since there are half as many cables to lay and connect.

Summary:

- On Ethernet, you can build a high-performance network with ultra-low latency.

- The active use of modern controllers with RDMA support significantly improves the performance of the solution.

- The Intel switching chip, with its 400 ns cut-through latency, helps achieve excellent results :-)
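To put the 400 ns figure in perspective, compare the two forwarding modes. A store-and-forward switch must receive a whole frame before forwarding it, which adds at least that frame's serialization time per hop. A cut-through switch starts forwarding as soon as the header has arrived. The quick calculation below gives serialization time at 40 Gbit/s, ignoring preamble and inter-frame gap. Frame sizes include the 18 bytes of Ethernet header and FCS.

```python
def serialization_ns(frame_bytes: int, link_gbit_s: float) -> float:
    """Time to clock frame_bytes onto a link of the given rate, in nanoseconds."""
    return frame_bytes * 8 / link_gbit_s  # bits / (Gbit/s) = ns

for size in (64, 1518, 9018):
    print(f"{size:5d}-byte frame at 40 Gbit/s: {serialization_ns(size, 40):7.1f} ns")
```

For a 9000-byte jumbo frame, the store-and-forward penalty alone is about 1.8 µs per hop. That is comparable to the whole 1.9 µs RDMA latency measured above. For the tiny frames of a 4-byte latency test, the difference is only about 13 ns.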

Source: https://habr.com/ru/post/213911/
