Elasticsearch in enterprise projects

In this article I will share our experience of using Elasticsearch in 2GIS internal products, in particular in our in-house call-center system. I will also describe the problems this search engine helped us solve.

In the depths of 2GIS there is an InfoRussia project, with the help of which operators call and verify the cards of organizations. The database stores 4 million cards with data about organizations in Russia, the total size of which is more than 150 GB. The data is stored in a relational database, partially decomposed into tables, partially in xml-fields.

The list of problems and tasks to be solved:

1. Search by a large number of criteria.

2. Add information to the index without full reindexing.

3. Unload data without locking tables.

4. Reduce the load on the base, remove large join on the tables.

5. Support multi-language search without major investment in development.

')

After analyzing the available solutions, we chose Elastic, as it satisfied most requirements.

Based on analytics, the requirements were as follows.

The main candidates for the role of the search engine - ElasticSearch, Solr and Sphinx.

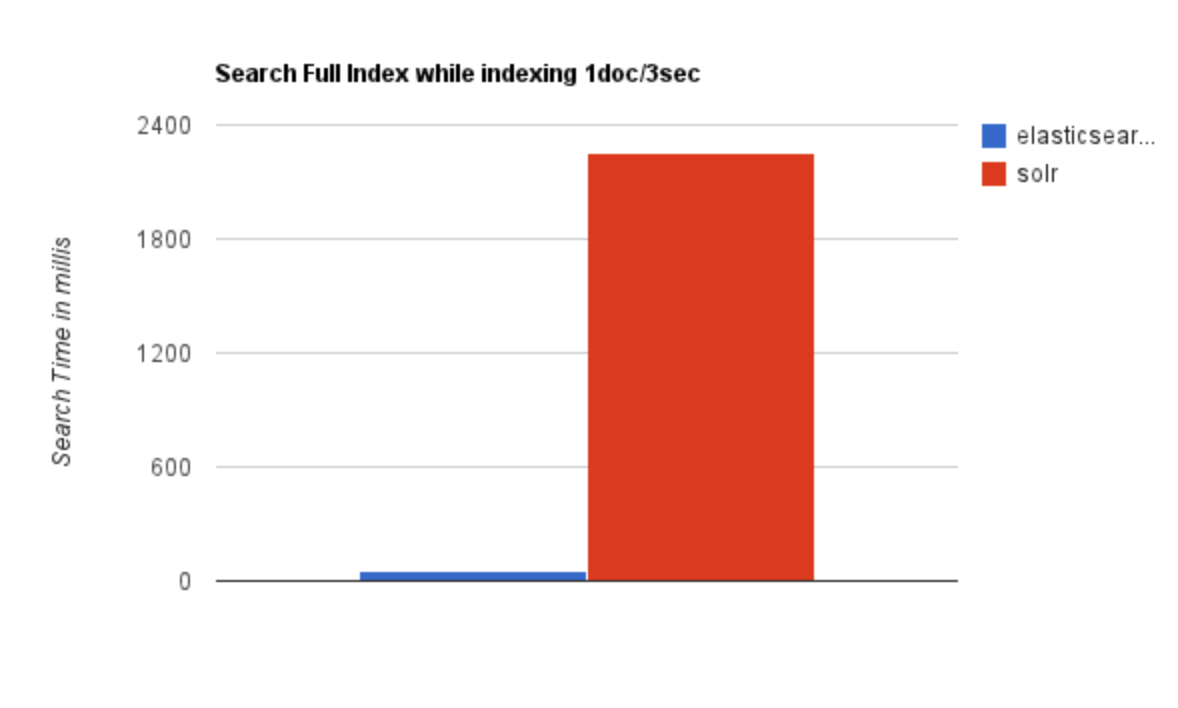

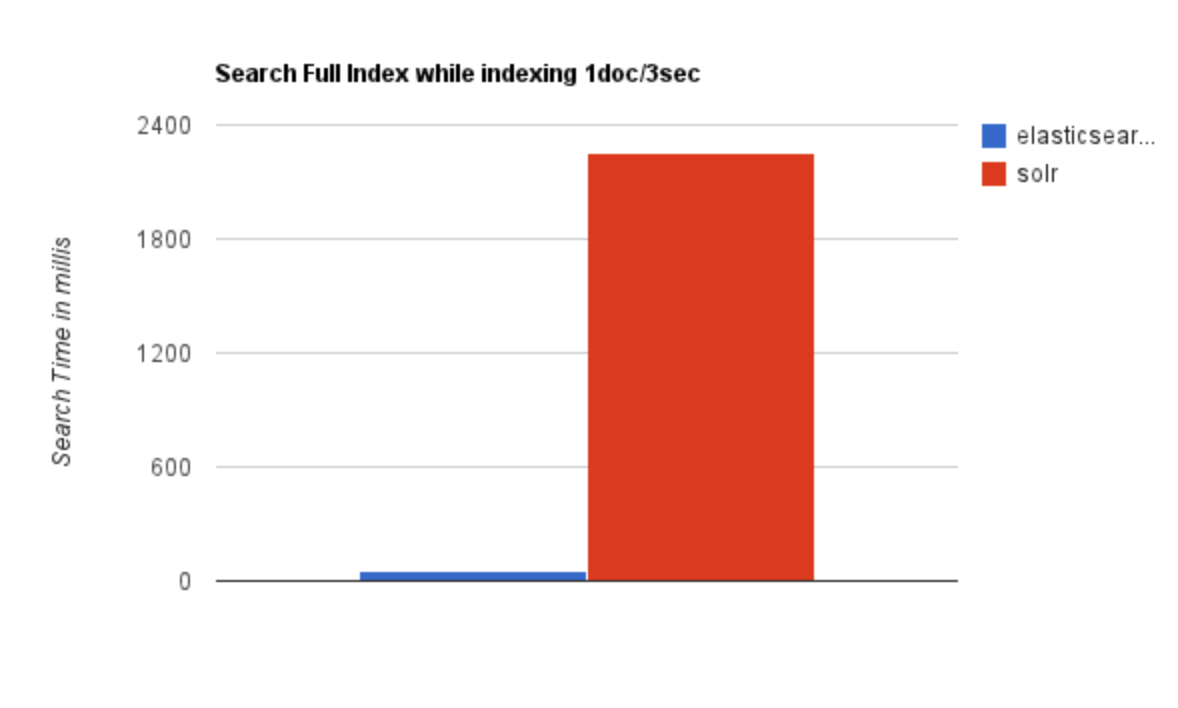

Solr did not fit because with online indexing, the search speed drops dramatically (you can learn more from the article ). The picture below illustrates this fact. In addition, you can read the article , which examines Solr vs ElasticSearch in detail.

The next candidate we considered is Sphinx. ElasticSearch won by supporting sharding, replication, the ability to make complex queries and pull out the original data from the search.

The first prototype, which was made to search on the basis of ES, turned out to be so good that it immediately moved into the combat application and still lives there.

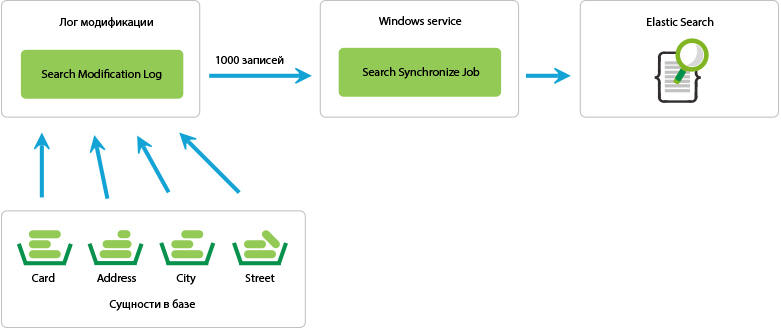

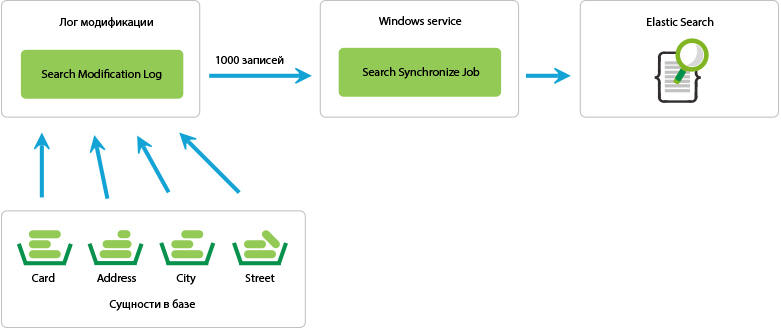

When the project was just beginning, there was no River mechanism in ES yet, so we wrote our job to re-index the database.

The service takes the data about the modified entities and adds them to the ES index until it processes the entire queue. For quick loading, you can apply the following optimizations:

1. Use bulk operations.

2. Set the refresh_interval parameter to -1.

This parameter is responsible for the internal re-indexing time in ES. After all data is loaded into the search, you need to return the default value. When using this method, everything that we load will not be analyzed and, accordingly, will not be searchable until internal reindexing occurs.

Search in our project is indexed online; we index 100 cards at a time.

After the data is in the index, we can search for them.

Tasks that require a quick search on various parameters, we solve using Elastic Search.

For example, having 4 million cards in the database, you need to browse all the phone cards and find the same phone (in our data scheme, phones are stored inside the xml field). Such a request to MS SQL database takes up to 10-15 minutes. Elastic Search does the job for a few milliseconds.

So, the main cases that we solved using Elastic Search:

When entering a new card in the building, the operator sees what other organizations exist in this building, and can verify information on organizations that are nearby.

It also happens that several organizations can be checked by one phone, so we show which other cards have the same contact.

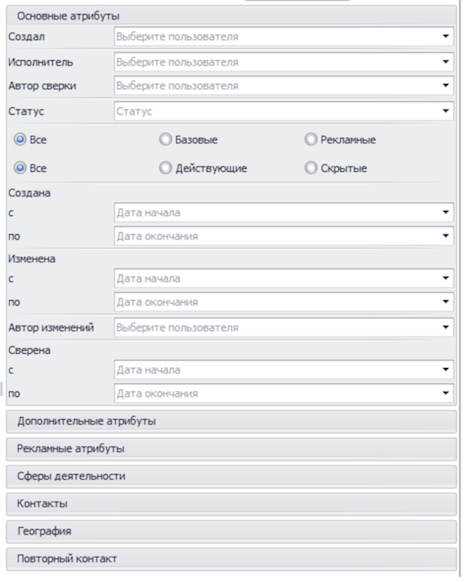

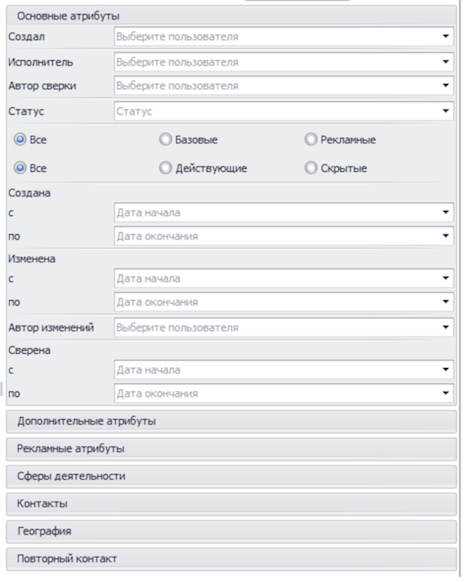

In addition, the application has a search form that allows you to search by a huge number of criteria. This form is used for planning the reconciliation of organizations, quality control, etc.

Each tab has another 4–5 parameters for a query to the search engine.

Building indexes in the relational database for so many fields is quite wasteful, so Elastic helped us with this problem.

One of the business cases of the application is the support for uploading data to Excel. Uploads are used by various specialists to analyze the quality of the collected data, to plan the work of information gathering specialists.

We also use ES to upload data from an Excel search. This is very convenient, since Elastic supports a special mode for selecting data - Scroll.

Scroll mode allows you to "fix" the results of a search query and receive these results in small portions.

This mode has a number of limitations. For example, you cannot sort the data (in fact, you can, but with a drop in performance and memory consumption).

Our tests have shown that we can upload the entire 2GIS database to the Excel file in 24 hours.

When organizing unloadings of such a large volume, the availability of all the necessary data in the storage is of great importance. Thus, we use Elastic as a NoSQL repository, in which data needed only for unloading is stored in non-indexable fields.

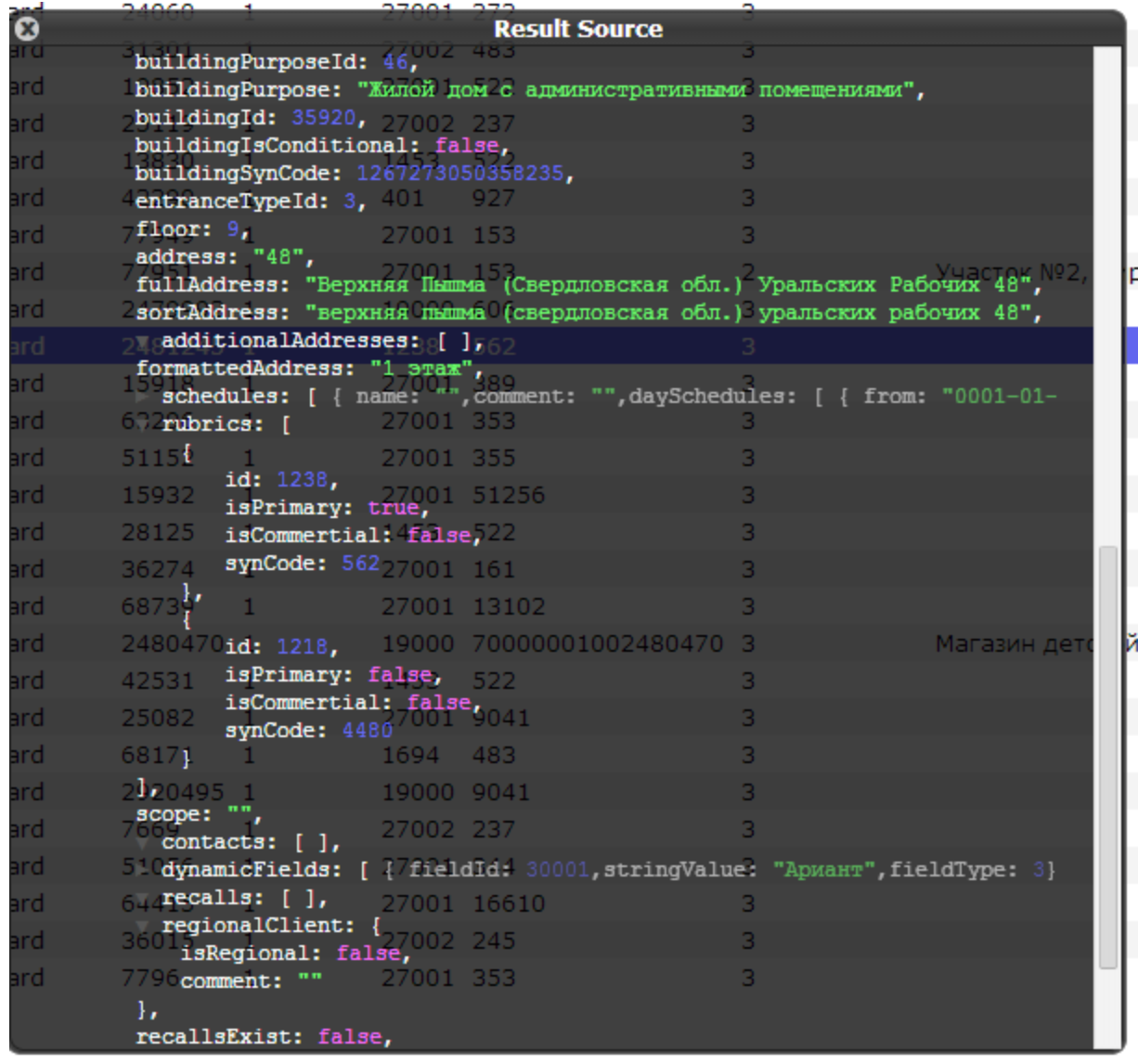

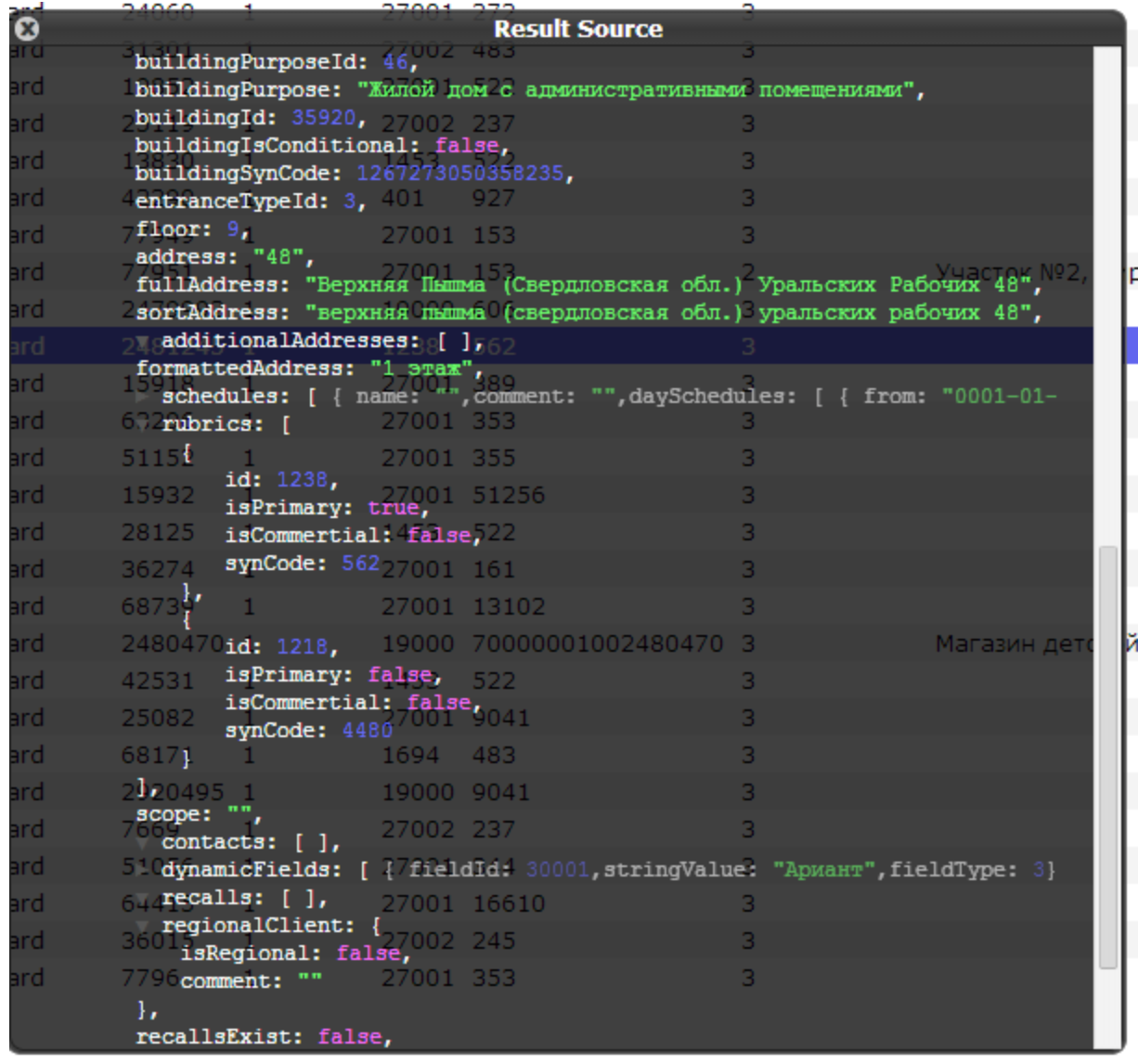

The screenshot shows that the index contains denormalized data, we store the id of the city and its name along with the card.

In addition, you can make nested entities, for example, the time of work and rubrics in which the organization is located.

Must mark this item separately. Not long ago, 2GIS launched several international projects. To support Prague , Limassol , Padua we did not need to refine the search queries. We simply loaded the cards into the index and the search itself earned.

Recently, we have slightly refined queries to support search with a diacritic, but, in fact, this is a built-in Elastic function.

Elastic supports both sharding and the installation of several nodes in a cluster. Now we have a cluster of two nodes that serve 300 people online.

For 2 years of operation, problems with the search engine occurred only because of the fall of virtual machines or other problems with the infrastructure. Like any search engine, Elastic loves good disks and RAM, so it is recommended to pay special attention to these parameters during load testing.

Elastic Search fully meets our requirements for the search engine and allowed us to implement an important business feature as soon as possible. Reliability, speed and ease of use - these are the components whose combination allowed us to make a choice in his direction. Of course, there are other solutions on the market, a review of which is beyond the scope of this article. The purpose of the article was to show what tasks in business applications can be closed using the free Elastic Search.

Considering our positive experience with Elastic, other internal teams have started using this search engine in their projects.

Inside 2GIS there is a project called InfoRussia, which operators use to call organizations and verify their cards. The database holds 4 million cards describing organizations across Russia, more than 150 GB in total. The data lives in a relational database, partly normalized into tables and partly stored in XML fields.

The list of problems and tasks to be solved:

1. Search by a large number of criteria.

2. Add information to the index without full reindexing.

3. Export data without locking tables.

4. Reduce the load on the database and get rid of large joins across tables.

5. Support multilingual search without a major investment in development.


After analyzing the available solutions, we chose Elasticsearch because it satisfied most of our requirements.

Our analysis produced the following requirements.

Functional requirements:

- Search by criteria (exact match by single value or by list of values).

- Search by range (number, date).

- Full-text search (morphology, wildcard patterns).

- Boolean search (combining search criteria by AND / OR).

Non-functional requirements:

- Reliability (support for distributed indexes).

- Scalability (the ability to distribute to multiple servers and support all the necessary infrastructure "out of the box").

- Real-time indexing (new data should be indexed within a reasonable time and without an explicit full rebuild of the index).

- Configurability (in particular, adding new fields to the index without a full rebuild, which is needed for indexing additional attributes).

- Ease of use (client libraries for the required platforms and languages, open protocols such as HTTP).

- Performance (the response time achievable with 300 operators online and a data set covering all of Russia).

- Cost of ownership / support.
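To make the functional requirements more concrete, here is a minimal sketch of how such criteria might be combined in a single Elasticsearch `bool` query. The field names (`city_id`, `rubric_ids`, `updated_at`, `name`, `address`) and the helper `build_card_query` are hypothetical and are not taken from the real InfoRussia index. The snippet only builds the request body and does not talk to a cluster.

```python
from typing import Any, Dict, List


def build_card_query(city_id: int, rubric_ids: List[int],
                     updated_from: str, updated_to: str,
                     text: str) -> Dict[str, Any]:
    """Build a search body mixing exact, list, range and full-text criteria."""
    return {
        "query": {
            "bool": {
                # "filter" clauses are combined with AND and are not scored.
                "filter": [
                    # Exact match by a single value.
                    {"term": {"city_id": city_id}},
                    # Exact match by any value from a list.
                    {"terms": {"rubric_ids": rubric_ids}},
                    # Range criterion (works for numbers and dates).
                    {"range": {"updated_at": {"gte": updated_from,
                                              "lte": updated_to}}},
                ],
                # "should" clauses are combined with OR.
                "should": [
                    {"match": {"name": text}},
                    {"match": {"address": text}},
                ],
                # Require at least one of the OR clauses to match.
                "minimum_should_match": 1,
            }
        }
    }


query = build_card_query(1, [10, 20], "2013-01-01", "2013-12-31", "cafe")
```

The resulting dictionary can be serialized to JSON and sent as the body of a search request.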

The main candidates for the search engine were Elasticsearch, Solr and Sphinx.

Solr did not fit because its search speed drops sharply during online indexing (you can learn more from the article). The picture below illustrates this. In addition, you can read the article that compares Solr and Elasticsearch in detail.

The next candidate we considered was Sphinx. Elasticsearch won thanks to its support for sharding and replication, its ability to run complex queries, and the fact that the original documents can be retrieved from the search index.

Search

The first search prototype built on ES turned out so well that it went straight into the production application and is still running there.

When the project started, ES did not yet have the River mechanism, so we wrote our own job to reindex the database.

The service picks up modified entities and adds them to the ES index until the whole queue has been processed. To speed up loading, you can apply the following optimizations:

1. Use bulk operations.

2. Set the refresh_interval parameter to -1.

This parameter controls how often ES refreshes the index, that is, how often newly indexed documents become visible to search. Once all the data has been loaded, restore the default value. With this approach, nothing we load is searchable until the next refresh occurs.

In our project the index is updated online, 100 cards at a time.
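The two optimizations above can be sketched as follows. This is only an illustration under stated assumptions: the index name `cards`, the `id` field and the helper names are hypothetical, and the functions only build request bodies for the standard `PUT /<index>/_settings` and `POST /_bulk` REST endpoints without sending them.

```python
import json
from typing import Any, Dict, Iterable, Iterator, List

BATCH_SIZE = 100  # the article indexes 100 cards at a time


def refresh_settings(interval: str) -> Dict[str, Any]:
    """Body for PUT /<index>/_settings; "-1" disables periodic refresh."""
    return {"index": {"refresh_interval": interval}}


def batches(cards: Iterable[Dict[str, Any]],
            size: int = BATCH_SIZE) -> Iterator[List[Dict[str, Any]]]:
    """Split the queue of modified cards into fixed-size batches."""
    batch: List[Dict[str, Any]] = []
    for card in cards:
        batch.append(card)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def bulk_body(index: str, cards: List[Dict[str, Any]]) -> str:
    """NDJSON body for POST /_bulk: an action line, then the document."""
    lines = []
    for card in cards:
        action = {"index": {"_index": index, "_id": str(card["id"])}}
        lines.append(json.dumps(action))
        lines.append(json.dumps(card, ensure_ascii=False))
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


queue = [{"id": i, "name": "card %d" % i} for i in range(250)]
bodies = [bulk_body("cards", batch) for batch in batches(queue)]
```

A full load would send `refresh_settings("-1")` first, then each bulk body, and finally `refresh_settings("1s")` to restore the default one-second refresh interval.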

Once the data is in the index, we can search it.

We use Elasticsearch for tasks that need fast search across many different parameters.

For example, with 4 million cards in the database, you need to scan the phone numbers of every card to find those with the same phone (in our data schema, phones are stored inside an XML field). In MS SQL such a query takes 10-15 minutes. Elasticsearch does the job in a few milliseconds.
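A lookup like this could be expressed roughly as shown below. The sketch assumes that each card document has a hypothetical `phones` field holding digits-only values, and that phones are normalized the same way at index time. Neither the field name nor the normalization rule comes from the real project.

```python
import re
from typing import Any, Dict


def normalize_phone(raw: str) -> str:
    """Keep digits only so differently formatted numbers compare equal."""
    return re.sub(r"\D", "", raw)


def same_phone_query(raw_phone: str, current_card_id: str) -> Dict[str, Any]:
    """Find other cards that contain the given phone number."""
    return {
        "query": {
            "bool": {
                # Exact match against the normalized phone value.
                "filter": [{"term": {"phones": normalize_phone(raw_phone)}}],
                # Do not return the card that is currently being edited.
                "must_not": [{"ids": {"values": [current_card_id]}}],
            }
        },
        "size": 20,
    }


query = same_phone_query("+7 (383) 123-45-67", "42")
```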

The main use cases we solved with Elasticsearch:

Search for cards that have the same address (in the card editing window)

When adding a new card for a building, the operator sees which other organizations are located in the same building and can verify information about nearby organizations.

Search for cards that have the same phone

Sometimes several organizations can be verified with a single phone call, so we show which other cards share the same contact.

Search for cards in the search form

In addition, the application has a search form that lets you search by a huge number of criteria. The form is used for planning the verification of organizations, for quality control, and so on.

Each tab of the form adds another 4–5 query parameters.

Building relational database indexes for that many fields is wasteful, so Elasticsearch solved this problem for us.

Data export

One of the business cases of the application is exporting data to Excel. Various specialists use these exports to analyze the quality of the collected data and to plan the work of the data collection staff.

We also use ES to export search results to Excel. This is convenient because Elasticsearch has a special mode for retrieving data: Scroll.

Scroll mode lets you "freeze" the results of a search query and fetch them in small portions.

This mode has some limitations. For example, sorting the data is discouraged: it is possible, but it costs performance and memory.

Our tests showed that we can export the entire 2GIS database to an Excel file in 24 hours.

For exports of this size, it matters a great deal that all the required data is available in the store itself. We therefore also use Elasticsearch as a NoSQL store: data needed only for export is kept in non-indexed fields.

The screenshot shows that the index contains denormalized data: we store the city's id and name together with the card.

In addition, you can create nested entities, for example the opening hours and the rubrics the organization belongs to.
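A mapping along these lines might look as follows. All field names are hypothetical, and the syntax assumes a recent Elasticsearch version (typeless mappings, `"index": False` for non-indexed fields). Older versions, including the one we started with, use a different notation.

```python
from typing import Any, Dict

CARD_MAPPING: Dict[str, Any] = {
    "mappings": {
        "properties": {
            "name": {"type": "text"},
            # Denormalized city data stored next to the card.
            "city_id": {"type": "integer"},
            "city_name": {"type": "keyword"},
            # Needed only for export: returned in _source, not searchable.
            "export_comment": {"type": "keyword", "index": False},
            # Nested entities: each entry is matched as its own unit.
            "work_hours": {
                "type": "nested",
                "properties": {
                    "day": {"type": "keyword"},
                    "opens": {"type": "keyword"},
                    "closes": {"type": "keyword"},
                },
            },
            "rubrics": {
                "type": "nested",
                "properties": {
                    "id": {"type": "integer"},
                    "name": {"type": "keyword"},
                },
            },
        }
    }
}

# A document shaped to fit the mapping above (made-up values).
EXAMPLE_CARD: Dict[str, Any] = {
    "name": "Example Cafe",
    "city_id": 1,
    "city_name": "Novosibirsk",
    "export_comment": "verified by phone",
    "work_hours": [{"day": "mon", "opens": "09:00", "closes": "18:00"}],
    "rubrics": [{"id": 10, "name": "Cafes"}],
}
```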

European language support

This point deserves a separate mention. Not long ago, 2GIS launched several international projects. To support Prague, Limassol and Padua, we did not need to change the search queries. We simply loaded the cards into the index, and search just worked.

Recently we slightly adjusted the queries to support searching text with diacritics, but this is essentially a built-in Elasticsearch feature.
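One built-in way to handle diacritics is the `asciifolding` token filter. The sketch below shows index settings with a custom analyzer that uses it. The analyzer name `folded_text` is hypothetical, and we do not claim this is the exact configuration used in the project. The `fold` function is only a rough local approximation of the filter's effect, not the algorithm Elasticsearch uses.

```python
import unicodedata
from typing import Any, Dict

FOLDING_SETTINGS: Dict[str, Any] = {
    "settings": {
        "analysis": {
            "analyzer": {
                "folded_text": {
                    "type": "custom",
                    "tokenizer": "standard",
                    # Lowercase first, then map accented letters to ASCII.
                    "filter": ["lowercase", "asciifolding"],
                }
            }
        }
    }
}


def fold(text: str) -> str:
    """Rough approximation: lowercase and strip combining marks."""
    decomposed = unicodedata.normalize("NFKD", text.lower())
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


folded = fold("Žižkov")
```

With such an analyzer applied at both index and query time, a query typed without diacritics can match a name stored with them.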

Ensuring Reliability and Resiliency

Elasticsearch supports both sharding and clusters of several nodes. We currently run a two-node cluster that serves 300 users online.

In 2 years of operation, the only problems with the search engine were caused by virtual machine crashes or other infrastructure issues. Like any search engine, Elasticsearch loves fast disks and plenty of RAM, so pay special attention to these resources during load testing.

Conclusion

Elasticsearch fully meets our requirements for a search engine and let us deliver an important business feature quickly. Reliability, speed and ease of use are the combination that decided the choice in its favor. Of course, there are other solutions on the market, but reviewing them is beyond the scope of this article. The purpose of the article was to show which tasks in business applications can be solved with the free Elasticsearch.

Given our positive experience with Elasticsearch, other internal teams have started using it in their projects.

Source: https://habr.com/ru/post/213765/
