Smart video player or just gesture recognition

Introduction

This article is about gesture recognition. I believe the topic is very relevant today, because gestures are a more convenient way for a person to give input. On YouTube you can find many videos about recognizing and tracking objects, and there are articles on this topic on Habr too, so I decided to experiment and make something of my own, something useful. I decided to make a video player that can be controlled by gestures, because sometimes I am too lazy to grab the mouse, find the slider and skip a little forward or back, especially when I watch movies in a foreign language (I often have to rewind there).

The article is mostly about how I implemented gesture recognition; I will describe the video player only in general terms.

So what do we want?

We want to be able to give the following commands using gestures:

- Fast forward / rewind

- Pause / continue

- Volume up / down

- Next / previous track

Let's map these actions to gestures, in the same order:

- Left to right / right to left

- Movement from the center up and to the right

- Bottom up / top down

- Movement from the center down-right / from the center down-left

We will watch the gestures through a webcam. My laptop has a built-in 0.3-megapixel camera. It is weak, but with good lighting it can be used for this purpose. I will use an external USB camera, though. We will show gestures with a single-colored stick, because we will separate it from the background by color filtering. An ordinary pencil will do. Of course, this is not the best way to detect an object, but I wanted to focus on recognizing the gesture (the movement), not the object in the image.

Tools

I will use the Qt 5.2 framework for development. To process the video stream from the webcam I will use OpenCV 2.4.6. The GUI will be written entirely in QML, and the recognition block in C++.


Both tools are open source and cross-platform. I developed the player for Linux, but it can be ported to any other platform: you only need to build OpenCV with Qt support for that platform and rebuild the player. I tried to port the player to Windows, but I could not build OpenCV with Qt support there. If anyone tries and succeeds, please share the instructions or the binaries.

Player structure

The figure below shows the structure of the player. The player runs in two threads. The main thread hosts the GUI and the video player. The recognition block runs in a separate thread so that it does not freeze the interface or the video playback. I wrote the interface in QML, the player logic in JS, and the recognition block in C++ (for obvious reasons). The player "communicates" with the recognition block through signals and slots. To make it easier to split the application into two threads, I wrote a wrapper around the recognition class. The wrapper itself lives in the main thread (that is, not as shown in the figure). It creates an instance of the recognition class and moves it to a new, additional thread. That is all about the player itself; from here on I will talk about recognition and show code.

Recognition

To recognize a gesture, we will collect frames and process them with methods from probability theory. We capture twenty frames per second (the webcam does not allow more) and analyze them in batches of ten.
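To make the numbers concrete: at 20 frames per second, a batch of ten frames covers half a second of movement. Below is a minimal sketch of such a fixed-size batch in plain C++ without Qt. The `PointWindow` class and its interface are hypothetical names used for illustration and are not taken from the player's sources.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical helper: collects tracked points and hands them out in
// batches of `capacity` frames (the article uses 10 frames at 20 fps).
class PointWindow {
public:
    PointWindow(std::size_t capacity, double fps)
        : m_capacity(capacity), m_fps(fps) {}

    // Adds one point. Returns true once the batch is full and ready
    // to be analyzed.
    bool push(double x, double y) {
        m_points.emplace_back(x, y);
        return m_points.size() >= m_capacity;
    }

    // Returns the collected points and starts a new, empty batch.
    std::vector<std::pair<double, double>> take() {
        std::vector<std::pair<double, double>> out;
        out.swap(m_points);
        return out;
    }

    // Drops a partial batch, e.g. when the stick leaves the frame.
    void reset() { m_points.clear(); }

    // Time covered by one full batch, in milliseconds.
    double durationMs() const {
        return 1000.0 / m_fps * static_cast<double>(m_capacity);
    }

private:
    std::size_t m_capacity;
    double m_fps;
    std::vector<std::pair<double, double>> m_points;
};
```

In a recognition loop, `push` would be called once per frame with the tracked point; when it returns true, `take()` yields the points for analysis. With the article's numbers, `PointWindow(10, 20.0).durationMs()` is 500.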

Algorithm:

- get a frame from the webcam and send it to the filter;

- the filter returns a binary image in which only the pencil is visible, as a white rectangle on a black background;

- the binary image is sent to the analyzer, which calculates the end points of the pencil; the top end point is appended to an array;

- once the array reaches 10 elements, it is sent to the probabilistic analyzer, which analyzes the sequence of number pairs using the least squares method;

- if the analysis recognizes a command, the command is sent to the video player.
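The least-squares step from the list above can be tried out without Qt or OpenCV. The sketch below fits a line y = a*x + b to a series of points and reports how far the points stray from it. `fitLine` and `LineFit` are illustrative names, not part of the player. Unlike the analyzer shown later, this sketch reports a perfectly vertical stroke (all x equal, zero denominator) as invalid instead of relying on a steep slope.

```cpp
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Result of a least-squares line fit y = a * x + b.
struct LineFit {
    bool valid;       // false if fewer than 2 points or all x are equal
    double a;         // slope
    double b;         // intercept
    double residual;  // sum of |fitted y - measured y| over all points
};

LineFit fitLine(const std::vector<std::pair<double, double>>& pts) {
    LineFit fit{false, 0.0, 0.0, 0.0};
    if (pts.size() < 2) return fit;

    // Accumulate the sums used by the closed-form solution.
    const double n = static_cast<double>(pts.size());
    double sx = 0.0, sy = 0.0, sxx = 0.0, sxy = 0.0;
    for (const auto& p : pts) {
        sx += p.first;
        sy += p.second;
        sxx += p.first * p.first;
        sxy += p.first * p.second;
    }

    // The denominator is zero when all x values coincide.
    const double denom = n * sxx - sx * sx;
    if (std::fabs(denom) < 1e-9) return fit;

    fit.a = (n * sxy - sx * sy) / denom;
    fit.b = (sxx * sy - sx * sxy) / denom;

    // Total absolute deviation of the points from the fitted line.
    for (const auto& p : pts) {
        fit.residual += std::fabs(fit.a * p.first + fit.b - p.second);
    }
    fit.valid = true;
    return fit;
}
```

For points that lie exactly on y = 2x + 1 the fit returns a slope of 2, an intercept of 1 and a zero residual. A left-to-right stroke at constant height gives a slope of 0, which is what the horizontal-gesture check looks for.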

I will show only the three main recognition functions.

The following function monitors the camera if gesture control is enabled:

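    void MotionDetector::observCam()
    {
        m_cap >> m_frame;     // grab the next frame from the camera
        filterIm();           // filter the frame by color into a binary image
        detectStick();        // find the stick and track its movement
        drawStick(m_binIm);   // draw the detected stick
        showIms();            // show the images
    }

This is what the recognition function looks like: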

    void MotionDetector::detectStick()
    {
        m_contours.clear();
        cv::findContours(m_binIm.clone(), m_contours,
                         cv::RETR_EXTERNAL, cv::CHAIN_APPROX_SIMPLE);
        if (m_contours.empty())
            return;

        bool stickFound = false;
        for (size_t i = 0; i < m_contours.size(); ++i) {
            // skip contours that are too small to be the stick
            if (cv::contourArea(m_contours[i]) < m_arAtThreshold)
                continue;

            // fit a rotated rectangle around the contour
            m_stickRect = cv::minAreaRect(m_contours[i]);
            cv::Point2f vertices[4];
            cv::Point top;
            cv::Point bottom;
            m_stickRect.points(vertices);

            // the ends of the stick are the midpoints of the short sides
            if (lineLength(vertices[0], vertices[1]) > lineLength(vertices[1], vertices[2])) {
                top = cv::Point((vertices[1].x + vertices[2].x) / 2.,
                                (vertices[1].y + vertices[2].y) / 2.);
                bottom = cv::Point((vertices[0].x + vertices[3].x) / 2.,
                                   (vertices[0].y + vertices[3].y) / 2.);
            } else {
                top = cv::Point((vertices[0].x + vertices[1].x) / 2.,
                                (vertices[0].y + vertices[1].y) / 2.);
                bottom = cv::Point((vertices[2].x + vertices[3].x) / 2.,
                                   (vertices[2].y + vertices[3].y) / 2.);
            }
            // in image coordinates the top end has the smaller y
            if (top.y > bottom.y)
                qSwap(top, bottom);

            m_stick.setTop(top);
            m_stick.setBottom(bottom);
            stickFound = true;
        }

        // update the state machine
        switch (m_state) {
        case ST_OBSERVING:
            if (!stickFound) {
                m_state = ST_WAITING;
                m_pointSeries.clear();
                break;
            }
            m_pointSeries.append(QPair<double, double>(m_stick.top().x, m_stick.top().y));
            if (m_pointSeries.size() >= 10) {
                m_actionPack = m_pSeriesAnaliser.analize(m_pointSeries);
                if (!m_actionPack.isEmpty()) {
                    emit sendAction(m_actionPack);
                }
                m_pointSeries.clear();
            }
            break;
        case ST_WAITING:
            m_state = ST_OBSERVING;
            break;
        }
    }

You can read about the least squares method here. Now I will show how I implemented it. Below is the probabilistic series analyzer.

    bool SeriesAnaliser::linerCheck(const QVector<QPair<double, double> > &source)
    {
        int count = source.size();

        // split the pairs into two arrays for convenience
        QVector<double> x(count);
        QVector<double> y(count);
        for (int i = 0; i < count; ++i) {
            x[i] = source[i].first;
            y[i] = source[i].second;
        }

        double zX, zY, zX2, zXY;    // z stands for "sum": zX is the sum of x, and so on
        QVector<double> yT(count);  // y values predicted by the fitted line

        // accumulate the sums
        zX = 0;
        for (int i = 0; i < count; ++i)
            zX += x[i];
        zY = 0;
        for (int i = 0; i < count; ++i)
            zY += y[i];
        zX2 = 0;
        for (int i = 0; i < count; ++i)
            zX2 += x[i] * x[i];
        zXY = 0;
        for (int i = 0; i < count; ++i)
            zXY += x[i] * y[i];

        // coefficients of the line y = a * x + b
        double a = (count * zXY - zX * zY) / (count * zX2 - zX * zX);
        double b = (zX2 * zY - zX * zXY) / (count * zX2 - zX * zX);

        // predicted y values and the total deviation from the line
        for (int i = 0; i < count; ++i)
            yT[i] = x[i] * a + b;
        double dif = 0;
        for (int i = 0; i < count; ++i) {
            dif += qAbs(yT[i] - y[i]);
        }
        // an exactly zero slope is replaced with a steep one
        if (a == 0)
            a = 10;

    #ifdef QT_DEBUG
        qDebug() << QString("%1x+%2").arg(a).arg(b);
        qDebug() << dif;
    #endif

        // |a| > vBorder: the movement is vertical;
        // dif > epsilan: the points are too scattered to form a line;
        // oblMovMin < |a| < oblMovMax: the movement is diagonal;
        // speed > 0.6: the movement is too fast;
        // |a| < horMov: the movement is horizontal.
        int vBorder = 3;
        int epsilan = 50;
        double oblMovMin = 0.5;
        double oblMovMax = 1.5;
        double horMov = 0.2;

        // not vertical and too scattered: not a line
        if (qAbs(a) < vBorder && dif > epsilan)
            return false;

        // movement speed
        double msInFrame = 1000.0 / s_fps;
        double dTime = msInFrame * count;  // ms
        double dDistance;                  // px
        double speed = 0;                  // px per ms
        if (qAbs(a) < vBorder)
            dDistance = x[count - 1] - x[0];  // measure along x
        else
            dDistance = y[count - 1] - y[0];  // vertical: measure along y
        speed = dDistance / dTime;            // px per ms

        // the stick barely moved: ignore
        if (qSqrt(qPow(x[0] - x[count - 1], 2) + qPow(y[0] - y[count - 1], 2)) < 15) {
            return false;
        }

        // too fast: ignore
        if (speed > 0.6)
            return false;

        if (qAbs(a) > oblMovMin && qAbs(a) < oblMovMax) {
            // diagonal movement
            if (a < 0) {
                s_actionPack = "next";
            } else {
                if (speed < 0)
                    s_actionPack = "play";
                else
                    s_actionPack = "previous";
            }
        } else if (qAbs(a) < horMov) {
            // horizontal movement
            s_actionPack = QString("rewind %1").arg(speed * -30000);
        } else if (qAbs(a) > vBorder) {
            // vertical movement
            s_actionPack = QString("volume %1").arg(speed * -1);
        } else
            return false;

        return true;
    }

The following code snippet handles the recognized action (on the video player side):

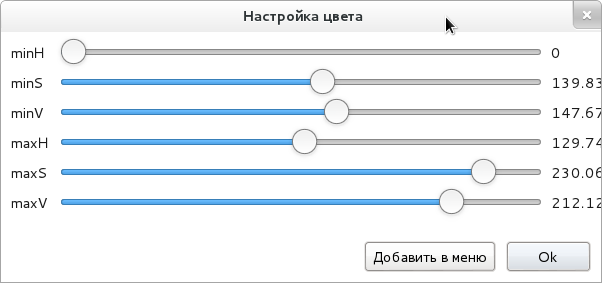

    function executeComand(comand) {
        // a command is a name optionally followed by a numeric argument
        var comandList = comand.split(' ');
        console.log(comand);
        switch (comandList[0]) {
        case "next":
            nextMedia();
            break;
        case "previous":
            previousMedia();
            break;
        case "play":
            playMedia();
            break;
        case "rewind":
            mediaPlayer.seek(mediaPlayer.position + Number(comandList[1]));
            break;
        case "volume":
            mediaPlayer.volume += Number(comandList[1]);
            break;
        default:
            break;
        }
    }

Oh, I almost forgot: we pick the pencil out of the background by its color, and that color has to be configured somewhere. I solved the problem as follows:

That is, we can tune in any color under any lighting and save this color in the menu, so that we can use it right away without configuring it again.
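The article does not show the color filter itself, so the following is only a sketch of the general idea under my own assumptions: convert each pixel to HSV and keep it if its hue, saturation and brightness fall inside a saved range. All names (`Rgb`, `Hsv`, `ColorRange`, `filterByColor`) and the sample range are hypothetical. In OpenCV the same effect is usually achieved with `cv::cvtColor` followed by `cv::inRange`.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>
#include <vector>

struct Rgb { std::uint8_t r, g, b; };
struct Hsv { double h, s, v; };  // h in [0, 360), s and v in [0, 1]

// Standard RGB to HSV conversion.
Hsv toHsv(Rgb c) {
    const double r = c.r / 255.0, g = c.g / 255.0, b = c.b / 255.0;
    const double mx = std::max({r, g, b});
    const double mn = std::min({r, g, b});
    const double d = mx - mn;
    double h = 0.0;
    if (d > 0.0) {
        if (mx == r)      h = 60.0 * std::fmod((g - b) / d, 6.0);
        else if (mx == g) h = 60.0 * ((b - r) / d + 2.0);
        else              h = 60.0 * ((r - g) / d + 4.0);
        if (h < 0.0) h += 360.0;
    }
    return {h, mx > 0.0 ? d / mx : 0.0, mx};
}

// A saved color setting: hue interval plus minimum saturation and value.
// Assumption: the interval does not wrap around 360 degrees (pure red).
struct ColorRange { double hMin, hMax, sMin, vMin; };

// Returns a binary mask: 255 where the pixel matches the range, else 0.
std::vector<std::uint8_t> filterByColor(const std::vector<Rgb>& pixels,
                                        const ColorRange& range) {
    std::vector<std::uint8_t> mask;
    mask.reserve(pixels.size());
    for (const Rgb& p : pixels) {
        const Hsv c = toHsv(p);
        const bool hit = c.h >= range.hMin && c.h <= range.hMax &&
                         c.s >= range.sMin && c.v >= range.vMin;
        mask.push_back(hit ? 255 : 0);
    }
    return mask;
}
```

With a range such as `ColorRange{15.0, 45.0, 0.5, 0.5}`, an orange pixel (255, 128, 0) passes the filter, while blue and gray pixels are masked out. Thresholding saturation and brightness separately from hue is what makes such a filter tolerant of lighting changes.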

Here are the results I got:

Conclusion

The video player can now recognize very simple gestures. In my opinion, the most successful and convenient feature of the player is rewinding and fast-forwarding by gesture, and this is also the command that works best and most reliably. Gesture control needs a little setup before you watch a movie, but after that you no longer have to reach for the mouse to skip back a little.

P.S. For anyone interested, here are the sources of SmartVP.

P.P.S. I tried many colors; the best one (the most resistant to fast movements) turned out to be orange.

Source: https://habr.com/ru/post/213417/
