Configuring pgpool-II + PostgreSQL + Streaming replication + Hot standby in AWS

Hello!

I decided to describe the main points of setting up a PostgreSQL Failover (HA) cluster in the IaaS environment from Amazon - AWS.

A lot of articles have already been written about the configuration of this bundle since the release of version 9 with native replication, so I will not dwell on the configuration of PostgreSQL and pgpool itself, everything is relatively standard. The given pieces of configs are unsuitable for mindless copy-paste, in any case, you have to open your configs and edit the necessary parameters. I do not want to encourage the configuration process using the copy-paste method.

Streaming replication - means that the postgres nodes will themselves pull updates from the wizard. No need for additional archive functionality.

Hot standby - allows slave nodes to serve READ requests for load balancing, in contrast to warm standby, in which the slave server does not serve customer requests, but only constantly pulls up to date from the wizard. In turn, replication can be synchronous and asynchronous (sync or async).

In my example, the usual server slab master-slave bundle in it cannot be used synchronous replication, because during synchronous replication, the master, failing to send a replica to the slave, simply does not fulfill the request and will hang waiting for the slave and the whole point in such a scheme will be lost .

The created configuration should not have a single point of failure. At the pgpool level, we will use its native watchdog functionality to be able to track down the fall of one of the nodes and drag and drop the IP through which the client application is connected. At the postgresql level, we use streaming replication + hot standby. In the event that the master crashes, its role will quickly be taken by the slave, the slave in the master will turn the pgpool by creating the file trigger in $ PGDATA. In the case of a slave falling, we will bring it back to life manually, since any automatic manipulations with the databases are not brought to the good and the abnormal situation of the node's fall in any case requires attention. In all described cases of a fall, the client application must continue to function with minimal downtime.

')

Four virtual machines are created in AWS (Amazon Web Services) cloud: pgpool-1 (IP: 10.0.3.11), pgpool-2 (IP: 10.0.3.12), db-1 (IP: 10.0.3.21), db-2 ( IP: 10.0.3.22). Machines are created immediately in the VPC, in order to be able to assign private addresses and they would be saved between instances of reboots. When creating instances, I used the image of ami-8e987ef9 with Ubuntu. But if you have the opportunity to choose any image - take Amazon Linux, why I think you will learn from the text.

1. db-1 - wizard at the start of the bundle

In accordance with our needs, we adjust the values for

checkpoint_segments, max_wal_senders, wal_keep_segments

To start replication, they can be left by default, and then podtyunit, after reading wiki.postgresql.org/wiki/Tuning_Your_PostgreSQL_Server

In pg_hba.conf we set up access to replication, you can use a password, because I have everything in the cloud VPC is spinning and does not have direct access from the outside, I just registered trust:

2. db-2 - slave at the start of the bundle

The behavior of standby for the slave is controlled by the file recovery.conf, which must be in $ PGDATA.

In my case it was the directory /var/lib/postgresql/9.3/main

recovery.conf:

Do not forget about the settings for accessing pg_hba.conf

3. pgpool-1

4. pgpool-2

The config is identical to pgpool-1, the descriptions of the neighboring node are changed from pgpool-2 to pgpool-1

In / etc / hosts on both nodes, set the name binding to ip:

5. Integration of pgpool to work with our databases

From the pgpool-1 and pgpool-2 side, we create a script from the failover_command parameter that runs when the node is dropped (I have an automatic action performed only when the master node is dropped). All that it does is actually checked by the master if the node is dropped or not, and if the master creates a trigger file on the slave that automatically switches the slave to READ-WRITE mode, i.e. makes him a master:

From the db-1 and db-2 side, we install the pgpool scheme for work:

Create a pgpool_remote_start script in $ PGDATA that will start postgresql on the next node [depending on the version of postgresql you use, you may need the second parameter passed by pgpool pointing to the $ PGDATA directory for the node]:

As well as a script from the recovery_1st_stage_command parameter which will synchronize with the current master of new slaves (also lies in $ PGDATA next to pgpool_remote_start):

5. To be able to execute commands related to restarting services and copying data to remote hosts, you need to configure password-free access:

After setting up access, you need to check it on behalf of the user who will run the scripts while pgpool is running, I have this postgres:

From the host pgpool-1 we perform:

And so for all the necessary hosts, we check access and update the known_hosts file for ssh.

At this stage, a bunch of 4 nodes could already be started for normal operation outside the AWS environment.

After launching, the first pgpool that is launched pulls the virtual address from delegate_IP over itself by executing the command from the if_up_cmd parameter (by default there is just ifconfig).

Record in logs:

All these commands, as well as the statuses of the nodes in the pool, are very well described in the pgpool documentation - www.pgpool.net/docs/pgpool-II-3.3.2/doc/pgpool-en.html

If you disable the first pgpool, the second would drag the delegate_ip over to itself with a command from the if_up_cmd parameter.

When the db-1 or db-2 bend falls, the command from the failover_command parameter is executed.

To return the backend to the pool, use the pcp_attach_node and pcp_recovery_node commands.

What happens in AWS? Everything is the same except that the IP address must be previously assigned to the network interface through the “Assign a secondary private address” setting in the Network Intefaces menu. For Amazon Linux about which I wrote earlier, it is possible to automatically assign this address to a running instance and further crawl it between pgpool-1 and pgpool-2 if necessary (I personally did not test amazon linux, it would be very interesting to find out how smooth it all works ). In the case of an image unadapted for AWS, I need to use additional scripts from the ec2-api-tools set .

The latest version of api-tools is better to download from amazon .

To work ec2-api-tools need Java, set - apt-get install default-jre

The unpacked api-tools archive in aws / bin will contain scripts for managing aws through the console.

But to work with amazon api, you must have an authorization key.

The process of obtaining authentication data is described in detail on amazon here - docs.aws.amazon.com/IAM/latest/UserGuide/ManagingCredentials.html and then docs.aws.amazon.com/IAM/latest/UserGuide/ManagingUserCerts.html

On the first link we will learn how to create a user with keys and assign him the necessary group through the IAM menu ( console.aws.amazon.com/iam/home?#users when creating a key in open form is shown to the owner once if you do not have time to write it - it is necessary to generate another). Amazon, when first attempting to create a key, strongly recommends creating an individual user in the IAM menu for this purpose, instead of creating a key under the account of the AWS administrative account.

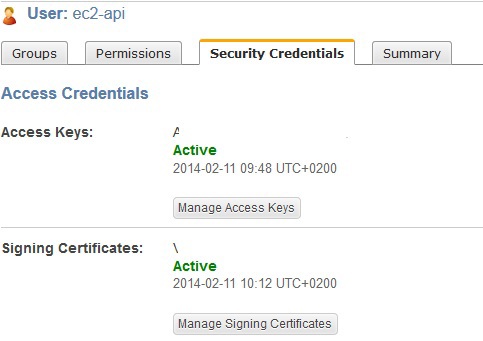

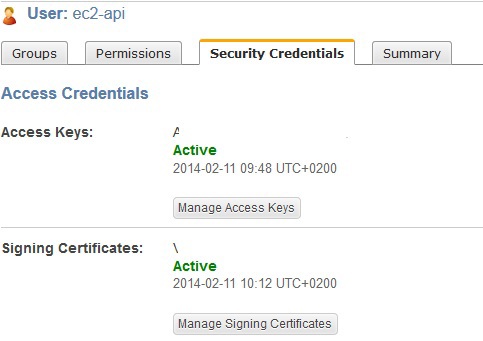

In the second link, we will learn how to create your certificate and register it all in the same IAM AWS menu:

The content of certificate.pem is uploaded to AWS in IAM. Certificates can be managed through the IAM Security Credentials menu:

After all these manipulations, we have:

certificate.pem for EC2_CERT parameter

private-key-in-PCKS8-format.pem for EC2_PRIVATE_KEY, AWS_ACCESS_KEY and AWS_SECRET_KEY.

You can start using ec2-api-tools.

For this, I created an if.sh script that will drag delegate_IP for pgpool between instances. The script as parameters receives the action that must be performed with the interface (up / down) and the desired ip address for the interface. Next, the script calculates the subnet for the entered IP (I use / 24 and I just cut off the last octet, so who doesn’t have the mask / 24, the script will have to be finished). I consider the subnet because On instances, two interfaces are used - the main one and the management, in order to understand which of them needs to hang the secondary ip.

To manage real elastic IP, you can use ec2-associate-address and ec2-unassign-private-ip-addresses.

Actually, these movements had to be performed in order to make friends a pgpool that does not work on an Amazon Linux instance, with AWS.

I decided to describe the main points of setting up a PostgreSQL Failover (HA) cluster in the IaaS environment from Amazon - AWS.

A lot of articles have already been written about the configuration of this bundle since the release of version 9 with native replication, so I will not dwell on the configuration of PostgreSQL and pgpool itself, everything is relatively standard. The given pieces of configs are unsuitable for mindless copy-paste, in any case, you have to open your configs and edit the necessary parameters. I do not want to encourage the configuration process using the copy-paste method.

Terminology:

Streaming replication - means that the postgres nodes will themselves pull updates from the wizard. No need for additional archive functionality.

Hot standby - allows slave nodes to serve READ requests for load balancing, in contrast to warm standby, in which the slave server does not serve customer requests, but only constantly pulls up to date from the wizard. In turn, replication can be synchronous and asynchronous (sync or async).

In my example, the usual server slab master-slave bundle in it cannot be used synchronous replication, because during synchronous replication, the master, failing to send a replica to the slave, simply does not fulfill the request and will hang waiting for the slave and the whole point in such a scheme will be lost .

Input data:

The created configuration should not have a single point of failure. At the pgpool level, we will use its native watchdog functionality to be able to track down the fall of one of the nodes and drag and drop the IP through which the client application is connected. At the postgresql level, we use streaming replication + hot standby. In the event that the master crashes, its role will quickly be taken by the slave, the slave in the master will turn the pgpool by creating the file trigger in $ PGDATA. In the case of a slave falling, we will bring it back to life manually, since any automatic manipulations with the databases are not brought to the good and the abnormal situation of the node's fall in any case requires attention. In all described cases of a fall, the client application must continue to function with minimal downtime.

')

Four virtual machines are created in AWS (Amazon Web Services) cloud: pgpool-1 (IP: 10.0.3.11), pgpool-2 (IP: 10.0.3.12), db-1 (IP: 10.0.3.21), db-2 ( IP: 10.0.3.22). Machines are created immediately in the VPC, in order to be able to assign private addresses and they would be saved between instances of reboots. When creating instances, I used the image of ami-8e987ef9 with Ubuntu. But if you have the opportunity to choose any image - take Amazon Linux, why I think you will learn from the text.

Configuration:

1. db-1 - wizard at the start of the bundle

...

wal_level = hot_standby

# The following parameter is ignored on the master server, but due to the fact that master and slave can change places, we include it in the master config

hot_standby = on

...

In accordance with our needs, we adjust the values for

checkpoint_segments, max_wal_senders, wal_keep_segments

To start replication, they can be left by default, and then podtyunit, after reading wiki.postgresql.org/wiki/Tuning_Your_PostgreSQL_Server

In pg_hba.conf we set up access to replication, you can use a password, because I have everything in the cloud VPC is spinning and does not have direct access from the outside, I just registered trust:

host replication postgres 10.0.3.0/24 trust

2. db-2 - slave at the start of the bundle

...

wal_level = hot_standby

hot_standby = on

...

The behavior of standby for the slave is controlled by the file recovery.conf, which must be in $ PGDATA.

In my case it was the directory /var/lib/postgresql/9.3/main

recovery.conf:

standby_mode = 'on'

primary_conninfo = 'host = 10.0.3.21 port = 5432 user = postgres'

trigger_file = '/var/lib/postgresql/9.3/main/postgresql.trigger'

Do not forget about the settings for accessing pg_hba.conf

3. pgpool-1

pgpool.conf:

...

backend_hostname0 = '10 .0.3.21 '

backend_port0 = 5432

backend_weight0 = 1

backend_data_directory0 = '/var/lib/postgresql/9.3/main'

backend_flag0 = 'ALLOW_TO_FAILOVER'

backend_hostname1 = '10 .0.3.22 '

backend_port1 = 5432

backend_weight1 = 1

backend_data_directory1 = '/var/lib/postgresql/9.3/main'

backend_flag1 = 'ALLOW_TO_FAILOVER'

# Because we have Hot Standby:

load_balance_mode = on

# We will work Streaming replication

master_slave_mode = on

master_slave_sub_mode = 'stream'

sr_check_period = 10

sr_check_user = 'postgres'

sr_check_password = ''

delay_threshold = 100

# It makes sense when slaves> 1

follow_master_command = ''

What to do when the node disappears:

failover_command = '/etc/pgpool2/failover.sh% d% P% H% R'

What to do when the dropped node returns:

failback_command = ''

# Run failover when we cannot connect to the backend

fail_over_on_backend_error = on

search_primary_node_timeout = 10

# What kind of user will we do online recovery

recovery_user = 'postgres'

recovery_password = ''

# At the first stage, pgpool continues to accept connections and requests from clients; the second stage does not.

# The running script should be in $ PGDATA

recovery_1st_stage_command = 'basebackup.sh'

recovery_2nd_stage_command = ''

# How many seconds are waiting for node recovery

recovery_timeout = 90

# We will use watchdog to monitor the status of pgpool

use_watchdog = on

wd_hostname = 'pgpool-1'

wd_port = 9000

wd_authkey = ''

# Virtual address to which the client application will connect:

delegate_IP = '10 .0.3.10 '

# Where will the interface management scripts lie:

ifconfig_path = '/ opt / AWS'

# Execute the command to assign a virtual IP node

if_up_cmd = 'if.sh up $ _IP_ $'

# We execute the command to remove the virtual IP from the node

if_down_cmd = 'if.sh down $ _IP_ $'

#Pgpool performs arping while dragging a virtual interface for an early update of the ARP cache

arping_cmd = ''

How can we check the liveliness of the neighboring pgpool node:

#heartbeat or try to send database requests through it

wd_lifecheck_method = 'heartbeat'

# Interval in seconds between checks:

wd_interval = 4

# Which port helmet:

wd_heartbeat_port = 9694

# Interval between keepalive packages

wd_heartbeat_keepalive = 2

# The time after which we consider the silent node to have disappeared:

wd_heartbeat_deadtime = 15

# Address of the next node:

heartbeat_destination0 = 'pgpool-2'

heartbeat_destination_port0 = 9694

# You can specify on which interface to work heartbeat'u

heartbeat_device0 = ''

# Describe the parameters of another node:

other_pgpool_hostname0 = 'pgpool-2'

other_pgpool_port0 = 9999

other_wd_port0 = 9000

...

4. pgpool-2

The config is identical to pgpool-1, the descriptions of the neighboring node are changed from pgpool-2 to pgpool-1

In / etc / hosts on both nodes, set the name binding to ip:

10.0.3.11 pgpool-1

10.0.3.12 pgpool-2

10.0.3.21 db-1

10.0.3.22 db-2

5. Integration of pgpool to work with our databases

From the pgpool-1 and pgpool-2 side, we create a script from the failover_command parameter that runs when the node is dropped (I have an automatic action performed only when the master node is dropped). All that it does is actually checked by the master if the node is dropped or not, and if the master creates a trigger file on the slave that automatically switches the slave to READ-WRITE mode, i.e. makes him a master:

#!/bin/bash -x FALLING_NODE=$1 # %d OLDPRIMARY_NODE=$2 # %P NEW_PRIMARY=$3 # %H PGDATA=$4 # %R KEY_PATH="/var/lib/postgresql/.ssh/id_rsa" if [ $FALLING_NODE = $OLDPRIMARY_NODE ]; then if [ $UID -eq 0 ] then sudo -u postgres ssh -T -i $KEY_PATH postgres@$NEW_PRIMARY "touch $PGDATA/postgresql.trigger" exit 0; fi ssh -T -i $KEY_PATH postgres@$NEW_PRIMARY "touch $PGDATA/postgresql.trigger" fi; exit 0; From the db-1 and db-2 side, we install the pgpool scheme for work:

sudo -u postgres psql -f /usr/share/postgresql/9.3/extension/pgpool-recovery.sql template1 Create a pgpool_remote_start script in $ PGDATA that will start postgresql on the next node [depending on the version of postgresql you use, you may need the second parameter passed by pgpool pointing to the $ PGDATA directory for the node]:

#! /bin/sh DEST=$1 PGCTL=/usr/bin/pg_ctlcluster KEY_PATH="/var/lib/postgresql/.ssh/id_rsa" ssh -T -i $KEY_PATH postgres@$DEST "$PGCTL 9.3 main stop --force;$PGCTL 9.3 main restart" As well as a script from the recovery_1st_stage_command parameter which will synchronize with the current master of new slaves (also lies in $ PGDATA next to pgpool_remote_start):

#! /bin/sh datadir=$1 desthost=$2 destdir=$3 KEY_PATH="/var/lib/postgresql/.ssh/id_rsa" PGCTL="/usr/bin/pg_ctlcluster" ssh -T -i $KEY_PATH postgres@$desthost "$PGCTL 9.3 main stop --force" psql -c "SELECT pg_start_backup('Streaming Replication', true)" postgres rsync -C -a -c --delete -e ssh --exclude postgresql.conf --exclude postmaster.pid \ --exclude postmaster.opts --exclude pg_log \ --exclude recovery.conf --exclude recovery.done \ --exclude pg_xlog $datadir/ $desthost:$destdir/ ssh -T -i $KEY_PATH postgres@$desthost "cp $destdir/../recovery.done $destdir/recovery.conf;rm $destdir/postgresql.trigger" psql -c "SELECT pg_stop_backup()" postgres 5. To be able to execute commands related to restarting services and copying data to remote hosts, you need to configure password-free access:

pgpool-1 -> db-1, db-2You can use host-based ssh, you can generate ssh keys and enable them in authorized_keys.

pgpool-2 -> db-1, db-2

db-1 -> db-2

db-2 -> db-1

After setting up access, you need to check it on behalf of the user who will run the scripts while pgpool is running, I have this postgres:

From the host pgpool-1 we perform:

sudo -u postgres ssh -i /path_to_key -T postgres@db-1 id And so for all the necessary hosts, we check access and update the known_hosts file for ssh.

At this stage, a bunch of 4 nodes could already be started for normal operation outside the AWS environment.

- We start the master host (db-1)

- Synchronize the slave with it (postgresql is not yet running on it), execute the $ PGDATA directory:

(recovery.done - the created recovery.conf template which refers to the IP wizard)mv main main.bak && sudo -u postgres pg_basebackup -h 10.0.3.21 -D /var/lib/postgresql/9.3/main -U postgres -v -P && cp recovery.done main/recovery.conf && chown postgres:postgres main/recovery.conf - We start postgresql on the slave:

sudo service postgresql restart - We look at the state of replication through “select * from pg_stat_replication”, we see something like this:

application_name | walreceiver

or simply check for the presence of wal sender / receiver in the process list on db-1 and db-2 hosts.

client_addr | 10.0.3.22

state | streaming

sent_location | 1 / 2A000848

write_location | 1 / 2A000848

flush_location | 1 / 2A000848

replay_location | 1 / 2A000848

sync_priority | 0

sync_state | async

After launching, the first pgpool that is launched pulls the virtual address from delegate_IP over itself by executing the command from the if_up_cmd parameter (by default there is just ifconfig).

Record in logs:

wd_escalation: escalated to master pgpool successfullyAfter a while, running the second pgpool in the logs, we see that the neighboring pgpool node was successfully identified and the bundle worked:

find_primary_node: primary node id is 0The status of the pool can be viewed by one of the pcp_ * commands - pcp_pool_status, pcp_node_info, or by querying via pgpool of the show pool_nodes;, show pool_pools; nodes

All these commands, as well as the statuses of the nodes in the pool, are very well described in the pgpool documentation - www.pgpool.net/docs/pgpool-II-3.3.2/doc/pgpool-en.html

If you disable the first pgpool, the second would drag the delegate_ip over to itself with a command from the if_up_cmd parameter.

When the db-1 or db-2 bend falls, the command from the failover_command parameter is executed.

To return the backend to the pool, use the pcp_attach_node and pcp_recovery_node commands.

AWS Staff:

What happens in AWS? Everything is the same except that the IP address must be previously assigned to the network interface through the “Assign a secondary private address” setting in the Network Intefaces menu. For Amazon Linux about which I wrote earlier, it is possible to automatically assign this address to a running instance and further crawl it between pgpool-1 and pgpool-2 if necessary (I personally did not test amazon linux, it would be very interesting to find out how smooth it all works ). In the case of an image unadapted for AWS, I need to use additional scripts from the ec2-api-tools set .

The latest version of api-tools is better to download from amazon .

To work ec2-api-tools need Java, set - apt-get install default-jre

The unpacked api-tools archive in aws / bin will contain scripts for managing aws through the console.

But to work with amazon api, you must have an authorization key.

The process of obtaining authentication data is described in detail on amazon here - docs.aws.amazon.com/IAM/latest/UserGuide/ManagingCredentials.html and then docs.aws.amazon.com/IAM/latest/UserGuide/ManagingUserCerts.html

On the first link we will learn how to create a user with keys and assign him the necessary group through the IAM menu ( console.aws.amazon.com/iam/home?#users when creating a key in open form is shown to the owner once if you do not have time to write it - it is necessary to generate another). Amazon, when first attempting to create a key, strongly recommends creating an individual user in the IAM menu for this purpose, instead of creating a key under the account of the AWS administrative account.

In the second link, we will learn how to create your certificate and register it all in the same IAM AWS menu:

openssl genrsa 1024> private-key.pem

openssl pkcs8 -topk8 -nocrypt -inform PEM -in private-key.pem -out private-key-in-PCKS8-format.pem

openssl req -new -x509 -nodes -sha1 -days 3650 -key private-key.pem -outform PEM> certificate.pem

The content of certificate.pem is uploaded to AWS in IAM. Certificates can be managed through the IAM Security Credentials menu:

After all these manipulations, we have:

certificate.pem for EC2_CERT parameter

private-key-in-PCKS8-format.pem for EC2_PRIVATE_KEY, AWS_ACCESS_KEY and AWS_SECRET_KEY.

You can start using ec2-api-tools.

For this, I created an if.sh script that will drag delegate_IP for pgpool between instances. The script as parameters receives the action that must be performed with the interface (up / down) and the desired ip address for the interface. Next, the script calculates the subnet for the entered IP (I use / 24 and I just cut off the last octet, so who doesn’t have the mask / 24, the script will have to be finished). I consider the subnet because On instances, two interfaces are used - the main one and the management, in order to understand which of them needs to hang the secondary ip.

Script if.sh:

#!/bin/sh if test $# -eq 0 then echo "This scripts adds and removes ip to subinterfaces and to AWS VPC configuration." echo "Don't forget to set variables inside this script." echo echo Usage: $0' [up|down] IP_ADDRESS' echo exit 1 fi #!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! #CORRECT VALUES MUST BE SET PRIOR TO RUN THIS SCRIPT #!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! #Proxy if used: export EC2_JVM_ARGS='-Dhttp.proxySet=true -Dhttps.proxySet=true -Dhttp.proxyHost=xxxx -Dhttp.proxyPort=3128 -Dhttps.proxyHost=xxxx -Dhttps.proxyPort=3128' #Path to unpacked ec2-api from http://s3.amazonaws.com/ec2-downloads/ec2-api-tools.zip export EC2_HOME=/opt #Path to java export JAVA_HOME=/usr #Path to generated private key & cert (READ http://docs.aws.amazon.com/IAM/latest/UserGuide/ManagingUserCerts.html) export EC2_PRIVATE_KEY=/opt/private-key-in-PCKS8-format.pem export EC2_CERT=/opt/certificate.pem #User access & secret key (READ http://docs.aws.amazon.com/IAM/latest/UserGuide/ManagingCredentials.html) export AWS_ACCESS_KEY=YOUR_ACCESS_KEY export AWS_SECRET_KEY=YOUR_SECRET_KEY #Region for this EC2 instance REGION=YOUR_REGION #!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! AWS_PATH=$EC2_HOME VIP=$2 subnet () { SUB=`echo $VIP | awk '{ split($0,a,"."); print a[1]"."a[2]"."a[3]"."; }'` SUBNET=`$AWS_PATH/bin/ec2-describe-subnets --region $REGION | grep -F $SUB | awk '{print $2}'` echo Subnet-id: $SUBNET if [ -z "$SUBNET" ]; then echo "Wrong subnet!" exit 1; fi Instance_ID=`/usr/bin/curl --silent http://169.254.169.254/latest/meta-data/instance-id` echo Instance_ID=$Instance_ID ENI_ID=`$AWS_PATH/bin/ec2-describe-instances $Instance_ID --region $REGION | cut -f 2,3 | grep $SUBNET | awk '{print $1}'` echo ENI_ID=$ENI_ID } if_up () { subnet /usr/bin/sudo /sbin/ifconfig eth1:0 inet $VIP netmask 255.255.255.255 $AWS_PATH/bin/ec2-assign-private-ip-addresses -n $ENI_ID --secondary-private-ip-address $VIP --allow-reassignment --region $REGION } if_down (){ subnet /usr/bin/sudo /sbin/ifconfig eth1:0 down $AWS_PATH/bin/ec2-unassign-private-ip-addresses -n $ENI_ID --secondary-private-ip-address $VIP --region $REGION } case $1 in [uU][pP]) if_up break ;; [dD][oO][wW][nN]) if_down break ;; *) echo "Up/Down command missed!" exit 1 esac /usr/sbin/service networking restart > /dev/null 2>&1 To manage real elastic IP, you can use ec2-associate-address and ec2-unassign-private-ip-addresses.

Actually, these movements had to be performed in order to make friends a pgpool that does not work on an Amazon Linux instance, with AWS.

Source: https://habr.com/ru/post/213409/

All Articles