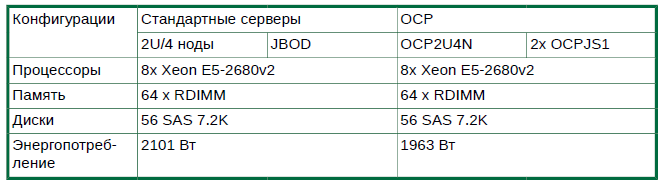

Therascale OCP Solution

What is the Open Compute Project (OCP)?

In Russia, very little is known about it beyond marketing claims that OCP has saved Facebook billions.

Officially, the Open Compute Project is a community founded by Facebook that aims to design the best possible data center infrastructure, with little regard for existing designs.

In reality, Facebook spent a long time trying to come up with the best design for its own data centers, and the sleep of reason produced monsters such as the triplet (a triple rack, one part of which is a huge uninterruptible power supply). The ideas wandered for who knows how long, but one fine day someone had a great one: create a community and bring in enthusiasts to contribute ideas.

It is worth noting that the result was very good.

What was the result?

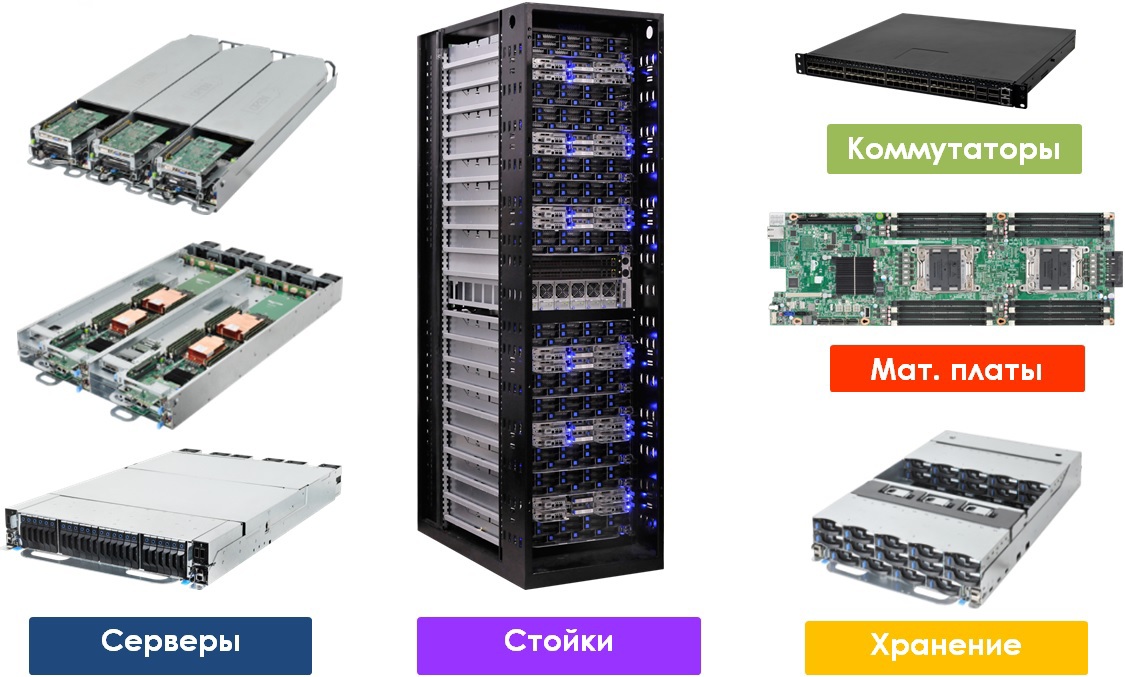

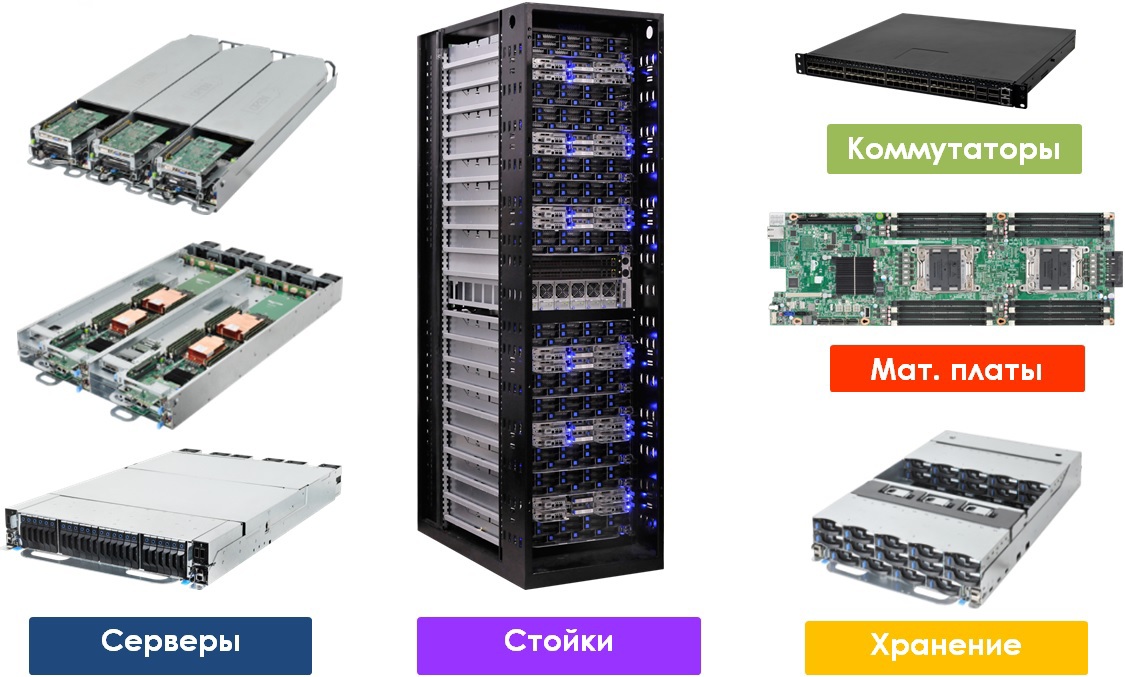

The result is a complete product line for the Software-Defined Datacenter.

Everything you need

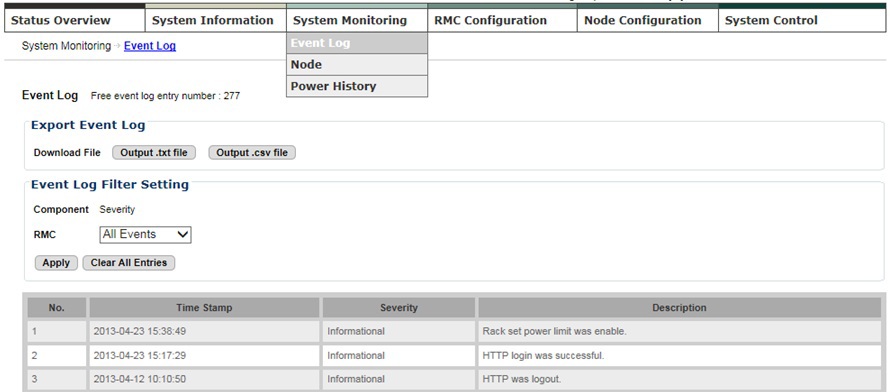

Remote management has not been forgotten either. The OCP RMC (Rack Management Controller) monitors the state of servers and power supplies and is managed via the web interface, SMASH CLI, or SNMP.

Rack controller

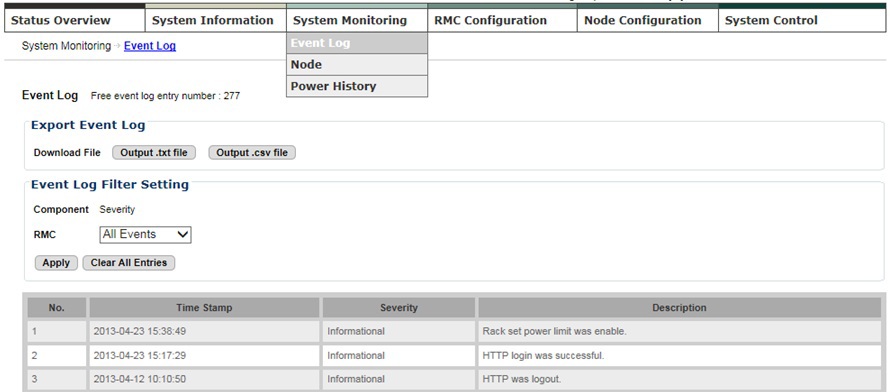

Screenshots:

The event log

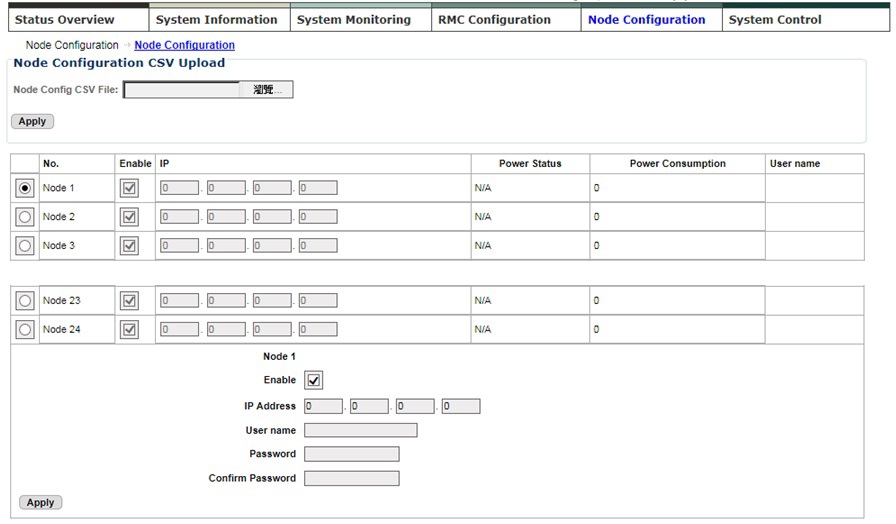

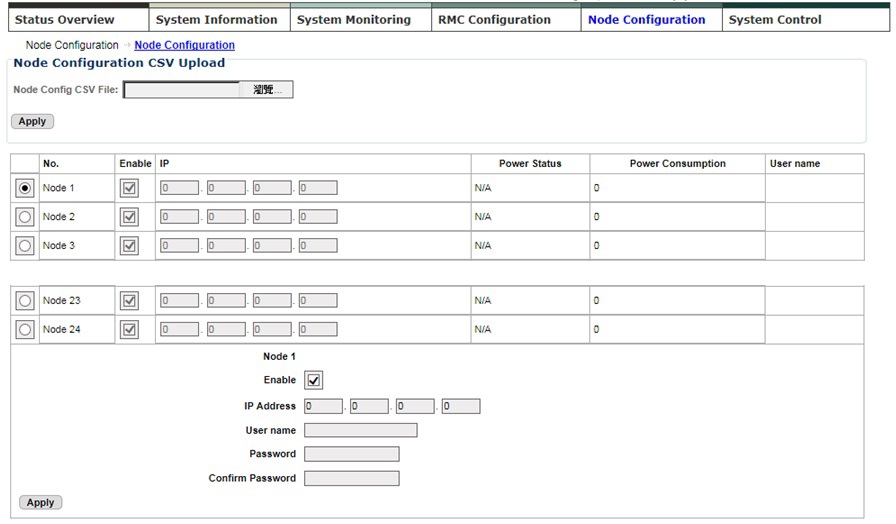

Configuring nodes

This is how node configurations are defined.

A. Enable / Disable Consumption Limit (Y / N)

B. ME IP Address

C. ME Username

D. ME Password

Note that Facebook relies on the capabilities of the Intel Management Engine and does not use IPMI or KVM over IP, considering them redundant. When a server fails, the whole server is replaced; nobody tries to watch the boot process remotely. For those who do need this, there is an optional 10G SFP+ daughter card with an integrated full-featured service processor and all the joys of IPMI and KVM over IP, virtual media included.
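The four node settings listed above map naturally onto a small record type. The following is a minimal sketch in Python, not the RMC's actual data format: the class name `NodeConfig`, its field names, and the helper `parse_yes_no` are all hypothetical and serve only to show what each option holds.

```python
from dataclasses import dataclass
import ipaddress


def parse_yes_no(answer: str) -> bool:
    """Convert a Y / N answer (any case, surrounding spaces allowed) to a bool."""
    normalized = answer.strip().upper()
    if normalized not in ("Y", "N"):
        raise ValueError(f"expected Y or N, got {answer!r}")
    return normalized == "Y"


@dataclass(frozen=True)
class NodeConfig:
    """Hypothetical model of one node entry; names are illustrative only."""
    power_limit_enabled: bool  # A. Enable / Disable Consumption Limit (Y / N)
    me_ip: str                 # B. ME IP Address
    me_username: str           # C. ME Username
    me_password: str           # D. ME Password

    def __post_init__(self) -> None:
        # ipaddress.ip_address raises ValueError for a malformed address.
        ipaddress.ip_address(self.me_ip)
        if not self.me_username:
            raise ValueError("ME username must not be empty")


# Example values are made up; 192.0.2.0/24 is a documentation-only range.
node = NodeConfig(parse_yes_no("Y"), "192.0.2.10", "admin", "secret")
print(node.power_limit_enabled, node.me_ip)
```

Validating the address when the record is built means a mistyped ME IP is caught at entry time rather than when the controller first tries to reach the node.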

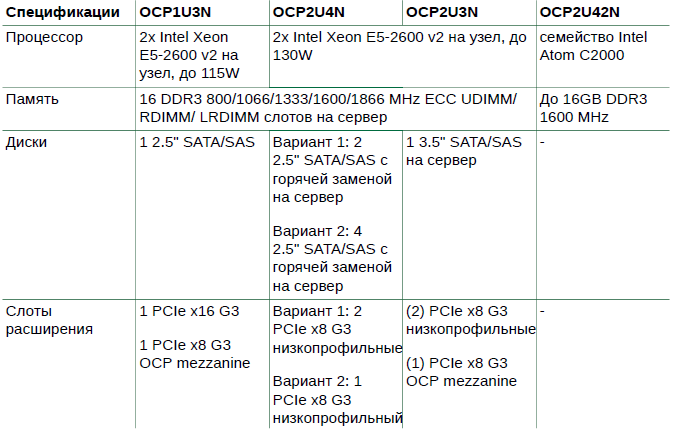

The lineup

With the general idea covered, it is time to move on to the models on offer.

Rack

Servers

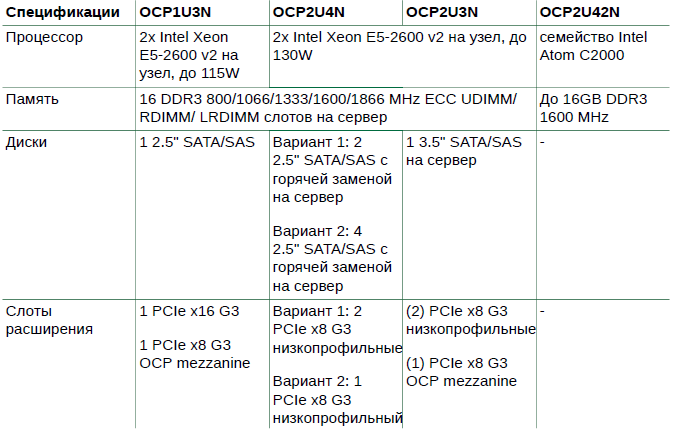

The line is built on minimalism: it contains only what is really necessary. The primary network interface is a mezzanine (daughter) card, with 10G, InfiniBand, and FC options.

The OCP2U4N platform comes in the 2OU (Open Unit) form factor and holds four servers. Each server offers either four hot-swap disks and one PCI-E slot or two disks and two PCI-E slots, plus an OCP mezzanine slot, for a high density of compute resources, disk space, and expansion cards (for example, flash accelerators). The default network interface is an OCP mezzanine card with two 10G SFP+ ports and a full-featured BMC.

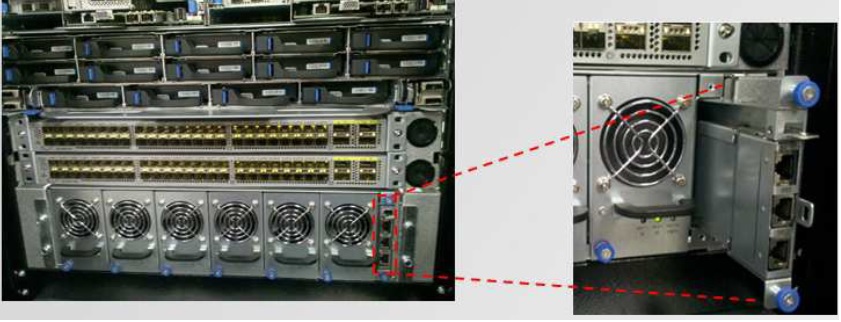

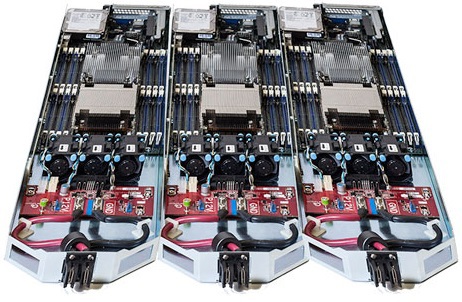

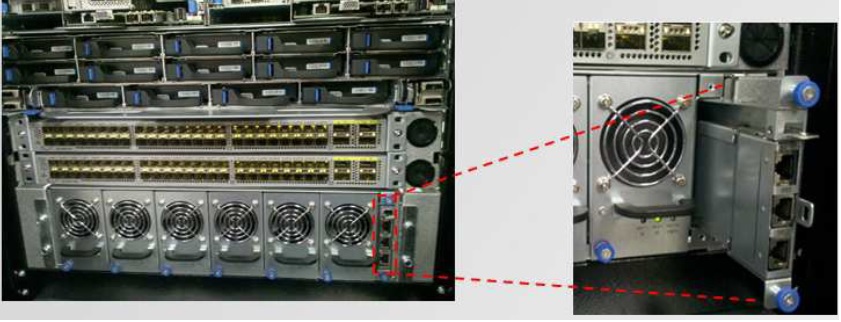

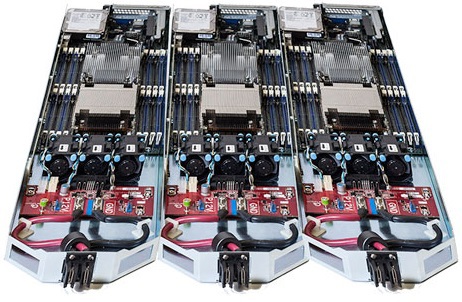

A closer look

The hot-swap fans are shared by all servers.

Universal servers for a variety of tasks, from Web 2.0 to computing. Centralized power and the absence of unnecessary components on the board increase MTBF by 58% compared with traditional rack systems. Two expansion slots, an OCP mezzanine slot, and room for hard disks complete the picture. The default network interface is an OCP mezzanine card with two 10G SFP+ ports and a full-featured BMC.

The OCP1U3N platform for compute workloads is the most utilitarian: 16 memory slots, one PCI-E slot, an OCP mezzanine slot for InfiniBand or 40G Ethernet, one hard disk bay, and a pair of network interfaces, one of them for management. 90 nodes per rack with air cooling (115 W per processor), plus switches, is a great result!
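The density figure can be sanity-checked with a few lines of Python. This is only a sketch under a stated assumption: it reads the OCP1U3N name as three nodes per 1OU chassis, by analogy with the OCP2U4N described above as a 2OU chassis with four servers. The function name `open_units_needed` is made up for the example.

```python
import math


def open_units_needed(nodes: int, nodes_per_chassis: int, chassis_height_ou: int) -> int:
    """Return the rack height, in Open Units, occupied by the given node count."""
    chassis_count = math.ceil(nodes / nodes_per_chassis)
    return chassis_count * chassis_height_ou


# Assumption: OCP1U3N = 3 nodes in a 1OU chassis (inferred from the name only).
print(open_units_needed(nodes=90, nodes_per_chassis=3, chassis_height_ou=1))  # 30

# For comparison, the same 90 nodes on OCP2U4N (4 servers per 2OU, per the text).
print(open_units_needed(nodes=90, nodes_per_chassis=4, chassis_height_ou=2))  # 46
```

Under that assumption, 90 nodes take 30 OU, and the rest of the rack is left for the switches mentioned in the text.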

Microservers? Did someone say microservers?

We have those too: 42 servers based on Intel Atom C2000 series processors in a 2OU chassis, plus two OpenFlow-capable switches built on the Intel FM5224 switch chip, each with two 40G uplinks. Almost 4 Gbit/s of bandwidth per server is enough to run any distributed application. All components are hot-swappable for easy deployment and maintenance.
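The "almost 4 gigabits per server" figure follows directly from the numbers in the paragraph above. A short Python sketch of the arithmetic (the function name is illustrative, and it assumes all four uplinks are shared evenly among the 42 servers):

```python
def uplink_gbps_per_server(servers: int, switches: int,
                           uplinks_per_switch: int, uplink_gbps: float) -> float:
    """Total uplink capacity of the chassis divided evenly among its servers."""
    total_gbps = switches * uplinks_per_switch * uplink_gbps
    return total_gbps / servers


# 2 switches x 2 uplinks x 40G = 160G, shared by 42 servers.
per_server = uplink_gbps_per_server(servers=42, switches=2,
                                    uplinks_per_switch=2, uplink_gbps=40)
print(f"{per_server:.2f} Gbit/s per server")  # 3.81 Gbit/s per server
```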

Storage system

No specialized storage arrays, only Software Defined Storage on disk shelves. That said, nobody forbids installing hardware RAID controllers.

A cleverly designed 2OU disk shelf holds 28 hot-swap 3.5" disks. A two-layer disk layout made it possible to pack 28 spindles into the chassis; the disks are held in trays without screws (the video was above). The SAS expanders are fixed in the chassis, so when you pull out a tray to service the second row of disks, the cables stay in place and cause no trouble.

Another disk shelf, also 2OU, holds 30 hot-swap 3.5" disks: two trays of 15 spindles each plus two SAS expanders, all of it hot-swappable without tools.

The next model is similar to the previous one, but instead of expanders it carries two servers based on the Intel Atom C2000 (one per 15 disks). Each server has a 10G SFP+ network interface and external SAS ports for adding capacity. A good choice for cold data.

Network infrastructure

The switches are completely standard in size. As you may have noticed from the model descriptions, gigabit is not used for the main network at all; the minimum is 10G. Facebook itself is eyeing 40G and above, but so far the cost of the transition stands in the way.

Another important trend within the Software Defined Datacenter strategy is the move to Software Defined Networking (SDN). The expectation is that SDN will drastically speed up network deployment, reduce operating costs, and seriously spoil life for network vendors with proprietary technologies :)

By the way, our previous article described the ideal switch for SDN and a way to get one completely free :)

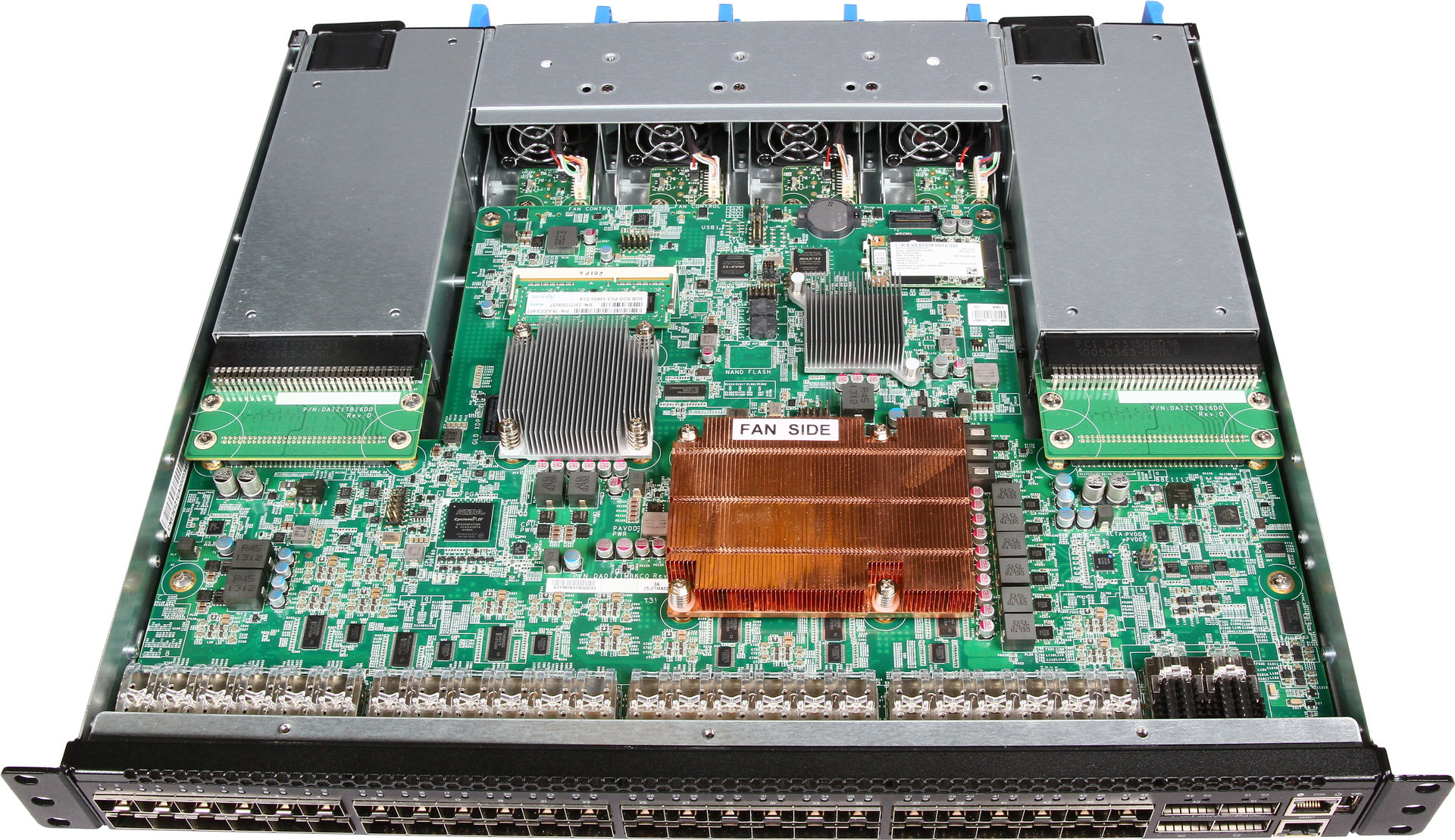

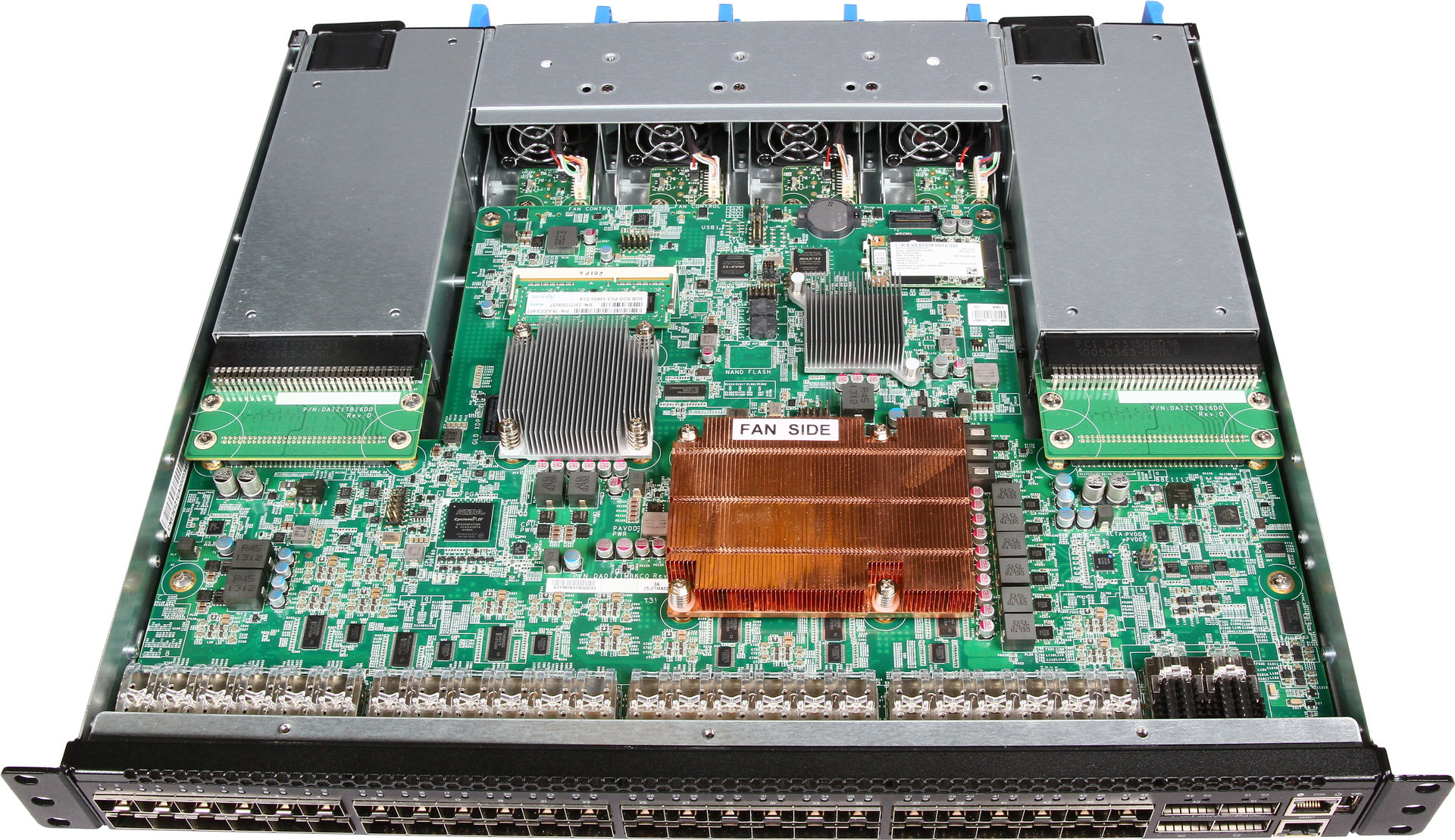

Software Defined Network (SDN) based on the Intel ONS open platform

Inside view of the switch

Summary

At first glance, OCP looks strange to a broad audience. At second glance, it becomes much more interesting. The solution suits not only large Internet companies but also enterprises with large in-house IT infrastructure.

If you are considering a deployment that will occupy a rack or more in the foreseeable future, switching to OCP is a very interesting option. Proprietary solutions tie you to one specific infrastructure with very limited capabilities.

So we have added an Open Compute based solution to our product line.

OCP objectives:

- Increase MTBF.

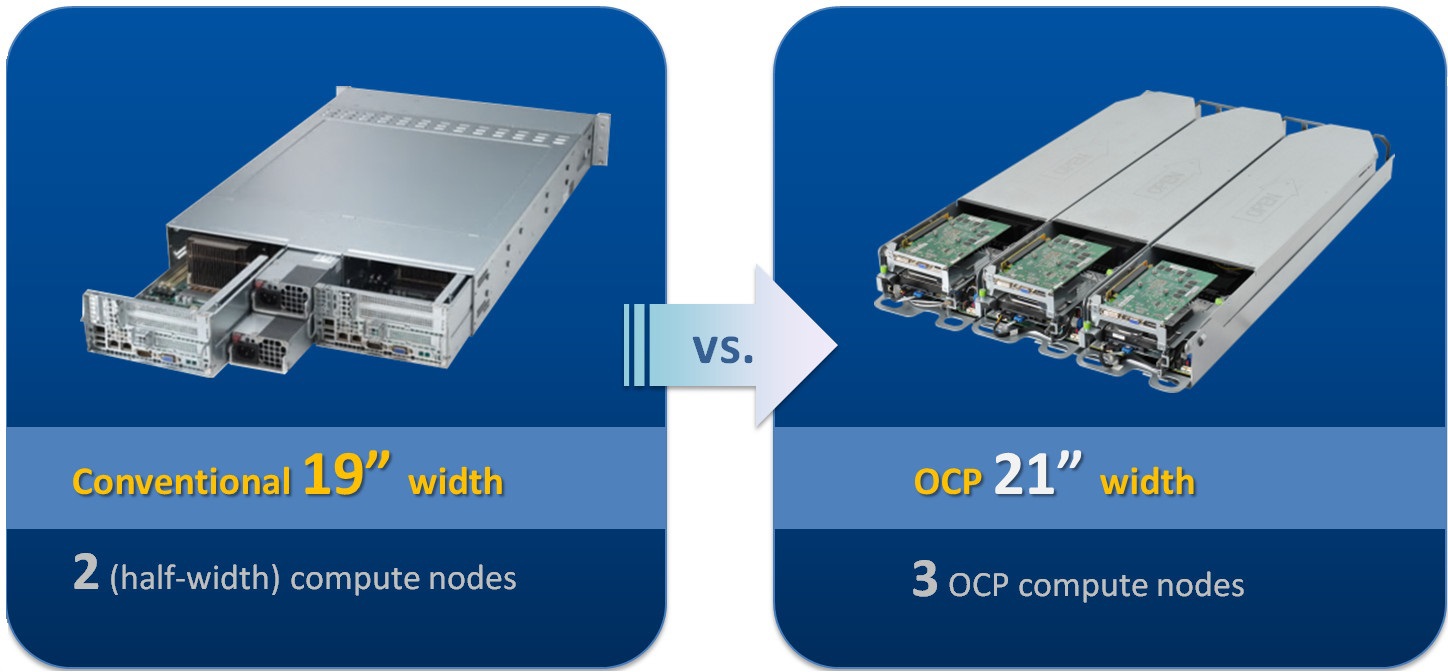

The rejection of the "extra" elements on the board increased the operating time by 35%. - Increase the density of racks.

The transition to 21 inches allows you to accommodate more servers while maintaining the functionality of each of them.

Wider rack - more fit - Simplify and speed up maintenance.

- Optimize to work from the cold aisle.

Stop running between rows. - Increase energy efficiency.

At an external temperature of 35 degrees

Reduced energy consumption by 11% under the same load and external conditions.

Rack controller

Capabilities:

- Remote power management.

- Monitoring of rack and server power consumption.

- Setting power limits for the rack and for individual nodes.

- Monitoring the state of power supplies and servers.

- Hot-swap support.

- Authentication via LDAP.

Supported protocols:

- IPMI / RMCP+

- SMASH CLI

- SNMP

- HTTP / HTTPS

- NTP, SMTP, Syslog

Drawbacks:

- An unusual internal rack format, incompatible with 19".

- An unusual approach to building solutions in general.

Advantages:

- Lower energy costs.

- Simple and remarkably easy maintenance.

- Lower acquisition costs.

Source: https://habr.com/ru/post/213257/
